BeamNGpy

Python API for BeamNG.tech

Stars: 245

BeamNGpy is an official Python library providing an API to interact with BeamNG.tech, the academia- and industry-oriented fork of the video game BeamNG.drive. It supports remote control of vehicles, AI-controlled vehicles, dynamic sensor models, access to the road network and scenario objects, and multiple clients. The library comes with low-level functions and higher-level interfaces for complex actions. BeamNGpy requires BeamNG.tech for usage and lists which versions of the two are compatible. It also provides troubleshooting tips and encourages user contributions.

README:

BeamNGpy is an official library providing a Python API to BeamNG.tech, the academia- and industry-oriented fork of the video game BeamNG.drive. BeamNGpy and BeamNG.tech are designed to go hand in hand, both being kept up to date to support each other's functions, meaning using the latest versions of both is recommended.

It allows remote control of the simulation, including vehicles contained in it. See Features or go through the Feature Overview Jupyter notebook.

BeamNGpy comes with a wide range of low-level functions to interact with the simulation and a few higher-level interfaces that make more complex actions easier. Some features to highlight are:

Each vehicle can be controlled individually and independently during the simulation. This includes basic steering inputs, but also controls over various lights (headlights, indicators, etc.) or gear shifting.
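As a rough illustration of the per-vehicle control described above, the sketch below wraps two calls on a vehicle object: `control` for driving inputs and `set_lights` for the lights. The helper name `drive_gently` and the chosen values are hypothetical, and the keyword names (`steering`, `throttle`, `headlights`) and input ranges are assumed to match your installed BeamNGpy version, so check the documentation before relying on them.

```python
def drive_gently(vehicle, steering=0.0, throttle=0.3, headlights=1):
    """Send one set of driving inputs and switch the headlights on.

    `vehicle` is expected to behave like a connected beamngpy Vehicle.
    The keyword names used below are assumptions about that API.
    """
    # Clamp the inputs to the assumed ranges: steering -1..1, throttle 0..1.
    steering = max(-1.0, min(1.0, steering))
    throttle = max(0.0, min(1.0, throttle))
    vehicle.control(steering=steering, throttle=throttle)
    vehicle.set_lights(headlights=headlights)
    return steering, throttle
```

Because the helper only relies on the two method names, it can be exercised with a stand-in object before a simulator is available.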

Besides manual control, BeamNG.tech ships with its own AI to control vehicles. This AI can be configured and controlled from BeamNGpy. It can be used to make a vehicle drive to a certain waypoint, follow another vehicle, span the map, or follow a user-defined trajectory.

Vehicles and the environment can be equipped with various sensors that provide simulated sensor data. These sensors include:

- Cameras
  - Color camera
  - Depth camera
  - Semantic and Instance annotations
- Lidars
- Inertial Measurement Units
- Ultrasonic Distance Measurements

These sensors return perfect data from the simulation by default. Some of them, such as the camera and lidar sensors, can also be configured to simulate noisy data.
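To make the idea of noisy sensor data concrete, here is a minimal sketch that perturbs ideal distance readings with zero-mean Gaussian noise. It is a generic illustration in plain Python, not the noise model BeamNG.tech uses; the function name `add_gaussian_noise` and the `sigma` parameter are hypothetical.

```python
import random

def add_gaussian_noise(readings, sigma=0.05, seed=None):
    """Return a copy of `readings` with zero-mean Gaussian noise added."""
    rng = random.Random(seed)  # a local generator keeps runs reproducible
    return [value + rng.gauss(0.0, sigma) for value in readings]

ideal = [10.0, 12.5, 15.0]  # perfect distances, e.g. in metres
noisy = add_gaussian_noise(ideal, sigma=0.05, seed=42)
print(noisy)
```

Passing a fixed `seed` makes the perturbed readings repeatable, which helps when comparing runs.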

The geometry of roads in the currently loaded level/scenario is made available via BeamNGpy. Objects and vehicles that are currently active in the scene are also exposed, allowing for analysis of the current simulation state.
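Once road geometry has been retrieved, it can be analysed with ordinary Python. The sketch below computes the length of a road from a list of centre-line points. The input format (a list of `(x, y, z)` tuples) and the helper name `polyline_length` are assumptions made for illustration; the structure BeamNGpy actually returns may differ, so adapt the extraction step to it.

```python
import math

def polyline_length(points):
    """Sum the straight-line distances between consecutive 3D points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

# Hypothetical centre line: a 5 m segment followed by a 12 m segment.
centre_line = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
print(polyline_length(centre_line))  # 17.0
```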

BeamNGpy interacts with BeamNG.tech as the client, with BeamNG.tech acting as the server. This allows for multiple BeamNGpy processes to connect to a running simulation and have each control the simulator, making it possible to, for example, run a scenario in which each vehicle is controlled by a separate client.

There is a healthy collection of usage examples in the examples/ folder of this repository. These highlight more features, but also serve as documentation, so be sure to check them out.

Usage of BeamNGpy requires BeamNG.tech to be installed. For commercial use, contact us at [email protected]. Builds of BeamNG.tech are made available for research and academic use upon request using this form.

Once downloaded, you can set the environment variable BNG_HOME to the directory BeamNG.tech can be run from, or provide that path to the BeamNGpy library during initialization.
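The two ways of telling BeamNGpy where the simulator lives can be pictured as a simple lookup: an explicit path wins, otherwise the `BNG_HOME` environment variable is consulted. The helper `resolve_bng_home` below is hypothetical and only mirrors that description; it is not the library's own resolution code, and the precedence order is an assumption.

```python
import os

def resolve_bng_home(home=None, environ=None):
    """Pick the BeamNG.tech directory from an argument or from BNG_HOME."""
    environ = os.environ if environ is None else environ
    if home:  # explicit path given at initialization
        return home
    if environ.get("BNG_HOME"):  # fall back to the environment variable
        return environ["BNG_HOME"]
    raise RuntimeError("Set BNG_HOME or pass the BeamNG.tech path explicitly")
```

The resolved value could then be handed to the `home` argument shown in the usage example.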

The library itself is available on PyPI and can therefore be installed using common methods like pip:

    pip install beamngpy

If you use Anaconda, you can install BeamNGpy from the conda-forge channel by:

    conda install beamngpy -c conda-forge

To upgrade, use

    pip install --upgrade beamngpy

if you installed BeamNGpy using pip, or

    conda update beamngpy -c conda-forge --no-pin

if you installed it using conda.

DISCLAIMER: If you are using an older version of BeamNGpy and BeamNG.tech, please follow the instructions of the corresponding README file (for example, 1.27.1 instructions). If you are using the latest version of BeamNGpy, continue following the instructions located in this README file.

The library can be imported using import beamngpy. A short usage example that sets up a scenario with one vehicle in the West Coast USA map and lets its AI span the area is:

    from beamngpy import BeamNGpy, Scenario, Vehicle

    # Instantiate BeamNGpy instance running the simulator from the given path,
    # communicating over localhost:64256
    bng = BeamNGpy('localhost', 64256, home='/path/to/bng/tech', user='/path/to/bng/tech/userfolder')
    # Launch BeamNG.tech
    bng.open()
    # Create a scenario in west_coast_usa called 'example'
    scenario = Scenario('west_coast_usa', 'example')
    # Create an ETK800 with the licence plate 'PYTHON'
    vehicle = Vehicle('ego_vehicle', model='etk800', license='PYTHON')
    # Add it to our scenario at this position and rotation
    scenario.add_vehicle(vehicle, pos=(-717, 101, 118), rot_quat=(0, 0, 0.3826834, 0.9238795))
    # Place files defining our scenario for the simulator to read
    scenario.make(bng)

    # Load and start our scenario
    bng.scenario.load(scenario)
    bng.scenario.start()
    # Make the vehicle's AI span the map
    vehicle.ai.set_mode('span')
    input('Hit enter when done...')

We have a guide to help you get started and navigate our collection of examples. The documentation of the library is available here.
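The `rot_quat` value in the usage example looks opaque, but it corresponds to a 45-degree rotation about the vertical axis. Below is a small sketch of how such a value can be derived, assuming the tuple is ordered `(x, y, z, w)` and that z is the up axis. `yaw_to_quat` is a hypothetical helper, not part of BeamNGpy.

```python
import math

def yaw_to_quat(yaw_degrees):
    """Quaternion (x, y, z, w) for a rotation of `yaw_degrees` about z."""
    half = math.radians(yaw_degrees) / 2.0
    return (0.0, 0.0, math.sin(half), math.cos(half))

# 45 degrees gives roughly (0, 0, 0.3826834, 0.9238795), as in the example.
print(yaw_to_quat(45))
```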

BeamNG.tech is not a finished product and is still under development, so frequent changes on the simulation side are to be expected. While the BeamNGpy library maintains compatibility between minor versions for the user, this does not extend to the BeamNG.tech side: not all BeamNGpy versions are compatible with all BeamNG.tech versions. Below is a list of compatible BeamNG.tech and BeamNGpy versions. However, we do not maintain minor versions: bug fixes and new features will only be available for the newest BeamNG.tech and BeamNGpy releases.

| BeamNG.tech version | BeamNGpy version |
|---|---|
| 0.32 | 1.29 |
| 0.31 | 1.28 |
| 0.30 | 1.27.1 |
| 0.28, 0.29 | 1.26.1 |
| 0.27 | 1.25.1 |
| 0.26 | 1.24 |
| 0.25 | 1.23.1 |
| 0.24 | 1.22 |
| 0.23 | 1.21.1 |
| 0.22 | 1.20 |
| 0.21 | 1.19.1 |
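For scripted setups, the table can be turned into a small lookup. The sketch below copies the pairs listed above into a dictionary; the function name `beamngpy_version_for` is hypothetical, and treating a patch release such as `0.30.6` like its minor version `0.30` is an assumption rather than something the table states.

```python
# Pairs copied from the compatibility table above.
COMPATIBLE_BEAMNGPY = {
    "0.32": "1.29",
    "0.31": "1.28",
    "0.30": "1.27.1",
    "0.29": "1.26.1",
    "0.28": "1.26.1",
    "0.27": "1.25.1",
    "0.26": "1.24",
    "0.25": "1.23.1",
    "0.24": "1.22",
    "0.23": "1.21.1",
    "0.22": "1.20",
    "0.21": "1.19.1",
}

def beamngpy_version_for(tech_version):
    """Return the BeamNGpy version listed for a BeamNG.tech version."""
    # Assumption: only the first two components matter ("0.30.6" -> "0.30").
    key = ".".join(tech_version.split(".")[:2])
    try:
        return COMPATIBLE_BEAMNGPY[key]
    except KeyError:
        raise ValueError(
            f"No BeamNGpy version listed for BeamNG.tech {tech_version}"
        ) from None

print(f"pip install beamngpy=={beamngpy_version_for('0.30')}")
# prints: pip install beamngpy==1.27.1
```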

This section lists common issues with BeamNGpy in particular. Since this library is closely tied to BeamNG.tech and thus BeamNG.drive, it is also recommended to consult the documentation on BeamNG.drive here:

https://documentation.beamng.com/

- Be sure to complete the initial setup step described in the Usage section and to repeat it with every newly released BeamNG.tech version.

- Make sure BeamNG.tech and Python are allowed to connect to your current network in Windows Firewall.

- There is a known issue where BeamNG.tech quietly crashes when there is a space in the configured userpath. Until this issue is fixed, it is recommended to either switch to a path that does not contain a space or change the userpath directly in the "startup.ini" file located in the directory of your BeamNG.tech installation.
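The userpath issue in the last item can be caught before launching the simulator. The check below is a minimal sketch: `userpath_is_safe` is a hypothetical helper that only looks for space characters and does not inspect "startup.ini" or any BeamNG.tech setting.

```python
def userpath_is_safe(userpath):
    """Return True if the path contains no space characters."""
    return " " not in str(userpath)

for candidate in ("C:/bng/userfolder", "C:/Users/Jane Doe/bng"):
    status = "ok" if userpath_is_safe(candidate) else "contains a space"
    print(f"{candidate}: {status}")
```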

We always welcome user contributions. Be sure to check out our contribution guidelines before starting your work.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for BeamNGpy

Similar Open Source Tools

BeamNGpy

BeamNGpy is an official Python library providing an API to interact with BeamNG.tech, a video game focused on academia and industry. It allows remote control of vehicles, AI-controlled vehicles, dynamic sensor models, access to road network and scenario objects, and multiple clients. The library comes with low-level functions and higher-level interfaces for complex actions. BeamNGpy requires BeamNG.tech for usage and offers compatibility information for different versions. It also provides troubleshooting tips and encourages user contributions.

erlang-red

Erlang-Red is an experimental Erlang backend designed to replace Node-RED's existing NodeJS backend, aiming for 100% compatibility with existing Node-RED flow code. It brings the advantages of low-code visual flow-based programming to Erlang, a language designed for message passing and concurrency. The tool allows for creating data flows that describe concurrent processing with guaranteed concurrency and performance. Erlang-Red provides a visual flow editor for creating and testing flows, supporting various Node-RED core nodes and Erlang-specific nodes. The development process is flow-driven, with test flows ensuring correct node functionality. The tool can be deployed locally using Docker or on platforms like Fly.io and Heroku. Contributions in the form of Erlang code, Node-RED test flows, and Elixir code are welcome, with a focus on replicating Node-RED functionality in alternative programming languages.

hof

Hof is a CLI tool that unifies data models, schemas, code generation, and a task engine. It allows users to augment data, config, and schemas with CUE to improve consistency, generate multiple Yaml and JSON files, explore data or config with a TUI, and run workflows with automatic task dependency inference. The tool uses CUE to power the DX and implementation, providing a language for specifying schemas, configuration, and writing declarative code. Hof offers core features like code generation, data model management, task engine, CUE cmds, creators, modules, TUI, and chat for better, scalable results.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

Robyn

Robyn is an experimental, semi-automated and open-sourced Marketing Mix Modeling (MMM) package from Meta Marketing Science. It uses various machine learning techniques to define media channel efficiency and effectivity, explore adstock rates and saturation curves. Built for granular datasets with many independent variables, especially suitable for digital and direct response advertisers with rich data sources. Aiming to democratize MMM, make it accessible for advertisers of all sizes, and contribute to the measurement landscape.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

aiid

The Artificial Intelligence Incident Database (AIID) is a collection of incidents involving the development and use of artificial intelligence (AI). The database is designed to help researchers, policymakers, and the public understand the potential risks and benefits of AI, and to inform the development of policies and practices to mitigate the risks and promote the benefits of AI. The AIID is a collaborative project involving researchers from the University of California, Berkeley, the University of Washington, and the University of Toronto.

iLLM-TSC

iLLM-TSC is a framework that integrates reinforcement learning and large language models for traffic signal control policy improvement. It refines RL decisions based on real-world contexts and provides reasonable actions when RL agents make erroneous decisions. The framework includes cases where the large language model provides explanations and recommendations for RL agent actions, such as prioritizing emergency vehicles at intersections. Users can install and run the framework locally to train RL models and evaluate the combined RL+LLM approach.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

modular

The Modular Platform is a unified suite of AI libraries and tools designed for AI development and deployment. It abstracts hardware complexity to enable running popular open models with high GPU and CPU performance without code changes. The repository contains over 450,000 lines of code from 6000+ contributors, making it one of the largest open-source repositories for CPU and GPU kernels. Key components include the Mojo standard library, MAX GPU and CPU kernels, MAX inference server, MAX model pipelines, and code examples. The repository has main and stable branches for nightly builds and stable releases, respectively. Contributions are accepted for the Mojo standard library, MAX AI kernels, code examples, and Mojo docs.

ersilia

The Ersilia Model Hub is a unified platform of pre-trained AI/ML models dedicated to infectious and neglected disease research. It offers an open-source, low-code solution that provides seamless access to AI/ML models for drug discovery. Models housed in the hub come from two sources: published models from literature (with due third-party acknowledgment) and custom models developed by the Ersilia team or contributors.

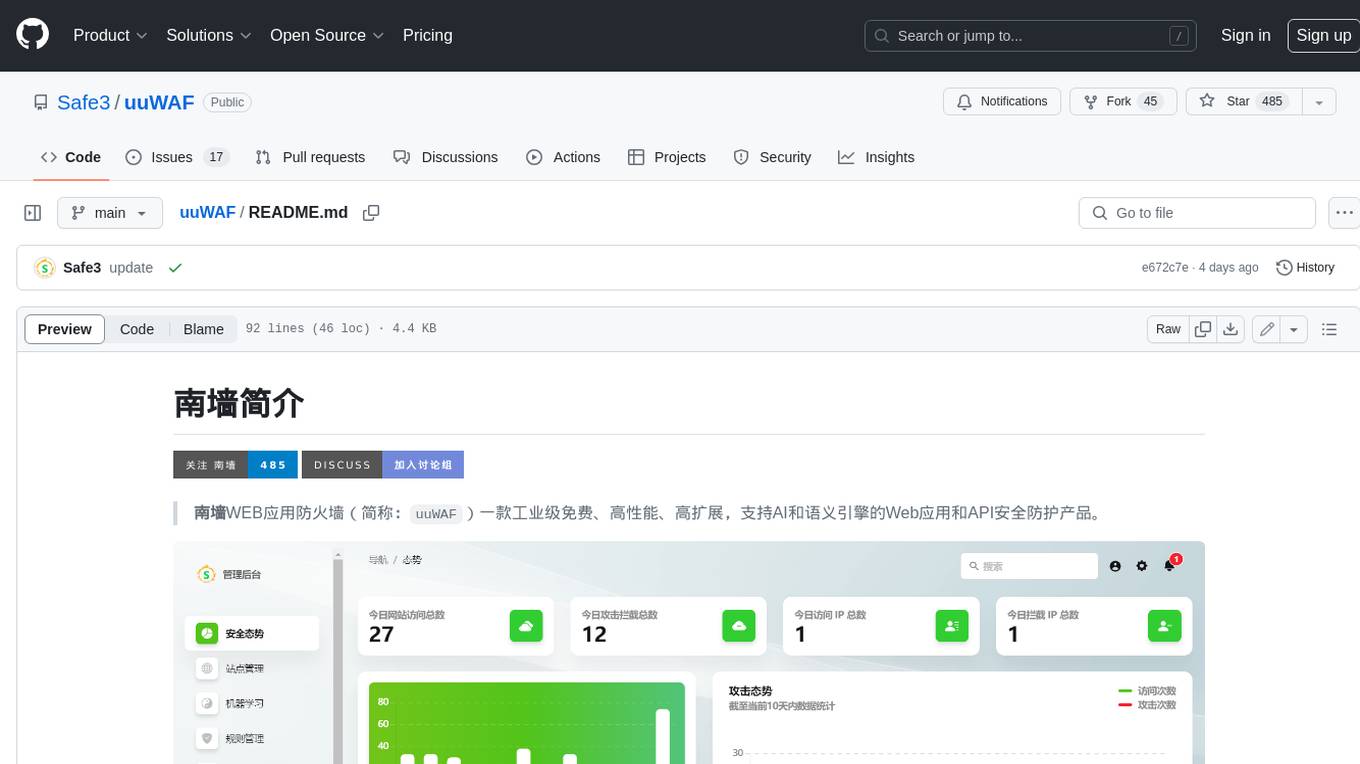

uuWAF

uuWAF is an industrial-grade, free, high-performance, highly extensible web application and API security protection product that supports AI and semantic engines.

dbrx

DBRX is a large language model trained by Databricks and made available under an open license. It is a Mixture-of-Experts (MoE) model with 132B total parameters and 36B live parameters, using 16 experts, of which 4 are active during training or inference. DBRX was pre-trained for 12T tokens of text and has a context length of 32K tokens. The model is available in two versions: a base model and an Instruct model, which is finetuned for instruction following. DBRX can be used for a variety of tasks, including text generation, question answering, summarization, and translation.

DevOpsGPT

DevOpsGPT is an AI-driven software development automation solution that combines Large Language Models (LLM) with DevOps tools to convert natural language requirements into working software. It improves development efficiency by eliminating the need for tedious requirement documentation, shortens development cycles, reduces communication costs, and ensures high-quality deliverables. The Enterprise Edition offers features like existing project analysis, professional model selection, and support for more DevOps platforms. The tool automates requirement development, generates interface documentation, provides pseudocode based on existing projects, facilitates code refinement, enables continuous integration, and supports software version release. Users can run DevOpsGPT with source code or Docker, and the tool comes with limitations in precise documentation generation and understanding existing project code. The product roadmap includes accurate requirement decomposition, rapid import of development requirements, and integration of more software engineering and professional tools for efficient software development tasks under AI planning and execution.

uusec-waf

UUSEC WAF is an industrial grade free, high-performance, and highly scalable web application and API security protection product that supports AI and semantic engines. It provides intelligent 0-day defense, ultimate CDN acceleration, powerful proactive defense, advanced semantic engine, and advanced rule engine. With features like machine learning technology, cache cleaning, dual layer defense, semantic analysis, and Lua script rule writing, UUSEC WAF offers comprehensive website protection with three-layer defense functions at traffic, system, and runtime layers.

For similar tasks

BeamNGpy

BeamNGpy is an official Python library providing an API to interact with BeamNG.tech, a video game focused on academia and industry. It allows remote control of vehicles, AI-controlled vehicles, dynamic sensor models, access to road network and scenario objects, and multiple clients. The library comes with low-level functions and higher-level interfaces for complex actions. BeamNGpy requires BeamNG.tech for usage and offers compatibility information for different versions. It also provides troubleshooting tips and encourages user contributions.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.