Best AI tools for< Cybersecurity Researcher >

Infographic

20 - AI tool Sites

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

CensysGPT Beta

CensysGPT Beta is a tool that simplifies building queries and empowers users to conduct efficient and effective reconnaissance operations. It enables users to quickly and easily gain insights into hosts on the internet, streamlining the process and allowing for more proactive threat hunting and exposure management.

Elie Bursztein AI Cybersecurity Platform

The website is a platform managed by Dr. Elie Bursztein, the Google & DeepMind AI Cybersecurity technical and research lead. It features a collection of publications, blog posts, talks, and press releases related to cybersecurity, artificial intelligence, and technology. Dr. Bursztein shares insights and research findings on various topics such as secure AI workflows, language models in cybersecurity, hate and harassment online, and more. Visitors can explore recent content and subscribe to receive cutting-edge research directly in their inbox.

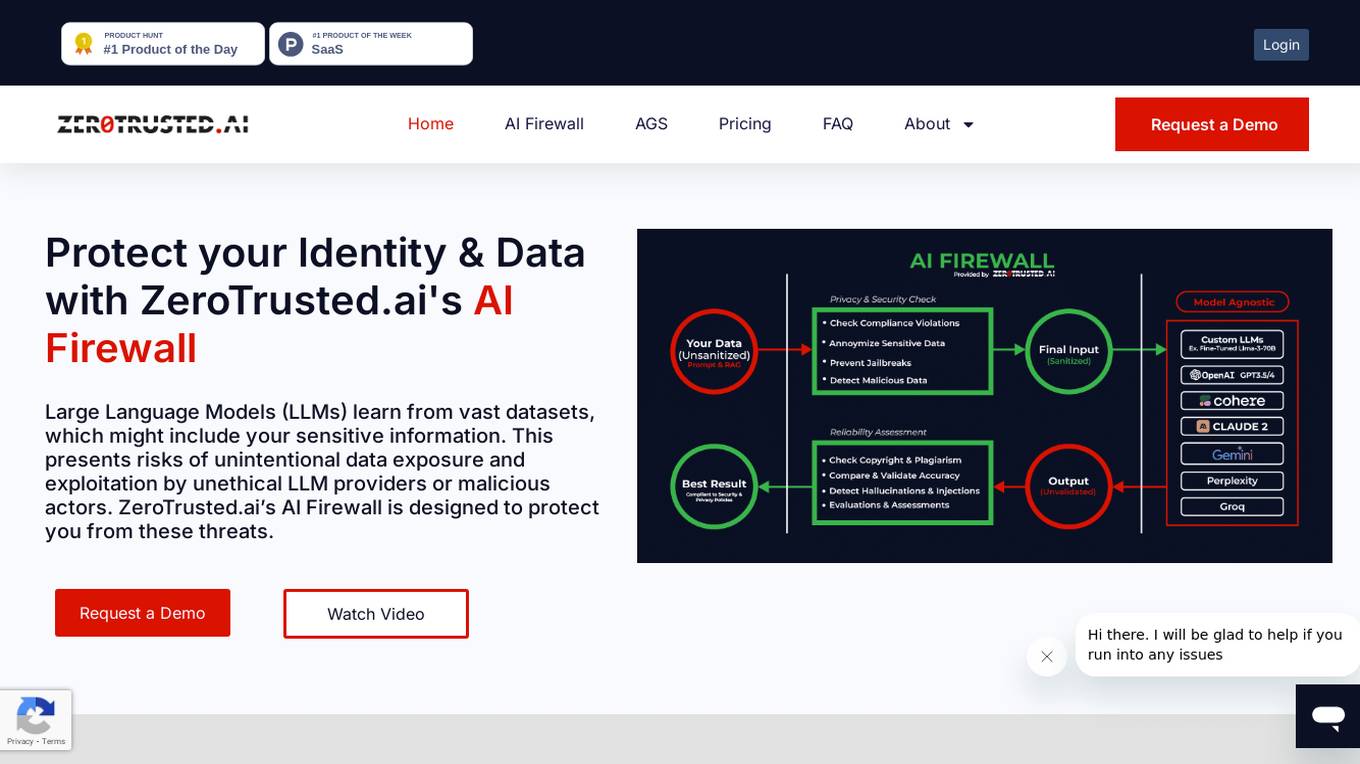

ZeroTrusted.ai

ZeroTrusted.ai is a cybersecurity platform that offers an AI Firewall to protect users from data exposure and exploitation by unethical providers or malicious actors. The platform provides features such as anonymity, security, reliability, integrations, and privacy to safeguard sensitive information. ZeroTrusted.ai empowers organizations with cutting-edge encryption techniques, AI & ML technologies, and decentralized storage capabilities for maximum security and compliance with regulations like PCI, GDPR, and NIST.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

La Biblia de la IA - The Bible of AI™ Journal

La Biblia de la IA - The Bible of AI™ Journal is an educational research platform focused on Artificial Intelligence. It provides in-depth analysis, articles, and discussions on various AI-related topics, aiming to advance knowledge and understanding in the field of AI. The platform covers a wide range of subjects, from machine learning algorithms to ethical considerations in AI development.

DARPA's Artificial Intelligence Cyber Challenge (AIxCC)

The DARPA's Artificial Intelligence Cyber Challenge (AIxCC) is an AI-driven cybersecurity tool developed in collaboration with ARPA-H and various industry experts like Anthropic, Google, Microsoft, OpenAI, and others. It aims to safeguard critical software infrastructure by utilizing AI technology to enhance cybersecurity measures. The tool provides a platform for experts in AI and cybersecurity to come together and address the evolving threats in the digital landscape.

SMshrimant

SMshrimant is a personal website belonging to a Bug Bounty Hunter, Security Researcher, Penetration Tester, and Ethical Hacker. The website showcases the creator's skills and experiences in the field of cybersecurity, including bug hunting, security vulnerability reporting, open-source tool development, and participation in Capture The Flag competitions. Visitors can learn about the creator's projects, achievements, and contact information for inquiries or collaborations.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages artificial intelligence to anticipate threats, manage vulnerabilities, and protect resources across the entire enterprise ecosystem. With features such as Singularity XDR, Purple AI, and AI-SIEM, SentinelOne empowers security teams to detect and respond to cyber threats in real-time. The platform is trusted by leading enterprises worldwide and has received industry recognition for its innovative approach to cybersecurity.

GenAI Today

GenAI Today is a news portal that focuses on the latest advancements in generative AI technology and its applications across various industries. The platform covers news, white papers, webinars, and events related to AI innovations. It showcases companies and technologies leveraging generative AI algorithms for cybersecurity, industrial analytics, conversational AI, and more. GenAI Today aims to provide insights into how AI is transforming businesses and improving operational efficiency through cutting-edge solutions.

Next Realm AI

Next Realm AI is an Artificial Intelligence Research Lab based in New York City, focusing on pioneering the future of artificial intelligence and transformative technologies. The lab specializes in areas such as Large Language Models (LLM), quantum computing, and cybersecurity, aiming to drive innovation and develop groundbreaking applications through strategic partnerships with startups and major tech companies. Next Realm AI is dedicated to advancing cutting-edge technologies and accelerating machine learning through quantum approaches for rapid data analysis, pattern recognition, and decision-making.

Times of AI

Times of AI is a comprehensive platform providing the latest news, insights, and trends in the fields of Artificial Intelligence (AI) and Machine Learning (ML). The website covers a wide range of topics including AI governance, cybersecurity, data science, automation, and responsible AI. It also offers reviews and comparisons of various AI tools, along with industry insights and technology innovations. Users can stay updated on the latest developments in AI through breaking news articles and in-depth analyses. Times of AI aims to be a go-to source for professionals, researchers, and enthusiasts interested in the rapidly evolving AI landscape.

World Summit AI

World Summit AI is the most important summit for the development of strategies on AI, spotlighting worldwide applications, risks, benefits, and opportunities. It gathers global AI ecosystem stakeholders to set the global AI agenda in Amsterdam every October. The summit covers groundbreaking stories of AI in action, deep-dive tech talks, moonshots, responsible AI, and more, focusing on human-AI convergence, innovation in action, startups, scale-ups, and unicorns, and the impact of AI on economy, employment, and equity. It addresses responsible AI, governance, cybersecurity, privacy, and risk management, aiming to deploy AI for good and create a brighter world. The summit features leading innovators, policymakers, and social change makers harnessing AI for good, exploring AI with a conscience, and accelerating AI adoption. It also highlights generative AI and limitless potential for collaboration between man and machine to enhance the human experience.

AI Learning Platform

The website offers a brand new course titled 'Prompt Engineering for Everyone' to help users master the language of AI. With over 100 courses and 20+ learning paths, users can learn AI, Data Science, and other emerging technologies. The platform provides hands-on content designed by expert instructors, allowing users to gain practical, industry-relevant knowledge and skills. Users can earn certificates to showcase their expertise and build projects to demonstrate their skills. Trusted by 3 million learners globally, the platform offers a community of learners with a proven track record of success.

Technology Magazine

Technology Magazine is a leading platform covering the latest trends and innovations in the technology sector. It provides in-depth articles, interviews, and videos on topics such as AI, machine learning, cloud computing, cybersecurity, digital transformation, and data analytics. The magazine aims to connect the global community of enterprise IT and technology executives by offering insights into the digital journey and showcasing top companies and industry leaders.

OpenBuckets

OpenBuckets is a web application designed to help users find and secure open buckets in cloud storage systems. It provides a user-friendly interface for scanning and identifying unprotected buckets, allowing users to take necessary actions to secure their data. With OpenBuckets, users can easily detect potential security risks and prevent unauthorized access to their sensitive information stored in the cloud.

Cyble

Cyble is a leading threat intelligence platform offering products and services recognized by top industry analysts. It provides AI-driven cyber threat intelligence solutions for enterprises, governments, and individuals. Cyble's offerings include attack surface management, brand intelligence, dark web monitoring, vulnerability management, takedown and disruption services, third-party risk management, incident management, and more. The platform leverages cutting-edge AI technology to enhance cybersecurity efforts and stay ahead of cyber adversaries.

CyberNative AI Social Network

CyberNative AI Social Network is a cutting-edge social network platform that integrates AI technology to enhance user experience and engagement. The platform focuses on topics related to AI, cybersecurity, gaming, science, and technology, providing a space for enthusiasts and professionals to connect, share insights, and stay updated on the latest trends. With a user-friendly interface and advanced AI algorithms, CyberNative AI Social Network offers a unique and interactive environment for users to explore diverse realms of virtual and augmented reality, cybersecurity, quantum computing, gaming, and more.

AI Security Institute (AISI)

The AI Security Institute (AISI) is a state-backed organization dedicated to advancing AI governance and safety. They conduct rigorous AI research to understand the impacts of advanced AI, develop risk mitigations, and collaborate with AI developers and governments to shape global policymaking. The institute aims to equip governments with a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a team of top technical staff and partnerships with leading research organizations, AISI is at the forefront of AI governance.

Constella Intelligence

Constella Intelligence is a world-class identity protection and identity risk intelligence platform powered by AI and the world's largest breach data lake. It offers solutions for API integrations, identity theft monitoring, threat intelligence, identity fraud detection, digital risk protection services, executive and brand protection, OSINT cybercrime investigations, and threat monitoring and alerting. Constella provides precise and timely alerts, in-depth real-time identity data signals, and enhanced situational awareness to help organizations combat cyber threats effectively.

9 - Open Source Tools

ail-framework

AIL framework is a modular framework to analyze potential information leaks from unstructured data sources like pastes from Pastebin or similar services or unstructured data streams. AIL framework is flexible and can be extended to support other functionalities to mine or process sensitive information (e.g. data leak prevention).

ai-exploits

AI Exploits is a repository that showcases practical attacks against AI/Machine Learning infrastructure, aiming to raise awareness about vulnerabilities in the AI/ML ecosystem. It contains exploits and scanning templates for responsibly disclosed vulnerabilities affecting machine learning tools, including Metasploit modules, Nuclei templates, and CSRF templates. Users can use the provided Docker image to easily run the modules and templates. The repository also provides guidelines for using Metasploit modules, Nuclei templates, and CSRF templates to exploit vulnerabilities in machine learning tools.

NGCBot

NGCBot is a WeChat bot based on the HOOK mechanism, supporting scheduled push of security news from FreeBuf, Xianzhi, Anquanke, and Qianxin Attack and Defense Community, KFC copywriting, filing query, phone number attribution query, WHOIS information query, constellation query, weather query, fishing calendar, Weibei threat intelligence query, beautiful videos, beautiful pictures, and help menu. It supports point functions, automatic pulling of people, ad detection, automatic mass sending, Ai replies, rich customization, and easy for beginners to use. The project is open-source and periodically maintained, with additional features such as Ai (Gpt, Xinghuo, Qianfan), keyword invitation to groups, automatic mass sending, and group welcome messages.

airgorah

Airgorah is a WiFi security auditing software written in Rust that utilizes the aircrack-ng tools suite. It allows users to capture WiFi traffic, discover connected clients, perform deauthentication attacks, capture handshakes, and crack access point passwords. The software is designed for testing and discovering flaws in networks owned by the user, and requires root privileges to run on Linux systems with a wireless network card supporting monitor mode and packet injection. Airgorah is not responsible for any illegal activities conducted with the software.

agentic_security

Agentic Security is an open-source vulnerability scanner designed for safety scanning, offering customizable rule sets and agent-based attacks. It provides comprehensive fuzzing for any LLMs, LLM API integration, and stress testing with a wide range of fuzzing and attack techniques. The tool is not a foolproof solution but aims to enhance security measures against potential threats. It offers installation via pip and supports quick start commands for easy setup. Users can utilize the tool for LLM integration, adding custom datasets, running CI checks, extending dataset collections, and dynamic datasets with mutations. The tool also includes a probe endpoint for integration testing. The roadmap includes expanding dataset variety, introducing new attack vectors, developing an attacker LLM, and integrating OWASP Top 10 classification.

pwnagotchi

Pwnagotchi is an AI tool leveraging bettercap to learn from WiFi environments and maximize crackable WPA key material. It uses LSTM with MLP feature extractor for A2C agent, learning over epochs to improve performance in various WiFi environments. Units can cooperate using a custom parasite protocol. Visit https://www.pwnagotchi.ai for documentation and community links.

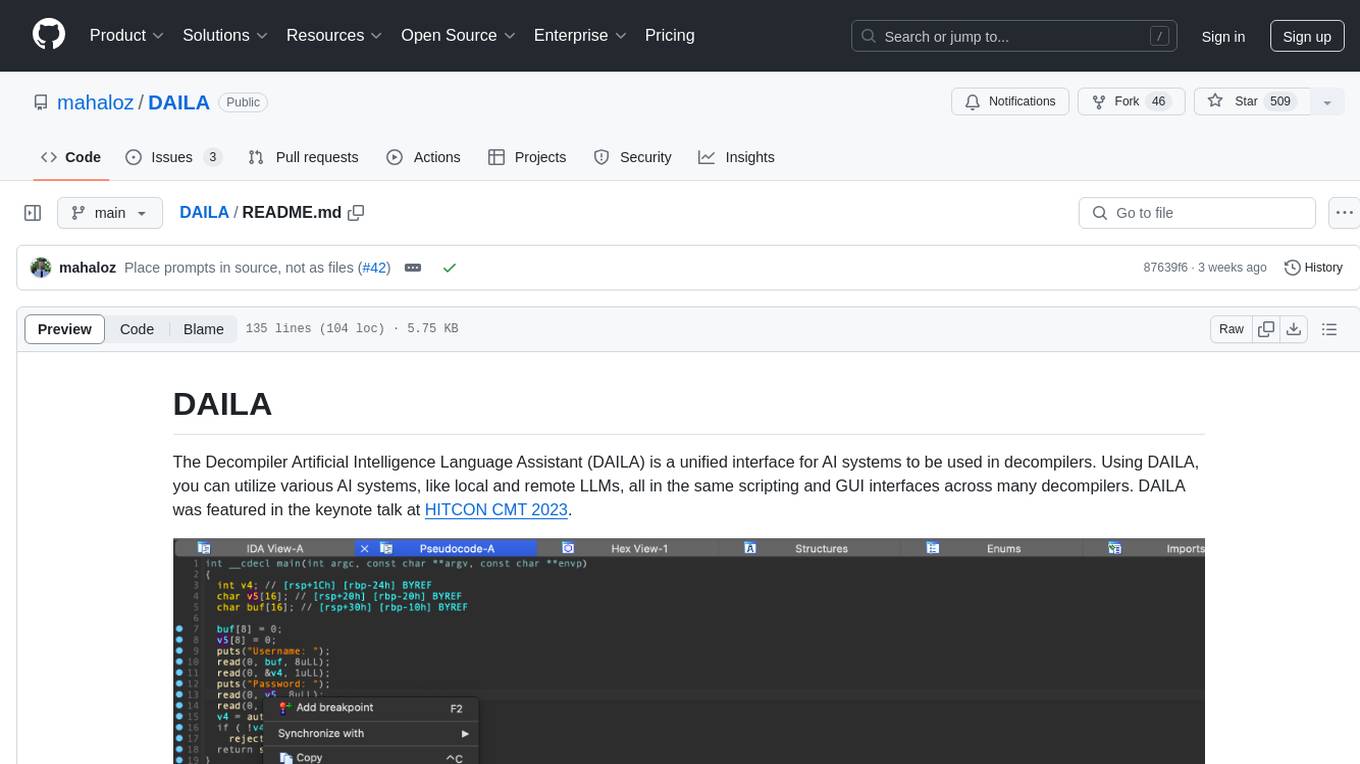

DAILA

DAILA is a unified interface for AI systems in decompilers, supporting various decompilers and AI systems. It allows users to utilize local and remote LLMs, like ChatGPT and Claude, and local models such as VarBERT. DAILA can be used as a decompiler plugin with GUI or as a scripting library. It also provides a Docker container for offline installations and supports tasks like summarizing functions and renaming variables in decompilation.

jadx-ai-mcp

JADX-AI-MCP is a plugin for the JADX decompiler that integrates with Model Context Protocol (MCP) to provide live reverse engineering support with LLMs like Claude. It allows for quick analysis, vulnerability detection, and AI code modification, all in real time. The tool combines JADX-AI-MCP and JADX MCP SERVER to analyze Android APKs effortlessly. It offers various prompts for code understanding, vulnerability detection, reverse engineering helpers, static analysis, AI code modification, and documentation. The tool is part of the Zin MCP Suite and aims to connect all android reverse engineering and APK modification tools with a single MCP server for easy reverse engineering of APK files.

Facemash

Facemash is a powerful Python tool designed for ethical hacking and cybersecurity research purposes. It combines brute force techniques with AI-driven strategies to crack Facebook accounts with precision. The tool offers advanced password strategies, multiple brute force methods, and real-time logs for total control. Facemash is not open-source and is intended for responsible use only.

20 - OpenAI Gpts

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet"

Bug Insider

Analyzes bug bounty writeups and cybersecurity reports, providing structured insights and tips.

NVD - CVE Research Assistant

Expert in CVEs and cybersecurity vulnerabilities, providing precise information from the National Vulnerability Database.

HackingPT

HackingPT is a specialized language model focused on cybersecurity and penetration testing, committed to providing precise and in-depth insights in these fields.

Threat Intel Briefs

Delivers daily, sector-specific cybersecurity threat intel briefs with source citations.

牛马审稿人-AI领域

Formal academic reviewer & writing advisor in cybersecurity & AI, detail-oriented.

CyberNews GPT

CyberNews GPT is an assistant that provides the latest security news about cyber threats, hackings and breaches, malware, zero-day vulnerabilities, phishing, scams and so on.

AI Cyberwar

AI and cyber warfare expert, advising on policy, conflict, and technical trends

MagicUnprotect

This GPT allows to interact with the Unprotect DB to retrieve knowledge about malware evasion techniques