RA.Aid

Develop software autonomously.

Stars: 1585

RA.Aid is an AI software development agent built on LangChain's agent-based task execution framework. It can consult advanced reasoning models such as OpenAI's `o1` and can optionally hand code edits to `aider`. It acts as an intelligent assistant for research, planning, and implementation of multi-step development tasks: it breaks complex work into manageable steps, runs shell commands automatically, and calls on an expert reasoning model when needed. RA.Aid is designed for everyday software development that goes beyond single-shot code edits.

README:

RA.Aid (pronounced "raid") helps you develop software autonomously. It is a standalone coding agent built on LangGraph's agent-based task execution framework. The tool provides an intelligent assistant that can help with research, planning, and implementation of multi-step development tasks. RA.Aid can optionally integrate with aider (https://aider.chat/) via the --use-aider flag to leverage its specialized code editing capabilities.

The result is near-fully-autonomous software development.

Enjoying RA.Aid? Show your support by giving us a star ⭐ on GitHub!

Here's a demo of RA.Aid adding a feature to itself:

Complete documentation is available at https://docs.ra-aid.ai

Key sections:

- Installation Guide

- Recommended Configuration

- Open Models Setup

- Usage Examples

- Logging System

- Memory Management

- Contributing Guide

- Getting Help

- Features

- Installation

- Usage

- Architecture

- Dependencies

- Development Setup

- Contributing

- License

- Contact

👋 Pull requests are very welcome! Have ideas for how to improve RA.Aid? Don't be shy - your help makes a real difference!

💬 Join our Discord community: Click here to join

- This tool can and will automatically execute shell commands and make code changes

- The --cowboy-mode flag can be enabled to skip shell command approval prompts

- No warranty is provided, either express or implied

- Always use in version-controlled repositories

- Review proposed changes in your git diff before committing

- Multi-Step Task Planning: The agent breaks down complex tasks into discrete, manageable steps and executes them sequentially. This systematic approach ensures thorough implementation and reduces errors.

- Automated Command Execution: The agent can run shell commands automatically to accomplish tasks. While this makes it powerful, it also means you should carefully review its actions.

- Ability to Leverage Expert Reasoning Models: The agent can use advanced reasoning models such as OpenAI's o1 just when needed, e.g. to solve complex debugging problems or in planning for complex feature implementation.

- Web Research Capabilities: Leverages Tavily API for intelligent web searches to enhance research and gather real-world context for development tasks.

- Three-Stage Architecture:

  - Research: Analyzes codebases and gathers context

  - Planning: Breaks down tasks into specific, actionable steps

  - Implementation: Executes each planned step sequentially

What sets RA.Aid apart is its ability to handle complex programming tasks that extend beyond single-shot code edits. By combining research, strategic planning, and implementation into a cohesive workflow, RA.Aid can:

- Break down and execute multi-step programming tasks

- Research and analyze complex codebases to answer architectural questions

- Plan and implement significant code changes across multiple files

- Provide detailed explanations of existing code structure and functionality

- Execute sophisticated refactoring operations with proper planning

- Three-Stage Architecture: The workflow consists of three powerful stages:

  - Research 🔍 - Gather and analyze information

  - Planning 📋 - Develop execution strategy

  - Implementation ⚡ - Execute the plan with AI assistance

  Each stage is powered by dedicated AI agents and specialized toolsets.

- Advanced AI Integration: Built on LangChain and leverages the latest LLMs for natural language understanding and generation.

- Human-in-the-Loop Interaction: Optional mode that enables the agent to ask you questions during task execution, ensuring higher accuracy and better handling of complex tasks that may require your input or clarification.

- Comprehensive Toolset:

  - Shell command execution

  - Expert querying system

  - File operations and management

  - Memory management

  - Research and planning tools

  - Code analysis capabilities

- Interactive CLI Interface: Simple yet powerful command-line interface for seamless interaction.

- Modular Design: Structured as a Python package with specialized modules for console output, processing, text utilities, and tools.

- Git Integration: Built-in support for Git operations and repository management.

On Windows:

- Install Python 3.8 or higher from python.org

- Install required system dependencies:

  # Install Chocolatey if not already installed (run in admin PowerShell)

  Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://community.chocolatey.org/install.ps1'))

  # Install ripgrep using Chocolatey

  choco install ripgrep

- Install RA.Aid:

  pip install ra-aid

- Install Windows-specific dependencies:

  pip install pywin32

- Set up your API keys in a .env file:

  ANTHROPIC_API_KEY=your_anthropic_key

  OPENAI_API_KEY=your_openai_key

RA.Aid can be installed directly using pip:

pip install ra-aid

On macOS, install via Homebrew:

brew tap ai-christianson/homebrew-ra-aid

brew install ra-aid

NOTE: On macOS, RA.Aid may also be installed with pip as shown above.

Before using RA.Aid, you'll need API keys for the required AI services:

# Set up API keys based on your preferred provider:

# For Anthropic Claude models (recommended)

export ANTHROPIC_API_KEY=your_api_key_here

# For OpenAI models (optional)

export OPENAI_API_KEY=your_api_key_here

# For OpenRouter provider (optional)

export OPENROUTER_API_KEY=your_api_key_here

# For OpenAI-compatible providers (optional)

export OPENAI_API_BASE=your_api_base_url

# For Gemini provider (optional)

export GEMINI_API_KEY=your_api_key_here

# For web research capabilities

export TAVILY_API_KEY=your_api_key_here

Note: When using the --use-aider flag, the programmer tool (aider) will automatically select its model based on your available API keys:

- If ANTHROPIC_API_KEY is set, it will use Claude models

- If only OPENAI_API_KEY is set, it will use OpenAI models

- You can set multiple API keys to enable different features
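The key-based selection described in the note above can be pictured with a small sketch. This is not aider's or RA.Aid's actual code; `pick_aider_model_family` is a hypothetical helper that only mirrors the stated rule (an Anthropic key takes priority, an OpenAI key is the fallback).

```python
import os
from typing import Mapping, Optional


def pick_aider_model_family(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Hypothetical helper: mirror the documented key-based selection rule."""
    if env.get("ANTHROPIC_API_KEY"):
        return "claude"   # Anthropic key present: Claude models are used
    if env.get("OPENAI_API_KEY"):
        return "openai"   # only an OpenAI key: OpenAI models are used
    return None           # no usable key was found


# Both keys set: the Anthropic key wins under the rule above.
print(pick_aider_model_family({"ANTHROPIC_API_KEY": "a", "OPENAI_API_KEY": "b"}))
```

Passing the environment as a mapping keeps the rule easy to check without touching real credentials.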

You can get your API keys from:

- Anthropic API key: https://console.anthropic.com/

- OpenAI API key: https://platform.openai.com/api-keys

- OpenRouter API key: https://openrouter.ai/keys

- Gemini API key: https://aistudio.google.com/app/apikey

Note: aider must be installed separately as it is not included in the RA.Aid package. See aider-chat for more details.

Complete installation documentation is available in our Installation Guide.

RA.Aid is designed to be simple yet powerful. Here's how to use it:

# Basic usage

ra-aid -m "Your task or query here"

# Research-only mode (no implementation)

ra-aid -m "Explain the authentication flow" --research-only

# File logging with console warnings (default mode)

ra-aid -m "Add new feature" --log-mode file

# Console-only logging with detailed output

ra-aid -m "Add new feature" --log-mode console --log-level debug

More information is available in our Usage Examples, Logging System, and Memory Management documentation.
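To make the two logging modes concrete, here is a minimal sketch using Python's standard `logging` module. It is an illustration of the documented behavior, not RA.Aid's implementation; the function name `build_logger` and the file name `ra_aid_example.log` are assumptions made for the example.

```python
import logging


def build_logger(log_mode: str = "file", log_level: str = "warning",
                 log_path: str = "ra_aid_example.log") -> logging.Logger:
    """Hypothetical helper: wire handlers the way the two log modes are described."""
    level = getattr(logging, log_level.upper())
    logger = logging.getLogger(f"ra_aid_example.{log_mode}.{log_level}")
    logger.handlers.clear()
    logger.propagate = False
    logger.setLevel(logging.DEBUG)  # handlers do the filtering

    console = logging.StreamHandler()
    if log_mode == "file":
        # File receives records at the chosen level; delay=True opens the file lazily.
        file_handler = logging.FileHandler(log_path, delay=True)
        file_handler.setLevel(level)
        logger.addHandler(file_handler)
        console.setLevel(logging.WARNING)  # console shows warnings and errors only
    elif log_mode == "console":
        console.setLevel(level)            # console follows the chosen level, no file
    else:
        raise ValueError(f"unknown log mode: {log_mode}")
    logger.addHandler(console)
    return logger


build_logger("console", "debug").debug("visible on the console in console mode")
```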

- -m, --message: The task or query to be executed (required except in chat mode, cannot be used with --msg-file)

- --msg-file: Path to a text file containing the task/message (cannot be used with --message)

- --research-only: Only perform research without implementation

- --provider: The LLM provider to use (choices: anthropic, openai, openrouter, openai-compatible, gemini)

- --model: The model name to use (required for non-Anthropic providers)

- --use-aider: Enable aider integration for code editing. When enabled, RA.Aid uses aider's specialized code editing capabilities instead of its own native file modification tools. This option is useful when you need aider's specific editing features or prefer its approach to code modifications. This feature is optional and disabled by default.

- --research-provider: Provider to use specifically for research tasks (falls back to --provider if not specified)

- --research-model: Model to use specifically for research tasks (falls back to --model if not specified)

- --planner-provider: Provider to use specifically for planning tasks (falls back to --provider if not specified)

- --planner-model: Model to use specifically for planning tasks (falls back to --model if not specified)

- --cowboy-mode: Skip interactive approval for shell commands

- --expert-provider: The LLM provider to use for expert knowledge queries (choices: anthropic, openai, openrouter, openai-compatible, gemini)

- --expert-model: The model name to use for expert knowledge queries (required for non-OpenAI providers)

- --hil, -H: Enable human-in-the-loop mode for interactive assistance during task execution

- --chat: Enable chat mode with direct human interaction (implies --hil)

- --log-mode: Logging mode (choices: file, console)

  - file (default): Logs to both file and console (only warnings and errors to console)

  - console: Logs to console only at the specified log level with no file logging

- --log-level: Set specific logging level (debug, info, warning, error, critical)

  - With --log-mode=file: Controls the file logging level (console still shows only warnings+)

  - With --log-mode=console: Controls the console logging level directly

  - Default: warning

- --experimental-fallback-handler: Enable experimental fallback handler to attempt to fix tool calls when the same tool fails 3 times consecutively. (OPENAI_API_KEY recommended as OpenAI has the top 5 tool calling models.) See ra_aid/tool_leaderboard.py for more info.

- --pretty-logger: Enables colored panel-style formatted logging output for better readability.

- --temperature: LLM temperature (0.0-2.0) to control randomness in responses

- --disable-limit-tokens: Disable token limiting for Anthropic Claude react agents

- --recursion-limit: Maximum recursion depth for agent operations (default: 100)

- --test-cmd: Custom command to run tests. If set, the user will be asked whether to run the test command

- --auto-test: Automatically run tests after each code change

- --max-test-cmd-retries: Maximum number of test command retry attempts (default: 3)

- --test-cmd-timeout: Timeout in seconds for test command execution (default: 300)

- --show-cost: Display cost information as the agent works (currently only supported on Claude model agents)

- --track-cost: Track token usage and costs (default: False)

- --no-track-cost: Disable tracking of token usage and costs

- --version: Show program version number and exit

- --server: Launch the server with web interface (alpha feature)

- --server-host: Host to listen on for server (default: 0.0.0.0) (alpha feature)

- --server-port: Port to listen on for server (default: 1818) (alpha feature)
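The fallback rule for the stage-specific options (for example, --research-provider falling back to --provider) can be sketched with `argparse`. This is a simplified illustration under the assumption that unset stage options are represented as `None`; it is not RA.Aid's actual argument parser, and `parse_args` and `stage_config` are hypothetical names.

```python
import argparse
from typing import List, Optional, Tuple


def parse_args(argv: List[str]) -> argparse.Namespace:
    """Hypothetical parser covering only the provider/model options."""
    parser = argparse.ArgumentParser(prog="example")
    parser.add_argument("--provider", default="anthropic")
    parser.add_argument("--model", default=None)
    for stage in ("research", "planner"):
        parser.add_argument(f"--{stage}-provider", default=None)
        parser.add_argument(f"--{stage}-model", default=None)
    return parser.parse_args(argv)


def stage_config(args: argparse.Namespace, stage: str) -> Tuple[str, Optional[str]]:
    """Use the stage-specific value when given, else the general one."""
    provider = getattr(args, f"{stage}_provider") or args.provider
    model = getattr(args, f"{stage}_model") or args.model
    return provider, model


args = parse_args(["--provider", "openai", "--model", "gpt-4o", "--planner-model", "o1"])
print(stage_config(args, "research"))  # falls back to the general provider and model
print(stage_config(args, "planner"))   # general provider, planner-specific model
```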

- Code Analysis:

  ra-aid -m "Explain how the authentication middleware works" --research-only

- Complex Changes:

  ra-aid -m "Refactor the database connection code to use connection pooling" --cowboy-mode

- Automated Updates:

  ra-aid -m "Update deprecated API calls across the entire codebase" --cowboy-mode

- Code Research:

  ra-aid -m "Analyze the current error handling patterns" --research-only

- Code Research:

  ra-aid -m "Explain how the authentication middleware works" --research-only

- Refactoring:

  ra-aid -m "Refactor the database connection code to use connection pooling" --cowboy-mode

Enable interactive mode to allow the agent to ask you questions during task execution:

ra-aid -m "Implement a new feature" --hil

# or

ra-aid -m "Implement a new feature" -H

This mode is particularly useful for:

- Complex tasks requiring human judgment

- Clarifying ambiguous requirements

- Making architectural decisions

- Validating critical changes

- Providing domain-specific knowledge

The agent features autonomous web research capabilities powered by the Tavily API, seamlessly integrating real-world information into its problem-solving workflow. Web research is conducted automatically when the agent determines additional context would be valuable - no explicit configuration required.

For example, when researching modern authentication practices or investigating new API requirements, the agent will autonomously:

- Search for current best practices and security recommendations

- Find relevant documentation and technical specifications

- Gather real-world implementation examples

- Stay updated on latest industry standards

While web research happens automatically as needed, you can also explicitly request research-focused tasks:

# Focused research task with web search capabilities

ra-aid -m "Research current best practices for API rate limiting" --research-only

Make sure to set your TAVILY_API_KEY environment variable to enable this feature.

Enable with --chat to transform ra-aid into an interactive assistant that guides you through research and implementation tasks. Have a natural conversation about what you want to build, explore options together, and dispatch work - all while maintaining context of your discussion. Perfect for when you want to think through problems collaboratively rather than just executing commands.

RA.Aid includes a modern server with web interface that provides:

- Beautiful dark-themed chat interface

- Real-time streaming of command output

- Request history with quick resubmission

- Responsive design that works on all devices

To launch the server with web interface:

# Start with default settings (0.0.0.0:1818)

ra-aid --server

# Specify custom host and port

ra-aid --server --server-host 127.0.0.1 --server-port 3000

Command line options for server with web interface:

- --server: Launch the server with web interface

- --server-host: Host to listen on (default: 0.0.0.0)

- --server-port: Port to listen on (default: 1818)

After starting the server, open your web browser to the displayed URL (e.g., http://localhost:1818). The interface provides:

- Left sidebar showing request history

- Main chat area with real-time output

- Input box for typing requests

- Automatic reconnection handling

- Error reporting and status messages

All ra-aid commands sent through the web interface automatically use cowboy mode for seamless execution.

You can interrupt the agent at any time by pressing Ctrl-C. This pauses the agent, allowing you to provide feedback, adjust your instructions, or steer the execution in a new direction. Press Ctrl-C again if you want to completely exit the program.
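The two-step Ctrl-C behavior (first press pauses, second press exits) can be approximated with a small signal handler. This is a sketch of the general pattern, not RA.Aid's implementation; `InterruptState`, `install`, and the exit code 130 (a common shell convention for interrupted processes) are assumptions.

```python
import signal


class InterruptState:
    """Hypothetical state holder: first Ctrl-C pauses, second one exits."""

    def __init__(self) -> None:
        self.paused = False

    def handle_sigint(self, signum, frame) -> None:
        if self.paused:
            # Second Ctrl-C while already paused: leave the program.
            raise SystemExit(130)
        # First Ctrl-C: pause so the user can give feedback.
        self.paused = True

    def resume(self) -> None:
        # Called once the user has provided new instructions.
        self.paused = False


def install(state: InterruptState) -> None:
    """Register the handler; must be called from the main thread."""
    signal.signal(signal.SIGINT, state.handle_sigint)
```

An agent loop would check `state.paused` between steps, prompt the user for feedback, and then call `resume()`.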

The --cowboy-mode flag enables automated shell command execution without confirmation prompts. This is useful for:

- CI/CD pipelines

- Automated testing environments

- Batch processing operations

- Scripted workflows

ra-aid -m "Update all deprecated API calls" --cowboy-mode

- Cowboy mode skips confirmation prompts for shell commands

- Always use in version-controlled repositories

- Ensure you have a clean working tree before running

- Review changes in git diff before committing
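One way to enforce the clean-working-tree advice in scripted workflows is a small wrapper that refuses to start when `git status --porcelain` reports changes. This is a sketch, not part of RA.Aid; the helper names are hypothetical, and it assumes `git` and `ra-aid` are on the PATH.

```python
import subprocess


def is_clean_worktree(porcelain_output: str) -> bool:
    """`git status --porcelain` prints nothing when the tree is clean."""
    return porcelain_output.strip() == ""


def git_status_porcelain(repo_dir: str = ".") -> str:
    result = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    )
    return result.stdout


def run_cowboy(task: str, repo_dir: str = ".") -> int:
    """Hypothetical wrapper: run ra-aid in cowboy mode only on a clean tree."""
    if not is_clean_worktree(git_status_porcelain(repo_dir)):
        raise RuntimeError("Working tree is not clean; commit or stash first.")
    return subprocess.run(
        ["ra-aid", "-m", task, "--cowboy-mode"], cwd=repo_dir
    ).returncode
```

After the run, `git diff` shows exactly what the agent changed, since nothing else was pending beforehand.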

RA.Aid supports multiple AI providers and models. The default model is Anthropic's Claude 3.7 Sonnet (claude-3-7-sonnet-20250219).

When using the --use-aider flag, the programmer tool (aider) automatically selects its model based on your available API keys. It will use Claude models if ANTHROPIC_API_KEY is set, or fall back to OpenAI models if only OPENAI_API_KEY is available.

Note: The expert tool can be configured to use different providers (OpenAI, Anthropic, OpenRouter, Gemini) using the --expert-provider flag along with the corresponding EXPERT_*_API_KEY environment variables. Each provider requires its own API key set through the appropriate environment variable.

RA.Aid supports multiple providers through environment variables:

- ANTHROPIC_API_KEY: Required for the default Anthropic provider

- OPENAI_API_KEY: Required for OpenAI provider

- OPENROUTER_API_KEY: Required for OpenRouter provider

- DEEPSEEK_API_KEY: Required for DeepSeek provider

- OPENAI_API_BASE: Required for OpenAI-compatible providers along with OPENAI_API_KEY

- GEMINI_API_KEY: Required for Gemini provider
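The list above amounts to a lookup from provider to required variables, which a pre-flight check could use before launching a task. The sketch below is illustrative only; `REQUIRED_ENV` and `missing_env` are hypothetical names, and the mapping simply restates the list.

```python
from typing import Dict, List, Mapping, Tuple

# Provider name -> environment variables the list above marks as required.
REQUIRED_ENV: Dict[str, Tuple[str, ...]] = {
    "anthropic": ("ANTHROPIC_API_KEY",),
    "openai": ("OPENAI_API_KEY",),
    "openrouter": ("OPENROUTER_API_KEY",),
    "deepseek": ("DEEPSEEK_API_KEY",),
    "openai-compatible": ("OPENAI_API_BASE", "OPENAI_API_KEY"),
    "gemini": ("GEMINI_API_KEY",),
}


def missing_env(provider: str, env: Mapping[str, str]) -> List[str]:
    """Return the required variables that are unset or empty for a provider."""
    return [name for name in REQUIRED_ENV[provider] if not env.get(name)]


# An OpenAI-compatible setup with only the key set still lacks the base URL.
print(missing_env("openai-compatible", {"OPENAI_API_KEY": "k"}))
```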

Expert Tool Environment Variables:

- EXPERT_OPENAI_API_KEY: API key for expert tool using OpenAI provider

- EXPERT_ANTHROPIC_API_KEY: API key for expert tool using Anthropic provider

- EXPERT_OPENROUTER_API_KEY: API key for expert tool using OpenRouter provider

- EXPERT_OPENAI_API_BASE: Base URL for expert tool using OpenAI-compatible provider

- EXPERT_GEMINI_API_KEY: API key for expert tool using Gemini provider

- EXPERT_DEEPSEEK_API_KEY: API key for expert tool using DeepSeek provider

You can set these permanently in your shell's configuration file (e.g., ~/.bashrc or ~/.zshrc):

# Default provider (Anthropic)

export ANTHROPIC_API_KEY=your_api_key_here

# For OpenAI features and expert tool

export OPENAI_API_KEY=your_api_key_here

# For OpenRouter provider

export OPENROUTER_API_KEY=your_api_key_here

# For OpenAI-compatible providers

export OPENAI_API_BASE=your_api_base_url

# For Gemini provider

export GEMINI_API_KEY=your_api_key_here

- Using Anthropic (Default)

  # Uses default model (claude-3-7-sonnet-20250219)

  ra-aid -m "Your task"

  # Or explicitly specify:

  ra-aid -m "Your task" --provider anthropic --model claude-3-5-sonnet-20241022

- Using OpenAI

  ra-aid -m "Your task" --provider openai --model gpt-4o

- Using OpenRouter

  ra-aid -m "Your task" --provider openrouter --model mistralai/mistral-large-2411

- Using DeepSeek

  # Direct DeepSeek provider (requires DEEPSEEK_API_KEY)

  ra-aid -m "Your task" --provider deepseek --model deepseek-reasoner

  # DeepSeek via OpenRouter

  ra-aid -m "Your task" --provider openrouter --model deepseek/deepseek-r1

- Configuring Expert Provider

  The expert tool is used by the agent for complex logic and debugging tasks. It can be configured to use different providers (OpenAI, Anthropic, OpenRouter, Gemini, openai-compatible) using the --expert-provider flag along with the corresponding EXPERT_*_API_KEY environment variables.

  # Use Anthropic for expert tool

  export EXPERT_ANTHROPIC_API_KEY=your_anthropic_api_key

  ra-aid -m "Your task" --expert-provider anthropic --expert-model claude-3-5-sonnet-20241022

  # Use OpenRouter for expert tool

  export OPENROUTER_API_KEY=your_openrouter_api_key

  ra-aid -m "Your task" --expert-provider openrouter --expert-model mistralai/mistral-large-2411

  # Use DeepSeek for expert tool

  export DEEPSEEK_API_KEY=your_deepseek_api_key

  ra-aid -m "Your task" --expert-provider deepseek --expert-model deepseek-reasoner

  # Use default OpenAI for expert tool

  export EXPERT_OPENAI_API_KEY=your_openai_api_key

  ra-aid -m "Your task" --expert-provider openai --expert-model o1

  # Use Gemini for expert tool

  export EXPERT_GEMINI_API_KEY=your_gemini_api_key

  ra-aid -m "Your task" --expert-provider gemini --expert-model gemini-2.0-flash-thinking-exp-1219

Aider-specific environment variables you can add:

- AIDER_FLAGS: Optional comma-separated list of flags to pass to the underlying aider tool (e.g., "yes-always,dark-mode")

# Optional: Configure aider behavior

export AIDER_FLAGS="yes-always,dark-mode,no-auto-commits"

Note: For AIDER_FLAGS, you can specify flags with or without the leading --. Multiple flags should be comma-separated, and spaces around flags are automatically handled. For example, both "yes-always,dark-mode" and "--yes-always, --dark-mode" are valid.
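The parsing rules in the note (comma-separated, optional leading --, surrounding spaces ignored) can be expressed in a few lines. This is a sketch of one way to normalize such a value, not RA.Aid's actual parser; `parse_aider_flags` is a hypothetical name, and emitting each flag with a `--` prefix is an assumption about the desired output form.

```python
from typing import List


def parse_aider_flags(raw: str) -> List[str]:
    """Normalize a comma-separated flag string into a list of --flags."""
    flags = []
    for part in raw.split(","):
        name = part.strip().lstrip("-")  # tolerate spaces and a leading --
        if name:                         # skip empty entries such as "a,,b"
            flags.append(f"--{name}")
    return flags


# Both spellings from the note normalize to the same list.
print(parse_aider_flags("yes-always,dark-mode"))
print(parse_aider_flags("--yes-always, --dark-mode"))
```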

Important Notes:

- Performance varies between models. The default Claude 3.7 Sonnet model currently provides the best and most reliable results.

- Model configuration is done via command line arguments: --provider and --model

- The --model argument is required for all providers except Anthropic (which defaults to claude-3-7-sonnet-20250219)

More information is available in our Open Models Setup guide.

RA.Aid implements a three-stage architecture for handling development and research tasks:

- Research Stage:

  - Gathers information and context

  - Analyzes requirements

  - Identifies key components and dependencies

- Planning Stage:

  - Develops detailed implementation plans

  - Breaks down tasks into manageable steps

  - Identifies potential challenges and solutions

- Implementation Stage:

  - Executes planned tasks

  - Generates code or documentation

  - Performs necessary system operations
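The three stages listed above can be pictured as a simple pipeline over shared state. The sketch below is a toy illustration with placeholder stage bodies; it does not reflect RA.Aid's real agents or LangGraph wiring, and every name in it (`TaskState`, `run_pipeline`, and the stage functions) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskState:
    task: str
    notes: List[str] = field(default_factory=list)    # filled by research
    plan: List[str] = field(default_factory=list)     # filled by planning
    results: List[str] = field(default_factory=list)  # filled by implementation


def research(state: TaskState) -> None:
    # Placeholder: a real agent would inspect the codebase and gather context.
    state.notes.append(f"context gathered for: {state.task}")


def plan(state: TaskState) -> None:
    # Placeholder: break the task into discrete steps based on the notes.
    state.plan = [f"step {i + 1} for {state.task}" for i in range(2)]


def implement(state: TaskState) -> None:
    # Execute each planned step sequentially.
    for step in state.plan:
        state.results.append(f"done: {step}")


def run_pipeline(task: str, research_only: bool = False) -> TaskState:
    state = TaskState(task)
    research(state)
    if research_only:  # analogous in spirit to stopping after research
        return state
    plan(state)
    implement(state)
    return state


print(run_pipeline("add connection pooling").results)
```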

- Console Module (console/): Handles console output formatting and user interaction

- Processing Module (proc/): Manages interactive processing and workflow control

- Text Module (text/): Provides text processing and manipulation utilities

- Tools Module (tools/): Contains various utility tools for file operations, search, and more

Core dependencies:

- langchain-anthropic: LangChain integration with Anthropic's Claude

- tavily-python: Tavily API client for web research

- langgraph: Graph-based workflow management

- rich>=13.0.0: Terminal formatting and output

- GitPython==3.1.41: Git repository management

- fuzzywuzzy==0.18.0: Fuzzy string matching

- python-Levenshtein==0.23.0: Fast string matching

- pathspec>=0.11.0: Path specification utilities

Development dependencies:

- pytest>=7.0.0: Testing framework

- pytest-timeout>=2.2.0: Test timeout management

- Clone the repository:

  git clone https://github.com/ai-christianson/RA.Aid.git

  cd RA.Aid

- Create and activate a virtual environment:

  python -m venv venv

  source venv/bin/activate # On Windows use `venv\Scripts\activate`

- Install development dependencies:

  pip install -e ".[dev]"

- Run tests:

  python -m pytest

Contributions are welcome! Please follow these steps:

- Fork the repository

- Create a feature branch:

  git checkout -b feature/your-feature-name

- Make your changes and commit:

  git commit -m 'Add some feature'

- Push to your fork:

  git push origin feature/your-feature-name

- Open a Pull Request

- Follow PEP 8 style guidelines

- Add tests for new features

- Update documentation as needed

- Keep commits focused and commit messages clear

- Ensure all tests pass before submitting PR

More information is available in our Contributing Guide.

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.

Copyright (c) 2024 AI Christianson

- Issues: Please report bugs and feature requests on our Issue Tracker

- Repository: https://github.com/ai-christianson/RA.Aid

- Documentation: https://github.com/ai-christianson/RA.Aid#readme

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for RA.Aid

Similar Open Source Tools

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

hound

Hound is a security audit automation pipeline for AI-assisted code review that mirrors how expert auditors think, learn, and collaborate. It features graph-driven analysis, sessionized audits, provider-agnostic models, belief system and hypotheses, precise code grounding, and adaptive planning. The system employs a senior/junior auditor pattern where the Scout actively navigates the codebase and annotates knowledge graphs while the Strategist handles high-level planning and vulnerability analysis. Hound is optimized for small-to-medium sized projects like smart contract applications and is language-agnostic.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

model-compose

model-compose is an open-source, declarative workflow orchestrator inspired by docker-compose. It lets you define and run AI model pipelines using simple YAML files. Effortlessly connect external AI services or run local AI models within powerful, composable workflows. Features include declarative design, multi-workflow support, modular components, flexible I/O routing, streaming mode support, and more. It supports running workflows locally or serving them remotely, Docker deployment, environment variable support, and provides a CLI interface for managing AI workflows.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

agents-starter

A starter template for building AI-powered chat agents using Cloudflare's Agent platform, powered by agents-sdk. It provides a foundation for creating interactive chat experiences with AI, complete with a modern UI and tool integration capabilities. Features include interactive chat interface with AI, built-in tool system with human-in-the-loop confirmation, advanced task scheduling, dark/light theme support, real-time streaming responses, state management, and chat history. Prerequisites include a Cloudflare account and OpenAI API key. The project structure includes components for chat UI implementation, chat agent logic, tool definitions, and helper functions. Customization guide covers adding new tools, modifying the UI, and example use cases for customer support, development assistant, data analysis assistant, personal productivity assistant, and scheduling assistant.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

fraim

Fraim is an AI-powered toolkit designed for security engineers to enhance their workflows by leveraging AI capabilities. It offers solutions to find, detect, fix, and flag vulnerabilities throughout the development lifecycle. The toolkit includes features like Risk Flagger for identifying risks in code changes, Code Security Analysis for context-aware vulnerability detection, and Infrastructure as Code Analysis for spotting misconfigurations in cloud environments. Fraim can be run as a CLI tool or integrated into Github Actions, making it a versatile solution for security teams and organizations looking to enhance their security practices with AI technology.

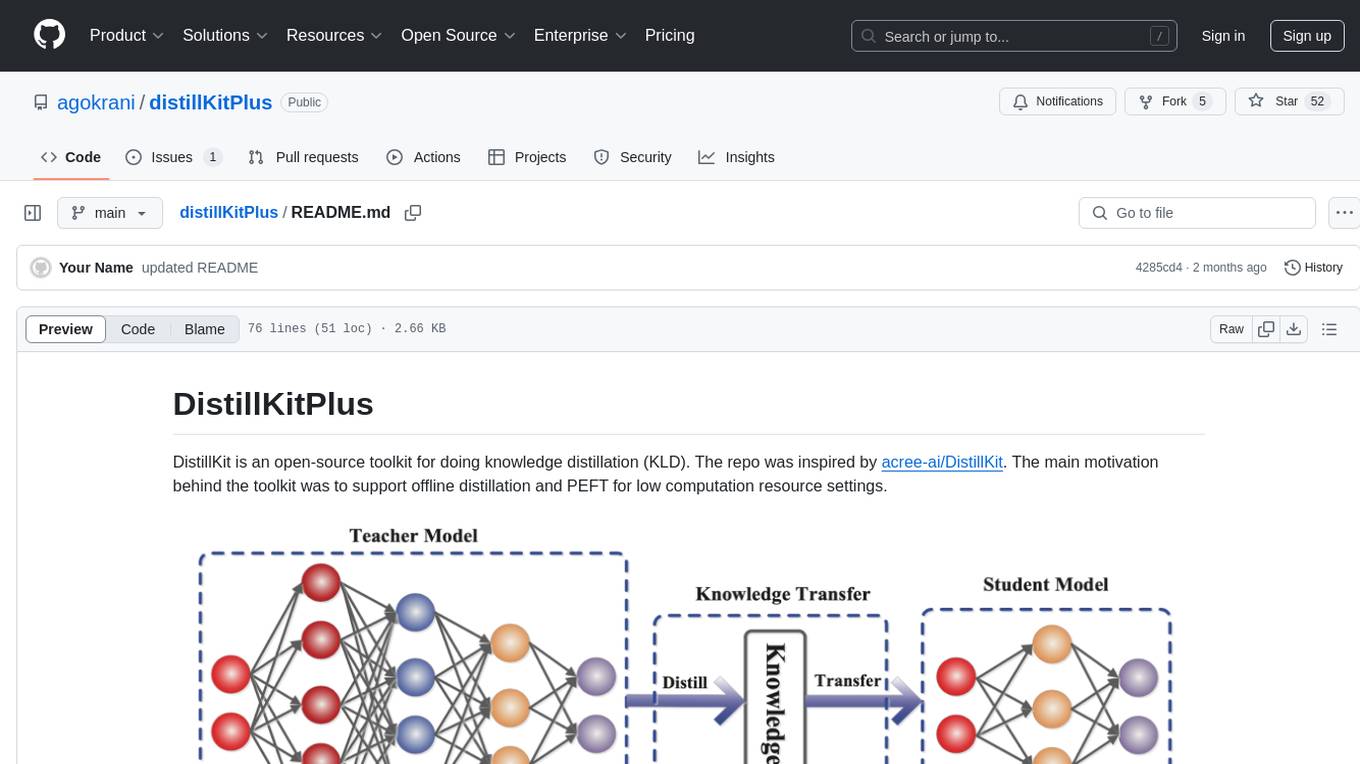

distillKitPlus

DistillKitPlus is an open-source toolkit designed for knowledge distillation (KLD) in low computation resource settings. It supports logit distillation, pre-computed logits for memory-efficient training, LoRA fine-tuning integration, and model quantization for faster inference. The toolkit utilizes a JSON configuration file for project, dataset, model, tokenizer, training, distillation, LoRA, and quantization settings. Users can contribute to the toolkit and contact the developers for technical questions or issues.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

ChatOpsLLM

ChatOpsLLM is a project designed to empower chatbots with effortless DevOps capabilities. It provides an intuitive interface and streamlined workflows for managing and scaling language models. The project incorporates robust MLOps practices, including CI/CD pipelines with Jenkins and Ansible, monitoring with Prometheus and Grafana, and centralized logging with the ELK stack. Developers can find detailed documentation and instructions on the project's website.

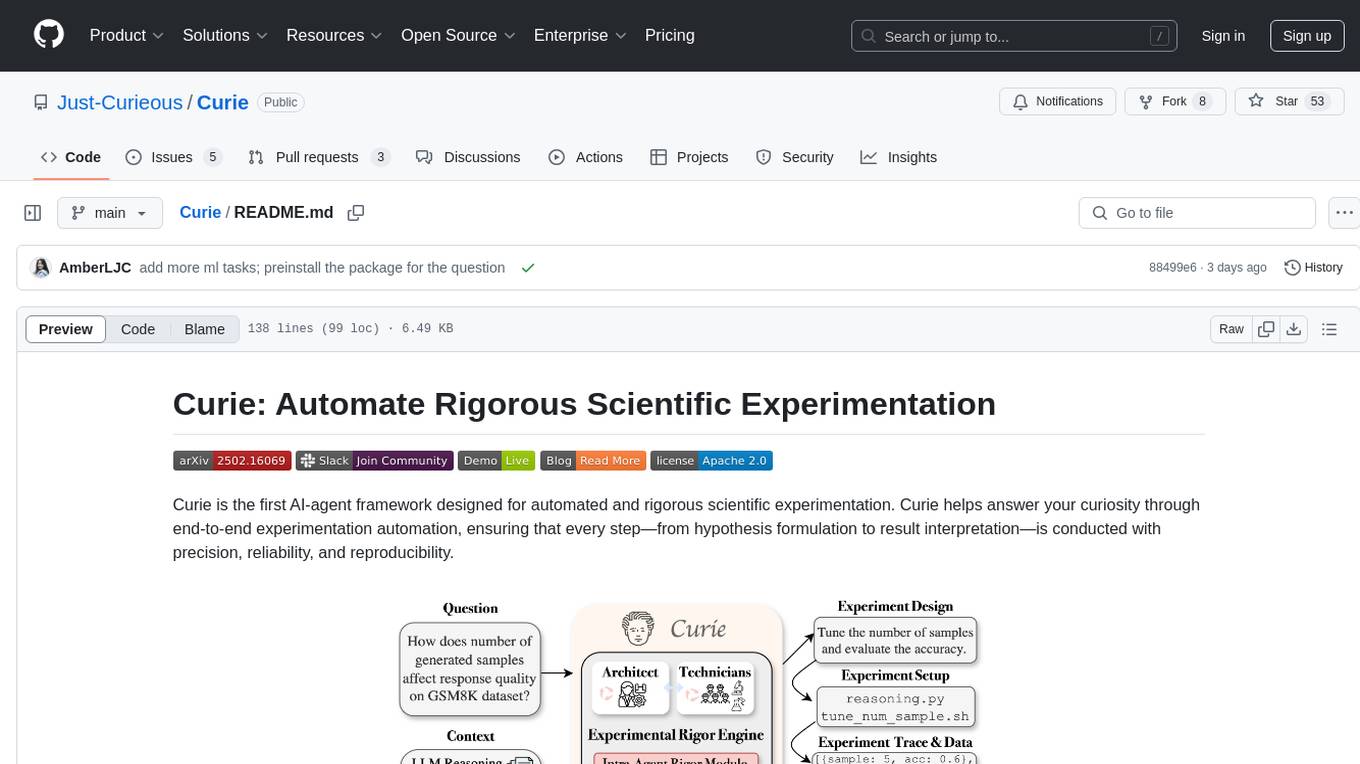

Curie

Curie is an AI-agent framework designed for automated and rigorous scientific experimentation. It automates end-to-end workflow management, ensures methodical procedure, reliability, and interpretability, and supports ML research, system analysis, and scientific discovery. It provides a benchmark with questions from 4 Computer Science domains. Users can customize experiment agents and adapt to their own tasks by configuring base_config.json. Curie is suitable for hyperparameter tuning, algorithm behavior analysis, system performance benchmarking, and automating computational simulations.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, with integrations for LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions; vision, reasoning, and audio models; a voice-in and voice-out chat mode; a text editor interface; markdown rendering; session management; an instruction prompt manager; command-line completion; file picker dialogs; color scheme personalization; and stdin and text file input. It is compatible with Linux, FreeBSD, macOS, and Termux.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

For similar tasks

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

gitingest

GitIngest is a tool that turns any Git repository into a prompt-friendly text digest for LLMs. It generates the digest from a Git repository URL or a local directory, formats the output for use in LLM prompts, and reports statistics about the file and directory structure, the size of the extract, and the token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. It is built with Tailwind CSS for the frontend, FastAPI for the backend, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions are welcome, and the project aims to be beginner-friendly for first-time contributors, with a simple Python and HTML codebase.

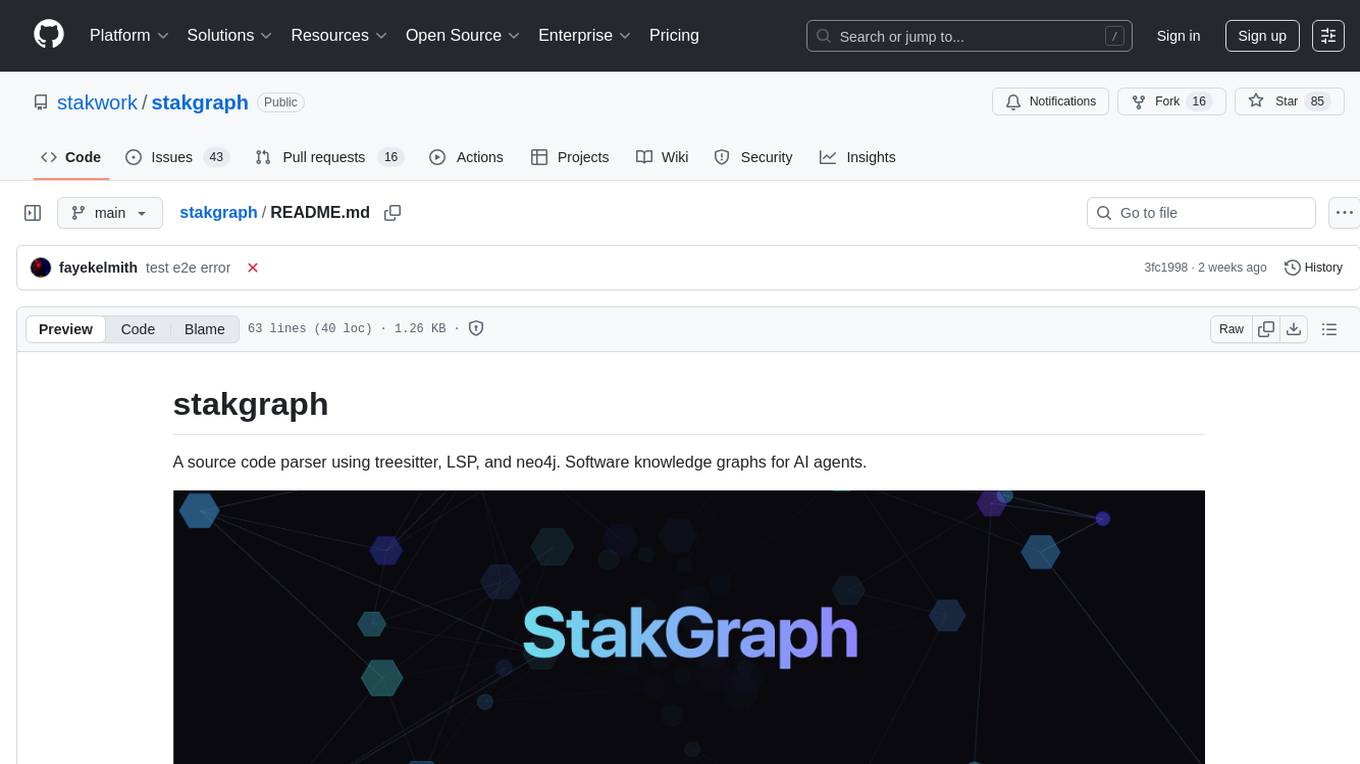

stakgraph

Stakgraph is a source code parser that uses tree-sitter, LSP, and Neo4j to create software knowledge graphs for AI agents. It supports languages and frameworks such as Golang, React, Ruby on Rails, TypeScript, Python, Swift, Kotlin, Rust, Java, Angular, and Svelte. Users can parse repositories; link endpoints, requests, and E2E tests; and ingest data to generate comprehensive graphs. The tool uses the Language Server Protocol to connect nodes in the graph, which supports integration with and analysis of codebases.

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates generating LLM prompts from codebases of any size, customizing prompt generation with Handlebars templates, respecting .gitignore, filtering and excluding files using glob patterns, displaying token count, including Git diff output, copying prompt to clipboard, saving prompt to an output file, excluding files and folders, adding line numbers to source code blocks, and more. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.
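The general idea of turning a codebase into one prompt can be illustrated with a minimal Python sketch. This is not code2prompt's implementation or interface: the function names `build_prompt` and `rough_token_count`, the section layout, and the whitespace-based token estimate are all assumptions made for illustration. It walks a directory, keeps files that match include globs and do not match exclude globs, and joins a source tree listing with the file contents.

```python
import fnmatch
import os


def build_prompt(root, include=("*.py",), exclude=()):
    """Join matching files under `root` into one prompt string (hypothetical helper)."""
    tree_lines = []
    sections = []
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # make the traversal order deterministic
        for name in sorted(filenames):
            full_path = os.path.join(dirpath, name)
            # Use forward slashes so glob patterns behave the same on every OS.
            rel = os.path.relpath(full_path, root).replace(os.sep, "/")
            if not any(fnmatch.fnmatch(rel, pat) for pat in include):
                continue
            if any(fnmatch.fnmatch(rel, pat) for pat in exclude):
                continue
            with open(full_path, encoding="utf-8") as fh:
                body = fh.read()
            tree_lines.append(rel)
            sections.append(f"## {rel}\n{body}")
    tree = "Source tree:\n" + "\n".join(tree_lines)
    return tree + "\n\n" + "\n\n".join(sections)


def rough_token_count(text):
    """Whitespace-based estimate only; a real tokenizer will report different numbers."""
    return len(text.split())
```

Calling `build_prompt(".", include=("*.py",), exclude=("tests/*",))` would return a single string for the current directory, and `rough_token_count` gives a coarse size check before sending it to a model.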

ClashRoyaleBuildABot

Clash Royale Build-A-Bot is a project that allows users to build their own bot to play Clash Royale. It provides a state generator that returns detailed information about the game. The project includes tutorials for setting up the environment, building a basic bot, and understanding state generation. Recent updates include replacing the YOLOv5 unit model with a YOLOv8 model and improving features such as placement and elixir management. The roadmap includes plans to label more images of diverse cards, add a tracking layer for unit predictions, publish tutorials on Q-learning and imitation learning, release the YOLOv5 training notebook, implement chest opening and card upgrading features, and create a leaderboard for the best bots developed with this repository.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

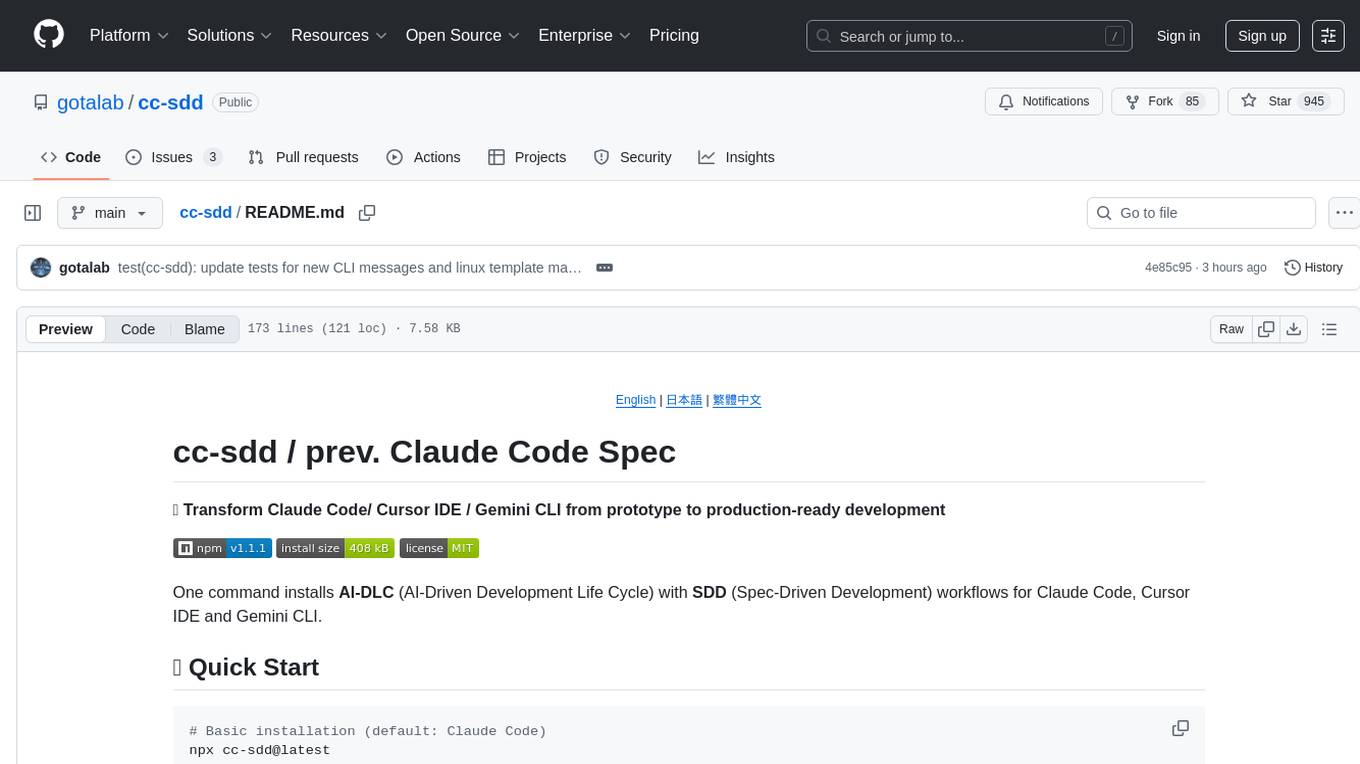

cc-sdd

The cc-sdd repository provides a tool for the AI-Driven Development Life Cycle (AI-DLC) with Spec-Driven Development workflows for Claude Code and Gemini CLI. It includes slash commands, Project Memory for AI learning, a structured AI-DLC workflow with quality gates, the Spec-Driven Development methodology, and Kiro IDE compatibility. It is suited to feature development, code reviews, technical planning, and maintaining development standards. The tool supports multiple coding agents, multiple languages, and cross-platform usage, and offers advanced options such as language and OS selection, previewing changes, safe updates with backups, and a custom specs directory.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries, and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.