aidermacs

Emacs AI Pair Programming Solution

Stars: 376

Aidermacs is an AI pair programming tool for Emacs that integrates Aider, a powerful open-source AI pair programming tool. It provides top performance on the SWE Bench, support for multi-file edits, real-time file synchronization, and broad language support. Aidermacs delivers an Emacs-centric experience with features like intelligent model selection, flexible terminal backend support, smarter syntax highlighting, enhanced file management, and streamlined transient menus. It thrives on community involvement, encouraging contributions, issue reporting, idea sharing, and documentation improvement.

README:

Aidermacs brings AI-powered development to Emacs by integrating Aider, one of the most powerful open-source AI pair programming tools. If you're missing Cursor but prefer living in Emacs, Aidermacs provides similar AI capabilities while staying true to Emacs workflows.

- Intelligent model selection with multiple backends

- Built-in Ediff integration for AI-generated changes

- Enhanced file management from Emacs

- Great customizability and flexible ways to add content

Here's what the community is saying about Aidermacs:

"Are you using aidermacs? For me superior to cursor." - u/berenddeboer

"This is amazing... every time I upgrade my packages I see your new commits. I feel this the authentic aider for emacs" - u/wchmbo

"Between Aidermacs and Gptel it's wild how bleeding edge Emacs is with this stuff. My workplace is exploring MCP registries and even clients that are all the rage (E.g Cursor) lag behind what I can do with mcp.el and gptel for tool use." - u/no_good_names_avail

"This looks amazing... I have been using ellama with local llms, looks like that will work here too. Great stuff!!" - u/lugpocalypse

"Honestly huge fan of this. Thank you for the updates!" - u/ieoa

- Requirements

- Download Aidermacs through Melpa or Non-GNU Elpa, or clone manually

- Modify this sample config and place it in your Emacs init.el:

(use-package aidermacs
  :bind (("C-c a" . aidermacs-transient-menu))
  :config
  ;; Set API keys in .bashrc, where aider picks them up automatically, or in elisp:
  (setenv "ANTHROPIC_API_KEY" "sk-...")
  ;; Define my-get-openrouter-api-key yourself elsewhere for security reasons
  (setenv "OPENROUTER_API_KEY" (my-get-openrouter-api-key))
  :custom
  ;; See the Configuration section below
  (aidermacs-use-architect-mode t)
  (aidermacs-default-model "sonnet"))

- Open a project and run M-x aidermacs-transient-menu or C-c a (where you bind it)

- Add files and start coding with AI!
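The sample config calls my-get-openrouter-api-key without defining it. A minimal sketch of such a helper, assuming you keep the key in an auth-source file such as ~/.authinfo.gpg under the host name openrouter.ai. The function body and the host name are assumptions, not part of Aidermacs; define it earlier in init.el than the use-package form so it exists when :config runs.

```elisp
(require 'auth-source)

(defun my-get-openrouter-api-key ()
  "Return the OpenRouter API key from auth-source, or signal an error.
Hypothetical helper: assumes an ~/.authinfo.gpg line such as
  machine openrouter.ai login apikey password sk-or-..."
  (or (auth-source-pick-first-password :host "openrouter.ai")
      (user-error "No OpenRouter API key found in auth-source")))
```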

The main interface to Aidermacs is through its transient menu system (similar to Magit). Access it with:

M-x aidermacs-transient-menu

Or bind it to a key in your config:

(global-set-key (kbd "C-c a") 'aidermacs-transient-menu)

Once the transient menu is open, you can navigate and execute commands using the displayed keys. Here's a summary of the main menu structure:

- a: Start/Open Session (auto-detects project root)
- .: Start in Current Directory (good for monorepos)
- l: Clear Chat History
- s: Reset Session
- x: Exit Session

- 1: Code Mode
- 2: Chat/Ask Mode
- 3: Architect Mode
- 4: Help Mode

- ^: Show Last Commit (if auto-commits enabled)
- u: Undo Last Commit (if auto-commits enabled)
- R: Refresh Repo Map
- h: Session History
- o: Change Main Model
- ?: Aider Meta-level Help

- f: Add File (C-u: read-only)
- F: Add Current File
- d: Add From Directory (same type)
- w: Add From Window
- m: Add From Dired (marked)
- j: Drop File
- J: Drop Current File
- k: Drop From Dired (marked)
- K: Drop All Files
- S: Create Session Scratchpad
- G: Add File to Session
- A: List Added Files

- c: Code Change
- e: Question Code
- r: Architect Change
- q: General Question
- p: Question This Symbol
- g: Accept Proposed Changes
- i: Implement TODO
- t: Write Test
- T: Fix Test
- !: Debug Exception

The All File Actions and All Code Actions entries open submenus with more specialized commands. Use the displayed keys to navigate these submenus.

When using Aidermacs, you have the flexibility to decide which files the AI should read and edit. Here are some guidelines:

- Editable Files: Add files you want the AI to potentially edit. This grants the AI permission to both read and modify these files if necessary.

- Read-Only Files: If you want the AI to read a file without editing it, you can add it as read-only. In Aidermacs, all add file commands can be prefixed with C-u to specify read-only access.

- Session Scratchpads: Use the session scratchpads (S) to paste notes or documentation that will be fed to the AI as read-only.

- External Files: The "Add file to session" (G) command allows you to include files outside the current project (or files in .gitignore), as Aider doesn't automatically include these files in its context.

The AI can sometimes determine relevant files on its own, depending on the model and the context of the codebase. However, for precise control, it's often beneficial to manually specify files, especially when dealing with complex projects.

Aider encourages a collaborative approach, similar to working with a human co-worker. Sometimes the AI will need explicit guidance, while other times it can infer the necessary context on its own.

Aidermacs provides a minor mode that makes it easy to work with prompt files and other Aider-related files. When enabled, the minor mode provides these convenient keybindings:

- C-c C-n or C-<return>: Send line/region line-by-line
- C-c C-c: Send block/region as whole
- C-c C-z: Switch to Aidermacs buffer

The minor mode is automatically enabled for:

- .aider.prompt.org files (create with M-x aidermacs-open-prompt-file)
- .aider.chat.md files
- .aider.chat.history.md files
- .aider.input.history files

You can use the aidermacs-before-run-backend-hook to run custom setup code before starting the Aider backend. This is particularly useful for:

- Setting environment variables

- Injecting secrets

- Performing any other pre-run configuration

Example usage to securely set an OpenAI API key from password-store:

(add-hook 'aidermacs-before-run-backend-hook
          (lambda ()
            (setenv "OPENAI_API_KEY" (password-store-get "code/openai_api_key"))))

This approach keeps sensitive information out of your dotfiles while still making it available to Aidermacs.
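Using a named function instead of a lambda makes the hook easy to inspect or remove later with remove-hook, and lets you skip the lookup when the variable is already exported from your shell. A sketch reusing the password-store entry from the example above; the function name my-aidermacs-ensure-openai-key is hypothetical.

```elisp
(require 'password-store)

(defun my-aidermacs-ensure-openai-key ()
  "Export OPENAI_API_KEY from password-store unless it is already set.
Hypothetical helper for `aidermacs-before-run-backend-hook'."
  (unless (getenv "OPENAI_API_KEY")
    (setenv "OPENAI_API_KEY" (password-store-get "code/openai_api_key"))))

(add-hook 'aidermacs-before-run-backend-hook #'my-aidermacs-ensure-openai-key)
```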

You can customize the default AI model used by Aidermacs by setting the aidermacs-default-model variable:

(setq aidermacs-default-model "sonnet")

This enables easy switching between different AI models without modifying the aidermacs-extra-args variable.

Note: This setting is overridden if an .aider.conf.yml file exists (see details).

Aidermacs offers intelligent model selection for solo (non-Architect) mode, automatically detecting and integrating with multiple AI providers:

- Automatically fetches available models from supported providers (OpenAI, Anthropic, DeepSeek, Google Gemini, OpenRouter)

- Caches model lists for quick access

- Supports both popular pre-configured models and dynamically discovered ones

- Handles API keys and authentication automatically from your .bashrc

- Provides model compatibility checking

The dynamic model selection is only for the solo (non-Architect) mode.

To change models in solo mode:

- Use M-x aidermacs-change-model or press o in the transient menu

- Select from either:

  - Popular pre-configured models (fast)

  - Dynamically fetched models from all supported providers (comprehensive)

The system will automatically filter models to only show ones that are:

- Supported by your current Aider version

- Available through your configured API keys

- Compatible with your current workflow

Aidermacs features an experimental mode using two specialized models for each coding task: an Architect model for reasoning and an Editor model for code generation. This approach has achieved state-of-the-art (SOTA) results on aider's code editing benchmark, as detailed in this blog post.

To enable this mode, set aidermacs-use-architect-mode to t. You must also configure the aidermacs-architect-model variable to specify the model to use for the Architect role.

By default, the aidermacs-editor-model is the same as aidermacs-default-model. You only need to set aidermacs-editor-model if you want to use a different model for the Editor role.

When Architect mode is enabled, the aidermacs-default-model setting is ignored, and aidermacs-architect-model and aidermacs-editor-model are used instead.

(setq aidermacs-use-architect-mode t)

You can switch to it persistently with M-x aidermacs-switch-to-architect-mode (3 in aidermacs-transient-menu), or temporarily with M-x aidermacs-architect-this-code (r in aidermacs-transient-menu).

You can configure each model independently:

;; Default model used for all modes unless overridden
(setq aidermacs-default-model "sonnet")
;; Optional: Set specific model for architect reasoning
(setq aidermacs-architect-model "deepseek/deepseek-reasoner")
;; Optional: Set specific model for code generation
(setq aidermacs-editor-model "deepseek/deepseek-chat")

The model hierarchy works as follows:

- When Architect mode is enabled:

  - The Architect model handles high-level reasoning and solution design

  - The Editor model executes the actual code changes

- When Architect mode is disabled, only aidermacs-default-model is used

- You can configure specific models or let them automatically use the default model

Models will reflect changes to aidermacs-default-model unless they've been explicitly set to a different value.

Note: These settings are overridden if an .aider.conf.yml file exists (see details).
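The hierarchy above can be summarised as a small pure function. This is a hypothetical illustration of the documented behaviour, not Aidermacs's own code; it treats an unset Architect or Editor model (nil here) as following aidermacs-default-model, per the statement that models reflect the default unless explicitly set.

```elisp
(defun my-aidermacs-effective-models (architect-mode default architect editor)
  "Return a plist of the models used, following the rules described above.
Hypothetical helper for illustration only.  ARCHITECT and EDITOR are nil
when not explicitly set, in which case they fall back to DEFAULT."
  (if architect-mode
      (list :architect (or architect default)
            :editor (or editor default))
    (list :main default)))

;; Architect mode with only the Architect model overridden:
(my-aidermacs-effective-models t "sonnet" "deepseek/deepseek-reasoner" nil)
;; => (:architect "deepseek/deepseek-reasoner" :editor "sonnet")

;; Solo mode: only the default model matters.
(my-aidermacs-effective-models nil "sonnet" "deepseek/deepseek-reasoner" nil)
;; => (:main "sonnet")
```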

The Weak model is used for commit messages (if you have aidermacs-auto-commits set to t) and chat history summarization (its default depends on --model). You can customize it using:

;; Defaults to nil
(setq aidermacs-weak-model "deepseek/deepseek-chat")

You can change the Weak model during a session by using C-u o (aidermacs-change-model with a prefix argument). In most cases, you won't need to change this as Aider will automatically select an appropriate Weak model based on your main model.

Note: These settings are overridden if an .aider.conf.yml file exists (see details).

By default, Aidermacs requires explicit confirmation before applying changes proposed in Architect mode. This gives you a chance to review the AI's plan before any code is modified.

If you prefer to automatically accept all Architect mode changes without confirmation (similar to Aider's default behavior), you can enable this with:

(setq aidermacs-auto-accept-architect t)

Note: This setting is overridden if an .aider.conf.yml file exists (see details).

Choose your preferred terminal backend by setting aidermacs-backend:

vterm offers better terminal compatibility, while comint provides a simple, built-in option that remains fully compatible with Aidermacs.

;; Use vterm backend (default is comint)
(setq aidermacs-backend 'vterm)

Available backends:

- comint (default): Uses Emacs' built-in terminal emulation

- vterm: Leverages vterm for better terminal compatibility
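If you share one init file across machines where vterm may not be installed, the backend can be chosen at startup. A minimal sketch, assuming that finding vterm on load-path is a good enough signal; locate-library only looks for the Lisp file, so it does not guarantee that the vterm native module has been compiled.

```elisp
;; Use vterm only when the vterm package can be found on `load-path';
;; otherwise keep the built-in comint backend.
(setq aidermacs-backend (if (locate-library "vterm") 'vterm 'comint))
```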

The vterm backend will use the faces defined by your active Emacs theme to set the colors for aider. It tries to guess some reasonable color values based on your themes. In some cases this will not work perfectly; if text is unreadable for you, you can turn this off as follows:

;; Don't match Emacs theme colors
(setopt aidermacs-vterm-use-theme-colors nil)

You can customize the key used for multiline input: it lets you enter multiple lines without sending the command to Aider. Press RET as usual to send the command.

;; Comint backend:
(setq aidermacs-comint-multiline-newline-key "S-<return>")
;; Vterm backend:
(setq aidermacs-vterm-multiline-newline-key "S-<return>")

Aidermacs fully supports working with remote files through Emacs' Tramp mode. This allows you to use Aidermacs on files hosted on remote servers via SSH, Docker, and other protocols supported by Tramp.

When working with remote files:

- File paths are automatically localized for the remote system

- All Aidermacs features work seamlessly across local and remote files

- Edits are applied directly to the remote files

- Diffs and change reviews work as expected

Example usage:

;; Open a remote file via SSH
(find-file "/ssh:user@remotehost:/path/to/file.py")
;; Then start an Aidermacs session with M-x aidermacs-transient-menu;
;; it will automatically detect the remote context

Aidermacs makes it easy to reuse prompts through:

- Prompt History - Your previously used prompts are saved and can be quickly selected

- Common Prompts - A curated list of frequently used prompts for common tasks, defined in aidermacs-common-prompts

When entering a prompt, you can:

- Select from your history or common prompts using completion

- Still type custom prompts when needed

The prompt history and common prompts are available across all sessions.
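To add your own entries without replacing the curated list, you can append to aidermacs-common-prompts once the package has loaded. This sketch assumes the variable holds a plain list of prompt strings, which this README does not spell out; the two prompts are only examples.

```elisp
;; Assumes `aidermacs-common-prompts' is a list of prompt strings.
(with-eval-after-load 'aidermacs
  (dolist (prompt '("Add docstrings to the functions in this file"
                    "Explain the error handling in this module"))
    ;; The final argument t appends, keeping the built-in prompts first.
    (add-to-list 'aidermacs-common-prompts prompt t)))
```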

Control whether to show diffs for AI-generated changes with aidermacs-show-diff-after-change:

;; Enable/disable showing diffs after changes (default: t)

(setq aidermacs-show-diff-after-change t)

When enabled, Aidermacs will:

- Capture the state of files before AI edits

- Show diffs using Emacs' built-in ediff interface

- Allow you to review and accept/reject changes

Aider automatically commits AI-generated changes by default. We consider this behavior very intrusive, so we've disabled it. You can re-enable auto-commits by setting aidermacs-auto-commits to t:

;; Enable auto-commits

(setq aidermacs-auto-commits t)

With auto-commits disabled, you must manually commit changes using your preferred Git workflow.

Note: This setting is overridden if an .aider.conf.yml file exists (see details).

If these configurations aren't sufficient, the aidermacs-extra-args variable enables passing any Aider-supported command-line options.

See the Aider configuration documentation for a full list of available options.

;; Set the verbosity:

(add-to-list 'aidermacs-extra-args "--verbose")

These arguments will be appended to the Aider command when it is run. Note that the --model argument is automatically handled by aidermacs-default-model and should not be included in aidermacs-extra-args.
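When an option takes a value, the flag and its value must be separate list elements. Because add-to-list skips any element that is already present, it can silently drop a repeated value, so appending a list is a safer way to add several arguments at once. A sketch combining --verbose with the --thinking-tokens option this README describes for Sonnet 3.7; use it instead of, not in addition to, the add-to-list line above.

```elisp
(with-eval-after-load 'aidermacs
  ;; Each flag and its value are separate strings; `append' preserves
  ;; order and never drops elements, unlike `add-to-list'.
  (setq aidermacs-extra-args
        (append aidermacs-extra-args
                '("--verbose" "--thinking-tokens" "16k"))))
```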

Aidermacs supports project-specific configurations via .aider.conf.yml files. To enable this:

- Create a .aider.conf.yml in your home dir, project's root, or the current directory, defining your desired settings. See the Aider documentation for available options.

- Tell Aidermacs to use the config file in one of two ways:

;; Set the `aidermacs-config-file` variable in your Emacs config:
(setq aidermacs-config-file "/path/to/your/project/.aider.conf.yml")
;; *Or*, include the `--config` or `-c` flag in `aidermacs-extra-args`:
(setq aidermacs-extra-args '("--config" "/path/to/your/project/.aider.conf.yml"))

Note: You can also rely on Aider's default behavior of automatically searching for .aider.conf.yml in the home directory, project root, or current directory, in that order. In this case, you do not need to set aidermacs-config-file or include --config in aidermacs-extra-args.

- Important: When using a config file, all other Aidermacs configuration variables supplying an argument option (e.g., aidermacs-default-model, aidermacs-architect-model, aidermacs-use-architect-mode) are IGNORED. Aider will only use the settings specified in your .aider.conf.yml file. Do not attempt to combine these Emacs settings with a config file, as the results will be unpredictable.

- Precedence: Settings in .aider.conf.yml always take precedence when a config file is explicitly specified.

- Avoid Conflicts: When using a config file, do not include model-related arguments (like --model, --architect, etc.) in aidermacs-extra-args. Configure all settings within your .aider.conf.yml file.

Aider can work with Sonnet 3.7's new thinking tokens. You can now enable and configure thinking tokens more easily using the following methods:

- In-Chat Command: Use the /think-tokens command followed by the desired token budget. For example: /think-tokens 8k or /think-tokens 10000. Supported formats include 8096, 8k, 10.5k, and 0.5M.

- Command-Line Argument: Set the --thinking-tokens argument when starting Aidermacs. For example, you can add this to your aidermacs-extra-args:

(setq aidermacs-extra-args '("--thinking-tokens" "16k"))

These methods provide a more streamlined way to control thinking tokens without requiring manual configuration of .aider.model.settings.yml files.

Note: If you are using an .aider.conf.yml file, you can also set the thinking_tokens option there.

The .aider.prompt.org file is particularly useful for:

- Storing frequently used prompts

- Documenting common workflows

- Quick access to complex instructions

You can customize which files automatically enable the minor mode by configuring aidermacs-auto-mode-files:

(setq aidermacs-auto-mode-files
      '(".aider.prompt.org"
        ".aider.chat.md"
        ".aider.chat.history.md"
        ".aider.input.history"
        "my-custom-aider-file.org")) ; Add your own files

Please check Aider's FAQ for Aider-related questions.

Aidermacs can work with other model providers, including local ones: it supports any OpenAI-compatible API endpoint. Check the Aider documentation on Ollama and LiteLLM.

The code you add to the session is sent to the AI provider, so be mindful of sensitive code.

Aider currently supports only Python 3.12; you can install it with uv:

uv tool install --force --python python3.12 aider-chat@latest

If you encounter a proxy-related issue, such as an error indicating that the 'socksio' package is not installed, use:

uv tool install --force --python python3.12 aider-chat@latest --with 'httpx[socks]'

Then point aidermacs-program at the installed executable:

(setq aidermacs-program (expand-file-name "~/.local/bin/aider"))

Aidermacs thrives on community involvement. We believe collaborative development with user and contributor input creates the best software. We encourage you to:

- Contribute Code: Submit pull requests with bug fixes, new features, or improvements to existing functionality.

- Report Issues: Let us know about any bugs, unexpected behavior, or feature requests through GitHub Issues.

- Share Ideas: Participate in discussions and propose new ideas for making Aidermacs even better.

- Improve Documentation: Help us make the documentation clearer, more comprehensive, and easier to use.

Your contributions are essential for making Aidermacs the best AI pair programming tool in Emacs!

Alternative AI tools for aidermacs

Similar Open Source Tools

org-ai

org-ai is a minor mode for Emacs org-mode that provides access to generative AI models, including OpenAI API (ChatGPT, DALL-E, other text models) and Stable Diffusion. Users can use ChatGPT to generate text, have speech input and output interactions with AI, generate images and image variations using Stable Diffusion or DALL-E, and use various commands outside org-mode for prompting using selected text or multiple files. The tool supports syntax highlighting in AI blocks, auto-fill paragraphs on insertion, and offers block options for ChatGPT, DALL-E, and other text models. Users can also generate image variations, use global commands, and benefit from Noweb support for named source blocks.

llama.vim

llama.vim is a plugin that provides local LLM-assisted text completion for Vim users. It offers features such as auto-suggest on cursor movement, manual suggestion toggling, suggestion acceptance with Tab and Shift+Tab, control over text generation time, context configuration, ring context with chunks from open and edited files, and performance stats display. The plugin requires a llama.cpp server instance to be running and supports FIM-compatible models. It aims to be simple, lightweight, and provide high-quality and performant local FIM completions even on consumer-grade hardware.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, MacOS, and Termux for a responsive experience.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

gptel-aibo

gptel-aibo is an AI writing assistant system built on top of gptel. It helps users create and manage content in Emacs, including code, documentation, and novels. Users can interact with the Language Model (LLM) to receive suggestions and apply them easily. The tool provides features like sending requests, applying suggestions, and completing content at the current position based on context. Users can customize settings and face settings for a better user experience. gptel-aibo aims to enhance productivity and efficiency in content creation and management within Emacs environment.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

fish-ai

fish-ai is a tool that adds AI functionality to Fish shell. It can be integrated with various AI providers like OpenAI, Azure OpenAI, Google, Hugging Face, Mistral, or a self-hosted LLM. Users can transform comments into commands, autocomplete commands, and suggest fixes. The tool allows customization through configuration files and supports switching between contexts. Data privacy is maintained by redacting sensitive information before submission to the AI models. Development features include debug logging, testing, and creating releases.

abliteration

Abliteration is a tool that allows users to create abliterated models using transformers quickly and easily. It is not a tool for uncensorship, but rather for making models that will not explicitly refuse users. Users can clone the repository, install dependencies, and make abliterations using the provided commands. The tool supports adjusting parameters for stubborn models and offers various options for customization. Abliteration can be used for creating modified models for specific tasks or topics.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

For similar tasks

ai-code-interface.el

AI Code Interface is an Emacs package designed for AI-assisted software development, providing a uniform interface for various AI backends. It offers context-aware AI coding actions and seamless integration with AI-driven agile development workflows. The package supports multiple AI coding CLIs such as Claude Code, Gemini CLI, OpenAI Codex, GitHub Copilot CLI, Opencode, Grok CLI, Cursor CLI, Kiro CLI, CodeBuddy Code CLI, and Aider CLI. It aims to streamline the use of different AI tools within Emacs while maintaining a consistent user experience.

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

sourcegraph

Sourcegraph is a code search and navigation tool that helps developers read, write, and fix code in large, complex codebases. It provides features such as code search across all repositories and branches, code intelligence for navigation and refactoring, and the ability to fix and refactor code across multiple repositories at once.

continue

Continue is an open-source autopilot for VS Code and JetBrains that allows you to code with any LLM. With Continue, you can ask coding questions, edit code in natural language, generate files from scratch, and more. Continue is easy to use and can help you save time and improve your coding skills.

cody

Cody is a free, open-source AI coding assistant that can write and fix code, provide AI-generated autocomplete, and answer your coding questions. Cody fetches relevant code context from across your entire codebase to write better code that uses more of your codebase's APIs, impls, and idioms, with less hallucination.

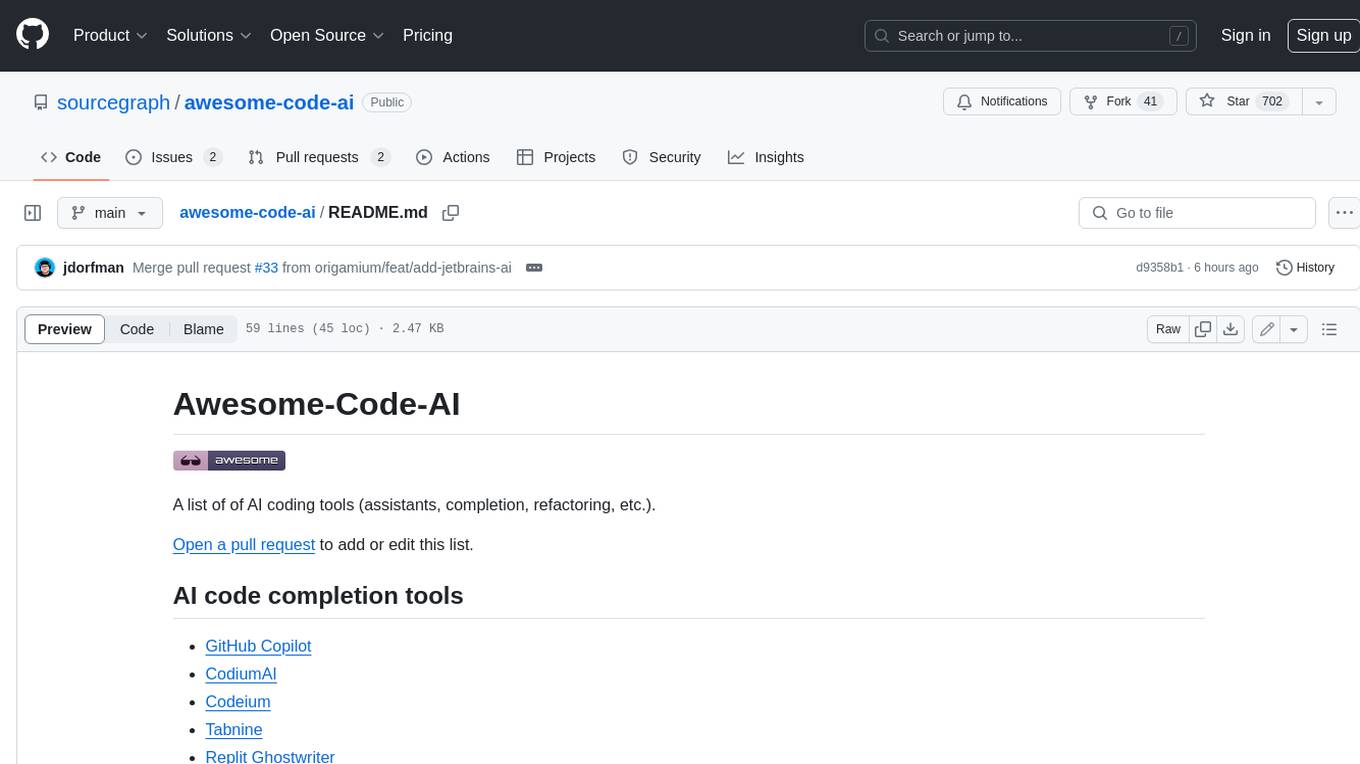

awesome-code-ai

A curated list of AI coding tools, including code completion, refactoring, and assistants. This list includes both open-source and commercial tools, as well as tools that are still in development. Some of the most popular AI coding tools include GitHub Copilot, CodiumAI, Codeium, Tabnine, and Replit Ghostwriter.

commanddash

Dash AI is an open-source coding assistant for Flutter developers. It is designed to not only write code but also run and debug it, allowing it to assist beyond code completion and automate routine tasks. Dash AI is powered by Gemini, integrated with the Dart Analyzer, and specifically tailored for Flutter engineers. The vision for Dash AI is to create a single-command assistant that can automate tedious development tasks, enabling developers to focus on creativity and innovation. It aims to assist with the entire process of engineering a feature for an app, from breaking down the task into steps to generating exploratory tests and iterating on the code until the feature is complete. To achieve this vision, Dash AI is working on providing LLMs with the same access and information that human developers have, including full contextual knowledge, the latest syntax and dependencies data, and the ability to write, run, and debug code. Dash AI welcomes contributions from the community, including feature requests, issue fixes, and participation in discussions. The project is committed to building a coding assistant that empowers all Flutter developers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.