podscript

Generate podcast transcripts using language and speech-to-text models

Stars: 149

Podscript is a tool designed to generate transcripts for podcasts and similar audio files using Language Model Models (LLMs) and Speech-to-Text (STT) APIs. It provides a command-line interface (CLI) for transcribing audio from various sources, including YouTube videos and audio files, using different speech-to-text services like Deepgram, Assembly AI, and Groq. Additionally, Podscript offers a web-based user interface for convenience. Users can configure keys for supported services, transcribe audio, and customize the transcription models. The tool aims to simplify the process of creating accurate transcripts for audio content.

README:

podscript is a tool to generate transcripts for podcasts (and other similar audio files), using LLMs and Speech-to-Text (STT) APIs.

> go install github.com/deepakjois/podscript@latest

> ~/go/bin/podscript --helpPodscript has a web based UI for convenience

> podscript web

Starting server on port 8080This runs a web server on at http://localhost:8080

For more advanced usage, see the CLI section below.

# Configure keys for supported services (OpenAI, Anthropic, Deepgram etc)

# and write them to $HOME/.podscript.toml

podscript configure

# Transcribe a YouTube Video by formatting and cleaning up autogenerated captions

podscript ytt https://www.youtube.com/watch?v=aO1-6X_f74M

# Transcribe audio from a URL using deepgram speech-to-text API

#

# Deepgram and AssemblyAI subcommands support `--from-url` for

# passing audio URLs, and `--from-file` to pass audio files.

podscript deepgram --from-url https://audio.listennotes.com/e/p/d6cc86364eb540c1a30a1cac2b77b82c/

# Transcribe audio from a file using Groq's whisper model

# Groq only supports audio files.

podscript groq --file huberman.mp3The ytt subommand uses the gpt-4o model by default. Use --model flag to set a different model. The following are supported:

- OpenAI

gpt-4ogpt-4o-mini

- Google Gemini

gemini-2.0-flash

- Llama (via Groq)

llama-3.3-70b-versatilellama-3.1-8b-instant

- Anthropic

claude-3-5-sonnet-20241022claude-3-5-haiku-20241022

- Anthropic via Amazon Bedrock

-

anthropic.claude-3-5-sonnet-20241022-v2:0(via AWS) -

anthropic.claude-3-5-haiku-20241022-v1:0(via AWS)

-

[!TIP] You can find the audio download link for a podcast on ListenNotes under the More menu

podscript supports the following Speech-To-Text (STT) APIs:

- Deepgram (which as of Jan 2025 provides $200 free signup credit!)

- Assembly AI (which as of Oct 2024 is free to use within your credit limits and they provide $50 credits free on signup).

- Groq (which as of Jul 2024 is in beta and free to use within your rate limits).

Want to contribute? Here's how to build and run the project locally:

- Install

npm: https://docs.npmjs.com/downloading-and-installing-node-js-and-npm?ref=meilisearch-blog - Install

caddy: https://caddyserver.com/docs/install

Build and run the frontend:

cd web/frontend

npm run devBuild the backend server and run it in dev mode:

go build -o podscript

./podscript web --devThis will start the backend server and expose only the API endpoints without bundling the frontend assets

To connect the two:

cd web

caddy runThis should setup everything such that you can visit http://localhost:8080 and have the frontend connected to the backend via the Caddy reverse proxy

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for podscript

Similar Open Source Tools

podscript

Podscript is a tool designed to generate transcripts for podcasts and similar audio files using Language Model Models (LLMs) and Speech-to-Text (STT) APIs. It provides a command-line interface (CLI) for transcribing audio from various sources, including YouTube videos and audio files, using different speech-to-text services like Deepgram, Assembly AI, and Groq. Additionally, Podscript offers a web-based user interface for convenience. Users can configure keys for supported services, transcribe audio, and customize the transcription models. The tool aims to simplify the process of creating accurate transcripts for audio content.

yoyak

Yoyak is a small CLI tool powered by LLM for summarizing and translating web pages. It provides shell completion scripts for bash, fish, and zsh. Users can set the model they want to use and summarize web pages with the 'yoyak summary' command. Additionally, translation to other languages is supported using the '-l' option with ISO 639-1 language codes. Yoyak supports various models for summarization and translation tasks.

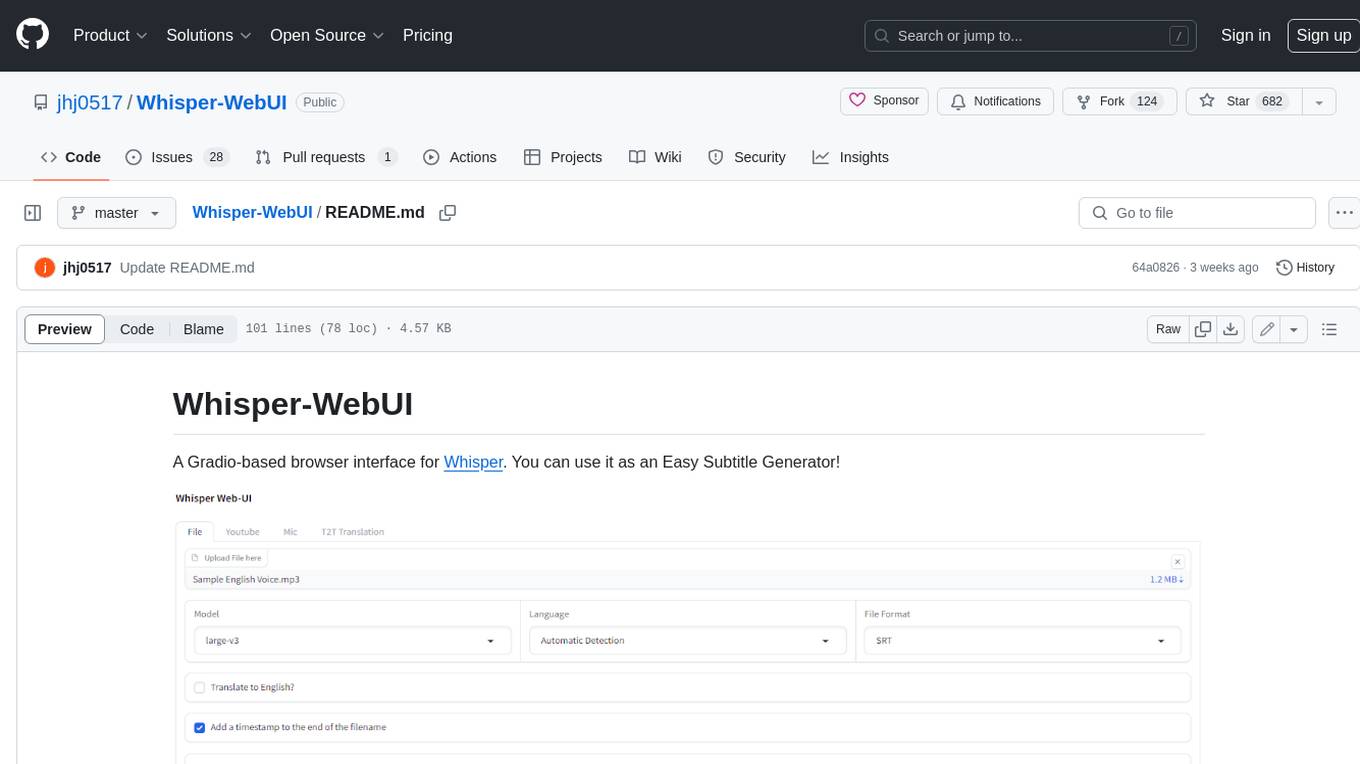

Whisper-WebUI

Whisper-WebUI is a Gradio-based browser interface for Whisper, serving as an Easy Subtitle Generator. It supports generating subtitles from various sources such as files, YouTube, and microphone. The tool also offers speech-to-text and text-to-text translation features, utilizing Facebook NLLB models and DeepL API. Users can translate subtitle files from other languages to English and vice versa. The project integrates faster-whisper for improved VRAM usage and transcription speed, providing efficiency metrics for optimized whisper models. Additionally, users can choose from different Whisper models based on size and language requirements.

openedai-speech

OpenedAI Speech is a free, private text-to-speech server compatible with the OpenAI audio/speech API. It offers custom voice cloning and supports various models like tts-1 and tts-1-hd. Users can map their own piper voices and create custom cloned voices. The server provides multilingual support with XTTS voices and allows fixing incorrect sounds with regex. Recent changes include bug fixes, improved error handling, and updates for multilingual support. Installation can be done via Docker or manual setup, with usage instructions provided. Custom voices can be created using Piper or Coqui XTTS v2, with guidelines for preparing audio files. The tool is suitable for tasks like generating speech from text, creating custom voices, and multilingual text-to-speech applications.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

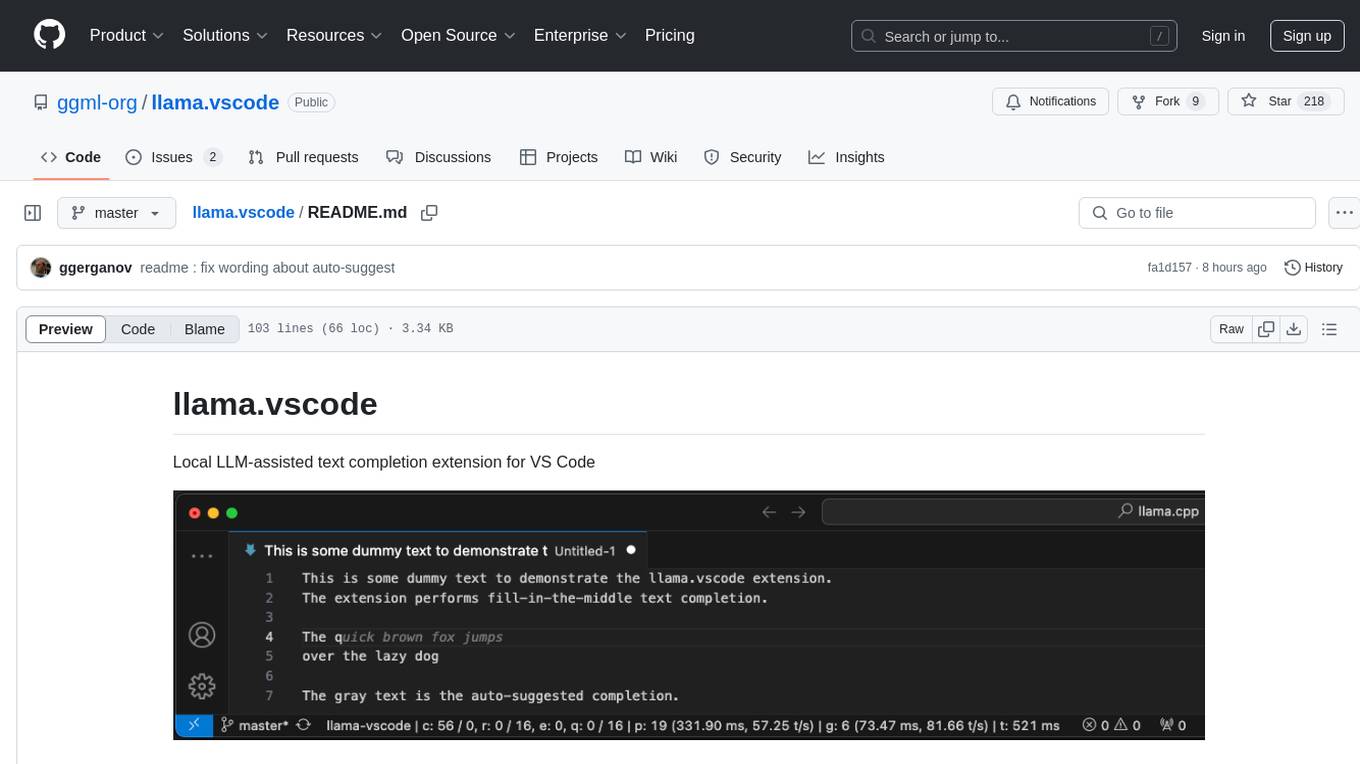

llama.vscode

llama.vscode is a local LLM-assisted text completion extension for Visual Studio Code. It provides auto-suggestions on input, allows accepting suggestions with shortcuts, and offers various features to enhance text completion. The extension is designed to be lightweight and efficient, enabling high-quality completions even on low-end hardware. Users can configure the scope of context around the cursor and control text generation time. It supports very large contexts and displays performance statistics for better user experience.

joinly

joinly.ai is a connector middleware designed to enable AI agents to actively participate in video calls, providing essential meeting tools for AI agents to perform tasks and interact in real time. It supports live interaction, conversational flow, cross-platform compatibility, bring-your-own-LLM, and choose-your-preferred-TTS/STT services. The tool is 100% open-source, self-hosted, and privacy-first, aiming to make meetings accessible to AI agents by joining and participating in video calls.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, MacOS, and Termux for a responsive experience.

rclip

rclip is a command-line photo search tool powered by the OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, enables deployment of conversational service robots in minutes, integrates diverse knowledge bases, offers flexible configuration options, and features an attractive user interface.

aidermacs

Aidermacs is an AI pair programming tool for Emacs that integrates Aider, a powerful open-source AI pair programming tool. It provides top performance on the SWE Bench, support for multi-file edits, real-time file synchronization, and broad language support. Aidermacs delivers an Emacs-centric experience with features like intelligent model selection, flexible terminal backend support, smarter syntax highlighting, enhanced file management, and streamlined transient menus. It thrives on community involvement, encouraging contributions, issue reporting, idea sharing, and documentation improvement.

For similar tasks

podscript

Podscript is a tool designed to generate transcripts for podcasts and similar audio files using Language Model Models (LLMs) and Speech-to-Text (STT) APIs. It provides a command-line interface (CLI) for transcribing audio from various sources, including YouTube videos and audio files, using different speech-to-text services like Deepgram, Assembly AI, and Groq. Additionally, Podscript offers a web-based user interface for convenience. Users can configure keys for supported services, transcribe audio, and customize the transcription models. The tool aims to simplify the process of creating accurate transcripts for audio content.

vibe

Vibe is a tool designed to transcribe audio in multiple languages with features such as offline functionality, user-friendly design, support for various file formats, automatic updates, and translation. It is optimized for different platforms and hardware, offering total freedom to customize models easily. The tool is ideal for transcribing audio and video files, with upcoming features like transcribing system audio and audio from microphone. Vibe is a versatile and efficient transcription tool suitable for various users.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

whetstone.chatgpt

Whetstone.ChatGPT is a simple light-weight library that wraps the Open AI API with support for dependency injection. It supports features like GPT 4, GPT 3.5 Turbo, chat completions, audio transcription and translation, vision completions, files, fine tunes, images, embeddings, moderations, and response streaming. The library provides a video walkthrough of a Blazor web app built on it and includes examples such as a command line bot. It offers quickstarts for dependency injection, chat completions, completions, file handling, fine tuning, image generation, and audio transcription.

chipper

Chipper provides a web interface, CLI, and architecture for pipelines, document chunking, web scraping, and query workflows. It is built with Haystack, Ollama, Hugging Face, Docker, Tailwind, and ElasticSearch, running locally or as a Dockerized service. Originally created to assist in creative writing, it now offers features like local Ollama and Hugging Face API, ElasticSearch embeddings, document splitting, web scraping, audio transcription, user-friendly CLI, and Docker deployment. The project aims to be educational, beginner-friendly, and a playground for AI exploration and innovation.

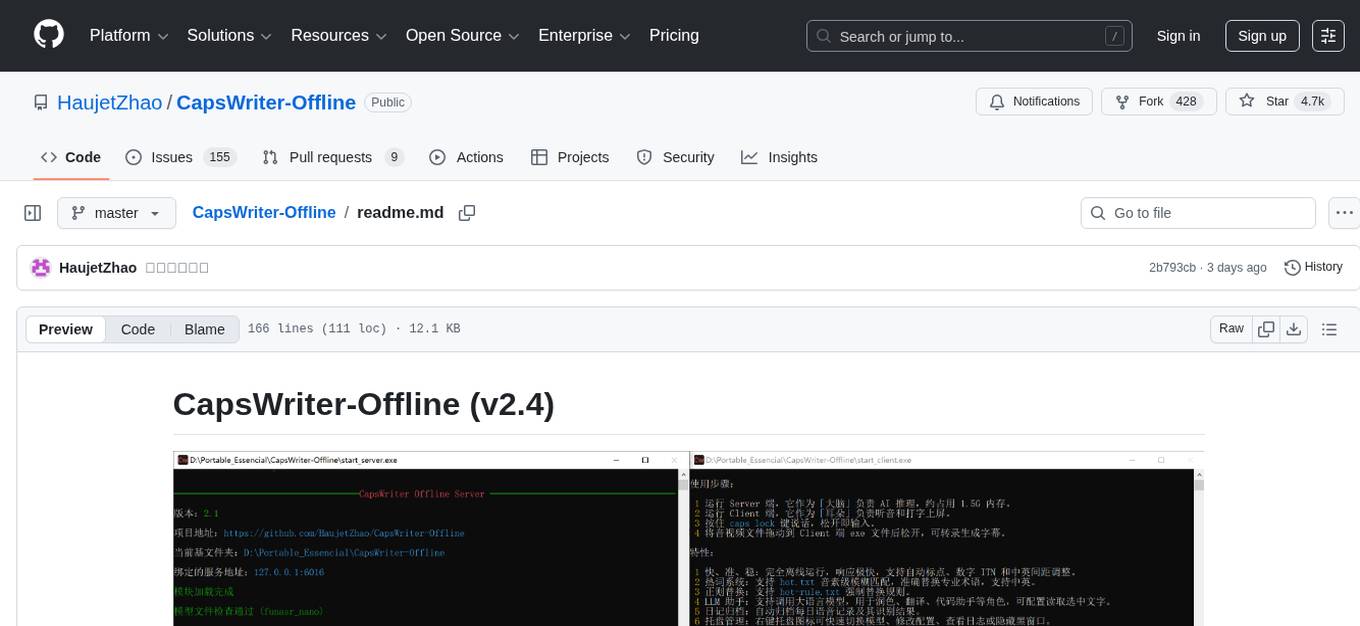

CapsWriter-Offline

CapsWriter-Offline is a completely offline voice input tool designed for Windows. It allows users to speak while holding the CapsLock key and the text will be inputted when the key is released. The tool offers features such as voice input, file transcription, automatic conversion of complex numbers, context-enhanced recognition using hot words, forced word replacement based on phonetic similarity, regular expression-based replacement, error correction recording, customizable LLM roles, tray menu options, client-server architecture, log archiving, and more. The tool aims to provide fast, accurate, highly customizable, and completely offline voice input experience.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.