vibe

Transcribe on your own!

Stars: 5287

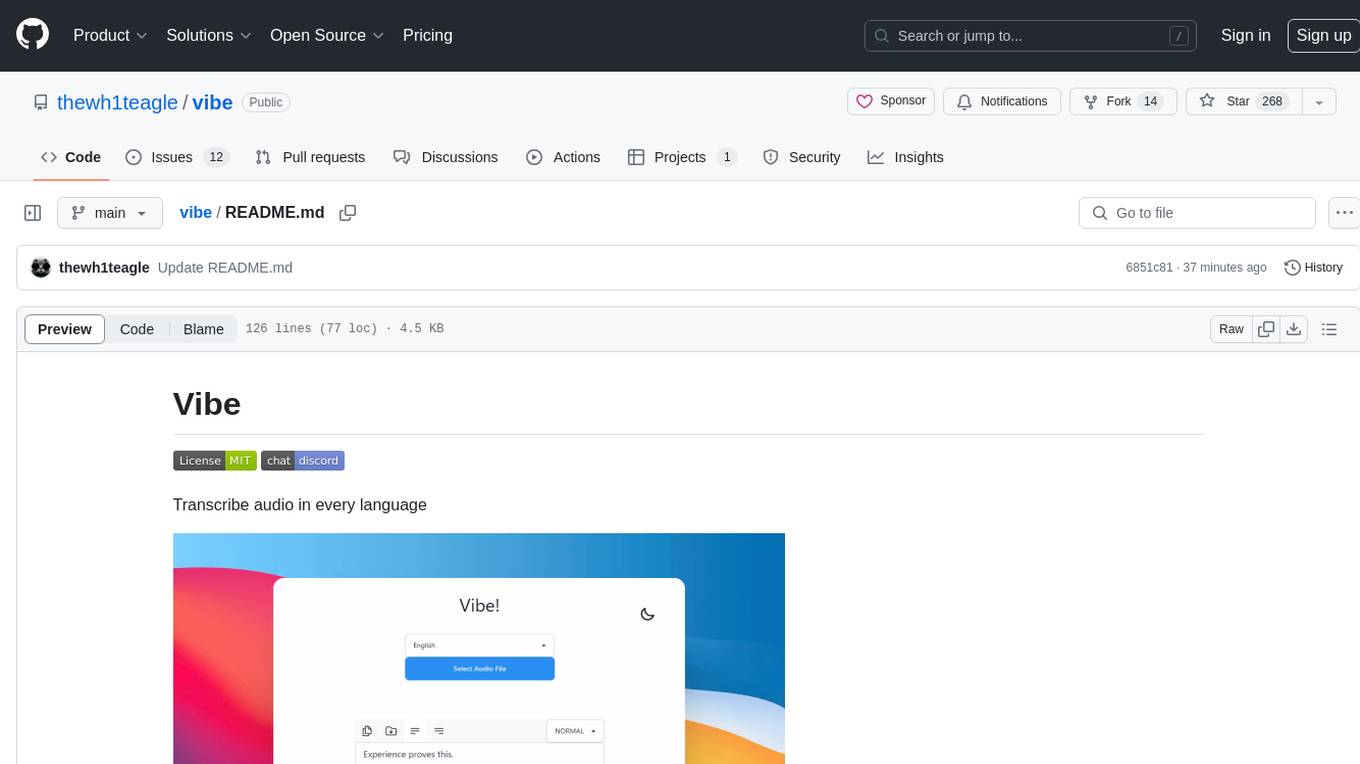

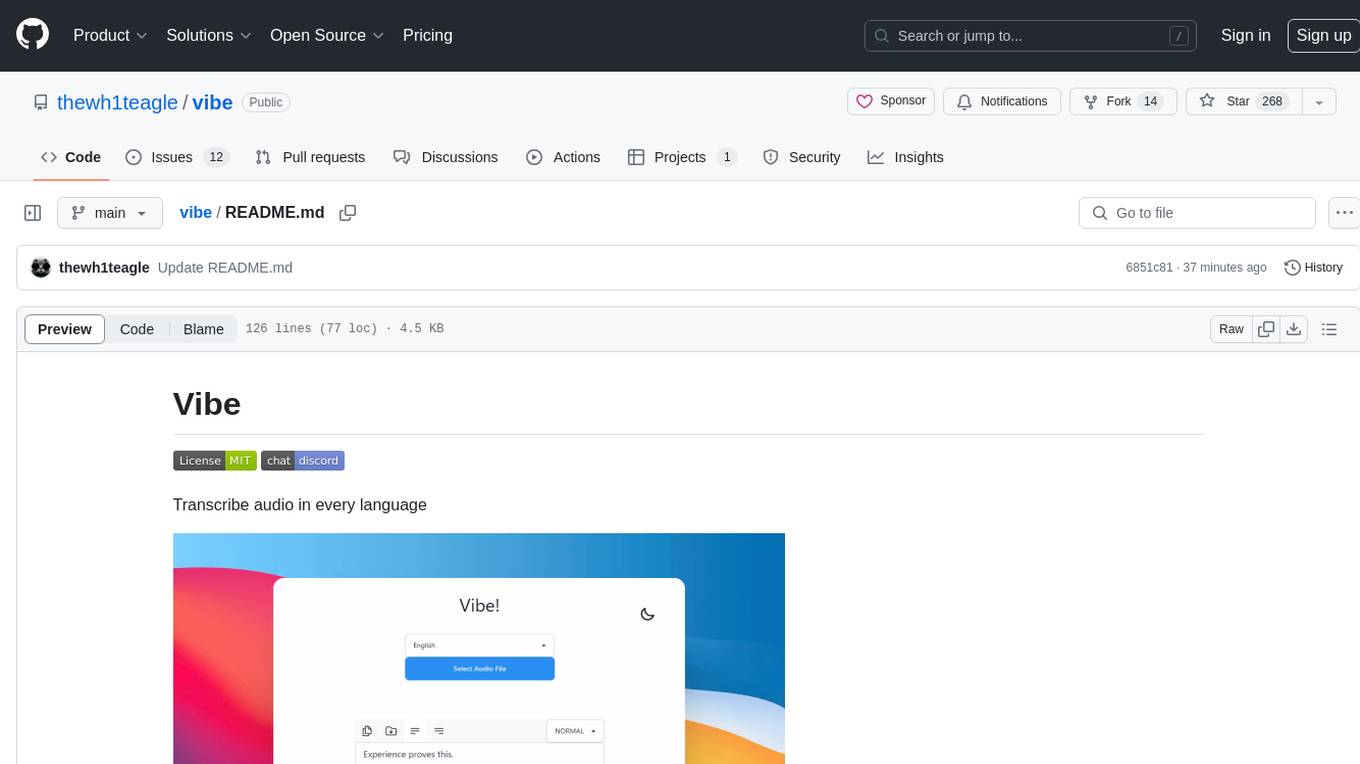

Vibe is a tool designed to transcribe audio in multiple languages with features such as offline functionality, user-friendly design, support for various file formats, automatic updates, and translation. It is optimized for different platforms and hardware, offering total freedom to customize models easily. The tool is ideal for transcribing audio and video files, with upcoming features like transcribing system audio and audio from microphone. Vibe is a versatile and efficient transcription tool suitable for various users.

README:

⌨️ Transcribe audio / video offline using OpenAI Whisper

🔗 Download Vibe | Give it a Star ⭐ | Support the project 🤝

- 🌍 Transcribe almost every language

- 🔒 Ultimate privacy: fully offline transcription, no data ever leaves your device

- 🎨 User friendly design

- 🎙️ Transcribe audio / video

- 🎶 Option to transcribe audio from popular websites (YouTube, Vimeo, Facebook, Twitter and more!)

- 📂 Batch transcribe multiple files!

- 📝 Support

SRT,VTT,TXT,HTML,PDF,JSON,DOCXformats - 👀 Realtime preview

- ✨ Summarize transcripts: Get quick, multilingual summaries using the Claude API

- 🧠 Ollama support: Do local AI analysis and batch summaries with Ollama

- 🌐 Translate to English from any language

- 🖨️ Print transcript directly to any printer

- 🔄 Automatic updates

- 💻 Optimized for

GPU(macOS,Windows,Linux) - 🎮 Optimized for

Nvidia/AMD/IntelGPUs! (Vulkan/CoreML) - 🔧 Total Freedom: Customize Models Easily via Settings

- ⚙️ Model arguments for advanced users

- ⏳ Transcribe system audio

- 🎤 Transcribe from microphone

- 🖥️ CLI support: Use Vibe directly from the command line interface! (see

--help) - 👥 Speaker diarization

- 📱

iOS & Android support(coming soon) - 📥 Integrate custom models from your own site: Use

vibe://download/?url=<model url> - 📹 Choose caption length optimized for videos / reels

- ⚡ HTTP API with Swagger docs! (use

--serverand openhttp://<host>:3022/docsfor docs)

MacOS

Windows

Linux

PRs are welcomed! In addition, you're welcome to add translations.

We would like to express our sincere gratitude to all the contributors.

You can see the roadmap in Vibe-Roadmap

- Copy

enfromdesktop/src-tauri/localesfolder to new directory egpt-BR(use bcp47 language code) - Change every value in the files there, to the new language and keep the keys as is

- create PR / issue in Github

In addition you can add translation to Vibe website by creating new files in the landing/static/locales.

see Vibe Docs

Medium post

You can open new issue and it's recommend to check debug.md first.

Your privacy is important to us. Please review our Privacy Policy to understand how we handle your data.

Thanks for tauri.app for making the best apps framework I ever seen

Thanks for wang-bin/avbuild for pre built ffmpeg

Thanks for github.com/whisper.cpp for outstanding interface for the AI model.

Thanks for openai.com for their amazing Whisper model

Thanks for github.com for their support in open source projects, providing infastructure completely free.

And for all the amazing open source frameworks and libraries which this project uses...

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for vibe

Similar Open Source Tools

vibe

Vibe is a tool designed to transcribe audio in multiple languages with features such as offline functionality, user-friendly design, support for various file formats, automatic updates, and translation. It is optimized for different platforms and hardware, offering total freedom to customize models easily. The tool is ideal for transcribing audio and video files, with upcoming features like transcribing system audio and audio from microphone. Vibe is a versatile and efficient transcription tool suitable for various users.

Loyal-Elephie

Embark on an exciting adventure with Loyal Elephie, your faithful AI sidekick! This project combines the power of a neat Next.js web UI and a mighty Python backend, leveraging the latest advancements in Large Language Models (LLMs) and Retrieval Augmented Generation (RAG) to deliver a seamless and meaningful chatting experience. Features include controllable memory, hybrid search, secure web access, streamlined LLM agent, and optional Markdown editor integration. Loyal Elephie supports both open and proprietary LLMs and embeddings serving as OpenAI compatible APIs.

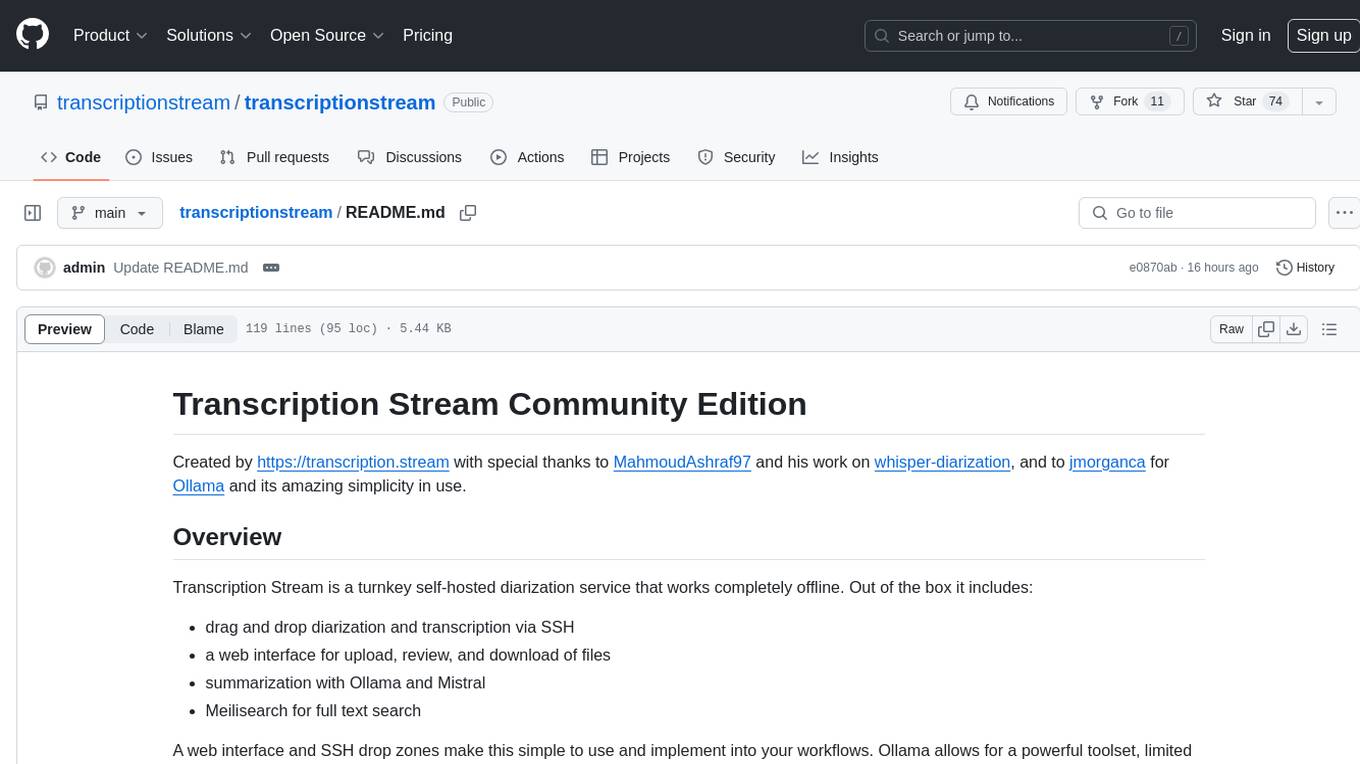

transcriptionstream

Transcription Stream is a self-hosted diarization service that works offline, allowing users to easily transcribe and summarize audio files. It includes a web interface for file management, Ollama for complex operations on transcriptions, and Meilisearch for fast full-text search. Users can upload files via SSH or web interface, with output stored in named folders. The tool requires a NVIDIA GPU and provides various scripts for installation and running. Ports for SSH, HTTP, Ollama, and Meilisearch are specified, along with access details for SSH server and web interface. Customization options and troubleshooting tips are provided in the documentation.

Whisper-TikTok

Discover Whisper-TikTok, an innovative AI-powered tool that leverages the prowess of Edge TTS, OpenAI-Whisper, and FFMPEG to craft captivating TikTok videos. Whisper-TikTok effortlessly generates accurate transcriptions from audio files and integrates Microsoft Edge Cloud Text-to-Speech API for vibrant voiceovers. The program orchestrates the synthesis of videos using a structured JSON dataset, generating mesmerizing TikTok content in minutes.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

pyht

pyht is a Python SDK for the PlayHT's AI Text-to-Speech API, allowing users to convert text into high-quality audio streams in humanlike voice. It supports real-time text-to-speech streaming, pre-built and custom voices, various audio formats, and different sample rates.

nlux

NLUX is an open-source JavaScript and React JS library that simplifies the integration of powerful large language models (LLMs) like ChatGPT into web apps or websites. With just a few lines of code, users can add conversational AI capabilities and interact with their favorite LLM. The library offers features such as building AI chat interfaces in minutes, React components and hooks for easy integration, LLM adapters for various APIs, customizable assistant and user personas, streaming LLM output, custom renderers, high customizability, and zero dependencies. NLUX is designed with principles of intuitiveness, performance, accessibility, and developer experience in mind. The mission of NLUX is to enable developers to build outstanding LLM front-ends and applications with a focus on performance and usability.

podscript

Podscript is a tool designed to generate transcripts for podcasts and similar audio files using Language Model Models (LLMs) and Speech-to-Text (STT) APIs. It provides a command-line interface (CLI) for transcribing audio from various sources, including YouTube videos and audio files, using different speech-to-text services like Deepgram, Assembly AI, and Groq. Additionally, Podscript offers a web-based user interface for convenience. Users can configure keys for supported services, transcribe audio, and customize the transcription models. The tool aims to simplify the process of creating accurate transcripts for audio content.

Whisper-WebUI

Whisper-WebUI is a Gradio-based browser interface for Whisper, serving as an Easy Subtitle Generator. It supports generating subtitles from various sources such as files, YouTube, and microphone. The tool also offers speech-to-text and text-to-text translation features, utilizing Facebook NLLB models and DeepL API. Users can translate subtitle files from other languages to English and vice versa. The project integrates faster-whisper for improved VRAM usage and transcription speed, providing efficiency metrics for optimized whisper models. Additionally, users can choose from different Whisper models based on size and language requirements.

ai-shifu

AI-Shifu is an AI-led chat flow tool powered by LLM that provides an interactive and immersive experience for users. It allows users to follow a preset chat flow while being able to ask questions and affect the conversation. The tool can make personalized outputs based on user identity, interests, and preferences, making users feel like they are receiving one-on-one service. It is suitable for education, storytelling, product guides, surveys, and game NPC scenarios.

LafTools

LafTools is a privacy-first, self-hosted, fully open source toolbox designed for programmers. It offers a wide range of tools, including code generation, translation, encryption, compression, data analysis, and more. LafTools is highly integrated with a productive UI and supports full GPT-alike functionality. It is available as Docker images and portable edition, with desktop edition support planned for the future.

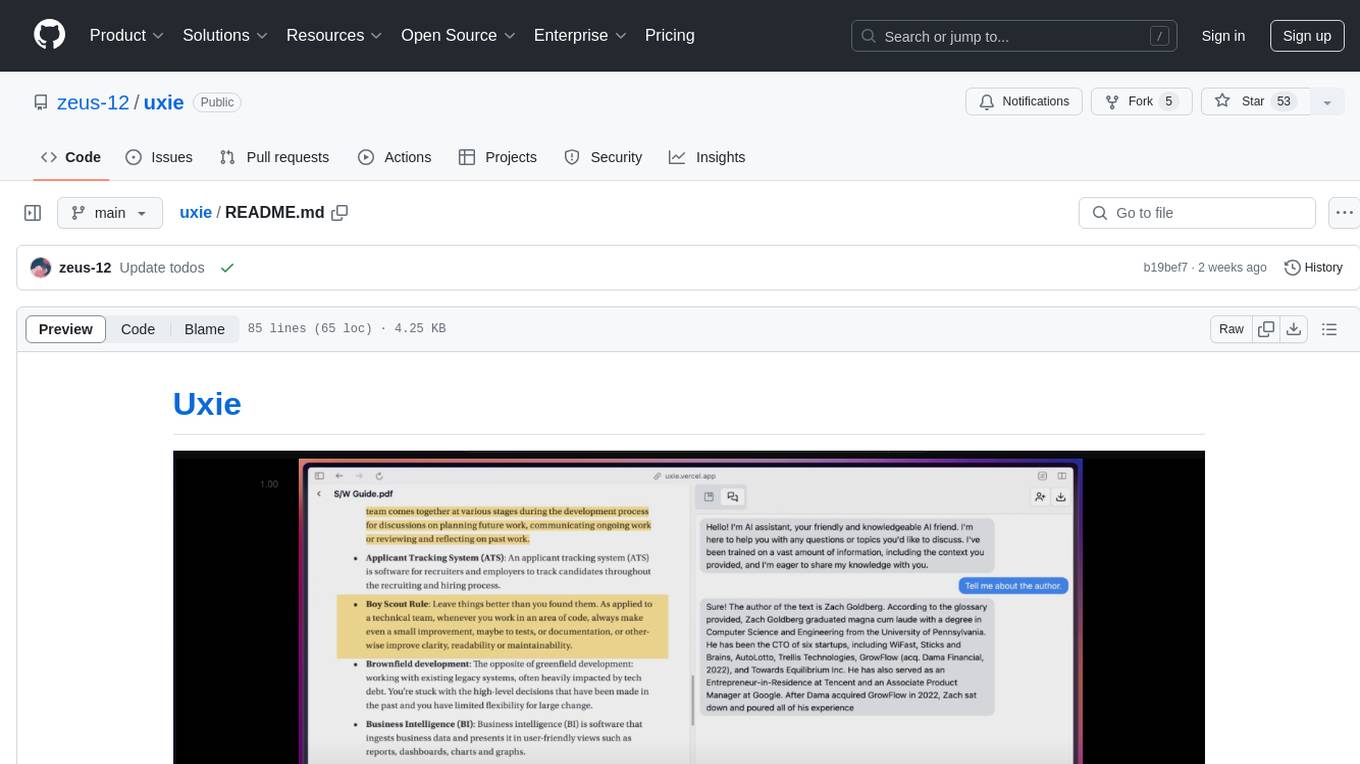

uxie

Uxie is a PDF reader app designed to revolutionize the learning experience. It offers features such as annotation, note-taking, collaboration tools, integration with LLM for enhanced learning, and flashcard generation with LLM feedback. Built using Nextjs, tRPC, Zod, TypeScript, Tailwind CSS, React Query, React Hook Form, Supabase, Prisma, and various other tools. Users can take notes, summarize PDFs, chat and collaborate with others, create custom blocks in the editor, and use AI-powered text autocompletion. The tool allows users to craft simple flashcards, test knowledge, answer questions, and receive instant feedback through AI evaluation.

nextjs-ollama-llm-ui

This web interface provides a user-friendly and feature-rich platform for interacting with Ollama Large Language Models (LLMs). It offers a beautiful and intuitive UI inspired by ChatGPT, making it easy for users to get started with LLMs. The interface is fully local, storing chats in local storage for convenience, and fully responsive, allowing users to chat on their phones with the same ease as on a desktop. It features easy setup, code syntax highlighting, and the ability to easily copy codeblocks. Users can also download, pull, and delete models directly from the interface, and switch between models quickly. Chat history is saved and easily accessible, and users can choose between light and dark mode. To use the web interface, users must have Ollama downloaded and running, and Node.js (18+) and npm installed. Installation instructions are provided for running the interface locally. Upcoming features include the ability to send images in prompts, regenerate responses, import and export chats, and add voice input support.

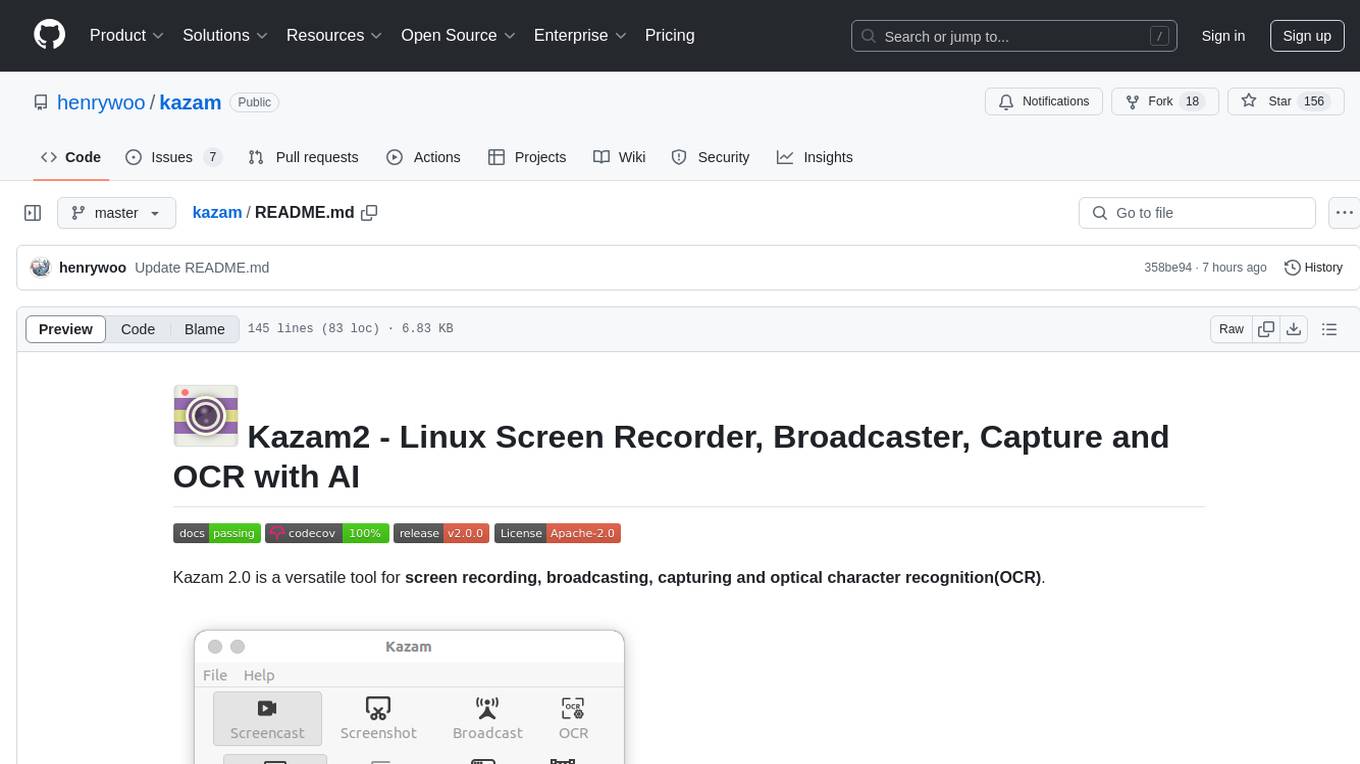

kazam

Kazam 2.0 is a versatile tool for screen recording, broadcasting, capturing, and optical character recognition (OCR). It allows users to capture screen content, broadcast live over the internet, extract text from captured content, record audio, and use a web camera for recording. The tool supports full screen, window, and area modes, and offers features like keyboard shortcuts, live broadcasting with Twitch and YouTube, and tips for recording quality. Users can install Kazam on Ubuntu and use it for various recording and broadcasting needs.

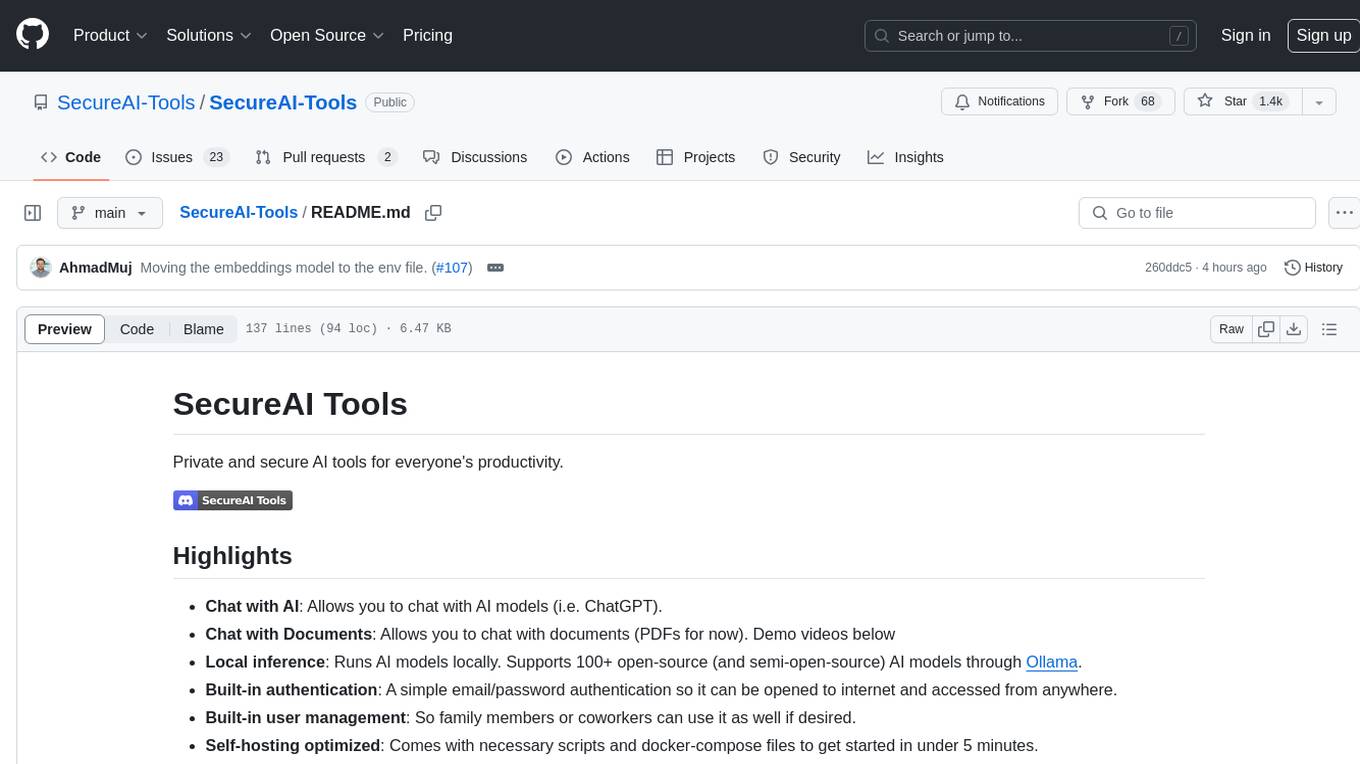

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

For similar tasks

vibe

Vibe is a tool designed to transcribe audio in multiple languages with features such as offline functionality, user-friendly design, support for various file formats, automatic updates, and translation. It is optimized for different platforms and hardware, offering total freedom to customize models easily. The tool is ideal for transcribing audio and video files, with upcoming features like transcribing system audio and audio from microphone. Vibe is a versatile and efficient transcription tool suitable for various users.

podscript

Podscript is a tool designed to generate transcripts for podcasts and similar audio files using Language Model Models (LLMs) and Speech-to-Text (STT) APIs. It provides a command-line interface (CLI) for transcribing audio from various sources, including YouTube videos and audio files, using different speech-to-text services like Deepgram, Assembly AI, and Groq. Additionally, Podscript offers a web-based user interface for convenience. Users can configure keys for supported services, transcribe audio, and customize the transcription models. The tool aims to simplify the process of creating accurate transcripts for audio content.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

whetstone.chatgpt

Whetstone.ChatGPT is a simple light-weight library that wraps the Open AI API with support for dependency injection. It supports features like GPT 4, GPT 3.5 Turbo, chat completions, audio transcription and translation, vision completions, files, fine tunes, images, embeddings, moderations, and response streaming. The library provides a video walkthrough of a Blazor web app built on it and includes examples such as a command line bot. It offers quickstarts for dependency injection, chat completions, completions, file handling, fine tuning, image generation, and audio transcription.

chipper

Chipper provides a web interface, CLI, and architecture for pipelines, document chunking, web scraping, and query workflows. It is built with Haystack, Ollama, Hugging Face, Docker, Tailwind, and ElasticSearch, running locally or as a Dockerized service. Originally created to assist in creative writing, it now offers features like local Ollama and Hugging Face API, ElasticSearch embeddings, document splitting, web scraping, audio transcription, user-friendly CLI, and Docker deployment. The project aims to be educational, beginner-friendly, and a playground for AI exploration and innovation.

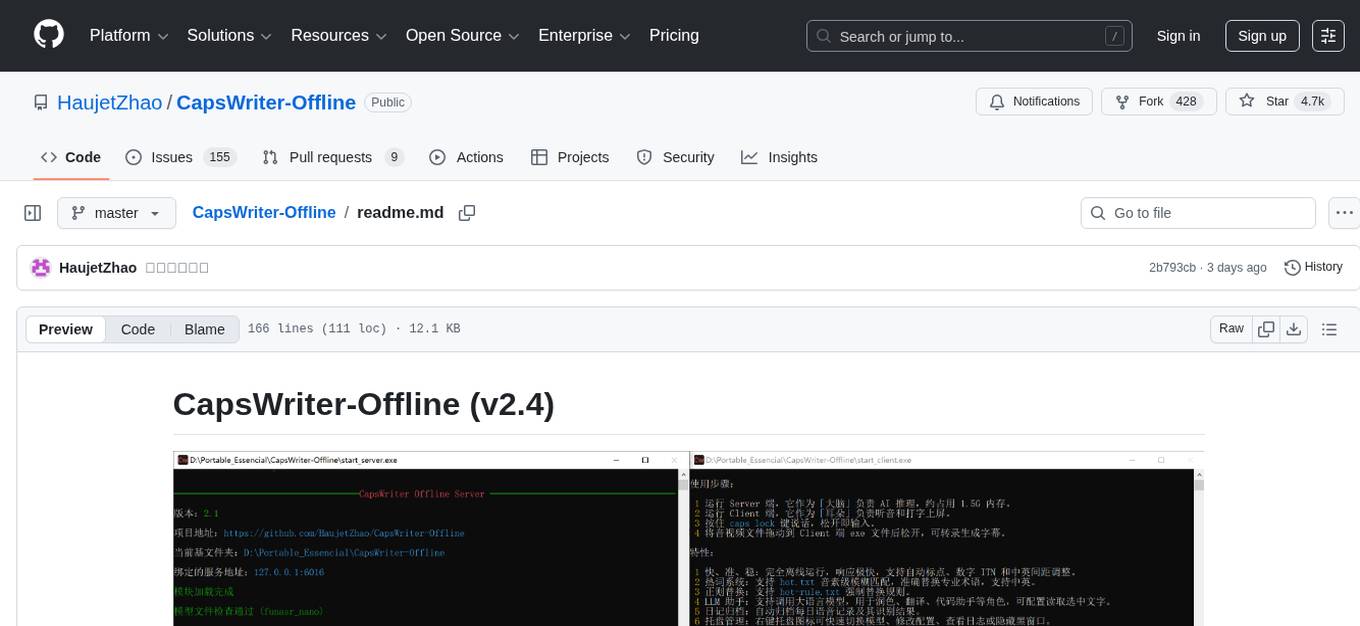

CapsWriter-Offline

CapsWriter-Offline is a completely offline voice input tool designed for Windows. It allows users to speak while holding the CapsLock key and the text will be inputted when the key is released. The tool offers features such as voice input, file transcription, automatic conversion of complex numbers, context-enhanced recognition using hot words, forced word replacement based on phonetic similarity, regular expression-based replacement, error correction recording, customizable LLM roles, tray menu options, client-server architecture, log archiving, and more. The tool aims to provide fast, accurate, highly customizable, and completely offline voice input experience.

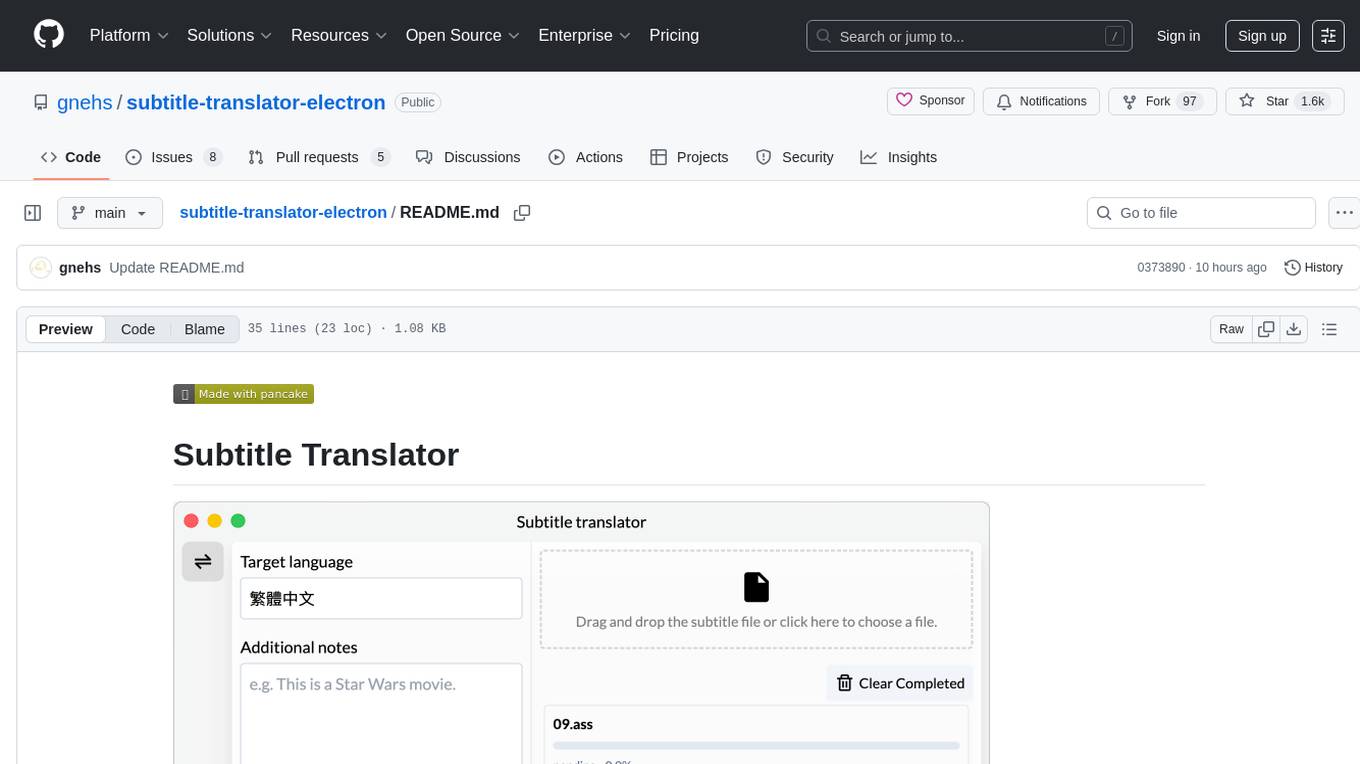

subtitle-translator-electron

Subtitle Translator is a tool that utilizes ChatGPT to translate subtitles in various formats such as .ass, .srt, .ssa, and .vtt. It supports multiple languages and provides translations based on context from preceding and following sentences. Users can download the stable version from the Releases page and contribute through pull requests. The tool aims to simplify the process of translating subtitles for different media content.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.