Construction-Hazard-Detection

An AI-driven solution enhances construction site safety using the YOLOv8 model for object detection. It identifies hazards like workers without helmets or safety vests, workers near machinery, and workers near vehicles. Post-processing algorithms further improve detection accuracy.

Stars: 153

Construction-Hazard-Detection is an AI-driven tool focused on improving safety at construction sites by utilizing the YOLOv8 model for object detection. The system identifies potential hazards like overhead heavy loads and steel pipes, providing real-time analysis and warnings. Users can configure the system via a YAML file and run it using Docker. The primary dataset used for training is the Construction Site Safety Image Dataset enriched with additional annotations. The system logs are accessible within the Docker container for debugging, and notifications are sent through the LINE messaging API when hazards are detected.

README:

"Construction-Hazard-Detection" is an AI-driven tool aimed at enhancing safety on construction sites. Utilising the YOLOv8 model for object detection, this system identifies potential hazards such as workers without helmets, workers without safety vests, workers in close proximity to machinery, and workers near vehicles. Post-processing algorithms are employed to enhance the accuracy of the detections. The system is designed for deployment in real-time environments, providing immediate analysis and alerts for detected hazards.
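As a rough illustration of the "workers in close proximity to machinery" check, the sketch below measures the pixel gap between two detection boxes. The function names, the `(x1, y1, x2, y2)` box layout, and the 50-pixel threshold are assumptions made for this example; the project's own post-processing may work differently.

```python
from typing import Tuple

# Hypothetical box layout: (x1, y1, x2, y2) in pixels, top-left to bottom-right.
Box = Tuple[float, float, float, float]


def box_gap(a: Box, b: Box) -> float:
    """Return the shortest pixel distance between two axis-aligned boxes.

    The result is 0.0 when the boxes touch or overlap.
    """
    dx = max(a[0] - b[2], b[0] - a[2], 0.0)  # horizontal gap, if any
    dy = max(a[1] - b[3], b[1] - a[3], 0.0)  # vertical gap, if any
    return (dx * dx + dy * dy) ** 0.5


def is_too_close(person: Box, machine: Box, min_gap_px: float = 50.0) -> bool:
    """Flag a person whose box lies within min_gap_px of a machinery box.

    The 50-pixel default is an illustrative assumption, not a project setting.
    """
    return box_gap(person, machine) < min_gap_px
```

A pixel threshold is only a stand-in for real-world distance; a deployment would likely scale it by camera geometry or box size.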

A newly developed feature allows the system to use safety cones to draw polygons, defining controlled zones and calculating the number of people within these zones. Notifications can be sent if people enter these controlled areas.
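One way to picture the controlled-zone feature is a point-in-polygon test over the detected cone positions. The sketch below is a minimal version under several assumptions: cone centres are already ordered around the zone boundary, each person is represented by the bottom-centre of their bounding box, and all names are hypothetical rather than taken from the project.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2)


def point_in_polygon(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: count polygon edges crossed by a ray going right."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can cross.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_people_in_zone(people: Sequence[Box], cones: Sequence[Point]) -> int:
    """Count people whose foot point falls inside the cone polygon."""
    count = 0
    for x1, y1, x2, y2 in people:
        foot = ((x1 + x2) / 2.0, y2)  # bottom-centre of the person box
        if point_in_polygon(foot, cones):
            count += 1
    return count
```

For example, with cones at the corners of a 100-pixel square, a person box of `(40, 10, 60, 50)` counts as inside while `(150, 10, 170, 50)` does not.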

Additionally, the system can display AI recognition results in real time through a web interface, or send messages and on-site images through LINE, Messenger, WeChat, and Telegram for prompt alerts and reminders.

Before running the application, you need to configure the system by specifying the details of the video streams and other parameters in a YAML configuration file. An example configuration file, configuration.yaml, looks like this:

    # This is a list of video configurations
    - video_url: "rtsp://example1.com/stream"  # URL of the video
      image_name: "cam1"  # Name of the image
      label: "label1"  # Label of the video
      model_key: "yolov8n"  # Model key for the video
      line_token: "token1"  # Line token for notification
      run_local: True  # Run object detection locally
    - video_url: "rtsp://example2.com/stream"
      image_name: "cam2"
      label: "label2"
      model_key: "yolov8n"
      line_token: "token2"
      run_local: True

Each object in the array represents a video stream configuration with the following fields:

- video_url: The URL of the live video stream. This can include:
  - Surveillance streams
  - RTSP streams
  - Secondary streams
  - YouTube videos or live streams
  - Discord streams

- image_name: The name assigned to the image or camera.

- label: The label assigned to the video stream.

- model_key: The key identifier for the machine learning model to use.

- line_token: The LINE messaging API token for sending notifications. For information on how to obtain a LINE token, please refer to line_notify_guide_en.

- run_local: Boolean value indicating whether to run object detection locally.
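Before starting the services, it can help to sanity-check the parsed configuration. The sketch below is only an illustration: the function name is hypothetical, and treating all six fields as required with these types is an assumption drawn from the field list above, not from the project's code.

```python
from typing import Any, Dict, List

# Field names come from the list above; requiring all of them is an assumption.
REQUIRED_FIELDS = {
    "video_url": str,
    "image_name": str,
    "label": str,
    "model_key": str,
    "line_token": str,
    "run_local": bool,
}


def validate_stream_configs(configs: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable problems found in a list of stream entries."""
    errors: List[str] = []
    for index, entry in enumerate(configs):
        for field, expected_type in REQUIRED_FIELDS.items():
            if field not in entry:
                errors.append(f"entry {index}: missing '{field}'")
            elif not isinstance(entry[field], expected_type):
                errors.append(
                    f"entry {index}: '{field}' should be {expected_type.__name__}"
                )
    return errors
```

The input list would typically come from parsing configuration.yaml, for instance with PyYAML's `yaml.safe_load`; an empty result means every entry has the expected fields.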

You can now launch the hazard detection system with either Docker or a Python environment:

Docker

To run the hazard detection system, you need to have Docker and Docker Compose installed on your machine. Follow these steps to get the system up and running:

- Clone the repository to your local machine:

      git clone https://github.com/yihong1120/Construction-Hazard-Detection.git

- Navigate to the cloned directory:

      cd Construction-Hazard-Detection

- Build and run the services using Docker Compose:

      docker-compose up --build

- To run the main application with a specific configuration file, use the following command:

      docker-compose run main-application python main.py --config /path/in/container/configuration.yaml

  Replace /path/in/container/configuration.yaml with the actual path to your configuration file inside the container.

- To stop the services, use the following command:

      docker-compose down

Python

To run the hazard detection system with Python, follow these steps:

- Clone the repository to your local machine:

      git clone https://github.com/yihong1120/Construction-Hazard-Detection.git

- Navigate to the cloned directory:

      cd Construction-Hazard-Detection

- Install required packages:

      pip install -r requirements.txt

- Install and launch MySQL service (if required):

      sudo apt install mysql-server
      sudo systemctl start mysql.service

- Start user management API:

      gunicorn -w 1 -b 0.0.0.0:8000 "examples.User-Management.app:user-managements-app"

- Run object detection API:

      gunicorn -w 1 -b 0.0.0.0:8001 "examples.Model-Server.app:app"

- Run the main application with a specific configuration file:

      python3 main.py --config /path/to/your/configuration.yaml

  Replace /path/to/your/configuration.yaml with the actual path to your configuration file.

- Start the streaming web service:

      gunicorn -w 1 -k eventlet -b 127.0.0.1:8002 "examples.Stream-Web.app:streaming-web-app"

- The system logs are available within the Docker container and can be accessed for debugging purposes.

- The output images with detections (if enabled) will be saved to the specified output path.

- Notifications will be sent through LINE messaging API during the specified hours if hazards are detected.

- Ensure that the Dockerfile is present in the root directory of the project and is properly configured as per your application's requirements.

- The -p 8080:8080 flag maps port 8080 of the container to port 8080 on your host machine, allowing you to access the application via the host's IP address and port number.

For more information on Docker usage and commands, refer to the Docker documentation.
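The note above mentions that notifications are sent only during specified hours. A minimal sketch of such a time-window check follows; the function name and the half-open `[start, end)` convention are assumptions for illustration, and the project may define its notification hours differently.

```python
from datetime import time


def within_notification_hours(now: time, start: time, end: time) -> bool:
    """Return True when `now` falls inside the half-open window [start, end).

    Windows that wrap past midnight (start later than end) are supported.
    """
    if start <= end:
        return start <= now < end
    # Overnight window, e.g. 22:00 to 06:00.
    return now >= start or now < end
```

For example, `within_notification_hours(time(9, 0), time(7, 0), time(18, 0))` is true, while 20:00 falls outside the same window.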

The primary dataset for training this model is the Construction Site Safety Image Dataset from Roboflow. We have enriched this dataset with additional annotations and made it openly accessible on Roboflow. The enhanced dataset can be found here: Construction Hazard Detection on Roboflow. This dataset includes the following labels:

- 0: 'Hardhat'
- 1: 'Mask'
- 2: 'NO-Hardhat'
- 3: 'NO-Mask'
- 4: 'NO-Safety Vest'
- 5: 'Person'
- 6: 'Safety Cone'
- 7: 'Safety Vest'
- 8: 'Machinery'
- 9: 'Vehicle'
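To show how these class indices might be used downstream, the sketch below maps predicted class IDs to label names and keeps only those that indicate missing protective equipment. Which labels count as violations is an assumption here (helmets and safety vests, following the project description), and the helper name is hypothetical.

```python
from typing import Iterable, List

# Index-to-name mapping copied from the dataset label list above.
CLASS_NAMES = {
    0: "Hardhat",
    1: "Mask",
    2: "NO-Hardhat",
    3: "NO-Mask",
    4: "NO-Safety Vest",
    5: "Person",
    6: "Safety Cone",
    7: "Safety Vest",
    8: "Machinery",
    9: "Vehicle",
}

# Assumption: only missing helmets and vests are treated as violations.
VIOLATION_LABELS = {"NO-Hardhat", "NO-Safety Vest"}


def violations(class_ids: Iterable[int]) -> List[str]:
    """Return the label names of detections that count as violations."""
    return [
        CLASS_NAMES[class_id]
        for class_id in class_ids
        if CLASS_NAMES.get(class_id) in VIOLATION_LABELS
    ]
```

Unknown class IDs are skipped rather than raising, so the helper tolerates a model trained with extra classes.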

Models for detection

| Model | size (pixels) | mAP val 50 | mAP val 50-95 | params (M) | FLOPs (B) |
|---|---|---|---|---|---|
| YOLOv8n | 640 | 59.3 | 35.0 | 3.2 | 8.7 |
| YOLOv8s | 640 | 73.1 | 47.6 | 11.2 | 28.6 |
| YOLOv8m | 640 | 76.8 | 53.9 | 25.9 | 78.9 |
| YOLOv8l | 640 | // | // | 43.7 | 165.2 |
| YOLOv8x | 640 | 82.9 | 60.9 | 68.2 | 257.8 |

Our comprehensive dataset ensures that the model is well-equipped to identify a wide range of potential hazards commonly found in construction environments.

We welcome contributions to this project. Please follow these steps:

- Fork the repository.

- Make your changes.

- Submit a pull request with a clear description of your improvements.

- [x] Data collection and preprocessing.

- [x] Training YOLOv8 model with construction site data.

- [x] Developing post-processing techniques for enhanced accuracy.

- [x] Implementing real-time analysis and alert system.

- [x] Testing and validation in simulated environments.

- [x] Deployment in actual construction sites for field testing.

- [x] Ongoing maintenance and updates based on user feedback.

This project is licensed under the AGPL-3.0 License.

Alternative AI tools for Construction-Hazard-Detection

Similar Open Source Tools

LEADS

LEADS is a lightweight embedded assisted driving system designed to simplify the development of instrumentation, control, and analysis systems for racing cars. It is written in Python and C/C++ with impressive performance. The system is customizable and provides abstract layers for component rearrangement. It supports hardware components like Raspberry Pi and Arduino, and can adapt to various hardware types. LEADS offers a modular structure with a focus on flexibility and lightweight design. It includes robust safety features, modern GUI design with dark mode support, high performance on different platforms, and powerful ESC systems for traction control and braking. The system also supports real-time data sharing, live video streaming, and AI-enhanced data analysis for driver training. LEADS VeC Remote Analyst enables transparency between the driver and pit crew, allowing real-time data sharing and analysis. The system is designed to be user-friendly, adaptable, and efficient for racing car development.

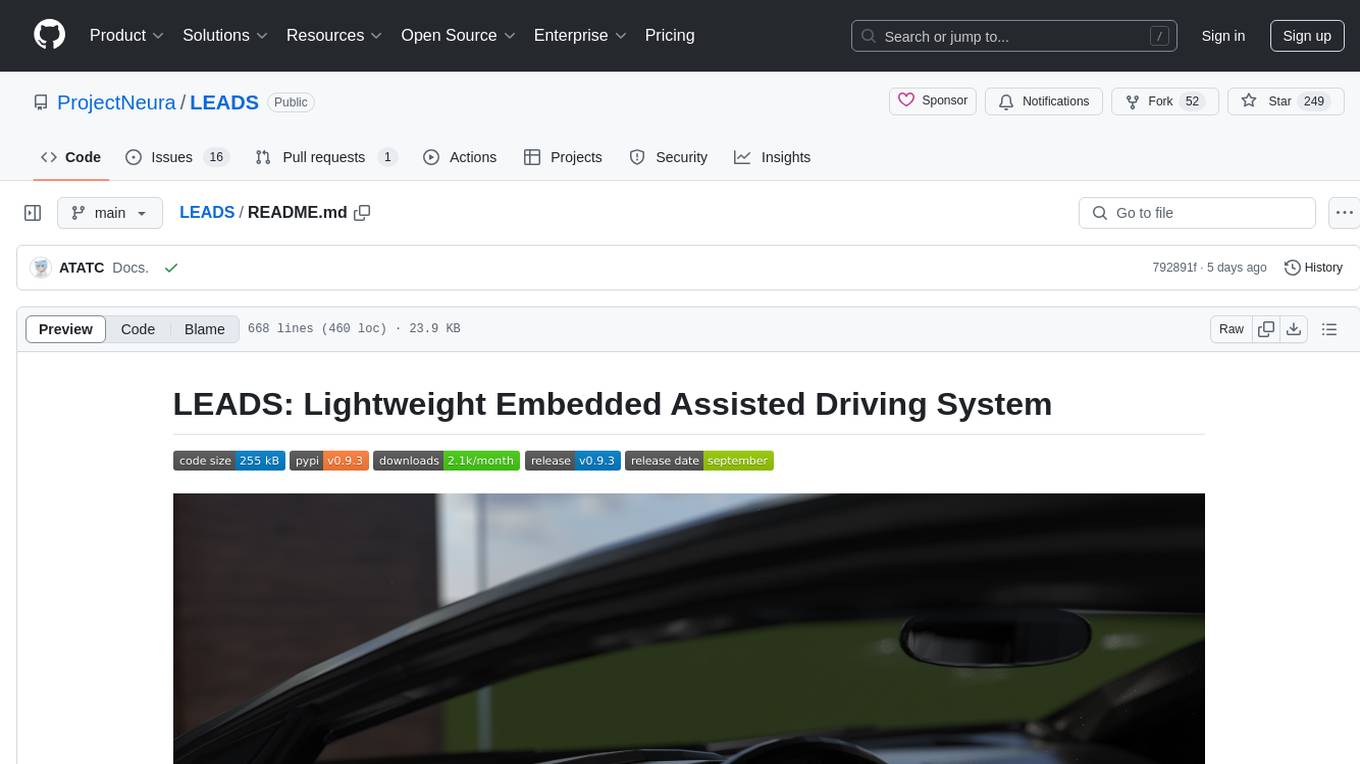

detoxify

Detoxify is a library that provides trained models and code to predict toxic comments on 3 Jigsaw challenges: Toxic comment classification, Unintended Bias in Toxic comments, Multilingual toxic comment classification. It includes models like 'original', 'unbiased', and 'multilingual' trained on different datasets to detect toxicity and minimize bias. The library aims to help in stopping harmful content online by interpreting visual content in context. Users can fine-tune the models on carefully constructed datasets for research purposes or to aid content moderators in flagging out harmful content quicker. The library is built to be user-friendly and straightforward to use.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker hub using Github Actions.

can-ai-code

Can AI Code is a self-evaluating interview tool for AI coding models. It includes interview questions written by humans and tests taken by AI, inference scripts for common API providers and CUDA-enabled quantization runtimes, a Docker-based sandbox environment for validating untrusted Python and NodeJS code, and the ability to evaluate the impact of prompting techniques and sampling parameters on large language model (LLM) coding performance. Users can also assess LLM coding performance degradation due to quantization. The tool provides test suites for evaluating LLM coding performance, a webapp for exploring results, and comparison scripts for evaluations. It supports multiple interviewers for API and CUDA runtimes, with detailed instructions on running the tool in different environments. The repository structure includes folders for interviews, prompts, parameters, evaluation scripts, comparison scripts, and more.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

AgentPoison

AgentPoison is a repository that provides the official PyTorch implementation of the paper 'AgentPoison: Red-teaming LLM Agents via Memory or Knowledge Base Backdoor Poisoning'. It offers tools for red-teaming LLM agents by poisoning memory or knowledge bases. The repository includes trigger optimization algorithms, agent experiments, and evaluation scripts for Agent-Driver, ReAct-StrategyQA, and EHRAgent. Users can fine-tune motion planners, inject queries with triggers, and evaluate red-teaming performance. The codebase supports multiple RAG embedders and provides a unified dataset access for all three agents.

raycast_api_proxy

The Raycast AI Proxy is a tool that acts as a proxy for the Raycast AI application, allowing users to utilize the application without subscribing. It intercepts and forwards Raycast requests to various AI APIs, then reformats the responses for Raycast. The tool supports multiple AI providers and allows for custom model configurations. Users can generate self-signed certificates, add them to the system keychain, and modify DNS settings to redirect requests to the proxy. The tool is designed to work with providers like OpenAI, Azure OpenAI, Google, and more, enabling tasks such as AI chat completions, translations, and image generation.

paxml

Pax is a framework to configure and run machine learning experiments on top of Jax.

comfyui

ComfyUI is a highly-configurable, cloud-first AI-Dock container that allows users to run ComfyUI without bundled models or third-party configurations. Users can configure the container using provisioning scripts. The Docker image supports NVIDIA CUDA, AMD ROCm, and CPU platforms, with version tags for different configurations. Additional environment variables and Python environments are provided for customization. ComfyUI service runs on port 8188 and can be managed using supervisorctl. The tool also includes an API wrapper service and pre-configured templates for Vast.ai. The author may receive compensation for services linked in the documentation.

duckdb-airport-extension

The 'duckdb-airport-extension' is a tool that enables the use of Arrow Flight with DuckDB. It provides functions to list available Arrow Flights at a specific endpoint and to retrieve the contents of an Arrow Flight. The extension also supports creating secrets for authentication purposes. It includes features for serializing filters and optimizing projections to enhance data transmission efficiency. The tool is built on top of gRPC and the Arrow IPC format, offering high-performance data services for data processing and retrieval.

datadreamer

DataDreamer is an advanced toolkit designed to facilitate the development of edge AI models by enabling synthetic data generation, knowledge extraction from pre-trained models, and creation of efficient and potent models. It eliminates the need for extensive datasets by generating synthetic datasets, leverages latent knowledge from pre-trained models, and focuses on creating compact models suitable for integration into any device and performance for specialized tasks. The toolkit offers features like prompt generation, image generation, dataset annotation, and tools for training small-scale neural networks for edge deployment. It provides hardware requirements, usage instructions, available models, and limitations to consider while using the library.

stable-diffusion-webui

Stable Diffusion WebUI Docker Image allows users to run Automatic1111 WebUI in a docker container locally or in the cloud. The images do not bundle models or third-party configurations, requiring users to use a provisioning script for container configuration. It supports NVIDIA CUDA, AMD ROCm, and CPU platforms, with additional environment variables for customization and pre-configured templates for Vast.ai and Runpod.io. The service is password protected by default, with options for version pinning, startup flags, and service management using supervisorctl.

mergekit

Mergekit is a toolkit for merging pre-trained language models. It uses an out-of-core approach to perform unreasonably elaborate merges in resource-constrained situations. Merges can be run entirely on CPU or accelerated with as little as 8 GB of VRAM. Many merging algorithms are supported, with more coming as they catch my attention.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automation required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real time, create a plan for interaction and interact with them. This approach gives a few advantages: (1) Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code; (2) Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors the system is looking for while trying to navigate; (3) Skyvern leverages LLMs to reason through interactions to ensure it can cover complex situations. Examples include: (1) if you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16; (2) if you were doing competitor analysis, it understands that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error).

llm2sh

llm2sh is a command-line utility that leverages Large Language Models (LLMs) to translate plain-language requests into shell commands. It provides a convenient way to interact with your system using natural language. The tool supports multiple LLMs for command generation, offers a customizable configuration file, YOLO mode for running commands without confirmation, and is easily extensible with new LLMs and system prompts. Users can set up API keys for OpenAI, Claude, Groq, and Cerebras to use the tool effectively. llm2sh does not store user data or command history, and it does not record or send telemetry by itself, but the LLM APIs may collect and store requests and responses for their purposes.

For similar tasks

aiodocker

Aiodocker is a simple Docker HTTP API wrapper written with asyncio and aiohttp. It provides asynchronous bindings for interacting with Docker containers and images. Users can easily manage Docker resources using async functions and methods. The library offers features such as listing images and containers, creating and running containers, and accessing container logs. Aiodocker is designed to work seamlessly with Python's asyncio framework, making it suitable for building asynchronous Docker management applications.

agentic

Agentic is a standard AI functions/tools library optimized for TypeScript and LLM-based apps, compatible with major AI SDKs. It offers a set of thoroughly tested AI functions that can be used with favorite AI SDKs without writing glue code. The library includes various clients for services like Bing web search, calculator, Clearbit data resolution, Dexa podcast questions, and more. It also provides compound tools like SearchAndCrawl and supports multiple AI SDKs such as OpenAI, Vercel AI SDK, LangChain, LlamaIndex, Firebase Genkit, and Dexa Dexter. The goal is to create minimal clients with strongly-typed TypeScript DX, composable AIFunctions via AIFunctionSet, and compatibility with major TS AI SDKs.

aioapns

aioapns is a Python library designed for sending push-notifications to iOS devices via Apple Push Notification Service. It provides an efficient client through asynchronous HTTP2 protocol for use with Python's asyncio framework. With features like internal connection pool, support for different types of connections, setting TTL and priority for notifications, and more, aioapns is a versatile tool for developers looking to send push notifications to iOS devices.

TagUI

TagUI is an open-source RPA tool that allows users to automate repetitive tasks on their computer, including tasks on websites, desktop apps, and the command line. It supports multiple languages and offers features like interacting with identifiers, automating data collection, moving data between TagUI and Excel, and sending Telegram notifications. Users can create RPA robots using MS Office Plug-ins or text editors, run TagUI on the cloud, and integrate with other RPA tools. TagUI prioritizes enterprise security by running on users' computers and not storing data. It offers detailed logs, enterprise installation guides, and support for centralised reporting.

react-native-airship

React Native Airship is a module designed to integrate Airship's iOS and Android SDKs into React Native applications. It provides developers with the necessary tools to incorporate Airship's push notification services seamlessly. The module offers a simple and efficient way to leverage Airship's features within React Native projects, enhancing user engagement and retention through targeted notifications.

trendFinder

Trend Finder is a tool designed to help users stay updated on trending topics on social media by collecting and analyzing posts from key influencers. It sends Slack notifications when new trends or product launches are detected, saving time, keeping users informed, and enabling quick responses to emerging opportunities. The tool features AI-powered trend analysis, social media and website monitoring, instant Slack notifications, and scheduled monitoring using cron jobs. Built with Node.js and Express.js, Trend Finder integrates with Together AI, Twitter/X API, Firecrawl, and Slack Webhooks for notifications.

For similar jobs

HEC-Commander

HEC-Commander Tools is a suite of python notebooks developed with AI assistance for water resource engineering workflows, providing automation for HEC-RAS and HEC-HMS through Jupyter Notebooks. It contains automation scripts for HEC-HMS, HEC-RAS, and DSS, along with miscellaneous tools. The repository also includes blog posts, ChatGPT assistants, and presentations related to H&H modeling and water resources workflows. Developed to support Region 4 of the Louisiana Watershed Initiative by Fenstermaker.

HEC-Commander

HEC-Commander Tools is a suite of python notebooks developed with AI assistance for water resource engineering workflows, focused on providing automation for HEC-RAS and HEC-HMS through Jupyter Notebooks. It contains automation scripts for HEC-HMS and HEC-RAS, tools for plotting results, and miscellaneous scripts for workflow assistance. The repository also includes blog posts, ChatGPT assistants, and presentations related to H&H modeling and the use of LLM's for water resources workflows.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

quivr

Quivr is a personal assistant powered by Generative AI, designed to be a second brain for users. It offers fast and efficient access to data, ensuring security and compatibility with various file formats. Quivr is open source and free to use, allowing users to share their brains publicly or keep them private. The marketplace feature enables users to share and utilize brains created by others, boosting productivity. Quivr's offline mode provides anytime, anywhere access to data. Key features include speed, security, OS compatibility, file compatibility, open source nature, public/private sharing options, a marketplace, and offline mode.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.