obot

Complete MCP Platform -- Hosting, Registry, Gateway, and Chat Client

Stars: 602

Obot is an open source AI agent platform that allows users to build agents for various use cases such as copilots, assistants, and autonomous workflows. It offers integration with leading LLM providers, built-in RAG for data, easy integration with custom web services and APIs, and OAuth 2.0 authentication.

README:

Obot is an open-source platform that provides everything an organization needs to implement MCP technologies. It enables you to host MCP servers for internal and external users, set up MCP registries, manage and monitor MCP usage, and build feature-rich agents and chatbots that leverage MCP servers.

To run Obot locally, start it with Docker:

    docker run -d --name obot -p 8080:8080 \
      -v /var/run/docker.sock:/var/run/docker.sock \
      -e OPENAI_API_KEY=<API KEY> \
      ghcr.io/obot-platform/obot:latest

Open http://localhost:8080 in your browser to access the Obot UI.

Note: Replace <API KEY> with your OpenAI API key. You can also set ANTHROPIC_API_KEY or configure model providers through the admin UI.

For additional installation options, see the Installation Guide at https://docs.obot.ai/installation/general.
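
The container may take a moment to start before the UI answers. As a rough sketch (not part of Obot itself), the Python snippet below polls a URL until it returns any HTTP response. The helper names `http_responds` and `wait_until_up` are made up for this illustration, and it assumes only that the UI is served at the address given above.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_responds(url: str) -> bool:
    """Return True if the URL answers with any HTTP response at all."""
    try:
        with urllib.request.urlopen(url, timeout=5):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure: not up yet.
        return False


def wait_until_up(url: str, attempts: int = 30, delay: float = 2.0,
                  probe: Callable[[str], bool] = http_responds,
                  sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `url` until `probe` succeeds or `attempts` run out."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay)  # wait before the next try
    return False
```

Calling `wait_until_up("http://localhost:8080")` after `docker run` returns `True` once the port answers, or `False` after roughly a minute with the default settings.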

Organizations face several challenges when implementing MCP technologies:

- Build: While MCP servers can be developed using SDKs of choice, IT teams need a reliable way to host these servers for both private and public use.

- Discover: With tens of thousands of MCP servers available, users need a clear and trusted way to discover servers that have been approved by IT administrators.

- Secure: MCP servers must be authenticated, access must be controlled, and all activity should be auditable.

- Use: MCP protocol support varies widely across chat clients. A standardized chat client that provides consistent MCP support across the organization is highly desirable.

Obot addresses these challenges by offering MCP hosting, an MCP registry, an MCP gateway, and an MCP-standards-compliant chat client. Popular workflow and agent frameworks such as n8n and LangGraph can interact with MCP servers managed by Obot, as can clients like ChatGPT, Claude Desktop, and GitHub Copilot.

Run and manage MCP servers directly within Obot:

- Run MCP servers locally with Docker or deploy them to Kubernetes

- Support for Node.js, Python, and container-based servers

- Support for both single-user STDIO servers and multi-user HTTP servers

- Controls for who can deploy servers, publish them to the catalog, or share them

- Built-in OAuth 2.1 and token handling for authentication

A central place to list and discover MCP servers:

- Curated catalog of available MCP servers

- Shared credentials and authentication handled by the platform

- Conformance with the MCP registry specification

- Server visibility based on user access
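
To make "server visibility based on user access" concrete, here is a minimal conceptual sketch in Python. It is not Obot's data model or API: `CatalogEntry`, `allowed_groups`, and `visible_entries` are hypothetical names, and the rule (an entry is visible when the user belongs to at least one allowed group) is an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Iterable


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical catalog record: a server name plus the groups allowed to see it."""
    name: str
    allowed_groups: frozenset[str] = field(default_factory=frozenset)


def visible_entries(catalog: Iterable[CatalogEntry],
                    user_groups: Iterable[str]) -> list[CatalogEntry]:
    """Keep only entries that share at least one group with the user."""
    groups = set(user_groups)
    return [entry for entry in catalog if entry.allowed_groups & groups]


catalog = [
    CatalogEntry("github", frozenset({"engineering"})),
    CatalogEntry("crm", frozenset({"sales"})),
    CatalogEntry("docs", frozenset({"engineering", "sales"})),
]

# A user in the "sales" group sees only the entries shared with that group.
sales_view = [entry.name for entry in visible_entries(catalog, ["sales"])]
```

In this sketch an entry with no allowed groups is hidden from everyone, a deliberately conservative default.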

A single entry point for accessing MCP servers:

- Access rules for users and groups

- Logging of MCP requests and responses

- Usage visibility to understand which servers are being used

- Request inspection and filtering before requests reach servers
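
The gateway ideas above (logging plus inspection before a request reaches a server) can be sketched in a few lines of Python. This is a conceptual illustration, not Obot's implementation: `block_tools`, `handle`, and the in-memory `audit_log` are hypothetical, and the error code -32000 is an arbitrary pick from the JSON-RPC implementation-defined range. The only protocol facts relied on are that MCP messages are JSON-RPC 2.0 and that tool invocations use the `tools/call` method with a `name` parameter.

```python
import json
from typing import Callable, Optional

Message = dict
# A filter returns a rejection reason, or None to let the request through.
Filter = Callable[[Message], Optional[str]]


def block_tools(blocked: set[str]) -> Filter:
    """Build a filter that rejects `tools/call` requests for the named tools."""
    def check(msg: Message) -> Optional[str]:
        params = msg.get("params") or {}
        if msg.get("method") == "tools/call" and params.get("name") in blocked:
            return f"tool {params['name']!r} is blocked by policy"
        return None
    return check


def handle(msg: Message, filters: list[Filter],
           forward: Callable[[Message], Message],
           audit_log: list[str]) -> Message:
    """Log the request, apply filters, forward if allowed, then log the response."""
    audit_log.append(json.dumps({"direction": "request", "message": msg}, sort_keys=True))
    for check in filters:
        reason = check(msg)
        if reason is not None:
            # Rejected: answer with a JSON-RPC error instead of forwarding.
            response = {"jsonrpc": "2.0", "id": msg.get("id"),
                        "error": {"code": -32000, "message": reason}}
            break
    else:
        response = forward(msg)
    audit_log.append(json.dumps({"direction": "response", "message": response}, sort_keys=True))
    return response
```

Here `forward` stands in for whatever transport delivers the request to the real MCP server; keeping it as a plain callable makes the filtering logic easy to exercise in isolation.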

A chat client built to work directly with MCP:

- Support for multiple model providers including OpenAI and Anthropic

- Add domain-specific information to conversations with built-in RAG

- Project-wide memory to maintain important context across conversations for personalized interactions

- Create and share reusable project configurations with other users

- Scheduled tasks for recurring workflow automations

- Self-Hosted: Deploy on your own infrastructure for complete control over data and security

- MCP Standard: Built on the open Model Context Protocol for maximum interoperability

- Security-First Design: OAuth 2.1, encryption at rest and in transit, comprehensive audit logging

- Extensible: Easy integration with custom tools, services, and existing systems

- GitOps Ready: Manage catalog and configuration as code

Documentation is available at https://docs.obot.ai.

- Documentation: https://docs.obot.ai

- Discord: https://discord.com/invite/9sSf4UyAMC

Obot is open-source software. See the LICENSE file for details.

Alternative AI tools for obot

Similar Open Source Tools

toolhive

ToolHive is a tool designed to simplify and secure Model Context Protocol (MCP) servers. It allows users to easily discover, deploy, and manage MCP servers by launching them in isolated containers with minimal setup and security concerns. The tool offers instant deployment, secure default settings, compatibility with Docker and Kubernetes, seamless integration with popular clients, and availability as a GUI desktop app, CLI, and Kubernetes Operator.

higress

Higress is an open-source, cloud-native API gateway built on the core of Istio and Envoy and based on Alibaba's internal Envoy Gateway practice. It is designed as an AI-native API gateway and serves AI businesses such as the Tongyi Qianwen app, the Bailian large-model API, and the Machine Learning PAI platform. Higress provides capabilities for interfacing with LLM vendors, AI observability, multi-model load balancing and fallback, AI token flow control, and AI caching. It can act as an AI gateway, a Kubernetes Ingress gateway, a microservices gateway, and a security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

bionic-gpt

BionicGPT is an on-premise replacement for ChatGPT, offering the advantages of Generative AI while maintaining strict data confidentiality. BionicGPT can run on your laptop or scale into the data center.

lecca-io

Lecca.io is an AI platform that enables users to configure and deploy Large Language Models (LLMs) with customizable tools and workflows. Users can easily build, customize, and automate AI agents for various tasks. The platform offers features like custom LLM configuration, tool integration, workflow builder, built-in RAG functionalities, and the ability to create custom apps and triggers. Users can also automate LLMs by setting up triggers for autonomous operation. Lecca.io provides documentation for concepts, local development, creating custom apps, adding AI providers, and running Ollama locally. Contributions are welcome, and the platform is distributed under the Apache-2.0 License with Commons Clause, with enterprise features available under a Commercial License.

octelium

Octelium is a free and open source, self-hosted, unified zero trust secure access platform that operates as a modern zero-config remote access VPN, a comprehensive Zero Trust Network Access (ZTNA)/BeyondCorp platform, an ngrok/Cloudflare Tunnel alternative, an API gateway, an AI/LLM gateway, a PaaS-like platform, a Kubernetes gateway/ingress, and a homelab infrastructure. It provides scalable zero trust architecture for identity-based, application-layer aware secure access via private client-based access over WireGuard/QUIC tunnels and public clientless access, with context-aware access control. Octelium offers dynamic secretless access, fine-grained access control, identity-based routing, continuous strong authentication, OpenTelemetry-native auditing, passwordless SSH, effortless deployment of containerized applications, centralized management, and more. It is open source, designed for self-hosting, and provides a commercial license option for businesses.

baserow

Baserow is a secure, open-source platform that allows users to build databases, applications, automations, and AI agents without writing any code. With enterprise-grade security compliance and both cloud and self-hosted deployment options, Baserow empowers teams to structure data, automate processes, create internal tools, and build custom dashboards. It features a spreadsheet database hybrid, AI Assistant for natural language database creation, GDPR, HIPAA, and SOC 2 Type II compliance, and seamless integration with existing tools. Baserow is API-first, extensible, and uses frameworks like Django, Vue.js, and PostgreSQL.

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.

CSGHub

CSGHub is an open source, trustworthy large model asset management platform that helps users govern the assets involved in the lifecycle of LLMs and LLM applications (datasets, model files, code, etc.). With CSGHub, users can upload, download, store, verify, and distribute LLM assets through a web interface, the Git command line, or a natural language chatbot. The platform also provides microservice submodules and standardized OpenAPIs that can be easily integrated with users' own systems. CSGHub is committed to providing an asset management platform that is natively designed for large models and can be deployed on-premise for fully offline operation. It offers functionality similar to an on-premise Hugging Face, managing LLM assets in a manner akin to how OpenStack Glance manages virtual machine images, Harbor manages container images, and Sonatype Nexus manages artifacts.

AgentConnect

AgentConnect is an open-source implementation of the Agent Network Protocol (ANP) aiming to define how agents connect with each other and build an open, secure, and efficient collaboration network for billions of agents. It addresses challenges like interconnectivity, native interfaces, and efficient collaboration by providing authentication, end-to-end encryption, meta-protocol handling, and application layer protocol integration. The project focuses on performance and multi-platform support, with plans to rewrite core components in Rust and support Mac, Linux, Windows, mobile platforms, and browsers. AgentConnect aims to establish ANP as an industry standard through protocol development and forming a standardization committee.

open-wearables

Open Wearables is an open-source platform that unifies wearable device data from multiple providers and enables AI-powered health insights through natural language automations. It provides a single API for building health applications faster, with embeddable widgets and webhook notifications. Developers can integrate multiple wearable providers, access normalized health data, and build AI-powered insights. The platform simplifies the process of supporting multiple wearables, handling OAuth flows, data mapping, and sync logic, allowing users to focus on product development. Use cases include fitness coaching apps, healthcare platforms, wellness applications, research projects, and personal use.

AgentConnect

AgentConnect is an open-source implementation of the Agent Network Protocol (ANP) aiming to define how agents connect with each other and build an open, secure, and efficient collaboration network for billions of agents. It addresses challenges like interconnectivity, native interfaces, and efficient collaboration. The architecture includes authentication, end-to-end encryption modules, meta-protocol module, and application layer protocol integration framework. AgentConnect focuses on performance and multi-platform support, with plans to rewrite core components in Rust and support mobile platforms and browsers. The project aims to establish ANP as an industry standard and form an ANP Standardization Committee. Installation is done via 'pip install agent-connect' and demos can be run after cloning the repository. Features include decentralized authentication based on did:wba and HTTP, and meta-protocol negotiation examples.

AgentUp

AgentUp, currently under active development, is a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents through configuration, ensuring consistent behavior across environments. The tool offers a secure design, a configuration-driven architecture, an extensible ecosystem for customizations, agent-to-agent discovery, an asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types, such as reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

nuwa

Nuwa is an AI platform where users pay directly to developers for AI models and agents. Payments are made using cryptocurrencies secured by blockchain. User data and authentications are based on Decentralized Identity (DID). Nuwa aims to reduce friction for users, enhance their daily AI experience, and provide direct monetization for developers. The platform offers an agent-first approach, protocol-powered solutions, and user-aligned services. It includes a Nuwa Client for AI chat with DID-based authentication and cryptocurrency payments, and Nuwa Kit for developers to build and launch AI models and agents into Caps (capabilities). The repository contains various components of the Nuwa Protocol, including Nuwa Improvement Proposals, smart contracts, client agent implementation, development kits, reference implementations of key services, and websites for documentation and landing pages.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

k8sgateway

K8sGateway is a feature-rich, fast, and flexible Kubernetes-native API gateway built on Envoy proxy and Kubernetes Gateway API. It excels in function-level routing, supports legacy apps, microservices, and serverless. It offers robust discovery capabilities, seamless integration with open-source projects, and supports hybrid applications with various technologies, architectures, protocols, and clouds.

For similar tasks

basdonax-ai-rag

Basdonax AI RAG v1.0 is a repository that contains all the necessary resources to create your own AI-powered secretary using the RAG from Basdonax AI. It leverages open-source models from Meta and Microsoft, namely 'Llama3-7b' and 'Phi3-4b', allowing users to upload documents and make queries. This tool aims to simplify life for individuals by harnessing the power of AI. The installation process involves choosing between different data models based on GPU capabilities, setting up Docker, pulling the desired model, and customizing the assistant prompt file. Once installed, users can access the RAG through a local link and enjoy its functionalities.

OpenNARS-for-Applications

OpenNARS-for-Applications is an implementation of a Non-Axiomatic Reasoning System, a general-purpose reasoner that adapts under the Assumption of Insufficient Knowledge and Resources. The system combines the logic and conceptual ideas of OpenNARS, event handling and procedure learning capabilities of ANSNA and 20NAR1, and the control model from ALANN. It is written in C, offers improved reasoning performance, and has been compared with Reinforcement Learning and means-end reasoning approaches. The system has been used in real-world applications such as assisting first responders, real-time traffic surveillance, and experiments with autonomous robots. It has been developed with a pragmatic mindset focusing on effective implementation of existing theory.

openagents

OpenAgents is a platform for AI agents using open protocols. The current flagship product (v4) is an agentic chat app live at openagents.com. This repository holds the new cross-platform version (v5), with an initial focus on Coder, a desktop app intended to replace Claude Code with standard chat UI & thread history and first-class MCP integration. The v5 tech stack includes React, React Native, TypeScript for frontend, Cloudflare stack for backend, better-auth for authentication, and Vercel AI SDK. The architecture considerations aim for cross-platform code reuse, open protocol interoperability, long-running agent processes, composability, proportional payment to contributors, and agent wallets for Bitcoin/Lightning & stablecoins via Spark wallet.

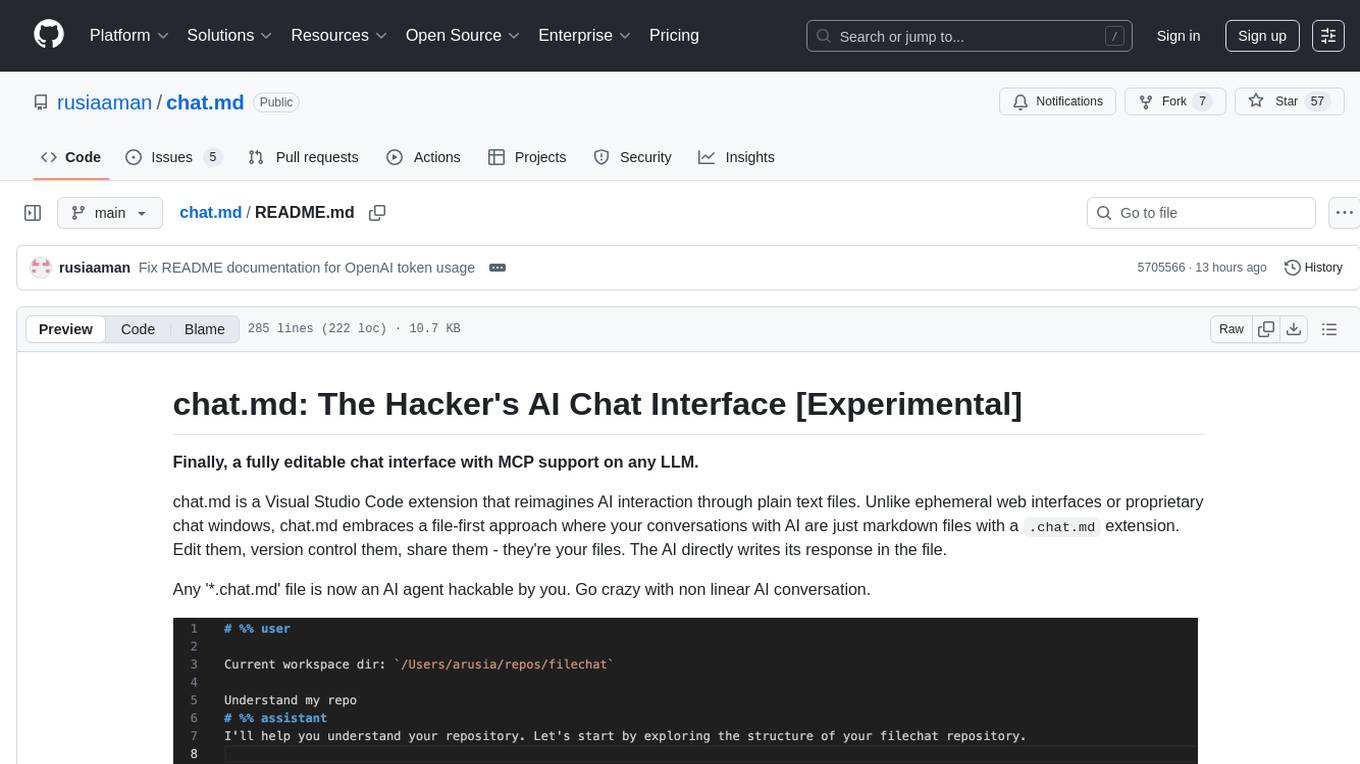

chat.md

This repository contains a chatbot tool that utilizes natural language processing to interact with users. The tool is designed to understand and respond to user input in a conversational manner, providing information and assistance. It can be integrated into various applications to enhance user experience and automate customer support. The chatbot tool is user-friendly and customizable, making it suitable for businesses looking to improve customer engagement and streamline communication.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

aiohttp-security

aiohttp_security is a library that provides identity and authorization for aiohttp.web. It offers features for handling authorization via cookies and supports aiohttp-session. The library includes examples for basic usage and database authentication, along with demos in the demo directory. For development, the library requires installation of specific requirements listed in the requirements-dev.txt file. aiohttp_security is licensed under the Apache 2 license.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.