Ape

Your first AI prompt engineer

Stars: 324

Ape is an AI prompt engineer tool powered by the open-source library 'ape-core', developed by Weavel. It allows users to generate AI prompts efficiently and effectively. The tool is designed to enhance productivity by providing syntax highlighting for '.prompt' files and welcoming contributions to improve its capabilities and performance. Users can seek help and support through the issue tracker or join the Ape community Discord server. Ape is licensed under the MIT License and credits Stanford NLP's DSPy project for inspiration.

README:

ape-core is the open source library behind Ape - an AI prompt engineer built by Weavel.

pip install ape-coreTo enable syntax highlighting for .prompt files, you can use the Promptfile IntelliSense extension for VS Code.

We welcome contributions to enhance Ape's capabilities and improve its performance. To contribute, please see the CONTRIBUTING.md file for steps.

If you have any questions, feedback, or suggestions, please feel free to reach out to us. you can raise an issue in the issue tracker.

We also have a Discord server for the Ape community, where you can connect with other users, share your experiences, ask questions, and collaborate on the project. To join Weavel's community Discord server, click here.

ape-core is released under the MIT License. See the LICENSE file for more information.

Thanks to Stanford NLP's DSPy project for the inspiration.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Ape

Similar Open Source Tools

Ape

Ape is an AI prompt engineer tool powered by the open-source library 'ape-core', developed by Weavel. It allows users to generate AI prompts efficiently and effectively. The tool is designed to enhance productivity by providing syntax highlighting for '.prompt' files and welcoming contributions to improve its capabilities and performance. Users can seek help and support through the issue tracker or join the Ape community Discord server. Ape is licensed under the MIT License and credits Stanford NLP's DSPy project for inspiration.

dialog

Dialog is an API-focused tool designed to simplify the deployment of Large Language Models (LLMs) for programmers interested in AI. It allows users to deploy any LLM based on the structure provided by dialog-lib, enabling them to spend less time coding and more time training their models. The tool aims to humanize Retrieval-Augmented Generative Models (RAGs) and offers features for better RAG deployment and maintenance. Dialog requires a knowledge base in CSV format and a prompt configuration in TOML format to function effectively. It provides functionalities for loading data into the database, processing conversations, and connecting to the LLM, with options to customize prompts and parameters. The tool also requires specific environment variables for setup and configuration.

Virtual_Avatar_ChatBot

Virtual_Avatar_ChatBot is a free AI Chatbot with visual movement that runs on your local computer with minimal GPU requirement. It supports various features like Oogbabooga, betacharacter.ai, and Locall LLM. The tool requires Windows 7 or above, Python, C++ Compiler, Git, and other dependencies. Users can contribute to the open-source project by reporting bugs, creating pull requests, or suggesting new features. The goal is to enhance Voicevox functionality, support local LLM inference, and give the waifu access to the internet. The project references various tools like desktop-waifu, CharacterAI, Whisper, PYVTS, COQUI-AI, VOICEVOX, and VOICEVOX API.

generative_ai_with_langchain

Generative AI with LangChain is a code repository for building large language model (LLM) apps with Python, ChatGPT, and other LLMs. The repository provides code examples, instructions, and configurations for creating generative AI applications using the LangChain framework. It covers topics such as setting up the development environment, installing dependencies with Conda or Pip, using Docker for environment setup, and setting API keys securely. The repository also emphasizes stability, code updates, and user engagement through issue reporting and feedback. It aims to empower users to leverage generative AI technologies for tasks like building chatbots, question-answering systems, software development aids, and data analysis applications.

cody-vs

Sourcegraph’s AI code assistant, Cody for Visual Studio, enhances developer productivity by providing a natural and intuitive way to work. It offers features like chat, auto-edit, prompts, and works with various IDEs. Cody focuses on team productivity, offering whole codebase context and shared prompts for consistency. Users can choose from different LLM models like Claude, Gemini Pro, and OpenAI's GPT. Engineered for enterprise use, Cody supports flexible deployment and enterprise security. Suitable for any programming language, Cody excels with Python, Go, JavaScript, and TypeScript code.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

commanddash

Dash AI is an open-source coding assistant for Flutter developers. It is designed to not only write code but also run and debug it, allowing it to assist beyond code completion and automate routine tasks. Dash AI is powered by Gemini, integrated with the Dart Analyzer, and specifically tailored for Flutter engineers. The vision for Dash AI is to create a single-command assistant that can automate tedious development tasks, enabling developers to focus on creativity and innovation. It aims to assist with the entire process of engineering a feature for an app, from breaking down the task into steps to generating exploratory tests and iterating on the code until the feature is complete. To achieve this vision, Dash AI is working on providing LLMs with the same access and information that human developers have, including full contextual knowledge, the latest syntax and dependencies data, and the ability to write, run, and debug code. Dash AI welcomes contributions from the community, including feature requests, issue fixes, and participation in discussions. The project is committed to building a coding assistant that empowers all Flutter developers.

crosspublic

Support Channels into a Public FAQ with AI. Extract customer conversations into an FAQ accessible via public URL. Connects to Discord, converts conversations into FAQ using ChatGPT. Monorepo with NX containing backend, Discord bot, panel, tenants website, marketing website, and documentation. Self-funded project seeking community support.

FlowTest

FlowTestAI is the world’s first GenAI powered OpenSource Integrated Development Environment (IDE) designed for crafting, visualizing, and managing API-first workflows. It operates as a desktop app, interacting with the local file system, ensuring privacy and enabling collaboration via version control systems. The platform offers platform-specific binaries for macOS, with versions for Windows and Linux in development. It also features a CLI for running API workflows from the command line interface, facilitating automation and CI/CD processes.

OpenHands

OpenHands is a community focused on AI-driven development, offering a Software Agent SDK, CLI, Local GUI, Cloud deployment, and Enterprise solutions. The SDK is a Python library for defining and running agents, the CLI provides an easy way to start using OpenHands, the Local GUI allows running agents on a laptop with REST API, the Cloud deployment offers hosted infrastructure with integrations, and the Enterprise solution enables self-hosting via Kubernetes with extended support and access to the research team. OpenHands is available under the MIT license.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

NeoHaskell

NeoHaskell is a newcomer-friendly and productive dialect of Haskell. It aims to be easy to learn and use, while also powerful enough for app development with minimal effort and maximum confidence. The project prioritizes design and documentation before implementation, with ongoing work on design documents for community sharing.

AgentStack

AgentStack is a command-line tool that helps users create AI agent projects quickly and efficiently. It offers CLI utilities for code generation and simplifies the process of building agents and tasks. The tool is designed to work on macOS, Windows, and Linux, providing a seamless experience for developers. AgentStack aims to streamline the development process by offering pre-built templates, easy access to tools, and a curated experience on top of popular agent frameworks and LLM providers. It is not a low-code solution but rather a head-start for starting agent projects from scratch.

godot_rl_agents

Godot RL Agents is an open-source package that facilitates the integration of Machine Learning algorithms with games created in the Godot Engine. It provides interfaces for popular RL frameworks, support for memory-based agents, 2D and 3D games, AI sensors, and is licensed under MIT. Users can train agents in the Godot editor, create custom environments, export trained agents in ONNX format, and utilize advanced features like different RL training frameworks.

fuji-web

Fuji-Web is an intelligent AI partner designed for full browser automation. It autonomously navigates websites and performs tasks on behalf of the user while providing explanations for each action step. Users can easily install the extension in their browser, access the Fuji icon to input tasks, and interact with the tool to streamline web browsing tasks. The tool aims to enhance user productivity by automating repetitive web actions and providing a seamless browsing experience.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

For similar tasks

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines script writing flexibility, latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

lowcode-vscode

This repository is a low-code tool that supports ChatGPT and other LLM models. It provides functionalities such as OCR translation, generating specified format JSON, translating Chinese to camel case, translating current directory to English, and quickly creating code templates. Users can also generate CURD operations for managing backend list pages. The tool allows users to select templates, initialize query form configurations using OCR, initialize table configurations using OCR, translate Chinese fields using ChatGPT, and generate code without writing a single line. It aims to enhance productivity by simplifying code generation and development processes.

AI-Prompt-Genius

AI Prompt Genius is a Chrome extension that allows you to curate a custom library of AI prompts. It is built using React web app and Tailwind CSS with DaisyUI components. The extension enables users to create and manage AI prompts for various purposes. It provides a user-friendly interface for organizing and accessing AI prompts efficiently. AI Prompt Genius is designed to enhance productivity and creativity by offering a personalized collection of prompts tailored to individual needs. Users can easily install the extension from the Chrome Web Store and start using it to generate AI prompts for different tasks.

second-brain-agent

The Second Brain AI Agent Project is a tool designed to empower personal knowledge management by automatically indexing markdown files and links, providing a smart search engine powered by OpenAI, integrating seamlessly with different note-taking methods, and enhancing productivity by accessing information efficiently. The system is built on LangChain framework and ChromaDB vector store, utilizing a pipeline to process markdown files and extract text and links for indexing. It employs a Retrieval-augmented generation (RAG) process to provide context for asking questions to the large language model. The tool is beneficial for professionals, students, researchers, and creatives looking to streamline workflows, improve study sessions, delve deep into research, and organize thoughts and ideas effortlessly.

AI-scripts

AI-scripts is a repository containing various AI scripts used for daily tasks. It includes tools like 'holefill' for filling code snippets in VIM, 'aiemu' for emulation purposes, and 'chatsh [model]' for terminal-based ChatGPT functionality. The repository aims to streamline AI-related workflows and enhance productivity by providing convenient scripts for common tasks.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

readme-ai

README-AI is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. It streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software. It offers flexible README generation, supports multiple large language models (LLMs), provides customizable output options, works with various programming languages and project types, and includes an offline mode for generating boilerplate README files without external API calls.

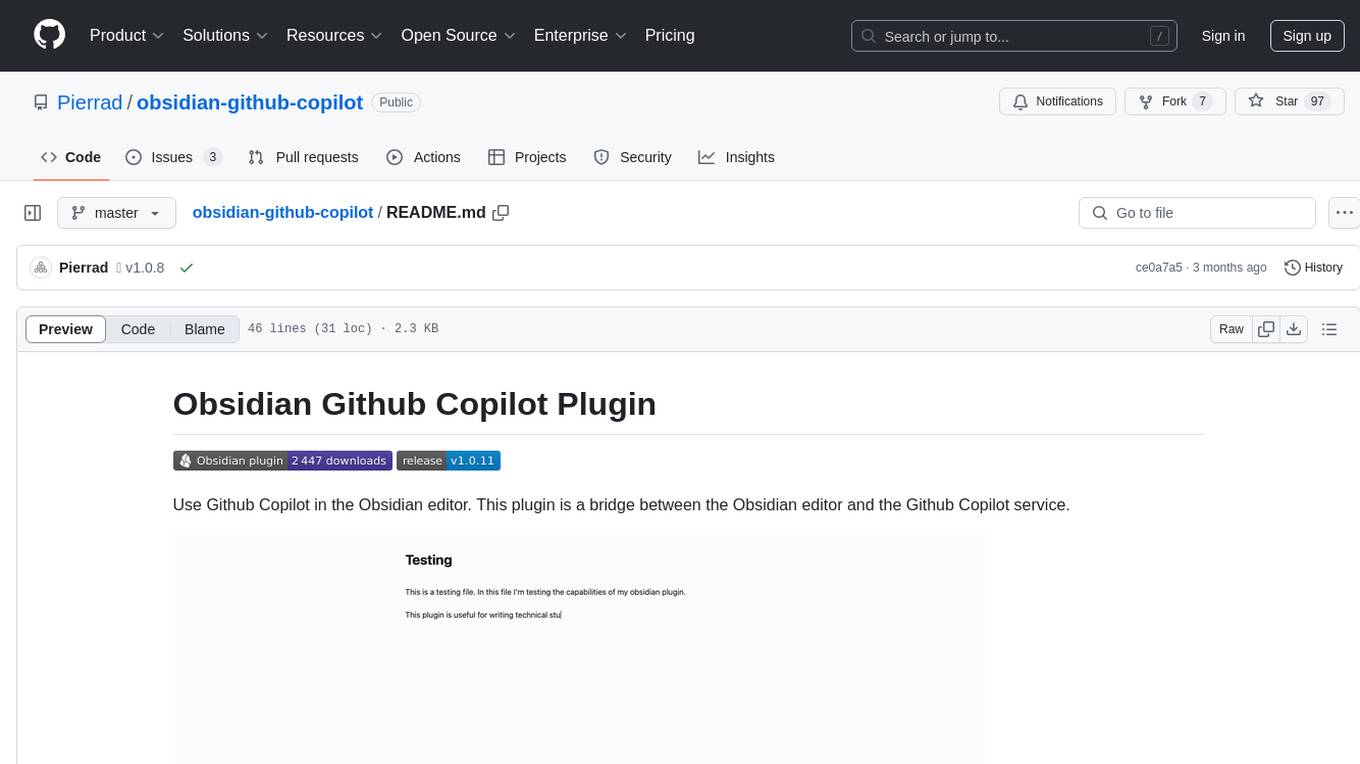

obsidian-github-copilot

Obsidian Github Copilot Plugin is a tool that enables users to utilize Github Copilot within the Obsidian editor. It acts as a bridge between Obsidian and the Github Copilot service, allowing for enhanced code completion and suggestion features. Users can configure various settings such as suggestion generation delay, key bindings, and visibility of suggestions. The plugin requires a Github Copilot subscription, Node.js 18 or later, and a network connection to interact with the Copilot service. It simplifies the process of writing code by providing helpful completions and suggestions directly within the Obsidian editor.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.