AI-scripts

Some handy AI scripts

Stars: 673

AI-scripts is a repository containing various AI scripts used for daily tasks. It includes tools like 'holefill' for filling code snippets in VIM, 'aiemu' for emulation purposes, and 'chatsh [model]' for terminal-based ChatGPT functionality. The repository aims to streamline AI-related workflows and enhance productivity by providing convenient scripts for common tasks.

README:

Some AI scripts I use daily.

-

chatsh: like ChatGPT but in the terminal (example chat) (example coding) (example refactor) -

holefill: I use it on VIM to fill code snippets

For VIM integration, this is my messy vimrc.

This repo in general is kinda gambiarra. Note: currently updating to ts!

Just npm install -g and run the given command the terminal.

You'll need to add Anthropic/OpenAI/etc. keys to ~/.config.

Most of this scripts will save a log to ~/.ai. If you don't want this

behavior, edit the scripts to suit your needs. (TODO: add a proper option.)

Everything here is MIT-licensed.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-scripts

Similar Open Source Tools

AI-scripts

AI-scripts is a repository containing various AI scripts used for daily tasks. It includes tools like 'holefill' for filling code snippets in VIM, 'aiemu' for emulation purposes, and 'chatsh [model]' for terminal-based ChatGPT functionality. The repository aims to streamline AI-related workflows and enhance productivity by providing convenient scripts for common tasks.

ai-hero

AI Hero is a course designed to help individuals transition from frontend, backend, or full-stack development to working with AI. The course includes examples, exercises, libraries & SDKs, and articles. The repository provides self-contained code samples to demonstrate various AI concepts and techniques. Users can follow the quickstart guide to install dependencies, set up API keys, and run examples. AI Hero aims to equip learners with the skills needed to become fully-fledged AI engineers.

code-companion

CodeCompanion.AI is an AI coding assistant desktop app that helps with various coding tasks. It features an interactive chat interface, file system operations, web search capabilities, semantic code search, a fully functional terminal, code preview and approval, unlimited context window, dynamic context management, and more. Users can save chat conversations and set custom instructions per project.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

labs-ai-tools-for-devs

This repository provides AI tools for developers through Docker containers, enabling agentic workflows. It allows users to create complex workflows using Dockerized tools and Markdown, leveraging various LLM models. The core features include Dockerized tools, conversation loops, multi-model agents, project-first design, and trackable prompts stored in a git repo.

anon-kode

ANON KODE is a terminal-based AI coding tool that utilizes any model supporting the OpenAI-style API. It helps in fixing spaghetti code, explaining function behavior, running tests and shell commands, and more based on the model used. Users can easily set up models, submit bugs, and ensure data privacy with no telemetry or backend servers other than chosen AI providers.

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

srcbook

Srcbook is an open-source interactive programming environment for TypeScript that allows users to create, run, and share reproducible programs and ideas. It features AI capabilities for exploring and iterating on ideas, supports exporting to valid markdown format, and enables diagraming with mermaid for rich annotations. Users can locally execute programs through a web interface, powered by Node.js under the Apache2 license.

dravid

Dravid (DRD) is an advanced, AI-powered CLI coding framework designed to follow user instructions until the job is completed, including fixing errors. It can generate code, fix errors, handle image queries, manage file operations, integrate with external APIs, and provide a development server with error handling. Dravid is extensible and requires Python 3.7+ and CLAUDE_API_KEY. Users can interact with Dravid through CLI commands for various tasks like creating projects, asking questions, generating content, handling metadata, and file-specific queries. It supports use cases like Next.js project development, working with existing projects, exploring new languages, Ruby on Rails project development, and Python project development. Dravid's project structure includes directories for source code, CLI modules, API interaction, utility functions, AI prompt templates, metadata management, and tests. Contributions are welcome, and development setup involves cloning the repository, installing dependencies with Poetry, setting up environment variables, and using Dravid for project enhancements.

vscode-reborn-ai

VSCode Reborn AI is a tool that allows users to write, refactor, and improve code in Visual Studio Code using artificial intelligence. Users can work offline with AI using a local LLM. The tool provides enhanced support for OpenRouter.ai API and ollama. It also offers compatibility with various local LLMs and alternative APIs. Additionally, it includes features such as internationalization, development setup instructions, testing in VS Code, packaging for VS Code, tech stack details, and licensing information.

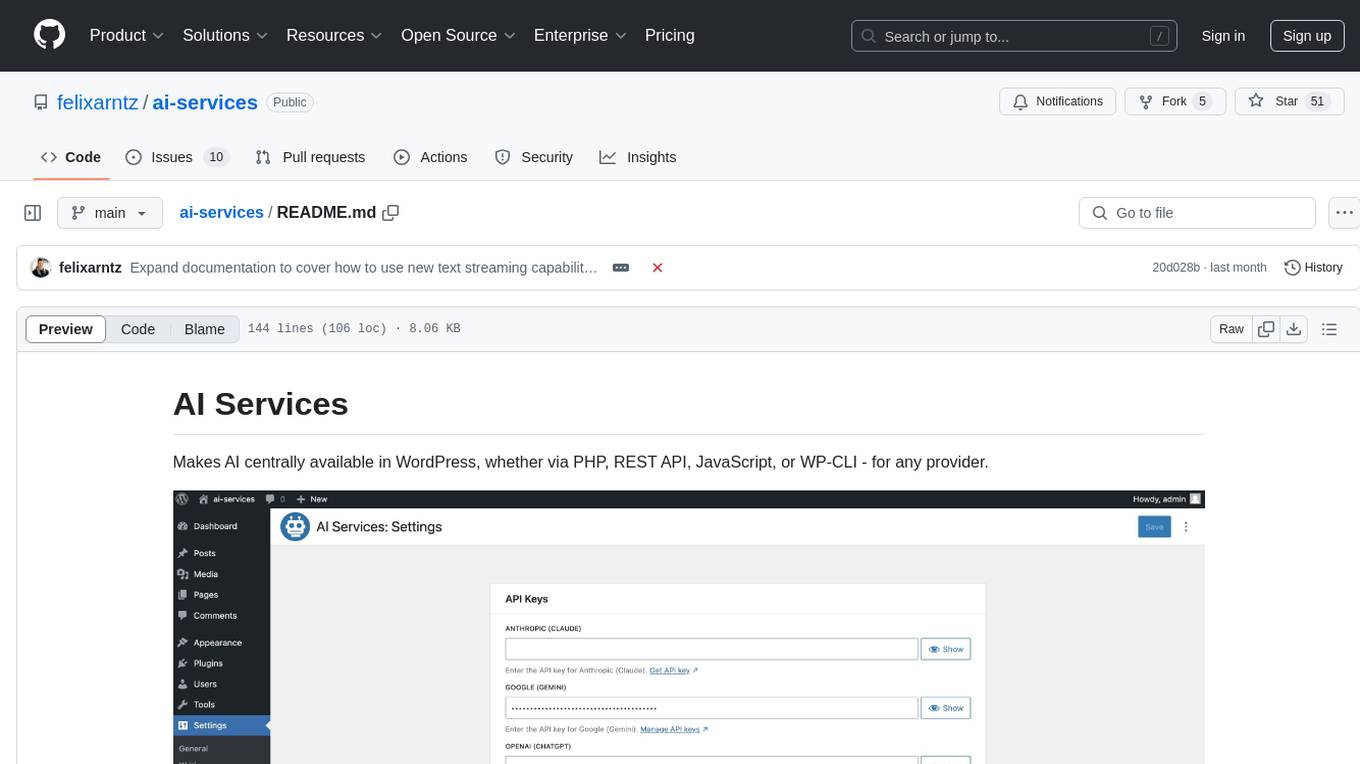

ai-services

AI Services is a WordPress plugin that provides a centralized infrastructure for integrating AI capabilities into WordPress websites. It allows other plugins to utilize AI services via a common API, making it easier for developers to incorporate AI features without the need to implement separate API layers. The plugin supports various AI services such as Anthropic, Google, and OpenAI, enabling users to choose their preferred service. It simplifies the process of configuring AI APIs and unlocks AI capabilities for smaller plugins or features. The plugin is still in early stages, with ongoing enhancements and improvements planned to streamline API usage and enhance user experience.

mentat

Mentat is an AI tool designed to assist with coding tasks directly from the command line. It combines human creativity with computer-like processing to help users understand new codebases, add new features, and refactor existing code. Unlike other tools, Mentat coordinates edits across multiple locations and files, with the context of the project already in mind. The tool aims to enhance the coding experience by providing seamless assistance and improving edit quality.

generative-ai-swift

The Google AI SDK for Swift enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. This SDK supports use cases like: - Generate text from text-only input - Generate text from text-and-images input (multimodal) - Build multi-turn conversations (chat)

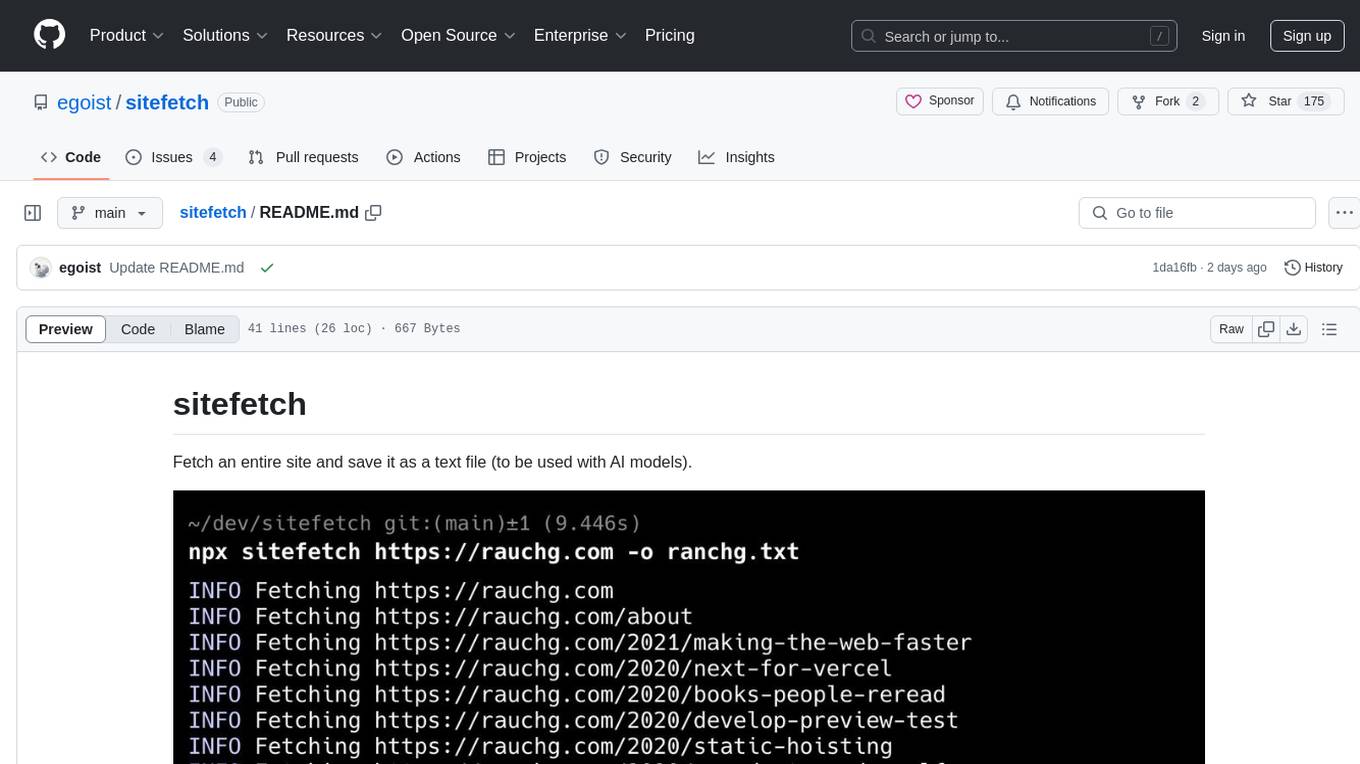

sitefetch

sitefetch is a tool designed to fetch an entire website and save it as a text file, primarily intended for use with AI models. It provides a simple and efficient way to download website content for further analysis or processing. The tool supports fetching multiple pages concurrently and offers both one-off and global installation options for ease of use.

ai-deadlines

AI Deadlines is a web app that displays submission deadlines for top AI conferences like NeurIPS and ICLR. It helps researchers know when to submit their papers. The data is fetched from a GitHub repository and updated automatically using a CRON job. The project is based on an existing repository and features a new UI. Users can contribute by updating conference deadlines in the provided YAML file. The app can be run locally with Node.js and npm or deployed using Docker. It is built with Vite, TypeScript, React, shadcn-ui, and Tailwind CSS. The project is licensed under MIT.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

For similar tasks

AI-scripts

AI-scripts is a repository containing various AI scripts used for daily tasks. It includes tools like 'holefill' for filling code snippets in VIM, 'aiemu' for emulation purposes, and 'chatsh [model]' for terminal-based ChatGPT functionality. The repository aims to streamline AI-related workflows and enhance productivity by providing convenient scripts for common tasks.

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines script writing flexibility, latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

lowcode-vscode

This repository is a low-code tool that supports ChatGPT and other LLM models. It provides functionalities such as OCR translation, generating specified format JSON, translating Chinese to camel case, translating current directory to English, and quickly creating code templates. Users can also generate CURD operations for managing backend list pages. The tool allows users to select templates, initialize query form configurations using OCR, initialize table configurations using OCR, translate Chinese fields using ChatGPT, and generate code without writing a single line. It aims to enhance productivity by simplifying code generation and development processes.

AI-Prompt-Genius

AI Prompt Genius is a Chrome extension that allows you to curate a custom library of AI prompts. It is built using React web app and Tailwind CSS with DaisyUI components. The extension enables users to create and manage AI prompts for various purposes. It provides a user-friendly interface for organizing and accessing AI prompts efficiently. AI Prompt Genius is designed to enhance productivity and creativity by offering a personalized collection of prompts tailored to individual needs. Users can easily install the extension from the Chrome Web Store and start using it to generate AI prompts for different tasks.

second-brain-agent

The Second Brain AI Agent Project is a tool designed to empower personal knowledge management by automatically indexing markdown files and links, providing a smart search engine powered by OpenAI, integrating seamlessly with different note-taking methods, and enhancing productivity by accessing information efficiently. The system is built on LangChain framework and ChromaDB vector store, utilizing a pipeline to process markdown files and extract text and links for indexing. It employs a Retrieval-augmented generation (RAG) process to provide context for asking questions to the large language model. The tool is beneficial for professionals, students, researchers, and creatives looking to streamline workflows, improve study sessions, delve deep into research, and organize thoughts and ideas effortlessly.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

readme-ai

README-AI is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. It streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software. It offers flexible README generation, supports multiple large language models (LLMs), provides customizable output options, works with various programming languages and project types, and includes an offline mode for generating boilerplate README files without external API calls.

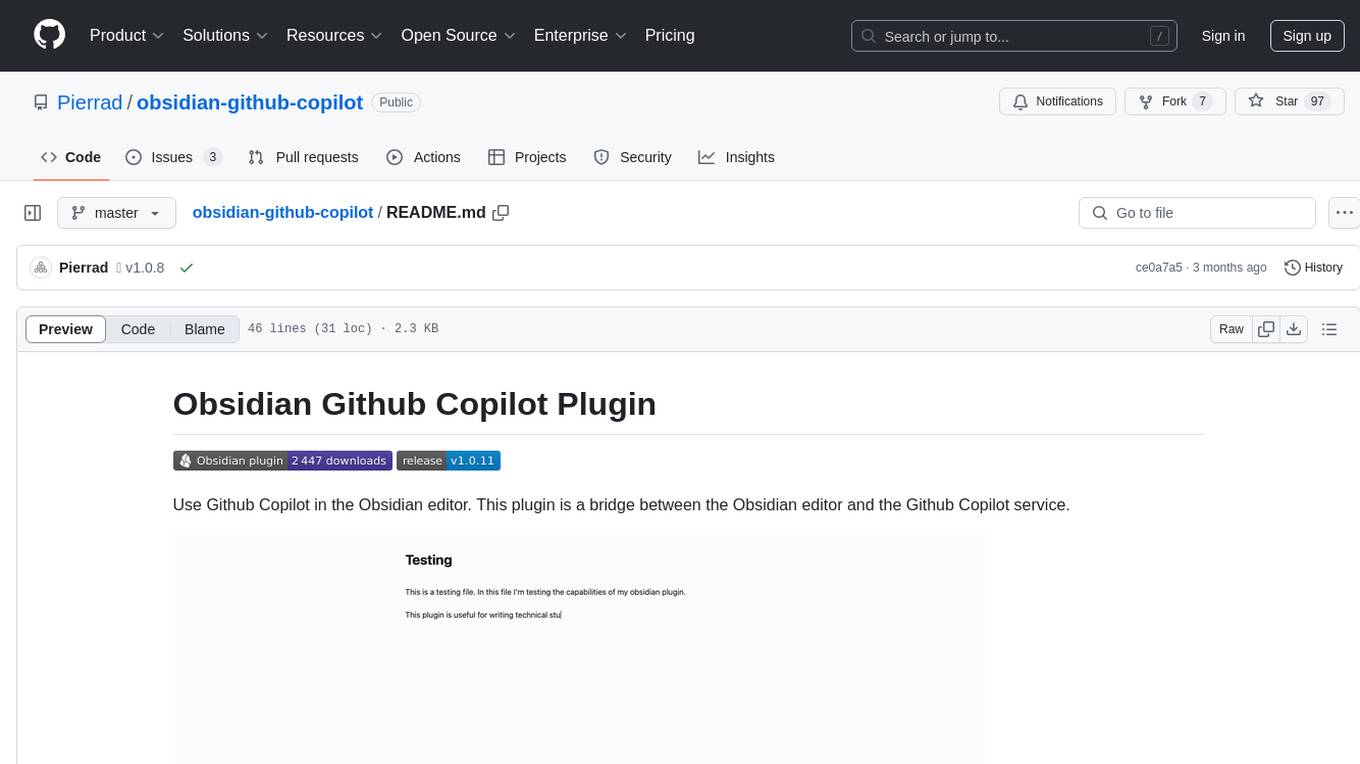

obsidian-github-copilot

Obsidian Github Copilot Plugin is a tool that enables users to utilize Github Copilot within the Obsidian editor. It acts as a bridge between Obsidian and the Github Copilot service, allowing for enhanced code completion and suggestion features. Users can configure various settings such as suggestion generation delay, key bindings, and visibility of suggestions. The plugin requires a Github Copilot subscription, Node.js 18 or later, and a network connection to interact with the Copilot service. It simplifies the process of writing code by providing helpful completions and suggestions directly within the Obsidian editor.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.