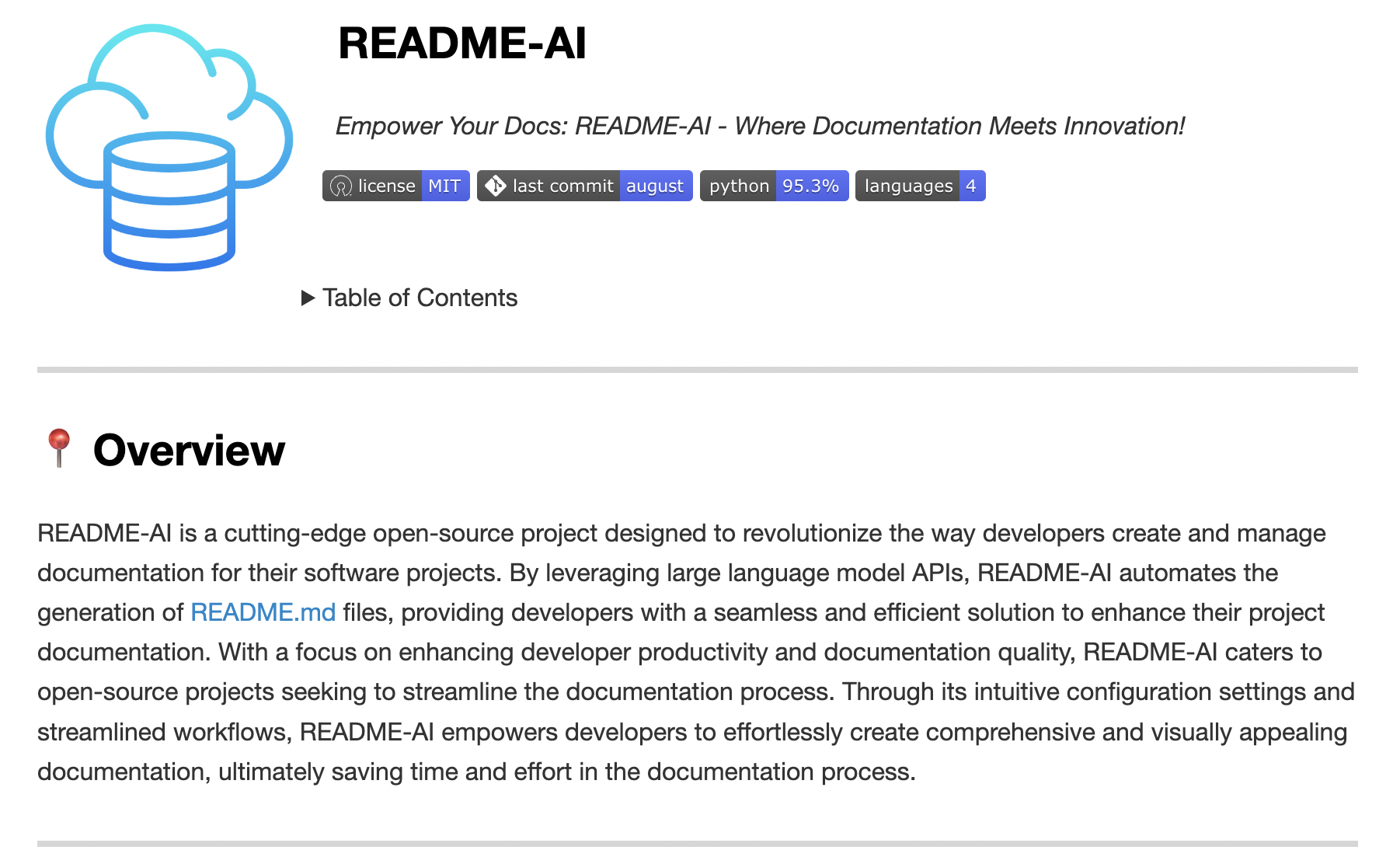

readme-ai

README file generator, powered by AI.

Stars: 1491

README-AI is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. It streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software. It offers flexible README generation, supports multiple large language models (LLMs), provides customizable output options, works with various programming languages and project types, and includes an offline mode for generating boilerplate README files without external API calls.


Designed for simplicity, customization, and developer productivity.

[!IMPORTANT] ✨ See the Official Documentation for more details.

Objective

README-AI is a developer tool for automatically generating README markdown files using a robust repository processor engine and generative AI. Simply provide a repository URL or local path to your codebase, and a well-structured and detailed README file will be generated for you.

Motivation

This project aims to streamline the documentation process for developers, ensuring projects are properly documented and easy to understand. Whether you're working on an open-source project, enterprise software, or a personal project, README-AI is here to help you create high-quality documentation quickly and efficiently.

Running from the command line:

Running directly in your browser:

- Automated Documentation: Synchronizes data from third-party sources and generates documentation automatically.

- Customizable Output: Dozens of options for styling/formatting, badges, header designs, and more.

- Language Agnostic: Works across a wide range of programming languages and project types.

- Multi-LLM Support: Compatible with OpenAI, Ollama, Anthropic, Google Gemini, and Offline Mode.

- Offline Mode: Generate a boilerplate README without calling an external API.

- Markdown Best Practices: Leverage best practices in Markdown formatting for clean, professional-looking docs.

A few combinations of README styles and configurations:

See the Configuration section for a complete list of CLI options.

📍 Overview

Overview ◎ High-level introduction of the project, focused on the value proposition and use cases rather than technical aspects.

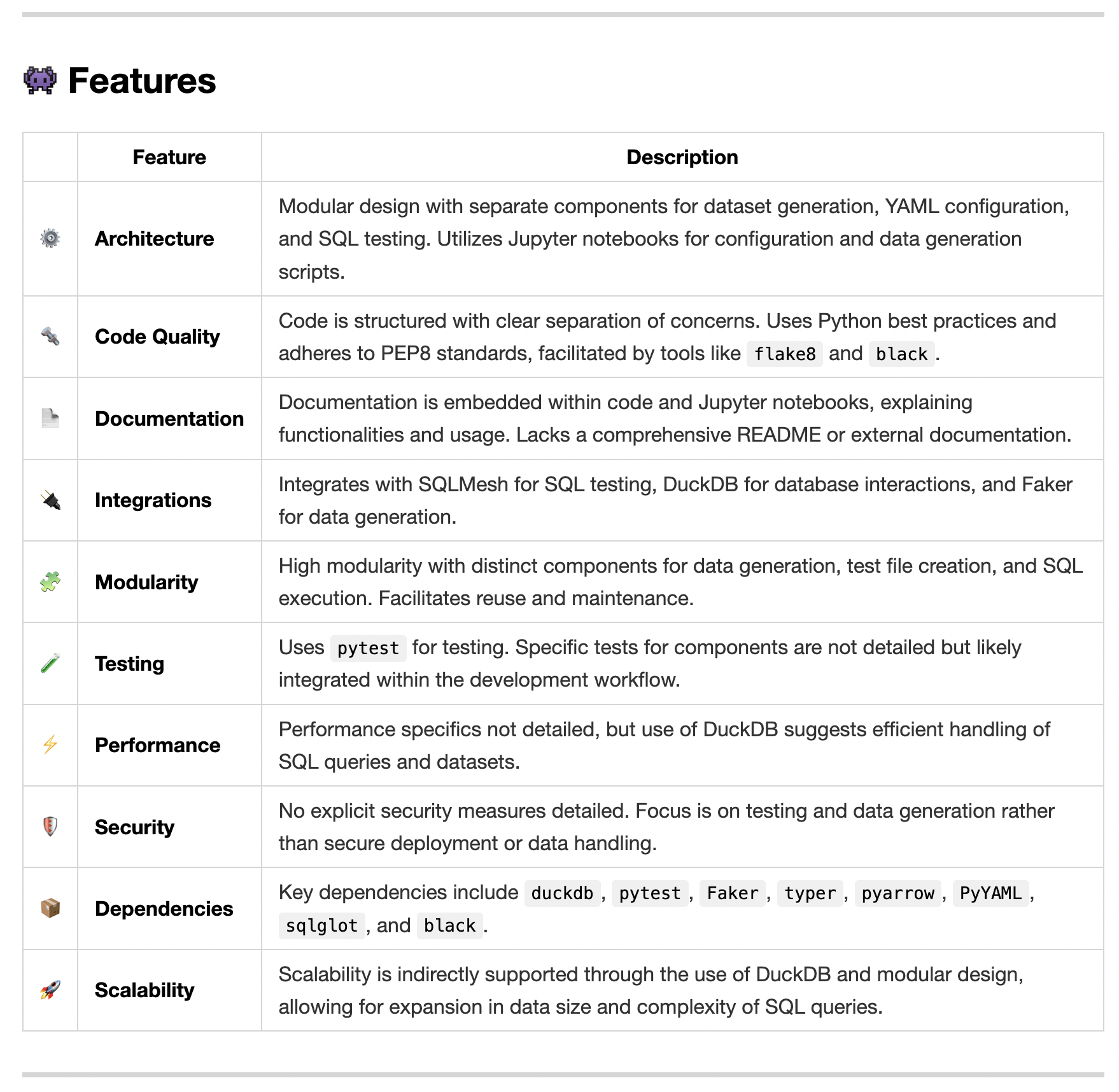

✨ Features

Features Table ◎ Generated markdown table that highlights the key technical features and components of the codebase. This table is generated using a structured prompt template.

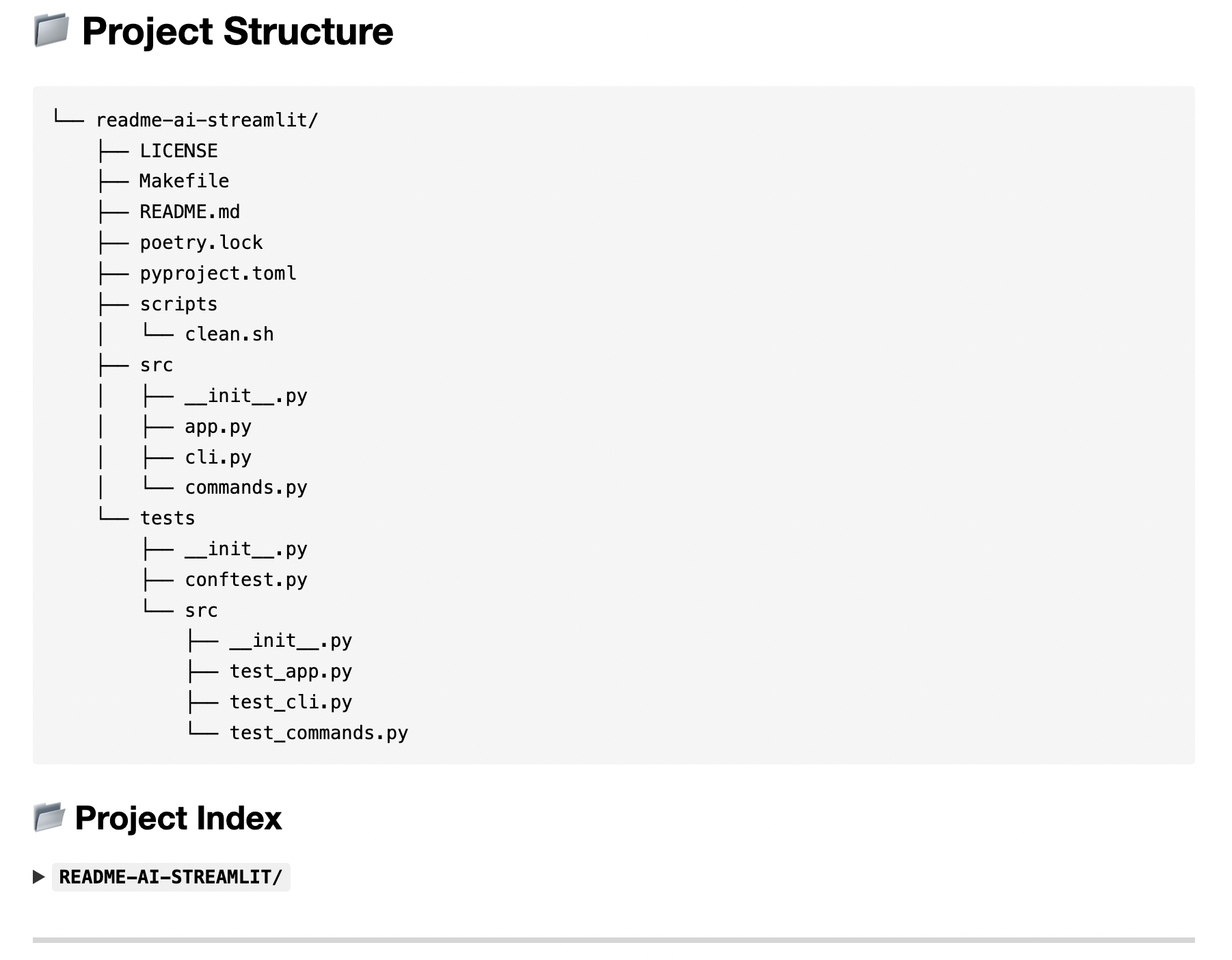

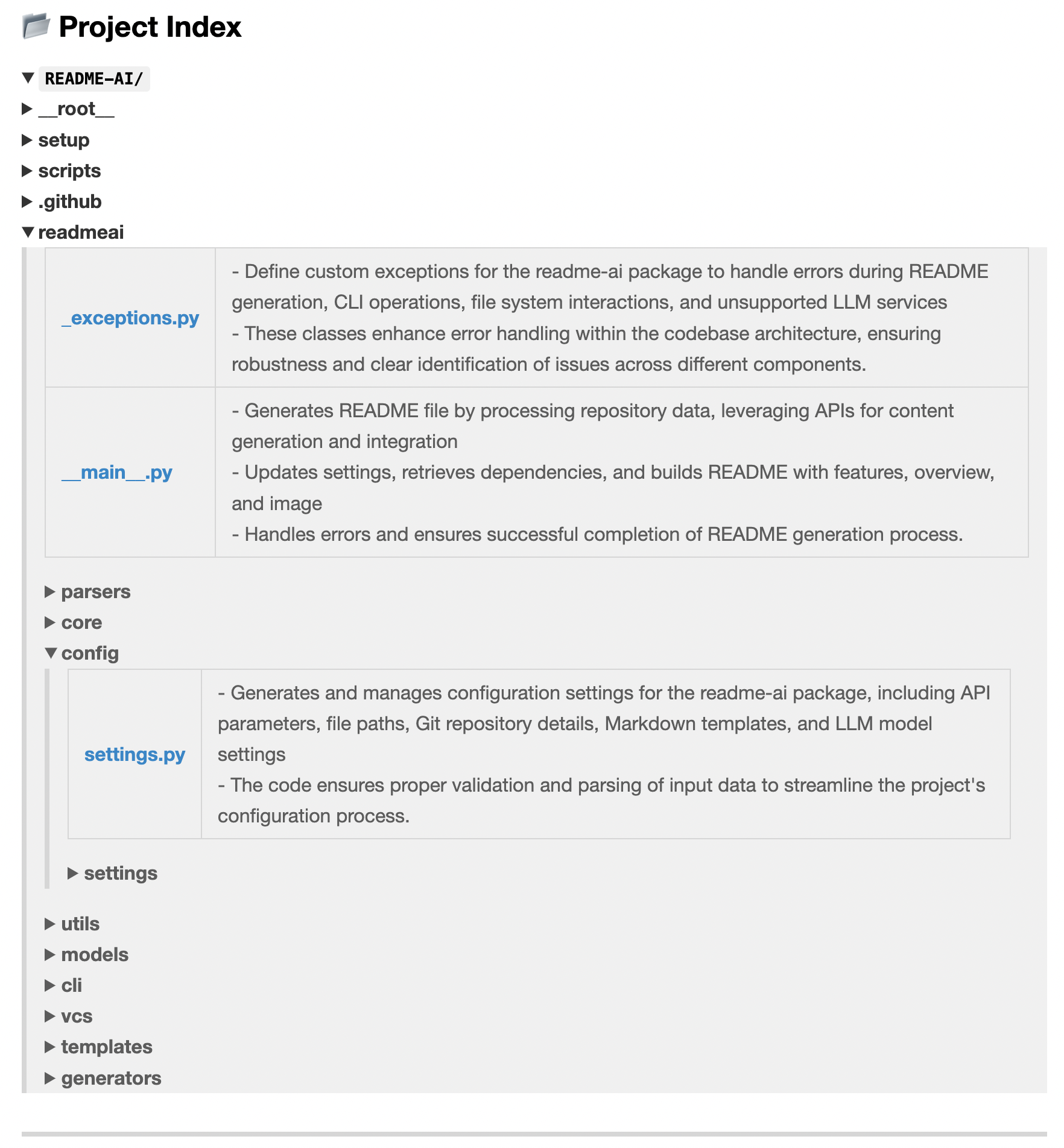

📃 Codebase Documentation

Directory Tree ◎ The project's directory structure is generated using pure Python and embedded in the README. See readmeai.generators.tree for more details.

File Summaries ◎ Summarizes key modules of the project, which are also used as context for downstream prompts.

🚀 Quickstart Instructions

Getting Started Guides ◎ Prerequisites and system requirements are extracted from the codebase during preprocessing. The parsers currently handle the majority of this logic.

Installation Guide ◎

🔰 Contributing Guidelines

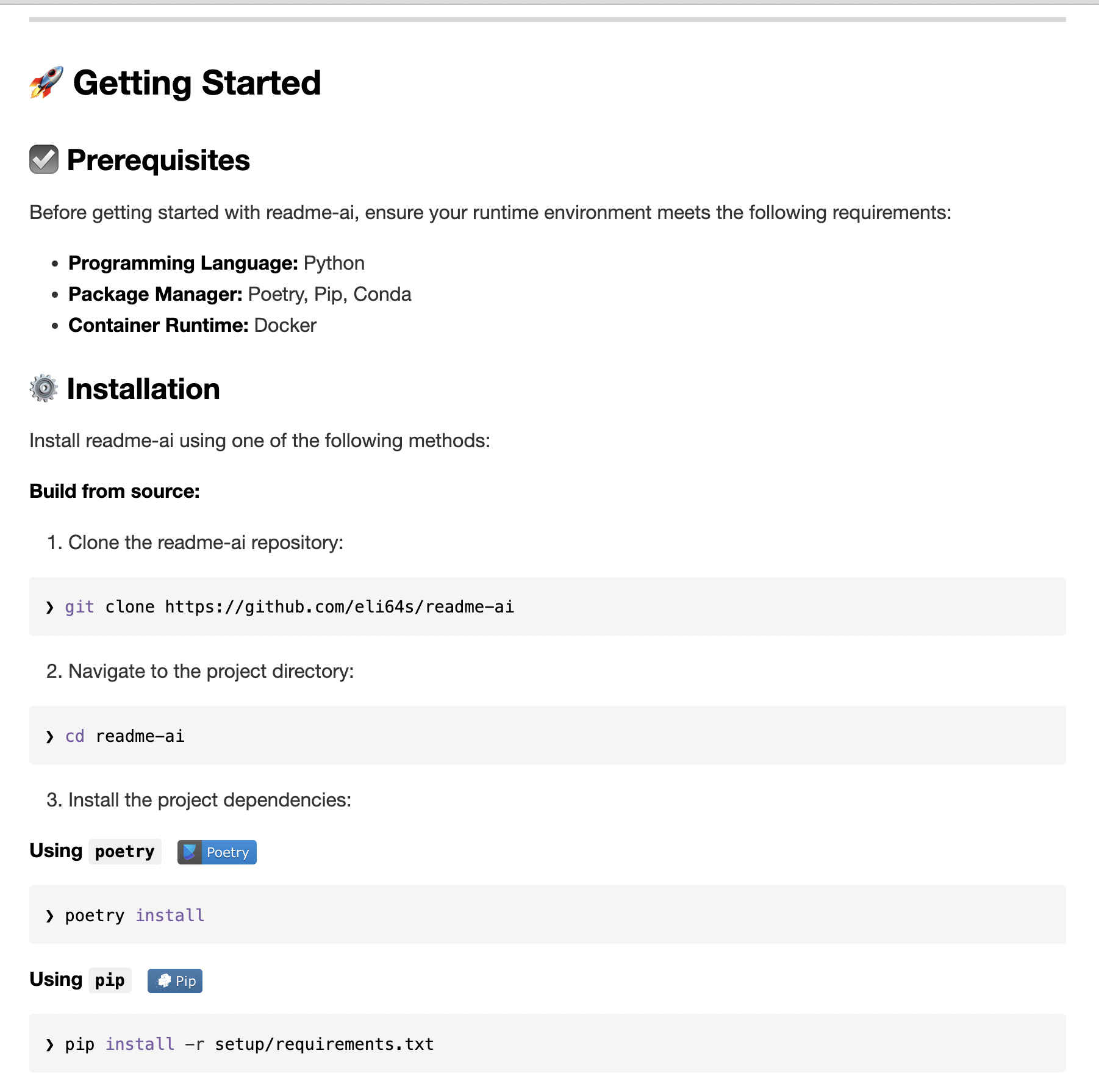

System Requirements:

- Python 3.9+

- Package Manager/Container: pip, pipx, docker

- LLM API Service: OpenAI, Ollama, Anthropic, Google Gemini, Offline Mode

Repository URL or Path:

Make sure to have a repository URL or local directory path ready for the CLI.

LLM API Service:

- OpenAI: Recommended, requires an account setup and API key.

- Ollama: Free and open-source, potentially slower and more resource-intensive.

- Anthropic: Requires an Anthropic account and API key.

- Google Gemini: Requires a Google Cloud account and API key.

- Offline Mode: Generates a boilerplate README without making API calls.
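The provider requirements listed above can be checked before a run. The following is a minimal sketch, not part of readme-ai itself: the helper name `missing_credentials` and the `REQUIRED_ENV` mapping are hypothetical, while the environment variable names and `--api` values are the ones used in this README's setup and usage commands.

```python
from typing import Mapping

# Environment variable each provider expects, as named in this README's setup steps.
# Ollama and offline mode need no API key (Ollama needs a running local server instead).
REQUIRED_ENV = {
    "openai": ("OPENAI_API_KEY",),
    "anthropic": ("ANTHROPIC_API_KEY",),
    "gemini": ("GOOGLE_API_KEY",),
    "ollama": (),
    "offline": (),
}


def missing_credentials(api: str, env: Mapping[str, str]) -> list[str]:
    """Return the variables that `api` needs but `env` lacks or leaves empty."""
    if api not in REQUIRED_ENV:
        raise ValueError(f"unknown --api value: {api!r}")
    return [name for name in REQUIRED_ENV[api] if not env.get(name)]


if __name__ == "__main__":
    import os

    # Print a one-line readiness report per provider for the current shell.
    for api in REQUIRED_ENV:
        missing = missing_credentials(api, os.environ)
        status = "ready" if not missing else "missing " + ", ".join(missing)
        print(f"{api:10s} {status}")
```

Passing the environment in as a mapping keeps the check easy to test and leaves the real `os.environ` untouched.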

Install readme-ai using your preferred package manager, container, or directly from the source.

❯ pip install readmeai

❯ pipx install readmeai

[!TIP] Use pipx to install and run Python command-line applications without causing dependency conflicts with other packages!

Pull the latest Docker image from the Docker Hub repository.

❯ docker pull zeroxeli/readme-ai:latest

Build readme-ai

❯ bash setup/setup.sh

- Clone the repository:

❯ git clone https://github.com/eli64s/readme-ai

- Navigate to the readme-ai directory:

❯ cd readme-ai

- Install dependencies using poetry:

❯ poetry install

- Enter the poetry shell environment:

❯ poetry shell

To use the Anthropic and Google Gemini clients, install the optional dependencies.

Anthropic:

❯ pip install readmeai[anthropic]

Google Gemini:

❯ pip install readmeai[gemini]

OpenAI

Generate an OpenAI API key and set it as the environment variable OPENAI_API_KEY.

# Using Linux or macOS

❯ export OPENAI_API_KEY=<your_api_key>

# Using Windows

❯ set OPENAI_API_KEY=<your_api_key>

Ollama

Pull your model of choice from the Ollama repository:

❯ ollama pull mistral:latest

Start the Ollama server:

❯ export OLLAMA_HOST=127.0.0.1 && ollama serve

See the Ollama model library for all available models.

Anthropic

Generate an Anthropic API key and set the following environment variables:

❯ export ANTHROPIC_API_KEY=<your_api_key>Google Gemini

Generate a Google API key and set the following environment variables:

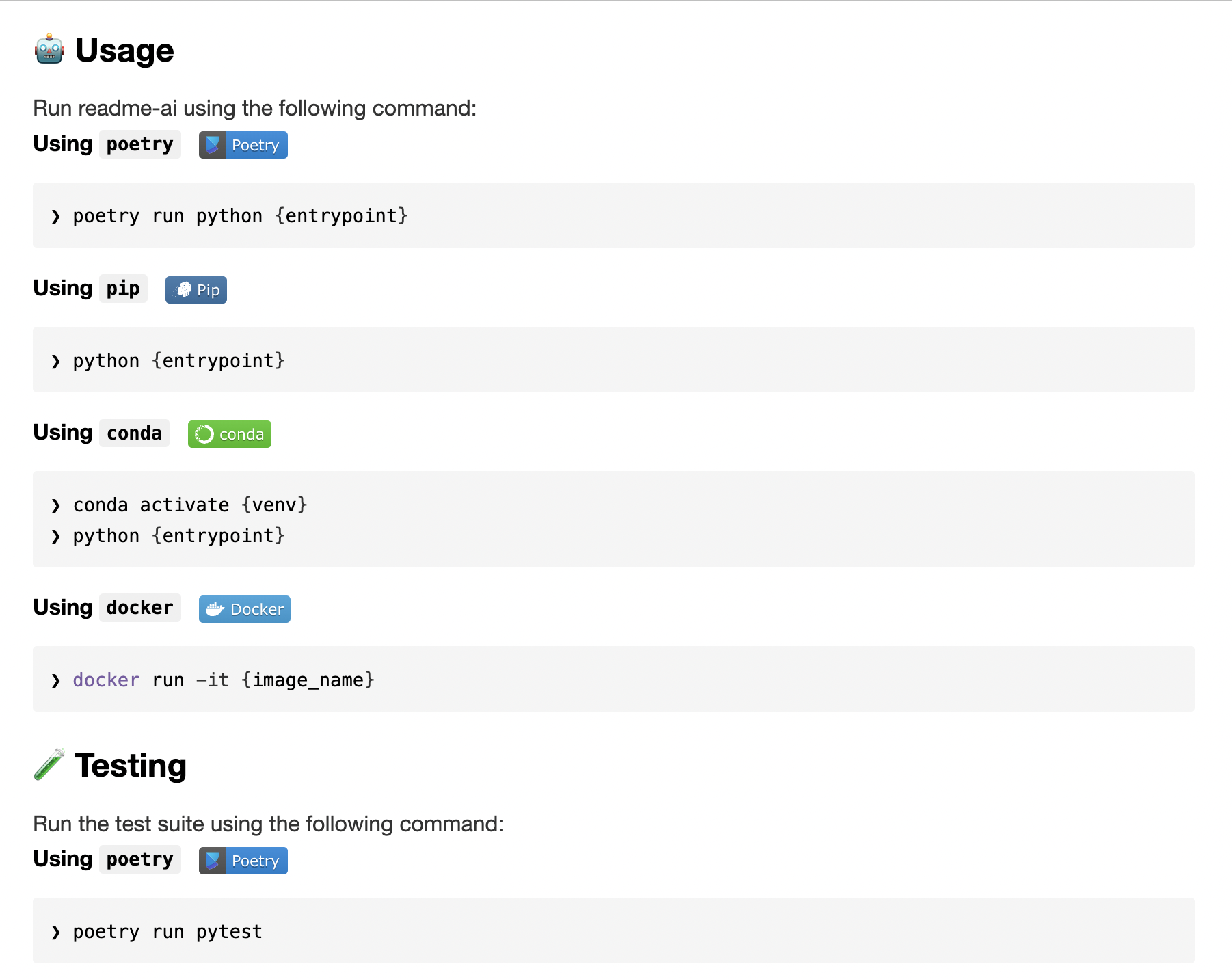

❯ export GOOGLE_API_KEY=<your_api_key>With OpenAI:

❯ readmeai --api openai --repository https://github.com/eli64s/readme-ai[! IMPORTANT] By default, the

gpt-3.5-turbomodel is used. Higher costs may be incurred when more advanced models.

With Ollama:

❯ readmeai --api ollama --model llama3 --repository https://github.com/eli64s/readme-ai

With Anthropic:

❯ readmeai --api anthropic -m claude-3-5-sonnet-20240620 -r https://github.com/eli64s/readme-ai

With Gemini:

❯ readmeai --api gemini -m gemini-1.5-flash -r https://github.com/eli64s/readme-ai

Adding more customization options:

❯ readmeai --repository https://github.com/eli64s/readme-ai \
--output readmeai.md \
--api openai \
--model gpt-4 \
--badge-color A931EC \
--badge-style flat-square \
--header-style compact \
--toc-style fold \
--temperature 0.9 \
--tree-depth 2 \
--image LLM \
--emojis

Running the Docker container with the OpenAI API:

❯ docker run -it \
-e OPENAI_API_KEY=$OPENAI_API_KEY \
-v "$(pwd)":/app zeroxeli/readme-ai:latest \
-r https://github.com/eli64s/readme-ai

Try readme-ai directly in your browser, no installation required. See the readme-ai-streamlit repository for more details.
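The CLI invocations above can also be assembled from a script, for example to regenerate a README in a scheduled job. This is a rough sketch under stated assumptions: `build_command` and `run` are hypothetical helpers, not part of readme-ai, and they use only the `--repository`, `--api`, `--model` and `--output` flags documented in this README.

```python
import shlex
import subprocess
from typing import Optional


def build_command(repository: str, api: str = "offline",
                  model: Optional[str] = None,
                  output: Optional[str] = None) -> list[str]:
    """Assemble a readmeai invocation as an argument list."""
    cmd = ["readmeai", "--repository", repository, "--api", api]
    if model is not None:
        cmd += ["--model", model]
    if output is not None:
        cmd += ["--output", output]
    return cmd


def run(cmd: list[str]) -> int:
    """Run the command and return its exit code (requires readmeai on PATH)."""
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    cmd = build_command("https://github.com/eli64s/readme-ai",
                        api="ollama", model="llama3", output="readmeai.md")
    # Show the shell-quoted command without executing it.
    print(shlex.join(cmd))
```

Passing an argument list to `subprocess.run` avoids shell quoting problems with local paths that contain spaces.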

Using readme-ai

❯ conda activate readmeai

❯ python3 -m readmeai.cli.main -r https://github.com/eli64s/readme-ai

❯ poetry shell

❯ poetry run python3 -m readmeai.cli.main -r https://github.com/eli64s/readme-ai

The pytest framework and nox automation tool are used for testing the application.

❯ make test

❯ make test-nox

[!TIP] Use nox to test the application against multiple Python environments and dependencies!

Customize your README generation using these CLI options:

| Option | Description | Default |
|---|---|---|
| --align | Text align in header | center |
| --api | LLM API service provider | offline |
| --badge-color | Badge color name or hex code | 0080ff |
| --badge-style | Badge icon style type | flat |
| --base-url | Base URL for the repository | v1/chat/completions |
| --context-window | Maximum context window of the LLM API | 3900 |
| --emojis | Adds emojis to the README header sections | False |
| --header-style | Header template style | classic |
| --image | Project logo image | blue |
| --model | Specific LLM model to use | gpt-3.5-turbo |
| --output | Output filename | readme-ai.md |
| --rate-limit | Maximum API requests per minute | 10 |
| --repository | Repository URL or local directory path | None |
| --temperature | Creativity level for content generation | 0.1 |
| --toc-style | Table of contents template style | bullet |
| --top-p | Probability of the top-p sampling method | 0.9 |
| --tree-depth | Maximum depth of the directory tree structure | 2 |

[!TIP] For a full list of options, run readmeai --help in your terminal.

To see the full list of customization options, check out the Configuration section in the official documentation. This section provides a detailed overview of all available CLI options and how to use them, including badge styles, header templates, and more.
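One way to work with the defaults in the options table is to keep them in a small mapping and emit flags only for values that differ. This is an illustrative sketch, not readme-ai's own configuration code: `DEFAULTS` and `to_flags` are hypothetical names, the values are copied from the table, and `--repository` and `--base-url` are left out for brevity.

```python
# Defaults copied from the CLI options table in this README.
DEFAULTS = {
    "align": "center",
    "api": "offline",
    "badge-color": "0080ff",
    "badge-style": "flat",
    "context-window": 3900,
    "emojis": False,
    "header-style": "classic",
    "image": "blue",
    "model": "gpt-3.5-turbo",
    "output": "readme-ai.md",
    "rate-limit": 10,
    "temperature": 0.1,
    "toc-style": "bullet",
    "top-p": 0.9,
    "tree-depth": 2,
}


def to_flags(options: dict) -> list[str]:
    """Turn option overrides into CLI flags, skipping values equal to the default."""
    flags: list[str] = []
    for name, value in options.items():
        if name not in DEFAULTS:
            raise KeyError(f"option not in the table: --{name}")
        if value == DEFAULTS[name]:
            continue  # same as the documented default, so no flag is needed
        if isinstance(DEFAULTS[name], bool):
            flags.append(f"--{name}")  # boolean switch such as --emojis takes no value
        else:
            flags += [f"--{name}", str(value)]
    return flags


if __name__ == "__main__":
    print(to_flags({"badge-style": "flat-square", "temperature": 0.1, "emojis": True}))
```

Skipping values that match the defaults keeps generated commands short and makes intentional overrides easy to spot.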

| Language/Framework | Output File | Input Repository | Description |
|---|---|---|---|
| Python | readme-python.md | readme-ai | Core readme-ai project |
| TypeScript & React | readme-typescript.md | ChatGPT App | React Native ChatGPT app |
| PostgreSQL & DuckDB | readme-postgres.md | Buenavista | Postgres proxy server |
| Kotlin & Android | readme-kotlin.md | file.io Client | Android file sharing app |
| Streamlit | readme-streamlit.md | readme-ai-streamlit | Streamlit UI for readme-ai app |
| Rust & C | readme-rust-c.md | CallMon | System call monitoring tool |
| Docker & Go | readme-go.md | docker-gs-ping | Dockerized Go app |
| Java | readme-java.md | Minimal-Todo | Minimalist todo Java app |
| FastAPI & Redis | readme-fastapi-redis.md | async-ml-inference | Async ML inference service |
| Jupyter Notebook | readme-mlops.md | mlops-course | MLOps course repository |
| Apache Flink | readme-local.md | Local Directory | Example using a local directory |

See additional README files generated by readme-ai here

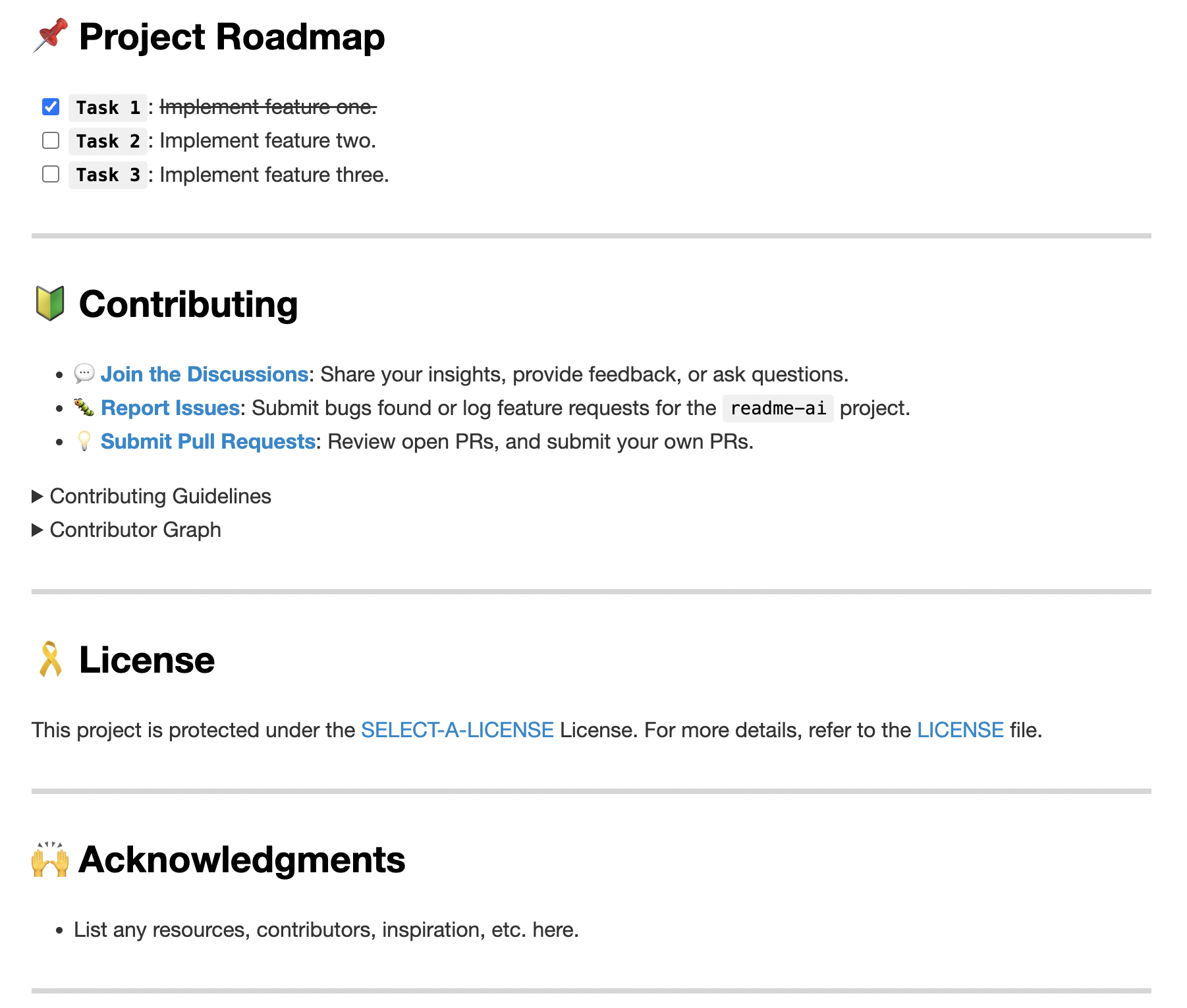

- [ ] Release readmeai 1.0.0 with enhanced documentation management features.

- [ ] Develop VS Code Extension to generate README files directly in the editor.

- [ ] Develop GitHub Actions to automate documentation updates.

- [ ] Add badge packs to provide additional badge styles and options.

  - [ ] Code coverage, CI/CD status, project version, and more.

Contributions are welcome and encouraged! If interested, please begin by reviewing the resources below:

- 💡 Contributing Guide: Learn about our contribution process, coding standards, and how to submit your ideas.

- 💬 Start a Discussion: Have questions or suggestions? Join our community discussions to share your thoughts and engage with others.

- 🐛 Report an Issue: Found a bug or have a feature request? Let us know by opening an issue so we can address it promptly.


Similar Open Source Tools

OSA

OSA (Open-Source-Advisor) is a tool designed to improve the quality of scientific open source projects by automating the generation of README files, documentation, and CI/CD scripts, and by providing advice and recommendations for repositories. It supports various LLMs accessible via API, local servers, or osa_bot hosted on ITMO servers. OSA is currently under development with features like README file generation, documentation generation, automatic implementation of changes, LLM integration, and GitHub Action Workflow generation. It requires Python 3.10 or higher, tokens for GitHub/GitLab/Gitverse, and an LLM API key. Users can install OSA from PyPI or build from source, and run it using CLI commands or Docker containers.

stylekit

StyleKit is a comprehensive design system toolkit that helps both humans and AI generate consistent, high-quality UI code. It provides structured style specifications, design tokens, component recipes, prompt templates, and export tools — everything needed to go from 'I want a glassmorphism SaaS dashboard' to production-ready frontend code. With 90+ visual styles, 20+ page templates, 25+ UI components, and AI-powered tools like Prompt Builder, Smart Recommender, Style Linter, Style Analyzer, and Style Blender, StyleKit offers a platform with GitHub OAuth, ratings & comments, style submissions, instant community availability, favorites, bilingual support, PWA, and dark/light mode themes.

mcp-context-forge

MCP Context Forge is a powerful tool for generating context-aware data for machine learning models. It provides functionalities to create diverse datasets with contextual information, enhancing the performance of AI algorithms. The tool supports various data formats and allows users to customize the context generation process easily. With MCP Context Forge, users can efficiently prepare training data for tasks requiring contextual understanding, such as sentiment analysis, recommendation systems, and natural language processing.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

superset

Superset is a turbocharged terminal that allows users to run multiple CLI coding agents simultaneously, isolate tasks in separate worktrees, monitor agent status, review changes quickly, and enhance development workflow. It supports any CLI-based coding agent and offers features like parallel execution, worktree isolation, agent monitoring, built-in diff viewer, workspace presets, universal compatibility, quick context switching, and IDE integration. Users can customize keyboard shortcuts, configure workspace setup and teardown, and contribute to the project. The tech stack includes Electron, React, TailwindCSS, Bun, Turborepo, Vite, Biome, Drizzle ORM, Neon, and tRPC. The community provides support through Discord, Twitter, GitHub Issues, and GitHub Discussions.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

openbrowser-ai

OpenBrowser is a framework for intelligent browser automation that combines direct CDP communication with a CodeAgent architecture. It allows users to navigate, interact with, and extract information from web pages autonomously. The tool supports various LLM providers, offers vision support for screenshot analysis, and includes an MCP server for Model Context Protocol support. Users can record browser sessions as video files, and full documentation is available at docs.openbrowser.me.

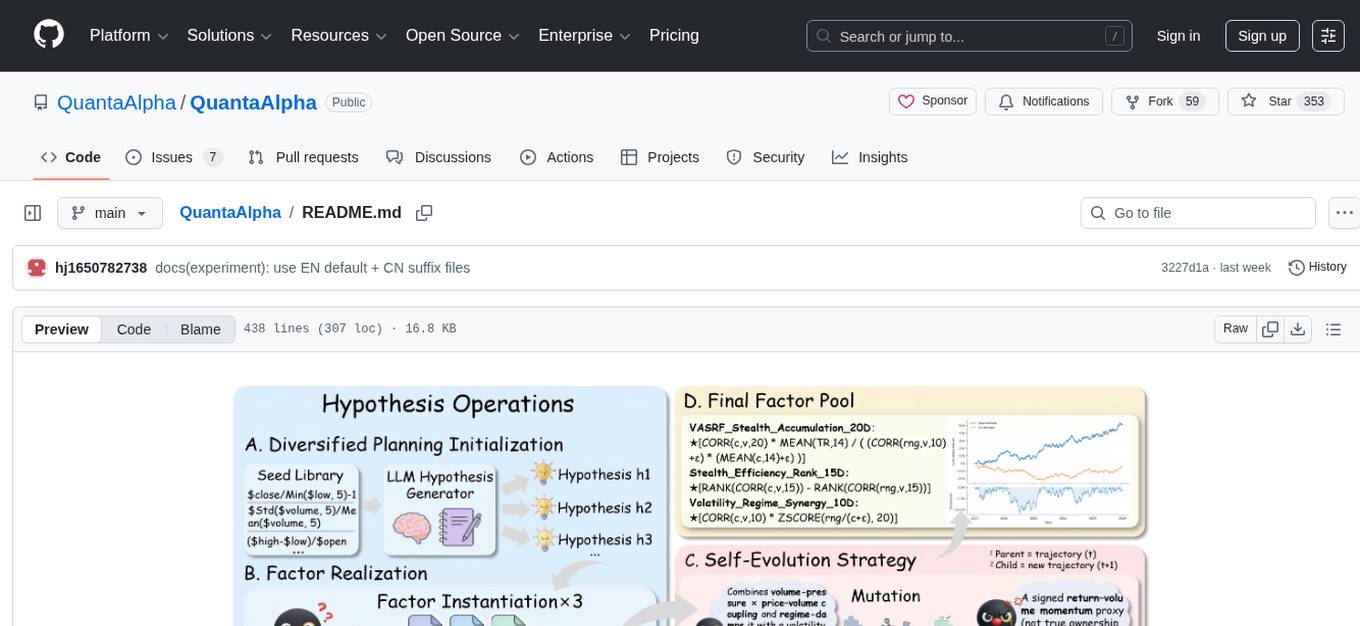

QuantaAlpha

QuantaAlpha is a framework designed for factor mining in quantitative alpha research. It combines LLM intelligence with evolutionary strategies to automatically mine, evolve, and validate alpha factors through self-evolving trajectories. The framework provides a trajectory-based approach with diversified planning initialization and structured hypothesis-code constraint. Users can describe their research direction and observe the automatic factor mining process. QuantaAlpha aims to transform how quantitative alpha factors are discovered by leveraging advanced technologies and self-evolving methodologies.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

auto-engineer

Auto Engineer is a tool designed to automate the Software Development Life Cycle (SDLC) by building production-grade applications with a combination of human and AI agents. It offers a plugin-based architecture that allows users to install only the necessary functionality for their projects. The tool guides users through key stages including Flow Modeling, IA Generation, Deterministic Scaffolding, AI Coding & Testing Loop, and Comprehensive Quality Checks. Auto Engineer follows a command/event-driven architecture and provides a modular plugin system for specific functionalities. It supports TypeScript with strict typing throughout and includes a built-in message bus server with a web dashboard for monitoring commands and events.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

google_workspace_mcp

The Google Workspace MCP Server is a production-ready server that integrates major Google Workspace services with AI assistants. It supports single-user and multi-user authentication via OAuth 2.1, making it a powerful backend for custom applications. Built with FastMCP for optimal performance, it features advanced authentication handling, service caching, and streamlined development patterns. The server provides full natural language control over Google Calendar, Drive, Gmail, Docs, Sheets, Slides, Forms, Tasks, and Chat through all MCP clients, AI assistants, and developer tools. It supports free Google accounts and Google Workspace plans with expanded app options like Chat & Spaces. The server also offers private cloud instance options.

For similar tasks

AIDE-unipi

AIDE @ unipi is a repository containing students' material for the course in Artificial Intelligence and Data Engineering at the University of Pisa. It includes slides, students' notes, information about exam methods, oral questions, past exams, and links to past students' projects. The material is unofficial, created by students for students, and checked only by students. Contributions are welcome through pull requests, issues, or contacting maintainers. The repository aims to provide non-profit resources for the course, with the opportunity for contributors to be acknowledged and credited. It also offers links to Telegram and WhatsApp groups for further interaction and a Google Drive folder with additional resources for AIDE published by past students.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

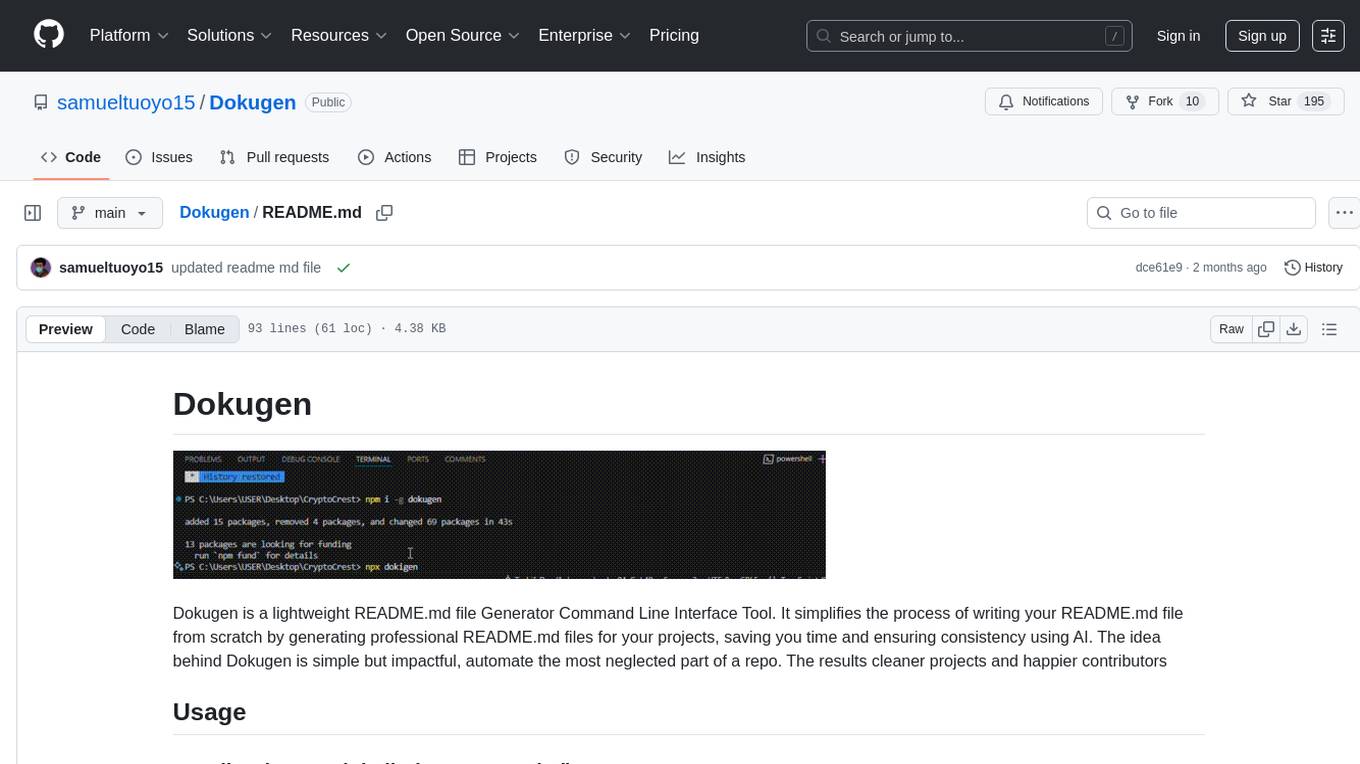

Dokugen

Dokugen is a lightweight README.md file Generator Command Line Interface Tool that simplifies the process of writing README.md files by generating professional READMEs for projects, saving time and ensuring consistency using AI. It automates the most neglected part of a repo, resulting in cleaner projects and happier contributors.

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines script writing flexibility, latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

lowcode-vscode

This repository is a low-code tool that supports ChatGPT and other LLMs. It provides functionalities such as OCR translation, generating JSON in a specified format, translating Chinese to camel case, translating the current directory to English, and quickly creating code templates. Users can also generate CRUD operations for managing backend list pages. The tool allows users to select templates, initialize query form configurations using OCR, initialize table configurations using OCR, translate Chinese fields using ChatGPT, and generate code without writing a single line. It aims to enhance productivity by simplifying code generation and development processes.

AI-Prompt-Genius

AI Prompt Genius is a Chrome extension that allows you to curate a custom library of AI prompts. It is built as a React web app with Tailwind CSS and DaisyUI components. The extension enables users to create and manage AI prompts for various purposes. It provides a user-friendly interface for organizing and accessing AI prompts efficiently. AI Prompt Genius is designed to enhance productivity and creativity by offering a personalized collection of prompts tailored to individual needs. Users can easily install the extension from the Chrome Web Store and start using it to generate AI prompts for different tasks.

second-brain-agent

The Second Brain AI Agent Project is a tool designed to empower personal knowledge management by automatically indexing markdown files and links, providing a smart search engine powered by OpenAI, integrating seamlessly with different note-taking methods, and enhancing productivity by accessing information efficiently. The system is built on LangChain framework and ChromaDB vector store, utilizing a pipeline to process markdown files and extract text and links for indexing. It employs a Retrieval-augmented generation (RAG) process to provide context for asking questions to the large language model. The tool is beneficial for professionals, students, researchers, and creatives looking to streamline workflows, improve study sessions, delve deep into research, and organize thoughts and ideas effortlessly.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.