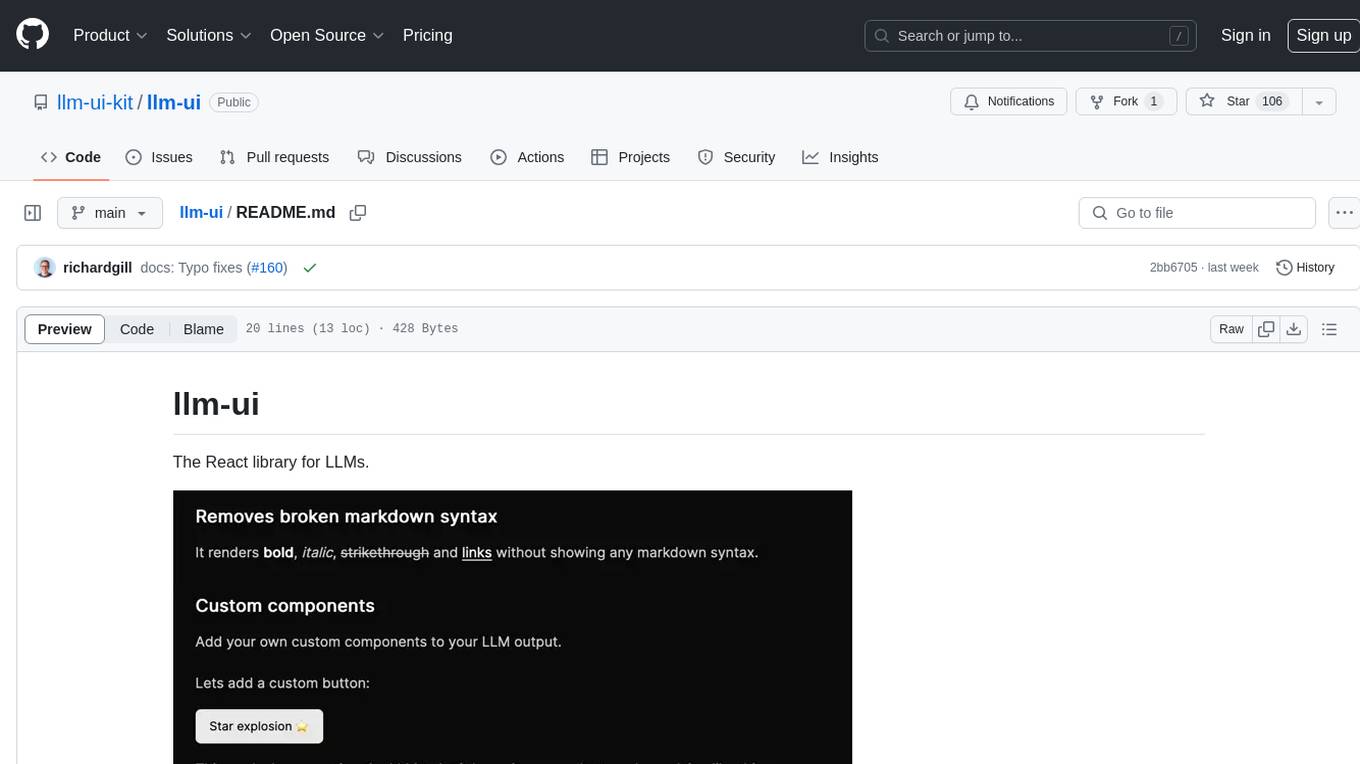

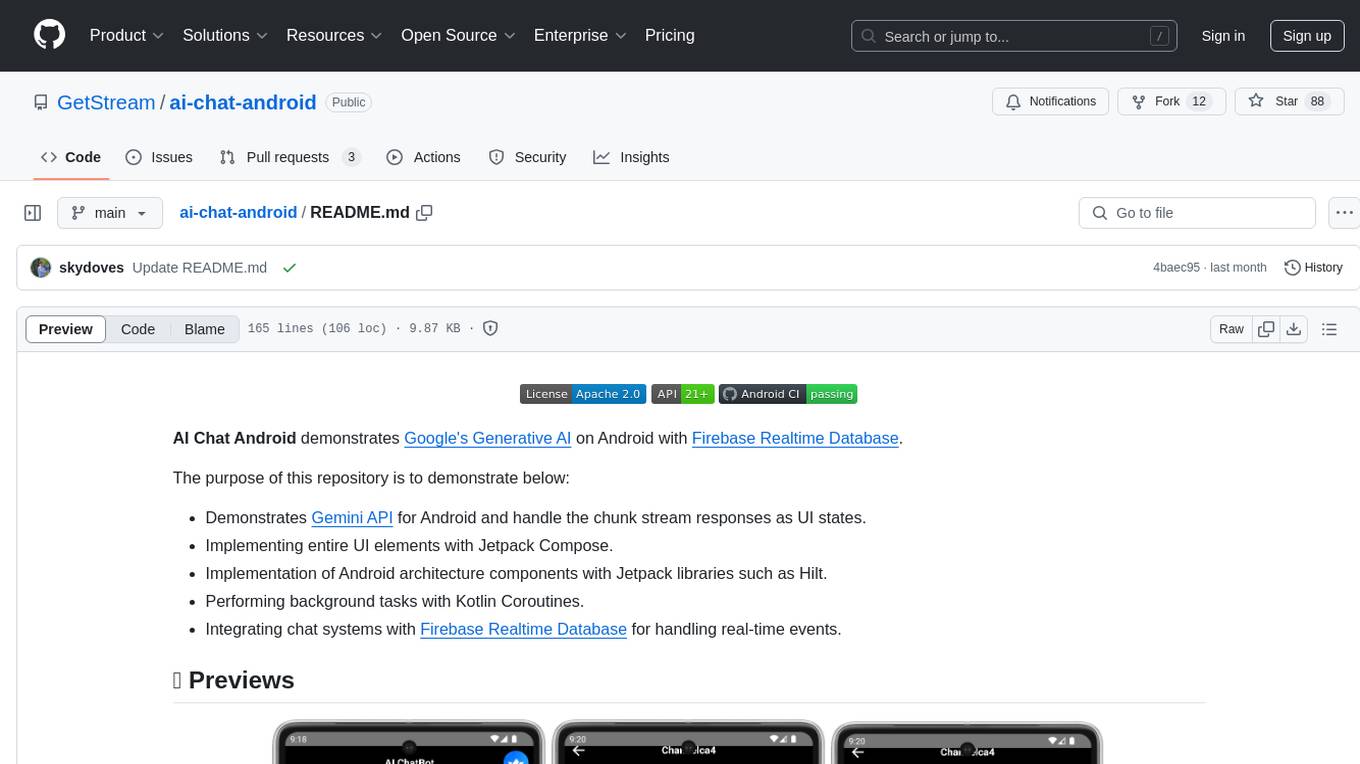

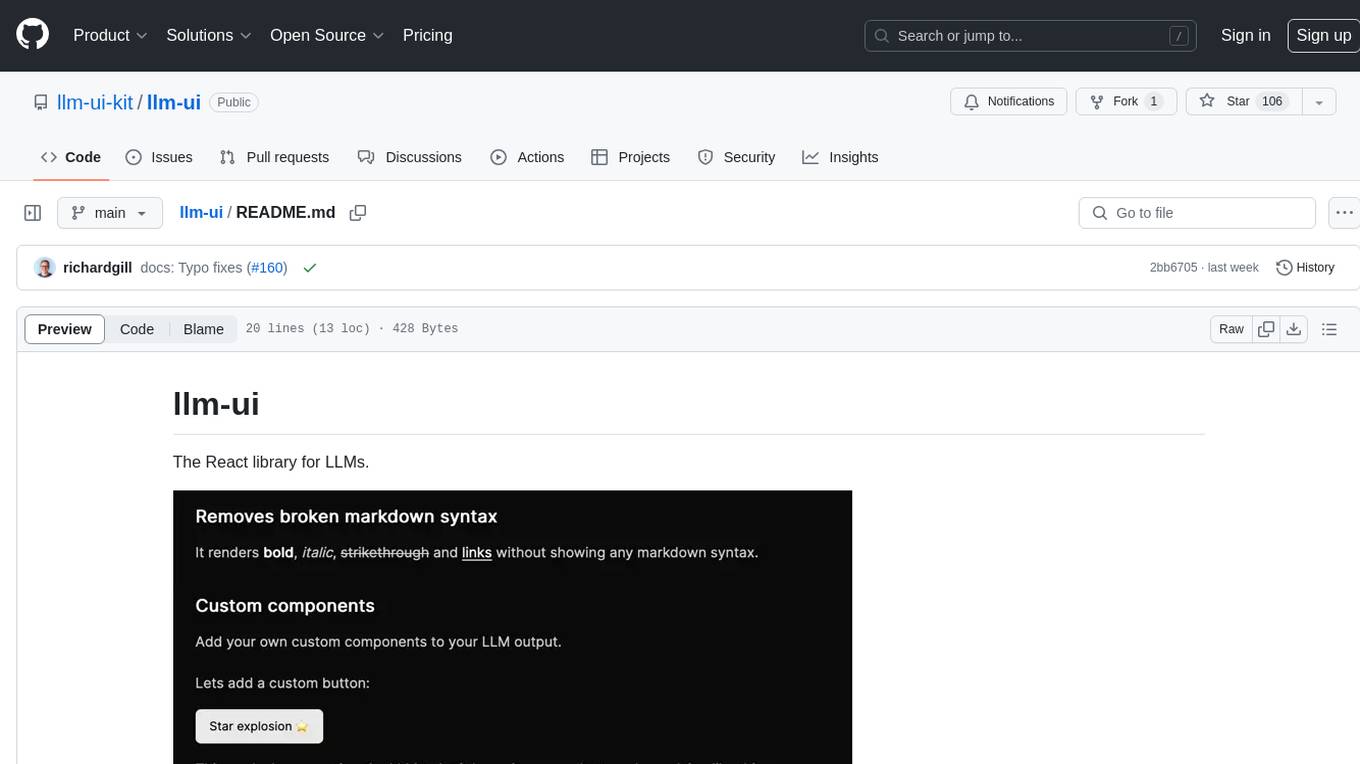

llm-ui

The React library for LLMs

Stars: 253

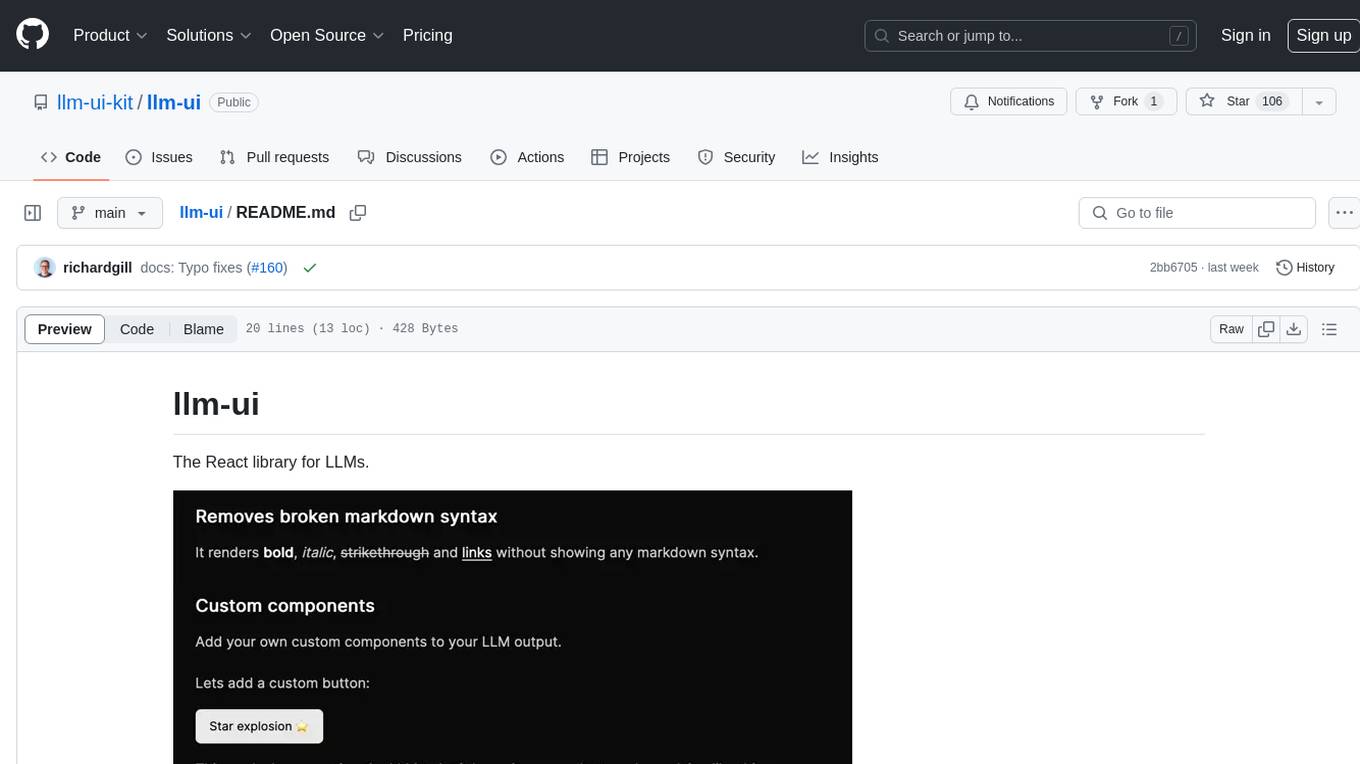

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.

README:

The React library for LLMs.

- Removes broken markdown syntax

- Add your own custom components to LLM output.

- Throttling smooths out pauses in the LLM’s streamed output

- Renders output at native frame rate

- Code blocks for every language with Shiki

- Headless: Bring your own styles

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm-ui

Similar Open Source Tools

llm-ui

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.

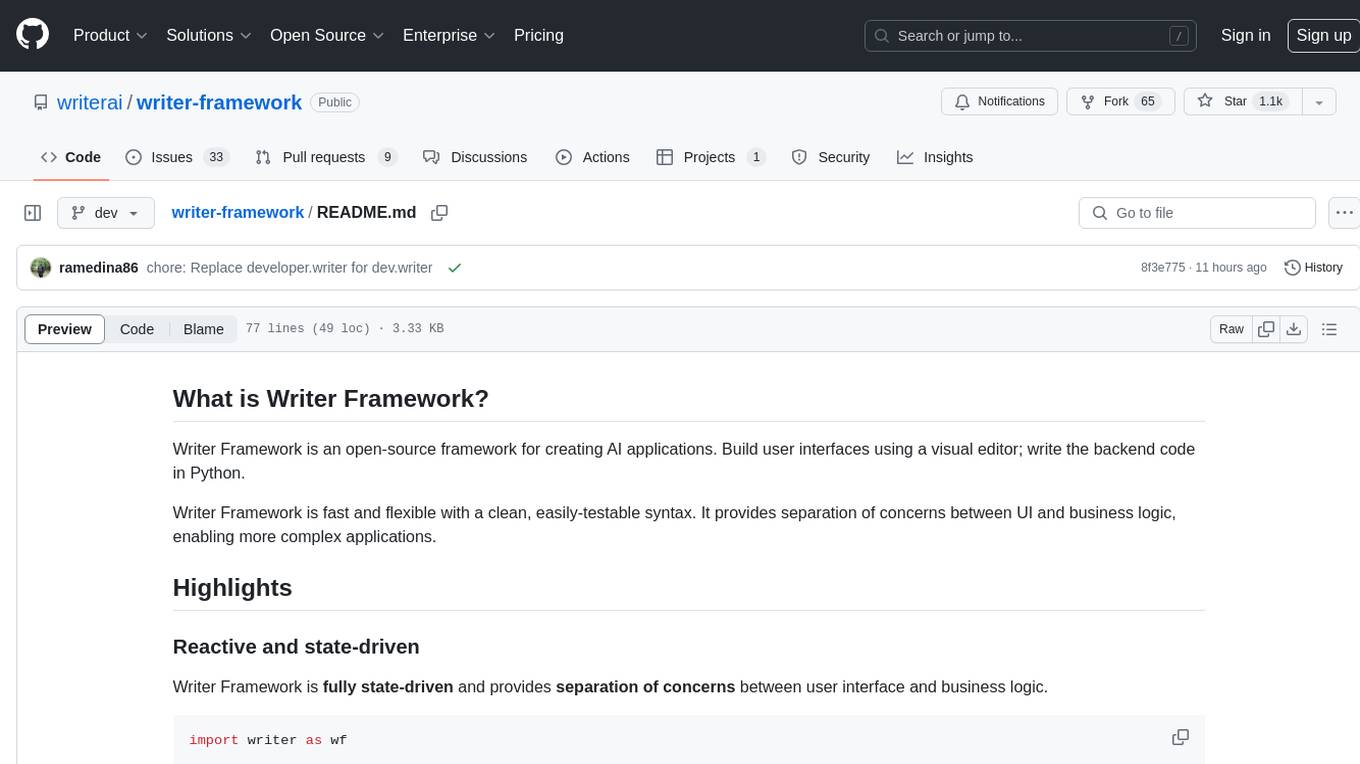

writer-framework

Writer Framework is an open-source framework for creating AI applications. It allows users to build user interfaces using a visual editor and write the backend code in Python. The framework is fast, flexible, and developer-friendly, providing separation of concerns between UI and business logic. It is reactive and state-driven, allowing for highly customizable elements without the need for CSS. Writer Framework is designed to be fast, with minimal overhead on Python code, and uses WebSockets for synchronization. It is contained in a standard Python package, supports local code editing with instant refreshes, and enables editing the UI while the app is running.

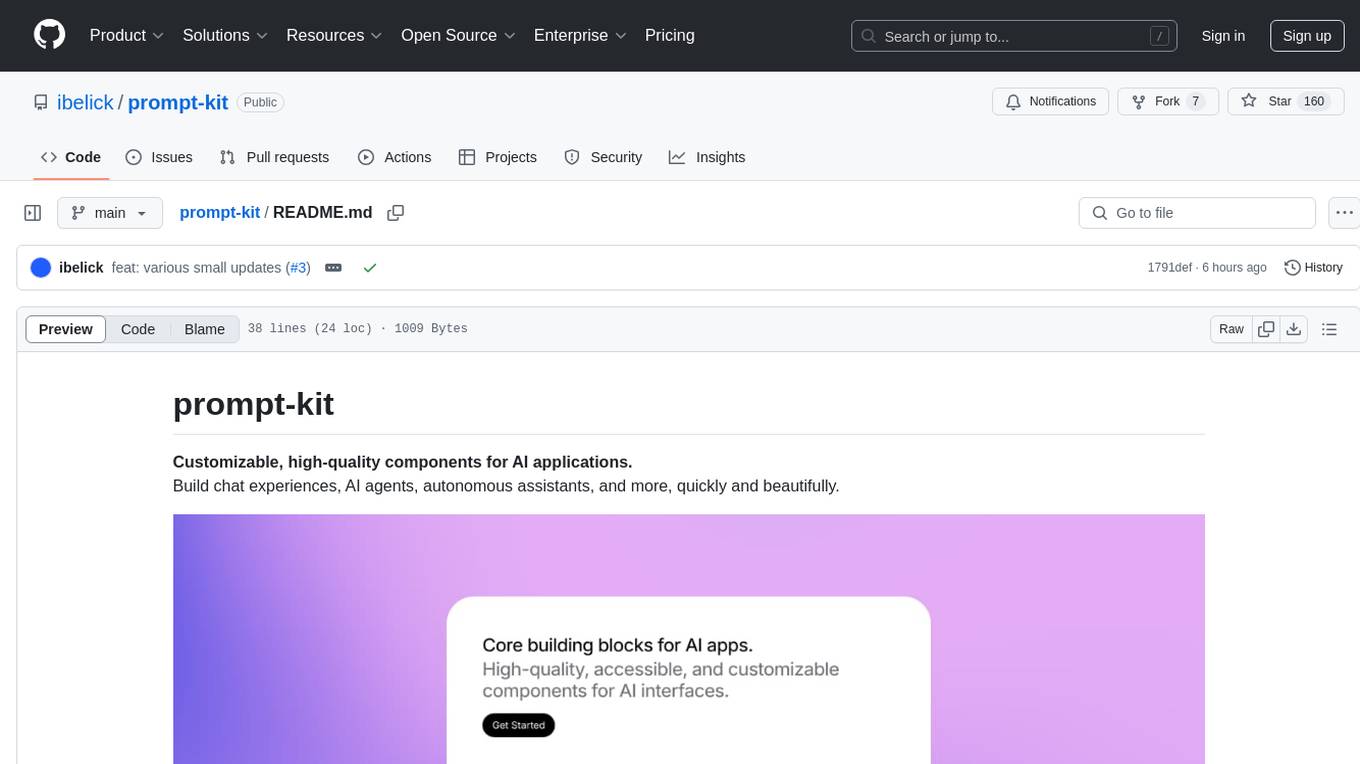

prompt-kit

Prompt-kit is a collection of customizable, high-quality components designed for building AI applications such as chat experiences, AI agents, and autonomous assistants. It offers a quick and beautiful way to create interactive interfaces for various AI-related projects. The components provided include PromptInput for customizable input, Message for displaying chat messages, Markdown for rendering rich content, and CodeBlock for displaying syntax-highlighted code blocks. With prompt-kit, developers can easily enhance their AI applications with visually appealing and functional UI elements.

writer-framework

Writer Framework is an open-source framework for creating AI applications. It allows users to build user interfaces using a visual editor and write the backend code in Python. The framework is fast, flexible, and provides separation of concerns between UI and business logic. It is reactive and state-driven, highly customizable without requiring CSS, fast in event handling, developer-friendly with easy installation and quick start options, and contains full documentation for using its AI module and deployment options.

bytechef

ByteChef is an open-source, low-code, extendable API integration and workflow automation platform. It provides an intuitive UI Workflow Editor, event-driven & scheduled workflows, multiple flow controls, built-in code editor supporting Java, JavaScript, Python, and Ruby, rich component ecosystem, extendable with custom connectors, AI-ready with built-in AI components, developer-ready to expose workflows as APIs, version control friendly, self-hosted, scalable, and resilient. It allows users to build and visualize workflows, automate tasks across SaaS apps, internal APIs, and databases, and handle millions of workflows with high availability and fault tolerance.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides unified API for generation, context-aware AI features, evaluation of AI workflow, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

Genkit

Genkit is an open-source framework for building full-stack AI-powered applications, used in production by Google's Firebase. It provides SDKs for JavaScript/TypeScript (Stable), Go (Beta), and Python (Alpha) with unified interface for integrating AI models from providers like Google, OpenAI, Anthropic, Ollama. Rapidly build chatbots, automations, and recommendation systems using streamlined APIs for multimodal content, structured outputs, tool calling, and agentic workflows. Genkit simplifies AI integration with open-source SDK, unified APIs, and offers text and image generation, structured data generation, tool calling, prompt templating, persisted chat interfaces, AI workflows, and AI-powered data retrieval (RAG).

awesome-ai-devtools

Awesome AI-Powered Developer Tools is a curated list of AI-powered developer tools that leverage AI to assist developers in tasks such as code completion, refactoring, debugging, documentation, and more. The repository includes a wide range of tools, from IDEs and Git clients to assistants, agents, app generators, UI generators, snippet generators, documentation tools, code generation tools, agent platforms, OpenAI plugins, search tools, and testing tools. These tools are designed to enhance developer productivity and streamline various development tasks by integrating AI capabilities.

poml

POML (Prompt Orchestration Markup Language) is a novel markup language designed to bring structure, maintainability, and versatility to advanced prompt engineering for Large Language Models (LLMs). It addresses common challenges in prompt development, such as lack of structure, complex data integration, format sensitivity, and inadequate tooling. POML provides a systematic way to organize prompt components, integrate diverse data types seamlessly, and manage presentation variations, empowering developers to create more sophisticated and reliable LLM applications.

prompty

Prompty is an asset class and format for LLM prompts designed to enhance observability, understandability, and portability for developers. The primary goal is to accelerate the developer inner loop. This repository contains the Prompty Language Specification and a documentation site. The Visual Studio Code extension offers a prompt playground to streamline the prompt engineering process.

dagger

Dagger is an open-source runtime for composable workflows, ideal for systems requiring repeatability, modularity, observability, and cross-platform support. It features a reproducible execution engine, a universal type system, a powerful data layer, native SDKs for multiple languages, an open ecosystem, an interactive command-line environment, batteries-included observability, and seamless integration with various platforms and frameworks. It also offers LLM augmentation for connecting to LLM endpoints. Dagger is suitable for AI agents and CI/CD workflows.

languine

Languine is a CLI tool powered by AI that helps developers streamline the localization process by providing AI-powered translations, automation features, consistent localization, developer-centric design, and time-saving workflows. It automates the identification of translation keys, supports multiple file formats, delivers accurate translations in over 100 languages, aligns translations with the original text's tone and intent, extracts translation keys from codebase, and supports hooks for content formatting with Biome or Prettier. Languine is designed to simplify and enhance the localization experience for developers.

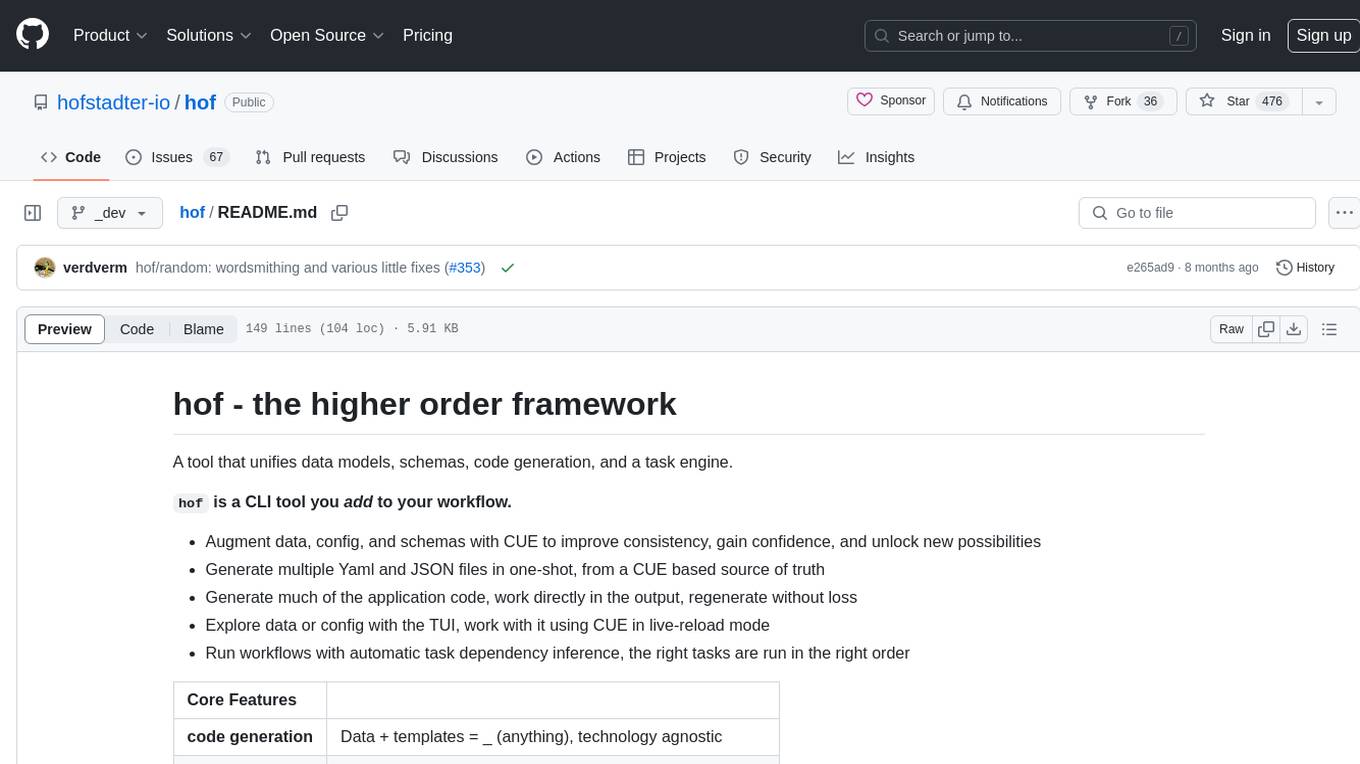

hof

Hof is a CLI tool that unifies data models, schemas, code generation, and a task engine. It allows users to augment data, config, and schemas with CUE to improve consistency, generate multiple Yaml and JSON files, explore data or config with a TUI, and run workflows with automatic task dependency inference. The tool uses CUE to power the DX and implementation, providing a language for specifying schemas, configuration, and writing declarative code. Hof offers core features like code generation, data model management, task engine, CUE cmds, creators, modules, TUI, and chat for better, scalable results.

bmf

BMF (Babit Multimedia Framework) is a cross-platform, multi-language, customizable multimedia processing framework developed by ByteDance. It offers native compatibility with Linux, Windows, and macOS, Python, Go, and C++ APIs, and high performance with strong GPU acceleration. BMF allows developers to enhance its features independently and provides efficient data conversion across popular frameworks and hardware devices. BMFLite is a client-side lightweight framework used in apps like Douyin/Xigua, serving over one billion users daily. BMF is widely used in video streaming, live transcoding, cloud editing, and mobile pre/post processing scenarios.

ai-chat-android

AI Chat Android demonstrates Google's Generative AI on Android with Firebase Realtime Database. It showcases Gemini API integration, Jetpack Compose UI elements, Android architecture components with Hilt, Kotlin Coroutines for background tasks, and Firebase Realtime Database integration for real-time events. The project follows Google's official architecture guidance with a modularized structure for reusability, parallel building, and decentralized focusing.

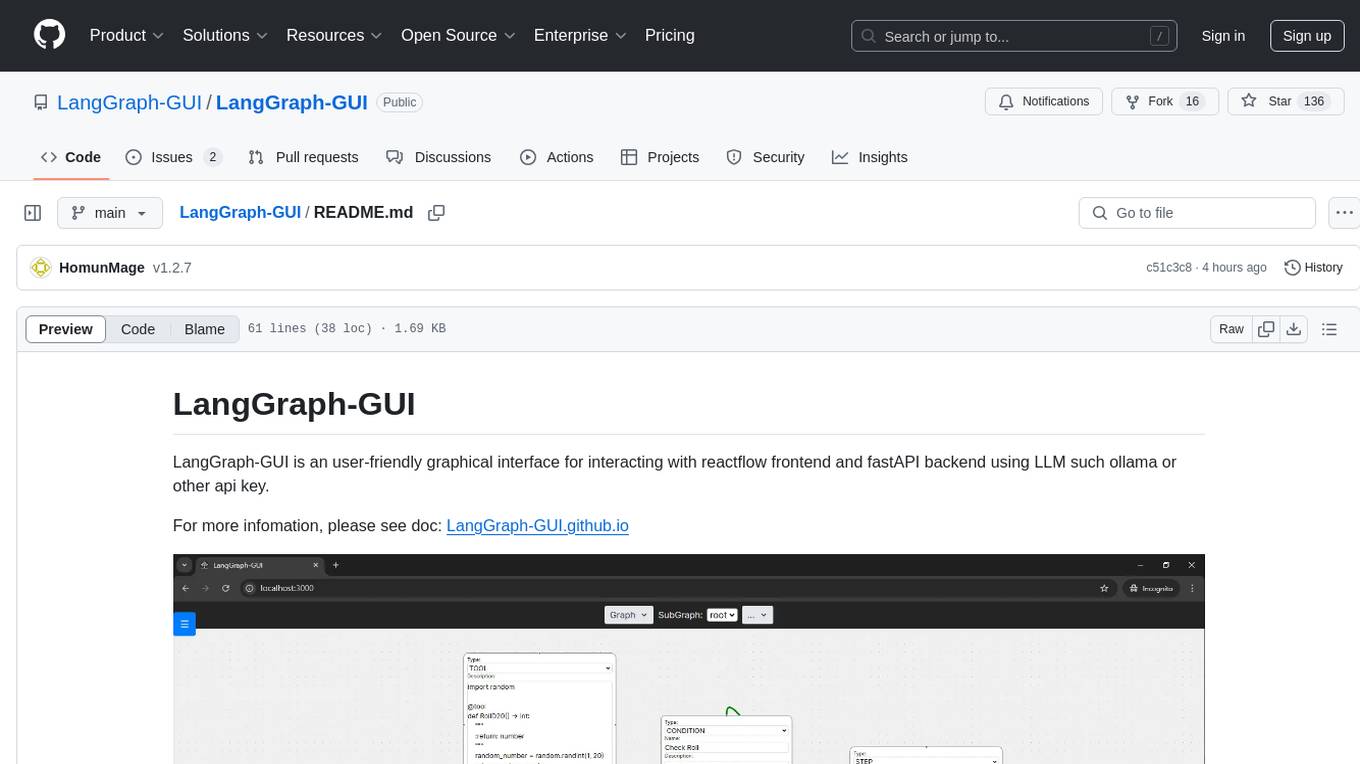

LangGraph-GUI

LangGraph-GUI is a user-friendly graphical interface for interacting with reactflow frontend and fastAPI backend using LLM such as ollama or other API key. It provides a convenient way to work with language models and APIs, offering a seamless experience for users to visualize and interact with the data flow. The tool simplifies the process of setting up the environment and accessing the application, making it easier for users to leverage the power of language models in their projects.

For similar tasks

llm-ui

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.

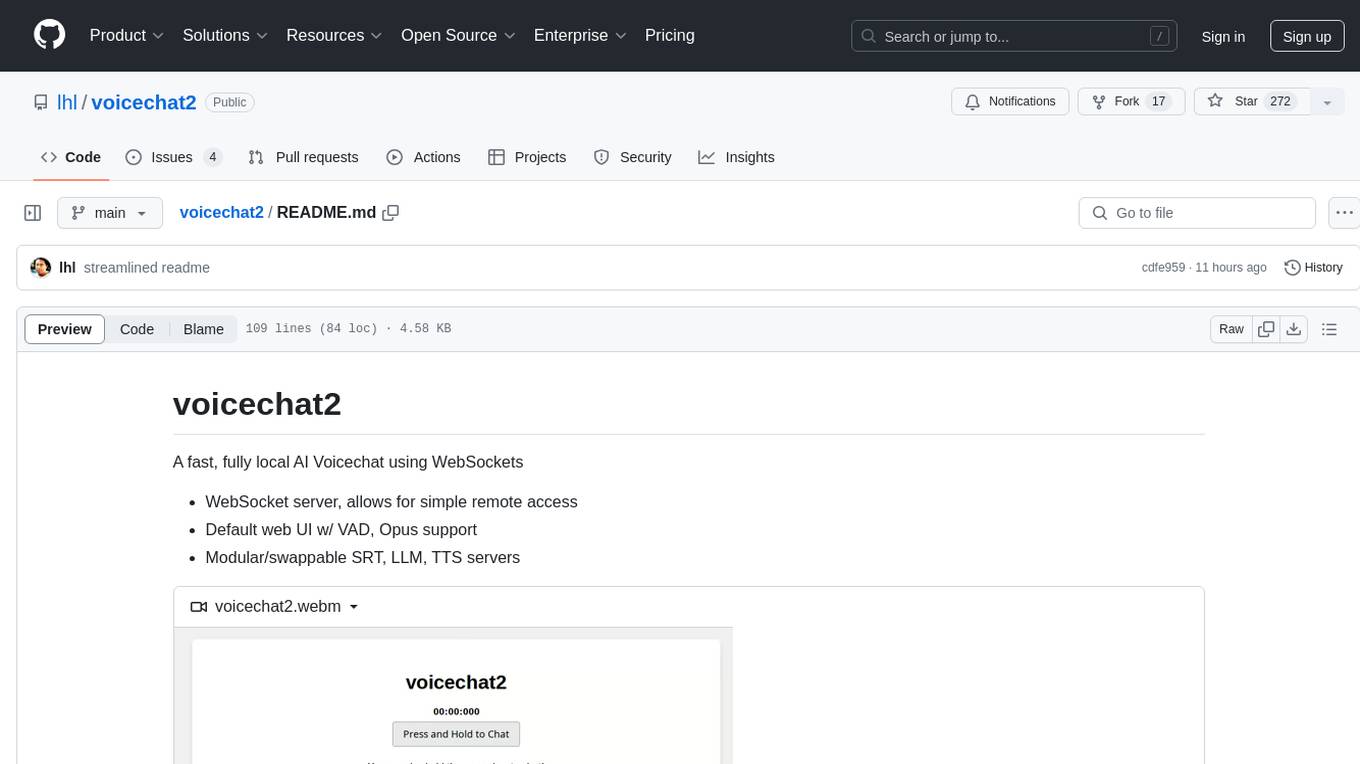

voicechat2

Voicechat2 is a fast, fully local AI voice chat tool that uses WebSockets for communication. It includes a WebSocket server for remote access, default web UI with VAD and Opus support, and modular/swappable SRT, LLM, TTS servers. Users can customize components like SRT, LLM, and TTS servers, and run different models for voice-to-voice communication. The tool aims to reduce latency in voice communication and provides flexibility in server configurations.

david-ai

David UI is a free and open-source collection of customizable, production-ready UI components built with Tailwind CSS. It is designed to be developer-friendly and performance-focused, streamlining the creation of modern, visually appealing interfaces to help deliver high-quality user experiences faster.

ai-cms-grapesjs

The Aimeos GrapesJS CMS extension provides a simple to use but powerful page editor for creating content pages based on extensible components. It integrates seamlessly with Laravel applications and allows users to easily manage and display CMS content. The tool also supports Google reCAPTCHA v3 for enhanced security. Users can create and customize pages with various components and manage multi-language setups effortlessly. The extension simplifies the process of creating and managing content pages, making it ideal for developers and businesses looking to enhance their website's content management capabilities.

continew-admin

Continew-admin is a responsive admin dashboard template built with Bootstrap 4. It provides a clean and intuitive user interface for managing and visualizing data in web applications. The template includes various components and widgets that can be easily customized to suit different project requirements. With Continew-admin, developers can quickly set up a professional-looking admin panel for their web applications.

tunix

Tunix is a JAX-based library designed for post-training Large Language Models. It provides efficient support for supervised fine-tuning, reinforcement learning, and knowledge distillation. Tunix leverages JAX for accelerated computation and integrates seamlessly with the Flax NNX modeling framework. The library is modular, efficient, and designed for distributed training on accelerators like TPUs. Currently in early development, Tunix aims to expand its capabilities, usability, and performance.

For similar jobs

Protofy

Protofy is a full-stack, batteries-included low-code enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + Many other amazing packages. Protofy can be used to fast prototype Apps, webs, IoT systems, automations, or APIs. It is a ultra-extensible CMS with supercharged capabilities, mobile support, and IoT support (esp32 thanks to esphome).

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

dev-conf-replay

This repository contains information about various IT seminars and developer conferences in South Korea, allowing users to watch replays of past events. It covers a wide range of topics such as AI, big data, cloud, infrastructure, devops, blockchain, mobility, games, security, mobile development, frontend, programming languages, open source, education, and community events. Users can explore upcoming and past events, view related YouTube channels, and access additional resources like free programming ebooks and data structures and algorithms tutorials.

OpenDevin

OpenDevin is an open-source project aiming to replicate Devin, an autonomous AI software engineer capable of executing complex engineering tasks and collaborating actively with users on software development projects. The project aspires to enhance and innovate upon Devin through the power of the open-source community. Users can contribute to the project by developing core functionalities, frontend interface, or sandboxing solutions, participating in research and evaluation of LLMs in software engineering, and providing feedback and testing on the OpenDevin toolset.

polyfire-js

Polyfire is an all-in-one managed backend for AI apps that allows users to build AI applications directly from the frontend, eliminating the need for a separate backend. It simplifies the process by providing most backend services in just a few lines of code. With Polyfire, users can easily create chatbots, transcribe audio files, generate simple text, manage long-term memory, and generate images. The tool also offers starter guides and tutorials to help users get started quickly and efficiently.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

aimeos-laravel

Aimeos Laravel is a professional, full-featured, and ultra-fast Laravel ecommerce package that can be easily integrated into existing Laravel applications. It offers a wide range of features including multi-vendor, multi-channel, and multi-warehouse support, fast performance, support for various product types, subscriptions with recurring payments, multiple payment gateways, full RTL support, flexible pricing options, admin backend, REST and GraphQL APIs, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. The package is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and online shops with millions of vendors.

llm-ui

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.