Native-LLM-for-Android

Demonstration of running a native LLM on Android device.

Stars: 226

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

README:

Demonstration of running a native Large Language Model (LLM) on Android devices. Currently supported models include:

- Qwen3: 0.6B, 1.7B, 4B...

- Qwen3-VL: 2B, 4B...

- Qwen2.5-Instruct: 0.5B, 1.5B, 3B...

- Qwen2.5-VL: 3B

- DeepSeek-R1-Distill-Qwen: 1.5B

- MiniCPM-DPO/SFT: 1B, 2.7B

- Gemma-3-it: 1B, 4B...

- Phi-4-mini-Instruct: 3.8B

- Llama-3.2-Instruct: 1B

- InternVL-Mono: 2B

- InternLM-3: 8B

- Seed-X: PRO-7B, Instruct-7B

- HunYuan: MT-1.5-1.8B/7B

Recent Updates:

- 2026/01/04: Update HunYuan-MT-1.5.

- 2025/11/11: Update Qwen3-VL.

- 2025/09/07: Update HunYuan-MT.

- 2025/08/02: Update Seed-X.

- 2025/04/29: Update Qwen3.

- 2025/04/05: Update Qwen2.5 and InternVL-Mono (q4f32 + dynamic_axes).

- 2025/02/22: Support loading in low-memory mode for Qwen, QwenVL, and MiniCPM_2B_single; set `low_memory_mode = true` in `MainActivity.java`.

- 2025/02/07: DeepSeek-R1-Distill-Qwen: 1.5B (please use the Qwen v2.5 `Qwen_Export.py`).

Download Models:

- Quick Try: Qwen3-1.7B-Android


Setup Instructions:

- Place the downloaded model files into the `assets` folder.

- Decompress the `*.so` files stored in the `libs/arm64-v8a` folder.

Model Notes:

- Demo models are converted from HuggingFace or ModelScope and optimized for extreme execution speed.

- Inputs and outputs may differ slightly from the original models.

- For Qwen2VL / Qwen2.5VL, adjust the key variables to match the model parameters:
  - `GLRender.java`: lines 37, 38, 39
  - `project.h`: lines 14, 15, 16, 35, 36, 41, 59, 60

ONNX Export Considerations:

- It is recommended to use dynamic axes and q4f32 quantization.

- The `tokenizer.cpp` and `tokenizer.hpp` files are sourced from the mnn-llm repository.

- Navigate to the `Export_ONNX` folder.

- Follow the comments in the Python scripts to set the folder paths.

- Execute the `***_Export.py` script to export the model.

- Quantize or optimize the ONNX model manually.

- Use `onnxruntime.tools.convert_onnx_models_to_ort` to convert models to the `*.ort` format. Note that this process automatically adds `Cast` operators that change FP16 multiplication to FP32.

- The quantization methods are detailed in the `Do_Quantize` folder.

- Explore more projects: DakeQQ Projects
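The `*.ort` conversion step in the export notes can be driven from Python. The sketch below only assembles the `python -m onnxruntime.tools.convert_onnx_models_to_ort <model_path_or_dir>` invocation and runs it on request. It passes no converter flags beyond the model path, and the `Export_ONNX/output` folder is a hypothetical placeholder for wherever your `***_Export.py` run writes its models.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_ort_convert_cmd(model_path_or_dir: str) -> List[str]:
    """Command that converts one .onnx file (or the .onnx files in a directory) to .ort."""
    return [sys.executable, "-m", "onnxruntime.tools.convert_onnx_models_to_ort", model_path_or_dir]


def convert_to_ort(model_path_or_dir: str, dry_run: bool = True) -> List[str]:
    """Print the conversion command (dry run) or execute it; returns the command either way."""
    cmd = build_ort_convert_cmd(model_path_or_dir)
    if dry_run:
        # Only show the command; useful on machines without onnxruntime installed.
        print(" ".join(cmd))
    else:
        # Needs the onnxruntime package in this interpreter; raises CalledProcessError on failure.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Hypothetical output folder of an export run; use the paths set in the script comments.
    convert_to_ort(str(Path("Export_ONNX") / "output"), dry_run=True)
```

Running it with `dry_run=False` requires `onnxruntime` in the same Python environment. As noted in the export list, the converted model may carry extra `Cast` operators, so FP16 multiplications can end up running in FP32.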

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 13 | Nubia Z50 | 8_Gen2-CPU | Qwen-2-1.5B-Instruct q8f32 | 20 token/s |
| Android 15 | Vivo X200 Pro | MediaTek_9400-CPU | Qwen-3-1.7B-Instruct q4f32 dynamic | 37 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Qwen-3-1.7B-Instruct q4f32 dynamic | 18.5 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Qwen-2.5-1.5B-Instruct q4f32 dynamic | 20.5 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Qwen-2-1.5B-Instruct q8f32 | 13 token/s |
| Harmony 3 | Honor 20S | Kirin_810-CPU | Qwen-2-1.5B-Instruct q8f32 | 7 token/s |

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 13 | Nubia Z50 | 8_Gen2-CPU | QwenVL-2-2B q8f32 | 15 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | QwenVL-2-2B q8f32 | 9 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | QwenVL-2.5-3B q4f32 dynamic | 9 token/s |


| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 13 | Nubia Z50 | 8_Gen2-CPU | Distill-Qwen-1.5B q4f32 dynamic | 34.5 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Distill-Qwen-1.5B q4f32 dynamic | 20.5 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Distill-Qwen-1.5B q8f32 | 13 token/s |
| HyperOS 2 | Xiaomi-14T-Pro | MediaTek_9300+-CPU | Distill-Qwen-1.5B q8f32 | 22 token/s |
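The rates in these tables translate directly into per-token latency (1000 / rate, in milliseconds) and relative speedup. The helper below works through the Kirin_990_5G rows for Distill-Qwen-1.5B, where the q4f32 dynamic build reaches 20.5 token/s against 13 token/s for q8f32. It assumes the listed figures are average decode speeds; the 256-token reply length is an arbitrary example.

```python
def ms_per_token(tokens_per_s: float) -> float:
    """Average latency of one decoding step, in milliseconds."""
    return 1000.0 / tokens_per_s


def speedup(new_tokens_per_s: float, baseline_tokens_per_s: float) -> float:
    """How many times faster the new configuration decodes than the baseline."""
    return new_tokens_per_s / baseline_tokens_per_s


def seconds_for_reply(num_tokens: int, tokens_per_s: float) -> float:
    """Decode-only time for a reply of num_tokens; ignores prompt prefill."""
    return num_tokens / tokens_per_s


if __name__ == "__main__":
    q4f32_dynamic, q8f32 = 20.5, 13.0  # P40 (Kirin_990_5G-CPU), Distill-Qwen-1.5B
    print(f"q4f32 dynamic: {ms_per_token(q4f32_dynamic):.1f} ms/token")  # 48.8
    print(f"q8f32: {ms_per_token(q8f32):.1f} ms/token")  # 76.9
    print(f"speedup: {speedup(q4f32_dynamic, q8f32):.2f}x")  # 1.58
    print(f"256-token reply: {seconds_for_reply(256, q4f32_dynamic):.1f} s "
          f"vs {seconds_for_reply(256, q8f32):.1f} s")  # 12.5 s vs 19.7 s
```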

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 15 | Nubia Z50 | 8_Gen2-CPU | MiniCPM4-0.5B q4f32 | 78 token/s |
| Android 13 | Nubia Z50 | 8_Gen2-CPU | MiniCPM-2.7B q8f32 | 9.5 token/s |
| Android 13 | Nubia Z50 | 8_Gen2-CPU | MiniCPM-1.3B q8f32 | 16.5 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | MiniCPM-2.7B q8f32 | 6 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | MiniCPM-1.3B q8f32 | 11 token/s |

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 13 | Nubia Z50 | 8_Gen2-CPU | Gemma-1.1-it-2B q8f32 | 16 token/s |

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 13 | Nubia Z50 | 8_Gen2-CPU | Phi-2-2B-Orange-V2 q8f32 | 9.5 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Phi-2-2B-Orange-V2 q8f32 | 5.8 token/s |

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Android 13 | Nubia Z50 | 8_Gen2-CPU | Llama-3.2-1B-Instruct q8f32 | 25 token/s |
| Harmony 4 | P40 | Kirin_990_5G-CPU | Llama-3.2-1B-Instruct q8f32 | 16 token/s |

| OS | Device | Backend | Model | Inference (1024 Context) |
|---|---|---|---|---|
| Harmony 4 | P40 | Kirin_990_5G-CPU | Mono-2B-S1-3 q4f32 dynamic | 10.5 token/s |
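The export notes recommend q4f32 quantization, and several of the faster rows above use q4f32 builds. Reading "q4f32" as 4-bit integer weights with fp32 scales and fp32 arithmetic (an interpretation of the naming, not something this README spells out), the pure-Python sketch below shows the basic idea: each block of weights shares one fp32 scale, and each weight is stored as an integer in [-8, 7]. The block size and symmetric range are illustrative assumptions; this is not the method in the `Do_Quantize` folder.

```python
from typing import List, Tuple


def quantize_q4_blockwise(weights: List[float], block_size: int = 32) -> Tuple[List[int], List[float]]:
    """Symmetric 4-bit block quantization: one fp32 scale per block, ints in [-8, 7]."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    q: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        max_abs = max(abs(w) for w in block)
        # Map the largest magnitude in the block onto 7; an all-zero block gets scale 1.0.
        scale = max_abs / 7.0 if max_abs > 0 else 1.0
        scales.append(scale)
        for w in block:
            # Round to the nearest integer and clamp into the signed 4-bit range.
            q.append(max(-8, min(7, round(w / scale))))
    return q, scales


def dequantize_q4_blockwise(q: List[int], scales: List[float], block_size: int = 32) -> List[float]:
    """Recover fp32 approximations by multiplying each int by its block's scale."""
    return [v * scales[i // block_size] for i, v in enumerate(q)]


if __name__ == "__main__":
    w = [0.12, -0.50, 0.33, 0.07, -0.21, 0.45, -0.02, 0.28]
    q, s = quantize_q4_blockwise(w, block_size=4)
    w_hat = dequantize_q4_blockwise(q, s, block_size=4)
    print("ints:", q)
    print("scales:", s)
    print("max abs error:", max(abs(a - b) for a, b in zip(w, w_hat)))
```

Because rounding moves each value by at most half a step, the reconstruction error per weight is bounded by half of its block's scale; smaller blocks tighten that bound at the cost of storing more scales.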



For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Native-LLM-for-Android

Similar Open Source Tools

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

speechless

Speechless.AI is committed to integrating the superior language processing and deep reasoning capabilities of large language models into practical business applications. By enhancing the model's language understanding, knowledge accumulation, and text creation abilities, and introducing long-term memory, external tool integration, and local deployment, our aim is to establish an intelligent collaborative partner that can independently interact, continuously evolve, and closely align with various business scenarios.

Element-Plus-X

Element-Plus-X is an out-of-the-box enterprise-level AI component library based on Vue 3 + Element-Plus. It features built-in scenario components such as chatbots and voice interactions, seamless integration with zero configuration based on Element-Plus design system, and support for on-demand loading with Tree Shaking optimization.

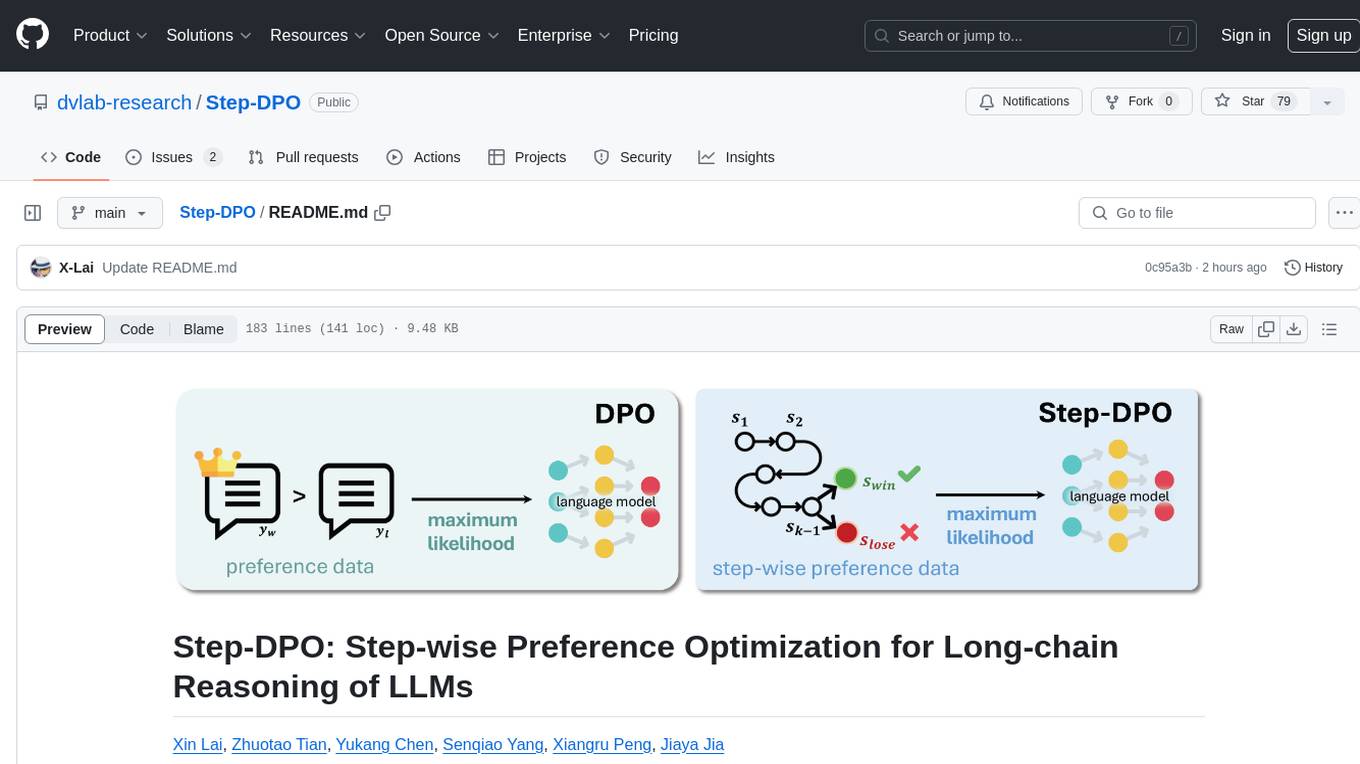

Step-DPO

Step-DPO is a method for enhancing long-chain reasoning ability of LLMs with a data construction pipeline creating a high-quality dataset. It significantly improves performance on math and GSM8K tasks with minimal data and training steps. The tool fine-tunes pre-trained models like Qwen2-7B-Instruct with Step-DPO, achieving superior results compared to other models. It provides scripts for training, evaluation, and deployment, along with examples and acknowledgements.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

runanywhere-sdks

RunAnywhere is an on-device AI tool for mobile apps that allows users to run LLMs, speech-to-text, text-to-speech, and voice assistant features locally, ensuring privacy, offline functionality, and fast performance. The tool provides a range of AI capabilities without relying on cloud services, reducing latency and ensuring that no data leaves the device. RunAnywhere offers SDKs for Swift (iOS/macOS), Kotlin (Android), React Native, and Flutter, making it easy for developers to integrate AI features into their mobile applications. The tool supports various models for LLM, speech-to-text, and text-to-speech, with detailed documentation and installation instructions available for each platform.

prisma-ai

Prisma-AI is an open-source tool designed to assist users in their job search process by addressing common challenges such as lack of project highlights, mismatched resumes, difficulty in learning, and lack of answers in interview experiences. The tool utilizes AI to analyze user experiences, generate actionable project highlights, customize resumes for specific job positions, provide study materials for efficient learning, and offer structured interview answers. It also features a user-friendly interface for easy deployment and supports continuous improvement through user feedback and collaboration.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

FaceAISDK_Android

FaceAI SDK is an on-device offline face detection, recognition, liveness detection, anti-spoofing, and 1:N/M:N face search SDK. It enables quick integration to achieve on-device face recognition, face search, and other functions. The SDK performs all functions offline on the device without the need for internet connection, ensuring privacy and security. It supports various actions for liveness detection, custom camera management, and clear imaging even in challenging lighting conditions.

tiny-llm-zh

Tiny LLM zh is a project aimed at building a small-parameter Chinese language large model for quick entry into learning large model-related knowledge. The project implements a two-stage training process for large models and subsequent human alignment, including tokenization, pre-training, instruction fine-tuning, human alignment, evaluation, and deployment. It is deployed on ModeScope Tiny LLM website and features open access to all data and code, including pre-training data and tokenizer. The project trains a tokenizer using 10GB of Chinese encyclopedia text to build a Tiny LLM vocabulary. It supports training with Transformers deepspeed, multiple machine and card support, and Zero optimization techniques. The project has three main branches: llama2_torch, main tiny_llm, and tiny_llm_moe, each with specific modifications and features.

AI0x0.com

AI 0x0 is a versatile AI query generation desktop floating assistant application that supports MacOS and Windows. It allows users to utilize AI capabilities in any desktop software to query and generate text, images, audio, and video data, helping them work more efficiently. The application features a dynamic desktop floating ball, floating dialogue bubbles, customizable presets, conversation bookmarking, preset packages, network acceleration, query mode, input mode, mouse navigation, deep customization of ChatGPT Next Web, support for full-format libraries, online search, voice broadcasting, voice recognition, voice assistant, application plugins, multi-model support, online text and image generation, image recognition, frosted glass interface, light and dark theme adaptation for each language model, and free access to all language models except Chat0x0 with a key.

WeClone

WeClone is a tool that fine-tunes large language models using WeChat chat records. It utilizes approximately 20,000 integrated and effective data points, resulting in somewhat satisfactory outcomes that are occasionally humorous. The tool's effectiveness largely depends on the quantity and quality of the chat data provided. It requires a minimum of 16GB of GPU memory for training using the default chatglm3-6b model with LoRA method. Users can also opt for other models and methods supported by LLAMA Factory, which consume less memory. The tool has specific hardware and software requirements, including Python, Torch, Transformers, Datasets, Accelerate, and other optional packages like CUDA and Deepspeed. The tool facilitates environment setup, data preparation, data preprocessing, model downloading, parameter configuration, model fine-tuning, and inference through a browser demo or API service. Additionally, it offers the ability to deploy a WeChat chatbot, although users should be cautious due to the risk of account suspension by WeChat.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

SakuraLLM

SakuraLLM is a project focused on building large language models for Japanese to Chinese translation in the light novel and galgame domain. The models are based on open-source large models and are pre-trained and fine-tuned on general Japanese corpora and specific domains. The project aims to provide high-performance language models for galgame/light novel translation that are comparable to GPT3.5 and can be used offline. It also offers an API backend for running the models, compatible with the OpenAI API format. The project is experimental, with version 0.9 showing improvements in style, fluency, and accuracy over GPT-3.5.

For similar tasks

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

Firefly

Firefly is an open-source large model training project that supports pre-training, fine-tuning, and DPO of mainstream large models. It includes models like Llama3, Gemma, Qwen1.5, MiniCPM, Llama, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. The project supports full-parameter training, LoRA, QLoRA efficient training, and various tasks such as pre-training, SFT, and DPO. Suitable for users with limited training resources, QLoRA is recommended for fine-tuning instructions. The project has achieved good results on the Open LLM Leaderboard with QLoRA training process validation. The latest version has significant updates and adaptations for different chat model templates.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

AI-TOD

AI-TOD is a dataset for tiny object detection in aerial images, containing 700,621 object instances across 28,036 images. Objects in AI-TOD are smaller with a mean size of 12.8 pixels compared to other aerial image datasets. To use AI-TOD, download xView training set and AI-TOD_wo_xview, then generate the complete dataset using the provided synthesis tool. The dataset is publicly available for academic and research purposes under CC BY-NC-SA 4.0 license.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is a MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling Unified Multimodal LLMs with a Mixture of Experts framework. It offers enhanced functionality for training across multiple nodes and GPUs, as well as parallel processing at both the expert and modality levels. The model architecture involves three training stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The tool provides instructions for installation, weights organization, inference, training, and evaluation on various datasets.

For similar jobs

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

aiolauncher_scripts

AIO Launcher Scripts is a collection of Lua scripts that can be used with AIO Launcher to enhance its functionality. These scripts can be used to create widget scripts, search scripts, and side menu scripts. They provide various functions such as displaying text, buttons, progress bars, charts, and interacting with app widgets. The scripts can be used to customize the appearance and behavior of the launcher, add new features, and interact with external services.

gemini-android

Gemini Android is a repository showcasing Google's Generative AI on Android using Stream Chat SDK for Compose. It demonstrates the Gemini API for Android, implements UI elements with Jetpack Compose, utilizes Android architecture components like Hilt and AppStartup, performs background tasks with Kotlin Coroutines, and integrates chat systems with Stream Chat Compose SDK for real-time event handling. The project also provides technical content, instructions on building the project, tech stack details, architecture overview, modularization strategies, and a contribution guideline. It follows Google's official architecture guidance and offers a real-world example of app architecture implementation.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

react-native-airship

React Native Airship is a module designed to integrate Airship's iOS and Android SDKs into React Native applications. It provides developers with the necessary tools to incorporate Airship's push notification services seamlessly. The module offers a simple and efficient way to leverage Airship's features within React Native projects, enhancing user engagement and retention through targeted notifications.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.