Muice-Chatbot

沐雪,一个会自动找你聊天的AI女孩子

Stars: 314

Muice-Chatbot is an AI chatbot designed to proactively engage in conversations with users. It is based on the ChatGLM2-6B and Qwen-7B models, with a training dataset of 1.8K+ dialogues. The chatbot has a speaking style similar to a 2D girl, being somewhat tsundere but willing to share daily life details and greet users differently every day. It provides various functionalities, including initiating chats and offering 5 available commands. The project supports model loading through different methods and provides onebot service support for QQ users. Users can interact with the chatbot by running the main.py file in the project directory.

README:

[!IMPORTANT]

2025.01.02 更新:本项目依赖于 LiteLoaderQQNT 框架。自 2024.11.23 起,陆续有用户反馈自己使用该框架而被封号的事件(#1032)。需要声明的一点是,本 Repo 与此次封号事件无直接关联,但继续使用此 Repo 有着被封号的风险,继续使用则代表您承认此后所遭遇到的账号问题与本 Repo 无关。但现在您可以使用 Telegram Bot 运行我们的服务

2024.12.04 更新:由于配置文件格式变更,如果先前你拉取过本 Repo 并在12.04后执行过 fetch 操作,请您重新设置配置文件,由此带来的不便我们深表歉意

沐雪,一只会主动找你聊天的 AI 女孩子,其对话模型基于 ChatGLM2-6B 与 Qwen 微调而成,训练集体量 3k+ ,具有二次元女孩子的说话风格,比较傲娇,但乐于和你分享生活的琐碎,每天会给你不一样的问候。

✅ 支持近乎全自动安装环境

✅ 提供本人由 3k+ 对话数据微调的 ChatGLM2-6B P-Tuning V2 模型与 Qwen Lora 微调模型

✅ 主动发起聊天(随机和每天早中晚固定时间)

✅ 提供 5 条命令以便在聊天中进行刷新回复等操作

✅ OFA 图像识别:识别表情包、理解表情包、发送表情包

✅ 支持通过 fishaudio/fish-speech 进行语言合成(沐雪 TTS 模型尚未发布)

✅ 在群聊中聊天(支持被 @ 回复或不被 @ 随机回复)

✅ 在控制台中实时语音对话(暂不支持打 QQ 语音)

✅ 多语言文档

✅ 常见 Q&A 指南

✅ 清晰的日志管理输出

✅ Faiss 记忆模块,从过去的对话数据中进行检索并自动加入上下文

建议环境:

- Python 3.10+

- 一张拥有 6GB 及以上显存的显卡(int4 量化最低要求为 4G 显存,CPU 推理需要 16G 及以上内存)

目前已做到自动安装所有软件、依赖,通过 Code -> Download ZIP 下载解压最新源码。

双击 install_env.bat 安装(不能启用旧版控制台),或在命令行中运行以下命令:

.\install_env.bat自动安装可能需要较长时间,请耐心等待,安装完成后,你仍需手动下载模型。

自动安装脚本使用的是 Python 虚拟环境,不需要 Conda,请留意安装脚本的提示。

git clone https://github.com/Moemu/Muice-Chatbot

cd Muice-Chatbot

conda create --name Muice python=3.10.10 -y

conda activate Muice

pip install -r requirements.txt -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple对于 GPU 用户,请额外执行

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124对于 GPU 用户,请确保您已配置好 cuda 环境。参考链接

目前支持的基底模型如下表:

| 基底模型 | 对应微调模型版本号 | 对应 loader | 额外依赖库 |

|---|---|---|---|

| ChatGLM2-6B-Int4 | 2.2-2.4 | transformers | cpm_kernels |

| ChatGLM2-6B | 2.0-2.3 | transformers | |

| Qwen-7B-Chat-Int4 | 2.3、2.6.2 | llmtuner | |

| Qwen2-1.5B-Instruct-GPTQ-Int4 | 2.5.3 | llmtuner | |

| Qwen2.5-7B-Instruct-GPTQ-Int4 | 2.7.1 | llmtuner | |

| RWKV(Seikaijyu微调) | 参见 HF | rwkv-api | (需要下载配置 RWKV-Runner) |

本项目的requirements.txt基于 llmtuner 环境要求搭建,因此我们建议使用 Qwen 系列模型,若选用 ChatGLM 系列模型,可能会导致环境错误。

微调模型下载:Releases

建议将基底模型与微调模型放入 model 文件夹中然后在配置文件中配置相应配置项(确保配置文件中的路径目录下存在多个模型文件而不是只有一个文件夹,部分微调模型由于疏忽还套了一层 checkpoint-xxx 文件夹)

本仓库目前支持如下模型加载方式:

- 通过 API 加载

- 通过

transformers的AutoTokenizer,AutoModel函数加载 - 通过

llmtuner.chat(LLaMA-Factory)的ChatModel类加载 - 通过

RWKV-Runner提供的 API 服务加载

在配置文件中可调整模型的加载方式:

model:

loader: llmtuner # 模型加载器 transformers/llmtuner/rwkv-api

model_path: model/Qwen2.5-7B-Instruct-GPTQ-Int4 # 基底模型路径

adapter_path: model/Muice-2.7.1-Qwen2.5-7B-Instruct-GPTQ-Int4-8e-4 # 微调模型路径(若是 API / rwkv-api 加载,model_name_or_path 填写对应的 API 地址)

如果你没有合适的显卡,需要通过 CPU 加载模型或者需要加载量化模型,请安装并配置 GCC 环境,然后勾选 openmp。参考链接

现以提供 OneBot 服务支持, 无需担心 gocq 的风控(喜)

本项目使用 OneBot V11 协议, 若您希望于 QQ 使用, 推荐参考 LLOneBot 使用 OneBot 服务

注:请在安装好 LLOneBot 后, 于设置中开启反向 WebSocket 服务, 填写 ws://127.0.0.1:21050/ws/api, 以正常运行

您也可以使用 Lagrange.Core 以及 OpenShamrock 等来链接QQ, 或其他适配器链接其他软件,详见 OneBot V11 适配器

能使用请勿随意更新 QQNT, 若无法使用请尝试降级 QQNT

在 Telegram Bot 中使用的方法:迁移至 Telegram Bot

配置文件机器说明位于 configs.yml,请根据你的需求进行修改

2024.12.04更新:我们更新了配置文件格式,为了迎合即将到来的 2.7.x 模型,我们添加了如下配置项:

# 主动对话相关

active:

enable: false # 是否启用主动对话

rate: 0.003 # 主动对话概率(每分钟)

active_prompts:

- '<生成推文: 胡思乱想>'

- '<生成推文: AI生活>'

- '<生成推文: AI思考>'

- '<生成推文: 表达爱意>'

- '<生成推文: 情感建议>'

not_disturb: true # 是否开启免打扰模式

shecdule:

enable: true # 是否启用定时任务

rate: 0.75 # 定时任务概率(每次)

tasks:

- hour: 8

prompt: '<日常问候: 早上>'

- hour: 12

prompt: '<日常问候: 中午>'

- hour: 18

prompt: '<日常问候: 傍晚>'

- hour: 22

prompt: '<日常问候: 深夜>'

targets: # 主动对话目标QQ号

- 12345678

- 23456789如果你使用的是 2.7.x 之前的模型,请更改如下配置项:

active_prompts:

- '(分享一下你的一些想法)'

- '(创造一个新话题)'以及:

tasks:

- hour: 8

prompt: '(发起一个早晨问候)'

- hour: 12

prompt: '(发起一个中午问候)'

- hour: 18

prompt: '(发起一个傍晚问候)'

- hour: 22

prompt: '(发起一个临睡问候)'在本项目根目录下运行 main.py

conda activate Muice

python main.py或是运行自动安装脚本生成的启动脚本start.bat

| 命令 | 释义 |

|---|---|

| /clean | 清空本轮对话历史 |

| /refresh | 刷新本次对话 |

| /help | 显示所有可用的命令列表 |

| /reset | 重置所有对话数据(将存档对话数据) |

| /undo | 撤销上一次对话 |

参见公开的训练集 Moemu/Muice-Dataset

与其他聊天机器人项目不同,本项目提供由本人通过自家对话数据集微调后的模型,在 Release 中提供下载,关于微调后的模型人设,目前公开的信息如下:

训练集开源地址: Moemu/Muice-Dataset

原始模型:THUDM/ChatGLM2-6B & QwenLM/Qwen)

本项目源码使用 MIT License,对于微调后的模型文件,不建议将其作为商业用途

代码实现:Moemu、MoeSnowyFox、NaivG、zkhssb

训练集编写与模型微调:Moemu (RWKV 微调:Seikaijyu)

友情连接:Coral 框架

总代码贡献:

如果此项目对你有帮助,您可以考虑赞助。

感谢你们所有人的支持!

本项目隶属于 Muice-Project。

Star History:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Muice-Chatbot

Similar Open Source Tools

Muice-Chatbot

Muice-Chatbot is an AI chatbot designed to proactively engage in conversations with users. It is based on the ChatGLM2-6B and Qwen-7B models, with a training dataset of 1.8K+ dialogues. The chatbot has a speaking style similar to a 2D girl, being somewhat tsundere but willing to share daily life details and greet users differently every day. It provides various functionalities, including initiating chats and offering 5 available commands. The project supports model loading through different methods and provides onebot service support for QQ users. Users can interact with the chatbot by running the main.py file in the project directory.

Langchain-Chatchat

LangChain-Chatchat is an open-source, offline-deployable retrieval-enhanced generation (RAG) large model knowledge base project based on large language models such as ChatGLM and application frameworks such as Langchain. It aims to establish a knowledge base Q&A solution that is friendly to Chinese scenarios, supports open-source models, and can run offline.

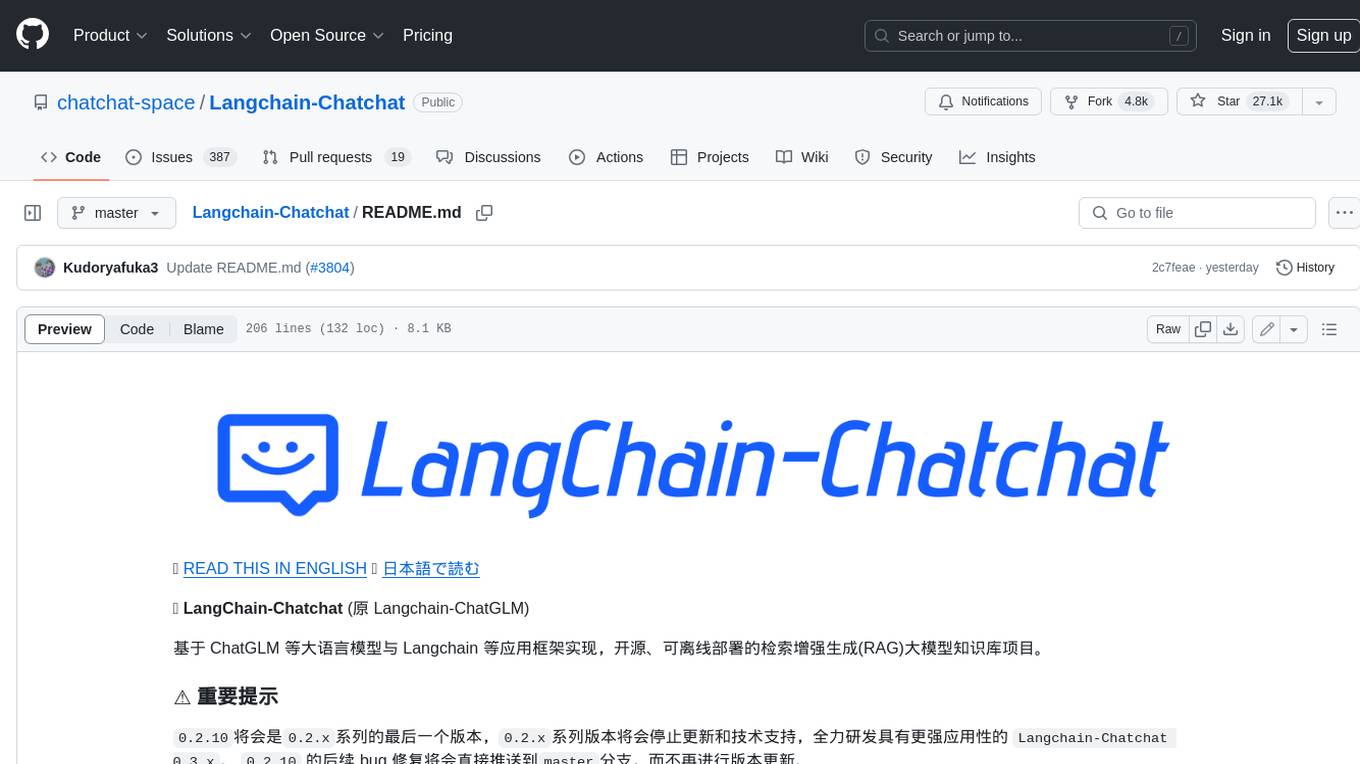

ChatTTS-Forge

ChatTTS-Forge is a powerful text-to-speech generation tool that supports generating rich audio long texts using a SSML-like syntax and provides comprehensive API services, suitable for various scenarios. It offers features such as batch generation, support for generating super long texts, style prompt injection, full API services, user-friendly debugging GUI, OpenAI-style API, Google-style API, support for SSML-like syntax, speaker management, style management, independent refine API, text normalization optimized for ChatTTS, and automatic detection and processing of markdown format text. The tool can be experienced and deployed online through HuggingFace Spaces, launched with one click on Colab, deployed using containers, or locally deployed after cloning the project, preparing models, and installing necessary dependencies.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI LLM service, combining GRPS and TensorRT-LLM. It supports functionalities like Chat, Ai-agent, and Multi-modal. The repository offers advantages over triton-trtllm, including a complete LLM service implemented in pure C++, integrated tokenizer supporting huggingface and sentencepiece, custom HTTP functionality for OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with tensorrt backend and opencv library for multi-modal LLM, and stable performance improvement compared to triton-trtllm.

build_MiniLLM_from_scratch

This repository aims to build a low-parameter LLM model through pretraining, fine-tuning, model rewarding, and reinforcement learning stages to create a chat model capable of simple conversation tasks. It features using the bert4torch training framework, seamless integration with transformers package for inference, optimized file reading during training to reduce memory usage, providing complete training logs for reproducibility, and the ability to customize robot attributes. The chat model supports multi-turn conversations. The trained model currently only supports basic chat functionality due to limitations in corpus size, model scale, SFT corpus size, and quality.

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers various functionalities such as FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, web demo setup with gradio, Triton API deployment for maximum throughput/concurrency, fastapi integration for openai requests, CLI interaction, and langchain support. It supports models like qwen2, qwen, and qwen-vl for both base and chat models. The project also provides tutorials on Bilibili and blogs for adapting Qwen models in NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

moonpalace

MoonPalace is a debugging tool for API provided by Moonshot AI. It supports all platforms (Mac, Windows, Linux) and is simple to use by replacing 'base_url' with 'http://localhost:9988'. It captures complete requests, including 'accident scenes' during network errors, and allows quick retrieval and viewing of request information using 'request_id' and 'chatcmpl_id'. It also enables one-click export of BadCase structured reporting data to help improve Kimi model capabilities. MoonPalace is recommended for use as an API 'supplier' during code writing and debugging stages to quickly identify and locate various issues related to API calls and code writing processes, and to export request details for submission to Moonshot AI to improve Kimi model.

md

The WeChat Markdown editor automatically renders Markdown documents as WeChat articles, eliminating the need to worry about WeChat content layout! As long as you know basic Markdown syntax (now with AI, you don't even need to know Markdown), you can create a simple and elegant WeChat article. The editor supports all basic Markdown syntax, mathematical formulas, rendering of Mermaid charts, GFM warning blocks, PlantUML rendering support, ruby annotation extension support, rich code block highlighting themes, custom theme colors and CSS styles, multiple image upload functionality with customizable configuration of image hosting services, convenient file import/export functionality, built-in local content management with automatic draft saving, integration of mainstream AI models (such as DeepSeek, OpenAI, Tongyi Qianwen, Tencent Hanyuan, Volcano Ark, etc.) to assist content creation.

SakuraLLM

SakuraLLM is a project focused on building large language models for Japanese to Chinese translation in the light novel and galgame domain. The models are based on open-source large models and are pre-trained and fine-tuned on general Japanese corpora and specific domains. The project aims to provide high-performance language models for galgame/light novel translation that are comparable to GPT3.5 and can be used offline. It also offers an API backend for running the models, compatible with the OpenAI API format. The project is experimental, with version 0.9 showing improvements in style, fluency, and accuracy over GPT-3.5.

ChatGLM3

ChatGLM3 is a conversational pretrained model jointly released by Zhipu AI and THU's KEG Lab. ChatGLM3-6B is the open-sourced model in the ChatGLM3 series. It inherits the advantages of its predecessors, such as fluent conversation and low deployment threshold. In addition, ChatGLM3-6B introduces the following features: 1. A stronger foundation model: ChatGLM3-6B's foundation model ChatGLM3-6B-Base employs more diverse training data, more sufficient training steps, and more reasonable training strategies. Evaluation on datasets from different perspectives, such as semantics, mathematics, reasoning, code, and knowledge, shows that ChatGLM3-6B-Base has the strongest performance among foundation models below 10B parameters. 2. More complete functional support: ChatGLM3-6B adopts a newly designed prompt format, which supports not only normal multi-turn dialogue, but also complex scenarios such as tool invocation (Function Call), code execution (Code Interpreter), and Agent tasks. 3. A more comprehensive open-source sequence: In addition to the dialogue model ChatGLM3-6B, the foundation model ChatGLM3-6B-Base, the long-text dialogue model ChatGLM3-6B-32K, and ChatGLM3-6B-128K, which further enhances the long-text comprehension ability, are also open-sourced. All the above weights are completely open to academic research and are also allowed for free commercial use after filling out a questionnaire.

tiny-llm-zh

Tiny LLM zh is a project aimed at building a small-parameter Chinese language large model for quick entry into learning large model-related knowledge. The project implements a two-stage training process for large models and subsequent human alignment, including tokenization, pre-training, instruction fine-tuning, human alignment, evaluation, and deployment. It is deployed on ModeScope Tiny LLM website and features open access to all data and code, including pre-training data and tokenizer. The project trains a tokenizer using 10GB of Chinese encyclopedia text to build a Tiny LLM vocabulary. It supports training with Transformers deepspeed, multiple machine and card support, and Zero optimization techniques. The project has three main branches: llama2_torch, main tiny_llm, and tiny_llm_moe, each with specific modifications and features.

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

api-for-open-llm

This project provides a unified backend interface for open large language models (LLMs), offering a consistent experience with OpenAI's ChatGPT API. It supports various open-source LLMs, enabling developers to seamlessly integrate them into their applications. The interface features streaming responses, text embedding capabilities, and support for LangChain, a tool for developing LLM-based applications. By modifying environment variables, developers can easily use open-source models as alternatives to ChatGPT, providing a cost-effective and customizable solution for various use cases.

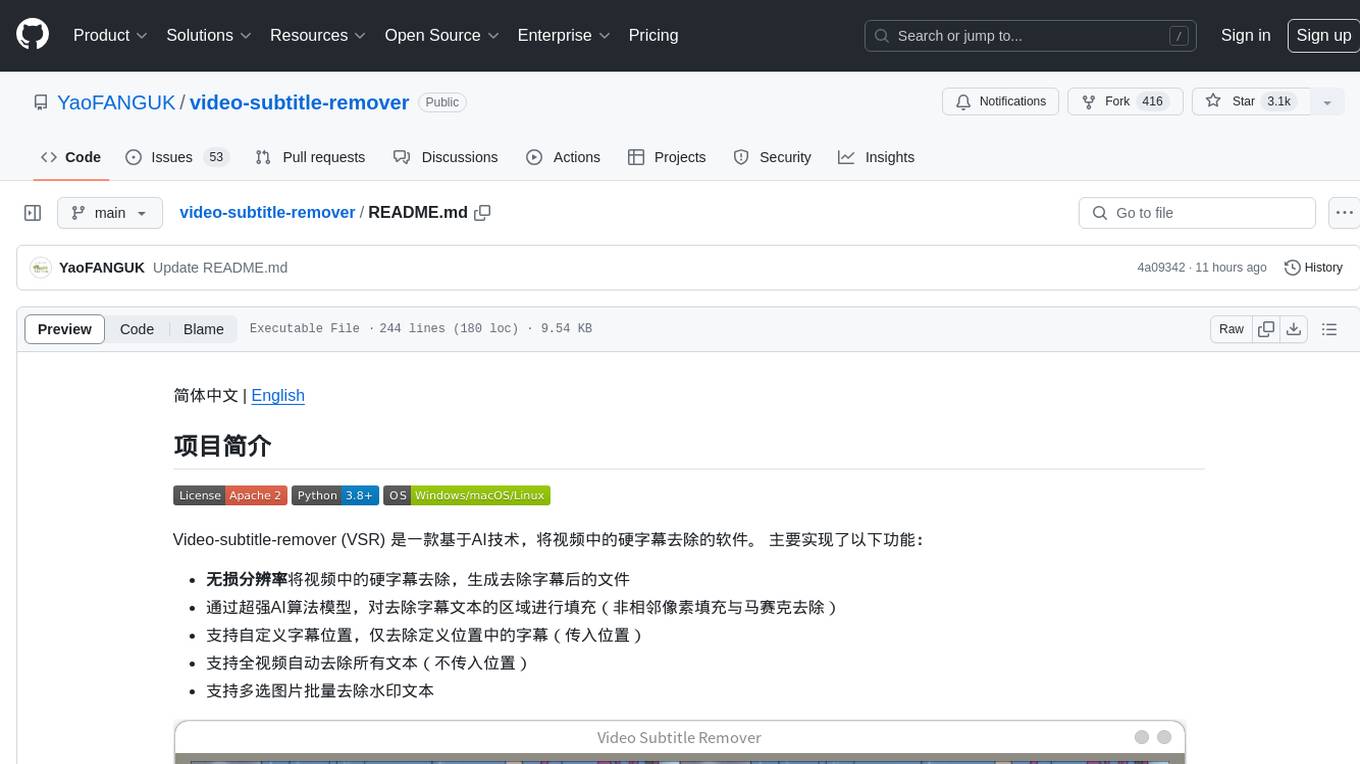

video-subtitle-remover

Video-subtitle-remover (VSR) is a software based on AI technology that removes hard subtitles from videos. It achieves the following functions: - Lossless resolution: Remove hard subtitles from videos, generate files with subtitles removed - Fill the region of removed subtitles using a powerful AI algorithm model (non-adjacent pixel filling and mosaic removal) - Support custom subtitle positions, only remove subtitles in defined positions (input position) - Support automatic removal of all text in the entire video (no input position required) - Support batch removal of watermark text from multiple images.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.