spiceai

A portable accelerated SQL query, search, and LLM-inference engine, written in Rust, for data-grounded AI apps and agents.

Stars: 2805

Spice is a portable runtime written in Rust that offers developers a unified SQL interface to materialize, accelerate, and query data from any database, data warehouse, or data lake. It connects, fuses, and delivers data to applications, machine-learning models, and AI backends, functioning as an application-specific, tier-optimized Database CDN. Built with industry-leading technologies such as Apache DataFusion, Apache Arrow, Apache Arrow Flight, SQLite, and DuckDB, Spice makes it fast and easy to query data from one or more sources using SQL, co-locating a managed dataset with applications or machine learning models, and accelerating it with Arrow in-memory, SQLite/DuckDB, or attached PostgreSQL for fast, high-concurrency, low-latency queries.

README:

📄 Docs | ⚡️ Quickstart | 🧑🍳 Cookbook

Spice is a SQL query, search, and LLM-inference engine, written in Rust, for data apps and agents.

Spice provides four industry-standard APIs in a lightweight, portable runtime (single binary/container):

- SQL Query & Search: HTTP, Arrow Flight, Arrow Flight SQL, ODBC, JDBC, and ADBC APIs; vector_search and text_search UDTFs.

- OpenAI-Compatible APIs: HTTP APIs for OpenAI SDK compatibility, local model serving (CUDA/Metal accelerated), and hosted model gateway.

- Iceberg Catalog REST APIs: A unified Iceberg REST Catalog API.

- MCP HTTP+SSE APIs: Integration with external tools via Model Context Protocol (MCP) using HTTP and Server-Sent Events (SSE).
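As a rough illustration of the HTTP SQL API listed above, the sketch below builds a request that posts a SQL statement to a locally running runtime. The address 127.0.0.1:8090 is the HTTP default shown in the quickstart logs; the `/v1/sql` path, the plain-text request body, and the JSON response are assumptions here and should be checked against the Spice API documentation. The helper names `build_sql_request` and `run_sql` are hypothetical.

```python
import json
import urllib.request

# Assumption: HTTP API on 127.0.0.1:8090 (quickstart default), SQL posted to /v1/sql.
SPICE_HTTP_URL = "http://127.0.0.1:8090"


def build_sql_request(sql: str, base_url: str = SPICE_HTTP_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the SQL text as the body."""
    return urllib.request.Request(
        url=f"{base_url}/v1/sql",
        data=sql.encode("utf-8"),
        headers={"Content-Type": "text/plain", "Accept": "application/json"},
        method="POST",
    )


def run_sql(sql: str, base_url: str = SPICE_HTTP_URL):
    """Send the query to a running runtime and decode the response as JSON."""
    with urllib.request.urlopen(build_sql_request(sql, base_url), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Usage (needs a runtime started with `spice run`):
#   rows = run_sql("SHOW TABLES;")
```

Keeping request construction separate from sending makes the call easy to inspect or test without a running runtime.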

🎯 Goal: Developers can focus on building data apps and AI agents confidently, knowing they are grounded in data.

Spice's primary features include:

- Data Federation: SQL query across any database, data warehouse, or data lake. Scale from single-node to distributed multi-node query execution. Learn More.

- Data Materialization and Acceleration: Materialize, accelerate, and cache database queries with Arrow, DuckDB, SQLite, PostgreSQL, or Spice Cayenne (Vortex). Read the MaterializedView interview - Building a CDN for Databases

- Enterprise Search: Keyword, vector, and full-text search with Tantivy-powered BM25 and petabyte-scale vector similarity search via Amazon S3 Vectors or pgvector for structured and unstructured data.

- AI apps and agents: An AI-database powering retrieval-augmented generation (RAG) and intelligent agents with OpenAI-compatible APIs and MCP integration. Learn More.

If you want to build with DataFusion, DuckDB, or Vortex, Spice provides a simple, flexible, and production-ready engine you can just use.

📣 Read the Spice.ai 1.0-stable announcement.

Spice is built on industry-leading technologies including Apache DataFusion, Apache Arrow, Arrow Flight, SQLite, and DuckDB.

🎥 Watch the CMU Databases talk: Accelerating Data and AI with Spice.ai Open-Source

🎥 Watch How to Query Data using Spice, OpenAI, and MCP

🎥 Watch How to search with Amazon S3 Vectors

Spice simplifies building data-driven AI applications and agents by making it fast and easy to query, federate, and accelerate data from one or more sources using SQL, while grounding AI in real-time, reliable data. Co-locate datasets with apps and AI models to power AI feedback loops, enable RAG and search, and deliver fast, low-latency data-query and AI-inference with full control over cost and performance.

- Spice Cayenne Data Accelerator: Simplified multi-file acceleration using the Vortex columnar format + SQLite metadata. Delivers DuckDB-comparable performance without single-file scaling limitations.

- Multi-Node Distributed Query: Scale query execution across multiple nodes with Apache Ballista integration for improved performance on large datasets.

- Acceleration Snapshots: Bootstrap accelerations from S3 for fast cold starts (seconds vs. minutes). Supports ephemeral storage with persistent recovery.

- Iceberg Table Writes: Write to Iceberg tables using standard SQL INSERT INTO for data ingestion and transformation, with no Spark required.

- Petabyte-Scale Vector Search: Native Amazon S3 Vectors integration manages the full vector lifecycle from ingestion to embedding to querying. SQL-integrated hybrid search with RRF.

- AI-Native Runtime: Spice combines data query and AI inference in a single engine, for data-grounded AI and accurate AI.

- Application-Focused: Designed to run distributed at the application and agent level, often as a 1:1 or 1:N mapping between app and Spice instance, unlike traditional data systems built for many apps on one centralized database. It’s common to spin up multiple Spice instances, even one per tenant or customer.

- Dual-Engine Acceleration: Supports both OLAP (Arrow/DuckDB) and OLTP (SQLite/PostgreSQL) engines at the dataset level, providing flexible performance across analytical and transactional workloads.

- Disaggregated Storage: Separation of compute from disaggregated storage, co-locating local, materialized working sets of data with applications, dashboards, or ML pipelines while accessing source data in its original storage.

- Edge to Cloud Native: Deploy as a standalone instance, Kubernetes sidecar, microservice, or cluster across edge/POP, on-prem, and public clouds. Chain multiple Spice instances for tier-optimized, distributed deployments.

| Feature | Spice | Trino / Presto | Dremio | ClickHouse | Materialize |
|---|---|---|---|---|---|
| Primary Use-Case | Data & AI apps/agents | Big data analytics | Interactive analytics | Real-time analytics | Real-time analytics |
| Primary deployment model | Sidecar | Cluster | Cluster | Cluster | Cluster |
| Federated Query Support | ✅ | ✅ | ✅ | ― | ― |
| Acceleration/Materialization | ✅ (Arrow, SQLite, DuckDB, PostgreSQL) | Intermediate storage | Reflections (Iceberg) | Materialized views | ✅ (Real-time views) |
| Catalog Support | ✅ (Iceberg, Unity Catalog, AWS Glue) | ✅ | ✅ | ― | ― |
| Query Result Caching | ✅ | ✅ | ✅ | ✅ | Limited |
| Multi-Modal Acceleration | ✅ (OLAP + OLTP) | ― | ― | ― | ― |
| Change Data Capture (CDC) | ✅ (Debezium) | ― | ― | ― | ✅ (Debezium) |

| Feature | Spice | LangChain | LlamaIndex | AgentOps.ai | Ollama |
|---|---|---|---|---|---|
| Primary Use-Case | Data & AI apps | Agentic workflows | RAG apps | Agent operations | LLM apps |
| Programming Language | Any language (HTTP interface) | JavaScript, Python | Python | Python | Any language (HTTP interface) |
| Unified Data + AI Runtime | ✅ | ― | ― | ― | ― |
| Federated Data Query | ✅ | ― | ― | ― | ― |
| Accelerated Data Access | ✅ | ― | ― | ― | ― |
| Tools/Functions | ✅ (MCP HTTP+SSE) | ✅ | ✅ | Limited | Limited |
| LLM Memory | ✅ | ✅ | ― | ✅ | ― |
| Evaluations (Evals) | ✅ | Limited | ― | Limited | ― |
| Hybrid Search | ✅ (Keyword, Vector, & Full-Text-Search) | ✅ | ✅ | Limited | Limited |
| Caching | ✅ (Query and results caching) | Limited | ― | ― | ― |
| Embeddings | ✅ (Built-in & pluggable models/DBs) | ✅ | ✅ | Limited | ― |

✅ = Fully supported; ― = Not supported; Limited = Partial or restricted support

- OpenAI-compatible API: Connect to hosted models (OpenAI, Anthropic, xAI, Amazon Bedrock) or deploy locally (Llama, NVIDIA NIM) with OpenAI Responses API support for advanced interactions. AI Gateway Recipe

- Federated Data Access: Query using SQL and NSQL (text-to-SQL) across databases, data warehouses, and data lakes with advanced query push-down for fast retrieval. Scale to distributed multi-node query execution with Apache Ballista. Federated SQL Query Recipe

- Search and RAG: Search and retrieve context with accelerated embeddings for retrieval-augmented generation (RAG) workflows. Native Amazon S3 Vectors integration for petabyte-scale vector search. Full-text search (FTS) via Tantivy-powered BM25 and vector similarity search (VSS) integrated into SQL via text_search and vector_search UDTFs. Reciprocal rank fusion (RRF) for hybrid search. Amazon S3 Vectors Cookbook Recipe

- LLM Memory and Observability: Store and retrieve history and context for AI agents while gaining deep visibility into data flows, model performance, and traces. LLM Memory Recipe | Observability & Monitoring Features Documentation

- Data Acceleration: Co-locate materialized datasets in Arrow, SQLite, DuckDB, PostgreSQL, or Cayenne (Vortex+SQLite) with applications for sub-second query. Bootstrap from snapshots stored in S3 for fast cold starts. Write to Iceberg tables with standard SQL INSERT INTO. DuckDB Data Accelerator Recipe

- Resiliency and Local Dataset Replication: Maintain application availability with local replicas of critical datasets. Recover from federated source outages using acceleration snapshots. Local Dataset Replication Recipe

- Responsive Dashboards: Enable fast, real-time analytics by accelerating data for frontends and BI tools with configurable refresh schedules. Sales BI Dashboard Demo

- Simplified Legacy Migration: Use a single endpoint to unify legacy systems with modern infrastructure, including federated SQL querying across multiple sources. Federated SQL Query Recipe

- Unified Search with Vector Similarity: Perform efficient vector similarity search across structured and unstructured data sources with native Amazon S3 Vectors integration for petabyte-scale vector storage and querying. The Spice runtime manages the vector lifecycle: ingesting data, embedding it using AWS Bedrock (Amazon Titan, Cohere), HuggingFace models, or Model2Vec (500x faster static embeddings), and storing in S3 Vector buckets or pgvector. Supports cosine similarity, Euclidean distance, or dot product. SQL-integrated search via vector_search and text_search UDTFs with hybrid search using reciprocal rank fusion (RRF). Example: SELECT * FROM vector_search(my_table, 'search query', 10) WHERE condition ORDER BY _score; Amazon S3 Vectors Cookbook Recipe

- Semantic Knowledge Layer: Define a semantic context model to enrich data for AI. Semantic Model Feature Documentation

- Text-to-SQL: Convert natural language queries into SQL using built-in NSQL and sampling tools for accurate queries. Text-to-SQL Recipe

- Model and Data Evaluations: Assess model performance and data quality with integrated evaluation tools. Language Model Evaluations Recipe
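The use cases above mention hybrid search with reciprocal rank fusion (RRF). As a generic illustration of the technique, and not of how Spice implements it internally, the sketch below fuses two ranked result lists by summing 1 / (k + rank) for each document. The constant k = 60 is a commonly used default and is an assumption here, as are the document ids.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def reciprocal_rank_fusion(
    rankings: Iterable[Sequence[str]], k: int = 60
) -> List[Tuple[str, float]]:
    """Fuse ranked lists of ids: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical result ids from a keyword search and a vector search.
text_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([text_hits, vector_hits])
print([doc_id for doc_id, _ in fused])  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents that appear near the top of both lists (here doc_b and doc_a) outrank documents that appear in only one, which is the property that makes RRF useful for combining keyword and vector results without normalizing their raw scores.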

- Is Spice a cache? Not specifically; you can think of Spice data acceleration as an active cache, materialization, or data prefetcher. A cache would fetch data on a cache miss, while Spice prefetches and materializes filtered data on an interval, on a trigger, or as data changes using CDC. In addition to acceleration, Spice supports results caching.

- Is Spice a CDN for databases? Yes, a common use-case for Spice is as a CDN for different data sources. Using CDN concepts, Spice enables you to ship (load) a working set of your database (or data lake, or data warehouse) where it's most frequently accessed, like from a data-intensive application or for AI context.
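The distinction drawn above between a cache and acceleration can be sketched conceptually. The toy classes below are hypothetical and are not Spice internals: one fetches from the source on each miss, the other loads a working set ahead of reads during a refresh, which in Spice would be driven by an interval, a trigger, or CDC.

```python
from typing import Callable, Dict, Iterable, Tuple

Row = Tuple[str, int]


class ReadThroughCache:
    """Classic cache: goes to the source only when a key is missing."""

    def __init__(self, fetch_one: Callable[[str], int]) -> None:
        self._fetch_one = fetch_one
        self._data: Dict[str, int] = {}
        self.source_calls = 0

    def get(self, key: str) -> int:
        if key not in self._data:  # cache miss: source latency lands on the read path
            self.source_calls += 1
            self._data[key] = self._fetch_one(key)
        return self._data[key]


class PrefetchedWorkingSet:
    """Acceleration-style: a refresh loads the working set before reads happen."""

    def __init__(self, fetch_all: Callable[[], Iterable[Row]]) -> None:
        self._fetch_all = fetch_all
        self._data: Dict[str, int] = {}
        self.source_calls = 0

    def refresh(self) -> None:
        # Called by hand here; a real system would schedule or trigger it.
        self.source_calls += 1
        self._data = dict(self._fetch_all())

    def get(self, key: str) -> int:
        return self._data[key]  # reads never touch the source


source = {"a": 1, "b": 2, "c": 3}  # stand-in for a remote database

cache = ReadThroughCache(source.__getitem__)
for key in ("a", "b", "a"):
    cache.get(key)

working_set = PrefetchedWorkingSet(lambda: source.items())
working_set.refresh()
for key in ("a", "b", "c"):
    working_set.get(key)

print(cache.source_calls, working_set.source_calls)  # 2 1
```

The cache pays one source round trip per distinct key on the read path, while the prefetched working set pays once per refresh and serves every read locally.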

https://github.com/spiceai/spiceai/assets/80174/7735ee94-3f4a-4983-a98e-fe766e79e03a

See more demos on YouTube.

| Name | Description | Status | Protocol/Format |
|---|---|---|---|
| databricks (mode: delta_lake) | Databricks | Stable | S3/Delta Lake |
| delta_lake | Delta Lake | Stable | Delta Lake |
| dremio | Dremio | Stable | Arrow Flight |
| duckdb | DuckDB | Stable | Embedded |
| file | File | Stable | Parquet, CSV |
| github | GitHub | Stable | GitHub API |
| postgres | PostgreSQL | Stable | |
| s3 | S3 | Stable | Parquet, CSV |
| mysql | MySQL | Stable | |
| spice.ai | Spice.ai | Stable | Arrow Flight |
| graphql | GraphQL | Release Candidate | JSON |
| dynamodb | Amazon DynamoDB | Release Candidate | |
| databricks (mode: spark_connect) | Databricks | Beta | Spark Connect |
| flightsql | FlightSQL | Beta | Arrow Flight SQL |
| iceberg | Apache Iceberg | Beta | Parquet |
| mssql | Microsoft SQL Server | Beta | Tabular Data Stream (TDS) |
| odbc | ODBC | Beta | ODBC |
| snowflake | Snowflake | Beta | Arrow |
| spark | Spark | Beta | Spark Connect |
| oracle | Oracle | Alpha | Oracle ODPI-C |
| abfs | Azure BlobFS | Alpha | Parquet, CSV |
| clickhouse | Clickhouse | Alpha | |
| debezium | Debezium CDC | Alpha | Kafka + JSON |
| gcs, gs | Google Cloud Storage | Alpha | Parquet, CSV, JSON |
| kafka | Kafka | Alpha | Kafka + JSON |
| ftp, sftp | FTP/SFTP | Alpha | Parquet, CSV |
| glue | AWS Glue | Alpha | Iceberg, Parquet, CSV |
| http, https | HTTP(s) | Alpha | Parquet, CSV, JSON |
| imap | IMAP | Alpha | IMAP Emails |
| localpod | Local dataset replication | Alpha | |
| mongodb | MongoDB | Alpha | |
| sharepoint | Microsoft SharePoint | Alpha | Unstructured UTF-8 documents |
| scylladb | ScyllaDB | Alpha | |
| smb | SMB (Server Message Block) | Alpha | SMB |
| elasticsearch | ElasticSearch | Roadmap | |

| Name | Description | Status | Engine Modes |
|---|---|---|---|
| arrow | In-Memory Arrow Records | Stable | memory |
| cayenne | Spice Cayenne (Vortex) | Stable | file |
| duckdb | Embedded DuckDB | Stable | memory, file |
| postgres | Attached PostgreSQL | Release Candidate | N/A |
| sqlite | Embedded SQLite | Release Candidate | memory, file |

| Name | Description | Status | ML Format(s) | LLM Format(s) |
|---|---|---|---|---|
| openai | OpenAI (or compatible) LLM endpoint | Release Candidate | - | OpenAI-compatible HTTP endpoint |
| file | Local filesystem | Release Candidate | ONNX | GGUF, GGML, SafeTensor |
| huggingface | Models hosted on HuggingFace | Release Candidate | ONNX | GGUF, GGML, SafeTensor |
| spice.ai | Models hosted on the Spice.ai Cloud Platform | | ONNX | OpenAI-compatible HTTP endpoint |
| azure | Azure OpenAI | | - | OpenAI-compatible HTTP endpoint |
| bedrock | Amazon Bedrock (Nova models) | Alpha | - | OpenAI-compatible HTTP endpoint |
| anthropic | Models hosted on Anthropic | Alpha | - | OpenAI-compatible HTTP endpoint |
| xai | Models hosted on xAI | Alpha | - | OpenAI-compatible HTTP endpoint |
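Models from the providers above are served through the OpenAI-compatible HTTP API. A minimal sketch of building a chat completion request follows. It assumes the runtime's HTTP API is at 127.0.0.1:8090 (the quickstart default) and that it follows the standard OpenAI path `/v1/chat/completions`; `my_model` is a hypothetical model name that would have to be defined in the Spicepod, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(
    prompt: str,
    model: str = "my_model",  # hypothetical name; must match a configured model
    base_url: str = "http://127.0.0.1:8090",  # assumption: quickstart HTTP default
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage (needs a running runtime with a model configured):
#   with urllib.request.urlopen(build_chat_request("Hello")) as resp:
#       print(json.loads(resp.read()))
```

Because the request shape follows the OpenAI convention, an existing OpenAI SDK client pointed at the runtime's base URL should be able to send the same payload.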

| Name | Description | Status | ML Format(s) | LLM Format(s)* |
|---|---|---|---|---|
| openai | OpenAI (or compatible) LLM endpoint | Release Candidate | - | OpenAI-compatible HTTP endpoint |
| file | Local filesystem | Release Candidate | ONNX | GGUF, GGML, SafeTensor |
| huggingface | Models hosted on HuggingFace | Release Candidate | ONNX | GGUF, GGML, SafeTensor |
| model2vec | Static embeddings (500x faster) | Release Candidate | Model2Vec | - |
| azure | Azure OpenAI | Alpha | - | OpenAI-compatible HTTP endpoint |
| bedrock | AWS Bedrock (e.g., Titan, Cohere) | Alpha | - | OpenAI-compatible HTTP endpoint |

| Name | Description | Status |
|---|---|---|
| s3_vectors | Amazon S3 Vectors for petabyte-scale vector storage and querying | Alpha |
| pgvector | PostgreSQL with pgvector extension | Alpha |
| duckdb_vector | DuckDB with vector extension for efficient vector storage and search | Alpha |
| sqlite_vec | SQLite with sqlite-vec extension for lightweight vector operations | Alpha |
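The vector stores above back similarity search, for which the README names three metrics: cosine similarity, Euclidean distance, and dot product. The sketch below shows the plain definitions of those metrics on small example vectors; it is only a reference for what each metric computes, not the code path any of the listed stores use.

```python
import math
from typing import Sequence


def _check_same_length(a: Sequence[float], b: Sequence[float]) -> None:
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")


def dot_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of element-wise products; larger means more similar."""
    _check_same_length(a, b)
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance; smaller means more similar."""
    _check_same_length(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of the two vectors after scaling each to unit length."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot_product(a, b) / (norm_a * norm_b)


a = [1.0, 2.0, 2.0]
b = [2.0, 4.0, 4.0]  # same direction as a, twice the length

print(dot_product(a, b))         # 18.0
print(euclidean_distance(a, b))  # 3.0
print(cosine_similarity(a, b))   # 1.0
```

The example shows why the choice of metric matters: the two vectors point in the same direction, so cosine similarity reports a perfect match even though their Euclidean distance is 3.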

Catalog Connectors connect to external catalog providers and make their tables available for federated SQL query in Spice. Configuring accelerations for tables in external catalogs is not supported. The schema hierarchy of the external catalog is preserved in Spice.

| Name | Description | Status | Protocol/Format |
|---|---|---|---|
| spice.ai | Spice.ai Cloud Platform | Stable | Arrow Flight |
| unity_catalog | Unity Catalog | Stable | Delta Lake |
| databricks | Databricks | Beta | Spark Connect, S3/Delta Lake |
| iceberg | Apache Iceberg | Beta | Parquet |
| glue | AWS Glue | Alpha | CSV, Parquet, Iceberg |

https://github.com/spiceai/spiceai/assets/88671039/85cf9a69-46e7-412e-8b68-22617dcbd4e0

Install the Spice CLI:

On macOS, Linux, and WSL:

curl https://install.spiceai.org | /bin/bash

Or using brew:

brew install spiceai/spiceai/spice

On Windows using PowerShell:

iex ((New-Object System.Net.WebClient).DownloadString("https://install.spiceai.org/Install.ps1"))

Step 1. Initialize a new Spice app with the spice init command:

spice init spice_qs

A spicepod.yaml file is created in the spice_qs directory. Change to that directory:

cd spice_qs

Step 2. Start the Spice runtime:

spice run

Example output will be shown as follows:

2025/01/20 11:26:10 INFO Spice.ai runtime starting...

2025-01-20T19:26:10.679068Z INFO runtime::init::dataset: No datasets were configured. If this is unexpected, check the Spicepod configuration.

2025-01-20T19:26:10.679716Z INFO runtime::flight: Spice Runtime Flight listening on 127.0.0.1:50051

2025-01-20T19:26:10.679786Z INFO runtime::metrics_server: Spice Runtime Metrics listening on 127.0.0.1:9090

2025-01-20T19:26:10.680140Z INFO runtime::http: Spice Runtime HTTP listening on 127.0.0.1:8090

2025-01-20T19:26:10.879126Z INFO runtime::init::results_cache: Initialized sql results cache; max size: 128.00 MiB, item ttl: 1s

The runtime is now started and ready for queries.

Step 3. In a new terminal window, add the spiceai/quickstart Spicepod. A Spicepod is a package of configuration defining datasets and ML models.

spice add spiceai/quickstart

The spicepod.yaml file will be updated with the spiceai/quickstart dependency.

version: v1
kind: Spicepod
name: spice_qs
dependencies:
- spiceai/quickstart

The spiceai/quickstart Spicepod adds a taxi_trips data table to the runtime, which is now available to query with SQL.

2025-01-20T19:26:30.011633Z INFO runtime::init::dataset: Dataset taxi_trips registered (s3://spiceai-demo-datasets/taxi_trips/2024/), acceleration (arrow), results cache enabled.

2025-01-20T19:26:30.013002Z INFO runtime::accelerated_table::refresh_task: Loading data for dataset taxi_trips

2025-01-20T19:26:40.312839Z INFO runtime::accelerated_table::refresh_task: Loaded 2,964,624 rows (399.41 MiB) for dataset taxi_trips in 10s 299ms

Step 4. Start the Spice SQL REPL:

spice sql

The SQL REPL interface will be shown:

Welcome to the Spice.ai SQL REPL! Type 'help' for help.

show tables; -- list available tables

sql>

Enter show tables; to display the available tables for query:

sql> show tables;

+---------------+--------------+---------------+------------+

| table_catalog | table_schema | table_name | table_type |

+---------------+--------------+---------------+------------+

| spice | public | taxi_trips | BASE TABLE |

| spice | runtime | query_history | BASE TABLE |

| spice | runtime | metrics | BASE TABLE |

+---------------+--------------+---------------+------------+

Time: 0.022671708 seconds. 3 rows.

Enter a query to display the longest taxi trips:

SELECT trip_distance, total_amount FROM taxi_trips ORDER BY trip_distance DESC LIMIT 10;

Output:

+---------------+--------------+

| trip_distance | total_amount |

+---------------+--------------+

| 312722.3 | 22.15 |

| 97793.92 | 36.31 |

| 82015.45 | 21.56 |

| 72975.97 | 20.04 |

| 71752.26 | 49.57 |

| 59282.45 | 33.52 |

| 59076.43 | 23.17 |

| 58298.51 | 18.63 |

| 51619.36 | 24.2 |

| 44018.64 | 52.43 |

+---------------+--------------+

Time: 0.045150667 seconds. 10 rows.

Using the Docker image locally:

docker pull spiceai/spiceai

In a Dockerfile:

FROM spiceai/spiceai:latest

Using Helm:

helm repo add spiceai https://helm.spiceai.org

helm install spiceai spiceai/spiceai

The Spice.ai Cookbook is a collection of recipes and examples for using Spice. Find it at https://github.com/spiceai/cookbook.

Access ready-to-use Spicepods and datasets hosted on the Spice.ai Cloud Platform using the Spice runtime. A list of public Spicepods is available on Spicerack: https://spicerack.org/.

To use public datasets, create a free account on Spice.ai:

- Visit spice.ai and click Try for Free.

- After creating an account, create an app to generate an API key.

Once set up, you can access ready-to-use Spicepods including datasets. For this demonstration, use the taxi_trips dataset from the Spice.ai Quickstart.

Step 1. Initialize a new project.

# Initialize a new Spice app
spice init spice_app

# Change to app directory
cd spice_app

Step 2. Log in and authenticate from the command line using the spice login command. A pop-up browser window will prompt you to authenticate:

spice login

Step 3. Start the runtime:

# Start the runtime
spice run

Step 4. Configure the dataset:

In a new terminal window, configure a new dataset using the spice dataset configure command:

spice dataset configure

Enter a dataset name that will be used to reference the dataset in queries. This name does not need to match the name in the dataset source.

dataset name: (spice_app) taxi_trips

Enter the description of the dataset:

description: Taxi trips dataset

Enter the location of the dataset:

from: spice.ai/spiceai/quickstart/datasets/taxi_trips

Select y when prompted whether to accelerate the data:

Locally accelerate (y/n)? y

You should see the following output from your runtime terminal:

2024-12-16T05:12:45.803694Z INFO runtime::init::dataset: Dataset taxi_trips registered (spice.ai/spiceai/quickstart/datasets/taxi_trips), acceleration (arrow, 10s refresh), results cache enabled.

2024-12-16T05:12:45.805494Z INFO runtime::accelerated_table::refresh_task: Loading data for dataset taxi_trips

2024-12-16T05:13:24.218345Z INFO runtime::accelerated_table::refresh_task: Loaded 2,964,624 rows (8.41 GiB) for dataset taxi_trips in 38s 412ms.

Step 5. In a new terminal window, use the Spice SQL REPL to query the dataset:

spice sql

SELECT tpep_pickup_datetime, passenger_count, trip_distance from taxi_trips LIMIT 10;

The output displays the results of the query along with the query execution time:

+----------------------+-----------------+---------------+

| tpep_pickup_datetime | passenger_count | trip_distance |

+----------------------+-----------------+---------------+

| 2024-01-11T12:55:12 | 1 | 0.0 |

| 2024-01-11T12:55:12 | 1 | 0.0 |

| 2024-01-11T12:04:56 | 1 | 0.63 |

| 2024-01-11T12:18:31 | 1 | 1.38 |

| 2024-01-11T12:39:26 | 1 | 1.01 |

| 2024-01-11T12:18:58 | 1 | 5.13 |

| 2024-01-11T12:43:13 | 1 | 2.9 |

| 2024-01-11T12:05:41 | 1 | 1.36 |

| 2024-01-11T12:20:41 | 1 | 1.11 |

| 2024-01-11T12:37:25 | 1 | 2.04 |

+----------------------+-----------------+---------------+

Time: 0.00538925 seconds. 10 rows.

You can experiment with the time it takes to run queries when using non-accelerated datasets. You can change the acceleration setting from true to false in the datasets.yaml file.
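One way to run the timing experiment described above is to measure the same query several times from a client, once with acceleration enabled and once without. The sketch below is a generic timing helper; `time_query`, `summarize`, and `fake_run_query` are hypothetical names, and the stand-in function would be replaced by a real client call to the runtime.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_query(run_query: Callable[[str], object], sql: str, repeats: int = 5) -> List[float]:
    """Run the query `repeats` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query(sql)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float]) -> Dict[str, float]:
    """Reduce raw samples to min, median, and max."""
    return {
        "min": min(durations),
        "median": statistics.median(durations),
        "max": max(durations),
    }


def fake_run_query(sql: str) -> list:
    """Stand-in for a real client call; sleeps briefly instead of querying."""
    time.sleep(0.001)
    return []


samples = time_query(
    fake_run_query,
    "SELECT tpep_pickup_datetime, passenger_count, trip_distance FROM taxi_trips LIMIT 10;",
    repeats=3,
)
print(summarize(samples))
```

Client-side timings include network and serialization overhead, so they will differ from the time the REPL prints. The quickstart logs also show a results cache with a short item TTL, so repeated identical queries issued back to back may be served from that cache rather than from the dataset.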

Comprehensive documentation is available at spiceai.org/docs.

Over 45 quickstarts and samples are available in the Spice Cookbook.

Spice.ai is designed to be extensible with extension points documented at EXTENSIBILITY.md. Build custom Data Connectors, Data Accelerators, Catalog Connectors, Secret Stores, Models, or Embeddings.

🚀 See the Roadmap for upcoming features.

We greatly appreciate and value your support! You can help Spice in a number of ways:

- Build an app with Spice.ai and send us feedback and suggestions at [email protected] or on Discord, X, or LinkedIn.

- File an issue if you see something not quite working correctly.

- Join our team (We’re hiring!)

- Contribute code or documentation to the project (see CONTRIBUTING.md).

- Follow our blog at spiceai.org/blog

⭐️ star this repo! Thank you for your support! 🙏


Similar Open Source Tools


mosaico

Mosaico is a blazing-fast data platform designed to bridge the gap between Robotics and Physical AI. It streamlines data management, compression, and search by replacing monolithic files with a structured archive powered by Rust and Python. The platform operates on a standard client-server model, with the server daemon, mosaicod, handling heavy lifting tasks like data conversion, compression, and organized storage. The Python SDK (mosaico-sdk-py) and Rust backend (mosaicod) are included in this monorepo configuration to simplify testing and reduce compatibility issues. Mosaico enables the ingestion of standard ROS sequences, transforming them into synchronized, randomized dataframes for Physical AI applications. Efficiency is built into the architecture, with data batches streamed directly from the Mosaico data platform, eliminating the need to download massive datasets locally.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, and files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), with upcoming support for web sites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant) and OpenAI's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

qsv

qsv is a command line program for querying, slicing, indexing, analyzing, filtering, enriching, transforming, sorting, validating, joining, formatting & converting tabular data (CSV, spreadsheets, DBs, parquet, etc). Commands are simple, composable & 'blazing fast'. It is a blazing-fast data-wrangling toolkit with a focus on speed, processing very large files, and being a complete data-wrangling toolkit. It is designed to be portable, easy to use, secure, and easy to contribute to. qsv follows the RFC 4180 CSV standard, requires UTF-8 encoding, and supports various file formats. It has extensive shell completion support, automatic compression/decompression using Snappy, and supports environment variables and dotenv files. qsv has a comprehensive test suite and is dual-licensed under MIT or the UNLICENSE.

oramacore

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced search capabilities in AI applications.

GraphLLM

GraphLLM is a graph-based framework designed to process data using LLMs. It offers a set of tools including a web scraper, PDF parser, YouTube subtitles downloader, Python sandbox, and TTS engine. The framework provides a GUI for building and debugging graphs with advanced features like loops, conditionals, parallel execution, streaming of results, hierarchical graphs, external tool integration, and dynamic scheduling. GraphLLM is a low-level framework that gives users full control over the raw prompt and output of models, with a steeper learning curve. It is tested with llama70b and qwen 32b, under heavy development with breaking changes expected.

firecrawl

Firecrawl is an API service that empowers AI applications with clean data from any website. It features advanced scraping, crawling, and data extraction capabilities. The repository is still in development, integrating custom modules into the mono repo. Users can run it locally but it's not fully ready for self-hosted deployment yet. Firecrawl offers powerful capabilities like scraping, crawling, mapping, searching, and extracting structured data from single pages, multiple pages, or entire websites with AI. It supports various formats, actions, and batch scraping. The tool is designed to handle proxies, anti-bot mechanisms, dynamic content, media parsing, change tracking, and more. Firecrawl is available as an open-source project under the AGPL-3.0 license, with additional features offered in the cloud version.

llms

llms.py is a lightweight CLI, API, and ChatGPT-like alternative to Open WebUI for accessing multiple LLMs. It operates entirely offline, ensuring all data is kept private in browser storage. The tool provides a convenient way to interact with various LLM models without the need for an internet connection, prioritizing user privacy and data security.

SQLBot

SQLBot is a versatile tool for executing SQL queries and managing databases. It provides a user-friendly interface for interacting with databases, allowing users to easily query, insert, update, and delete data. SQLBot supports various database systems such as MySQL, PostgreSQL, and SQLite, making it a valuable tool for developers, data analysts, and database administrators. With SQLBot, users can streamline their database management tasks and improve their productivity by quickly accessing and manipulating data without the need for complex SQL commands.

distill

Distill is a reliability layer for LLM context that provides deterministic deduplication to remove redundancy before reaching the model. It aims to reduce redundant data, lower costs, provide faster responses, and offer more efficient and deterministic results. The tool works by deduplicating, compressing, summarizing, and caching context to ensure reliable outputs. It offers various installation methods, including binary download, Go install, Docker usage, and building from source. Distill can be used for tasks like deduplicating chunks, connecting to vector databases, integrating with AI assistants, analyzing files for duplicates, syncing vectors to Pinecone, querying from the command line, and managing configuration files. The tool supports self-hosting via Docker, Docker Compose, building from source, Fly.io deployment, Render deployment, and Railway integration. Distill also provides monitoring capabilities with Prometheus-compatible metrics, Grafana dashboard, and OpenTelemetry tracing.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL (Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, and AWEL (agent workflow orchestration), making large model applications with data simpler and more convenient.

runhouse

Runhouse is a tool that allows you to build, run, and deploy production-quality AI apps and workflows on your own compute. It provides simple, powerful APIs for the full lifecycle of AI development, from research to evaluation to production to updates to scaling to management, and across any infra. By automatically packaging your apps into scalable, secure, and observable services, Runhouse can also turn otherwise redundant AI activities into common reusable components across your team or company, which improves cost, velocity, and reproducibility.

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

logfire

Pydantic Logfire is an observability platform that provides a simple and powerful dashboard, Python-centric insights, SQL querying, OpenTelemetry integration, and Pydantic validation analytics. It offers visibility into the behavior of Python applications and allows querying data using standard SQL. Logfire is an opinionated wrapper around OpenTelemetry, supporting traces, metrics, and logs. The Python SDK for Logfire is open source, while the server application for recording and displaying data is closed source.

simple-data-analysis

Simple data analysis (SDA) is an easy-to-use and high-performance TypeScript library for data analysis. It can be used with tabular and geospatial data. The library is maintained by Nael Shiab, a computational journalist and senior data producer for CBC News. SDA is based on DuckDB, a fast in-process analytical database, and it sends SQL queries to be executed by DuckDB. The library provides methods inspired by Pandas (Python) and the Tidyverse (R), and it also supports writing custom SQL queries and processing data with JavaScript. Additionally, SDA offers methods for leveraging large language models (LLMs) for data cleaning, extraction, categorization, and natural language interaction, as well as for embeddings and semantic search.

For similar tasks

spiceai

Spice is a portable runtime written in Rust that offers developers a unified SQL interface to materialize, accelerate, and query data from any database, data warehouse, or data lake. It connects, fuses, and delivers data to applications, machine-learning models, and AI-backends, functioning as an application-specific, tier-optimized Database CDN. It is built with industry-leading technologies such as Apache DataFusion, Apache Arrow, Apache Arrow Flight, SQLite, and DuckDB. Spice makes it fast and easy to query data from one or more sources using SQL, co-locating a managed dataset with applications or machine learning models, and accelerating it with Arrow in-memory, SQLite/DuckDB, or attached PostgreSQL for fast, high-concurrency, low-latency queries.

Pathway-AI-Bootcamp

Welcome to the μLearn x Pathway Initiative, an exciting adventure into the world of Artificial Intelligence (AI)! This comprehensive course, developed in collaboration with Pathway, will empower you with the knowledge and skills needed to navigate the fascinating world of AI, with a special focus on Large Language Models (LLMs).

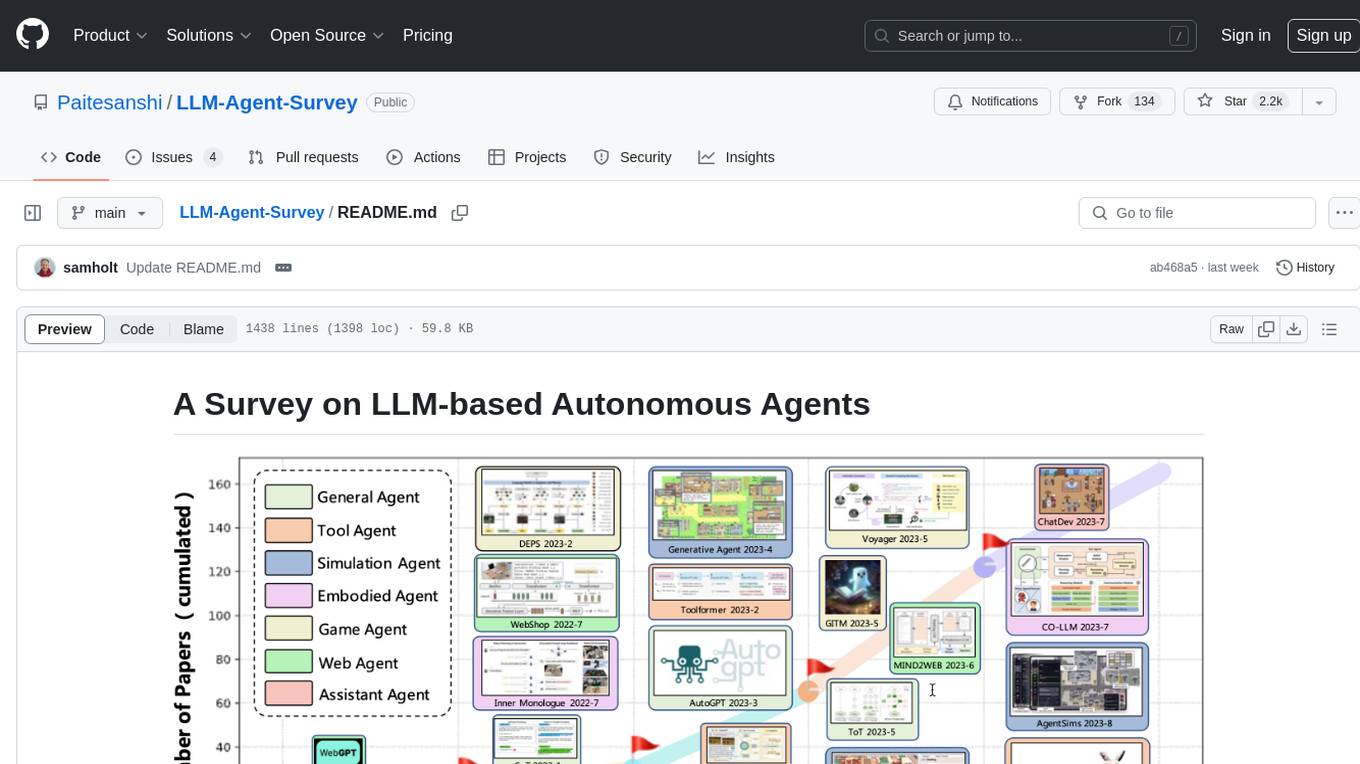

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides a unified API for generation, context-aware AI features, evaluation of AI workflows, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

vector-cookbook

The Vector Cookbook is a collection of recipes and sample application starter kits for building AI applications with LLMs using PostgreSQL and Timescale Vector. Timescale Vector enhances PostgreSQL for AI applications by enabling the storage of vector, relational, and time-series data with faster search, higher recall, and more efficient time-based filtering. The repository includes resources, sample applications like TSV Time Machine, and guides for creating, storing, and querying OpenAI embeddings with PostgreSQL and pgvector. Users can learn about Timescale Vector, explore performance benchmarks, and access Python client libraries and tutorials.

cogai

The W3C Cognitive AI Community Group focuses on advancing Cognitive AI through collaboration on defining use cases, open source implementations, and application areas. The group aims to demonstrate the potential of Cognitive AI in various domains such as customer services, healthcare, cybersecurity, online learning, autonomous vehicles, manufacturing, and web search. They work on formal specifications for chunk data and rules, plausible knowledge notation, and neural networks for human-like AI. The group positions Cognitive AI as a combination of symbolic and statistical approaches inspired by human thought processes. They address research challenges including mimicry, emotional intelligence, natural language processing, and common sense reasoning. The long-term goal is to develop cognitive agents that are knowledgeable, creative, collaborative, empathic, and multilingual, capable of continual learning and self-awareness.

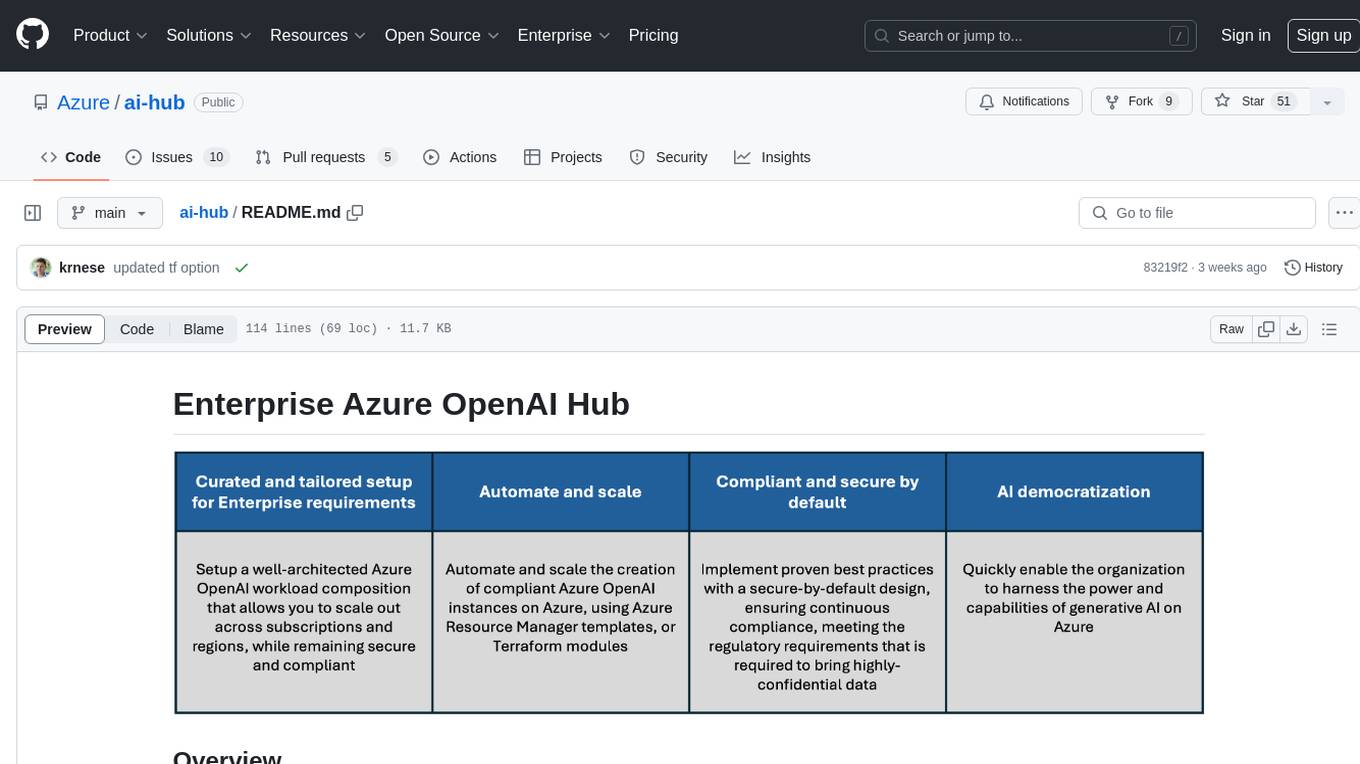

ai-hub

The Enterprise Azure OpenAI Hub is a comprehensive repository designed to guide users through the world of Generative AI on the Azure platform. It offers a structured learning experience to accelerate the transition from concept to production in an Enterprise context. The hub empowers users to explore various use cases with Azure services, ensuring security and compliance. It provides real-world examples and playbooks for practical insights into solving complex problems and developing cutting-edge AI solutions. The repository also serves as a library of proven patterns, aligning with industry standards and promoting best practices for secure and compliant AI development.

earth2studio

Earth2Studio is a Python-based package designed to enable users to quickly get started with AI weather and climate models. It provides access to pre-trained models, diagnostic tools, data sources, IO utilities, perturbation methods, and sample workflows for building custom weather prediction workflows. The package aims to empower users to explore AI-driven meteorology through modular components and seamless integration with other Nvidia packages like Modulus.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.