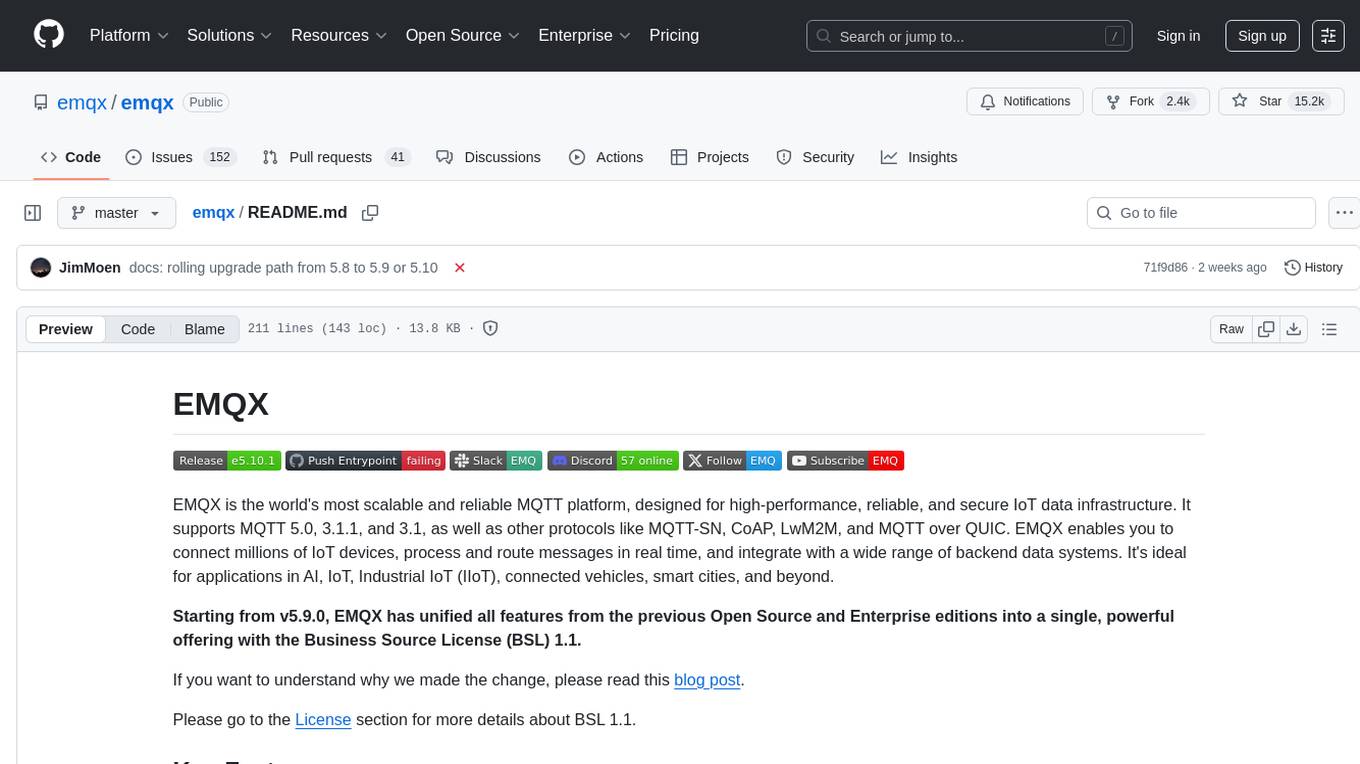

emqx

The most scalable and reliable MQTT broker for AI, IoT, IIoT and connected vehicles

Stars: 15263

EMQX is a highly scalable and reliable MQTT platform designed for IoT data infrastructure. It supports various protocols like MQTT 5.0, 3.1.1, and 3.1, as well as MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX allows connecting millions of IoT devices, processing messages in real time, and integrating with backend data systems. It is suitable for applications in AI, IoT, IIoT, connected vehicles, smart cities, and more. The tool offers features like massive scalability, powerful rule engine, flow designer, AI processing, robust security, observability, management, extensibility, and a unified experience with the Business Source License (BSL) 1.1.

README:

EMQX is the world's most scalable and reliable MQTT platform, designed for high-performance, reliable, and secure IoT data infrastructure. It supports MQTT 5.0, 3.1.1, and 3.1, as well as other protocols like MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX enables you to connect millions of IoT devices, process and route messages in real time, and integrate with a wide range of backend data systems. It's ideal for applications in AI, IoT, Industrial IoT (IIoT), connected vehicles, smart cities, and beyond.

Starting from v5.9.0, EMQX has unified all features from the previous Open Source and Enterprise editions into a single, powerful offering with the Business Source License (BSL) 1.1.

If you want to understand why we made the change, please read this blog post.

Please go to the License section for more details about BSL 1.1.

EMQX delivers a powerful set of capabilities for modern connected systems:

- Full MQTT v5.0, v3.1.1, and v3.1 support.

- MQTT over QUIC: Leverage the benefits of QUIC for faster connection establishment, reduced head-of-line blocking, and seamless connection migration.

- Support for other IoT protocols like LwM2M, CoAP, MQTT-SN, and more through gateways.

- Connect 100M+ of concurrent MQTT clients with a single cluster.

- Process millions of messages per second with sub-millisecond latency.

- Masterless clustering for high availability and fault tolerance.

- Seamless global communication with EMQX Cluster Linking.

- SQL-based Rule Engine to process, transform, enrich, and filter in-flight data.

- Seamless data bridging and integration with 50+ cloud services and enterprise systems, including:

- Message Queues: Kafka, RabbitMQ, Pulsar, RocketMQ, etc.

- Databases: PostgreSQL, MySQL, MongoDB, Redis, ClickHouse, InfluxDB, etc.

- Cloud Services: AWS Kinesis, GCP Pub/Sub, Azure Event, Confluent Cloud, and more.

- Webhook support for easy integration with custom services.

- Drag‑and‑drop canvas to orchestrate real‑time data pipelines with zero code, using nodes for rules, integrations, and AI tasks.

- Schema Registry: Define, store, and manage data schemas to ensure consistency.

- Schema Validation: Validate incoming data against registered schemas to maintain data integrity.

- Message Transformation: Convert data between different formats and structures to facilitate seamless integration.

- Native AI processing capabilities for IoT data streams.

- Integration with popular AI services.

- Support for AI-driven decision making at the edge or in the cloud.

Robust Security

- Secure connections with TLS/SSL and WSS.

- Flexible authentication mechanisms: username/password, JWT, PSK, X.509 certificates, etc.

- Granular access control with ACLs.

- Integration with external authentication databases (LDAP, SQL, Redis).

- Comprehensive monitoring with Prometheus, Grafana, Datadog, and OpenTelemetry.

- Detailed logging and tracing capabilities.

- User-friendly Dashboard for cluster overview and management.

- Rich HTTP API for automation and third-party integration.

- Plugin architecture for extending functionality.

- Hooks for customizing behavior at various points in the message lifecycle.

- With the BSL 1.1 license (from v5.9.0), all features, including those previously exclusive to the enterprise edition, are available to all developers.

The simplest way to set up EMQX is to create a managed deployment with EMQX Cloud. You can try EMQX Cloud for free.

docker run -d --name emqx \

-p 1883:1883 -p 8083:8083 -p 8084:8084 \

-p 8883:8883 -p 18083:18083 \

emqx/emqx-enterprise:latest

Next, please follow the Install EMQX Using Docker guide for further instructions.

Please refer to the official EMQX Operator documentation for details.

If you prefer to install and manage EMQX yourself, you can download the latest version from the official site.

For more installation options, see the EMQX installation documentation

- EMQX self-hosted: docs.emqx.com/en/emqx/latest.

- EMQX Cloud: docs.emqx.com/en/cloud/latest.

Please see our contributing guide.

For more organised improvement proposals, you can send pull requests to EIP.

- Follow us on: X, YouTube.

- Ask Questions: GitHub Discussions or EMQX Community Slack.

- Report Bugs: GitHub Issues.

- Discord: EMQX Discord Server.

- EMQX Website: emqx.com

- EMQX Blog: emqx.com/en/blog

- MQTT Client Programming: Tutorials

- MQTT SDKs: Popular SDKs

- MQTT Tool: MQTTX

The master branch tracks the latest version 5.

- EMQX 5.4 and newer can be built with OTP 25 or 26

- EMQX 5.9+ can be built with OTP 27

git clone https://github.com/emqx/emqx.git

cd emqx

make

_build/emqx-enterprise/rel/emqx/bin/emqx consoleFor 4.2 or earlier versions, release has to be built from another repo.

git clone https://github.com/emqx/emqx-rel.git

cd emqx-rel

make

_build/emqx/rel/emqx/bin/emqx consoleBelow is the matrix supported rolling upgrade paths since 5.0.

- Version numbers end with

?e.g.6.0?are future releases. - ✅: Supported, or planed to support.

⚠️ : May experience issues, require manual resolution.- ❌: Not supported.

- 🔄: Tentative support for future versions.

See release notes for detailed information.

| From\To | 5.1 | 5.2 | 5.3 | 5.4 | 5.5 | 5.6 | 5.7 | 5.8 | 5.9 | 5.10? | 6.0? |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 5.0 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌[2] | ❌[2] | |

| 5.1 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌[2] | ❌[2] |

| 5.2 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌[2] | ❌[2] | |

| 5.3 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌[2] | ❌[2] | ||

| 5.4 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | 🔄 | ||||

| 5.5 | ✅ | ✅ | ✅ | ✅ | ✅ | 🔄 | |||||

| 5.6 | ✅ | ✅ | ✅ | ✅ | ✅ | 🔄 | |||||

| 5.7 | ✅ | ✅ | ✅ | ✅ | 🔄 | ||||||

| 5.8 | ✅ | 🔄 | |||||||||

| 5.9 | ✅ | ✅ | ✅ | ||||||||

| 5.10? | ✅ | ✅ | |||||||||

| 6.0? | ✅ |

- [1] Old limiter configs should be deleted from the config files (

etc/emqx.confanddata/configs/cluster-override.conf) before upgrade. - [2] Pre-5.4 routing table will be deleted. Upgrade to 5.9 first, then perform a full-cluster restart (not rolling) before upgrade to 5.10 or later.

- [3] Opentelemetry headers configuration support was introduced in 5.8.7. This release date is later than 5.9.0 and 5.10.0. 5.8 versions running 5.8.7 or later require a rolling upgrade to version 5.9.1 or 5.10.1. Alternatively, remove the header configuration for OpenTelemetry integration during the upgrade.

Effective from version 5.9.0, EMQX has transitioned from Apache 2.0 to the Business Source License (BSL) 1.1.

Starting with EMQX v5.9.0, due to the license change and the unification of all features, deploying an EMQX cluster (more than 1 node) requires a license file to be loaded.

Please refer to the following resources for details on license acquisition, application, and the specifics of the BSL 1.1.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for emqx

Similar Open Source Tools

emqx

EMQX is a highly scalable and reliable MQTT platform designed for IoT data infrastructure. It supports various protocols like MQTT 5.0, 3.1.1, and 3.1, as well as MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX allows connecting millions of IoT devices, processing messages in real time, and integrating with backend data systems. It is suitable for applications in AI, IoT, IIoT, connected vehicles, smart cities, and more. The tool offers features like massive scalability, powerful rule engine, flow designer, AI processing, robust security, observability, management, extensibility, and a unified experience with the Business Source License (BSL) 1.1.

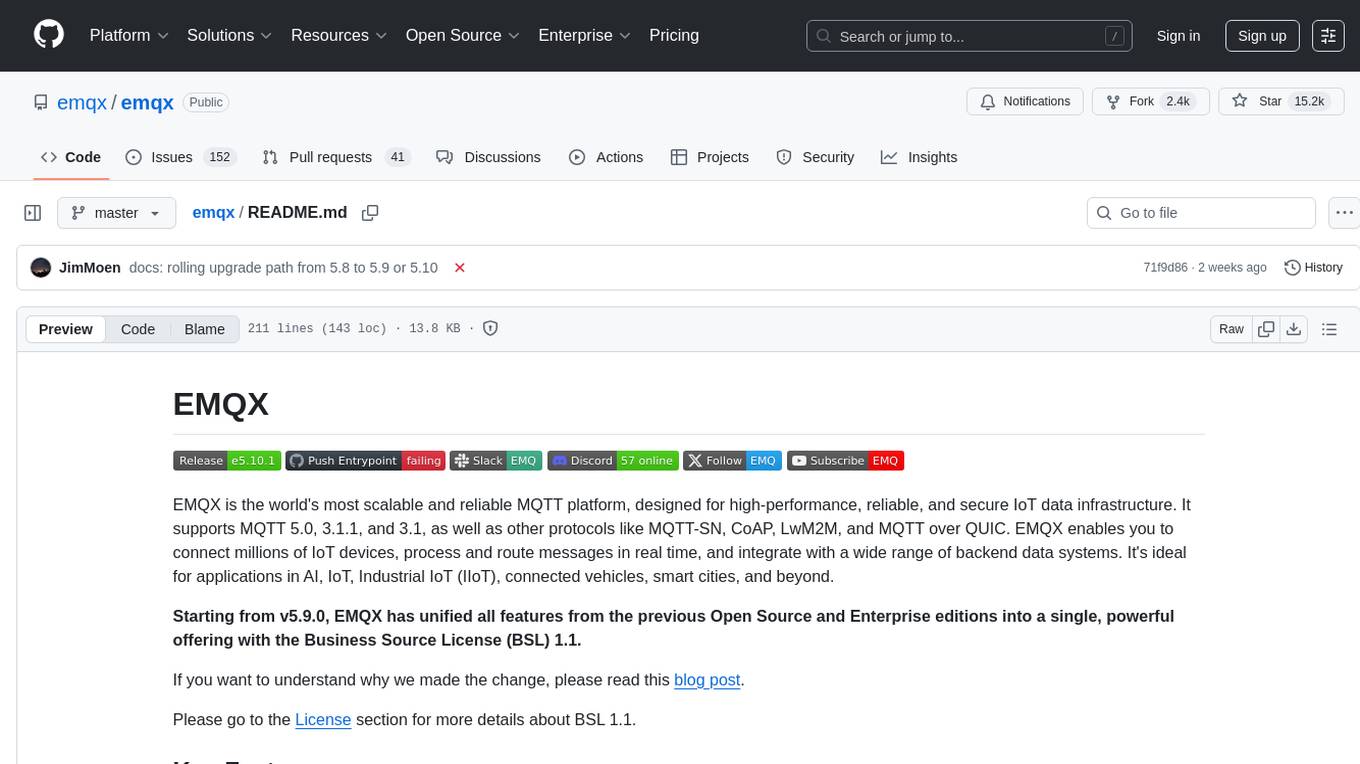

huf

HUF is an AI-native engine designed to centralize intelligence and execution into a single engine, enabling AI to operate inside real business systems. It offers multi-provider AI connectivity, intelligent tools, knowledge grounding, event-driven execution, visual workflow builder, full auditability, and cost control. HUF can be used as AI infrastructure for products, internal intelligence platform, automation & orchestration engine, embedded AI layer for SaaS, and enterprise AI control plane. Core capabilities include agent system, knowledge management, trigger system, visual flow builder, and observability. The tech stack includes Frappe Framework, Python 3.10+, LiteLLM, SQLite FTS5, React 18, TypeScript, Tailwind CSS, and MariaDB.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

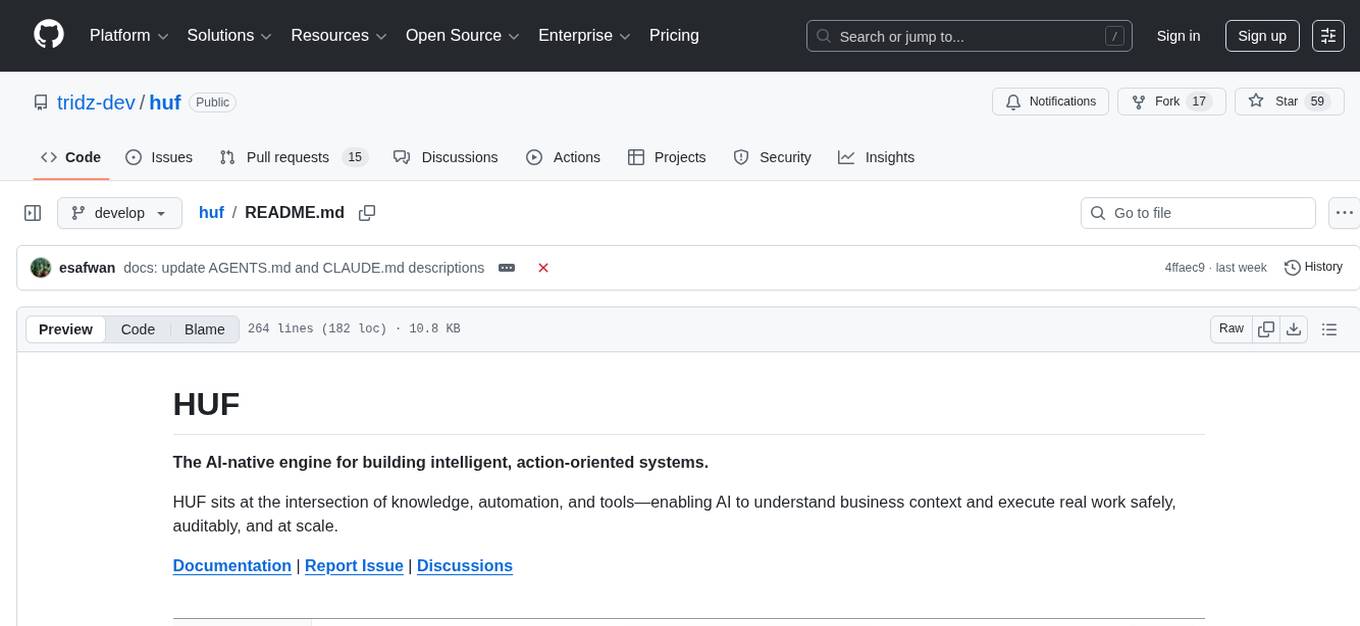

foundationallm

FoundationaLLM is a platform designed for deploying, scaling, securing, and governing generative AI in enterprises. It allows users to create AI agents grounded in enterprise data, integrate REST APIs, experiment with various large language models, centrally manage AI agents and their assets, deploy scalable vectorization data pipelines, enable non-developer users to create their own AI agents, control access with role-based access controls, and harness capabilities from Azure AI and Azure OpenAI. The platform simplifies integration with enterprise data sources, provides fine-grain security controls, scalability, extensibility, and addresses the challenges of delivering enterprise copilots or AI agents.

runhouse

Runhouse is a tool that allows you to build, run, and deploy production-quality AI apps and workflows on your own compute. It provides simple, powerful APIs for the full lifecycle of AI development, from research to evaluation to production to updates to scaling to management, and across any infra. By automatically packaging your apps into scalable, secure, and observable services, Runhouse can also turn otherwise redundant AI activities into common reusable components across your team or company, which improves cost, velocity, and reproducibility.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

TuyaOpen

TuyaOpen is an open source AI+IoT development framework supporting cross-chip platforms and operating systems. It provides core functionalities for AI+IoT development, including pairing, activation, control, and upgrading. The SDK offers robust security and compliance capabilities, meeting data compliance requirements globally. TuyaOpen enables the development of AI+IoT products that can leverage the Tuya APP ecosystem and cloud services. It continues to expand with more cloud platform integration features and capabilities like voice, video, and facial recognition.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

redb-open

reDB Node is a distributed, policy-driven data mesh platform that enables True Data Portability across various databases, warehouses, clouds, and environments. It unifies data access, data mobility, and schema transformation into one open platform. Built for developers, architects, and AI systems, reDB addresses the challenges of fragmented data ecosystems in modern enterprises by providing multi-database interoperability, automated schema versioning, zero-downtime migration, real-time developer data environments with obfuscation, quantum-resistant encryption, and policy-based access control. The project aims to build a foundation for future-proof data infrastructure.

holmesgpt

HolmesGPT is an open-source DevOps assistant powered by OpenAI or any tool-calling LLM of your choice. It helps in troubleshooting Kubernetes, incident response, ticket management, automated investigation, and runbook automation in plain English. The tool connects to existing observability data, is compliance-friendly, provides transparent results, supports extensible data sources, runbook automation, and integrates with existing workflows. Users can install HolmesGPT using Brew, prebuilt Docker container, Python Poetry, or Docker. The tool requires an API key for functioning and supports OpenAI, Azure AI, and self-hosted LLMs.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

llm-on-openshift

This repository provides resources, demos, and recipes for working with Large Language Models (LLMs) on OpenShift using OpenShift AI or Open Data Hub. It includes instructions for deploying inference servers for LLMs, such as vLLM, Hugging Face TGI, Caikit-TGIS-Serving, and Ollama. Additionally, it offers guidance on deploying serving runtimes, such as vLLM Serving Runtime and Hugging Face Text Generation Inference, in the Single-Model Serving stack of Open Data Hub or OpenShift AI. The repository also covers vector databases that can be used as a Vector Store for Retrieval Augmented Generation (RAG) applications, including Milvus, PostgreSQL+pgvector, and Redis. Furthermore, it provides examples of inference and application usage, such as Caikit, Langchain, Langflow, and UI examples.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

For similar tasks

OmAgent

OmAgent is an open-source agent framework designed to streamline the development of on-device multimodal agents. It enables agents to empower various hardware devices, integrates speed-optimized SOTA multimodal models, provides SOTA multimodal agent algorithms, and focuses on optimizing the end-to-end computing pipeline for real-time user interaction experience. Key features include easy connection to diverse devices, scalability, flexibility, and workflow orchestration. The architecture emphasizes graph-based workflow orchestration, native multimodality, and device-centricity, allowing developers to create bespoke intelligent agent programs.

emqx

EMQX is a highly scalable and reliable MQTT platform designed for IoT data infrastructure. It supports various protocols like MQTT 5.0, 3.1.1, and 3.1, as well as MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX allows connecting millions of IoT devices, processing messages in real time, and integrating with backend data systems. It is suitable for applications in AI, IoT, IIoT, connected vehicles, smart cities, and more. The tool offers features like massive scalability, powerful rule engine, flow designer, AI processing, robust security, observability, management, extensibility, and a unified experience with the Business Source License (BSL) 1.1.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide

Juggle

Juggle is a low-code tool for interface orchestration, which can quickly orchestrate simple APIs into a complex interface. The orchestrated interface can be directly used by the front end, greatly improving development efficiency and reducing development costs.

ai-tutor-rag-system

The AI Tutor RAG System repository contains Jupyter notebooks supporting the RAG course, focusing on enhancing AI models with retrieval-based methods. It covers foundational and advanced concepts in retrieval-augmented generation, including data retrieval techniques, model integration with retrieval systems, and practical applications of RAG in real-world scenarios.

rai

This repository contains core sources related to Robotics & AI. It serves as a submodule in integrated projects, providing a minimal Ubuntu-specific build system and development tests. The code originated around 2004 in Edinburgh and has grown over the years to encompass various functionalities for Robotics, ML, and AI. Users are advised to explore example projects using this bare code for a better understanding of its capabilities.

solon

Solon is a Java enterprise application development framework that is restrained, efficient, and open. It offers better cost performance for computing resources with 700% higher concurrency and 50% memory savings. It enables faster development productivity with less code and easy startup, 10 times faster than traditional methods. Solon provides a better production and deployment experience by packing applications 90% smaller. It supports a greater range of compatibility with non-Java-EE architecture and compatibility with Java 8 to Java 24, including GraalVM native image support. Solon is built from scratch with flexible interface specifications and an open ecosystem.

llm-zoomcamp

LLM Zoomcamp is a free online course focusing on real-life applications of Large Language Models (LLMs). Over 10 weeks, participants will learn to build an AI bot capable of answering questions based on a knowledge base. The course covers topics such as LLMs, RAG, open-source LLMs, vector databases, orchestration, monitoring, and advanced RAG systems. Pre-requisites include comfort with programming, Python, and the command line, with no prior exposure to AI or ML required. The course features a pre-course workshop and is led by instructors Alexey Grigorev and Magdalena Kuhn, with support from sponsors and partners.

For similar jobs

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, object detection, etc. Images for sample applications and models are available in `quay.io`, and bootable containers for AI training on Linux OS are enabled.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.