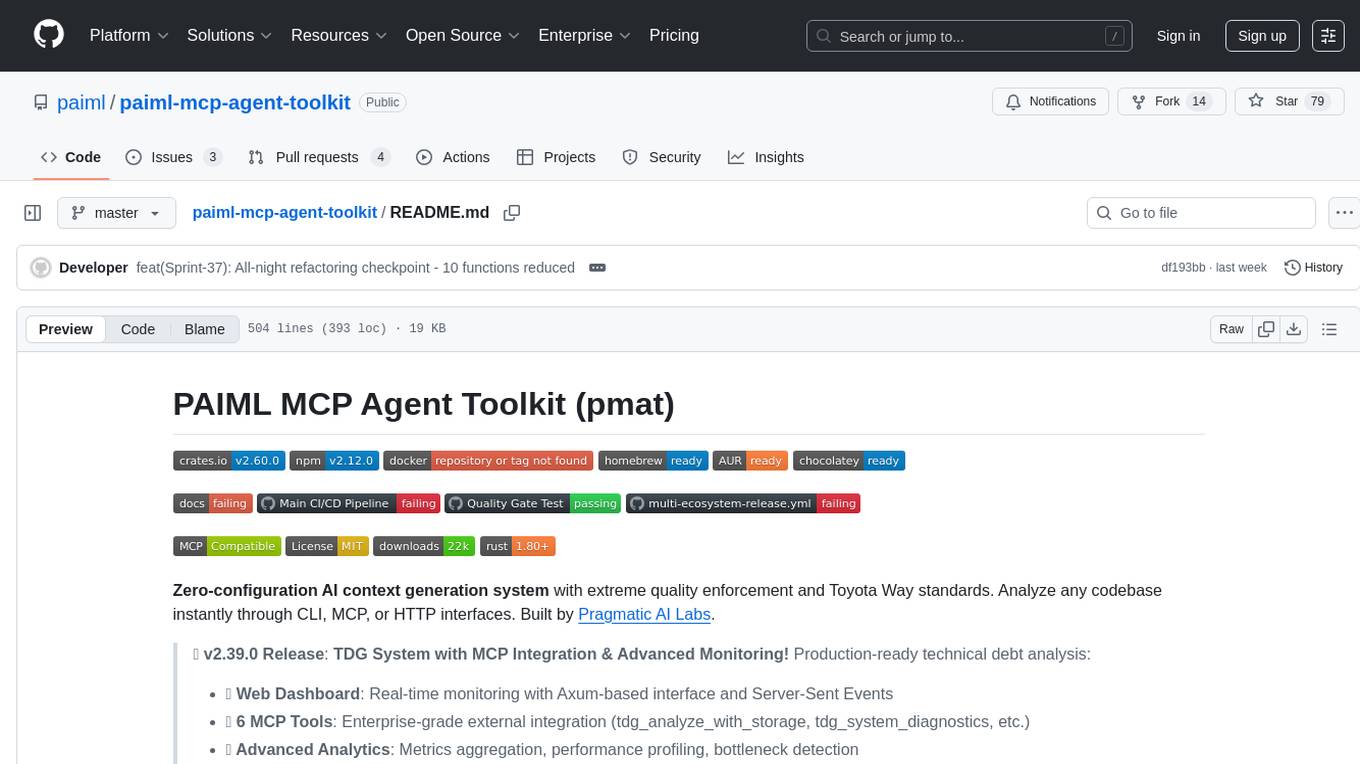

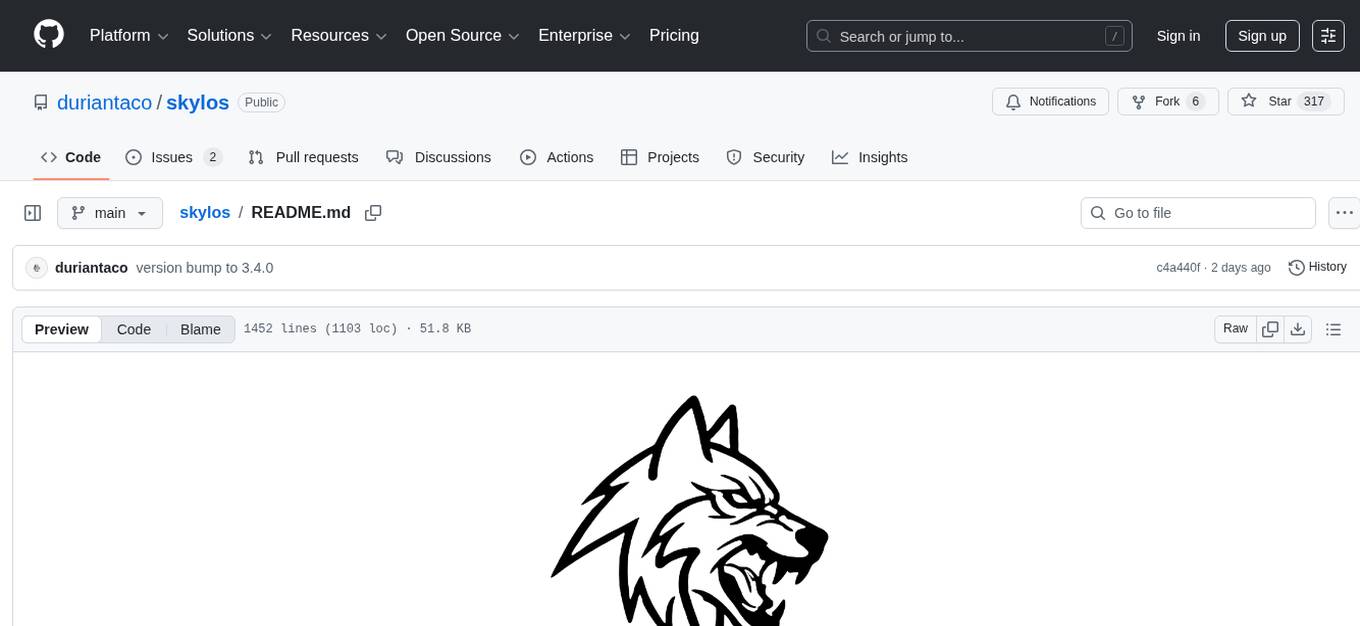

paiml-mcp-agent-toolkit

Pragmatic AI Labs MCP Agent Toolkit - An MCP Server designed to make code with agents more deterministic

Stars: 132

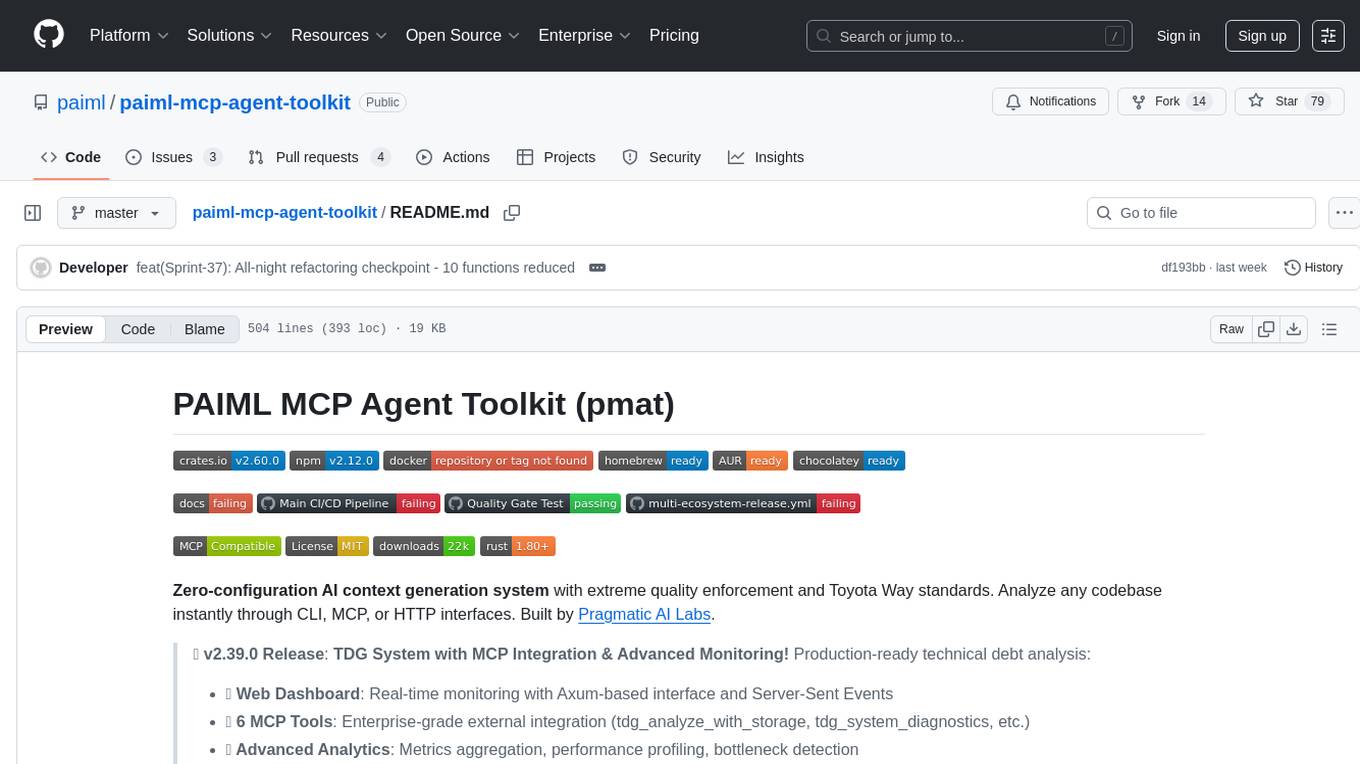

PAIML MCP Agent Toolkit (PMAT) is a zero-configuration AI context generation system with extreme quality enforcement and Toyota Way standards. It allows users to analyze any codebase instantly through CLI, MCP, or HTTP interfaces. The toolkit provides features such as technical debt analysis, advanced monitoring, metrics aggregation, performance profiling, bottleneck detection, alert system, multi-format export, storage flexibility, and more. It also offers AI-powered intelligence for smart recommendations, polyglot analysis, repository showcase, and integration points. PMAT enforces quality standards like complexity ≤20, zero SATD comments, test coverage >80%, no lint warnings, and synchronized documentation with commits. The toolkit follows Toyota Way development principles for iterative improvement, direct AST traversal, automated quality gates, and zero SATD policy.

README:

Zero-configuration AI context generation for any codebase


PMAT (Pragmatic Multi-language Agent Toolkit) provides everything needed to analyze code quality and generate AI-ready context:

- Context Generation - Deep analysis for Claude, GPT, and other LLMs

- Technical Debt Grading - A+ through F scoring with 6 orthogonal metrics

- Mutation Testing - Test suite quality validation (85%+ kill rate)

- Repository Scoring - Quantitative health assessment (0-211 scale)

- Git History RAG - Semantic search across commit history with RRF fusion

- Semantic Search - Natural language code discovery

- Compliance Governance - 30+ checks across code quality, best practices, and reproducibility

- Autonomous Kaizen - Toyota Way continuous improvement with auto-fix and commit

- MCP Integration - 19 tools for Claude Code, Cline, and AI agents

- Quality Gates - Pre-commit hooks, CI/CD integration, .pmat-gates.toml config

- 18+ Languages - Rust, TypeScript, Python, Go, Java, C/C++, Lua, and more
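The feature list mentions Git History RAG "with RRF fusion". Reciprocal Rank Fusion (RRF) merges several ranked result lists by giving each item the score Σ 1/(k + rank) over the lists it appears in. The Rust sketch below fuses two hypothetical rankings (code hits and commit hits) using the commonly cited constant k = 60; PMAT's actual fusion code, inputs and constant are not documented here.

```rust
use std::collections::HashMap;

/// Reciprocal Rank Fusion: score(d) = sum over lists of 1 / (k + rank),
/// with 1-based ranks. A list that does not contain d contributes nothing.
fn rrf_fuse(lists: &[Vec<&str>], k: f64) -> Vec<(String, f64)> {
    let mut scores: HashMap<String, f64> = HashMap::new();
    for list in lists {
        for (i, doc) in list.iter().enumerate() {
            let rank = (i + 1) as f64;
            *scores.entry(doc.to_string()).or_insert(0.0) += 1.0 / (k + rank);
        }
    }
    let mut fused: Vec<(String, f64)> = scores.into_iter().collect();
    // Highest fused score first; ties broken by name so output is deterministic.
    fused.sort_by(|a, b| b.1.partial_cmp(&a.1).unwrap().then_with(|| a.0.cmp(&b.0)));
    fused
}

fn main() {
    // Hypothetical rankings for the query "fix memory leak".
    let code_hits = vec!["src/cache.rs", "src/pool.rs", "src/io.rs"];
    let commit_hits = vec!["src/pool.rs", "src/cache.rs", "src/alloc.rs"];
    for (doc, score) in rrf_fuse(&[code_hits, commit_hits], 60.0) {
        println!("{doc}: {score:.5}");
    }
}
```

Because RRF only uses ranks, it needs no score normalisation between the two sources, which is why it is a popular choice for hybrid search.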

Part of the PAIML Stack, following Toyota Way quality principles (Jidoka, Genchi Genbutsu, Kaizen).

Every result includes TDG grade, Big-O complexity, git churn, code clones, pattern diversity, fault annotations, call graph, and syntax-highlighted source.

```bash
# Install from crates.io
cargo install pmat

# Or from source (latest)
git clone https://github.com/paiml/paiml-mcp-agent-toolkit
cd paiml-mcp-agent-toolkit && cargo install --path .
```

```bash
# Generate AI-ready context
pmat context --output context.md --format llm-optimized

# Analyze code complexity
pmat analyze complexity

# Grade technical debt (A+ through F)
pmat analyze tdg

# Score repository health
pmat repo-score .

# Run mutation testing
pmat mutate --target src/

# Start MCP server for Claude Code, Cline, etc.
pmat mcp
```

Generate comprehensive context for AI assistants:

```bash
pmat context # Basic analysis
pmat context --format llm-optimized # AI-optimized output
pmat context --include-tests # Include test files
```

Six orthogonal metrics for accurate quality assessment:

```bash
pmat analyze tdg # Project-wide grade
pmat analyze tdg --include-components # Per-component breakdown
pmat tdg baseline create # Create quality baseline
pmat tdg check-regression # Detect quality degradation
```

Grading Scale:

- A+/A: Excellent quality, minimal debt

- B+/B: Good quality, manageable debt

- C+/C: Needs improvement

- D/F: Significant technical debt
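The grading scale above can be pictured as a mapping from a numeric quality score to a letter grade. The README does not publish PMAT's cut-offs, so the 0-100 score and every threshold in this Rust sketch (95 for A+, 90 for A, and so on) are hypothetical placeholders used only to illustrate the idea.

```rust
/// Letter grades from the README's scale, best first.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Grade { APlus, A, BPlus, B, CPlus, C, D, F }

/// Hypothetical cut-offs on a 0-100 score; PMAT's real thresholds may differ.
fn grade_for(score: f64) -> Grade {
    match score {
        s if s >= 95.0 => Grade::APlus,
        s if s >= 90.0 => Grade::A,
        s if s >= 85.0 => Grade::BPlus,
        s if s >= 80.0 => Grade::B,
        s if s >= 75.0 => Grade::CPlus,
        s if s >= 70.0 => Grade::C,
        s if s >= 60.0 => Grade::D,
        _ => Grade::F,
    }
}

/// Interpretation bands taken from the grading scale in the README.
fn interpretation(g: Grade) -> &'static str {
    match g {
        Grade::APlus | Grade::A => "Excellent quality, minimal debt",
        Grade::BPlus | Grade::B => "Good quality, manageable debt",
        Grade::CPlus | Grade::C => "Needs improvement",
        Grade::D | Grade::F => "Significant technical debt",
    }
}

fn main() {
    for score in [97.0, 88.5, 72.0, 41.0] {
        let g = grade_for(score);
        println!("{score:>5.1} -> {g:?}: {}", interpretation(g));
    }
}
```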

Validate test suite effectiveness:

```bash
pmat mutate --target src/lib.rs # Single file
pmat mutate --target src/ --threshold 85 # Quality gate
pmat mutate --failures-only # CI optimization
```

Supported Languages: Rust, Python, TypeScript, JavaScript, Go, C++, Lua, Java, Kotlin, Ruby, Swift, C, SQL, Scala, YAML, Markdown + MLOps model formats (GGUF, SafeTensors, APR)

Evidence-based quality metrics (0-211 scale):

```bash
pmat rust-project-score # Fast mode (~3 min)
pmat rust-project-score --full # Comprehensive (~10-15 min)
pmat repo-score . --deep # Full git history
```

Pre-configured AI prompts enforcing EXTREME TDD:

```bash
pmat prompt --list # Available prompts
pmat prompt code-coverage # 85%+ coverage enforcement
pmat prompt debug # Five Whys analysis
pmat prompt quality-enforcement # All quality gates
```

Search git history by intent using TF-IDF semantic embeddings:

```bash
# Fuse git history into code search
pmat query "fix memory leak" -G

# Search with churn, clones, entropy, faults
pmat query "error handling" --churn --duplicates --entropy --faults
```

```bash
# Run the example
cargo run --example git_history_demo
```

Automatic quality enforcement:

```bash
pmat hooks install # Install pre-commit hooks
pmat hooks install --tdg-enforcement # With TDG quality gates
pmat hooks status # Check hook status
```

30+ automated checks across code quality, best practices, and governance:

```bash
pmat comply check # Run all compliance checks
pmat comply check --strict # Exit non-zero on failure
pmat comply check --format json # Machine-readable output
pmat comply migrate # Update to latest version
```

Key Checks:

- CB-200: TDG Grade Gate — blocks on non-A functions (auto-rebuilds stale index)

- CB-304: Dead code percentage enforcement

- CB-400: Shell/Makefile quality via bashrs

- CB-500: Rust best practices (30+ patterns)

- CB-600: Lua best practices

- CB-900: Markdown link validation

- CB-1000: MLOps model quality
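CB-200 is described as blocking on non-A functions, and .pmat-gates.toml accepts a min_grade and an exclude list. The Rust sketch below shows only the gating logic. The FunctionGrade type, the prefix-based exclusion (a simplification of glob patterns such as "examples/**") and treating A+ as passing an "A" minimum are assumptions, not PMAT's implementation.

```rust
/// Letter grades ordered best-first, so `a > b` means "a is worse than b".
#[allow(dead_code)]
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Grade { APlus, A, BPlus, B, CPlus, C, D, F }

/// Hypothetical per-function result from an analysis pass.
struct FunctionGrade {
    path: &'static str,
    name: &'static str,
    grade: Grade,
}

/// Functions that fail the gate. Paths starting with an exclude prefix are skipped.
fn gate_violations<'a>(
    results: &'a [FunctionGrade],
    min_grade: Grade,
    exclude_prefixes: &[&str],
) -> Vec<&'a FunctionGrade> {
    results
        .iter()
        .filter(|f| !exclude_prefixes.iter().any(|p| f.path.starts_with(*p)))
        .filter(|f| f.grade > min_grade) // strictly worse than the minimum
        .collect()
}

fn main() {
    let results = [
        FunctionGrade { path: "src/lib.rs", name: "parse", grade: Grade::APlus },
        FunctionGrade { path: "src/cli.rs", name: "dispatch", grade: Grade::BPlus },
        FunctionGrade { path: "examples/demo.rs", name: "main", grade: Grade::D },
    ];
    let violations = gate_violations(&results, Grade::A, &["examples/", "scripts/"]);
    for v in &violations {
        println!("BLOCK: {}::{} graded {:?}", v.path, v.name, v.grade);
    }
    if violations.is_empty() {
        println!("PASS");
    } else {
        println!("FAIL: {} violation(s)", violations.len());
    }
}
```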

Configure via .pmat-gates.toml:

```toml
[tdg]
min_grade = "A"
exclude = ["examples/**", "scripts/**"]
```

Search documentation files (Markdown, text, YAML) alongside code:

```bash
pmat query "authentication" --docs # Code + docs results
pmat query "deployment" --docs-only # Only documentation
pmat query "API endpoints" --no-docs # Exclude docs (default)
```

Toyota Way continuous improvement (scan, auto-fix, commit):

```bash
pmat kaizen --dry-run # Scan only (no changes)
pmat kaizen # Apply safe auto-fixes
pmat kaizen --commit --push # Fix, commit, and push
pmat kaizen --format json -o report.json # CI/CD integration

# Cross-stack mode: scan all batuta stack crates in one invocation
pmat kaizen --cross-stack --dry-run # Scan all crates
pmat kaizen --cross-stack --commit # Fix and commit per-crate
pmat kaizen --cross-stack -f json # Grouped JSON report
```

Extract function boundaries with metadata:

```bash
pmat extract src/lib.rs # Extract functions from file
pmat extract --list src/ # List all functions with imports and visibility
```

```bash
# For Claude Code
pmat context --output context.md --format llm-optimized

# With semantic search
pmat embed sync ./src
pmat semantic search "error handling patterns"
```

```yaml
# .github/workflows/quality.yml
name: Quality Gates
on: [push, pull_request]
jobs:
  quality:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: cargo install pmat
      - run: pmat analyze tdg --fail-on-violation --min-grade B
      - run: pmat mutate --target src/ --threshold 80
```

```bash
# 1. Create baseline
pmat tdg baseline create --output .pmat/baseline.json

# 2. Check for regressions
pmat tdg check-regression \
  --baseline .pmat/baseline.json \
  --max-score-drop 5.0 \
  --fail-on-regression
```

```
pmat/
├── src/
│   ├── cli/              Command handlers and dispatchers
│   ├── services/         Analysis engines (TDG, SATD, complexity, agent context)
│   ├── mcp_server/       MCP protocol server
│   ├── mcp_pmcp/         PMCP protocol integration
│   └── models/           Configuration and data models
├── examples/             75+ runnable examples
└── docs/
    └── specifications/   Technical specs
```
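The baseline workflow earlier (pmat tdg check-regression with --max-score-drop 5.0 and --fail-on-regression) fails when quality falls too far below a saved baseline. This Rust sketch illustrates that comparison with hypothetical per-file scores held in HashMaps. The real .pmat/baseline.json format and scoring are not described in this README, and ignoring files that are absent from the baseline is an assumption.

```rust
use std::collections::HashMap;

/// A file whose score fell by more than the allowed amount.
#[derive(Debug, PartialEq)]
struct Regression {
    file: String,
    baseline: f64,
    current: f64,
}

/// Flag files whose score dropped by more than `max_drop` since the baseline.
/// Files missing from the baseline (new files) are not compared.
fn find_regressions(
    baseline: &HashMap<String, f64>,
    current: &HashMap<String, f64>,
    max_drop: f64,
) -> Vec<Regression> {
    let mut out: Vec<Regression> = current
        .iter()
        .filter_map(|(file, &now)| {
            let &before = baseline.get(file)?;
            (before - now > max_drop).then(|| Regression {
                file: file.clone(),
                baseline: before,
                current: now,
            })
        })
        .collect();
    out.sort_by(|a, b| a.file.cmp(&b.file)); // stable report order
    out
}

fn main() {
    let baseline = HashMap::from([
        ("src/lib.rs".to_string(), 92.0),
        ("src/cli.rs".to_string(), 88.0),
    ]);
    let current = HashMap::from([
        ("src/lib.rs".to_string(), 90.5), // drop of 1.5: within budget
        ("src/cli.rs".to_string(), 80.0), // drop of 8.0: regression
        ("src/new.rs".to_string(), 70.0), // new file: not compared
    ]);
    let regressions = find_regressions(&baseline, &current, 5.0);
    for r in &regressions {
        println!("REGRESSION {}: {:.1} -> {:.1}", r.file, r.baseline, r.current);
    }
    println!("{}", if regressions.is_empty() { "PASS" } else { "FAIL" });
}
```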

| Metric | Value |
|---|---|
| Tests | 20,700+ passing |
| Coverage | 99.66% |
| Mutation Score | >80% |
| Languages | 22+ supported (incl. SQL, Scala, YAML, Markdown, MLOps models) |
| MCP Tools | 19 available |

Per Popper's demarcation criterion, all claims are measurable and testable:

| Commitment | Threshold | Verification Method |
|---|---|---|
| Context Generation | < 5 seconds for 10K LOC project | time pmat context on test corpus |
| Memory Usage | < 500 MB for 100K LOC analysis | Measured via heaptrack in CI |
| Test Coverage | ≥ 85% line coverage | cargo llvm-cov (CI enforced) |
| Mutation Score | ≥ 80% killed mutants | pmat mutate --threshold 80 |
| Build Time | < 3 minutes incremental | cargo build --timings |
| CI Pipeline | < 15 minutes total | GitHub Actions workflow timing |
| Binary Size | < 50 MB release binary | ls -lh target/release/pmat |
| Language Parsers | All 22+ languages parse without panic | Fuzz testing in CI |

How to Verify:

```bash
# Run self-assessment with Popper Falsifiability Score
pmat popper-score --verbose

# Individual commitment verification
cargo llvm-cov --html # Coverage ≥85%
pmat mutate --threshold 80 # Mutation ≥80%
cargo build --timings # Build time <3min
```

Failure = Regression: Any commitment violation blocks CI merge.
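The "Failure = Regression" rule amounts to checking each measured value against its commitment threshold and blocking the merge if any check fails. This Rust sketch uses three thresholds from the table (line coverage ≥ 85%, mutation score ≥ 80%, incremental build < 3 minutes). The measured values are invented, and the Commitment type is hypothetical rather than how pmat popper-score works internally.

```rust
/// How a measured value is compared with its threshold.
#[derive(Clone, Copy)]
enum Bound {
    AtLeast(f64),  // e.g. coverage >= 85.0
    LessThan(f64), // e.g. build time < 180.0 seconds
}

/// Hypothetical record of one falsifiable commitment and its measurement.
struct Commitment {
    name: &'static str,
    bound: Bound,
    measured: f64,
}

impl Commitment {
    fn holds(&self) -> bool {
        match self.bound {
            Bound::AtLeast(t) => self.measured >= t,
            Bound::LessThan(t) => self.measured < t,
        }
    }
}

/// Names of violated commitments; any entry means the merge should be blocked.
fn violations(commitments: &[Commitment]) -> Vec<&'static str> {
    commitments.iter().filter(|c| !c.holds()).map(|c| c.name).collect()
}

fn main() {
    let checks = [
        Commitment { name: "line coverage (%)", bound: Bound::AtLeast(85.0), measured: 91.2 },
        Commitment { name: "mutation score (%)", bound: Bound::AtLeast(80.0), measured: 76.0 },
        Commitment { name: "incremental build (s)", bound: Bound::LessThan(180.0), measured: 142.0 },
    ];
    let failed = violations(&checks);
    if failed.is_empty() {
        println!("all commitments hold");
    } else {
        println!("blocking merge, violated: {failed:?}");
    }
}
```

Keeping the comparison direction in the data (AtLeast vs LessThan) lets one loop handle "higher is better" and "lower is better" commitments alike.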

All benchmarks use Criterion.rs with proper statistical methodology:

| Operation | Mean | 95% CI | Std Dev | Sample Size |
|---|---|---|---|---|
| Context (1K LOC) | 127ms | [124, 130] | ±12.3ms | n=1000 runs |
| Context (10K LOC) | 1.84s | [1.79, 1.90] | ±156ms | n=500 runs |
| TDG Scoring | 156ms | [148, 164] | ±18.2ms | n=500 runs |
| Complexity Analysis | 23ms | [22, 24] | ±3.1ms | n=1000 runs |
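The Mean, Std Dev and 95% CI columns summarise repeated timing samples. Criterion.rs derives its intervals by bootstrap resampling; as a simpler illustration, this Rust sketch uses the normal approximation mean ± 1.96·s/√n with the sample standard deviation s. The timings are invented, and the sketch is not meant to reproduce the table's figures.

```rust
/// Summary statistics for a set of timing samples (milliseconds).
struct Summary {
    mean: f64,
    std_dev: f64,     // sample standard deviation (n - 1 denominator)
    ci95: (f64, f64), // normal-approximation 95% CI for the mean
}

fn summarize(samples: &[f64]) -> Option<Summary> {
    let n = samples.len();
    if n < 2 {
        return None; // a sample standard deviation needs at least two points
    }
    let nf = n as f64;
    let mean = samples.iter().sum::<f64>() / nf;
    let var = samples.iter().map(|x| (x - mean).powi(2)).sum::<f64>() / (nf - 1.0);
    let std_dev = var.sqrt();
    let half_width = 1.96 * std_dev / nf.sqrt();
    Some(Summary { mean, std_dev, ci95: (mean - half_width, mean + half_width) })
}

fn main() {
    // Invented timings for illustration only.
    let samples = [125.0, 131.0, 118.0, 140.0, 122.0, 129.0, 133.0, 121.0];
    if let Some(s) = summarize(&samples) {
        println!(
            "mean {:.1} ms, std dev {:.1} ms, 95% CI [{:.1}, {:.1}]",
            s.mean, s.std_dev, s.ci95.0, s.ci95.1
        );
    }
}
```

The interval narrows with √n, which is why large sample sizes such as n = 500 or n = 1000 give tight bounds on the mean.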

Comparison Baselines (vs. Alternatives):

| Metric | PMAT | ctags | tree-sitter | Effect Size |
|---|---|---|---|---|
| 10K LOC parsing | 1.84s | 0.3s | 0.8s | d=0.72 (medium) |
| Memory (10K LOC) | 287MB | 45MB | 120MB | - |
| Semantic depth | Full | Syntax only | AST only | - |

See docs/BENCHMARKS.md for complete statistical analysis.
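The Effect Size column reports Cohen's d: the difference between two group means divided by a pooled standard deviation, with about 0.2, 0.5 and 0.8 conventionally read as small, medium and large. A Rust sketch of the pooled-SD form follows; the README does not say which variant or which samples produced d = 0.72, so the inputs below are invented.

```rust
fn mean(xs: &[f64]) -> f64 {
    xs.iter().sum::<f64>() / xs.len() as f64
}

/// Sample variance with an (n - 1) denominator.
fn sample_var(xs: &[f64]) -> f64 {
    let m = mean(xs);
    xs.iter().map(|x| (x - m).powi(2)).sum::<f64>() / (xs.len() as f64 - 1.0)
}

/// Cohen's d using the pooled standard deviation. Needs at least two samples per group.
fn cohens_d(a: &[f64], b: &[f64]) -> f64 {
    let (na, nb) = (a.len() as f64, b.len() as f64);
    let pooled_var = ((na - 1.0) * sample_var(a) + (nb - 1.0) * sample_var(b)) / (na + nb - 2.0);
    (mean(a) - mean(b)) / pooled_var.sqrt()
}

/// Conventional rule-of-thumb labels for |d|.
fn label(d: f64) -> &'static str {
    match d.abs() {
        x if x < 0.2 => "negligible",
        x if x < 0.5 => "small",
        x if x < 0.8 => "medium",
        _ => "large",
    }
}

fn main() {
    // Invented timings (seconds) for two tools on the same input.
    let tool_a = [1.9, 1.8, 2.0, 1.7, 1.85];
    let tool_b = [1.6, 1.75, 1.5, 1.8, 1.65];
    let d = cohens_d(&tool_a, &tool_b);
    println!("d = {d:.2} ({})", label(d));
}
```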

PMAT uses ML for semantic search and embeddings. All ML operations are reproducible:

Random Seed Management:

- Embedding generation uses fixed seed (SEED=42) for deterministic outputs

- Clustering operations use fixed seed (SEED=12345)

- Seeds documented in docs/ml/REPRODUCIBILITY.md
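Fixed seeds make stochastic steps repeatable: the same seed always produces the same pseudo-random sequence, so any embedding or clustering step that draws from it makes identical choices on every run. The Rust sketch below uses a tiny xorshift64 generator purely for illustration; PMAT's actual RNG is not named in this README, and only the seed values 42 and 12345 come from the text. The first_centres helper is hypothetical.

```rust
/// Minimal xorshift64 PRNG (Marsaglia's 13/7/17 variant). Not cryptographically secure.
struct XorShift64 {
    state: u64,
}

impl XorShift64 {
    fn new(seed: u64) -> Self {
        // An all-zero state would stay zero forever, so substitute a non-zero constant.
        Self { state: if seed == 0 { 0x9E37_79B9_7F4A_7C15 } else { seed } }
    }

    fn next_u64(&mut self) -> u64 {
        let mut x = self.state;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.state = x;
        x
    }

    /// Index in 0..n (with slight modulo bias), e.g. to pick initial cluster centres.
    fn next_index(&mut self, n: usize) -> usize {
        (self.next_u64() % n as u64) as usize
    }
}

/// Hypothetical helper: choose k starting points out of `points` with a seeded RNG.
fn first_centres(seed: u64, points: usize, k: usize) -> Vec<usize> {
    let mut rng = XorShift64::new(seed);
    (0..k).map(|_| rng.next_index(points)).collect()
}

fn main() {
    const EMBEDDING_SEED: u64 = 42;
    const CLUSTERING_SEED: u64 = 12345;
    let run1 = first_centres(CLUSTERING_SEED, 1000, 5);
    let run2 = first_centres(CLUSTERING_SEED, 1000, 5);
    assert_eq!(run1, run2); // same seed, same choices
    println!("centres: {run1:?}");
    let mut rng = XorShift64::new(EMBEDDING_SEED);
    println!("first draw with seed 42: {}", rng.next_u64());
}
```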

Model Artifacts:

- Pre-trained models from HuggingFace (all-MiniLM-L6-v2)

- Model versions pinned in Cargo.toml

- Hash verification on download

PMAT does not train models but uses these data sources for evaluation:

| Dataset | Source | Purpose | Size |
|---|---|---|---|
| CodeSearchNet | GitHub/Microsoft | Semantic search benchmarks | 2M functions |
| PMAT-bench | Internal | Regression testing | 500 queries |

Data provenance and licensing documented in docs/ml/REPRODUCIBILITY.md.
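Elsewhere this README describes pmat query as searching git history "using TF-IDF semantic embeddings". TF-IDF weights a term by how often it occurs in a document and how rare it is across the corpus, and results are then ranked by cosine similarity to the query vector. A self-contained Rust sketch, assuming whitespace tokenisation, lowercasing and the smoothed IDF ln((1 + N) / (1 + df)) + 1; PMAT's tokeniser and weighting scheme are not documented here, and the commit messages are made up.

```rust
use std::collections::{HashMap, HashSet};

fn tokenize(text: &str) -> Vec<String> {
    text.split_whitespace().map(|t| t.to_lowercase()).collect()
}

/// Smoothed inverse document frequency: ln((1 + N) / (1 + df)) + 1.
fn idf_table(docs: &[Vec<String>]) -> HashMap<String, f64> {
    let n = docs.len() as f64;
    let mut df: HashMap<String, f64> = HashMap::new();
    for doc in docs {
        let unique: HashSet<&String> = doc.iter().collect();
        for term in unique {
            *df.entry(term.clone()).or_insert(0.0) += 1.0;
        }
    }
    df.into_iter()
        .map(|(t, d)| (t, ((1.0 + n) / (1.0 + d)).ln() + 1.0))
        .collect()
}

/// TF-IDF vector: raw term count times IDF. Terms not in the corpus are dropped.
fn tfidf(tokens: &[String], idf: &HashMap<String, f64>) -> HashMap<String, f64> {
    let mut v = HashMap::new();
    for t in tokens {
        if let Some(w) = idf.get(t) {
            *v.entry(t.clone()).or_insert(0.0) += *w;
        }
    }
    v
}

fn cosine(a: &HashMap<String, f64>, b: &HashMap<String, f64>) -> f64 {
    let dot: f64 = a.iter().filter_map(|(t, x)| b.get(t).map(|y| x * y)).sum();
    let norm = |v: &HashMap<String, f64>| v.values().map(|x| x * x).sum::<f64>().sqrt();
    let denom = norm(a) * norm(b);
    if denom == 0.0 { 0.0 } else { dot / denom }
}

/// Rank corpus entries by cosine similarity to the query, best first.
fn search(query: &str, corpus: &[&str]) -> Vec<(usize, f64)> {
    let docs: Vec<Vec<String>> = corpus.iter().map(|d| tokenize(d)).collect();
    let idf = idf_table(&docs);
    let q = tfidf(&tokenize(query), &idf);
    let mut ranked: Vec<(usize, f64)> = docs
        .iter()
        .enumerate()
        .map(|(i, d)| (i, cosine(&q, &tfidf(d, &idf))))
        .collect();
    ranked.sort_by(|a, b| b.1.partial_cmp(&a.1).unwrap());
    ranked
}

fn main() {
    // Hypothetical commit messages standing in for git history.
    let commits = [
        "fix memory leak in connection pool",
        "add error handling to parser",
        "refactor cache eviction to avoid leak",
    ];
    for (i, score) in search("memory leak", &commits) {
        println!("{score:.3}  {}", commits[i]);
    }
}
```

Rare terms such as "memory" outweigh common ones, so a commit mentioning both query terms ranks above one that only shares "leak".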

PMAT is built on the PAIML Sovereign Stack - pure-Rust, SIMD-accelerated libraries:

| Library | Purpose | Version |
|---|---|---|
| aprender | ML library (text similarity, clustering, topic modeling) | 0.25.4 |
| trueno | SIMD compute library for matrix operations | 0.14.5 |
| trueno-graph | GPU-first graph database (PageRank, Louvain, CSR) | 0.1.14 |
| trueno-rag | RAG pipeline with VectorStore | 0.1.12 |
| trueno-db | Embedded analytics database | 0.3.13 |
| trueno-viz | Terminal graph visualization | 0.1.23 |
| trueno-zram-core | SIMD LZ4/ZSTD compression (optional) | 0.3.0 |
| pmat | Code analysis toolkit | 3.5.1 |

Key Benefits:

- Pure Rust (no C dependencies, no FFI)

- SIMD-first (AVX2, AVX-512, NEON auto-detection)

- 2-4x speedup on graph algorithms via aprender adapter
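"SIMD-first (AVX2, AVX-512, NEON auto-detection)" refers to choosing a code path at runtime from the CPU's capabilities. Rust's standard library exposes this through is_x86_feature_detected! and std::arch::is_aarch64_feature_detected!. The sketch below shows only the detect-then-dispatch pattern, with a scalar dot product standing in for every kernel; it is not trueno's actual dispatch code.

```rust
/// Instruction-set tiers, best first. Which variants can occur depends on the target.
#[allow(dead_code)]
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum SimdLevel {
    Avx512,
    Avx2,
    Neon,
    Scalar,
}

/// Detect the best available tier at runtime.
fn detect_simd() -> SimdLevel {
    #[cfg(any(target_arch = "x86", target_arch = "x86_64"))]
    {
        if is_x86_feature_detected!("avx512f") {
            return SimdLevel::Avx512;
        }
        if is_x86_feature_detected!("avx2") {
            return SimdLevel::Avx2;
        }
    }
    #[cfg(target_arch = "aarch64")]
    {
        if std::arch::is_aarch64_feature_detected!("neon") {
            return SimdLevel::Neon;
        }
    }
    SimdLevel::Scalar
}

/// Portable fallback; in this sketch every tier uses it.
fn dot_scalar(a: &[f32], b: &[f32]) -> f32 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

fn dot(a: &[f32], b: &[f32]) -> f32 {
    match detect_simd() {
        // A real library would call #[target_feature]-specialised kernels here.
        SimdLevel::Avx512 => dot_scalar(a, b),
        SimdLevel::Avx2 => dot_scalar(a, b),
        SimdLevel::Neon => dot_scalar(a, b),
        SimdLevel::Scalar => dot_scalar(a, b),
    }
}

fn main() {
    let a = [1.0f32, 2.0, 3.0];
    let b = [4.0f32, 5.0, 6.0];
    println!("detected {:?}; dot = {}", detect_simd(), dot(&a, &b));
}
```

Detecting once and caching the result (for example in a static) avoids repeating the feature query on hot paths; it is done inline here for brevity.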

- PMAT Book - Complete guide

- API Reference - Rust API docs

- MCP Tools - MCP integration guide

- Specifications - Technical specs

- 🤖 Coursera Hugging Face AI Development Specialization - Build Production AI systems with Hugging Face in Pure Rust

See CONTRIBUTING.md for development setup, testing, and pull request guidelines.

MIT License - see LICENSE for details.


Alternative AI tools for paiml-mcp-agent-toolkit

Similar Open Source Tools


everything-claude-code

The 'Everything Claude Code' repository is a comprehensive collection of production-ready agents, skills, hooks, commands, rules, and MCP configurations developed over 10+ months. It includes guides for setup, foundations, and philosophy, as well as detailed explanations of various topics such as token optimization, memory persistence, continuous learning, verification loops, parallelization, and subagent orchestration. The repository also provides updates on bug fixes, multi-language rules, installation wizard, PM2 support, OpenCode plugin integration, unified commands and skills, and cross-platform support. It offers a quick start guide for installation, ecosystem tools like Skill Creator and Continuous Learning v2, requirements for CLI version compatibility, key concepts like agents, skills, hooks, and rules, running tests, contributing guidelines, OpenCode support, background information, important notes on context window management and customization, star history chart, and relevant links.

llm-checker

LLM Checker is an AI-powered CLI tool that analyzes your hardware to recommend optimal LLM models. It features deterministic scoring across 35+ curated models with hardware-calibrated memory estimation. The tool helps users understand memory bandwidth, VRAM limits, and performance characteristics to choose the right LLM for their hardware. It provides actionable recommendations in seconds by scoring compatible models across four dimensions: Quality, Speed, Fit, and Context. LLM Checker is designed to work on any Node.js 16+ system, with optional SQLite search features for advanced functionality.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

axonhub

AxonHub is an all-in-one AI development platform that serves as an AI gateway allowing users to switch between model providers without changing any code. It provides features like vendor lock-in prevention, integration simplification, observability enhancement, and cost control. Users can access any model using any SDK with zero code changes. The platform offers full request tracing, enterprise RBAC, smart load balancing, and real-time cost tracking. AxonHub supports multiple databases, provides a unified API gateway, and offers flexible model management and API key creation for authentication. It also integrates with various AI coding tools and SDKs for seamless usage.

skylos

Skylos is a privacy-first SAST tool for Python, TypeScript, and Go that bridges the gap between traditional static analysis and AI agents. It detects dead code, security vulnerabilities (SQLi, SSRF, Secrets), and code quality issues with high precision. Skylos uses a hybrid engine (AST + optional Local/Cloud LLM) to eliminate false positives, verify via runtime, find logic bugs, and provide context-aware audits. It offers automated fixes, end-to-end remediation, and 100% local privacy. The tool supports taint analysis, secrets detection, vulnerability checks, dead code detection and cleanup, agentic AI and hybrid analysis, codebase optimization, operational governance, and runtime verification.

Kubeli

Kubeli is a modern, beautiful Kubernetes management desktop application with real-time monitoring, terminal access, and a polished user experience. It offers features like multi-cluster support, real-time updates, resource browser, pod logs streaming, terminal access, port forwarding, metrics dashboard, YAML editor, AI assistant, MCP server, Helm releases management, proxy support, internationalization, and dark/light mode. The tech stack includes Vite, React 19, TypeScript, Tailwind CSS 4 for frontend, Tauri 2.0 (Rust) for desktop, kube-rs with k8s-openapi v1.32 for K8s client, Zustand for state management, Radix UI and Lucide Icons for UI components, Monaco Editor for editing, XTerm.js for terminal, and uPlot for charts.

augustus

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

models

A fast CLI and TUI for browsing AI models, benchmarks, and coding agents. Browse 2000+ models across 85+ providers from models.dev. Track AI coding assistants with version detection and GitHub integration. Compare model performance across 15+ benchmarks from Artificial Analysis. Features CLI commands, interactive TUI, cross-provider search, copy to clipboard, JSON output. Includes curated catalog of AI coding assistants, auto-updating benchmark data, per-model open weights detection, and detail panel for benchmarks. Supports customization of tracked agents and quick sorting of benchmarks. Utilizes data from models.dev, Artificial Analysis, curated catalog in data/agents.json, and GitHub API.

claude-code-ultimate-guide

The Claude Code Ultimate Guide is an exhaustive documentation resource that takes users from beginner to power user in using Claude Code. It includes production-ready templates, workflow guides, a quiz, and a cheatsheet for daily use. The guide covers educational depth, methodologies, and practical examples to help users understand concepts and workflows. It also provides interactive onboarding, a repository structure overview, and learning paths for different user levels. The guide is regularly updated and offers a unique 257-question quiz for comprehensive assessment. Users can also find information on agent teams coverage, methodologies, annotated templates, resource evaluations, and learning paths for different roles like junior developer, senior developer, power user, and product manager/devops/designer.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks and includes many common NLP approaches, helping researchers and developers construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: several classical NLP datasets, such as Multi30k, SQuAD, and CoNLL, are packaged into a friendly module for easy use. * Friendly NLP model toolset: MindNLP provides various configurable components, making it easy to customize models. * Easy-to-use engine: MindNLP simplifies the complicated training process in MindSpore and supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, and RWKV. Examples for large language models, covering pre-training, fine-tuning, and inference demos, are in the "llm" directory. MindNLP can be installed from PyPI, from the daily build wheel, or from source; installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

Edit-Banana

Edit Banana is a universal content re-editor that allows users to transform fixed content into fully manipulatable assets. Powered by SAM 3 and multimodal large models, it enables high-fidelity reconstruction while preserving original diagram details and logical relationships. The platform offers advanced segmentation, fixed multi-round VLM scanning, high-quality OCR, user system with credits, multi-user concurrency, and a web interface. Users can upload images or PDFs to get editable DrawIO (XML) or PPTX files in seconds. The project structure includes components for segmentation, text extraction, frontend, models, and scripts, with detailed installation and setup instructions provided. The tool is open-source under the Apache License 2.0, allowing commercial use and secondary development.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

stylekit

StyleKit is a comprehensive design system toolkit that helps both humans and AI generate consistent, high-quality UI code. It provides structured style specifications, design tokens, component recipes, prompt templates, and export tools — everything needed to go from 'I want a glassmorphism SaaS dashboard' to production-ready frontend code. With 90+ visual styles, 20+ page templates, 25+ UI components, and AI-powered tools like Prompt Builder, Smart Recommender, Style Linter, Style Analyzer, and Style Blender, StyleKit offers a platform with GitHub OAuth, ratings & comments, style submissions, instant community availability, favorites, bilingual support, PWA, and dark/light mode themes.

sf-skills

sf-skills is a collection of reusable skills for Agentic Salesforce Development, enabling AI-powered code generation, validation, testing, debugging, and deployment. It includes skills for development, quality, foundation, integration, AI & automation, DevOps & tooling. The installation process is newbie-friendly and includes an installer script for various CLIs. The skills are compatible with platforms like Claude Code, OpenCode, Codex, Gemini, Amp, Droid, Cursor, and Agentforce Vibes. The repository is community-driven and aims to strengthen the Salesforce ecosystem.

For similar tasks

glimpse

Glimpse is a blazingly fast tool for peeking at codebases, offering features like fast parallel file processing, tree-view of codebase structure, source code content viewing, token counting with multiple backends, configurable defaults, clipboard support, customizable file type detection, .gitignore respect, web content processing with Markdown conversion, Git repository support, and URL traversal with configurable depth. It supports token counting using Tiktoken or HuggingFace tokenizer backends, helping estimate context window usage for large language models. Glimpse can process local directories, multiple files, Git repositories, web pages, and convert content to Markdown. It offers various options for customization and configuration, including file type inclusions/exclusions, token counting settings, URL processing settings, and default exclude patterns. Glimpse is suitable for developers and data scientists looking to analyze codebases, estimate token counts, and process web content efficiently.


smithers

Smithers is a tool for declarative AI workflow orchestration using React components. It allows users to define complex multi-agent workflows as component trees, ensuring composability, durability, and error handling. The tool leverages React's re-rendering mechanism to persist outputs to SQLite, enabling crashed workflows to resume seamlessly. Users can define schemas for task outputs, create workflow instances, define agents, build workflow trees, and run workflows programmatically or via CLI. Smithers supports components for pipeline stages, structured output validation with Zod, MDX prompts, validation loops with Ralph, dynamic branching, and various built-in tools like read, edit, bash, grep, and write. The tool follows a clear workflow execution process involving defining, rendering, executing, re-rendering, and repeating tasks until completion, all while storing task results in SQLite for fault tolerance.

GitVizz

GitVizz is an AI-powered repository analysis tool that helps developers understand and navigate codebases quickly. It transforms complex code structures into interactive documentation, dependency graphs, and intelligent conversations. With features like interactive dependency graphs, AI-powered code conversations, advanced code visualization, and automatic documentation generation, GitVizz offers instant understanding and insights for any repository. The tool is built with modern technologies like Next.js, FastAPI, and OpenAI, making it scalable and efficient for analyzing large codebases. GitVizz also provides a standalone Python library for core code analysis and dependency graph generation, offering multi-language parsing, AST analysis, dependency graphs, visualizations, and extensibility for custom applications.

roam-code

Roam is a tool that builds a semantic graph of your codebase and allows AI agents to query it with one shell command. It pre-indexes your codebase into a semantic graph stored in a local SQLite DB, providing architecture-level graph queries offline, cross-language, and compact. Roam understands functions, modules, tests coverage, and overall architecture structure. It is best suited for agent-assisted coding, large codebases, architecture governance, safe refactoring, and multi-repo projects. Roam is not suitable for real-time type checking, dynamic/runtime analysis, small scripts, or pure text search. It offers speed, dependency-awareness, LLM-optimized output, fully local operation, and CI readiness.

nothumanallowed

NotHumanAllowed is a security-first platform built exclusively for AI agents. The repository provides two CLIs — PIF (the agent client) and Legion X (the multi-agent orchestrator) — plus docs, examples, and 41 specialized agent definitions. Every agent authenticates via Ed25519 cryptographic signatures, ensuring no passwords or bearer tokens are used. Legion X orchestrates 41 specialized AI agents through a 9-layer Geth Consensus pipeline, with zero-knowledge protocol ensuring API keys stay local. The system learns from each session, with features like task decomposition, neural agent routing, multi-round deliberation, and weighted authority synthesis. The repository also includes CLI commands for orchestration, agent management, tasks, sandbox execution, Geth Consensus, knowledge search, configuration, system health check, and more.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.