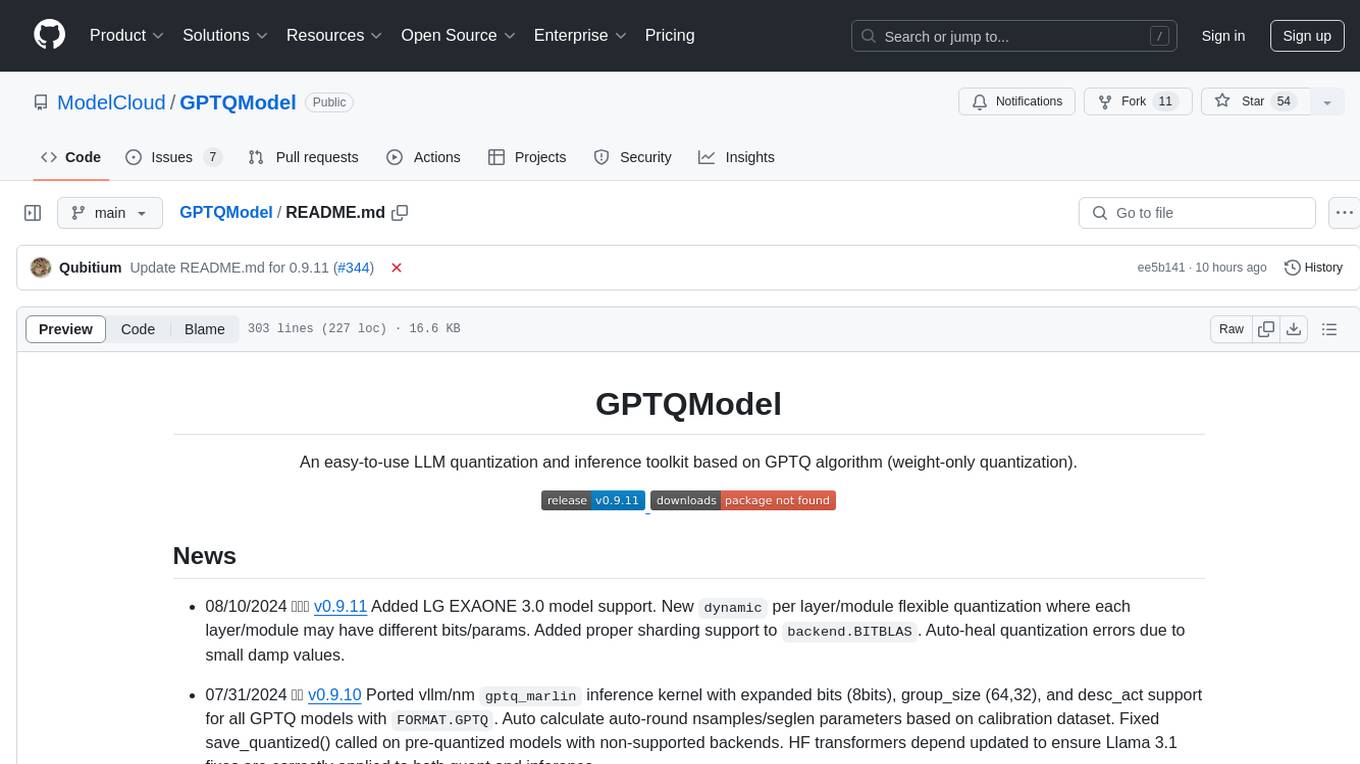

GPTQModel

LLM model quantization (compression) toolkit with hw acceleration support for Nvidia CUDA, AMD ROCm, Intel XPU and Intel/AMD/Apple CPU via HF, vLLM, and SGLang.

Stars: 1013

GPTQModel is an easy-to-use LLM quantization and inference toolkit based on the GPTQ algorithm. It provides weight-only quantization with features such as dynamic per-layer/module quantization, sharding support, and auto-healing of quantization errors. The toolkit aims for inference compatibility with HF Transformers, vLLM, and SGLang. It supports a wide range of models, offers faster quantized inference and better-quality quants, and includes security features such as hash checks of model weights. GPTQModel also focuses on faster quantization and improved quant quality as measured by PPL, and it backports bug fixes from AutoGPTQ.

README:

LLM model quantization (compression) toolkit with hw acceleration support for Nvidia CUDA, AMD ROCm, Intel XPU, and Intel/AMD/Apple CPUs via HF, vLLM, and SGLang.

* 01/23/2026 5.7.0: ✨ New `MoE.Routing` config with `Bypass` and `Override` options to allow multiple brute-force MoE routing controls for higher quality quantization of MoE experts. Combined with `FailSafeStrategy`, GPT-QModel now has three separate control settings for efficient MoE expert quantization. `AWQ` `qcfg.zero_point` property has been merged with a unified `sym` symmetry property; `zero_point=True` is now `sym=False`. Fixed `AWQ` `sym=True` packing/inference and quantization compatibility with some Qwen3 models.
* 12/31/2025 5.7.0-dev: ✨ New `FailSafe` config and `FailSafeStrategy`, auto-enabled by default, to address uneven routing of MoE experts resulting in quantization issues for some MoE modules. `Smooth` operations are introduced to `FailSafeStrategy` to reduce the impact of outliers in `FailSafe` quantization using `RTN` by default. Different `FailSafeStrategy` and `Smoothers` can be selected. `Threshold` to activate `FailSafe` can also be customized. New Voxtral and Glm-4v model support, plus audio dataset calibration for Qwen2-Omni. `AWQ` compatibility fix for `GLM 4.5-Air`.
* 12/17/2025 5.6.2-12 Patch: Fixed `uv` compatibility. Both `uv` and `pip` installs will now show UI progress for external wheel/dependency downloads. Fixed `MacOS` and `AWQ` `Marlin` kernel loading import regressions. Resolved most `multi-arch` compile issues on `Ubuntu`, `Arch`, `RedHat` and other distros. Fixed `multi-arch` build issues and `Tritonv2` kernel launch bug on multi-GPUs. Fixed 3-bit Triton GPTQ kernel dequant/inference and `license` property compatibility issue with latest pip/setuptools.
* 12/9/2025 5.6.0: ✨ New `HF Kernel` for CPU optimized for `AMX`, `AVX2` and `AVX512`. Auto module tree for auto-model support. Added Afmoe and Dots1 model support. Fixed pre-layer pass quantization speed regression. Improved HF Transformers, Peft and Optimum support for both GPTQ and AWQ. Fixed many AWQ compatibility bugs and regressions.
* 11/9/2025 5.4.0: ✨ New Intel CPU and XPU hardware-optimized AWQ `TorchFusedAWQ` kernel. Torch Fused kernels now compatible with `torch.compile`. Fixed AWQ MoE model compatibility and reduced VRAM usage.
* 11/3/2025 5.2.0: ✨ Minimax M2 support with ModelCloud BF16 M2 Model. New `VramStrategy.Balanced` quantization property for reduced memory usage for large MoE on multi-3090 (24GB) devices. ✨ Marin model. New AWQ Torch reference kernel. Fixed AWQ Marlin kernel for bf16. Fixed GLM 4.5/4.6 MoE missing `mtp` layers on model save (HF bug). Modular refactor. 🎉 AWQ support out of beta with full feature support including multi-GPU quant and MoE VRAM saving. ✨ Brumby (attention free) model support. ✨ IBM Granite Nano support. New `calibration_concat_separator` config option.

Archived News

* 10/24/2025 [5.0.0](https://github.com/ModelCloud/GPTQModel/releases/tag/v5.0.0): 🎉 Data-parallel quant support for `MoE` models on multi-GPU using `nogil` Python. `offload_to_disk` support enabled by default to massively reduce `CPU` RAM usage. New `Intel` and `AMD` CPU hardware-accelerated `TorchFused` kernel. Packing stage is now 4x faster and now inlined with quantization. `VRAM` pressure for large models reduced during quantization. `act_group_aware` is 16k+ times faster and now the default when `desc_act=False` for higher quality recovery without inference penalty of `desc_act=True`. New beta quality `AWQ` support with full `gemm`, `gemm_fast`, `marlin` kernel support. `LFM`, `Ling`, `Qwen3 Omni` model support. `Bitblas` kernel updated to support Bitblas `0.1.0.post1` release. Quantization is now faster with reduced VRAM usage. Enhanced logging support with `LogBar`.
* 09/16/2025 4.2.5: `hyb_act` renamed to `act_group_aware`. Removed finicky `torch` import within `setup.py`. Packing bug fix and prebuilt PyTorch 2.8 wheels.
* 09/12/2025 4.2.0: ✨ New Models Support: Qwen3-Next, Apertus, Kimi K2, Klear, FastLLM, Nemotron H. New `fail_safe` `boolean` toggle to `.quantize()` to patch-fix non-activated `MoE` modules due to highly uneven MoE model training. Fixed LavaQwen2 compatibility. Patch-fixed GIL=0 CUDA error for multi-GPU. Fixed compatibility with autoround + new transformers.
* 09/04/2025 4.1.0: ✨ Meituan LongCat Flash Chat, Llama 4, GPT-OSS (BF16), and GLM-4.5-Air support. New experimental `mock_quantization` config to skip complex computational code paths during quantization to accelerate model quant testing.
* 08/21/2025 4.0.0: 🎉 New Group Aware Reordering (GAR) support. New models support: Bytedance Seed-OSS, Baidu Ernie, Huawei PanGu, Gemma3, Xiaomi Mimo, Qwen 3/MoE, Falcon H1, GPT-Neo. Memory leak and multiple model compatibility fixes related to Transformers >= 4.54. Python >= 3.13t free-threading support added with near N x GPU linear scaling for quantization of MoE models and also linear N x CPU core scaling of packing stage. Early access PyTorch 2.8 fused-ops on Intel XPU for up to 50% speedup.

* 10/17/2025 5.0.0-dev `main`: 👀 EoRA now multi-GPU compatible. Fixed both quality stability in multi-GPU quantization and VRAM usage. New LFM and Ling models support.
* 09/30/2025 5.0.0-dev `main`: 👀 New Data Parallel + Multi-GPU + Python 3.13T (PYTHON_GIL=0) equals 80%+ overall quant time reduction of large MoE models vs v4.2.5.
* 09/29/2025 5.0.0-dev `main`: 🎉 New Qwen3 Omni model support. AWQ Marlin kernel integrated + many disk offload, threading, and memory usage fixes.
* 09/24/2025 5.0.0-dev `main`: 🎉 Up to 90% CPU memory saving for large MoE models with faster/inline packing! 26% quant time reduction for Qwen3 MoE! AWQ Marlin kernel added. AWQ Gemm loading bug fixes. `act_group_aware` now faster and auto enabled for GPTQ when `desc_act` is False for higher quality recovery.
* 09/19/2025 5.0.0-dev `main`: 👀 CPU memory saving of ~73.5% during quantization stage with new `offload_to_disk` quantization config property, which defaults to `True`.
* 09/18/2025 5.0.0-dev `main`: 🎉 AWQ quantization support! Complete refactor and simplification of model definitions in preparation for future quantization formats.
* 08/19/2025 4.0.0-dev `main`: Fixed quantization memory usage due to some models' incorrect application of `config.use_cache` during inference. Fixed `Transformers` >= 4.54.0 compatibility which changed layer forward return signature for some models.
* 08/18/2025 4.0.0-dev `main`: GPT-Neo model support. Memory leak fix in error capture (stack trace) and fixed `lm_head` quantization compatibility for many models.
* 07/31/2025 4.0.0-dev `main`: New Group Aware Reordering (GAR) support and preliminary PyTorch 2.8 fused-ops for Intel XPU for up to 50% speedup.
* 07/03/2025 4.0.0-dev `main`: New Baidu Ernie and Huawei PanGu model support.
* 07/02/2025 4.0.0-dev `main`: Gemma3 4B model compatibility fix.
* 05/29/2025 4.0.0-dev `main`: Falcon H1 model support. Fixed Transformers `4.52+` compatibility with Qwen 2.5 VL models.
* 05/19/2025 4.0.0-dev `main`: Qwen 2.5 Omni model support.
* 05/05/2025 4.0.0-dev `main`: Python 3.13t free-threading support added with near N x GPU linear scaling for quantization of MoE models and also linear N x CPU core scaling of packing stage.
* 04/29/2025 3.1.0-dev (Now 4.) `main`: Xiaomi Mimo model support. Qwen 3 and 3 MoE model support. New arg for `quantize(..., calibration_dataset_min_length=10)` to filter out bad calibration data that exists in public dataset (wikitext).

* 04/13/2025 3.0.0: 🎉 New experimental `v2` quantization option for improved model quantization accuracy validated by `GSM8K_PLATINUM` benchmarks vs original `gptq`. New `Phi4-MultiModal` model support. New Nvidia Nemotron-Ultra model support. New `Dream` model support. New experimental `multi-GPU` quantization support. Reduced VRAM usage. Faster quantization.
* 04/2/2025 2.2.0: New `Qwen 2.5 VL` model support. New `samples` log column during quantization to track module activation in MoE models. `Loss` log column now color-coded to highlight modules that are friendly/resistant to quantization. Progress (per-step) stats during quantization now streamed to log file. Auto `bfloat16` dtype loading for models based on model config. Fixed kernel compile for PyTorch/ROCm. Slightly faster quantization and auto-resolve some low-level OOM issues for smaller VRAM GPUs.
* 03/12/2025 2.1.0: ✨ New `QQQ` quantization method and inference support! New Google `Gemma 3` zero-day model support. New Alibaba `Ovis 2` VL model support. New AMD `Instella` zero-day model support. New `GSM8K Platinum` and `MMLU-Pro` benchmarking support. Peft Lora training with GPT-QModel is now 30%+ faster on all GPU and IPEX devices. Auto-detect MoE modules not activated during quantization due to insufficient calibration data. `ROCm` `setup.py` compatibility fixes. `Optimum` and `Peft` compatibility fixes. Fixed `Peft` `bfloat16` training.
* 03/03/2025 2.0.0: 🎉 `GPTQ` quantization internals are now broken into multiple stages (processes) for feature expansion. Synced `Marlin` kernel inference quality fix from upstream. Added `MARLIN_FP16`, lower-quality but faster backend. `ModelScope` support added. Logging and CLI progress bar output has been revamped with sticky bottom progress. Fixed `generation_config.json` save and load. Fixed Transformers v4.49.0 compatibility. Fixed compatibility of models without `bos`. Fixed `group_size=-1` and `bits=3` packing regression. Fixed Qwen 2.5 MoE regressions. Added CI tests to track regression in kernel inference quality and sweep all bits/group_sizes. Delegate logging/progress bar to LogBar package. Fixed ROCm version auto-detection in `setup` install.
* 02/12/2025 1.9.0: ⚡ Offload `tokenizer` fixes to Toke(n)icer package. Optimized `lm_head` quant time and VRAM usage. Optimized `DeepSeek v3/R1` model quant VRAM usage. Fixed `Optimum` compatibility regression in `v1.8.1`. 3x speed-up for `Torch` kernel when using PyTorch >= 2.5.0 with `model.optimize()`. New `calibration_dataset_concat_size` option to enable calibration data `concat` mode to mimic original GPTQ data packing strategy which may improve quant speed and accuracy for datasets like `wikitext2`.
* 02/08/2025 1.8.1: ⚡ `DeepSeek v3/R1` model support. New flexible weight `packing`: allow quantized weights to be packed to `[int32, int16, int8]` dtypes. `Triton` and `Torch` kernels support full range of new `QuantizeConfig.pack_dtype`. New `auto_gc: bool` control in `quantize()` which can reduce quantization time for small model with no chance of OOM. New `GPTQModel.push_to_hub()` API for easy quant model upload to HF repo. New `buffered_fwd: bool` control in `model.quantize()`. Over 50% quantization speed-up for visual (vl) models. Fixed `bits=3` packing and `group_size=-1` regression in v1.7.4.
* 01/26/2025 1.7.4: New `compile()` API for ~4-8% inference TPS improvement. Faster `pack()` for post-quantization model save. `Triton` kernel validated for Intel/`XPU` when Intel Triton packages are installed. Fixed Transformers (bug) downcasting tokenizer class on save.
* 01/20/2025 1.7.3: New Telechat2 (China Telecom) and PhiMoE model support. Fixed `lm_head` weights duplicated in post-quantize save() for models with tied-embedding.
* 01/19/2025 1.7.2: Effective BPW (bits per weight) will now be logged during `load()`. Reduce loading time on Intel Arc A770/B580 `XPU` by 3.3x. Reduce memory usage in MLX conversion and fix Marlin kernel auto-select not checking CUDA compute version.

* 01/17/2025 1.7.0: 👀 ✨ `backend.MLX` added for runtime-conversion and execution of GPTQ models on Apple's `MLX` framework on Apple Silicon (M1+). Exports of `gptq` models to `mlx` also now possible. We have added `mlx` exported models to huggingface.co/ModelCloud. ✨ `lm_head` quantization now fully supported by GPTQModel without external pkg dependency.
* 01/07/2025 1.6.1: 🎉 New OpenAI API compatible endpoint via `model.serve(host, port)`. Auto-enable flash-attention2 for inference. Fixed `sym=False` loading regression.
* 01/06/2025 1.6.0: ⚡ 25% faster quantization. 35% reduction in VRAM usage vs v1.5. 👀 AMD ROCm (6.2+) support added and validated for 7900XT+ GPU. Auto-tokenizer loader via `load()` API. For most models you no longer need to manually init a tokenizer for both inference and quantization.
* 01/01/2025 1.5.1: 🎉 2025! Added `QuantizeConfig.device` to clearly define which device is used for quantization: default = `auto`. Non-quantized models are always loaded on CPU by default and each layer is moved to `QuantizeConfig.device` during quantization to minimize VRAM usage. Compatibility fixes for `attn_implementation_autoset` in latest transformers.
* 12/23/2024 1.5.0: Multi-modal (image-to-text) optimized quantization support has been added for Qwen 2-VL and Ovis 1.6-VL. Previous image-to-text model quantizations did not use image calibration data, resulting in less than optimal post-quantization results. Version 1.5.0 is the first release to provide a stable path for multi-modal quantization: only text layers are quantized.
* 12/19/2024 1.4.5: Windows 11 support added/validated. Ovis VL model support with image dataset calibration. Fixed `dynamic` loading. Reduced quantization VRAM usage.
* 12/15/2024 1.4.2: MacOS `GPU` (Metal) and `CPU` (M+) support added/validated for inference and quantization. Cohere 2 model support added.
* 12/13/2024 1.4.1: Added Qwen2-VL model support. `mse` quantization control exposed in `QuantizeConfig`. Monkey patch `patch_vllm()` and `patch_hf()` API added to allow Transformers/Optimum/PEFT and vLLM to correctly load GPTQModel quantized models while upstream PRs are in pending status.
* 12/10/2024 1.4.0: `EvalPlus` harness integration merged upstream. We now support both `lm-eval` and `EvalPlus`. Added pure torch `Torch` kernel. Refactored `Cuda` kernel to be `DynamicCuda` kernel. `Triton` kernel now auto-padded for max model support. `Dynamic` quantization now supports both positive `+:` (default) and negative `-:` matching, which allows matched modules to be skipped entirely for quantization. Fixed auto-`Marlin` kernel selection. Added auto-kernel fallback for unsupported kernel/module pairs. Lots of internal refactor and cleanup in preparation for transformers/optimum/peft upstream PR merge. Deprecated the saving of `Marlin` weight format since `Marlin` supports auto conversion of `gptq` format to `Marlin` during runtime.

* 11/29/2024 1.3.1 Olmo2 model support. Intel XPU acceleration via IPEX. Model sharding Transformer compatibility fix due to API deprecation in HF. Removed triton dependency. Triton kernel now optionally dependent on triton package.
* 11/26/2024 1.3.0 Zero-Day Hymba model support. Removed `tqdm` and `rogue` dependency.
* 11/24/2024 1.2.3 HF GLM model support. ClearML logging integration. Use `device-smi` and replace `gputil` + `psutil` dependencies. Fixed model unit tests.
* 11/11/2024 🚀 1.2.1 Meta MobileLLM model support added. `lm-eval[gptqmodel]` integration merged upstream. Intel/IPEX CPU inference merged replacing QBits (deprecated). Auto-fix/patch ChatGLM-3/GLM-4 compatibility with latest transformers. New `.load()` and `.save()` API.
* 10/29/2024 🚀 1.1.0 IBM Granite model support. Full auto-buildless wheel install from PyPI. Reduce max CPU memory usage by >20% during quantization. 100% CI model/feature coverage.
* 10/12/2024 ✨ 1.0.9 Move AutoRound to optional and fix pip install regression in v1.0.8.
* 10/11/2024 ✨ 1.0.8 Add wheel for Python 3.12 and CUDA 11.8.
* 10/08/2024 ✨ 1.0.7 Fixed Marlin (faster) kernel was not auto-selected for some models.
* 09/26/2024 ✨ 1.0.6 Fixed Llama 3.2 vision quantized loader.
* 09/26/2024 ✨ 1.0.5 Partial Llama 3.2 Vision model support (mllama): only text-layer quantization layers are supported for now.
* 09/26/2024 ✨ 1.0.4 Integrated Liger Kernel support for ~1/2 memory reduction on some models during quantization. Added control toggle to disable parallel packing.
* 09/18/2024 ✨ 1.0.3 Added Microsoft GRIN-MoE and MiniCPM3 support.
* 08/16/2024 ✨ 1.0.2 Support Intel/AutoRound v0.3, prebuilt whl packages, and PyPI release.
* 08/14/2024 ✨ 1.0.0 40% faster `packing`, fixed Python 3.9 compatibility, added `lm_eval` API.

* 08/10/2024 🚀 0.9.11 Added LG EXAONE 3.0 model support. New `dynamic` per layer/module flexible quantization where each layer/module may have different bits/params. Added proper sharding support to `backend.BITBLAS`. Auto-heal quantization errors due to small damp values.
* 07/31/2024 🚀 0.9.10 Ported vllm/nm `gptq_marlin` inference kernel with expanded bits (8bits), group_size (64,32), and desc_act support for all GPTQ models with `FORMAT.GPTQ`. Auto-calculate auto-round nsamples/seglen parameters based on calibration dataset. Fixed save_quantized() called on pre-quantized models with non-supported backends. HF transformers dependency updated to ensure Llama 3.1 fixes are correctly applied to both quant and inference.
* 07/25/2024 🚀 0.9.9: Added Llama-3.1 support, Gemma2 27B quant inference support via vLLM, auto pad_token normalization, fixed auto-round quant compatibility for vLLM/SGLang, and more.
* 07/13/2024 🚀 0.9.8: Run quantized models directly using GPTQModel with fast `vLLM` or `SGLang` backend! Both vLLM and SGLang are optimized for dynamic batching inference for maximum `TPS` (check usage under examples). Marlin backend also got full end-to-end in/out features padding to enhance current/future model compatibility.
* 07/08/2024 🚀 0.9.7: InternLM 2.5 model support added.
* 07/08/2024 🚀 0.9.6: Intel/AutoRound QUANT_METHOD support added for a potentially higher quality quantization with `lm_head` module quantization support for even more VRAM reduction: format export to `FORMAT.GPTQ` for max inference compatibility.
* 07/05/2024 🚀 0.9.5: CUDA kernels have been fully deprecated in favor of Exllama(v1/v2)/Marlin/Triton.
* 07/03/2024 🚀 0.9.4: HF Transformers integration added and bug fixed Gemma 2 support.
* 07/02/2024 🚀 0.9.3: Added Gemma 2 support, faster PPL calculations on GPU, and more code/arg refactor.
* 06/30/2024 🚀 0.9.2: Added auto-padding of model in/out-features for exllama and exllama v2. Fixed quantization of OPT and DeepSeek V2-Lite models. Fixed inference for DeepSeek V2-Lite.
* 06/29/2024 🚀 0.9.1: With 3 new models (DeepSeek-V2, DeepSeek-V2-Lite, DBRX Converted), BITBLAS new format/kernel, proper batching of calibration dataset resulting > 50% quantization speedup, security hash check of loaded model weights, tons of refactor/usability improvements, bug fixes, and much more.
* 06/20/2024 ✨ 0.9.0: Thanks for all the work from ModelCloud team and the open-source ML community for their contributions!

GPT-QModel is a production-ready LLM model compression/quantization toolkit with hw-accelerated inference support for both CPU/GPU via HF Transformers, vLLM, and SGLang.

GPT-QModel currently supports GPTQ, AWQ, QQQ, GPTAQ, EoRA, and GAR, with more quantization methods and enhancements planned.

GPT-QModel has a modular design supporting multiple quantization methods and feature extensions.

| Feature | GPT-QModel | Transformers | vLLM | SGLang | Lora Training |
|---|---|---|---|---|---|
| GPTQ | ✅ | ✅ | ✅ | ✅ | ✅ |
| AWQ | ✅ | ✅ | ✅ | ✅ | ✅ |
| EoRA | ✅ | ✅ | ✅ | ✅ | x |
| Group Aware Act Reordering | ✅ | ✅ | ✅ | ✅ | ✅ |
| QQQ | ✅ | x | x | x | x |
| Rotation | ✅ | x | x | x | x |
| GPTAQ | ✅ | ✅ | ✅ | ✅ | ✅ |

- ✨ Native integration with HF Transformers, Optimum, and Peft.
- 🚀 vLLM and SGLang inference integration for quantized models with format = `FORMAT.[GPTQ/AWQ]`.
- ✨ GPTQ, AWQ, and QQQ quantization format with hardware-accelerated inference kernels.
- 🚀 Quantize MoE models with ease even with extreme routing activation bias via `Moe.Routing` and/or `FailSafe`.
- 🚀 Data Parallelism for 80%+ quantization time reduction with Multi-GPU.
- 🚀 Optimized for Python >= 3.13t (free threading) with lock-free threading.
- ✨ Linux, MacOS, Windows platform support for CUDA (Nvidia), XPU (Intel), ROCm (AMD), MPS (Apple Silicon), CPU (Intel/AMD/Apple Silicon).
- ✨ `Dynamic` per-module mixed quantization control: each layer/module can have a unique quantization config or be excluded from quantization.
- 🚀 Intel Torch 2.8 fused kernel support for XPU [`Arc` + `Datacenter Max`] and CPU [`avx`, `amx`].
- 🚀 Python 3.13.3t (free-threading, GIL disabled) support for multi-GPU accelerated quantization for MoE models and multi-core CPU boost for packing.
- ✨ Asymmetric `Sym=False` support.
- ✨ `lm_head` module quant inference support for further VRAM reduction.
- 🚀 Microsoft/BITBLAS optimized tile based inference.
- 💯 CI unit-test coverage for all supported models and kernels including post-quantization quality regression.

🤗 ModelCloud quantized Vortex models on HF

| Model | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Apertus | ✅ | EXAONE 3.0 | ✅ | Dots1 | ✅ | Mistral3 | ✅ | Qwen 2/3 (Next/MoE) | ✅ |
| Baichuan | ✅ | Falcon (H1) | ✅ | InternLM 1/2.5 | ✅ | Mixtral | ✅ | Qwen 2/2.5/3 VL | ✅ |
| Bloom | ✅ | FastVLM | ✅ | Kimi K2 | ✅ | MobileLLM | ✅ | Qwen 2.5/3 Omni | ✅ |
| ChatGLM | ✅ | Gemma 1/2/3 | ✅ | Klear | ✅ | MOSS | ✅ | RefinedWeb | ✅ |
| CodeGen | ✅ | GPTBigCode | ✅ | LING/RING | ✅ | MPT | ✅ | StableLM | ✅ |
| Cohere 1-2 | ✅ | GPT-Neo(X) | ✅ | Llama 1-3.3 | ✅ | Nemotron H | ✅ | StarCoder2 | ✅ |
| DBRX Converted | ✅ | GPT-2 | ✅ | Llama 3.2 VL | ✅ | Nemotron Ultra | ✅ | TeleChat2 | ✅ |
| Deci | ✅ | GPT-J | ✅ | Llama 4 | ✅ | OPT | ✅ | Trinity | ✅ |
| DeepSeek-V2/V3/R1 | ✅ | GPT-OSS | ✅ | LongCatFlash | ✅ | OLMo2 | ✅ | Yi | ✅ |
| DeepSeek-V2-Lite | ✅ | Granite | ✅ | LongLLaMA | ✅ | Ovis 1.6/2 | ✅ | Seed-OSS | ✅ |
| Dream | ✅ | GRIN-MoE | ✅ | Instella | ✅ | Phi 1-4 | ✅ | Voxtral | ✅ |
| ERNIE 4.5 | ✅ | GLM 4/4V/4MoE | ✅ | MiniCPM3 | ✅ | PanGu-α | ✅ | XVERSE | ✅ |
| Brumby | ✅ | Hymba | ✅ | Mistral | ✅ | Qwen 1/2/3 | ✅ | Minimax M2 | ✅ |

GPT-QModel is validated for Linux, MacOS, and Windows 11:

| Platform | Device | | Optimized Arch | Kernels |
|---|---|---|---|---|
| 🐧 Linux | Nvidia GPU | ✅ | `Ampere+` | Marlin, Exllama V2, Exllama V1, Triton, Torch |
| 🐧 Linux | AMD GPU | ✅ | `7900XT+`, `ROCm 6.2+` | Exllama V2, Exllama V1, Torch |
| 🐧 Linux | Intel XPU | ✅ | `Arc`, `Datacenter Max` | TorchFused, TorchFusedAWQ, Torch |
| 🐧 Linux | Intel/AMD CPU | ✅ | `avx`, `amx` | TorchFused, TorchFusedAWQ, Torch |
| 🍎 MacOS | GPU (Metal) / CPU | ✅ | `Apple Silicon`, `M1+` | Torch, MLX via conversion |
| 🪟 Windows | GPU (Nvidia) / CPU | ✅ | `Nvidia` | Torch |

```bash
# You can install optional modules like autoround, ipex, vllm, sglang, bitblas.
# Example: pip install -v --no-build-isolation "gptqmodel[vllm,sglang,bitblas]"
pip install -v gptqmodel --no-build-isolation
uv pip install -v gptqmodel --no-build-isolation
```

```bash
# clone repo
git clone https://github.com/ModelCloud/GPTQModel.git && cd GPTQModel

# python3-dev is required and ninja speeds up compilation (Debian/Ubuntu package names)
apt install python3-dev ninja-build
# upgrade to the latest `setuptools` to avoid errors
pip install -U setuptools

# pip: compile and install
# You can install optional modules like vllm, sglang, bitblas.
# Example: pip install -v --no-build-isolation ".[vllm,sglang,bitblas]"
pip install -v . --no-build-isolation
```

Three-line API to use GPT-QModel for GPTQ model inference:

```python
from gptqmodel import GPTQModel

model = GPTQModel.load("ModelCloud/Llama-3.2-1B-Instruct-gptqmodel-4bit-vortex-v2.5")
result = model.generate("Uncovering deep insights begins with")[0] # tokens
print(model.tokenizer.decode(result)) # string output
```

To use models from ModelScope instead of HuggingFace Hub, set an environment variable:

```bash
export GPTQMODEL_USE_MODELSCOPE=True
```

```python
# load model using above inference guide first
model.serve(host="0.0.0.0",port="12345")
```

Basic example of using GPT-QModel to quantize an LLM model:

```python
from datasets import load_dataset
from gptqmodel import GPTQModel, QuantizeConfig

model_id = "meta-llama/Llama-3.2-1B-Instruct"
quant_path = "Llama-3.2-1B-Instruct-gptqmodel-4bit"

calibration_dataset = load_dataset(
    "allenai/c4",
    data_files="en/c4-train.00001-of-01024.json.gz",
    split="train"
).select(range(1024))["text"]

quant_config = QuantizeConfig(bits=4, group_size=128)

model = GPTQModel.load(model_id, quant_config)

# increase `batch_size` to match GPU/VRAM specs to speed up quantization
model.quantize(calibration_dataset, batch_size=1)

model.save(quant_path)
```

Some MoE (mixture of experts) models have extremely uneven/biased routing (distribution of tokens) to the experts, causing some expert modules to receive close-to-zero activated tokens and thus fail to complete calibration-based quantization (GPTQ/AWQ).

To better quantize these heavily biased MoE routed modules, GPT-QModel exposes 3 controls:

- `Moe.Routing = ExpertsRoutingOverride`: Manually override the `num_experts_per_tok` used for model `routing` math. For example, if a model only routes 4 experts per token out of 48 total experts, you can set this to 24 for 50% routing or 48 for 100% routing. `ExpertsRoutingOverride` requires that the model expose `num_experts_per_tok` or an equivalent configuration control.
- `Moe.Routing = ExpertsRoutingBypass`: Brute-force and bypass all `routing` math so all `experts` receive `all` activated tokens. This is akin to `ExpertsRoutingOverride.num_experts_per_tok` set to the total number of experts. `ExpertsRoutingBypass` is enabled/tested for some models and, due to the lifecycle complexity, it needs to be validated for every model.
- `FailSafe`: This is `enabled` by `default` and is a naive weight-only quantization technique using simple quantization methods such as `nearest` with optional `smoothing`. There are various `FailSafeStrategy` options, along with `SmoothMethod` options, to complement this feature. `FailSafe` does not require `activations` but has higher quantization error loss than normally activated GPTQ/AWQ. It is fast and applicable for all MoE models.

`FailSafe` can be combined with `ExpertsRoutingOverride`. There is no single best way to quantize MoE, and we recommend that users test all three methods.
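To make the routing problem concrete, here is a minimal, self-contained sketch that does not use GPT-QModel at all. It simulates a toy top-k router with a strongly skewed per-expert bias and counts how many calibration tokens each expert would see when 4, 24, or all 48 experts are routed per token (the numbers from the override example above). The `route_tokens` function, the Gaussian noise model, and the bias values are illustrative assumptions, not the library's routing code.

```python
import random


def route_tokens(num_tokens, expert_bias, top_k, seed=0):
    """Count how many tokens each expert receives under top-k routing.

    Router scores are modeled as a fixed per-expert bias plus Gaussian
    noise. This is a toy stand-in for a trained router, not a real model.
    """
    rng = random.Random(seed)  # same seed -> identical scores for every top_k
    counts = [0] * len(expert_bias)
    for _ in range(num_tokens):
        scores = [bias + rng.gauss(0.0, 1.0) for bias in expert_bias]
        ranked = sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)
        for expert in ranked[:top_k]:
            counts[expert] += 1
    return counts


NUM_EXPERTS = 48
NUM_TOKENS = 512
# Hypothetical skew: expert 0 is strongly favored, expert 47 is rarely picked.
EXPERT_BIAS = [-0.25 * e for e in range(NUM_EXPERTS)]

for top_k in (4, 24, 48):
    counts = route_tokens(NUM_TOKENS, EXPERT_BIAS, top_k)
    starved = sum(1 for c in counts if c == 0)
    print(f"top_k={top_k:2d} min_tokens={min(counts):3d} starved_experts={starved}")
```

Because the top-k sets are nested for identical scores, raising `top_k` can only increase each expert's token count, and routing to all experts gives every expert every token, which is the effect the bypass option is described as producing.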

```python
# test post-quant inference
model = GPTQModel.load(quant_path)
result = model.generate("Uncovering deep insights begins with")[0] # tokens
print(model.tokenizer.decode(result)) # string output
```

GPT-QModel supports EoRA, a LoRA method developed by Nvidia that can further improve the accuracy of the quantized model.

```python
# EoRA is currently only validated for GPTQ
# higher rank improves accuracy at the cost of VRAM usage
# suggestion: test rank 64 and 32 before 128 or 256 as latter may overfit while increasing memory usage
eora = Lora(
    # for eora generation, path is adapter save path; for load, it is loading path
    path=f"{quant_path}/eora_rank32",
    rank=32,
)

# provide a previously GPTQ-quantized model path
GPTQModel.adapter.generate(
    adapter=eora,
    model_id_or_path=model_id,
    quantized_model_id_or_path=quant_path,
    calibration_dataset=calibration_dataset,
    calibration_dataset_concat_size=0,
)

# post-eora inference
model = GPTQModel.load(
    model_id_or_path=quant_path,
    adapter=eora
)

tokens = model.generate("Capital of France is")[0]
result = model.tokenizer.decode(tokens)

print(f"Result: {result}")
# For more details on EoRA, please see GPTQModel/examples/eora
# Please use the benchmark tools in later part of this README to evaluate EoRA effectiveness
```

For more advanced features of model quantization, please refer to this script.

Read the gptqmodel/models/llama.py code which explains in detail via comments how the model support is defined. Use it as a guide for PRs to add new models. Most models follow the same pattern.

GPTQModel inference is integrated into both lm-eval and evalplus

We highly recommend avoiding ppl and using lm-eval/evalplus to validate post-quantization model quality. ppl should only be used for regression tests and is not a good indicator of model output quality.
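For reference, perplexity is the exponential of the average negative log-likelihood per token. The sketch below is a minimal illustration of that formula only; the `perplexity` helper and the sample log-probabilities are hypothetical and not part of GPT-QModel.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(-mean(log p(token))) over a sequence of tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Hypothetical log-probs: a model that assigns p=0.25 to every token
# has a perplexity of about 4 (as uncertain as a 4-way uniform choice).
uniform = [math.log(0.25)] * 8
print(perplexity(uniform))
```

Since this number only summarizes average token likelihood on one text corpus, it says little about task accuracy, which is why task-based harnesses are the better quality check.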

```bash
# gptqmodel is integrated into lm-eval >= v0.4.7
# quote the requirement so the shell does not treat `>` as a redirect
pip install "lm-eval>=0.4.7"

# gptqmodel is integrated into evalplus[main]
pip install -U "evalplus @ git+https://github.com/evalplus/evalplus"
```

Below is a basic sample using the `GPTQModel.eval` API:

```python
from gptqmodel import GPTQModel
from gptqmodel.utils.eval import EVAL

model_id = "ModelCloud/Llama-3.2-1B-Instruct-gptqmodel-4bit-vortex-v1"

# Use `lm-eval` as framework to evaluate the model
lm_eval_data = GPTQModel.eval(model_id,
                              framework=EVAL.LM_EVAL,
                              tasks=[EVAL.LM_EVAL.ARC_CHALLENGE])

# Use `evalplus` as framework to evaluate the model
evalplus_data = GPTQModel.eval(model_id,
                               framework=EVAL.EVALPLUS,
                               tasks=[EVAL.EVALPLUS.HUMAN])
```

`QuantizeConfig.dynamic` is a dynamic control that allows specific matching modules to be skipped for quantization (negative matching) or to have a unique `[bits, group_size, sym, desc_act, mse, pack_dtype]` property override per matching module vs base `QuantizeConfig` (positive match with override).
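The matching behavior can be sketched in a few lines of plain Python. This is only an illustration of the positive/negative semantics described above, under stated assumptions: rules are tried in insertion order, the first matching rule wins, and patterns are applied with `re.match`. The `resolve_dynamic` helper and the Llama-style module names are hypothetical, and the library's actual resolution logic may differ.

```python
import re


def resolve_dynamic(module_name, dynamic, base):
    """Return the effective config for a module, or None if it is skipped.

    Assumed semantics (illustrative only): a `-:` rule skips the module,
    while a `+:` or unprefixed rule overrides keys of the base config.
    """
    for pattern, overrides in dynamic.items():
        if pattern.startswith("-:"):
            if re.match(pattern[2:], module_name):
                return None  # negative match: do not quantize this module
            continue
        regex = pattern[2:] if pattern.startswith("+:") else pattern
        if re.match(regex, module_name):
            return {**base, **overrides}  # positive match with override
    return dict(base)  # no rule matched: use the base config


base = {"bits": 4, "group_size": 128}
dynamic = {
    r"+:.*\.18\..*gate.*": {"bits": 4, "group_size": 32},
    r".*\.19\..*gate.*": {"bits": 8, "group_size": 64},
    r"-:.*\.20\..*gate.*": {},
    r"-:.*down.*": {},
}

for name in (
    "model.layers.18.mlp.gate_proj",
    "model.layers.19.mlp.gate_proj",
    "model.layers.20.mlp.gate_proj",
    "model.layers.5.mlp.down_proj",
    "model.layers.5.mlp.up_proj",
):
    print(name, "->", resolve_dynamic(name, dynamic, base))
```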

Sample `QuantizeConfig.dynamic` usage:

```python
dynamic = {
    # `.*\.` matches the layers_node prefix
    # layer index starts at 0

    # positive match: layer 19, gate module
    r"+:.*\.18\..*gate.*": {"bits": 4, "group_size": 32},

    # positive match: layer 20, gate module (prefix defaults to positive if missing)
    r".*\.19\..*gate.*": {"bits": 8, "group_size": 64},

    # negative match: skip layer 21, gate module
    r"-:.*\.20\..*gate.*": {},

    # negative match: skip all down modules for all layers
    r"-:.*down.*": {},
}
```

Group Aware Reordering (GAR) is an enhanced activation reordering scheme developed by Intel to improve the accuracy of quantized models without incurring additional inference overhead. Unlike traditional activation reordering, GAR restricts permutations to within individual groups or rearrangements of entire groups. This ensures each group's associated scales and zero-points remain efficiently accessible during inference, thereby avoiding any inference-time overhead.
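The constraint GAR places on the permutation can be sketched as follows. This is a minimal illustration, not the GPT-QModel or Intel implementation: the per-channel `importance` scores, the choice to sort by descending importance, and ranking groups by their most important channel are all assumptions made for the example.

```python
def group_aware_permutation(importance, group_size):
    """Build a channel permutation that reorders channels only inside a
    group and reorders whole groups, never moving a channel across groups."""
    if len(importance) % group_size != 0:
        raise ValueError("channel count must be a multiple of group_size")
    groups = [
        list(range(start, start + group_size))
        for start in range(0, len(importance), group_size)
    ]
    # Local step: sort channels inside each group by descending importance.
    for group in groups:
        group.sort(key=lambda ch: importance[ch], reverse=True)
    # Global step: order whole groups by their most important channel.
    groups.sort(key=lambda group: importance[group[0]], reverse=True)
    return [ch for group in groups for ch in group]


# Hypothetical per-channel importance (e.g. an activation statistic).
importance = [0.1, 0.9, 0.3, 0.2, 2.0, 0.5, 0.4, 1.5]
perm = group_aware_permutation(importance, group_size=4)
print(perm)  # [4, 7, 5, 6, 1, 2, 3, 0]
```

Every block of `group_size` entries in the result still contains channels from a single original group, so one scale/zero-point pair per group remains valid without a per-channel lookup at inference time.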

How to enable GAR:

Set the `act_group_aware` parameter to `True` and disable the default activation reordering by setting `desc_act` to `False` in your `QuantizeConfig`. For example:

```python
quant_config = QuantizeConfig(bits=4, group_size=128, act_group_aware=True, desc_act=False)
```

Enable GPTAQ quantization by setting `gptaq = GPTAQConfig(...)`.

```python
# Note GPTAQ is currently experimental, not MoE compatible, and requires 2-4x more VRAM to execute
# We have many reports of GPTAQ not outperforming GPTQ, so please use it for testing only
# If OOM on 1 GPU, please set CUDA_VISIBLE_DEVICES=0,1 to 2 GPUs and gptqmodel will auto use second GPU
quant_config = QuantizeConfig(bits=4, group_size=128, gptaq=GPTAQConfig(alpha=0.25, device="auto"))
```

GPT-QModel has fully supplanted AutoGPTQ and AutoAWQ for HF Transformers/Optimum/Peft integration. Model inference has drop-in support with zero changes.

For model quantization, there are some config changes for AutoAWQ:

- AutoAWQ: the `version` property is now `format`. `zero_point` is now `sym` (Symmetric Quantization): `sym = True` is equivalent to `zero_point = False`.
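As a small illustration of the two renames listed above, the sketch below converts a dictionary of AutoAWQ-style settings. The `migrate_autoawq_config` helper is hypothetical and not part of GPT-QModel; it handles only the two properties named here, copies the `version` value verbatim as a placeholder, and passes any other keys through unchanged even though they may need their own changes.

```python
def migrate_autoawq_config(old):
    """Apply the two documented renames to an AutoAWQ-style config dict.

    `version` becomes `format`; `zero_point` becomes `sym` with inverted
    meaning (zero_point=True <-> sym=False). Other keys are left untouched.
    """
    new = {k: v for k, v in old.items() if k not in ("version", "zero_point")}
    if "version" in old:
        new["format"] = old["version"]
    if "zero_point" in old:
        new["sym"] = not old["zero_point"]
    return new


legacy = {"version": "gemm", "zero_point": True}
print(migrate_autoawq_config(legacy))  # {'format': 'gemm', 'sym': False}
```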

Models quantized by GPT-QModel are inference compatible with HF Transformers (minus dynamic), vLLM, and SGLang.

- GPTQ: IST-DASLab, main-author: Elias Frantar, arXiv:2210.17323

- AWQ: main-authors: Lin, Ji and Tang, Jiaming and Tang, Haotian and Yang, Shang and Dang, Xingyu and Han, Song

- EoRA: Nvidia, main-author: Shih-Yang Liu, arXiv preprint arXiv:2410.21271.

- GAR: Intel, main-authors: T. Gafni, A. Karnieli, Y. Hanani, arXiv:2505.14638

- GPTAQ: Yale Intelligent Computing Lab, main-author: Yuhang Li, arXiv:2504.02692.

- QQQ: Meituan, main-author Ying Zhang, arXiv:2406.09904

# GPT-QModel
@misc{qubitium2024gptqmodel,
  author = {ModelCloud.ai and qubitium@modelcloud.ai},
  title = {GPT-QModel},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/modelcloud/gptqmodel}},
  note = {Contact: qubitium@modelcloud.ai},
  year = {2024},
}

# GPTQ
@article{frantar-gptq,
  title={{GPTQ}: Accurate Post-training Compression for Generative Pretrained Transformers},
  author={Elias Frantar and Saleh Ashkboos and Torsten Hoefler and Dan Alistarh},
  journal={arXiv preprint arXiv:2210.17323},
  year={2022}
}

# AWQ
@article{lin2023awq,
  title={AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration},
  author={Lin, Ji and Tang, Jiaming and Tang, Haotian and Yang, Shang and Dang, Xingyu and Han, Song},
  journal={arXiv},
  year={2023}
}

# EoRA
@article{liu2024eora,
  title={EoRA: Training-free Compensation for Compressed LLM with Eigenspace Low-Rank Approximation},
  author={Liu, Shih-Yang and Yang, Huck and Wang, Chien-Yi and Fung, Nai Chit and Yin, Hongxu and Sakr, Charbel and Muralidharan, Saurav and Cheng, Kwang-Ting and Kautz, Jan and Wang, Yu-Chiang Frank and others},
  journal={arXiv preprint arXiv:2410.21271},
  year={2024}
}

# Group Aware Reordering (GAR)
@article{gar,
  title={Dual Precision Quantization for Efficient and Accurate Deep Neural Networks Inference},
  author={Gafni, T. and Karnieli, A. and Hanani, Y.},
  journal={arXiv preprint arXiv:2505.14638},
  note={CVPRW 2025},
  year={2025}
}

# GPTQ Marlin Kernel
@article{frantar2024marlin,
  title={MARLIN: Mixed-Precision Auto-Regressive Parallel Inference on Large Language Models},
  author={Frantar, Elias and Castro, Roberto L and Chen, Jiale and Hoefler, Torsten and Alistarh, Dan},
  journal={arXiv preprint arXiv:2408.11743},
  year={2024}
}

# GPTAQ
@article{li2025gptaq,
  title={GPTAQ: Efficient Finetuning-Free Quantization for Asymmetric Calibration},
  author={Yuhang Li and Ruokai Yin and Donghyun Lee and Shiting Xiao and Priyadarshini Panda},
  journal={arXiv preprint arXiv:2504.02692},
  year={2025}
}

# QQQ
@article{zhang2024qqq,
  title={QQQ: Quality Quattuor-Bit Quantization for Large Language Models},
  author={Ying Zhang and Peng Zhang and Mincong Huang and Jingyang Xiang and Yujie Wang and Chao Wang and Yineng Zhang and Lei Yu and Chuan Liu and Wei Lin},
  journal={arXiv preprint arXiv:2406.09904},
  year={2024}
}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for GPTQModel

Similar Open Source Tools

GPTQModel

GPTQModel is an easy-to-use LLM quantization and inference toolkit based on the GPTQ algorithm. It provides support for weight-only quantization and offers features such as dynamic per layer/module flexible quantization, sharding support, and auto-heal quantization errors. The toolkit aims to ensure inference compatibility with HF Transformers, vLLM, and SGLang. It offers various model supports, faster quant inference, better quality quants, and security features like hash check of model weights. GPTQModel also focuses on faster quantization, improved quant quality as measured by PPL, and backports bug fixes from AutoGPTQ.

litserve

LitServe is a high-throughput serving engine for deploying AI models at scale. It generates an API endpoint for a model, handles batching, streaming, autoscaling across CPU/GPUs, and more. Built for enterprise scale, it supports every framework like PyTorch, JAX, Tensorflow, and more. LitServe is designed to let users focus on model performance, not the serving boilerplate. It is like PyTorch Lightning for model serving but with broader framework support and scalability.

HuixiangDou

HuixiangDou is a **group chat** assistant based on LLM (Large Language Model). Advantages: 1. Design a two-stage pipeline of rejection and response to cope with group chat scenario, answer user questions without message flooding, see arxiv2401.08772 2. Low cost, requiring only 1.5GB memory and no need for training 3. Offers a complete suite of Web, Android, and pipeline source code, which is industrial-grade and commercially viable Check out the scenes in which HuixiangDou are running and join WeChat Group to try AI assistant inside. If this helps you, please give it a star ⭐

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

RepairAgent

RepairAgent is an autonomous LLM-based agent for automated program repair targeting the Defects4J benchmark. It uses an LLM-driven loop to localize, analyze, and fix Java bugs. The tool requires Docker, VS Code with Dev Containers extension, OpenAI API key, disk space of ~40 GB, and internet access. Users can get started with RepairAgent using either VS Code Dev Container or Docker Image. Running RepairAgent involves checking out the buggy project version, autonomous bug analysis, fix candidate generation, and testing against the project's test suite. Users can configure hyperparameters for budget control, repetition handling, commands limit, and external fix strategy. The tool provides output structure, experiment overview, individual analysis scripts, and data on fixed bugs from the Defects4J dataset.

TPI-LLM

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system designed to bring LLM functions to low-resource edge devices, addressing privacy concerns by enabling LLM inference on edge devices with limited resources. It leverages multiple edge devices for inference through tensor parallelism and a sliding window memory scheduler to minimize memory usage. TPI-LLM demonstrates significant improvements in TTFT and token latency compared to other models, and plans to support infinitely large models with low token latency in the future.

MHA2MLA

This repository contains the code for the paper 'Towards Economical Inference: Enabling DeepSeek's Multi-Head Latent Attention in Any Transformer-based LLMs'. It provides tools for fine-tuning and evaluating Llama models, converting models between different frameworks, processing datasets, and performing specific model training tasks like Partial-RoPE Fine-Tuning and Multiple-Head Latent Attention Fine-Tuning. The repository also includes commands for model evaluation using Lighteval and LongBench, along with necessary environment setup instructions.

vue-markdown-render

vue-renderer-markdown is a high-performance tool designed for streaming and rendering Markdown content in real-time. It is optimized for handling incomplete or rapidly changing Markdown blocks, making it ideal for scenarios like AI model responses, live content updates, and real-time Markdown rendering. The tool offers features such as ultra-high performance, streaming-first design, Monaco integration, progressive Mermaid rendering, custom components integration, complete Markdown support, real-time updates, TypeScript support, and zero configuration setup. It solves challenges like incomplete syntax blocks, rapid content changes, cursor positioning complexities, and graceful handling of partial tokens with a streaming-optimized architecture.

vicinity

Vicinity is a lightweight, low-dependency vector store that provides a unified interface for nearest neighbor search with support for different backends and evaluation. It simplifies the process of comparing and evaluating different nearest neighbors packages by offering a simple and intuitive API. Users can easily experiment with various indexing methods and distance metrics to choose the best one for their use case. Vicinity also allows for measuring performance metrics like queries per second and recall.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

mdream

Mdream is a lightweight and user-friendly markdown editor designed for developers and writers. It provides a simple and intuitive interface for creating and editing markdown files with real-time preview. The tool offers syntax highlighting, markdown formatting options, and the ability to export files in various formats. Mdream aims to streamline the writing process and enhance productivity for individuals working with markdown documents.

crssnt

crssnt is a tool that converts RSS/Atom feeds into LLM-friendly Markdown or JSON, simplifying integration of feed content into AI workflows. It supports LLM-optimized conversion, multiple output formats, feed aggregation, and Google Sheet support. Users can access various endpoints for feed conversion and Google Sheet processing, with query parameters for customization. The tool processes user-provided URLs transiently without storing feed data, and can be self-hosted as Firebase Cloud Functions. Contributions are welcome under the MIT License.

OSA

OSA (Open-Source-Advisor) is a tool designed to improve the quality of scientific open source projects by automating the generation of README files, documentation, CI/CD scripts, and providing advice and recommendations for repositories. It supports various LLMs accessible via API, local servers, or osa_bot hosted on ITMO servers. OSA is currently under development with features like README file generation, documentation generation, automatic implementation of changes, LLM integration, and GitHub Action Workflow generation. It requires Python 3.10 or higher and tokens for GitHub/GitLab/Gitverse and LLM API key. Users can install OSA using PyPi or build from source, and run it using CLI commands or Docker containers.

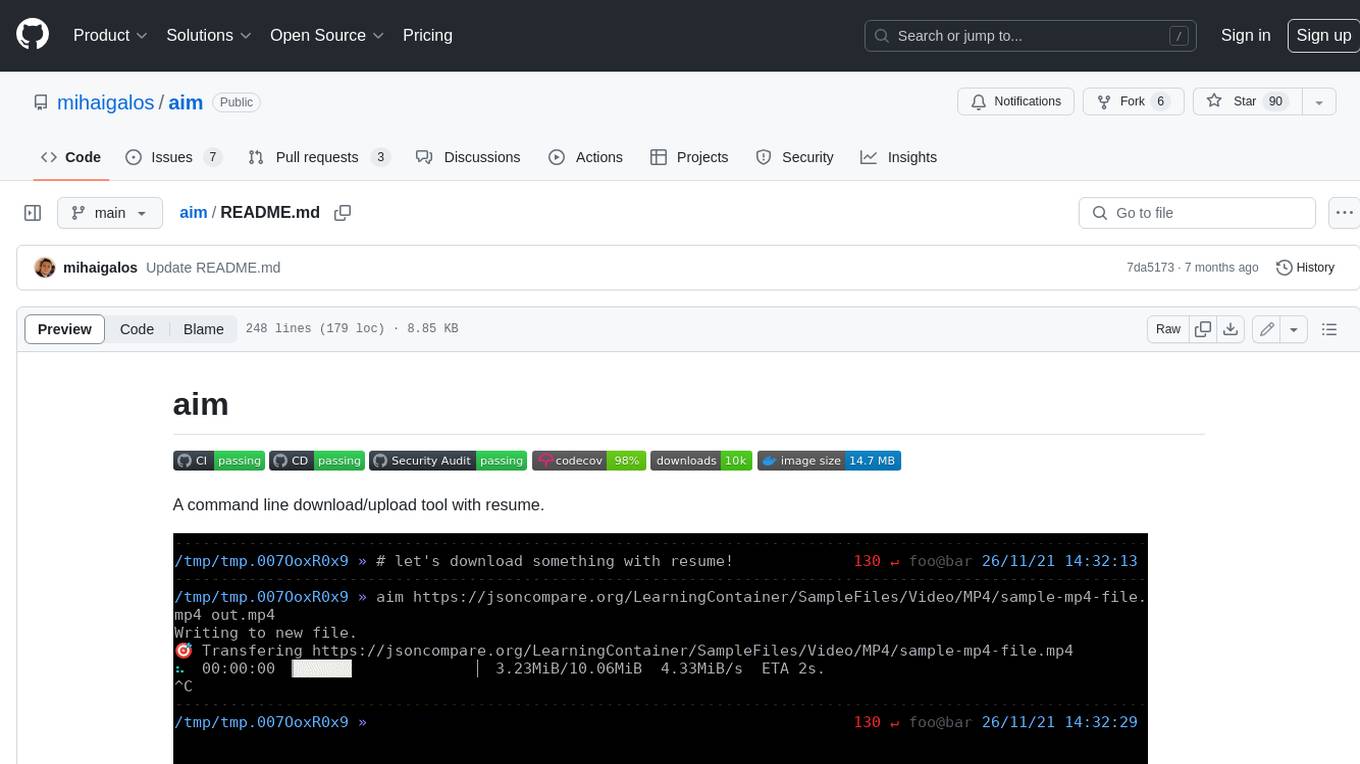

aim

Aim is a command-line tool for downloading and uploading files with resume support. It supports various protocols including HTTP, FTP, SFTP, SSH, and S3. Aim features an interactive mode for easy navigation and selection of files, as well as the ability to share folders over HTTP for easy access from other devices. Additionally, it offers customizable progress indicators and output formats, and can be integrated with other commands through piping. Aim can be installed via pre-built binaries or by compiling from source, and is also available as a Docker image for platform-independent usage.

localgpt

LocalGPT is a local device focused AI assistant built in Rust, providing persistent memory and autonomous tasks. It runs entirely on your machine, ensuring your memory data stays private. The tool offers a markdown-based knowledge store with full-text and semantic search capabilities, hybrid web search, and multiple interfaces including CLI, web UI, desktop GUI, and Telegram bot. It supports multiple LLM providers, is OpenClaw compatible, and offers defense-in-depth security features such as signed policy files, kernel-enforced sandbox, and prompt injection defenses. Users can configure web search providers, use OAuth subscription plans, and access the tool from Telegram for chat, tool use, and memory support.

infinity

Infinity is a high-throughput, low-latency REST API for serving vector embeddings, supporting all sentence-transformer models and frameworks. It is developed under the MIT License and powers inference behind Gradient.ai. The API allows users to deploy models from SentenceTransformers, offers fast inference backends utilizing various accelerators, dynamic batching for efficient processing, correct and tested implementation, and easy-to-use API built on FastAPI with Swagger documentation. Users can embed text, rerank documents, and perform text classification tasks using the tool. Infinity supports various models from Huggingface and provides flexibility in deployment via CLI, Docker, Python API, and cloud services like dstack. The tool is suitable for tasks like embedding, reranking, and text classification.

For similar tasks

GPTQModel

GPTQModel is an easy-to-use LLM quantization and inference toolkit based on the GPTQ algorithm. It provides support for weight-only quantization and offers features such as dynamic per layer/module flexible quantization, sharding support, and auto-heal quantization errors. The toolkit aims to ensure inference compatibility with HF Transformers, vLLM, and SGLang. It offers various model supports, faster quant inference, better quality quants, and security features like hash check of model weights. GPTQModel also focuses on faster quantization, improved quant quality as measured by PPL, and backports bug fixes from AutoGPTQ.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

Firefly

Firefly is an open-source large model training project that supports pre-training, fine-tuning, and DPO of mainstream large models. It includes models like Llama3, Gemma, Qwen1.5, MiniCPM, Llama, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. The project supports full-parameter training, LoRA, QLoRA efficient training, and various tasks such as pre-training, SFT, and DPO. Suitable for users with limited training resources, QLoRA is recommended for fine-tuning instructions. The project has achieved good results on the Open LLM Leaderboard with QLoRA training process validation. The latest version has significant updates and adaptations for different chat model templates.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

AI-TOD

AI-TOD is a dataset for tiny object detection in aerial images, containing 700,621 object instances across 28,036 images. Objects in AI-TOD are smaller with a mean size of 12.8 pixels compared to other aerial image datasets. To use AI-TOD, download xView training set and AI-TOD_wo_xview, then generate the complete dataset using the provided synthesis tool. The dataset is publicly available for academic and research purposes under CC BY-NC-SA 4.0 license.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.