star-vector

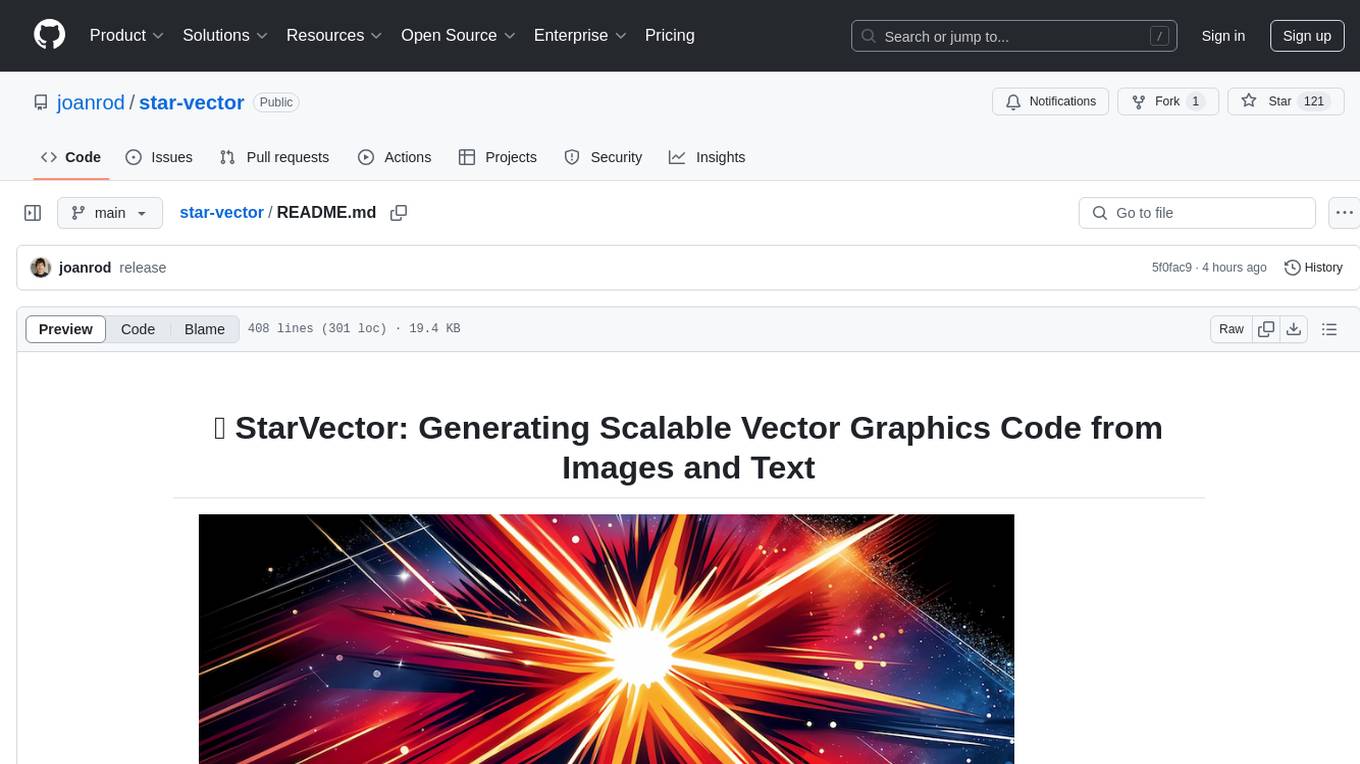

StarVector is a foundation model for SVG generation that transforms vectorization into a code generation task. Using a vision-language modeling architecture, StarVector processes both visual and textual inputs to produce high-quality SVG code with remarkable precision.

Stars: 118

StarVector is a multimodal vision-language model for Scalable Vector Graphics (SVG) generation. It can be used to perform image2SVG and text2SVG generation. StarVector works directly in the SVG code space, leveraging visual understanding to apply accurate SVG primitives. It achieves state-of-the-art performance in producing compact and semantically rich SVGs. The tool provides Hugging Face model checkpoints for image2SVG vectorization, with models like StarVector-8B and StarVector-1B. It also offers datasets like SVG-Stack, SVG-Fonts, SVG-Icons, SVG-Emoji, and SVG-Diagrams for evaluation. StarVector can be trained using Deepspeed or FSDP for tasks like Image2SVG and Text2SVG generation. The tool provides a demo with options for HuggingFace generation or VLLM backend for faster generation speed.

README:

- March 2025: StarVector Accepted at CVPR 2025,

StarVector is a multimodal vision-language model for Scalable Vector Graphics (SVG) generation. It can be used to perform image2SVG and text2SVG generation. We pose image generation as a code generation task, using the power of multimodal VLMs

Abstract: Scalable Vector Graphics (SVGs) are vital for modern image rendering due to their scalability and versatility. Previous SVG generation methods have focused on curve-based vectorization, lacking semantic understanding, often producing artifacts, and struggling with SVG primitives beyond \textit{path} curves. To address these issues, we introduce StarVector, a multimodal large language model for SVG generation. It performs image vectorization by understanding image semantics and using SVG primitives for compact, precise outputs. Unlike traditional methods, StarVector works directly in the SVG code space, leveraging visual understanding to apply accurate SVG primitives. To train StarVector, we create SVG-Stack, a diverse dataset of 2M samples that enables generalization across vectorization tasks and precise use of primitives like ellipses, polygons, and text. We address challenges in SVG evaluation, showing that pixel-based metrics like MSE fail to capture the unique qualities of vector graphics. We introduce SVG-Bench, a benchmark across 10 datasets, and 3 tasks: Image-to-SVG, Text-to-SVG generation, and diagram generation. Using this setup, StarVector achieves state-of-the-art performance, producing more compact and semantically rich SVGs.

StarVector uses a multimodal architecture to process images and text. When performing Image-to-SVG (or image vectorization), the image is projected into visual tokens, and SVG code is generated. When performing Text-to-SVG, the model only recieves the text instruction (no image is provided), and a novel SVG is created. The LLM is based of StarCoder, which we leverage to transfer coding skills to SVG generation.

- 💿 Installation

- 🏎️ Quick Start - Image2SVG Generation

- 🎨 Models

- 📊 Datasets

- 🏋️♂️ Training

- 🏆 Evaluation on SVG-Bench

- 🧩 Demo

- 📚 Citation

- 📝 License

- Clone this repository and navigate to star-vector folder

git clone https://github.com/joanrod/star-vector.git

cd star-vector- Install Package

conda create -n starvector python=3.11.3 -y

conda activate starvector

pip install --upgrade pip # enable PEP 660 support

pip install -e .- Install additional packages for training

pip install -e ".[train]"

git pull

pip install -e .from PIL import Image

from starvector.model.starvector_arch import StarVectorForCausalLM

from starvector.data.util import process_and_rasterize_svg

model_name = "starvector/starvector-8b-im2svg"

starvector = StarVectorForCausalLM.from_pretrained(model_name)

starvector.cuda()

starvector.eval()

image_pil = Image.open('assets/examples/sample-0.png')

image = starvector.process_images([image_pil])[0].cuda()

batch = {"image": image}

raw_svg = starvector.generate_im2svg(batch, max_length=1000)[0]

svg, raster_image = process_and_rasterize_svg(raw_svg)from PIL import Image

from transformers import AutoModelForCausalLM, AutoTokenizer, AutoProcessor

from starvector.data.util import process_and_rasterize_svg

import torch

model_name = "starvector/starvector-8b-im2svg"

starvector = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16, trust_remote_code=True)

processor = starvector.model.processor

tokenizer = starvector.model.svg_transformer.tokenizer

starvector.cuda()

starvector.eval()

image_pil = Image.open('assets/examples/sample-18.png')

image = processor(image_pil, return_tensors="pt")['pixel_values'].cuda()

if not image.shape[0] == 1:

image = image.squeeze(0)

batch = {"image": image}

raw_svg = starvector.generate_im2svg(batch, max_length=4000)[0]

svg, raster_image = process_and_rasterize_svg(raw_svg)We provide Hugging Face 🤗 model checkpoints for image2SVG vectorization, for 💫 StarVector-8B and 💫 StarVector-1B. These are the results on SVG-Bench, using the DinoScore metric.

| Method | SVG-Stack | SVG-Fonts | SVG-Icons | SVG-Emoji | SVG-Diagrams |

|---|---|---|---|---|---|

| AutoTrace | 0.942 | 0.954 | 0.946 | 0.975 | 0.874 |

| Potrace | 0.898 | 0.967 | 0.972 | 0.882 | 0.875 |

| VTracer | 0.954 | 0.964 | 0.940 | 0.981 | 0.882 |

| Im2Vec | 0.692 | 0.733 | 0.754 | 0.732 | - |

| LIVE | 0.934 | 0.956 | 0.959 | 0.969 | 0.870 |

| DiffVG | 0.810 | 0.821 | 0.952 | 0.814 | 0.822 |

| GPT-4-V | 0.852 | 0.842 | 0.848 | 0.850 | - |

| 💫 StarVector-1B (🤗 Link) | 0.926 | 0.978 | 0.975 | 0.929 | 0.943 |

| 💫 StarVector-8B (🤗 Link) | 0.966 | 0.982 | 0.984 | 0.981 | 0.959 |

Note: StarVector models will not work for natural images or illustrations, as they have not been trained on those images. They excel in vectorizing icons, logotypes, technical diagrams, graphs, and charts.

SVG-Bench is a benchmark for evaluating SVG generation models. It contains 10 datasets, and 3 tasks: Image-to-SVG, Text-to-SVG, and Diagram-to-SVG.

See our Huggingface 🤗 Dataset Collection

| Dataset | Train | Val | Test | Token Length | SVG Primitives | Annotation |

|---|---|---|---|---|---|---|

| SVG-Stack (🤗 Link) | 2.1M | 108k | 5.7k | 1,822 ± 1,808 | All | Captions |

| SVG-Stack_sim (🤗 Link) | 601k | 30.1k | 1.5k | 2k ± 918 | Vector path | - |

| SVG-Diagrams (🤗 Link) | - | - | 472 | 3,486 ± 1,918 | All | - |

| SVG-Fonts (🤗 Link) | 1.8M | 91.5k | 4.8k | 2,121 ± 1,868 | Vector path | Font letter |

| SVG-Fonts_sim (🤗 Link) | 1.4M | 71.7k | 3.7k | 1,722 ± 723 | Vector path | Font letter |

| SVG-Emoji (🤗 Link) | 8.7k | 667 | 668 | 2,551 ± 1,805 | All | - |

| SVG-Emoji_sim (🤗 Link) | 580 | 57 | 96 | 2,448 ± 1,026 | Vector Path | - |

| SVG-Icons (🤗 Link) | 80.4k | 6.2k | 2.4k | 2,449 ± 1,543 | Vector path | - |

| SVG-Icons_sim (🤗 Link) | 80,435 | 2,836 | 1,277 | 2,005 ± 824 | Vector path | - |

| SVG-FIGR (🤗 Link) | 270k | 27k | 3k | 5,342 ± 2,345 | Vector path | Class, Caption |

We offer a summary of statistics about the datasets used in our training and evaluation experiments. This datasets are included in SVG-Bench. The subscript sim stands for the simplified version of the dataset, as required by some baselines.

pip install -e ".[train]"We recommend setting the following environment variables:

export HF_HOME=<path to the folder where you want to store the models>

export HF_TOKEN=<your huggingface token>

export WANDB_API_KEY=<your wandb token>

export OUTPUT_DIR=<path/to/output>cd the root of the repository.

cd star-vectorWe have different training approaches for StarVector-1B and StarVector-8B. StarVector-1B can be trained using Deepspeed, while StarVector-8B requires FSDP.

You can use the following command to train StarVector-1B on SVG-Stack for the Image2SVG vectorization task, using Deepspeed and Accelerate

# StarVector-1B

accelerate launch --config_file configs/accelerate/deepspeed-8-gpu.yaml starvector/train/train.py config=configs/models/starvector-1b/im2svg-stack.yamlYou can use the following command to train StarVector-8B on SVG-Stack for the Image2SVG vectorization task, using FSDP and Accelerate. We provide the torchrun command to support multi-nodes and multi-GPUs.

# StarVector-8B

torchrun \

--nproc-per-node=8 \

--nnodes=1 \

starvector/train/train.py \

config=configs/models/starvector-8b/im2svg-stack.yamlAfter pretraining StarVector on image vectorization, we finetune it on additional SVG tasks like Text2SVG, and SVG-Bench datasets.

# StarVector-1B

accelerate launch --config_file config/accelerate/deepspeed-8-gpu.yaml starvector/train/train.py config=configs/models/starvector-1b/text2svg-stack.yaml

# StarVector-8B

torchrun \

--nproc-per-node=8 \

--nnodes=1 \

starvector/train/train.py \

config=configs/models/starvector-8b/text2svg-stack.yaml# StarVector-1B

accelerate launch --config_file config/accelerate/deepspeed-8-gpu.yaml starvector/train/train.py config=configs/models/starvector-1b/im2svg-{fonts,icons,emoji}.yaml

# StarVector-8B

torchrun \

--nproc-per-node=8 \

--nnodes=1 \

starvector/train/train.py \

config=configs/models/starvector-8b/im2svg-{fonts,icons,emoji}.yamlWe also provide shell scripts in scripts/train/*

We validate StarVector on ⭐ SVG-Bench Benchmark. We provide the SVGValidator class that allows you to run StarVector using 1) the HuggingFace generation backend or 2) the VLLM backend. The later is substantially faster thanks to the use of Paged Attention.

Let's start with the evaluation for StarVector-1B and StarVector-8B on SVG-Stack, using the HuggingFace generation backend (StarVectorHFAPIValidator). To override the input arguments, you can add cli args following the yaml file structure.

# StarVector-1B on SVG-Stack, using the HuggingFace backend

python starvector/validation/validate.py \

config=configs/generation/hf/starvector-1b/im2svg.yaml \

dataset.name=starvector/svg-stack

# StarVector-8B on SVG-Stack, using the vanilla HuggingFace generation API

python starvector/validation/validate.py \

config=configs/generation/hf/starvector-8b/im2svg.yaml \

dataset.name=starvector/svg-stackFor using the vLLM backend (StarVectorVLLMAPIValidator), first install our StarVector fork of VLLM, here.

git clone https://github.com/starvector/vllm.git

cd vllm

pip install -e .Then, launch the using the vllm config file (it uses StarVectorVLLMValidator):

# StarVector-1B

python starvector/validation/validate.py \

config=configs/generation/vllm/starvector-1b/im2svg.yaml \

dataset.name=starvector/svg-stack

# StarVector-8B

python starvector/validation/validate.py \

config=configs/generation/vllm/starvector-8b/im2svg.yaml \

dataset.name=starvector/svg-stackWe provide evaluation scripts in scripts/eval/*

The demo provides two options for converting images to SVG code:

- HuggingFace generation functionality

- VLLM (recommended) - offers faster generation speed

We provide a Gradio web UI for you to play with our model.

python -m starvector.serve.controller --host 0.0.0.0 --port 10000python -m starvector.serve.gradio_web_server --controller http://localhost:10000 --model-list-mode reload --port 7000You just launched the Gradio web interface. Now, you can open the web interface with the URL printed on the screen. You may notice that there is no model in the model list. Do not worry, as we have not launched any model worker yet. It will be automatically updated when you launch a model worker.

This is the actual worker that performs the inference on the GPU. Each worker is responsible for a single model specified in --model-path.

python -m starvector.serve.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port 40000 --worker http://localhost:40000 --model-path joanrodai/starvector-1.4bWait until the process finishes loading the model and you see "Uvicorn running on ...". Now, refresh your Gradio web UI, and you will see the model you just launched in the model list.

You can launch as many workers as you want, and compare between different model checkpoints in the same Gradio interface. Please keep the --controller the same, and modify the --port and --worker to a different port number for each worker.

vllm serve starvector/starvector-8b-im2svg --chat-template configs/chat-template.jinja --trust-remote-code --port 8001 --max-model-len 16000

python -m starvector.serve.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port <different from 40000, say 40001> --worker http://localhost:<change accordingly, i.e. 40001> --model-path <ckpt2>- Remember to clone the starvector/vllm fork (it has modifications for starvector).

git clone https://github.com/starvector/vllm.git

cd vllm

pip install -e .- Call this to launch the VLLM endpoint

vllm serve starvector/starvector-1b-im2svg --chat-template configs/chat-template.jinja --trust-remote-code --port 8000 --max-model-len 8192- Create the demo for VLLM

python -m starvector.serve.vllm_api_gradio.controller --host 0.0.0.0 --port 10000

python -m starvector.serve.vllm_api_gradio.gradio_web_server --controller http://localhost:10000 --model-list-mode reload --port 7000

python -m starvector.serve.vllm_api_gradio.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port 40000 --worker http://localhost:40000 --model-name starvector/starvector-1b-im2svg --vllm-base-url http://localhost:8000- Add more models by serving them with VLLM and calling a new model worker

vllm serve starvector/starvector-8b-im2svg --chat-template configs/chat-template.jinja --trust-remote-code --port 8001 --max-model-len 16384

python -m starvector.serve.vllm_api_gradio.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port 40001 --worker http://localhost:40001 --model-name starvector/starvector-8b-im2svg --vllm-base-url http://localhost:8001@misc{rodriguez2024starvector,

title={StarVector: Generating Scalable Vector Graphics Code from Images and Text},

author={Juan A. Rodriguez and Abhay Puri and Shubham Agarwal and Issam H. Laradji and Pau Rodriguez and Sai Rajeswar and David Vazquez and Christopher Pal and Marco Pedersoli},

year={2024},

eprint={2312.11556},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2312.11556},

}

This project is licensed under the Apache License, Version 2.0 - see the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for star-vector

Similar Open Source Tools

star-vector

StarVector is a multimodal vision-language model for Scalable Vector Graphics (SVG) generation. It can be used to perform image2SVG and text2SVG generation. StarVector works directly in the SVG code space, leveraging visual understanding to apply accurate SVG primitives. It achieves state-of-the-art performance in producing compact and semantically rich SVGs. The tool provides Hugging Face model checkpoints for image2SVG vectorization, with models like StarVector-8B and StarVector-1B. It also offers datasets like SVG-Stack, SVG-Fonts, SVG-Icons, SVG-Emoji, and SVG-Diagrams for evaluation. StarVector can be trained using Deepspeed or FSDP for tasks like Image2SVG and Text2SVG generation. The tool provides a demo with options for HuggingFace generation or VLLM backend for faster generation speed.

ai

The react-native-ai repository allows users to run Large Language Models (LLM) locally in a React Native app using the Universal MLC LLM Engine with compatibility for Vercel AI SDK. Please note that this project is experimental and not ready for production. The repository is licensed under MIT and was created with create-react-native-library.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

TokenPacker

TokenPacker is a novel visual projector that compresses visual tokens by 75%∼89% with high efficiency. It adopts a 'coarse-to-fine' scheme to generate condensed visual tokens, achieving comparable or better performance across diverse benchmarks. The tool includes TokenPacker for general use and TokenPacker-HD for high-resolution image understanding. It provides training scripts, checkpoints, and supports various compression ratios and patch numbers.

everything-claude-code

The 'Everything Claude Code' repository is a comprehensive collection of production-ready agents, skills, hooks, commands, rules, and MCP configurations developed over 10+ months. It includes guides for setup, foundations, and philosophy, as well as detailed explanations of various topics such as token optimization, memory persistence, continuous learning, verification loops, parallelization, and subagent orchestration. The repository also provides updates on bug fixes, multi-language rules, installation wizard, PM2 support, OpenCode plugin integration, unified commands and skills, and cross-platform support. It offers a quick start guide for installation, ecosystem tools like Skill Creator and Continuous Learning v2, requirements for CLI version compatibility, key concepts like agents, skills, hooks, and rules, running tests, contributing guidelines, OpenCode support, background information, important notes on context window management and customization, star history chart, and relevant links.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

ShapeLLM

ShapeLLM is the first 3D Multimodal Large Language Model designed for embodied interaction, exploring a universal 3D object understanding with 3D point clouds and languages. It supports single-view colored point cloud input and introduces a robust 3D QA benchmark, 3D MM-Vet, encompassing various variants. The model extends the powerful point encoder architecture, ReCon++, achieving state-of-the-art performance across a range of representation learning tasks. ShapeLLM can be used for tasks such as training, zero-shot understanding, visual grounding, few-shot learning, and zero-shot learning on 3D MM-Vet.

VILA

VILA is a family of open Vision Language Models optimized for efficient video understanding and multi-image understanding. It includes models like NVILA, LongVILA, VILA-M3, VILA-U, and VILA-1.5, each offering specific features and capabilities. The project focuses on efficiency, accuracy, and performance in various tasks related to video, image, and language understanding and generation. VILA models are designed to be deployable on diverse NVIDIA GPUs and support long-context video understanding, medical applications, and multi-modal design.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

terminator

Terminator is an AI-powered desktop automation tool that is open source, MIT-licensed, and cross-platform. It works across all apps and browsers, inspired by GitHub Actions & Playwright. It is 100x faster than generic AI agents, with over 95% success rate and no vendor lock-in. Users can create automations that work across any desktop app or browser, achieve high success rates without costly consultant armies, and pre-train workflows as deterministic code.

tokscale

Tokscale is a high-performance CLI tool and visualization dashboard for tracking token usage and costs across multiple AI coding agents. It helps monitor and analyze token consumption from various AI coding tools, providing real-time pricing calculations using LiteLLM's pricing data. Inspired by the Kardashev scale, Tokscale measures token consumption as users scale the ranks of AI-augmented development. It offers interactive TUI mode, multi-platform support, real-time pricing, detailed breakdowns, web visualization, flexible filtering, and social platform features.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

kernel-memory

Kernel Memory (KM) is a multi-modal AI Service specialized in the efficient indexing of datasets through custom continuous data hybrid pipelines, with support for Retrieval Augmented Generation (RAG), synthetic memory, prompt engineering, and custom semantic memory processing. KM is available as a Web Service, as a Docker container, a Plugin for ChatGPT/Copilot/Semantic Kernel, and as a .NET library for embedded applications. Utilizing advanced embeddings and LLMs, the system enables Natural Language querying for obtaining answers from the indexed data, complete with citations and links to the original sources. Designed for seamless integration as a Plugin with Semantic Kernel, Microsoft Copilot and ChatGPT, Kernel Memory enhances data-driven features in applications built for most popular AI platforms.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

For similar tasks

star-vector

StarVector is a multimodal vision-language model for Scalable Vector Graphics (SVG) generation. It can be used to perform image2SVG and text2SVG generation. StarVector works directly in the SVG code space, leveraging visual understanding to apply accurate SVG primitives. It achieves state-of-the-art performance in producing compact and semantically rich SVGs. The tool provides Hugging Face model checkpoints for image2SVG vectorization, with models like StarVector-8B and StarVector-1B. It also offers datasets like SVG-Stack, SVG-Fonts, SVG-Icons, SVG-Emoji, and SVG-Diagrams for evaluation. StarVector can be trained using Deepspeed or FSDP for tasks like Image2SVG and Text2SVG generation. The tool provides a demo with options for HuggingFace generation or VLLM backend for faster generation speed.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.