nekro-agent

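A multi-user, cross-platform chatbot that combines code execution with high extensibility: sandbox-driven | visual | highly extensible | multimodal. An Extensible Multi-person Interactive Agent Framework Powered by LLM Code Generation. Supports: QQ, Discord, Minecraft, Bilibili Live, SSE (SDK) ...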

Stars: 502

Nekro Agent is an AI chat plugin and proxy execution bot that is highly scalable, offers high freedom, and has minimal deployment requirements. It features context-aware chat for group/private chats, custom character settings, sandboxed execution environment, interactive image resource handling, customizable extension development interface, easy deployment with docker-compose, integration with Stable Diffusion for AI drawing capabilities, support for various file types interaction, hot configuration updates and command control, native multimodal understanding, visual application management control panel, CoT (Chain of Thought) support, self-triggered timers and holiday greetings, event notification understanding, and more. It allows for third-party extensions and AI-generated extensions, and includes features like automatic context trigger based on LLM, and a variety of basic commands for bot administrators.

README:


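🚅 An agent-oriented rewrite and successor to Naturel GPT, one of the first LLM chatbot applications 🌈

📚 The Nekro Agent documentation center provides a complete getting-started guide and development documentation 📚

💬 Technical discussion and Q&A -> join the community QQ groups: 636925153 (group 1 | almost full) | 679808796 (group 2 | newly opened) | Discord Channel 🗨️

🚀 The NekroAI cloud community offers real-time, free sharing of plugins and personas, plus ecosystem observability features for you to try! 🚀

We are pleased to announce that we are planning an internationalization effort to better support our global community. We welcome developers from around the world to join us.

Chat with us on our official Discord server, share your ideas, and become an important part of Nekro Agent's future!

- Join our Discord: NekroAI Official

Through a powerful and flexible prompt-construction system, NekroAgent guides the AI to generate accurate code, executes it in a sandbox, and interacts with the real environment over RPC. Key features include:

Core execution and extensibility:

- Code generation and secure sandbox: guides the AI to generate code and runs it in a secure containerized environment, a solid foundation for complex tasks and method-level extensibility!

- Highly extensible plugin system: provides callbacks at key points, prompt injection, and custom sandbox methods; a super-intelligent hub solution that scales from small tool extensions to the elegant integration of large systems!

- Native multi-user interaction: efficiently understands what a group chat needs and stays interactive in complex multi-person conversations!

- Maximum cost reduction and efficiency: rejects ineffective prompts and the overuse of iterative agents, focusing on the underlying logic of solving the problem.

- Automatic error correction and feedback: invests heavily in prompt error-correction and feedback mechanisms to break out of loops of errors and repetition.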
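The core loop in the list above (plugin-provided sandbox methods, prompt injection, code execution, and error feedback) can be sketched roughly as follows. This is a minimal illustration, not Nekro Agent's actual API: `PluginRegistry`, `sandbox_method`, `build_prompt`, `run_generated_code`, and `send_text` are all hypothetical names, and the in-process `exec()` call only stands in for the containerized sandbox and RPC bridge that the project describes.

```python
import traceback
from typing import Callable, Dict, List


class PluginRegistry:
    """Hypothetical registry of sandbox methods and their prompt descriptions."""

    def __init__(self) -> None:
        self.sandbox_methods: Dict[str, Callable] = {}
        self.prompt_fragments: List[str] = []

    def sandbox_method(self, description: str) -> Callable:
        """Decorator: expose a function to generated code and describe it in the prompt."""
        def decorator(func: Callable) -> Callable:
            self.sandbox_methods[func.__name__] = func
            self.prompt_fragments.append(f"{func.__name__}: {description}")
            return func
        return decorator

    def build_prompt(self, user_message: str) -> str:
        """Prompt injection: list the plugin methods ahead of the user message."""
        methods = "\n".join(f"- {line}" for line in self.prompt_fragments)
        return f"Available methods:\n{methods}\n\nUser: {user_message}"


def run_generated_code(code: str, registry: PluginRegistry) -> dict:
    """Run model-generated code with the registered methods in scope.

    NOTE: exec() is NOT a security boundary. It is an assumption made for this
    sketch; a real deployment would run the code in an isolated container and
    forward method calls over RPC.
    """
    namespace = dict(registry.sandbox_methods)
    try:
        exec(code, namespace)
        return {"ok": True, "feedback": ""}
    except Exception:
        # Keep only the final traceback line so it can be fed back to the model.
        error = traceback.format_exc().strip().splitlines()[-1]
        return {"ok": False, "feedback": f"Your code failed with: {error}"}


registry = PluginRegistry()
sent: List[str] = []  # stands in for messages delivered to a chat platform


@registry.sandbox_method("send_text(chat_key, text) sends a text message to a chat")
def send_text(chat_key: str, text: str) -> None:
    sent.append(f"{chat_key}: {text}")


good = run_generated_code('send_text("group_1", "hello")', registry)
bad = run_generated_code('send_txt("group_1", "hello")', registry)  # misspelled call
```

Here `good["ok"]` is `True` and the message is recorded in `sent`, while `bad` carries a `NameError` description that could be appended to the next prompt so the model can correct the call instead of repeating it.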

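Connectivity and interaction:

- Multi-platform adapter architecture: natively supports OneBot v11, Minecraft, Bilibili Live, and other chat platforms through a unified development interface.

- Native multimodal visual understanding: handles images, files, and other resources for multimodal interaction with users.

- Event-driven asynchronous architecture: follows an async-first, efficient response mechanism.

Ecosystem and ease of use:

- Cloud resource sharing: plugins, personas, and more, backed by a strong and friendly community.

- Full-featured visual interface: provides a powerful application management and monitoring panel.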

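At its core, Nekro Agent is designed around input/output streams. An adapter is the bridge to an external platform and only needs to implement receiving that platform's messages (input stream) and sending them (output stream). All complex business logic, such as channel management, plugin execution, and sandbox calls, is taken over and handled automatically by Nekro Agent's core engine. This design keeps the system highly extensible and maintainable, letting developers focus on the adapter's own functionality without worrying about the complexity of the core.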
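As a rough sketch of this input/output stream split, the following asyncio example shows an adapter that only moves messages in and out while a stand-in engine does the rest. The method names `collect_message` and `forward_message` are borrowed from the architecture diagram; everything else (`Message`, `Adapter`, `EchoPlatformAdapter`, `core_engine`) is a hypothetical illustration and not the project's real adapter interface.

```python
import asyncio
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Message:
    adapter: str  # name of the adapter the message came from
    channel: str
    text: str


class Adapter(ABC):
    """Hypothetical base class: an adapter only moves messages in and out."""

    name: str

    def __init__(self, inbox: asyncio.Queue) -> None:
        self._inbox = inbox

    async def collect_message(self, channel: str, text: str) -> None:
        """Input stream: hand a platform message to the core engine."""
        await self._inbox.put(Message(self.name, channel, text))

    @abstractmethod
    async def forward_message(self, channel: str, text: str) -> None:
        """Output stream: deliver the engine's reply to the platform."""


class EchoPlatformAdapter(Adapter):
    """Toy adapter that records outgoing messages instead of calling a platform."""

    name = "echo"

    def __init__(self, inbox: asyncio.Queue) -> None:
        super().__init__(inbox)
        self.delivered: List[str] = []

    async def forward_message(self, channel: str, text: str) -> None:
        self.delivered.append(f"[{channel}] {text}")


async def core_engine(inbox: asyncio.Queue, adapters: Dict[str, Adapter]) -> None:
    """Stand-in for the dispatcher and shared services (channels, plugins, sandbox)."""
    while True:
        msg = await inbox.get()
        reply = f"received: {msg.text}"  # placeholder for the real business logic
        await adapters[msg.adapter].forward_message(msg.channel, reply)
        inbox.task_done()


async def demo() -> List[str]:
    inbox: asyncio.Queue = asyncio.Queue()
    adapter = EchoPlatformAdapter(inbox)
    engine = asyncio.create_task(core_engine(inbox, {adapter.name: adapter}))
    await adapter.collect_message("group_1", "ping")
    await inbox.join()  # wait until the engine has processed the message
    engine.cancel()
    return adapter.delivered


delivered = asyncio.run(demo())
```

Under these assumptions, supporting a new platform means writing one more `Adapter` subclass; the engine loop does not change.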

graph TD
    subgraph External Platforms
        P1["Platform A (e.g. QQ)"]
        P2["Platform B (e.g. Minecraft)"]
        P3[...]
    end
    subgraph Nekro Agent
        subgraph Adapter Layer
            A1["Adapter A"]
            A2["Adapter B"]
            A3[...]
        end
        subgraph Core Engine
            Input["Input stream (collect_message)"] --> Dispatcher
            Dispatcher{"Message<br>dispatcher"} --> Services["Core shared services<br>(channels, plugins, sandbox, etc.)"]
            Services --> Output["Output stream (forward_message)"]
        end
    end
    P1 <==> A1
    P2 <==> A2
    P3 <==> A3
    A1 --> Input
    A2 --> Input
    A3 --> Input
    Output --> A1
    Output --> A2
    Output --> A3

Nekro Agent provides a powerful and intuitive visual interface that makes it easy to manage and monitor all of the Agent's behavior and to apply fine-grained control over Agent behavior policies.

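Nekro Agent supports a wide range of use cases, from emotional companionship to complex task handling:

- 💖 Emotional interaction and companionship: a flexible persona system and advanced large language models deliver natural, fluent emotional interaction, with support for custom characters and extensible memory

- 📊 Data and file processing: efficiently processes images, documents, and data without additional software, making format conversion and content extraction easy

- 🎮 Creative and development assistance: from web application generation to data visualization, makes turning ideas into reality simple and efficient

- 🔄 Automation and integration: supports event subscription and push, plus multi-AI collaboration, for intelligent automation of complex tasks

- 📚 Learning and everyday assistant: from tutoring to content creation to smart-home control, improving quality of life across the board

👉 For more use cases and demos, visit the use case showcase page!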

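- ✅ Multi-platform adapters:

  - ✅ OneBot v11 (QQ)

  - ✅ Discord

  - ✅ Minecraft

  - ✅ Bilibili Live

  - ✅ SSE+SDK (Server-Sent Events + SDK)

  - ✅ ... more adapters in development

- ✅ Smart chat: context-aware chat in group and private chats

- ✅ Custom personas: supports custom personas and a cloud persona marketplace

- ✅ Sandboxed execution: a secure containerized code execution environment

- ✅ Multimodal interaction: supports sending, receiving, and processing images and file resources

- ✅ Plugin ecosystem: a highly extensible plugin system and a cloud plugin marketplace

- ✅ One-command deployment: container orchestration based on docker-compose

- ✅ Hot reload: hot configuration updates and command control

- ✅ Scheduled tasks: supports self-triggered timer plugins and holiday greetings

- ✅ WebUI: a full-featured visual application management control panel

- ✅ Event support: responds to a variety of platform event notifications and understands their context

- ✅ External chain-of-thought (CoT) support

- ✅ Mature third-party plugin support and AI-generated plugins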

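We provide several deployment options; see the Quick Start documentation for detailed tutorials:

sudo -E bash -c "$(curl -fsSL https://raw.githubusercontent.com/KroMiose/nekro-agent/main/docker/install.sh)" - --with-napcat

If you run into network problems downloading the script from GitHub, you can use the GitCode mirror in China:

sudo -E bash -c "$(curl -fsSL https://raw.gitcode.com/gh_mirrors/ne/nekro-agent/raw/main/docker/install.sh)" - --with-napcat

Note:

The --with-napcat flag in the commands above starts a fully automated standard deployment. Without it, the script starts in interactive mode; when prompted, choose Y to install Napcat.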

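We welcome all developers to contribute code or ideas to the Nekro Agent community!

Note: before contributing code, please read the terms in the license notice; by contributing, you agree to those terms.

For common questions and answers, see Troubleshooting and FAQ

See the Release page for important changelogs

NekroAgent is distributed under a custom open-source license (modified from the Apache License 2.0). Please use this project in compliance with the license!

Thanks to the following developers for their contributions to this project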


Alternative AI tools for nekro-agent

Similar Open Source Tools


AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It offers deployment with minimal dependencies, supports multiple tools like Terminal, Browser, File, Web Search, and messaging tools, allocates separate sandboxes for tasks, manages session history, supports stopping and interrupting conversations, file upload and download, and is multilingual. The system also provides user login and authentication. The project primarily relies on Docker for development and deployment, with model capability requirements and recommended Deepseek and GPT models.

cedar-OS

Cedar OS is an open-source framework that bridges the gap between AI agents and React applications, enabling the creation of AI-native applications where agents can interact with the application state like users. It focuses on providing intuitive and powerful ways for humans to interact with AI through features like full state integration, real-time streaming, voice-first design, and flexible architecture. Cedar OS offers production-ready chat components, agentic state management, context-aware mentions, voice integration, spells & quick actions, and fully customizable UI. It differentiates itself by offering a true AI-native architecture, developer-first experience, production-ready features, and extensibility. Built with TypeScript support, Cedar OS is designed for developers working on ambitious AI-native applications.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

blurr

Panda is a proactive, on-device AI agent for Android that autonomously understands natural language commands and operates your phone's UI to achieve them. It acts as a personal operator, handling complex, multi-step tasks across different applications. With intelligent UI automation, high-quality voice, and personalized local memory, Panda simplifies interactions with technology. Built on Kotlin, Panda's architecture includes Eyes & Hands for physical device connection, The Brain for reasoning, and The Agent for execution. The project is a proof-of-concept aiming to become an indispensable assistant.

onyx

Onyx is an open-source Gen-AI and Enterprise Search tool that serves as an AI Assistant connected to company documents, apps, and people. It provides a chat interface, can be deployed anywhere, and offers features like user authentication, role management, chat persistence, and UI for configuring AI Assistants. Onyx acts as an Enterprise Search tool across various workplace platforms, enabling users to access team-specific knowledge and perform tasks like document search, AI answers for natural language queries, and integration with common workplace tools like Slack, Google Drive, Confluence, etc.

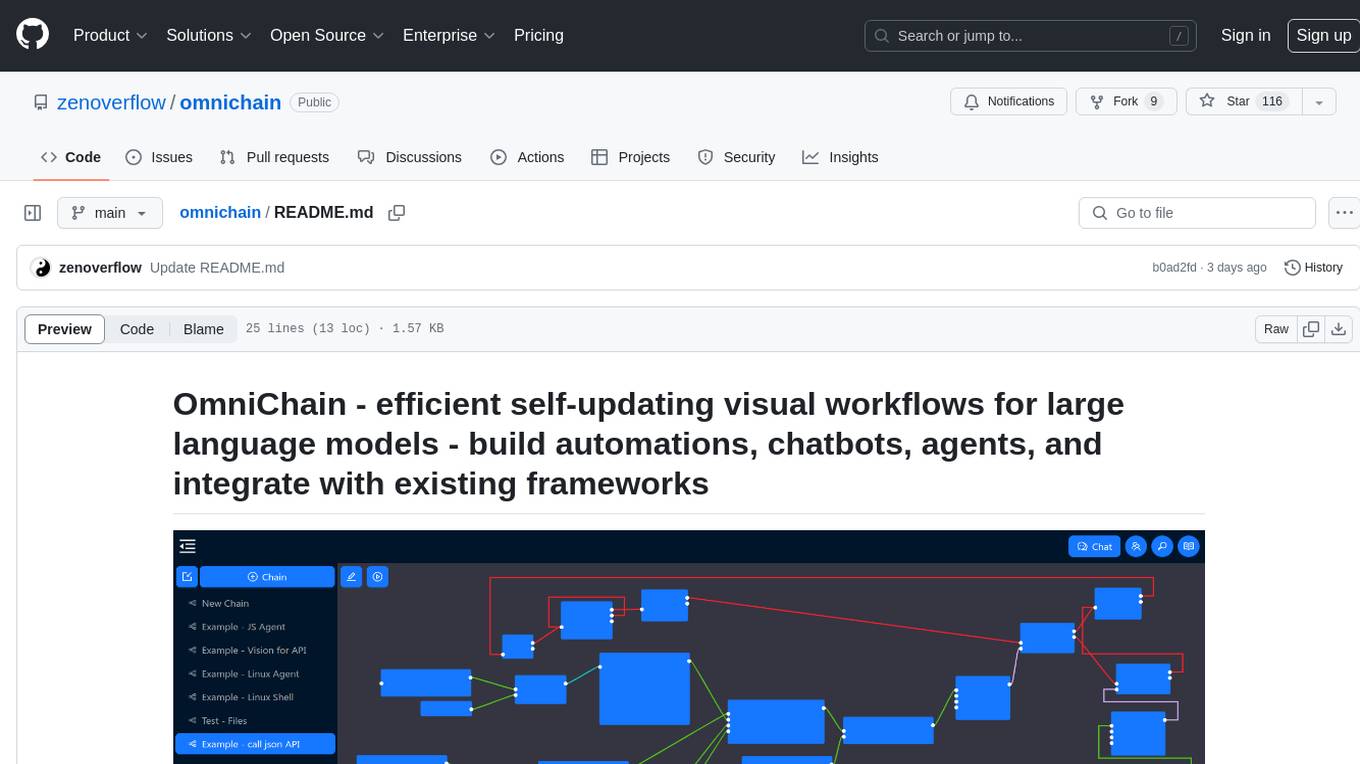

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

hyper-mcp

hyper-mcp is a fast and secure MCP server that enables adding AI capabilities to applications through WebAssembly plugins. It supports writing plugins in various languages, distributing them via standard OCI registries, and running them in resource-constrained environments. The tool offers sandboxing with WASM for limiting access, cross-platform compatibility, and deployment flexibility. Security features include sandboxed plugins, memory-safe execution, secure plugin distribution, and fine-grained access control. Users can configure the tool for global or project-specific use, start the server with different transport options, and utilize available plugins for tasks like time calculations, QR code generation, hash generation, IP retrieval, and webpage fetching.

ai-dial

AI DIAL is an open-source project that provides a platform for developing and deploying conversational AI applications. It includes components such as DIAL Core for API exposure, DIAL SDK for development, and DIAL Chat for default UI. The project offers tutorials for launching AI DIAL Chat with different models and applications, along with a user manual and configuration guide. Additionally, there are various open-source repositories related to DIAL, including DIAL Helm for helm chart, DIAL Assistant for model agnostic assistant implementation, and DIAL Analytics Realtime for usage analytics. The project aims to simplify the development and deployment of AI-powered chat applications.

traceroot

TraceRoot is a tool that helps engineers debug production issues 10× faster using AI-powered analysis of traces, logs, and code context. It accelerates the debugging process with AI-powered insights, integrates seamlessly into the development workflow, provides real-time trace and log analysis, code context understanding, and intelligent assistance. Features include ease of use, LLM flexibility, distributed services, AI debugging interface, and integration support. Users can get started with TraceRoot Cloud for a 7-day trial or self-host the tool. SDKs are available for Python and JavaScript/TypeScript.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy 
Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run the Vercel AI package on React Native, Expo, Web, and Universal apps. The React Native fetch API does not currently support streaming, which Vercel AI uses by default. This package lets you use the AI library on React Native, and it works best in Expo universal native apps. On mobile you get responses without streaming through the same `useChat` and `useCompletion` API, and on web it falls back to `ai/react`.

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.