earth2studio

Open-source deep-learning framework for exploring, building and deploying AI weather/climate workflows.

Stars: 254

Earth2Studio is a Python package that helps users get started quickly with AI weather and climate models. It provides pre-trained models, diagnostic tools, data sources, IO utilities, perturbation methods, and sample workflows for building custom weather prediction pipelines. Its modular components integrate with other NVIDIA packages such as PhysicsNeMo (formerly Modulus).

README:

Earth2Studio is a Python-based package designed to get users up and running with AI Earth system models fast. Our mission is to enable everyone to build, research and explore AI-driven weather and climate science.

Install Earth2Studio:

    pip install earth2studio[dlwp]

Run a deterministic AI weather prediction in just a few lines of code:

    from earth2studio.models.px import DLWP
    from earth2studio.data import GFS
    from earth2studio.io import NetCDF4Backend
    from earth2studio.run import deterministic as run

    # Load the pre-trained DLWP model from its default package
    model = DLWP.load_model(DLWP.load_default_package())
    # GFS supplies the initial conditions
    ds = GFS()
    # Forecast output is written to a NetCDF file
    io = NetCDF4Backend("output.nc")
    # Run 10 forecast steps starting from 2024-01-01
    run(["2024-01-01"], 10, model, ds, io)

Swap in a different AI model by installing its dependencies and replacing the DLWP references with another forecast model.

- The latest Climate in a Bottle (cBottle) generative AI model from NVIDIA research has been added through several APIs, including a data source, an infilling API and a super-resolution API. See the cBottle examples for more.

- The long-awaited GraphCast 1 degree and GraphCast Operational prognostic models have been added.

- An advanced Subseasonal-to-Seasonal (S2S) forecasting recipe has been added, demonstrating new inference pipelines for subseasonal weather forecasts (from 2 weeks to 3 months).

For a complete list of the latest features and improvements, see the changelog.

Earth2Studio is an AI inference pipeline toolkit focused on weather and climate applications that is designed to ride on top of different AI frameworks, model architectures, data sources and SciML tooling while providing a unified API.

The composability of the different core components in Earth2Studio easily allows the development and deployment of increasingly complex pipelines that may chain multiple data sources, AI models and other modules together.

The unified ecosystem of Earth2Studio provides users the opportunity to rapidly swap out components for alternatives. In addition to the largest model zoo of weather/climate AI models, Earth2Studio is packed with useful functionality such as optimized data access to cloud data stores, statistical operations and more to accelerate your pipelines.

Earth2Studio can be used for seamless deployment of Earth-2 models trained in PhysicsNeMo.

The Earth2Studio package focuses on giving users the tools to build their own workflows, pipelines, APIs, packages, etc. from modular components, including:

Prognostic Models

Prognostic models in Earth2Studio perform time integration, taking atmospheric fields at a specific time and auto-regressively predicting the same fields into the future (typically 6 hours per step), enabling both single time-step predictions and extended time-series forecasting.

Earth2Studio maintains the largest collection of pre-trained state-of-the-art AI weather/climate models ranging from global forecast models to regional specialized models, covering various resolutions, architectures, and forecasting capabilities to suit different computational and accuracy requirements.

Available models include but are not limited to:

| Model | Resolution | Architecture | Time Step | Coverage |
|---|---|---|---|---|
| GraphCast Small | 1.0° | Graph Neural Network | 6h | Global |
| GraphCast Operational | 0.25° | Graph Neural Network | 6h | Global |
| Pangu 3hr | 0.25° | Transformer | 3h | Global |
| Pangu 6hr | 0.25° | Transformer | 6h | Global |
| Pangu 24hr | 0.25° | Transformer | 24h | Global |
| Aurora | 0.25° | Transformer | 6h | Global |
| FuXi | 0.25° | Transformer | 6h | Global |
| AIFS | 0.25° | Transformer | 6h | Global |
| StormCast | 3km | Diffusion + Regression | 1h | Regional (US) |
| SFNO | 0.25° | Neural Operator | 6h | Global |
| DLESyM | 0.25° | Convolutional | 6h | Global |

For a complete list, see the prognostic model API docs.
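The auto-regressive time integration described for prognostic models can be sketched in plain Python. This is a minimal illustration, not Earth2Studio's API: `toy_step`, `rollout`, and the dictionary-based `State` are hypothetical names, and the "model" simply damps each field so the loop structure is easy to follow. The variable names `t2m` and `u10m` are used only as sample labels.

```python
from datetime import datetime, timedelta
from typing import Callable, Dict, Iterator, List, Tuple

State = Dict[str, List[float]]  # variable name -> flattened field values


def toy_step(state: State) -> State:
    """Hypothetical one-step 'model': damps every field by 10%."""
    return {name: [0.9 * v for v in values] for name, values in state.items()}


def rollout(
    step_fn: Callable[[State], State],
    state: State,
    start: datetime,
    nsteps: int,
    dt: timedelta = timedelta(hours=6),
) -> Iterator[Tuple[datetime, State]]:
    """Yield the initial state, then feed each prediction back in as the next input."""
    time = start
    yield time, state
    for _ in range(nsteps):
        state = step_fn(state)  # same fields in, same fields out
        time = time + dt
        yield time, state


initial = {"t2m": [280.0, 290.0], "u10m": [5.0, -3.0]}
forecast = list(rollout(toy_step, initial, datetime(2024, 1, 1), nsteps=4))
lead_hours = [int((t - forecast[0][0]).total_seconds() // 3600) for t, _ in forecast]
print(lead_hours)  # [0, 6, 12, 18, 24]
```

Setting `nsteps=1` gives a single time-step prediction, while larger values produce an extended time series. A generator keeps only one state in memory at a time, which matters when each state is a full global field.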

Diagnostic Models

Diagnostic models in Earth2Studio perform time-independent transformations, typically taking geospatial fields at a specific time and predicting new derived quantities without performing time integration. This lets users build pipelines that predict specific quantities of interest that forecasting models may not provide.

Earth2Studio contains a growing collection of specialized diagnostic models for various phenomena including precipitation prediction, tropical cyclone tracking, solar radiation estimation, wind gust forecasting, and more.

Available diagnostics include but are not limited to:

| Model | Resolution | Architecture | Coverage | Output |
|---|---|---|---|---|
| PrecipitationAFNO | 0.25° | Neural Operator | Global | Total precipitation |
| SolarRadiationAFNO1H | 0.25° | Neural Operator | Global | Surface solar radiation |
| WindgustAFNO | 0.25° | AFNO | Global | Maximum wind gust |
| TCTrackerVitart | 0.25° | Algorithmic | Global | TC tracks & properties |
| CBottleInfill | 100km | Diffusion | Global | Global climate sample |
| CBottleSR | 5km | Diffusion | Regional / Global | High-res climate |
| CorrDiff | Variable | Diffusion | Regional | Fine-scale weather |
| CorrDiffTaiwan | 2km | Diffusion | Regional (Taiwan) | Taiwan fine-scale weather |

For a complete list, see the diagnostic model API docs.
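To make the idea of a time-independent transformation concrete, here is a small sketch of a diagnostic that derives a new quantity from fields at a single time. It is an assumption-laden toy rather than an Earth2Studio model: `wind_speed_diagnostic` is a hypothetical function, and the field names `u10m`, `v10m` and `ws10m` are illustrative labels.

```python
import math
from typing import Dict, List

Fields = Dict[str, List[float]]  # variable name -> flattened field values


def wind_speed_diagnostic(fields: Fields) -> Fields:
    """Toy diagnostic: derive 10 m wind speed from the u/v components at one time."""
    u, v = fields["u10m"], fields["v10m"]
    # No time stepping happens here: the output depends only on the input snapshot.
    return {"ws10m": [math.hypot(ui, vi) for ui, vi in zip(u, v)]}


snapshot = {"u10m": [3.0, 0.0, -6.0], "v10m": [4.0, 2.0, 8.0]}
derived = wind_speed_diagnostic(snapshot)
print(derived)  # {'ws10m': [5.0, 2.0, 10.0]}
```

Because the function has no notion of time, it can be applied to every step a prognostic model produces, which is how a diagnostic extends a forecast with quantities the forecast model does not output.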

Datasources

Data sources in Earth2Studio provide a standardized API for accessing weather and climate datasets from various providers (numerical models, data assimilation results, and AI-generated data), enabling seamless integration of initial conditions for model inference and validation data for scoring across different data formats and storage systems.

Earth2Studio includes data sources ranging from operational weather models (GFS, HRRR, IFS) and reanalysis datasets (ERA5 via ARCO, CDS) to AI-generated climate data (cBottle) and local file systems. Fetching data is straightforward: Earth2Studio handles the complicated parts and returns the requested data as an easy-to-use Xarray data array under a shared, package-wide vocabulary and coordinate system.

Available data sources include but are not limited to:

| Data Source | Type | Resolution | Coverage | Data Format |
|---|---|---|---|---|
| GFS | Operational | 0.25° | Global | GRIB2 |
| GFS_FX | Forecast | 0.25° | Global | GRIB2 |
| HRRR | Operational | 3km | Regional (US) | GRIB2 |
| HRRR_FX | Forecast | 3km | Regional (US) | GRIB2 |
| ARCO ERA5 | Reanalysis | 0.25° | Global | Zarr |
| CDS | Reanalysis | 0.25° | Global | NetCDF |
| IFS | Operational | 0.25° | Global | GRIB2 |
| NCAR_ERA5 | Reanalysis | 0.25° | Global | NetCDF |
| WeatherBench2 | Reanalysis | 0.25° | Global | Zarr |
| GEFS_FX | Ensemble Forecast | 0.25° | Global | GRIB2 |
| IMERG | Precipitation | 0.1° | Global | NetCDF |
| CBottle3D | AI Generated | 100km | Global | HEALPix |

For a complete list, see the data source API docs.
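The idea of a standardized request interface with a shared vocabulary can be illustrated with a toy in-memory source. Everything here is a sketch under stated assumptions: `InMemorySource` and its call signature are hypothetical, and a plain dictionary stands in for the Xarray data array that Earth2Studio returns.

```python
from datetime import datetime
from typing import Dict, List, Sequence, Tuple

Grid = List[List[float]]  # values indexed as [lat][lon]


class InMemorySource:
    """Hypothetical data source: maps (time, variable) requests to stored grids."""

    def __init__(self, store: Dict[Tuple[datetime, str], Grid]) -> None:
        self._store = store

    def __call__(
        self, times: Sequence[datetime], variables: Sequence[str]
    ) -> Dict[str, object]:
        missing = [(t, v) for t in times for v in variables if (t, v) not in self._store]
        if missing:
            raise KeyError(f"no data for {missing}")
        # Stack the grids as data[time][variable][lat][lon] and return them
        # together with the coordinates that label the first two axes.
        data = [[self._store[(t, v)] for v in variables] for t in times]
        return {"time": list(times), "variable": list(variables), "data": data}


t0 = datetime(2024, 1, 1)
source = InMemorySource({(t0, "t2m"): [[280.0, 281.0]], (t0, "u10m"): [[5.0, -3.0]]})
sample = source([t0], ["u10m", "t2m"])
print(sample["variable"], sample["data"][0][0])
```

Any object with the same call signature could replace `InMemorySource` without changing the calling code, which is the property that lets pipelines swap one provider for another.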

IO Backends

IO backends in Earth2Studio provide a standardized interface for writing and storing pipeline outputs across different file formats and storage systems, enabling users to store inference outputs for later processing.

Earth2Studio includes IO backends ranging from traditional scientific formats (NetCDF) and modern cloud-optimized formats (Zarr) to in-memory storage backends.

Available IO backends include:

| IO Backend | Format | Features | Location |
|---|---|---|---|
| ZarrBackend | Zarr | Compression, Chunking | In-Memory/Local |
| AsyncZarrBackend | Zarr | Async writes, Parallel I/O | In-Memory/Local/Remote |
| NetCDF4Backend | NetCDF4 | CF-compliant, Metadata | In-Memory/Local |
| XarrayBackend | Xarray Dataset | Rich metadata, Analysis-ready | In-Memory |
| KVBackend | Key-Value | Fast Temporary Access | In-Memory |

For a complete list, see the IO API docs.
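As a rough picture of what an in-memory backend does, the sketch below stores each written array together with its coordinates and lets later code read it back. `KeyValueStore`, its `write` and `read` methods, and the `lead_hours` coordinate are hypothetical names chosen for this illustration and should not be read as Earth2Studio's backend interface.

```python
from typing import Dict, List, Tuple

Record = Tuple[Dict[str, int], List[float]]  # (coordinates, values)


class KeyValueStore:
    """Hypothetical in-memory backend keyed by array name."""

    def __init__(self) -> None:
        self.records: Dict[str, List[Record]] = {}

    def write(self, values: List[float], coords: Dict[str, int], array_name: str) -> None:
        # Copy the inputs so later changes by the caller cannot alter stored output.
        self.records.setdefault(array_name, []).append((dict(coords), list(values)))

    def read(self, array_name: str, lead_hours: int) -> List[float]:
        # Return the values written for one array at one forecast lead time.
        for coords, values in self.records.get(array_name, []):
            if coords["lead_hours"] == lead_hours:
                return values
        raise KeyError(f"{array_name} has no record at lead_hours={lead_hours}")


store = KeyValueStore()
for step in range(3):
    store.write([280.0 - step, 290.0 - step], {"lead_hours": 6 * step}, "t2m")
print(store.read("t2m", 12))  # [278.0, 288.0]
```

A file-based backend would expose the same two operations but persist the records to disk, so a pipeline can change storage format without changing the code that produces the data.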

Perturbation Methods

Perturbation methods in Earth2Studio provide a standardized interface for adding noise to data arrays, typically enabling the creation of ensemble forecast pipelines that capture uncertainty in weather and climate predictions.

Available perturbations include but are not limited to:

| Perturbation Method | Type | Spatial Correlation | Temporal Correlation |
|---|---|---|---|
| Gaussian | Noise | None | None |
| Correlated Spherical Gaussian | Noise | Spherical | AR(1) process |
| Spherical Gaussian | Noise | Spherical (Matern) | None |
| Brown | Noise | 2D Fourier | None |
| Bred Vector | Dynamical | Model-dependent | Model-dependent |
| Hemispheric Centred Bred Vector | Dynamical | Hemispheric | Model-dependent |

For a complete list, see the perturbations API docs.
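The simplest entry in the table, uncorrelated Gaussian noise, can be sketched with the standard library to show how perturbing one initial condition yields an ensemble. The function names `gaussian_perturb` and `make_ensemble` and the `noise_amplitude` parameter are assumptions made for this example, not names taken from Earth2Studio.

```python
import random
from typing import List


def gaussian_perturb(field: List[float], noise_amplitude: float, rng: random.Random) -> List[float]:
    """Add independent zero-mean Gaussian noise to every value of a field."""
    return [v + noise_amplitude * rng.gauss(0.0, 1.0) for v in field]


def make_ensemble(
    field: List[float], nensemble: int, noise_amplitude: float = 0.05, seed: int = 0
) -> List[List[float]]:
    """Build an ensemble of perturbed copies of one initial condition."""
    rng = random.Random(seed)  # seeded so that the ensemble is reproducible
    return [gaussian_perturb(field, noise_amplitude, rng) for _ in range(nensemble)]


initial_condition = [280.0, 285.0, 290.0]
ensemble = make_ensemble(initial_condition, nensemble=4)
print(len(ensemble), len(ensemble[0]))  # 4 3
```

Each member would then be passed through the same prognostic model, and the spread among the resulting forecasts gives an estimate of uncertainty. The spatially correlated methods in the table differ in how the noise is generated, not in this overall pattern.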

Statistics / Metrics

Statistics and metrics in Earth2Studio provide operations typically useful for in-pipeline evaluation of forecast performance across different dimensions (spatial, temporal, ensemble) through various statistical measures including error metrics, correlation coefficients, and ensemble verification statistics.

Available operations include but are not limited to:

| Statistic | Type | Application |
|---|---|---|
| RMSE | Error Metric | Forecast accuracy |
| ACC | Correlation | Pattern correlation |
| CRPS | Ensemble Metric | Probabilistic skill |
| Rank Histogram | Ensemble Metric | Ensemble reliability |
| Standard Deviation | Moment | Spread measure |
| Spread-Skill Ratio | Ensemble Metric | Ensemble calibration |

For a complete list, see the statistics API docs.
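Two of the metrics in the table are short enough to write out directly. The sketch below computes RMSE over a flattened field and an ensemble CRPS for a single observation, using the common estimator "mean absolute error of the members minus half the mean absolute difference between member pairs". The function names are hypothetical, and no area weighting is applied, which a real global evaluation would normally need.

```python
import math
from typing import Sequence


def rmse(forecast: Sequence[float], truth: Sequence[float]) -> float:
    """Root-mean-square error between two equally sized fields."""
    if len(forecast) != len(truth):
        raise ValueError("forecast and truth must have the same length")
    return math.sqrt(sum((f - t) ** 2 for f, t in zip(forecast, truth)) / len(truth))


def crps_ensemble(members: Sequence[float], observation: float) -> float:
    """Ensemble CRPS at one point: E|X - y| - 0.5 * E|X - X'|."""
    n = len(members)
    accuracy = sum(abs(m - observation) for m in members) / n
    spread = sum(abs(a - b) for a in members for b in members) / (n * n)
    return accuracy - 0.5 * spread


print(rmse([0.0, 0.0], [3.0, 4.0]))
print(crps_ensemble([0.0, 2.0], 1.0))  # 0.5
```

With a single member the spread term vanishes and CRPS reduces to the absolute error, which is why it is often described as a probabilistic generalization of that score.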

For a more complete list of features, be sure to view the documentation. Don't see what you need? Great news: extension and customization are at the heart of our design.

Check out the contributing document for details about the technical requirements and the user guide for higher-level philosophy, structure, and design.

Earth2Studio is provided under the Apache License 2.0. Please see the LICENSE file for the full license text.

Alternative AI tools for earth2studio

Similar Open Source Tools

ai-devkit

The ai-devkit repository is a comprehensive toolkit for developing and deploying artificial intelligence models. It provides a wide range of tools and resources to streamline the AI development process, including pre-trained models, data processing utilities, and deployment scripts. With a focus on simplicity and efficiency, ai-devkit aims to empower developers to quickly build and deploy AI solutions across various domains and applications.

osaurus

Osaurus is a versatile open-source tool designed for data scientists and machine learning engineers. It provides a wide range of functionalities for data preprocessing, feature engineering, model training, and evaluation. With Osaurus, users can easily clean and transform raw data, extract relevant features, build and tune machine learning models, and analyze model performance. The tool supports various machine learning algorithms and techniques, making it suitable for both beginners and experienced practitioners in the field. Osaurus is actively maintained and updated to incorporate the latest advancements in the machine learning domain, ensuring users have access to state-of-the-art tools and methodologies for their projects.

ai-inference

AI Inference is a Python library that provides tools for deploying and running machine learning models in production environments. It simplifies the process of integrating AI models into applications by offering a high-level API for inference tasks. With AI Inference, developers can easily load pre-trained models, perform inference on new data, and deploy models as RESTful APIs. The library supports various deep learning frameworks such as TensorFlow and PyTorch, making it versatile for a wide range of AI applications.

awesome-ai-tools

This repository contains a curated list of awesome AI tools that can be used for various machine learning and artificial intelligence projects. It includes tools for data preprocessing, model training, evaluation, and deployment. The list is regularly updated with new tools and resources to help developers and data scientists in their AI projects.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

datasets

Datasets is a repository that provides a collection of various datasets for machine learning and data analysis projects. It includes datasets in different formats such as CSV, JSON, and Excel, covering a wide range of topics including finance, healthcare, marketing, and more. The repository aims to help data scientists, researchers, and students access high-quality datasets for training models, conducting experiments, and exploring data analysis techniques.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

arconia

Arconia is a powerful open-source tool for managing and visualizing data in a user-friendly way. It provides a seamless experience for data analysts and scientists to explore, clean, and analyze datasets efficiently. With its intuitive interface and robust features, Arconia simplifies the process of data manipulation and visualization, making it an essential tool for anyone working with data.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

ComparIA

Compar:IA is a tool for blindly comparing different conversational AI models to raise awareness about the challenges of generative AI (bias, environmental impact) and to build up French-language preference datasets. It provides a platform for testing with real providers, enabling mock responses for testing purposes. The tool includes backend (FastAPI + Gradio) and frontend (SvelteKit) components, with Docker support for easy setup. Users can run the tool using provided Makefile commands or manually set up the backend and frontend. Additionally, the tool offers functionalities for database initialization, migrations, model generation, dataset export, and ranking methods.

neurons.me

Neurons.me is an open-source tool designed for creating and managing neural network models. It provides a user-friendly interface for building, training, and deploying deep learning models. With Neurons.me, users can easily experiment with different architectures, hyperparameters, and datasets to optimize their neural networks for various tasks. The tool simplifies the process of developing AI applications by abstracting away the complexities of model implementation and training.

emerging-trajectories

Emerging Trajectories is an open source library for tracking and saving forecasts of political, economic, and social events. It provides a way to organize and store forecasts, as well as track their accuracy over time. This can be useful for researchers, analysts, and anyone else who wants to keep track of their predictions.

For similar tasks

Awesome-LWMs

Awesome Large Weather Models (LWMs) is a curated collection of articles and resources related to large weather models used in AI for Earth and AI for Science. It includes information on various cutting-edge weather forecasting models, benchmark datasets, and research papers. The repository serves as a hub for researchers and enthusiasts to explore the latest advancements in weather modeling and forecasting.

Pathway-AI-Bootcamp

Welcome to the μLearn x Pathway Initiative, an exciting adventure into the world of Artificial Intelligence (AI)! This comprehensive course, developed in collaboration with Pathway, will empower you with the knowledge and skills needed to navigate the fascinating world of AI, with a special focus on Large Language Models (LLMs).

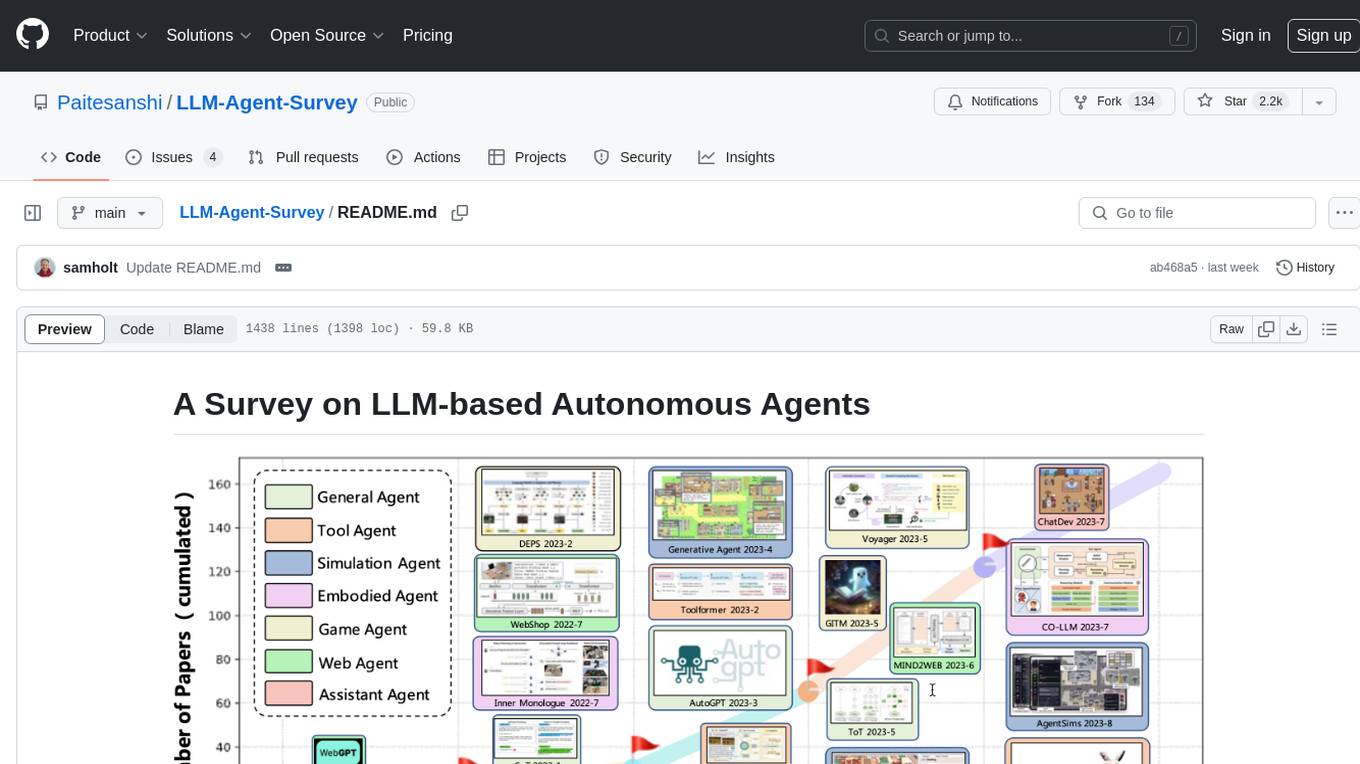

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides unified API for generation, context-aware AI features, evaluation of AI workflow, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

vector-cookbook

The Vector Cookbook is a collection of recipes and sample application starter kits for building AI applications with LLMs using PostgreSQL and Timescale Vector. Timescale Vector enhances PostgreSQL for AI applications by enabling the storage of vector, relational, and time-series data with faster search, higher recall, and more efficient time-based filtering. The repository includes resources, sample applications like TSV Time Machine, and guides for creating, storing, and querying OpenAI embeddings with PostgreSQL and pgvector. Users can learn about Timescale Vector, explore performance benchmarks, and access Python client libraries and tutorials.

cogai

The W3C Cognitive AI Community Group focuses on advancing Cognitive AI through collaboration on defining use cases, open source implementations, and application areas. The group aims to demonstrate the potential of Cognitive AI in various domains such as customer services, healthcare, cybersecurity, online learning, autonomous vehicles, manufacturing, and web search. They work on formal specifications for chunk data and rules, plausible knowledge notation, and neural networks for human-like AI. The group positions Cognitive AI as a combination of symbolic and statistical approaches inspired by human thought processes. They address research challenges including mimicry, emotional intelligence, natural language processing, and common sense reasoning. The long-term goal is to develop cognitive agents that are knowledgeable, creative, collaborative, empathic, and multilingual, capable of continual learning and self-awareness.

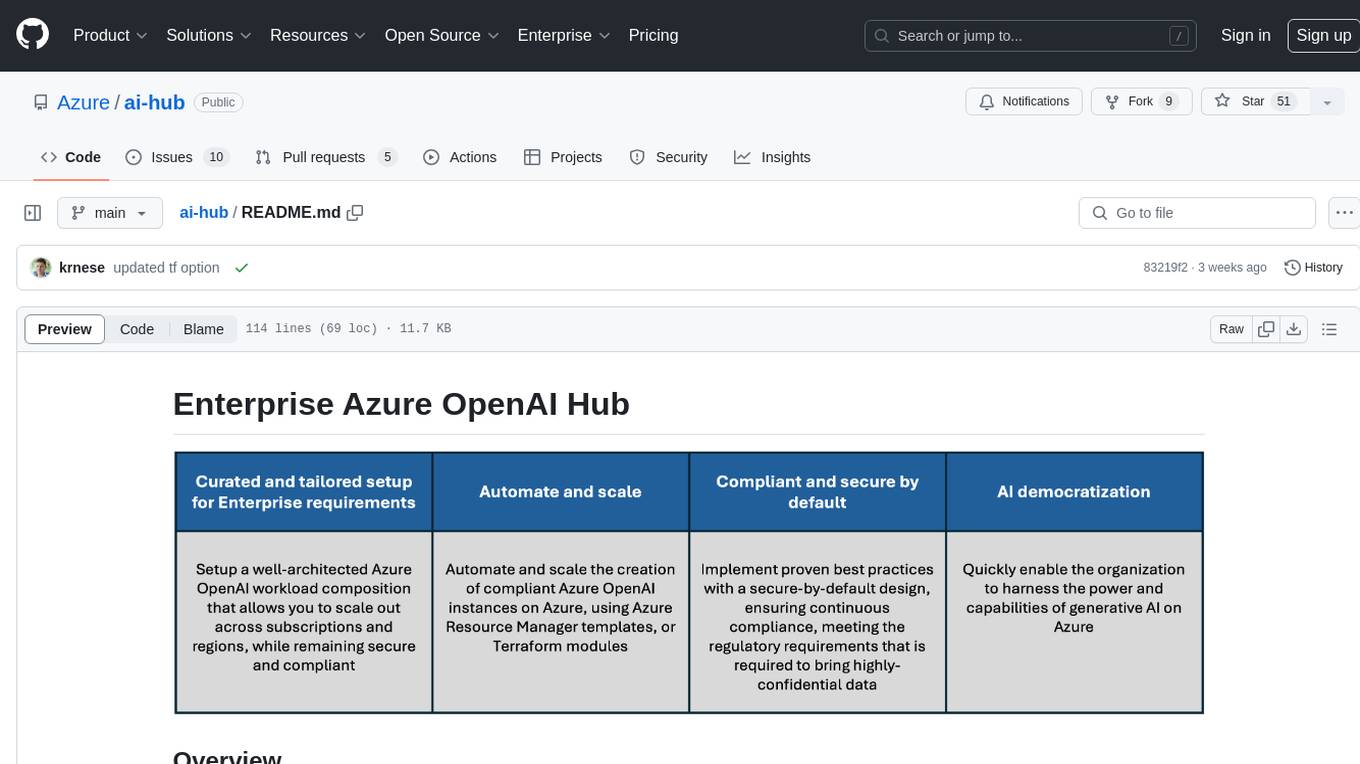

ai-hub

The Enterprise Azure OpenAI Hub is a comprehensive repository designed to guide users through the world of Generative AI on the Azure platform. It offers a structured learning experience to accelerate the transition from concept to production in an Enterprise context. The hub empowers users to explore various use cases with Azure services, ensuring security and compliance. It provides real-world examples and playbooks for practical insights into solving complex problems and developing cutting-edge AI solutions. The repository also serves as a library of proven patterns, aligning with industry standards and promoting best practices for secure and compliant AI development.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.