rulm

Language modeling and instruction tuning for Russian

Stars: 436

This repository contains language models for the Russian language, as well as their implementation and comparison. The models are trained on a dataset of ChatGPT-generated instructions and chats in Russian. They can be used for a variety of tasks, including question answering, text generation, and translation.

README:

Language models for Russian language: implementation and comparison.

- Slides from DataFest: link

- DataFest talk: link

- Main demo: link

- Retrieval QA CPU demo: link

- Fine-tuning Colab: link

- HuggingFace: link

- A dataset of ChatGPT-generated instructions in Russian.

- Based on the same principles as an original Alpaca.

- Instead of text-davinci-003 we used gpt-3.5-turbo.

- A script for dataset generation: generate_instructions.py

- Prompt: ru_instruct.txt

- Example:

### Задание:

Напишите краткую историю о двух лучших друзьях.

### Дано:

Имена друзей: Катя и Лена.

### Ответ:

Катя и Лена были лучшими друзьями с детства. Они были неразлучны и проводили много времени вместе, делясь своими мыслями и мечтами. Вместе они проходили через множество испытаний и всегда поддерживали друг друга. Когда они выросли, они пошли на разные университеты, но всегда оставались близкими. После окончания учебы они продолжили жить вместе и заниматься любимыми делами. Они оставались лучшими друзьями на протяжении всей жизни.

Warning: You should probably use Saiga models instead. They are actively supported and are better by side-by-side metrics.

- HuggingFace links:

- Training configs:

- Base models:

- Trained on Russian and English Alpacas. For training details see a separate README.

- HuggingFace: link

- A dataset of ChatGPT-generated chats in Russian.

- Based on the Baize paper.

- A script for dataset generation: generate_chat.py

- Prompt: ru_chat.txt

- Example:

Тема: Какие спицы (крючок) используете лично вы для вязания?

User: Какие спицы ты используешь для вязания?

Bot: Я, конечно, не могу вязать, но могу подсказать, что для вязания используются различные типы спиц. Например, круглые для вязания круговых изделий, двойные для вязания толстых ниток или деревянные для создания "теплых" изделий.

User: А какую марку спиц ты советуешь?

Bot: Это зависит от предпочтений каждого. Можно выбирать между разными марками и материалами, такими как металл, дерево, карбон, пластик. Многие мастера предпочитают Addi, Clover, ChiaoGoo, KnitPro, HiyaHiya. Каждая марка имеет свои особенности и уникальные особенности.

User: А какие спицы для вязания лучше всего подходят для начинающих?

Bot: Для новичков обычно рекомендуют спицы из дерева, так как они легче и позволяют лучше чувствовать нить. Круглые или двойные спицы также удобны для начинающих, потому что они не позволяют стежкам сползать с них.

- HuggingFace links:

- Training configs:

- Base models:

- Trained on 6 datasets: ru_turbo_saiga, ru_turbo_alpaca, ru_sharegpt_cleaned, oasst1_ru_main_branch, gpt_roleplay_realm, ru_turbo_alpaca_evol_instruct. The script for smart merging: create_chat_set.py

- HuggingFace: link

- A dataset of 200+ GPT-generated characters with 20 chats for each character.

- Example:

Имя: Кибер-Бабушка

Контекст: Кибер-Бабушка - это полу-робот, полу-человек, созданный для того, чтобы сохранить лучшие качества традиционных бабушек, такие как забота, доброта и мастерство в приготовлении вкусных блюд. Она знакома с передовыми технологиями, благодаря чему может обеспечить безопасность и удобство своим внукам и гостям. Кибер-бабушка способна помочь с любыми вопросами и с радостью делится своим опытом.

Приветствие: Здравствуй, дорогой! Чем могу помочь тебе сегодня?

Пример диалога:

- Пользователь: Привет, Кибер-Бабушка! Как приготовить пирог с яблоками?

- Персонаж: Ох, дорогой, с радостью расскажу! Возьми 3 яблока, очисти и нарежь тонкими ломтиками. Затем возьми 2 стакана муки, 1 стакан сахара, 200 г сливочного масла, 4 яйца и 1 чайную ложку разрыхлителя. Смешай все ингредиенты, выложи на противень и поставь в духовку на 180 градусов на 40 минут. Вуаля, пирог готов!

- Пользователь: Спасибо, Кибер-Бабушка!

- Персонаж: Всегда рада помочь, дорогой! Не забудь приходить еще!

See also:

- User-oriented questions: https://github.com/IlyaGusev/rulm/blob/master/self_instruct/data/user_oriented_ru_v2.jsonl

- Vicuna questions: https://github.com/IlyaGusev/rulm/blob/master/self_instruct/data/vicuna_question_ru.jsonl

- turbo vs gpt4: 46-8-122

- turbo vs saiga30b: 111-9-56

- turbo vs saiga30bq4_1: 121-9-46

- gigasaiga vs gpt3.5-turbo: 41-4-131

- saiga2_7b vs gpt3.5-turbo: 53-7-116

- saiga7b vs gpt3.5-turbo: 58-6-112

- saiga13b vs gpt3.5-turbo: 63-10-103

- saiga30b vs gpt3.5-turbo: 67-6-103

- saiga2_13b vs gpt3.5-turbo: 70-11-95

- saiga2_70b vs gpt3.5-turbo: 91-10-75

- saiga7b vs saiga2_7b: 78-8-90

- saiga13b vs saiga2_13b: 95-2-79

- saiga13b vs gigasaiga: 112-11-53

- RussianSuperGLUE: link

| Model | Final score | LiDiRus | RCB | PARus | MuSeRC | TERRa | RUSSE | RWSD | DaNetQA | RuCoS |

|---|---|---|---|---|---|---|---|---|---|---|

| LLaMA-2 13B LoRA | 71.8 | 39.8 | 48.9 / 54.3 | 78.4 | 91.9 / 76.1 | 79.3 | 74.0 | 71.4 | 90.7 | 78.0 / 76.0 |

| Saiga 13B LoRA | 71.2 | 43.6 | 43.9 / 50.0 | 69.4 | 89.8 / 70.4 | 86.5 | 72.8 | 71.4 | 86.2 | 85.0 / 83.0 |

| LLaMA 13B LoRA | 70.7 | 41.8 | 51.9 / 54.8 | 68.8 | 89.9 / 71.5 | 82.9 | 72.5 | 71.4 | 86.6 | 79.0 / 77.2 |

| ChatGPT zero-shot | 68.2 | 42.2 | 48.4 / 50.5 | 88.8 | 81.7 / 53.2 | 79.5 | 59.6 | 71.4 | 87.8 | 68.0 / 66.7 |

| LLaMA 70B zero-shot | 64.3 | 36.5 | 38.5 / 46.1 | 82.0 | 66.9 / 9.8 | 81.1 | 59.0 | 83.1 | 87.8 | 69.0 / 67.8 |

| RuGPT3.5 LoRA | 63.7 | 38.6 | 47.9 / 53.4 | 62.8 | 83.0 / 54.7 | 81.0 | 59.7 | 63.0 | 80.1 | 70.0 / 67.2 |

| Saiga 13B zero-shot | 55.4 | 29.3 | 42.0 / 46.6 | 63.0 | 68.1 / 22.3 | 70.2 | 56.5 | 67.5 | 76.3 | 47.0 / 45.8 |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for rulm

Similar Open Source Tools

rulm

This repository contains language models for the Russian language, as well as their implementation and comparison. The models are trained on a dataset of ChatGPT-generated instructions and chats in Russian. They can be used for a variety of tasks, including question answering, text generation, and translation.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

InternVL

InternVL scales up the ViT to _**6B parameters**_ and aligns it with LLM. It is a vision-language foundation model that can perform various tasks, including: **Visual Perception** - Linear-Probe Image Classification - Semantic Segmentation - Zero-Shot Image Classification - Multilingual Zero-Shot Image Classification - Zero-Shot Video Classification **Cross-Modal Retrieval** - English Zero-Shot Image-Text Retrieval - Chinese Zero-Shot Image-Text Retrieval - Multilingual Zero-Shot Image-Text Retrieval on XTD **Multimodal Dialogue** - Zero-Shot Image Captioning - Multimodal Benchmarks with Frozen LLM - Multimodal Benchmarks with Trainable LLM - Tiny LVLM InternVL has been shown to achieve state-of-the-art results on a variety of benchmarks. For example, on the MMMU image classification benchmark, InternVL achieves a top-1 accuracy of 51.6%, which is higher than GPT-4V and Gemini Pro. On the DocVQA question answering benchmark, InternVL achieves a score of 82.2%, which is also higher than GPT-4V and Gemini Pro. InternVL is open-sourced and available on Hugging Face. It can be used for a variety of applications, including image classification, object detection, semantic segmentation, image captioning, and question answering.

LLMs

LLMs is a Chinese large language model technology stack for practical use. It includes high-availability pre-training, SFT, and DPO preference alignment code framework. The repository covers pre-training data cleaning, high-concurrency framework, SFT dataset cleaning, data quality improvement, and security alignment work for Chinese large language models. It also provides open-source SFT dataset construction, pre-training from scratch, and various tools and frameworks for data cleaning, quality optimization, and task alignment.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

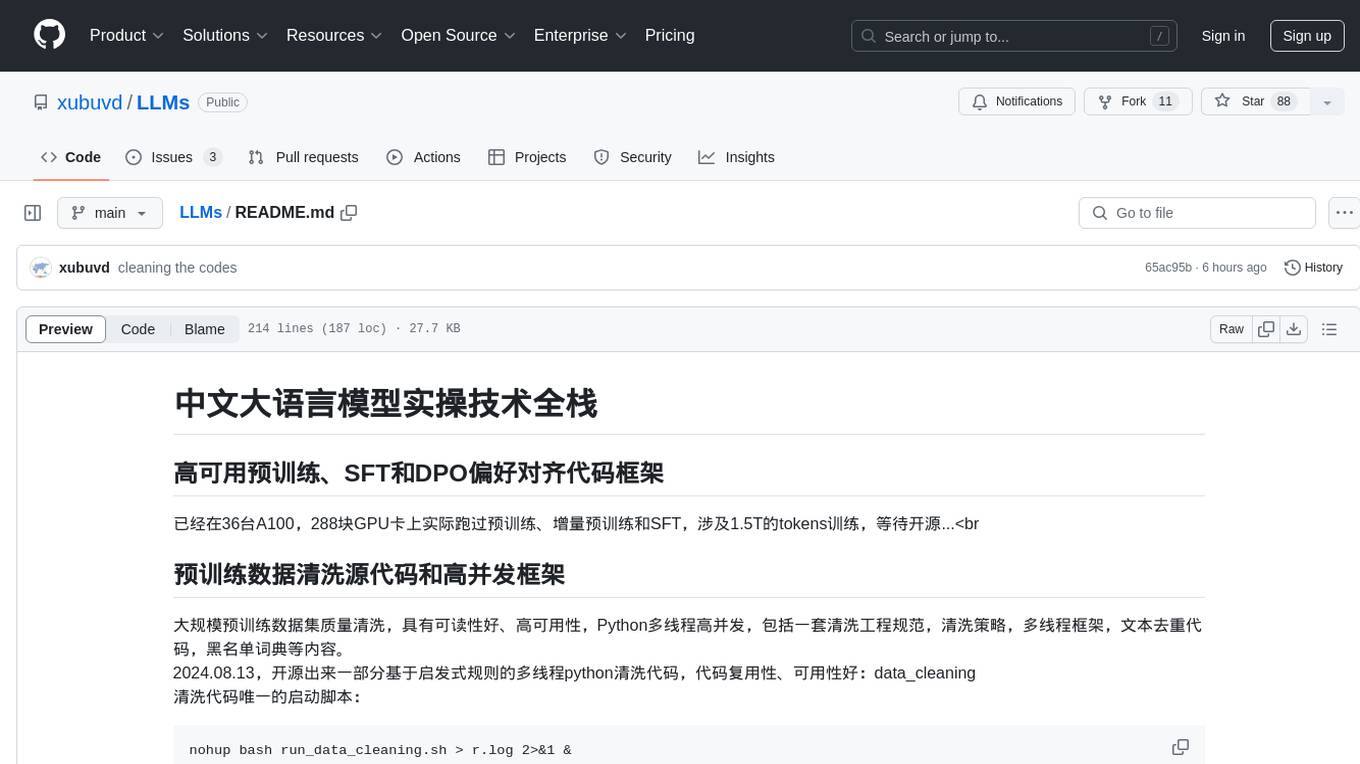

Step-DPO

Step-DPO is a method for enhancing long-chain reasoning ability of LLMs with a data construction pipeline creating a high-quality dataset. It significantly improves performance on math and GSM8K tasks with minimal data and training steps. The tool fine-tunes pre-trained models like Qwen2-7B-Instruct with Step-DPO, achieving superior results compared to other models. It provides scripts for training, evaluation, and deployment, along with examples and acknowledgements.

BlueLM

BlueLM is a large-scale pre-trained language model developed by vivo AI Global Research Institute, featuring 7B base and chat models. It includes high-quality training data with a token scale of 26 trillion, supporting both Chinese and English languages. BlueLM-7B-Chat excels in C-Eval and CMMLU evaluations, providing strong competition among open-source models of similar size. The models support 32K long texts for better context understanding while maintaining base capabilities. BlueLM welcomes developers for academic research and commercial applications.

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

MMOS

MMOS (Mix of Minimal Optimal Sets) is a dataset designed for math reasoning tasks, offering higher performance and lower construction costs. It includes various models and data subsets for tasks like arithmetic reasoning and math word problem solving. The dataset is used to identify minimal optimal sets through reasoning paths and statistical analysis, with a focus on QA-pairs generated from open-source datasets. MMOS also provides an auto problem generator for testing model robustness and scripts for training and inference.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

Steel-LLM

Steel-LLM is a project to pre-train a large Chinese language model from scratch using over 1T of data to achieve a parameter size of around 1B, similar to TinyLlama. The project aims to share the entire process including data collection, data processing, pre-training framework selection, model design, and open-source all the code. The goal is to enable reproducibility of the work even with limited resources. The name 'Steel' is inspired by a band '万能青年旅店' and signifies the desire to create a strong model despite limited conditions. The project involves continuous data collection of various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

MEGREZ

MEGREZ is a modern and elegant open-source high-performance computing platform that efficiently manages GPU resources. It allows for easy container instance creation, supports multiple nodes/multiple GPUs, modern UI environment isolation, customizable performance configurations, and user data isolation. The platform also comes with pre-installed deep learning environments, supports multiple users, features a VSCode web version, resource performance monitoring dashboard, and Jupyter Notebook support.

hello-agents

Hello-Agents is a comprehensive tutorial on building intelligent agent systems, covering both theoretical foundations and practical applications. The tutorial aims to guide users in understanding and building AI-native agents, diving deep into core principles, architectures, and paradigms of intelligent agents. Users will learn to develop their own multi-agent applications from scratch, gaining hands-on experience with popular low-code platforms and agent frameworks. The tutorial also covers advanced topics such as memory systems, context engineering, communication protocols, and model training. By the end of the tutorial, users will have the skills to develop real-world projects like intelligent travel assistants and cyber towns.

FaceAISDK_Android

FaceAI SDK is an on-device offline face detection, recognition, liveness detection, anti-spoofing, and 1:N/M:N face search SDK. It enables quick integration to achieve on-device face recognition, face search, and other functions. The SDK performs all functions offline on the device without the need for internet connection, ensuring privacy and security. It supports various actions for liveness detection, custom camera management, and clear imaging even in challenging lighting conditions.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.