Best AI Tools for Language Researchers

20 - AI Tool Sites

Neoform AI

Neoform AI develops AI models specifically for African dialects. The platform aims to close the gap in AI technology by offering solutions tailored to Africa's linguistic diversity, with an emphasis on inclusivity and cultural representation. Its research and development work targets the particular challenges African languages pose for AI, with the goal of making AI applications across the continent more accessible and accurate.

Shooketh

Shooketh is an AI bot fine-tuned on Shakespeare's literary works. It is built with the Vercel AI SDK and uses OpenAI's GPT-3.5-turbo, and an accompanying guide explains how to build a similar bot. Shooketh can hold conversations and offer insights inspired by Shakespeare's writing.

ChatTTS

ChatTTS is an open-source text-to-speech model designed for dialogue, supporting speech generation in both English and Chinese. It was trained on roughly 100,000 hours of Chinese and English data and is reported to produce speech close to natural human conversation. It is particularly suited to voicing large language model assistants and to producing dialogue-based audio and video introductions, giving developers an easy-to-use, open-source option for speech synthesis.

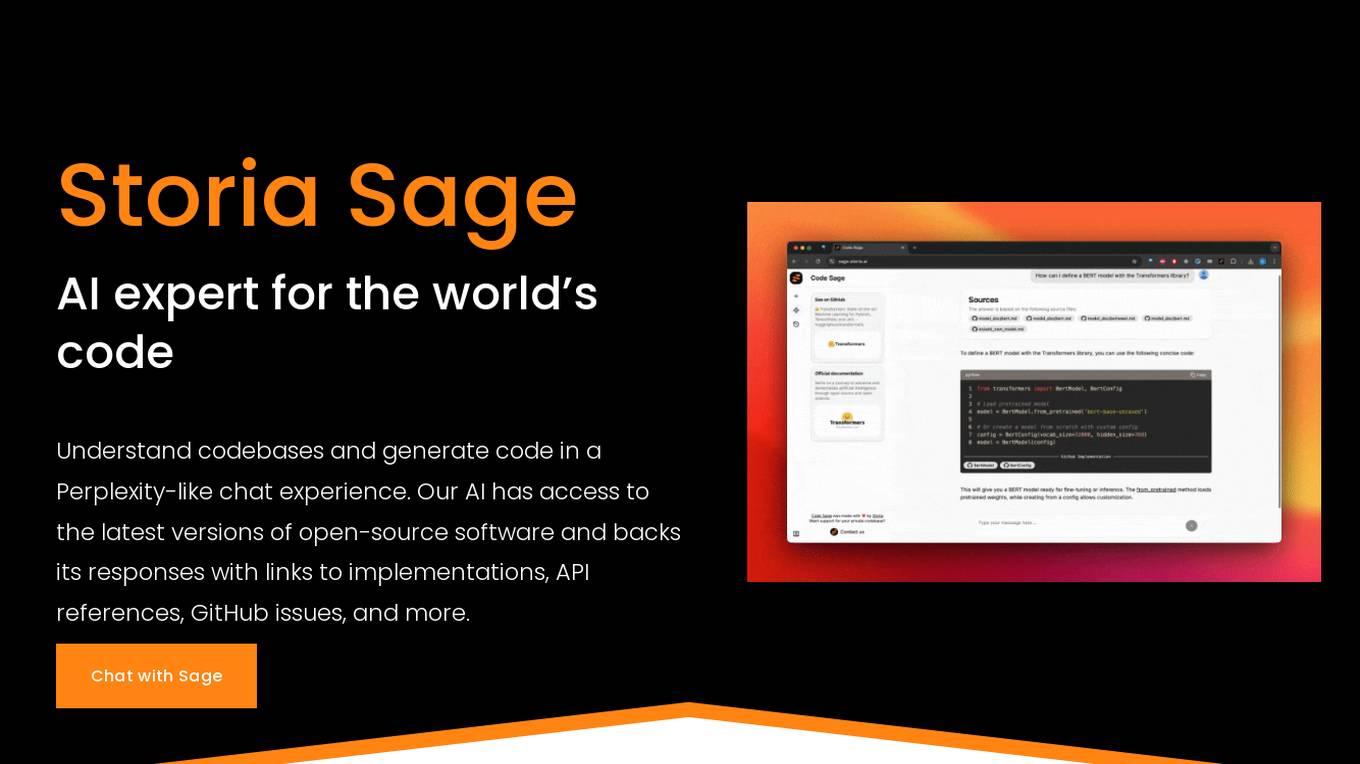

Storia AI

Storia AI is an AI tool that helps software engineering teams understand and generate code. It offers a Perplexity-like chat experience in which users talk to an AI expert with access to the latest versions of open-source software. Its answers are backed by links to implementations, API references, GitHub issues, and more, so users can verify what it says about the code. Storia AI is built by a team of natural language processing researchers from Google and Amazon Alexa whose stated mission is to build the most reliable AI pair programmer for engineering teams.

LLM Clash

LLM Clash is a web application for comparing the outputs of different large language models (LLMs) on the same task. Users enter a prompt and choose which LLMs to compare; the application then shows their outputs side by side, making each model's strengths and weaknesses easy to spot.
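
The side-by-side idea can be sketched in a few lines. This is a minimal illustration, not LLM Clash's code: the model names and the two stub functions standing in for real LLM calls are hypothetical.

```python
import textwrap
from itertools import zip_longest
from typing import Callable, Dict

# Hypothetical stand-ins for real LLM calls: each takes a prompt and returns text.
def model_a(prompt: str) -> str:
    return prompt.upper()

def model_b(prompt: str) -> str:
    return prompt[::-1]

def compare(prompt: str, models: Dict[str, Callable[[str], str]], width: int = 30) -> str:
    """Run one prompt through every selected model and lay the outputs out in columns."""
    # Wrap each model's output to the column width; an empty output still gets one cell.
    columns = {name: textwrap.wrap(fn(prompt), width) or [""] for name, fn in models.items()}
    lines = [" | ".join(name.ljust(width) for name in columns),
             "-+-".join("-" * width for _ in columns)]
    # zip_longest pads shorter columns so all rows line up.
    for row in zip_longest(*columns.values(), fillvalue=""):
        lines.append(" | ".join(cell.ljust(width) for cell in row))
    return "\n".join(lines)

table = compare("hello world", {"model-a": model_a, "model-b": model_b})
print(table)
```

Wrapping instead of truncating keeps long answers fully visible, at the cost of taller rows.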

Machine Translation Research Hub

This website is a comprehensive resource for research in statistical and neural machine translation. It provides information, tools, and datasets related to the translation of text from one human language to another using computer algorithms trained on vast amounts of translated text.
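
Statistical MT systems learn translation probabilities from parallel text. As an illustration (not a tool from this hub), the sketch below runs a few EM iterations of IBM Model 1, a classic word-alignment model, on a toy three-sentence German-English corpus. The corpus is invented for the example, and alignment to a NULL source word is omitted for brevity.

```python
from collections import defaultdict

# Toy parallel corpus of (source, target) sentence pairs; invented for illustration.
corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]

def ibm_model1(corpus, iterations=10):
    """Estimate t(e | f) with EM; alignment to a NULL source word is omitted."""
    e_vocab = {e for _, es in corpus for e in es}
    t = defaultdict(lambda: 1.0 / len(e_vocab))  # uniform initialisation
    for _ in range(iterations):
        count = defaultdict(float)  # expected counts c(e, f)
        total = defaultdict(float)  # expected counts c(f)
        for fs, es in corpus:
            for e in es:
                # E-step: spread e's alignment probability over the source words.
                norm = sum(t[(e, f)] for f in fs)
                for f in fs:
                    delta = t[(e, f)] / norm
                    count[(e, f)] += delta
                    total[f] += delta
        # M-step: renormalise expected counts into conditional probabilities.
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t

t = ibm_model1(corpus)
print(round(t[("house", "haus")], 3), round(t[("the", "das")], 3))
```

Even on three sentences, co-occurrence statistics pull "haus" toward "house" and "das" toward "the"; real systems apply the same principle to millions of sentence pairs.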

Writefull

Writefull is an AI-powered writing assistant that helps researchers and students write, paraphrase, copyedit, and more. It is designed to help non-native English speakers improve their writing and to make academic writing easier and faster. Because Writefull's AI is trained on millions of journal articles, its edits are tailored to academic writing. It also offers AI widgets that help users craft sentences, including the Academizer, Paraphraser, Title Generator, Abstract Generator, and GPT Detector.

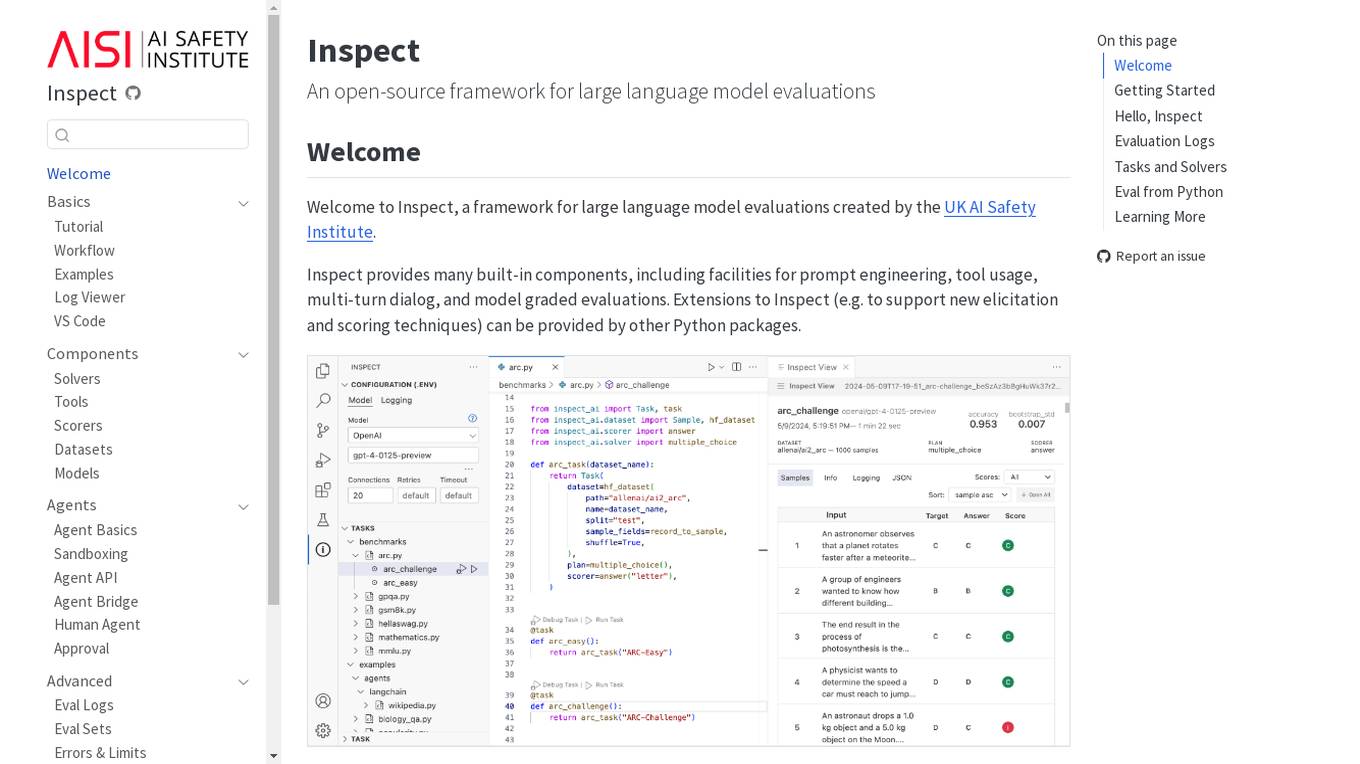

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model-graded evaluations. Users can combine solvers, tools, scorers, datasets, and models to build advanced evaluations, and Inspect can be extended with new elicitation and scoring techniques through Python packages.
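
An Inspect evaluation is organized around a dataset, a solver that produces the model's answer, and a scorer that grades it. The sketch below mimics that dataset, solver, scorer flow in plain Python so it runs without dependencies. It does not use Inspect's actual API; the `Sample` class, the stub model, and the exact-match scorer are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Sample:
    input: str   # question shown to the model
    target: str  # expected answer

def stub_model(prompt: str) -> str:
    # Hypothetical model that only knows one capital city.
    return "Paris" if "France" in prompt else "I don't know"

def solver(sample: Sample, model: Callable[[str], str]) -> str:
    # Minimal prompt engineering: wrap the question in an instruction.
    return model(f"Answer with a single word. {sample.input}")

def exact_match(answer: str, target: str) -> bool:
    # Scorer: case-insensitive comparison with the target.
    return answer.strip().lower() == target.strip().lower()

def evaluate(dataset: List[Sample], model: Callable[[str], str]) -> float:
    scores = [exact_match(solver(s, model), s.target) for s in dataset]
    return sum(scores) / len(scores)

dataset = [Sample("What is the capital of France?", "Paris"),
           Sample("What is the capital of Peru?", "Lima")]
print(f"accuracy = {evaluate(dataset, stub_model):.2f}")
```

Keeping the solver and scorer as separate functions is what allows either one to be swapped, for example replacing exact match with a model-graded scorer.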

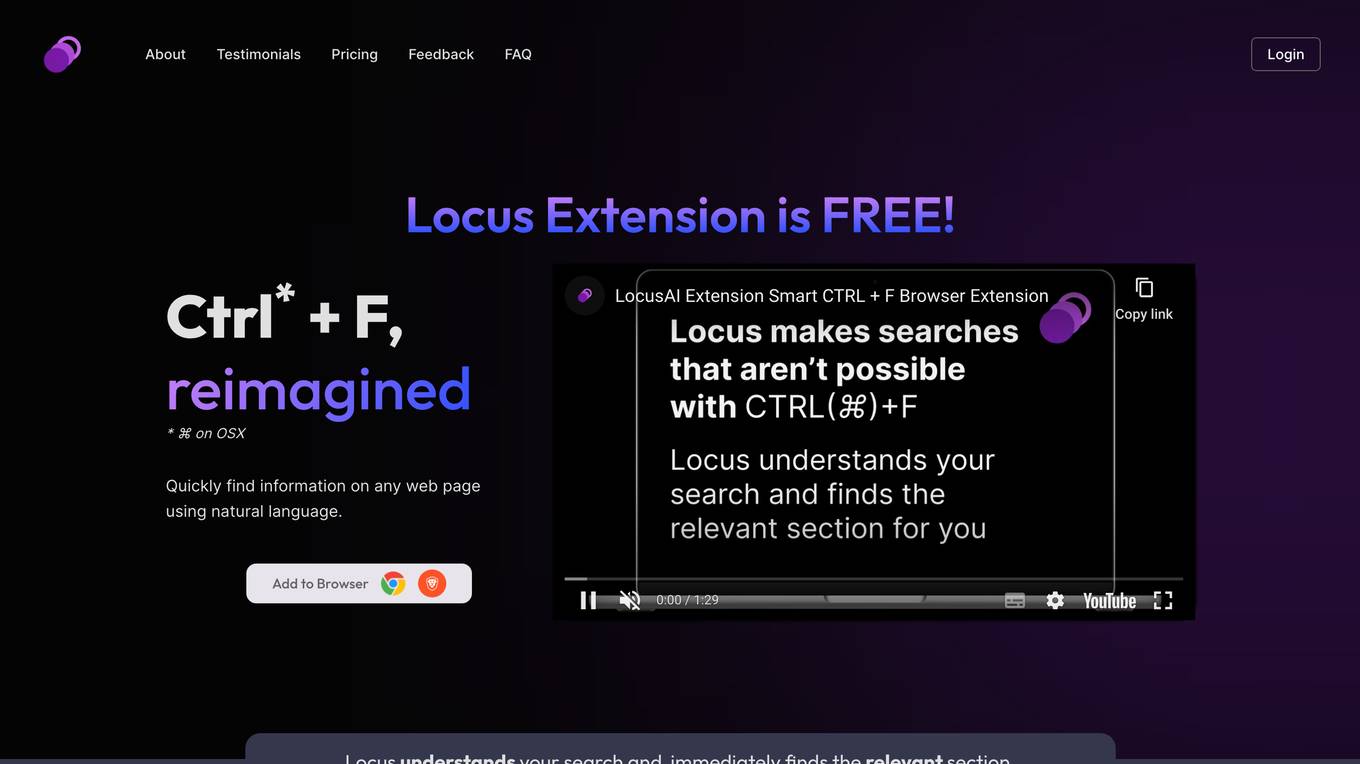

Locus

Locus is a free browser extension that uses natural language processing to help users quickly find information on any web page. It allows users to search for specific terms or concepts using natural language queries, and then instantly jumps to the relevant section of the page. Locus also integrates with AI-powered tools such as GPT-3.5 to provide additional functionality, such as summarizing text and generating code. With Locus, users can save time and improve their productivity when reading and researching online.
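
Locus's internal method is not described here. As a rough stand-in for jumping to the most relevant section, the sketch below ranks a page's paragraphs by word overlap with the query and returns the index of the best match. This is a simple bag-of-words heuristic, not real natural language understanding.

```python
import re
from collections import Counter
from typing import List

def tokens(text: str) -> Counter:
    # Lowercase word counts; punctuation is ignored.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def best_section(query: str, paragraphs: List[str]) -> int:
    """Return the index of the paragraph sharing the most word occurrences with the query."""
    q = tokens(query)

    def score(p: str) -> int:
        overlap = q & tokens(p)  # multiset intersection keeps the minimum counts
        return sum(overlap.values())

    return max(range(len(paragraphs)), key=lambda i: score(paragraphs[i]))

page = ["Installation steps for the extension.",
        "Pricing: the extension is free to use.",
        "Privacy: no browsing data leaves your device."]
print(best_section("is it free to use?", page))
```

A production tool would use semantic matching (for example embeddings) so that a query can find a passage even when it shares no exact words with it.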

NepaliGPT

NepaliGPT is an AI-powered tool that provides language processing capabilities tailored to the Nepali language. Users can generate text, analyze content, and get help with research tasks, as well as with writing, proofreading, and information verification. The tool aims to provide accurate and efficient language processing for Nepali users.

OdiaGenAI

OdiaGenAI is a collaborative initiative conducting research on generative AI and large language models (LLMs) for the Odia language. Through collaboration among Odia technologists, the project aims to build generative AI and LLM-based solutions that support the development of Odisha and the Odia language. It releases pre-trained models, code, and datasets for non-commercial and research use, focusing on language models for Indic languages such as Odia and Bengali.

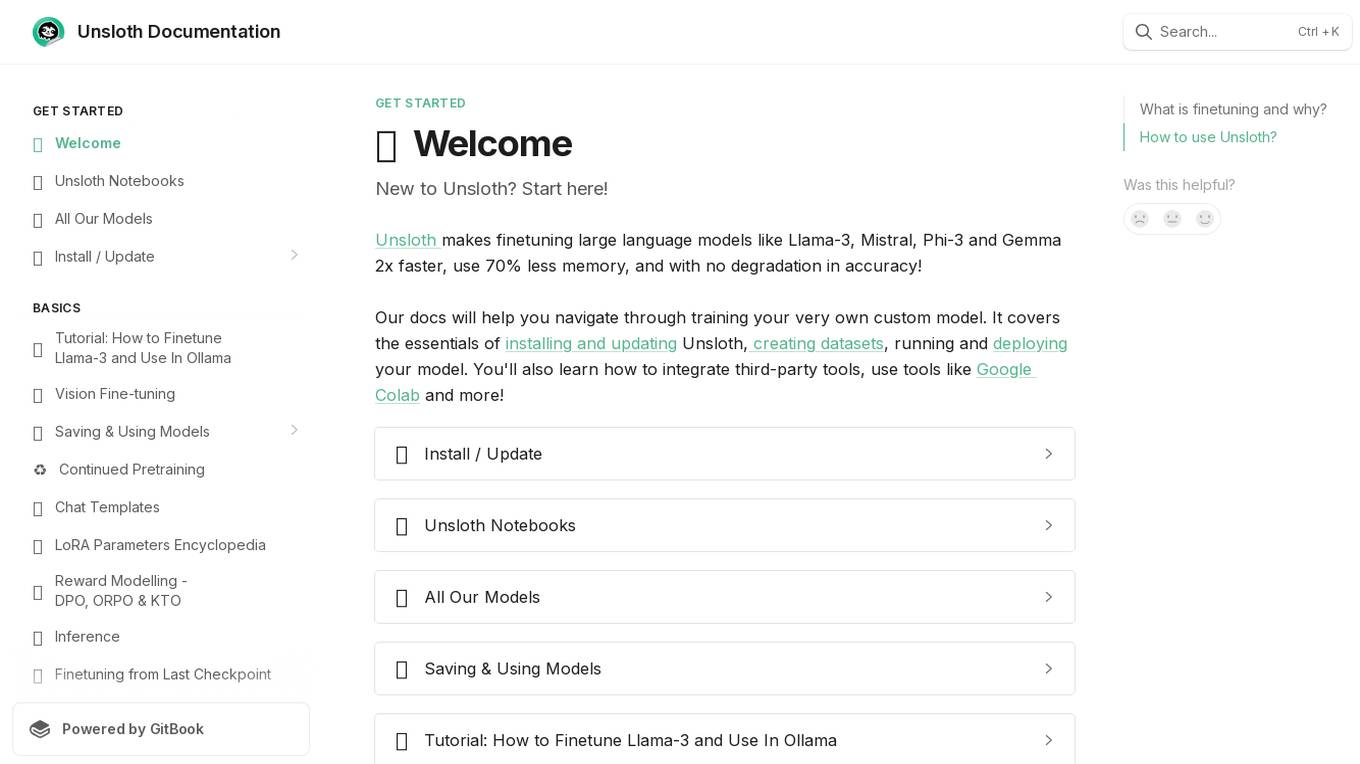

Unsloth

Unsloth is an AI tool for fine-tuning large language models such as Llama 3, Mistral, Phi-3, and Gemma. According to the project, it makes fine-tuning 2x faster and uses 70% less memory with no loss of accuracy. Its documentation walks users through training custom models, covering installing and updating Unsloth, creating datasets, and running and deploying models. It also works with third-party tools and platforms such as Google Colab.

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. LangChain consists of open-source libraries such as langchain-core, langchain-community, and partner packages. It also includes LangGraph for building stateful agents and LangSmith for debugging and monitoring LLM applications.
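
A core LangChain idea is composing an application from steps such as a prompt template, a model call, and an output parser. The sketch below shows that composition pattern in plain Python. It is not LangChain's API (LangChain's own expression language composes runnables with the `|` operator), and `fake_llm` is a hypothetical stub in place of a real model.

```python
from functools import reduce
from typing import Any, Callable

def chain(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps left to right, so each step's output feeds the next."""
    return lambda x: reduce(lambda acc, step: step(acc), steps, x)

def prompt_template(variables: dict) -> str:
    # Fill a fixed template with user-supplied variables.
    return f"Translate to French: {variables['text']}"

def fake_llm(prompt: str) -> str:
    # Hypothetical model stub that returns a canned reply with stray whitespace.
    return "  Bonjour le monde  "

def parse(output: str) -> str:
    # Output parser: clean up the raw model text.
    return output.strip()

translate = chain(prompt_template, fake_llm, parse)
print(translate({"text": "Hello world"}))
```

Because every step shares the same one-argument interface, the stub model can be replaced by a real one without touching the template or the parser.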

AI Learning Platform

The platform offers a new course, 'Prompt Engineering for Everyone', on communicating effectively with AI. With over 100 courses and more than 20 learning paths, it covers AI, data science, and other emerging technologies. Its hands-on content is designed by expert instructors to build practical, industry-relevant skills, and learners can earn certificates and build projects to demonstrate their expertise. The platform reports more than 3 million learners worldwide.

Ollama

Ollama is a tool for running large language models such as Llama 3, Phi 3, Mistral, Gemma 2, and more locally. Users can also customize existing models and create their own. It is available for macOS, Linux, and Windows, with Windows support initially offered as a preview.
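
Ollama serves a local REST API, by default at `http://localhost:11434`. The sketch below builds a non-streaming request to its `/api/generate` endpoint using only the standard library. It assumes a running Ollama server and an already pulled model (`llama3` is just an example name); the network call is kept in a separate function so the request itself can be checked offline.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local endpoint

def build_request(model: str, prompt: str) -> urllib.request.Request:
    # "stream": False asks for one JSON object instead of a stream of chunks.
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(OLLAMA_URL, data=body,
                                  headers={"Content-Type": "application/json"},
                                  method="POST")

def generate(model: str, prompt: str) -> str:
    # Requires a running server; the generated text is in the "response" field.
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, `generate("llama3", "Translate 'thank you' into Nepali.")` returns the model's reply as a string.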

ReadWeb.ai

ReadWeb.ai is a free web-based tool that translates web pages instantly into multiple languages. Users can translate any webpage into up to 10 different languages with one click. ReadWeb.ai also offers a bilingual reading mode in a top-and-bottom layout, with each original passage and its translation stacked for easy comparison. This makes it useful for language learners, researchers, and anyone who needs information from websites in other languages.
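
The top-and-bottom bilingual layout can be sketched as interleaving each original paragraph with its translation. The `translate` argument is a hypothetical placeholder for whatever translation service is used; ReadWeb.ai's own implementation is not described here.

```python
from typing import Callable, List

def bilingual(paragraphs: List[str], translate: Callable[[str], str]) -> str:
    """Place each translation directly below its source paragraph."""
    blocks = []
    for p in paragraphs:
        blocks.append(p)
        blocks.append(translate(p))
        blocks.append("")  # blank line between source/translation pairs
    return "\n".join(blocks).rstrip("\n")

# Toy dictionary-based "translator" for demonstration only.
toy = {"Good morning.": "Buenos días.", "Thank you.": "Gracias."}
print(bilingual(["Good morning.", "Thank you."], lambda s: toy.get(s, s)))
```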

MiniGPT-4

MiniGPT-4 combines a frozen vision encoder with a frozen large language model (LLM), connected by a single trained projection layer, to improve vision-language understanding. It can generate detailed image descriptions, create websites from handwritten drafts, write stories and poems inspired by images, suggest solutions to problems shown in images, and explain how to cook a dish from a photo of the food. Because only the projection layer is trained, MiniGPT-4 is computationally efficient to train, and it is straightforward to use across a wide range of applications.
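
MiniGPT-4 keeps its vision encoder and LLM frozen and trains one projection layer that maps visual features into the LLM's input embedding space. The toy sketch below shows only that projection step, with made-up dimensions (4-dimensional visual features projected to 3-dimensional token embeddings) and random weights. Real models use learned weights and much larger dimensions, and insert the image tokens into a prompt template rather than simply prepending them as done here.

```python
import random
from typing import List

Vector = List[float]

def linear(x: Vector, W: List[Vector], b: Vector) -> Vector:
    """y = W x + b for a single feature vector."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]

def project_visual_tokens(features: List[Vector], W: List[Vector], b: Vector) -> List[Vector]:
    # Each visual token becomes a pseudo-word embedding the LLM can read.
    return [linear(f, W, b) for f in features]

random.seed(0)
d_vis, d_llm, n_tokens = 4, 3, 2  # toy sizes, chosen for illustration
W = [[random.gauss(0, 0.1) for _ in range(d_vis)] for _ in range(d_llm)]
b = [0.0] * d_llm
visual = [[random.random() for _ in range(d_vis)] for _ in range(n_tokens)]
text_embeddings = [[0.1, 0.2, 0.3]]  # hypothetical embedding of one text token

# Simplification: projected image tokens are prepended to the text token embeddings.
llm_input = project_visual_tokens(visual, W, b) + text_embeddings
print(len(llm_input), len(llm_input[0]))
```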

CAMB.AI

CAMB.AI is an AI-powered localization tool for the content, entertainment, and sports industries. It offers real-time translation in over 150 languages, aiming to reach a potential audience of 8 billion people, and is used by major entertainment and sports brands. The company describes its localization as preserving every nuance and emotion of the original. The tool has been used to scale content for top creators, dub films and sports events, and break language barriers across media formats.

Floneum

Floneum is an AI-powered tool for language tasks that provides a user-friendly interface for building workflows with large language models. Users can safely extend it by writing plugins in various languages compiled to WebAssembly; plugins run in a sandbox that limits the resources they can access. Floneum ships with 41 built-in plugins covering tasks such as text generation, search engine queries, file handling, Python execution, browser automation, and data manipulation.

ChatGPT

ChatGPT is an AI-powered chatbot developed by OpenAI, a leading artificial intelligence research company. It understands and generates human-like text, which makes it useful for a wide range of applications: it can hold natural conversations, answer questions, provide information, and create original content. It is accessible online and can be integrated into other platforms and applications to extend their functionality.

6 - Open Source Tools

rulm

This repository contains language models for the Russian language, as well as their implementation and comparison. The models are trained on a dataset of ChatGPT-generated instructions and chats in Russian. They can be used for a variety of tasks, including question answering, text generation, and translation.

sailor-llm

Sailor is a suite of open language models tailored for South-East Asia (SEA), covering languages such as Indonesian, Thai, Vietnamese, Malay, and Lao. Developed with careful data curation, Sailor models are designed to understand and generate text across the SEA region's diverse languages. Built on Qwen 1.5, the suite includes models ranging from 0.5B to 7B parameters to suit different requirements. Benchmark results show Sailor's proficiency in SEA-language tasks such as question answering, commonsense reasoning, and reading comprehension.

awesome-khmer-language

Awesome Khmer Language is a comprehensive collection of resources for the Khmer language, including tools, datasets, research papers, projects/models, blogs/slides, and miscellaneous items. It covers a wide range of topics related to Khmer language processing, such as character normalization, word segmentation, part-of-speech tagging, optical character recognition, text-to-speech, and more. The repository aims to support the development of natural language processing applications for the Khmer language by providing a diverse set of resources and tools for researchers and developers.
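
Word segmentation matters for Khmer because the script does not mark word boundaries with spaces. A classic dictionary-based baseline is greedy longest (maximum) matching, sketched below. To keep the example readable, it uses a toy Latin-script dictionary instead of real Khmer data, and it is not taken from any specific tool in the list.

```python
from typing import List, Set

def max_match(text: str, dictionary: Set[str]) -> List[str]:
    """Greedy longest-match segmentation; unknown characters become one-character tokens."""
    longest = max(map(len, dictionary), default=1)
    words, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a dictionary word is found.
        for j in range(min(len(text), i + longest), i, -1):
            if text[i:j] in dictionary or j == i + 1:
                words.append(text[i:j])
                i = j
                break
    return words

toy_dict = {"the", "them", "cat", "sat", "on", "mat"}
print(max_match("thecatsatonthemat", toy_dict))
```

The output shows the method's well-known weakness: greedily taking "them" leaves "a" and "t" instead of "the mat". That is one reason the resources in this list include statistical and neural segmenters.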

Verbiverse

Verbiverse is a tool that uses a large language model to help users read PDFs and watch videos in a foreign language, with the goal of improving their language proficiency. Predefined prompts make the model more convenient and efficient to use. The tool analyzes unfamiliar words and sentences in foreign-language PDFs or video subtitles in context, which gives a clearer understanding than traditional dictionary translations that can leave the meaning ambiguous. Features include automatic subtitle loading, word analysis by clicking or double-clicking, and a word database for collecting vocabulary. Verbiverse runs on Windows x86_64 and Ubuntu 22.04 x86_64; users can download a precompiled package or clone the source code and set up a Python virtual environment. For testing, a local model or small PDF files are recommended, since large files can consume many tokens.
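
A word database of this kind can be pictured as a small table that stores each collected word with the sentence it came from. The sketch below is hypothetical and uses Python's built-in `sqlite3`; Verbiverse's actual storage and schema are not described in the source.

```python
import sqlite3

def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the word database; ':memory:' keeps it in RAM for the demo."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS words ("
        " word TEXT PRIMARY KEY,"
        " context TEXT,"
        " lookups INTEGER NOT NULL DEFAULT 1)"
    )
    return conn

def collect(conn: sqlite3.Connection, word: str, context: str) -> None:
    """Save a new word with its source sentence, or bump the count of a saved one."""
    key = word.lower()
    cur = conn.execute("UPDATE words SET lookups = lookups + 1 WHERE word = ?", (key,))
    if cur.rowcount == 0:  # word not saved yet, so keep its first context sentence
        conn.execute("INSERT INTO words (word, context) VALUES (?, ?)", (key, context))
    conn.commit()

conn = open_db()
collect(conn, "Fernweh", "Ich habe Fernweh nach dem Meer.")
collect(conn, "fernweh", "Das Fernweh war groß.")
print(conn.execute("SELECT word, context, lookups FROM words").fetchall())
```

Storing the context sentence alongside the word lets a learner review vocabulary in the setting where it was first met.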

LLPlayer

LLPlayer is a specialized media player designed for language learning, offering unique features such as dual subtitles, AI-generated subtitles, real-time OCR, real-time translation, word lookup, and more. It supports multiple languages, online video playback, customizable settings, and integration with browser extensions. Written in C#/WPF, LLPlayer is free, open-source, and aims to enhance the language learning experience through innovative functionalities.
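
Dual subtitles require pairing cues from two tracks that appear at the same time. One simple approach, sketched below under the assumption that cues are `(start, end, text)` tuples in seconds, pairs each primary cue with the secondary cue it overlaps most. LLPlayer's actual matching logic may differ.

```python
from typing import List, Optional, Tuple

Cue = Tuple[float, float, str]  # (start seconds, end seconds, text)

def overlap(a: Cue, b: Cue) -> float:
    # Length of the shared time interval, or 0 if the cues do not overlap.
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))

def pair_subtitles(primary: List[Cue], secondary: List[Cue]) -> List[Tuple[str, Optional[str]]]:
    """For each primary cue, attach the secondary cue with the largest time overlap."""
    pairs = []
    for cue in primary:
        best = max(secondary, key=lambda s: overlap(cue, s), default=None)
        text = best[2] if best is not None and overlap(cue, best) > 0 else None
        pairs.append((cue[2], text))
    return pairs

english = [(1.0, 3.0, "Where are you going?"), (3.5, 5.0, "Home."), (6.0, 7.0, "Wait!")]
spanish = [(1.2, 3.1, "¿Adónde vas?"), (3.4, 5.2, "A casa.")]
for top, bottom in pair_subtitles(english, spanish):
    print(top, "/", bottom)
```

Matching by overlap rather than exact timestamps tolerates the small timing differences typical of independently made subtitle files; a cue with no counterpart is paired with `None`.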

rime_wanxiang

Rime Wanxiang is a pinyin input method built on a deeply optimized lexicon and language model. Its lexicon carries tone annotations, was filtered using AI and a large corpus, and includes word frequencies, which together produce more accurate sentence output. It supports various input schemes and customization options, and users can regenerate the lexicon with different types of auxiliary codes using the LMDG toolkit package. Core features include tone-marked pinyin annotations, phrase composition, and word frequency, all customizable, with the aim of a seamless typing experience.
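
At the core of a pinyin input method is a lexicon that maps a pinyin string, optionally with tones, to candidate words ranked by frequency. The toy lexicon and frequencies below are invented for illustration. Rime's real engine, which also uses a language model to compose whole sentences, is far more involved.

```python
from typing import Dict, List, Tuple

# Hypothetical lexicon: pinyin with tone numbers -> (word, frequency) pairs.
LEXICON: Dict[str, List[Tuple[str, int]]] = {
    "shi4": [("事", 500), ("是", 900), ("市", 300)],
    "shi2": [("十", 400), ("时", 700)],
    "zhong1guo2": [("中国", 800)],
}

def strip_tones(pinyin: str) -> str:
    return "".join(ch for ch in pinyin if not ch.isdigit())

def candidates(query: str) -> List[str]:
    """Exact tone match if the user typed tones; otherwise match on toneless pinyin."""
    if any(ch.isdigit() for ch in query):
        entries = LEXICON.get(query, [])
    else:
        entries = [e for key, group in LEXICON.items() if strip_tones(key) == query for e in group]
    # Highest-frequency candidates first.
    return [word for word, freq in sorted(entries, key=lambda e: -e[1])]

print(candidates("shi"))   # toneless query: every reading, ranked by frequency
print(candidates("shi2"))  # tone given: a much shorter candidate list
```

This shows why tone annotations help: adding the tone narrows the candidate list before frequency ranking is applied.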

20 - OpenAI GPTs

Language Proficiency Level Self-Assessment

A language self-assessment guide with mobile app voice interaction support.

Language Mind Maps

Master language complexities with tailored mind maps that enhance understanding and bolster memory. Explore linguistic patterns in a visually engaging way. 🧠🗺️

AlemannicGPT

Swabian-Alemannic chatbot: AlemannicGPT speaks and understands Swabian, Badenese, and Swiss German dialects.

Norwegian Tutor

Comprehensive Norwegian course with interactive exercises and cultural insights.

AI Constitution

Literal interpretation of the U.S. Constitution, emphasizing clear language.