LLPlayer

The media player for language learning, with dual subtitles, AI-generated subtitles, realtime-OCR, translation, word lookup, and more!

Stars: 683

LLPlayer is a specialized media player designed for language learning, offering unique features such as dual subtitles, AI-generated subtitles, real-time OCR, real-time translation, word lookup, and more. It supports multiple languages, online video playback, customizable settings, and integration with browser extensions. Written in C#/WPF, LLPlayer is free, open-source, and aims to enhance the language learning experience through innovative functionalities.

README:

A video player focused on subtitle-related features such as dual subtitles, AI-generated subtitles, real-time OCR, real-time translation, word lookup, and more!

https://github.com/user-attachments/assets/05a7b451-ee3b-489f-aac9-f1670ed76e71

TED Talk - The mind behind Linux

LLPlayer has many features for language learning that are not available in normal video players.

- Dual Subtitles: Two subtitles can be displayed simultaneously. Both text subtitles and bitmap subtitles are supported.

- AI-generated subtitles (ASR): Real-time automatic subtitle generation from any video and audio, powered by OpenAI Whisper. Two engines are supported: whisper.cpp and faster-whisper.

- Real-time Translation: Supports the Google and DeepL APIs; 134 languages are currently supported!

- Real-time OCR subtitles: Can convert bitmap subtitles to text subtitles in real time, powered by Tesseract OCR and Microsoft OCR.

- Subtitles Sidebar: Both text and bitmap are supported. Seek and word lookup available. Has anti-spoiler functionality.

- Instant word lookup: Word lookup and browser searches can be performed on subtitle text.

- Customizable Browser Search: Browser searches can be performed from the context menu of a word, and the search site can be completely customized.

- Plays online videos: With yt-dlp integration, any online video can be played back in real time, with AI subtitle generation and word lookup!

- Flexible Subtitles Size/Placement Settings: The size and position of the dual subtitles can be adjusted very flexibly.

- Subtitles Seeking for any format: Any subtitle format can be used for subtitle seek.

- Built-in Subtitles Downloader: Supports opensubtitles.org

- Integration with browser extensions: Can work with any browser extension, such as Yomitan and 10ten.

- Customizable Dark Theme: The theme is based on black and can be customized.

- Fully Customizable Shortcuts: All keyboard shortcuts are fully customizable. The same action can be assigned to multiple keys!

- Built-in Cheat Sheet: You can find out how to use the application in the application itself.

- Free, Open Source, Written in C#: Written in C#/WPF, not C, so customization is super easy!

[OS]

- Windows 10 x64, version 1903 or later

- Windows 11 x64

[Pre-requisites]

- .NET Desktop Runtime 9

  - If it is not installed, an installer dialog will appear.

- Microsoft Visual C++ Redistributable Version >= 2022 (for Whisper ASR, Tesseract OCR)

  - Note that if this is not installed, the app will launch but will crash when ASR or OCR is enabled!
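To check the first prerequisite before launching, the runtime list printed by the .NET CLI can be filtered. The sketch below assumes `dotnet` is on your PATH and that you are in a POSIX-style shell such as Git Bash; `has_desktop_runtime_9` is a hypothetical helper name, not part of LLPlayer.

```shell
# Reads the output of `dotnet --list-runtimes` on stdin and succeeds
# if a Microsoft.WindowsDesktop.App 9.x runtime is listed.
# (Hypothetical helper for illustration; not shipped with LLPlayer.)
has_desktop_runtime_9() {
  grep -q '^Microsoft\.WindowsDesktop\.App 9\.'
}

# Example (run manually):
#   dotnet --list-runtimes | has_desktop_runtime_9 && echo "Desktop Runtime 9 found"
```

The helper only inspects the text it is given, so it does not cover the Visual C++ Redistributable, which still has to be checked separately.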

[For Nvidia Users (RTX GPU)]

- Note: Having the CUDA driver installed makes subtitle generation faster. If you have a Blackwell GPU (RTX 50xx) and want to use CUDA, CUDA 12.8 is the required version.

- Download builds from release

- Launch LLPlayer

Please open LLPlayer.exe.

- Open Settings

Press CTRL+. or click the settings icon on the seek bar to open the settings window.

- Download Whisper Model for ASR

From Subtitles > ASR section, please download Whisper's models.

You can choose from a variety of models; the larger the model, the higher both the load and the accuracy.

Note that models whose names end in En support English only.

Audio Language allows you to manually set the language of the video (audio). The default is auto-detection.

- Set Translation Target Language

To use the translation function, please set your native language. This is called the target language.

The source language is detected automatically.

From Subtitles > Translate section, please set the Target Language at the top.

The default translation engine is Google Translate.

You can change it to DeepL from the settings below, but you will need to configure an API key (free up to a certain amount of usage).

- Play any video with subtitles!

You can play a video from the context menu or by dropping it onto the player.

For online videos, you can also play one by pasting its URL with CTRL+V or from the context menu.

There are two CC buttons on the bottom seek bar.

The left is the primary subtitle and the right is the secondary subtitle. Please set your learning language for the primary subtitle and your native language for the secondary subtitle.

Adding external subtitles is done in the same way as for videos, either by dragging or from the context menu.

- Open CheatSheet

You can open the built-in CheatSheet by pressing F1 or from the context menu.

All keyboard and mouse controls are explained. Keyboard controls are fully customizable from the settings.

Status: Beta

It has not yet been tested by enough users and may be unstable.

Significant changes may be made to the UI and settings.

I will actively make breaking changes during version 0.X.X.

(Configuration files may not be backward compatible when updated.)

- Clone the Repository

$ git clone git@github.com:umlx5h/LLPlayer.git

- Open Project

Install Visual Studio or JetBrains Rider and open the following slnx file.

$ ./LLPlayer.slnx

- Build

Select the LLPlayer project, then build and run.
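For those who prefer the command line over an IDE, the same steps might be scripted roughly as follows. This is an untested sketch under several assumptions: the repository is cloned over HTTPS, the installed .NET SDK is recent enough to understand `.slnx` solution files, and no extra setup (such as submodules or native dependencies) is needed. `build_llplayer` and `DRY_RUN` are hypothetical names introduced here.

```shell
# Hypothetical helper: clone LLPlayer over HTTPS and build the solution.
# Set DRY_RUN=1 to print the commands instead of running them.
build_llplayer() {
  dest=${1:-LLPlayer}
  ${DRY_RUN:+echo} git clone https://github.com/umlx5h/LLPlayer.git "$dest" &&
    ${DRY_RUN:+echo} dotnet build "$dest/LLPlayer.slnx"
}

# Example (run manually):
#   DRY_RUN=1 build_llplayer   # show what would be executed
#   build_llplayer             # clone into ./LLPlayer and build
```

If the build fails this way, opening the slnx file in Visual Studio or Rider as described above remains the documented route.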

Guiding Principles for LLPlayer

- Be a specialized player for language learning, not a general-purpose player

- It is not intended to replace mpv or VLC

- Support as many languages as possible

- Provide some language-specific features as well

- [ ] Improve core functionality

  - [ ] ASR

    - [X] Enable ASR subtitles with dual subtitles (one of them as translation)

    - [ ] Pause and resume

  - [ ] Subtitles

    - [ ] Customize language preference for primary and secondary subtitles, respectively, and automatic opening

    - [ ] Enhanced local subtitle search

    - [X] Export ASR/OCR subtitle results to SRT file

- [ ] Stabilization of the application

- [ ] Allow customizable mouse shortcuts

- [ ] Documentation / More Help

- [ ] Support for dictionary API or for specific languages (English, Japanese, ...)

- [ ] Dedicated support for Japanese for watching anime.

- [ ] Word Segmentation Handling

- [ ] Incorporate Yomitan or 10ten into the video player

- [ ] Text-to-Speech integration

- [ ] More translation engines, such as local LLMs

- [ ] Support translation as plugin?

- [ ] Cross-Platform Support using Avalonia (Linux / Mac)

- [ ] Context-Aware Translation

- [ ] Word Management (reference to LingQ, Language Reactor)

- [ ] Anki Integration

Contributions are very welcome! Development is easy because it is written in C#/WPF.

If you want to improve the core of the video player rather than the UI and language functions, note that LLPlayer uses Flyleaf as its core player library. If you submit your changes there, I will actively incorporate them into LLPlayer.

https://github.com/SuRGeoNix/Flyleaf

I may not be able to respond to all questions or requests regarding the core player parts, as I do not yet understand many of them.

For more information for developers, please check the following page.

https://github.com/umlx5h/LLPlayer/wiki/For-Developers

LLPlayer would not exist without the following!

In implementing LLPlayer, I used the Flyleaf .NET library instead of libmpv or libVLC, and I think it was the right decision!

The simplicity of the library makes it easy to modify, and development productivity is very high using C#/.NET and Visual Studio.

With libmpv and libVLC, modifications on the library side would be super difficult.

The author has been very helpful in answering beginner questions and responding very quickly.

Flyleaf comes with a sample WPF player, and I used quite a bit of it. Thank you very much.

Subtitle generation is achieved with OpenAI Whisper, whisper.cpp, and its binding whisper.net. LLPlayer simply uses these libraries to generate subtitles. Thank you for providing this for free!

- Sicos1977/TesseractOCR : For Tesseract OCR

- MaterialDesignInXAML/MaterialDesignInXamlToolkit : For UI

- searchpioneer/lingua-dotnet : For Language Detection

- CharsetDetector/UTF-unknown : For Charset Detection

- sskodje/WpfColorFont : For Font Selection

Browser Extension for Netflix. LLPlayer is mainly inspired by this with its functionality and interface. (Not enough functionality yet, though).

AI subtitle generation (ASR) and OCR subtitles are all performed locally.

Therefore, no network communication occurs while subtitles are being generated.

The only exception is the model download, which happens once before first use.

Network communication is required when using the translation function, but this is an optional feature, so it is possible to choose not to communicate at all.

Because LLPlayer is free and open source, you can inspect the code to verify this for yourself.

By default, only the CPU is used to generate subtitles.

Setting Threads to 2 or more from the ASR settings may improve performance.

Note that setting it above the number of CPU threads is meaningless.
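As a rough aid for picking a Threads value, the cap described above can be written as a small calculation. The sketch below is only a helper for deciding what to enter in the ASR settings; LLPlayer does not read it, and `clamp_threads` and `logical_cpus` are hypothetical names. It assumes a POSIX-style shell such as Git Bash.

```shell
# clamp_threads REQUESTED AVAILABLE
# Prints REQUESTED limited to the range 1..AVAILABLE.
clamp_threads() {
  requested=$1
  available=$2
  if [ "$requested" -lt 1 ]; then
    echo 1
  elif [ "$requested" -gt "$available" ]; then
    echo "$available"
  else
    echo "$requested"
  fi
}

# Prints the number of logical CPUs, trying nproc first, then the
# NUMBER_OF_PROCESSORS variable that Windows sets, then getconf.
logical_cpus() {
  if command -v nproc >/dev/null 2>&1; then
    nproc
  elif [ -n "${NUMBER_OF_PROCESSORS:-}" ]; then
    echo "$NUMBER_OF_PROCESSORS"
  else
    getconf _NPROCESSORS_ONLN
  fi
}

# Example (run manually):
#   clamp_threads 16 "$(logical_cpus)"
```

Whether the maximum value is actually the fastest depends on the machine, so treat the result as an upper bound rather than a recommendation.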

If your machine is equipped with an NVIDIA or AMD GPU, you can expect even faster generation by enabling CUDA or Vulkan from the Hardware Options in the ASR settings.

Certain runtimes may require a toolkit to be installed in advance. See the link below for details.

https://github.com/sandrohanea/whisper.net?tab=readme-ov-file#runtimes-description

Available runtimes are used in priority order, starting from the top of the list. Note that changing the hardware options requires a restart.

You can translate words, but you cannot currently look them up in a dictionary.

I plan to support a dictionary API in the future, but it is not currently supported because supporting many languages is difficult.

Instead, you can copy selected words to the clipboard. Certain dictionary tools can monitor the clipboard and search for words.

For English-English dictionaries, LDOCE5 Viewer is highly recommended.

This is currently not available within the video player, but you can send subtitle text to your browser via the clipboard. Thus, you can check the meaning of words with any browser extension, such as Yomitan or 10ten.

A little preparation is required; please check the following page.

https://github.com/umlx5h/LLPlayer/wiki/Browser-Extensions-Integration

yt-dlp.exe is located in the following path.

Plugins/YoutubeDL/yt-dlp.exe

You can download the latest executable from the following page.

https://github.com/yt-dlp/yt-dlp/releases/

If you want to update, please download and copy it to the specified path.
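The manual update can also be scripted. The sketch below assumes `curl` is available in a POSIX-style shell such as Git Bash, that the path above is relative to the folder containing LLPlayer.exe, and that LLPlayer is closed while the file is replaced. `yt_dlp_target` and `update_yt_dlp` are hypothetical helper names; the download URL is the yt-dlp project's "latest release" asset link.

```shell
YT_DLP_URL="https://github.com/yt-dlp/yt-dlp/releases/latest/download/yt-dlp.exe"

# Prints the expected yt-dlp.exe location for a given LLPlayer folder
# (assumption: Plugins/YoutubeDL sits directly under that folder).
yt_dlp_target() {
  printf '%s/Plugins/YoutubeDL/yt-dlp.exe\n' "${1%/}"
}

# Downloads to a temporary name first, then replaces the old executable
# only if the download succeeded.
update_yt_dlp() {
  target=$(yt_dlp_target "$1")
  curl -fL -o "$target.new" "$YT_DLP_URL" && mv "$target.new" "$target"
}

# Example (run manually, with LLPlayer closed):
#   update_yt_dlp "/c/Tools/LLPlayer"
```

Downloading to a temporary file avoids leaving a truncated yt-dlp.exe behind if the transfer is interrupted.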

This project is licensed under the GPL-3.0 license.

Alternative AI tools for LLPlayer

Similar Open Source Tools

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

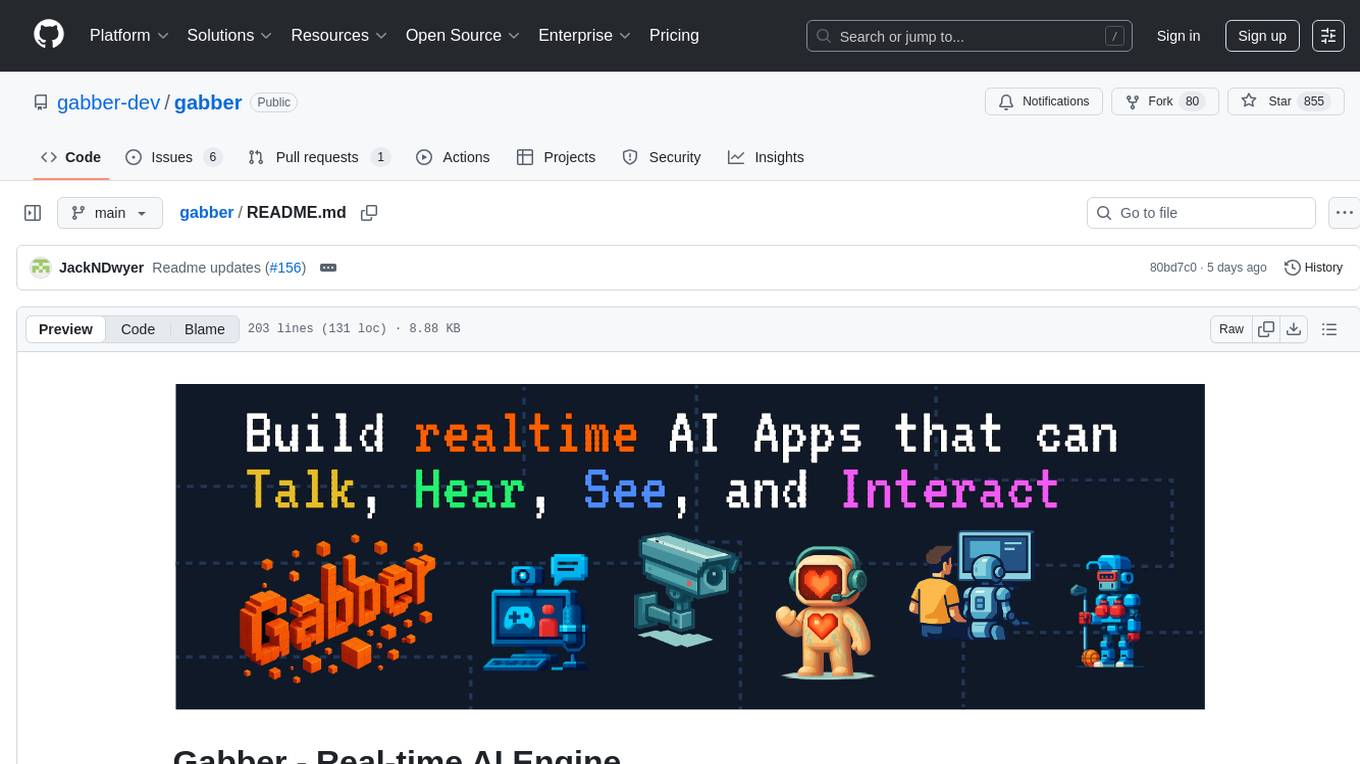

LLavaImageTagger

LLMImageIndexer is an intelligent image processing and indexing tool that leverages local AI to generate comprehensive metadata for your image collection. It uses advanced language models to analyze images and generate captions and keyword metadata. The tool offers features like intelligent image analysis, metadata enhancement, local processing, multi-format support, user-friendly GUI, GPU acceleration, cross-platform support, stop and start capability, and keyword post-processing. It operates directly on image file metadata, allowing users to manage files, add new files, and run the tool multiple times without reprocessing previously keyworded files. Installation instructions are provided for Windows, macOS, and Linux platforms, along with usage guidelines and configuration options.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

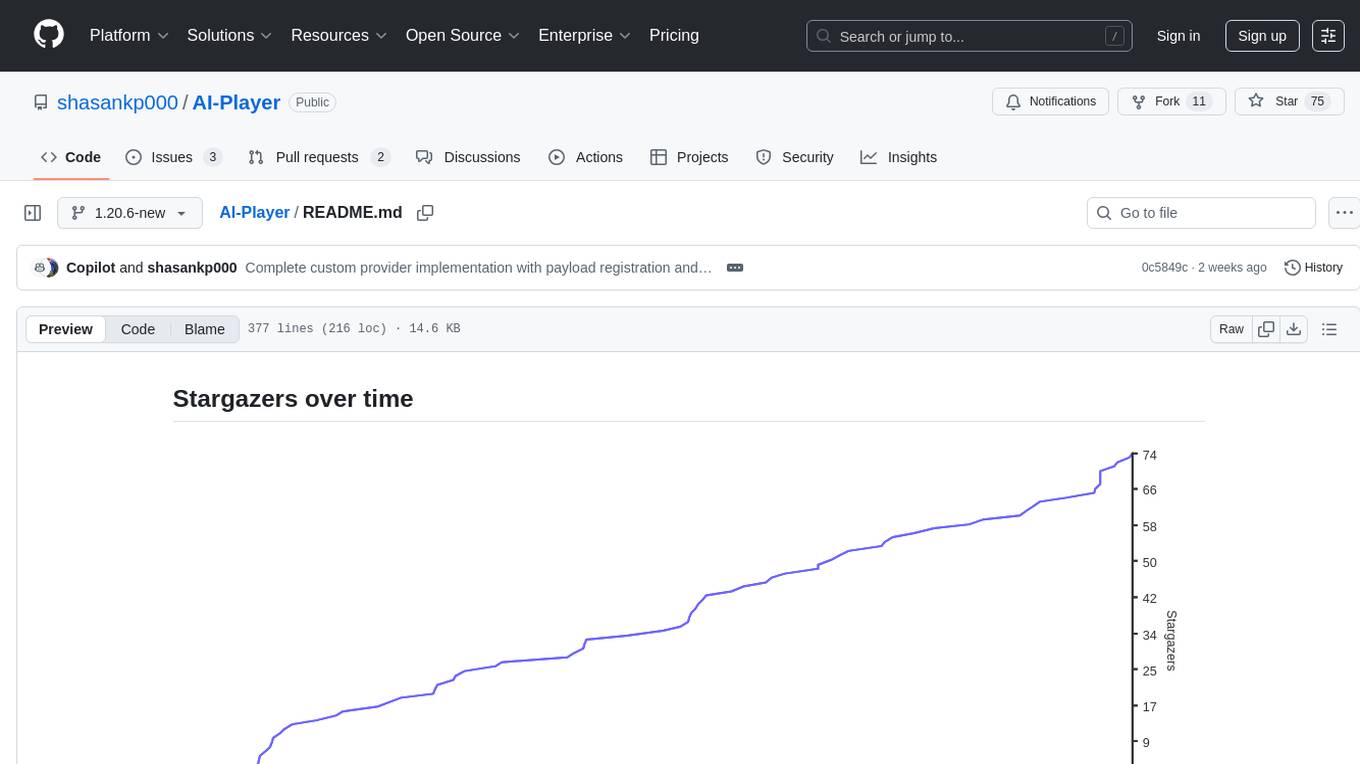

AI-Player

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

spacy-llm

This package integrates Large Language Models (LLMs) into spaCy, featuring a modular system for **fast prototyping** and **prompting** , and turning unstructured responses into **robust outputs** for various NLP tasks, **no training data** required. It supports open-source LLMs hosted on Hugging Face 🤗: Falcon, Dolly, Llama 2, OpenLLaMA, StableLM, Mistral. Integration with LangChain 🦜️🔗 - all `langchain` models and features can be used in `spacy-llm`. Tasks available out of the box: Named Entity Recognition, Text classification, Lemmatization, Relationship extraction, Sentiment analysis, Span categorization, Summarization, Entity linking, Translation, Raw prompt execution for maximum flexibility. Soon: Semantic role labeling. Easy implementation of **your own functions** via spaCy's registry for custom prompting, parsing and model integrations. For an example, see here. Map-reduce approach for splitting prompts too long for LLM's context window and fusing the results back together

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

WritingTools

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

M.I.L.E.S

M.I.L.E.S. (Machine Intelligent Language Enabled System) is a voice assistant powered by GPT-4 Turbo, offering a range of capabilities beyond existing assistants. With its advanced language understanding, M.I.L.E.S. provides accurate and efficient responses to user queries. It seamlessly integrates with smart home devices, Spotify, and offers real-time weather information. Additionally, M.I.L.E.S. possesses persistent memory, a built-in calculator, and multi-tasking abilities. Its realistic voice, accurate wake word detection, and internet browsing capabilities enhance the user experience. M.I.L.E.S. prioritizes user privacy by processing data locally, encrypting sensitive information, and adhering to strict data retention policies.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

ComfyUI-Tara-LLM-Integration

Tara is a powerful node for ComfyUI that integrates Large Language Models (LLMs) to enhance and automate workflow processes. With Tara, you can create complex, intelligent workflows that refine and generate content, manage API keys, and seamlessly integrate various LLMs into your projects. It comprises nodes for handling OpenAI-compatible APIs, saving and loading API keys, composing multiple texts, and using predefined templates for OpenAI and Groq. Tara supports OpenAI and Grok models with plans to expand support to together.ai and Replicate. Users can install Tara via Git URL or ComfyUI Manager and utilize it for tasks like input guidance, saving and loading API keys, and generating text suitable for chaining in workflows.

For similar tasks

BabelDuck

BabelDuck is a highly customizable AI oral conversation practice application for language learners at all levels, with a focus on being more beginner-friendly. It aims to minimize the threshold and mental burden of oral expression practice. The tool supports various AI conversation features such as managing multiple dialogues, customizing system prompts, and providing suggestions for grammar, translation, or expression refinement without interrupting the current conversation. Users can seek further discussion through sub-dialogues when in doubt about AI suggestions, seamlessly returning to the original conversation afterward. BabelDuck also offers voice input and output, integrates browser-built text-to-speech, and Azure TTS, and supports different dialogue preferences, data stored locally for user privacy, multilingual interface, and built-in tutorials.

wordsea

WordSea is a SvelteKit web application that aims to enhance English vocabulary learning by utilizing mnemonic techniques to associate words with visual representations. It addresses the challenge of memorizing abstract concepts by generating definition-based visualizations using LLMs and Text-to-Image models. The visualizations are combined with word definitions, IPA pronunciation, audio recordings, and derivative information to create comprehensive word cards.

easy-web-summarizer

A Python script leveraging advanced language models to summarize webpages and youtube videos directly from URLs. It integrates with LangChain and ChatOllama for state-of-the-art summarization, providing detailed summaries for quick understanding of web-based documents. The tool offers a command-line interface for easy use and integration into workflows, with plans to add support for translating to different languages and streaming text output on gradio. It can also be used via a web UI using the gradio app. The script is dockerized for easy deployment and is open for contributions to enhance functionality and capabilities.

Flare

Flare is an open-source AI-powered decentralized social network client for Android/iOS/macOS, consolidating multiple social networks into one platform. It allows cross-posting content, ensures privacy, and plans to implement features like mixed timeline, AI-powered functions, and support for various platforms. The project is in active development and aims to provide a seamless social networking experience for users.

RSS-Translator

RSS-Translator is an open-source, simple, and self-deployable tool that allows users to translate titles or content, display in bilingual, subscribe to translated RSS/JSON feeds, support multiple translation engines, control update frequency of translation sources, view translation status, cache all translated content to reduce translation costs, view token/character usage for each source, provide AI content summarization, and retrieve full text. It currently supports various translation engines such as Free Translators, DeepL, OpenAI, ClaudeAI, Azure OpenAI, Google Gemini, Google Translate, Microsoft Translate API, Caiyun API, Moonshot AI, Together AI, OpenRouter AI, Groq, Doubao, OpenL, and Kagi API, with more being added continuously.

aimeos

Aimeos is a full-featured e-commerce platform that is ultra-fast, cloud-native, and API-first. It offers a wide range of features including JSON REST API, GraphQL API, multi-vendor support, various product types, subscriptions, multiple payment gateways, admin backend, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. It is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and various cloud environments. Aimeos is designed for scalability, security, and performance, catering to a diverse range of e-commerce needs.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

For similar jobs

rulm

This repository contains language models for the Russian language, as well as their implementation and comparison. The models are trained on a dataset of ChatGPT-generated instructions and chats in Russian. They can be used for a variety of tasks, including question answering, text generation, and translation.

sailor-llm

Sailor is a suite of open language models tailored for South-East Asia (SEA), focusing on languages such as Indonesian, Thai, Vietnamese, Malay, and Lao. Developed with careful data curation, Sailor models are designed to understand and generate text across diverse linguistic landscapes of the SEA region. Built from Qwen 1.5, Sailor encompasses models of varying sizes, spanning from 0.5B to 7B versions for different requirements. Benchmarking results demonstrate Sailor's proficiency in tasks such as question answering, commonsense reasoning, reading comprehension, and more in SEA languages.

awesome-khmer-language

Awesome Khmer Language is a comprehensive collection of resources for the Khmer language, including tools, datasets, research papers, projects/models, blogs/slides, and miscellaneous items. It covers a wide range of topics related to Khmer language processing, such as character normalization, word segmentation, part-of-speech tagging, optical character recognition, text-to-speech, and more. The repository aims to support the development of natural language processing applications for the Khmer language by providing a diverse set of resources and tools for researchers and developers.

Verbiverse

Verbiverse is a tool that uses a large language model to assist in reading PDFs and watching videos, aimed at improving language proficiency. It provides a more convenient and efficient way to use large models through predefined prompts, designed for those looking to enhance their language skills. The tool analyzes unfamiliar words and sentences in foreign language PDFs or video subtitles, providing better contextual understanding compared to traditional dictionary translations or ambiguous meanings. It offers features such as automatic loading of subtitles, word analysis by clicking or double-clicking, and a word database for collecting words. Users can run the tool on Windows x86_64 or ubuntu_22.04 x86_64 platforms by downloading the precompiled packages or by cloning the source code and setting up a virtual environment with Python. It is recommended to use a local model or smaller PDF files for testing due to potential token consumption issues with large files.

rime_wanxiang

Rime Wanxiang is a pinyin input method based on deep optimized lexicon and language model. It features a lexicon with tones, AI and large corpus filtering, and frequency addition to provide more accurate sentence output. The tool supports various input methods and customization options, aiming to enhance user experience through lexicon and transcription. Users can also refresh the lexicon with different types of auxiliary codes using the LMDG toolkit package. Wanxiang offers core features like tone-marked pinyin annotations, phrase composition, and word frequency, with customizable functionalities. The tool is designed to provide a seamless input experience based on lexicon and transcription.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

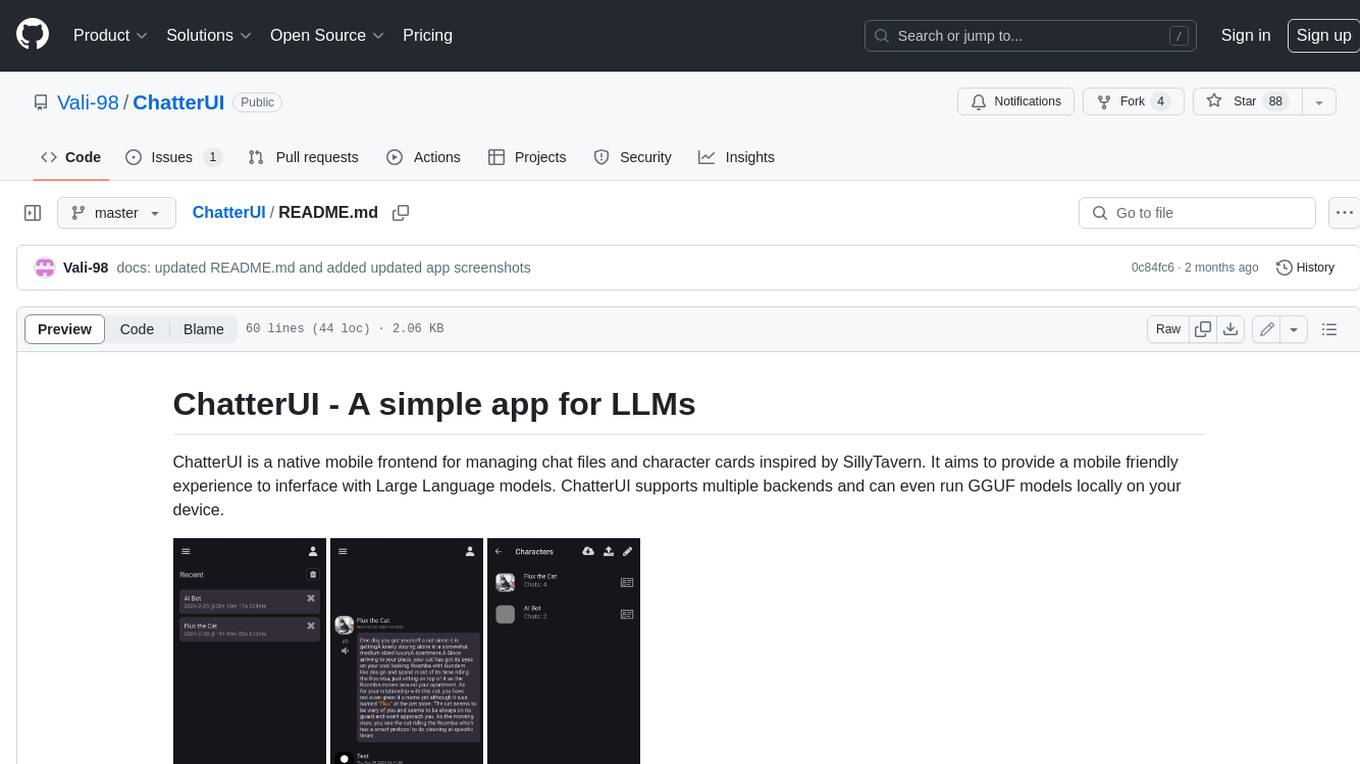

ChatterUI

ChatterUI is a mobile app that allows users to manage chat files and character cards, and to interact with Large Language Models (LLMs). It supports multiple backends, including local, koboldcpp, text-generation-webui, Generic Text Completions, AI Horde, Mancer, Open Router, and OpenAI. ChatterUI provides a mobile-friendly interface for interacting with LLMs, making it easy to use them for a variety of tasks, such as generating text, translating languages, writing code, and answering questions.