collections

Multilingual headless CMS enabling translation into 30+. AI editing and SEO to speed up website globalization.

Stars: 53

Collections is a multilingual headless CMS with AI-powered editing and SEO optimization. It supports automatic translation into +30 languages, making websites more accessible and reaching a broader audience. The platform offers features like multilingual content, Notion-like editor, and post versioning. It is built on a tech stack consisting of PostgreSQL for the database, Node.js and Express for the backend, and React with MUI for the frontend. Collections aims to simplify the process of creating and managing multilingual websites with the help of AI technology.

README:

Collections is a multilingual headless CMS that supports automatic translation into +30 languages.

AI-powered editing and SEO optimization accelerate the multilingual expansion of your website.

In the future, AI search dominates information experiences. Keywords turn into questions, and users expect AI-generated answers instead of website lists.

This makes websites more accessible, reaching a broader audience. Multilingual support, easy for both humans and AI, is key to this.

- 🪄 Editing & SEO by AI

- 🌐 Multilingual content

- 🖊 Notion-like editor

- 🕘️ Post versioning

Extended documentation is available on our website.

How to start using Collections on localhost.

// clone

git clone [email protected]:collectionscms/collections.git

cd collections

// env

cp .env.sample .env

vi .env - make it your environment

// install direnv

brew install direnv

vi ~/.zshrc - add `eval "$(direnv hook zsh)"`

source ~/.zshrc

direnv allow .

// init

yarn install

yarn db:refresh

yarn devAdd the following lines to the /etc/hosts

127.0.0.1 app.test.com

127.0.0.1 en.test.com

127.0.0.1 ja.test.comOpen http://app.test.com:4000/admin to view your running app.

When you're ready for production, run yarn build then yarn start.

To chat with other community members you can join the Collections Discord.

- DB - PostgreSQL

- Backend - Node.js, Express

- Frontend - React, MUI

Enjoy!!!

See the LICENSE file for licensing information.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for collections

Similar Open Source Tools

collections

Collections is a multilingual headless CMS with AI-powered editing and SEO optimization. It supports automatic translation into +30 languages, making websites more accessible and reaching a broader audience. The platform offers features like multilingual content, Notion-like editor, and post versioning. It is built on a tech stack consisting of PostgreSQL for the database, Node.js and Express for the backend, and React with MUI for the frontend. Collections aims to simplify the process of creating and managing multilingual websites with the help of AI technology.

ProxyAI

ProxyAI is an open-source AI copilot for JetBrains, offering advanced code assistance features powered by top-tier language models. Users can customize their coding experience, receive AI-suggested code changes, autocomplete suggestions, and context-aware naming suggestions. The tool also allows users to chat with images, reference project files and folders, web docs, git history, and search the web. ProxyAI prioritizes user privacy by not collecting sensitive information and only gathering anonymous usage data with consent.

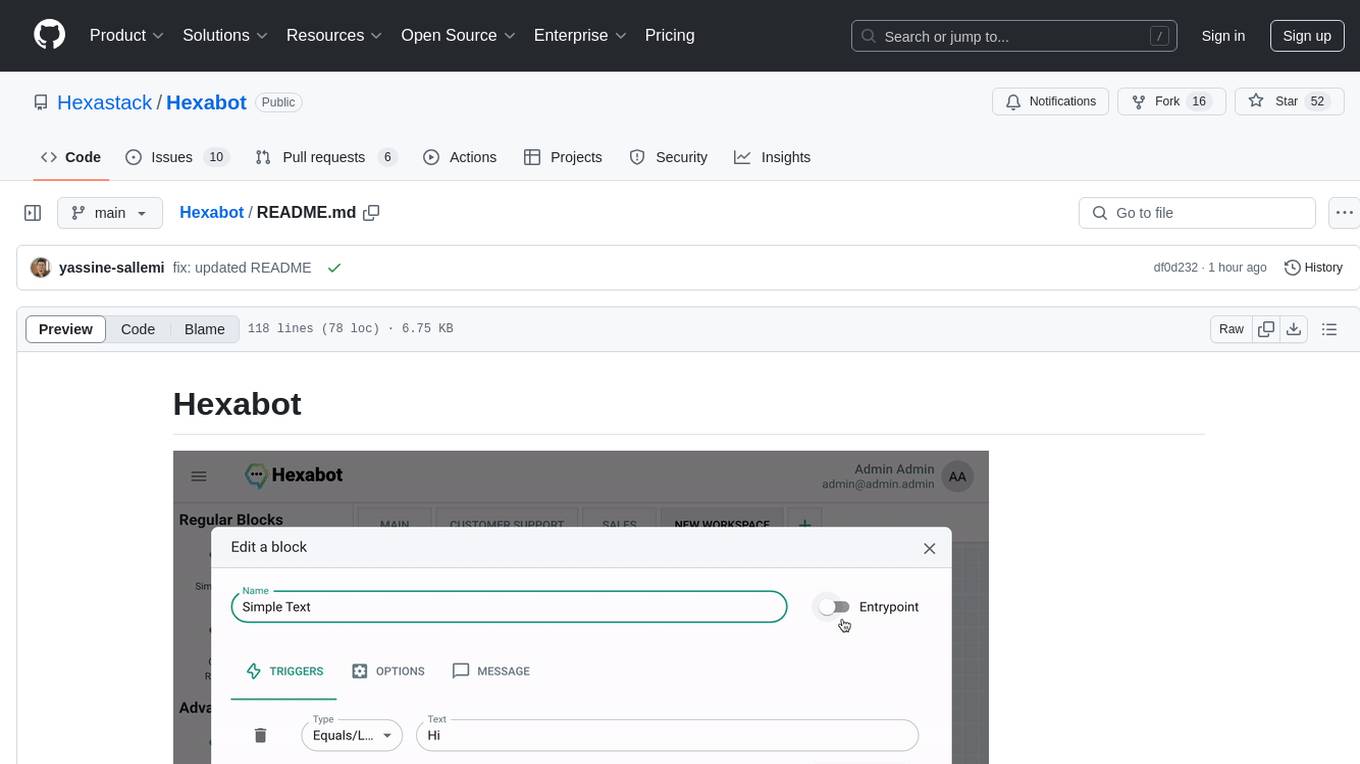

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

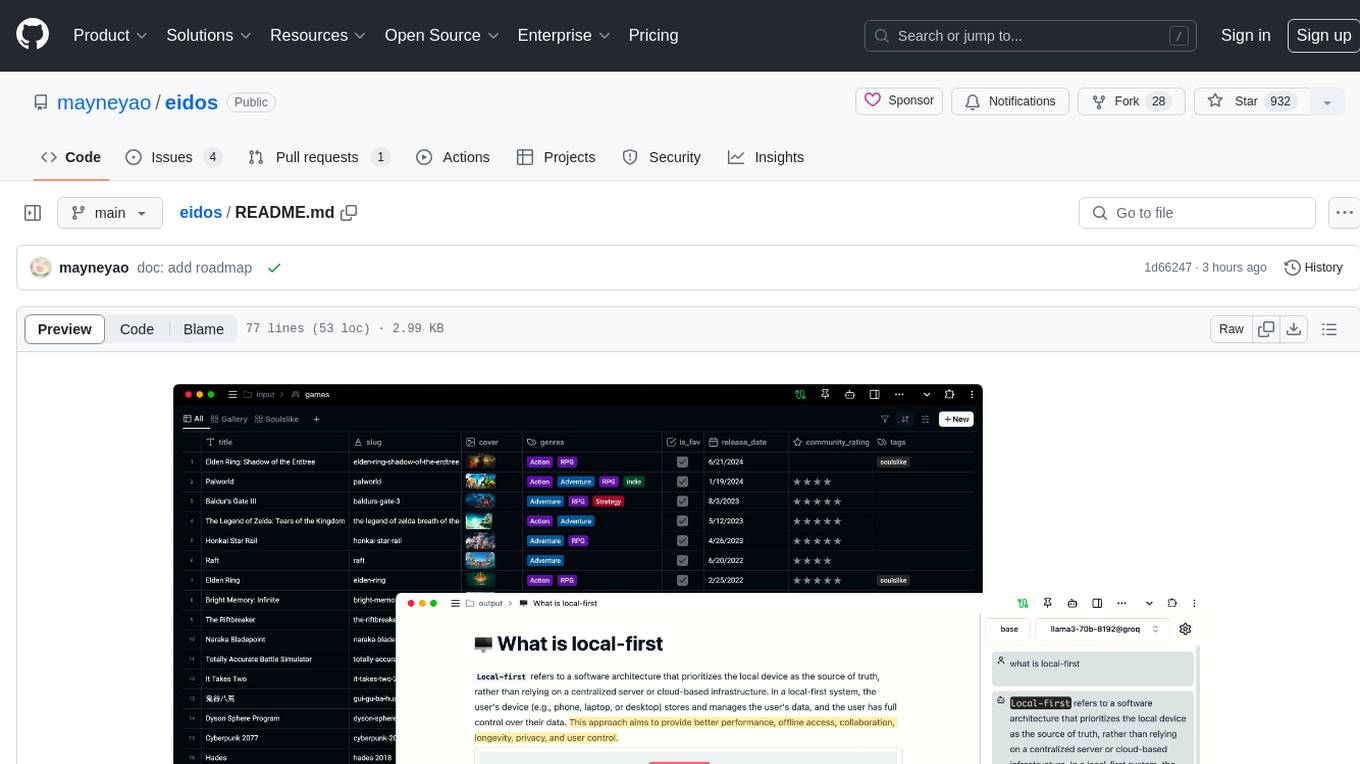

eidos

Eidos is an extensible framework for managing personal data in one place. It runs inside the browser as a PWA with offline support. It integrates AI features for translation, summarization, and data interaction. Users can customize Eidos with Prompt extension, JavaScript for Formula functions, TypeScript/JavaScript for data processing logic, and build apps using any framework. Eidos is developer-friendly with API & SDK, and uses SQLite standardization for data tables.

openroleplay.ai

Open Roleplay is an open-source alternative to Character.ai. It allows users to create their own AI characters, customize them, and generate images and voices for them. Open Roleplay also supports group chat and automatic translation. The tool is built with Next.js, React.js, Tailwind CSS, Vercel, Convex, and Clerk.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

superduper

superduper.io is a Python framework that integrates AI models, APIs, and vector search engines directly with existing databases. It allows hosting of models, streaming inference, and scalable model training/fine-tuning. Key features include integration of AI with data infrastructure, inference via change-data-capture, scalable model training, model chaining, simple Python interface, Python-first approach, working with difficult data types, feature storing, and vector search capabilities. The tool enables users to turn their existing databases into centralized repositories for managing AI model inputs and outputs, as well as conducting vector searches without the need for specialized databases.

UglyFeed

UglyFeed is a simple Python application designed to retrieve, aggregate, filter, rewrite, evaluate, and serve content (RSS feeds) written by a large language model. It provides features such as retrieving RSS feeds, aggregating feed items by similarity, rewriting content using various APIs, saving rewritten feeds to JSON files, converting JSON to valid RSS feed, serving XML feed via an HTTP server, deploying XML feed to GitHub or GitLab, and evaluating generated content. The tool can be used for smart content curation, dynamic blog generation, interactive educational tools, personalized reading experiences, brand monitoring, multilingual content delivery, enhanced RSS feeds, creative writing assistance, content repurposing, and fake news detection datasets. It is modular, extensible, and aims to empower users in content manipulation and delivery.

devchat

DevChat is an open-source workflow engine that enables developers to create intelligent, automated workflows for engaging with users through a chat panel within their IDEs. It combines script writing flexibility, latest AI models, and an intuitive chat GUI to enhance user experience and productivity. DevChat simplifies the integration of AI in software development, unlocking new possibilities for developers.

deep-research-web-ui

This web UI tool is designed to enhance the user experience of the deep-research repository by providing a safe and secure environment for conducting AI research. It offers features such as real-time feedback, search visualization, export as PDF, support for various AI models, and Docker deployment. Users can interact with multiple AI providers and web search services, making research processes more efficient and accessible. The tool also includes recent updates that improve functionality and fix bugs, ensuring a seamless experience for users.

minimal-chat

MinimalChat is a minimal and lightweight open-source chat application with full mobile PWA support that allows users to interact with various language models, including GPT-4 Omni, Claude Opus, and various Local/Custom Model Endpoints. It focuses on simplicity in setup and usage while being fully featured and highly responsive. The application supports features like fully voiced conversational interactions, multiple language models, markdown support, code syntax highlighting, DALL-E 3 integration, conversation importing/exporting, and responsive layout for mobile use.

frontman

Frontman is a framework-integrated AI coding agent that allows users to edit their frontend directly in the browser. It enables users to point at any element in their running app, describe the change they want, and Frontman edits the source code for them without the need for copy-pasting or context switching. The tool supports popular frameworks like Next.js, Astro, and Vite, providing features such as point-and-click editing, real-time streaming of AI-generated edits, and framework-aware context. Frontman also offers an open protocol layer for decoupled and extensible client, server, and framework adapters.

chainlit

Chainlit is an open-source async Python framework which allows developers to build scalable Conversational AI or agentic applications. It enables users to create ChatGPT-like applications, embedded chatbots, custom frontends, and API endpoints. The framework provides features such as multi-modal chats, chain of thought visualization, data persistence, human feedback, and an in-context prompt playground. Chainlit is compatible with various Python programs and libraries, including LangChain, Llama Index, Autogen, OpenAI Assistant, and Haystack. It offers a range of examples and a cookbook to showcase its capabilities and inspire users. Chainlit welcomes contributions and is licensed under the Apache 2.0 license.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

toolmate

ToolMate AI is an advanced AI companion that integrates agents, tools, and plugins to excel in conversations, generative work, and task execution. It supports multi-step actions, allowing users to customize workflows for tackling complex projects with ease. The tool offers a wide range of AI backends and models, including Ollama, Llama.cpp, Groq Cloud API, OpenAI API, and Google Gemini via Vertex AI. Users can easily switch between backends and leverage AI models like wizardlm2 and mixtral. ToolMate AI stands out for its distinctive features such as tool calling for any LLMs, running multiple tools in one go, highly customizable plugins, and integration with popular AI tools. It also supports quick tool calling using '@' notation and enables the execution of computing tasks on demand. With features like multiple tools in one go, customizable plugins, system command and fabric integration, GPU offloading support, real-time data access, and device information retrieval, ToolMate AI offers a comprehensive solution for various tasks and content creation.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

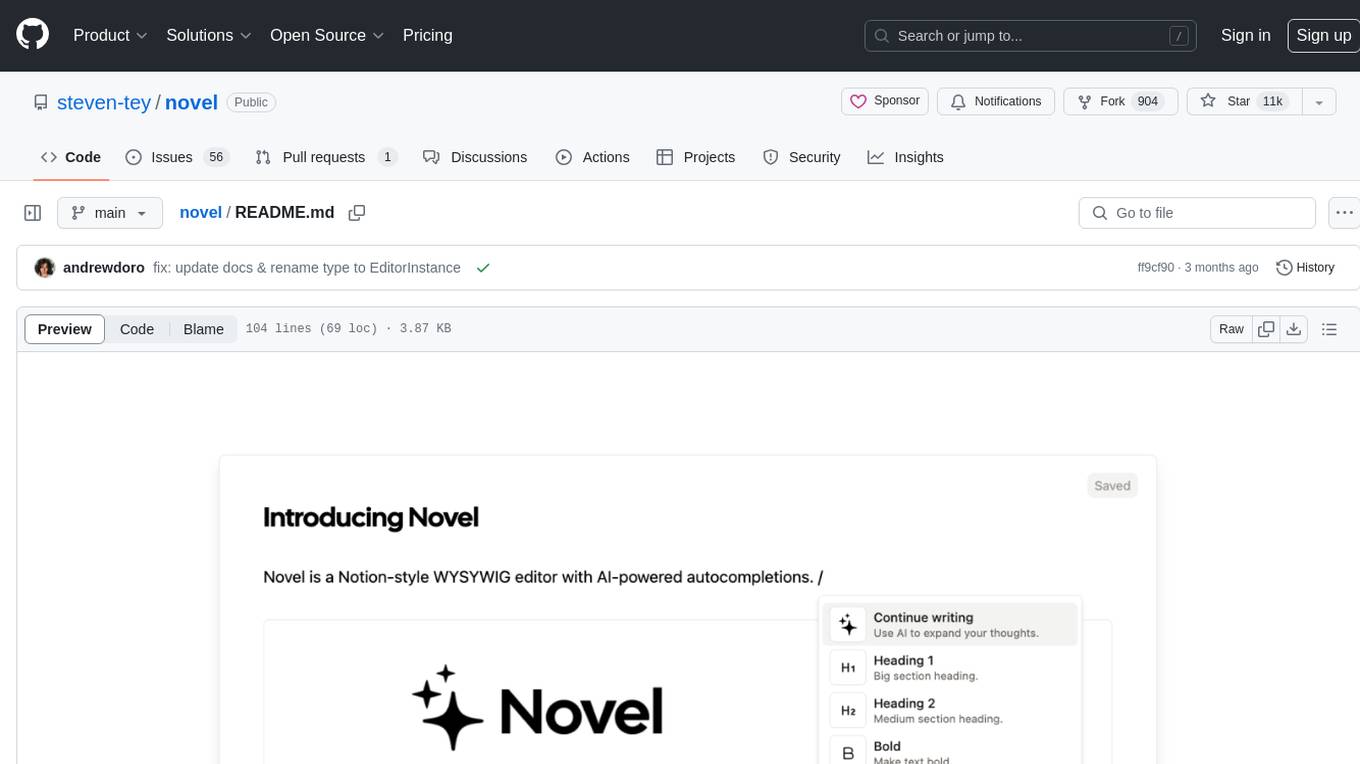

novel

Novel is an open-source Notion-style WYSIWYG editor with AI-powered autocompletions. It allows users to easily create and edit content with the help of AI suggestions. The tool is built on a modern tech stack and supports cross-framework development. Users can deploy their own version of Novel to Vercel with one click and contribute to the project by reporting bugs or making feature enhancements through pull requests.

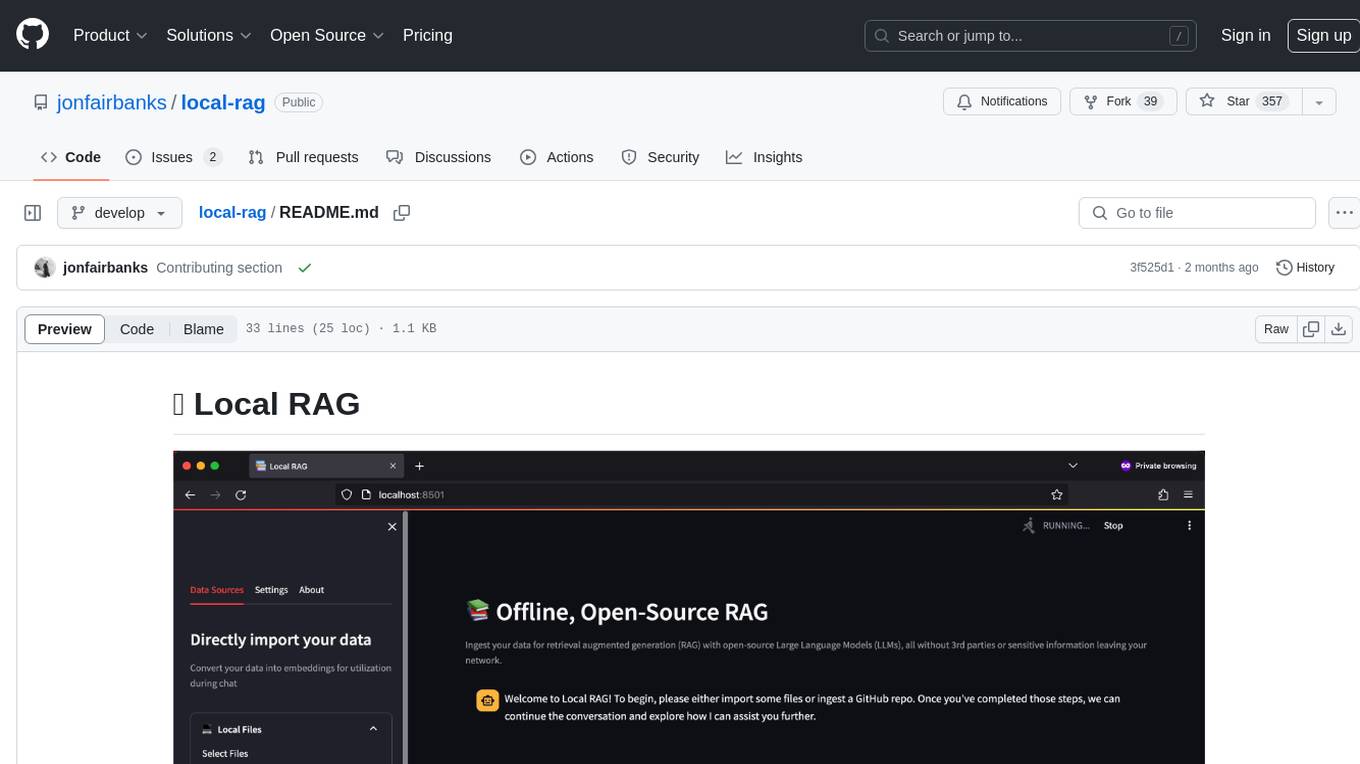

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

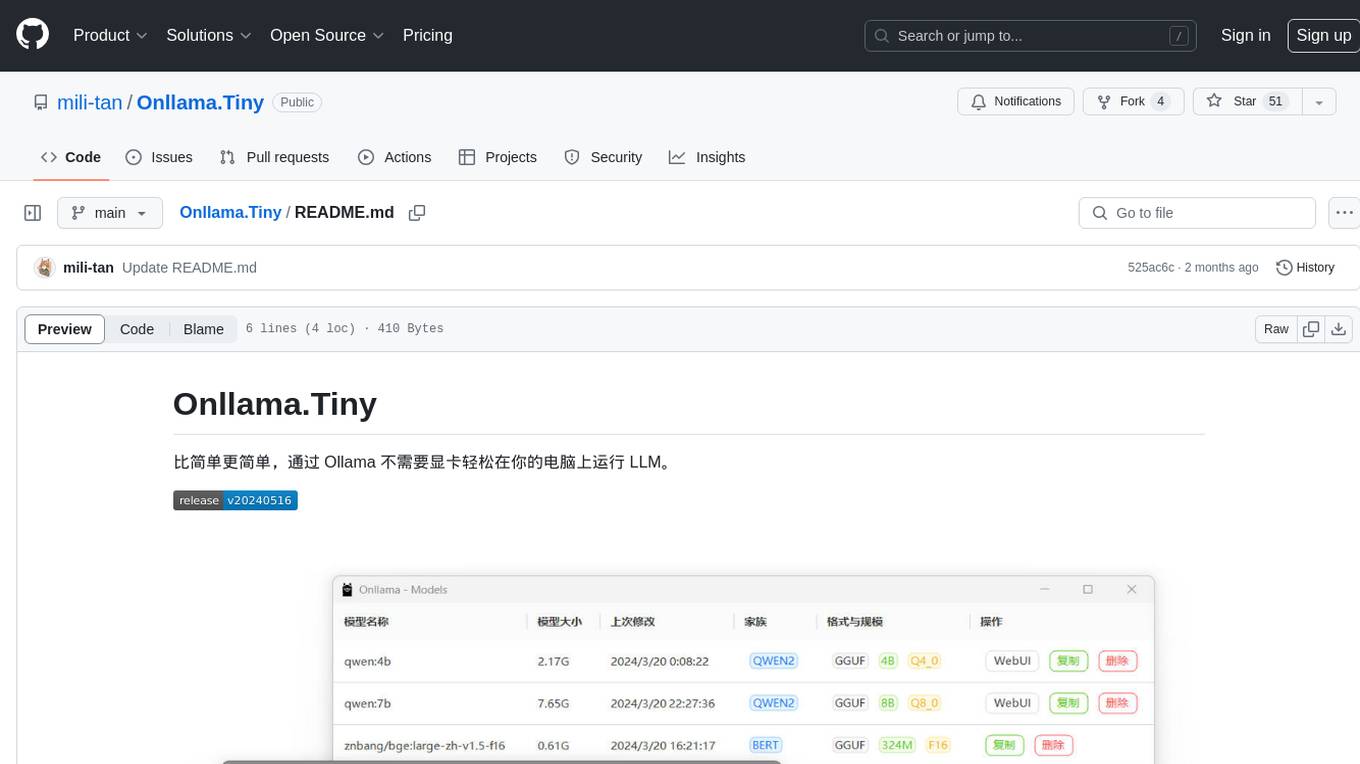

Onllama.Tiny

Onllama.Tiny is a lightweight tool that allows you to easily run LLM on your computer without the need for a dedicated graphics card. It simplifies the process of running LLM, making it more accessible for users. The tool provides a user-friendly interface and streamlines the setup and configuration required to run LLM on your machine. With Onllama.Tiny, users can quickly set up and start using LLM for various applications and projects.

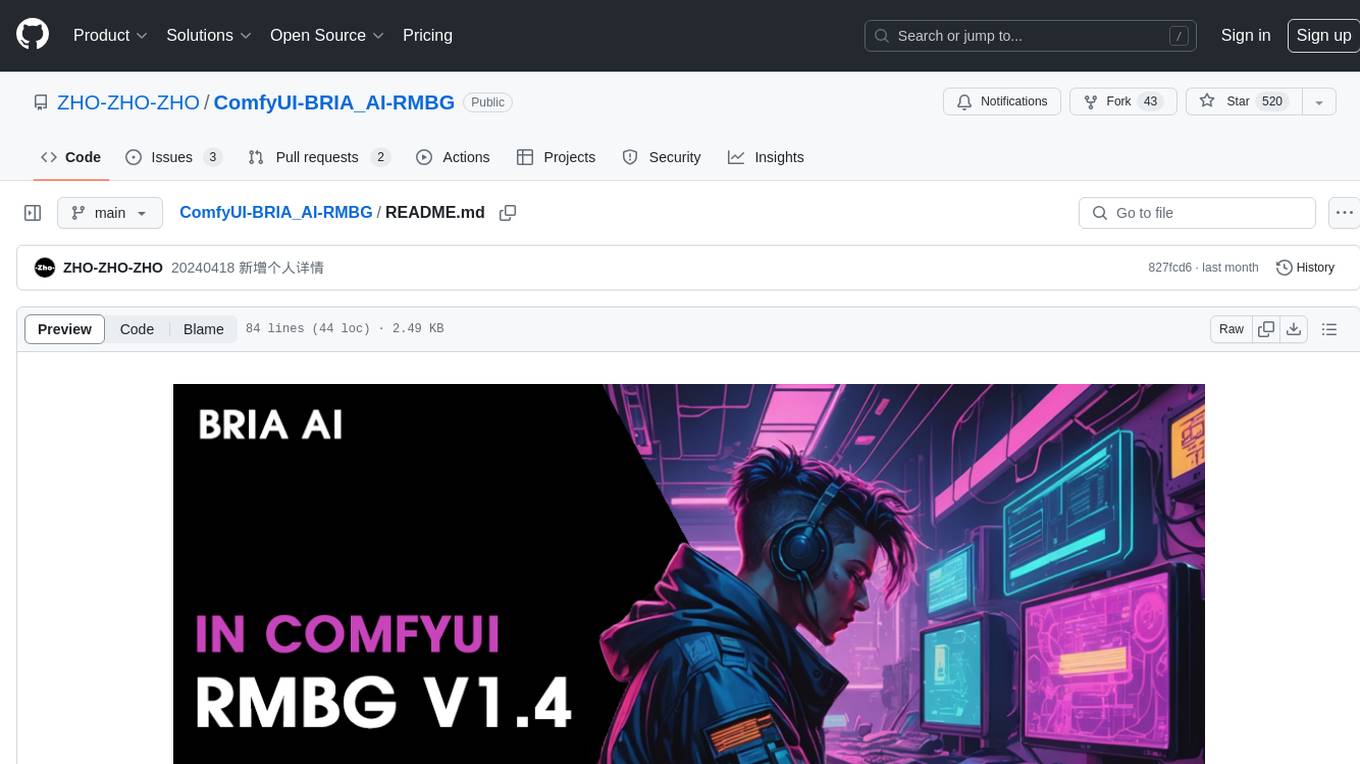

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

semantic-kernel-docs

The Microsoft Semantic Kernel Documentation GitHub repository contains technical product documentation for Semantic Kernel. It serves as the home of technical content for Microsoft products and services. Contributors can learn how to make contributions by following the Docs contributor guide. The project follows the Microsoft Open Source Code of Conduct.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.