batteries-included

Batteries Included is a Kubernetes-based software platform for databases, AI, web services, monitoring, and more.

Stars: 58

Batteries Included is an all-in-one platform for building and running modern applications, simplifying cloud infrastructure complexity. It offers production-ready capabilities through an intuitive interface, focusing on automation, security, and enterprise-grade features. The platform includes databases like PostgreSQL and Redis, AI/ML capabilities with Jupyter notebooks, web services deployment, security features like SSL/TLS management, and monitoring tools like Grafana dashboards. Batteries Included is designed to streamline infrastructure setup and management, allowing users to concentrate on application development without dealing with complex configurations.

README:

Welcome! Batteries Included is your all-in-one platform for building and running modern applications. We take the complexity out of cloud infrastructure, giving you production-ready capabilities through an intuitive and easy-to-use interface.

🚀 Launch Production-Ready Infrastructure in Minutes

- Deploy databases, monitoring, and web services with just a few clicks

- Automatic scaling, high availability, and security out of the box

- Built on battle-tested open source technologies like Kubernetes

💻 Focus on Building, Not Infrastructure

- No more wrestling with YAML or complex configurations

- Automated setup of best practices for security, monitoring, and operations

- Unified interface for managing all your services

- Runs wherever you want it to!

🏢 Enterprise-Grade Features, Developer-Friendly Interface

- AI/ML capabilities with integrated Jupyter notebooks and vector databases

- Automated PostgreSQL, Redis, and MongoDB deployment and management

- Built-in monitoring with Grafana dashboards and VictoriaMetrics

- Secure networking with automatic SSL/TLS certificate management

- OAuth/SSO integration with Keycloak

The fastest way to experience Batteries Included:

- Visit batteriesincl.com and create an account

- Choose your installation type (cloud, local, or existing cluster)

- Run the provided installation command

- Access your ready-to-use infrastructure dashboard

- 🐘 PostgreSQL with automated backups and monitoring

- ⚡️ Redis for caching and message queues

- 🍃 MongoDB-compatible FerretDB

- 🎯 Vector database capabilities with pgvector

- 📓 Jupyter notebooks with pre-configured environments

- 🤖 Ollama for local LLM deployment (including DeepSeek, Phi-2, Nomic, and more)

- 🎮 GPU support and scaling (coming soon)

- 🚀 Automated deployment and scaling

- 🔒 Built-in SSL/TLS certificate management

- ⚖️ Load balancing and traffic management

- 🔄 Zero-downtime updates and serverless deployment

- 🛡️ Automated certificate management

- 🔐 OAuth/SSO integration

- 🌐 Network policies and mTLS

- 🗝️ Secure secret management

- 📊 Pre-configured Grafana dashboards

- 📈 Metrics collection with VictoriaMetrics

- 📝 Monitor all your clusters from one place!

If you want to try Batteries Included without creating an account, you can run it locally. Note that a local installation stops working after a few hours if it cannot report its status.

- Download bi from the latest GitHub release

- Ensure your machine has Docker or compatible software running and configured (Linux is best supported)

- From master, run bi start bootstrap/local.spec.json
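The local steps above depend on three things being in place: the bi binary, a reachable Docker (or compatible) daemon, and the spec file from the repository checkout. A minimal pre-flight sketch follows; the helper names have_cmd and preflight are hypothetical and not part of bi, and the sketch assumes bi has been placed on your PATH.

```shell
#!/usr/bin/env sh
# Pre-flight sketch for the local trial. have_cmd and preflight are
# hypothetical helper names, not part of the bi tool.

# Succeeds when the named command is on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Prints one line per unmet prerequisite; prints nothing when all are met.
# $1: path to the spec file (defaults to the path used in the steps above).
preflight() {
  pf_spec="${1:-bootstrap/local.spec.json}"
  have_cmd bi || echo "bi is not on PATH"
  if have_cmd docker; then
    # docker info fails when the daemon cannot be reached.
    docker info >/dev/null 2>&1 || echo "docker is installed but the daemon is not reachable"
  else
    echo "docker (or a compatible CLI) is not on PATH"
  fi
  [ -f "$pf_spec" ] || echo "spec file not found: $pf_spec"
}

# Usage, from the repository root:
#   problems="$(preflight)"
#   [ -z "$problems" ] && bi start bootstrap/local.spec.json
```

If preflight prints nothing, the three prerequisites it knows about are met; it does not verify anything else about the installation.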

To get started developing or changing the code, make sure your operating system is set up and ready to go. We recommend a Linux machine, but our code should work on any system with a Docker daemon (or compatible) and a Unix-like shell. You'll need to install a few dependencies, set up asdf, and then start a Kubernetes cluster configured for development.

Depending on your Linux distribution, you'll need to install the following dependencies:

For Ubuntu/apt-based systems:

sudo apt-get install -y docker.io build-essential curl git cmake \

libssl-dev pkg-config autoconf \

m4 libncurses5-dev inotify-tools direnv jq

# Building and Testing deps not needed for most uses

sudo apt-get install -y chromium-browser chromium-chromedriver

For Fedora/dnf-based systems:

sudo dnf install -y docker gcc gcc-c++ make curl git \

cmake openssl-devel pkgconfig autoconf m4 ncurses-devel \

inotify-tools direnv jq

# Building/Testing deps

sudo dnf install -y chromium chromedriver

After installing the dependencies, ensure Docker is enabled and your user has the right privileges:

sudo systemctl enable docker

sudo systemctl start docker

sudo usermod -aG docker $USER

newgrp docker

For macOS, you will need to install the following dependencies in addition to Docker Desktop or Podman:

brew install cmake flock direnv

asdf is a version manager for multiple languages. We use it to manage the tools that are useful in the project. You will need to install asdf and a few plugins:

git clone https://github.com/asdf-vm/asdf.git ~/.asdf --branch v0.14.0

Then add the following to your bash profile (other shells will vary slightly):

. $HOME/.asdf/asdf.sh

. $HOME/.asdf/completions/asdf.bash

eval "$(direnv hook bash)"

Then install all the needed plugins:

asdf plugin add erlang

asdf plugin add elixir

asdf plugin add nodejs

asdf plugin add golang

asdf plugin add goreleaser

asdf plugin add kubectl

asdf plugin add shfmt

asdf plugin add awscli

asdf plugin add kind

asdf install

This monorepo contains multiple parts that come together to build the Batteries Included platform. bix is our development tool that helps manage the different parts of the project.

TLDR: bix local bootstrap && bix local dev
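Before running the commands above, it can be worth confirming that every asdf plugin from the setup step is actually present. The sketch below compares the output of asdf plugin list against the plugin names given earlier; the function name missing_plugins and the variable ASDF_PLUGINS are hypothetical, chosen only for this example.

```shell
#!/usr/bin/env sh
# Plugin names taken from the asdf setup step in this README.
ASDF_PLUGINS="erlang elixir nodejs golang goreleaser kubectl shfmt awscli kind"

# Reads installed plugin names on stdin (one per line) and prints every
# name from ASDF_PLUGINS that is not among them.
# missing_plugins is a hypothetical helper, not an asdf command.
missing_plugins() {
  mp_installed="$(cat)"
  for mp_name in $ASDF_PLUGINS; do
    printf '%s\n' "$mp_installed" | grep -qx "$mp_name" || printf '%s\n' "$mp_name"
  done
}

# Usage: add only what is missing, then install the pinned versions.
#   asdf plugin list | missing_plugins | while read -r p; do asdf plugin add "$p"; done
#   asdf install
```

Because the function only reads names from standard input, it can be tried without asdf installed by piping in any list of names.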

static contains the code that builds and deploys Batteries Included.

Public posts are in static/src/content/posts.

There are other docs pages in static/src/content/docs.

platform_umbrella is the main directory. It uses the Phoenix framework, and there are several different Elixir applications in platform_umbrella/apps, while the global configuration is in platform_umbrella/config.

This is the application for shared components and UI. It is used in Control Server Web and Home Server Web, and runs an instance of Storybook in development.

This is the main Ecto repo for the control server, which gets installed on the customer's Kubernetes cluster.

This is the Phoenix web application. It is built mostly with Phoenix.Component, Phoenix.LiveComponent, and Phoenix.LiveView, and makes extensive use of Tailwind CSS for styling.

This is the code that collects and stores billing usage. It will be the centralized home server that all clusters report to for version updates and billing.

This is the UI for billing, and starting new clusters.

To start the development environment:

- Initialize the Kind Kubernetes cluster, PostgreSQL services, and seed the databases:

bix local bootstrap

- Launch the web servers and background processes:

bix local dev

This will start three web servers:

- http://control.127-0-0-1.batrsinc.co:4000 - Control server

- http://home.127-0-0-1.batrsinc.co:4100 - Home base server

- http://common.127-0-0-1.batrsinc.co:4200 - Common UI server
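The servers listed above can take a moment to come up after bix local dev starts. A small polling sketch like the following can confirm that each one answers; the function name wait_for and the variable DEV_URLS are hypothetical, and the retry count and timeout are arbitrary assumptions rather than project settings.

```shell
#!/usr/bin/env sh
# URLs copied from the list of development servers above.
DEV_URLS="http://control.127-0-0-1.batrsinc.co:4000
http://home.127-0-0-1.batrsinc.co:4100
http://common.127-0-0-1.batrsinc.co:4200"

# Polls a URL once per second until it returns any HTTP response or the
# attempts run out. wait_for is a hypothetical helper for this sketch.
# $1: URL to poll, $2: number of attempts (default 30)
wait_for() {
  wf_url="$1"
  wf_tries="${2:-30}"
  while [ "$wf_tries" -gt 0 ]; do
    # Without --fail, curl exits 0 for any HTTP status, so this only
    # checks that something is listening and speaking HTTP.
    curl --silent --output /dev/null --max-time 2 "$wf_url" && return 0
    wf_tries=$((wf_tries - 1))
    sleep 1
  done
  return 1
}

# Usage:
#   for u in $DEV_URLS; do
#     if wait_for "$u"; then echo "up: $u"; else echo "not responding: $u"; fi
#   done
```

This only shows that each port responds; it says nothing about whether the application behind it started cleanly, so the IEx console remains the better place to inspect process status.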

The bix local dev command also opens an IEx console where you can explore the process status.

To open the project in VSCode:

- Navigate to the project directory:

cd batteries-included

- Launch VSCode with the workspace configuration:

code .vscode/everything.code-workspace

Alternative AI tools for batteries-included

Similar Open Source Tools

AppFlowy-Cloud

AppFlowy Cloud is a secure user authentication, file storage, and real-time WebSocket communication tool written in Rust. It is part of the AppFlowy ecosystem, providing an efficient and collaborative user experience. The tool offers deployment guides, development setup with Rust and Docker, debugging tips for components like PostgreSQL, Redis, Minio, and Portainer, and guidelines for contributing to the project.

middleware

Middleware is an open-source engineering management tool that helps engineering leaders measure and analyze team effectiveness using DORA metrics. It integrates with CI/CD tools, automates DORA metric collection and analysis, visualizes key performance indicators, provides customizable reports and dashboards, and integrates with project management platforms. Users can set up Middleware using Docker or manually, generate encryption keys, set up backend and web servers, and access the application to view DORA metrics. The tool calculates DORA metrics using GitHub data, including Deployment Frequency, Lead Time for Changes, Mean Time to Restore, and Change Failure Rate. Middleware aims to provide DORA metrics to users based on their Git data, simplifying the process of tracking software delivery performance and operational efficiency.

coral-cloud

Coral Cloud Resorts is a sample hospitality application that showcases Data Cloud, Agents, and Prompts. It provides highly personalized guest experiences through smart automation, content generation, and summarization. The app requires licenses for Data Cloud, Agents, Prompt Builder, and Einstein for Sales. Users can activate features, deploy metadata, assign permission sets, import sample data, and troubleshoot common issues. Additionally, the repository offers integration with modern web development tools like Prettier, ESLint, and pre-commit hooks for code formatting and linting.

dream-team

Build your dream team with Autogen is a repository that leverages Microsoft Autogen 0.4, Azure OpenAI, and Streamlit to create an end-to-end multi-agent application. It provides an advanced multi-agent framework based on Magentic One, with features such as a friendly UI, single-line deployment, secure code execution, managed identities, and observability & debugging tools. Users can deploy Azure resources and the app with simple commands, work locally with virtual environments, install dependencies, update configurations, and run the application. The repository also offers resources for learning more about building applications with Autogen.

xlang

XLang™ is a language designed for AI and IoT applications, offering dynamic and high-performance capabilities. It excels in distributed computing and integrates with popular languages like C++, Python, and JavaScript. It is notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. It features an optimized tensor computing architecture for constructing neural networks through tensor expressions, and it automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

skynet

Skynet is an API server for AI services that wraps several apps and models. It consists of specialized modules that can be enabled or disabled as needed. Users can utilize Skynet for tasks such as summaries and action items with vllm or Ollama, live transcriptions with Faster Whisper via websockets, and RAG Assistant. The tool requires Poetry and Redis for operation. Skynet provides a quickstart guide for both Summaries/Assistant and Live Transcriptions, along with instructions for testing docker changes and running demos. Detailed documentation on configuration, running, building, and monitoring Skynet is available in the docs. Developers can contribute to Skynet by installing the pre-commit hook for linting. Skynet is distributed under the Apache 2.0 License.

orama-core

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced AI capabilities in projects.

airo

Airo is a tool designed to simplify the process of deploying containers to self-hosted servers. It allows users to focus on building their products without the complexity of Kubernetes or CI/CD pipelines. With Airo, users can easily build and push Docker images, deploy instantly with a single command, update configurations securely using SSH, and set up HTTPS and reverse proxy automatically using Caddy.

AgentIQ

AgentIQ is a flexible library designed to seamlessly integrate enterprise agents with various data sources and tools. It enables true composability by treating agents, tools, and workflows as simple function calls. With features like framework agnosticism, reusability, rapid development, profiling, observability, evaluation system, user interface, and MCP compatibility, AgentIQ empowers developers to move quickly, experiment freely, and ensure reliability across agent-driven projects.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It supports running on Kubernetes for production-ready deployment, with support for various Kubernetes distributions and external components like Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and PIP, with the option to test local code changes using mini-langstream.

mattermost-plugin-ai

The Mattermost AI Copilot Plugin is an extension that adds functionality for local and third-party LLMs within Mattermost v9.6 and above. It is currently experimental and allows users to interact with AI models seamlessly. The plugin enhances the user experience by providing AI-powered assistance and features for communication and collaboration within the Mattermost platform.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

manifold

Manifold is a powerful platform for workflow automation using AI models. It supports text generation, image generation, and retrieval-augmented generation, integrating seamlessly with popular AI endpoints. Additionally, Manifold provides robust semantic search capabilities using PGVector combined with the SEFII engine. It is under active development and not production-ready.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

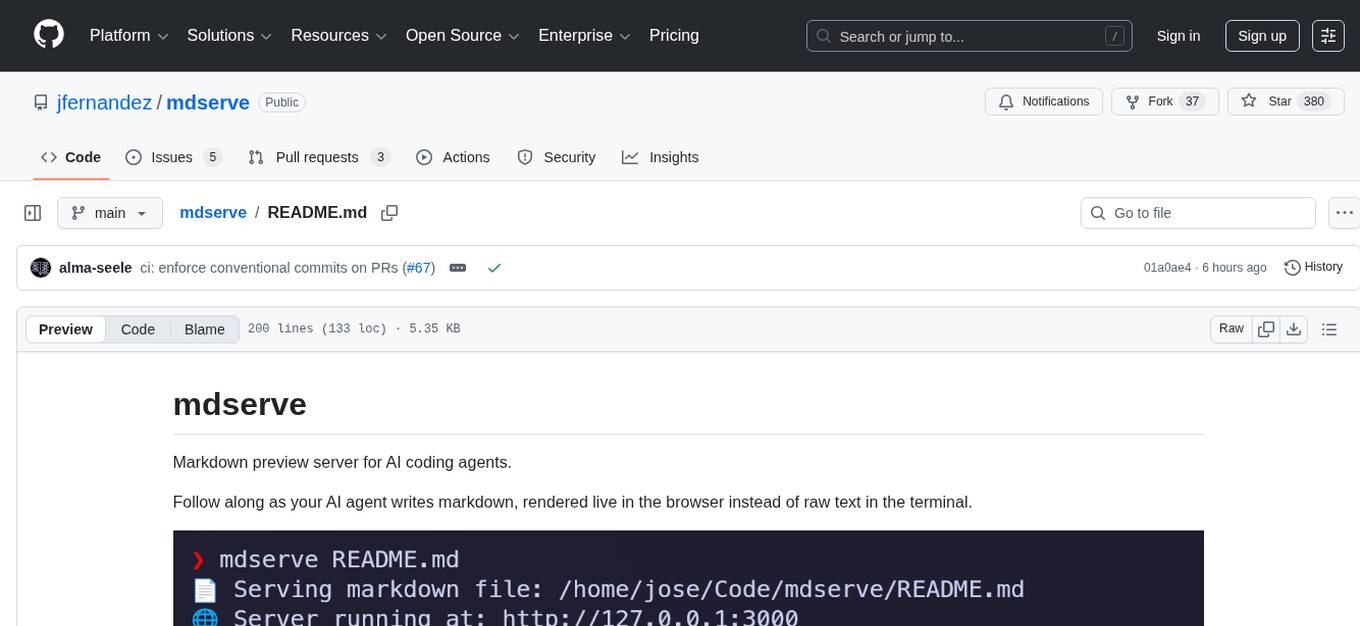

mdserve

Markdown preview server for AI coding agents. mdserve is a tool that allows AI agents to write markdown and see it rendered live in the browser. It features zero configuration, single binary installation, instant live reload via WebSocket, ephemeral sessions, and agent-friendly content support. It is not a documentation site generator, static site server, or general-purpose markdown authoring tool. mdserve is designed for AI coding agents to produce content like tables, diagrams, and code blocks.

For similar tasks

pgedge-postgres-mcp

The pgedge-postgres-mcp repository contains a set of tools and scripts for managing and monitoring PostgreSQL databases in an edge computing environment. It provides functionalities for automating database tasks, monitoring database performance, and ensuring data integrity in edge computing scenarios. The tools are designed to be lightweight and efficient, making them suitable for resource-constrained edge devices. With pgedge-postgres-mcp, users can easily deploy and manage PostgreSQL databases in edge computing environments with minimal overhead.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

kaytu

Kaytu is an AI platform that enhances cloud efficiency by analyzing historical usage data and providing intelligent recommendations for optimizing instance sizes. Users can pay for only what they need without compromising the performance of their applications. The platform is easy to use with a one-line command, allows customization for specific requirements, and ensures security by extracting metrics from the client side. Kaytu is open-source and supports AWS services, with plans to expand to GCP, Azure, GPU optimization, and observability data from Prometheus in the future.

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

langkit

LangKit is an open-source text metrics toolkit for monitoring language models. It offers methods for extracting signals from input/output text and is compatible with whylogs. Features include text quality, relevance, security, sentiment, and toxicity analysis. It is installed via PyPI, and its modules contain UDFs for whylogs. Benchmarks show throughput on AWS instances, and FAQs are available.

nesa

Nesa is a tool that allows users to run on-prem AI for a fraction of the cost through a blind API. It provides blind privacy, zero latency on protected inference, wide model coverage, cost savings compared to cloud and on-prem AI, RAG support, and ChatGPT compatibility. Nesa achieves blind AI through Equivariant Encryption (EE), a new security technology that provides complete inference encryption with no additional latency. EE allows users to perform inference on neural networks without exposing the underlying data, preserving data privacy and security.

For similar jobs

AirGo

AirGo is a multi-user, multi-protocol proxy service management system with a separated front end and back end. It is simple and easy to use, and it supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O

- **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing

- **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back

- **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads

- **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems

- **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.