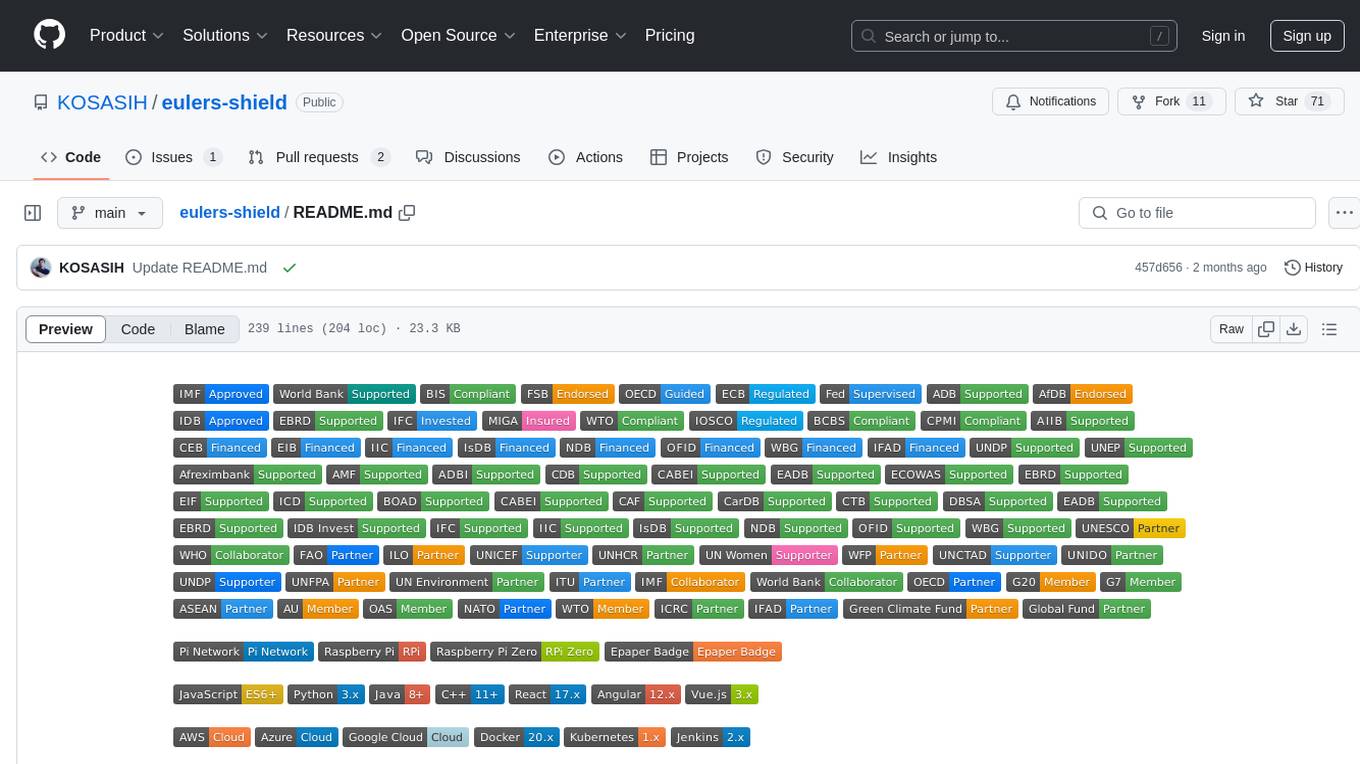

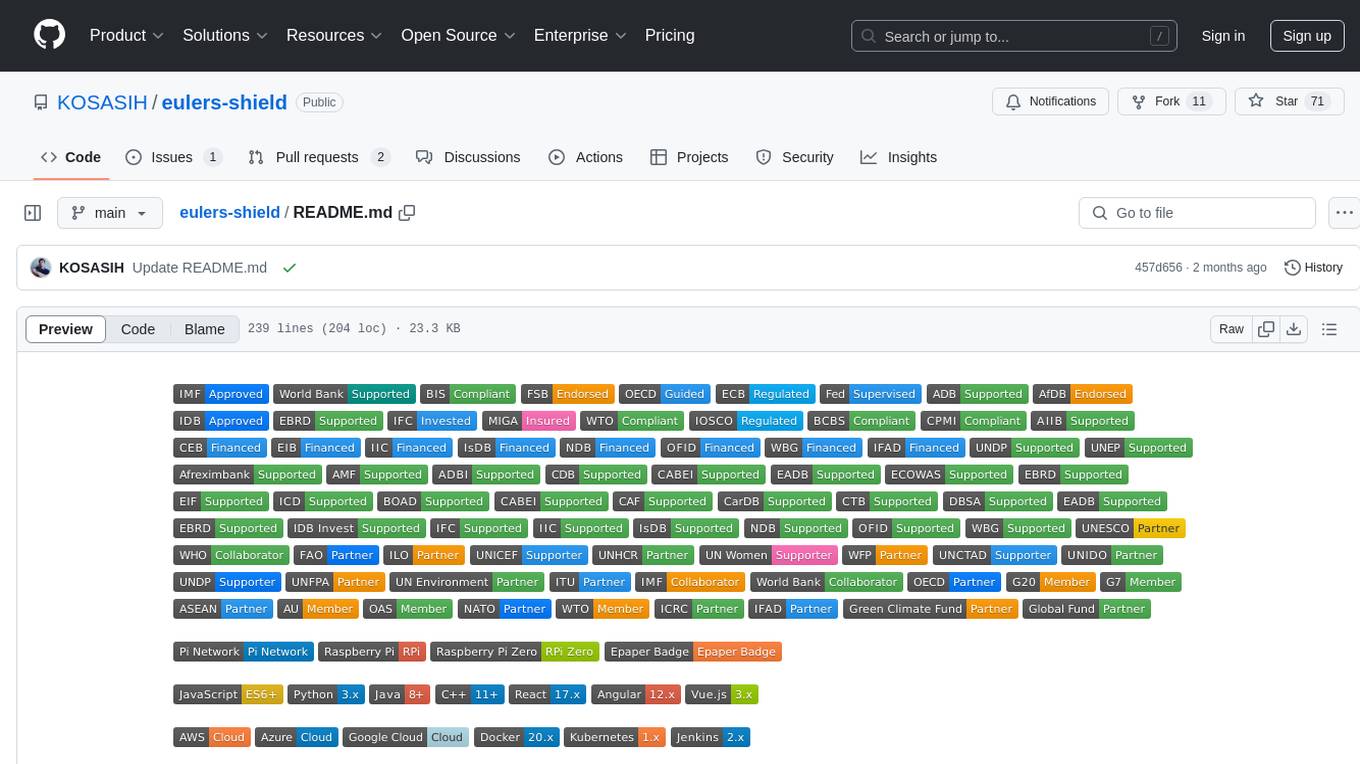

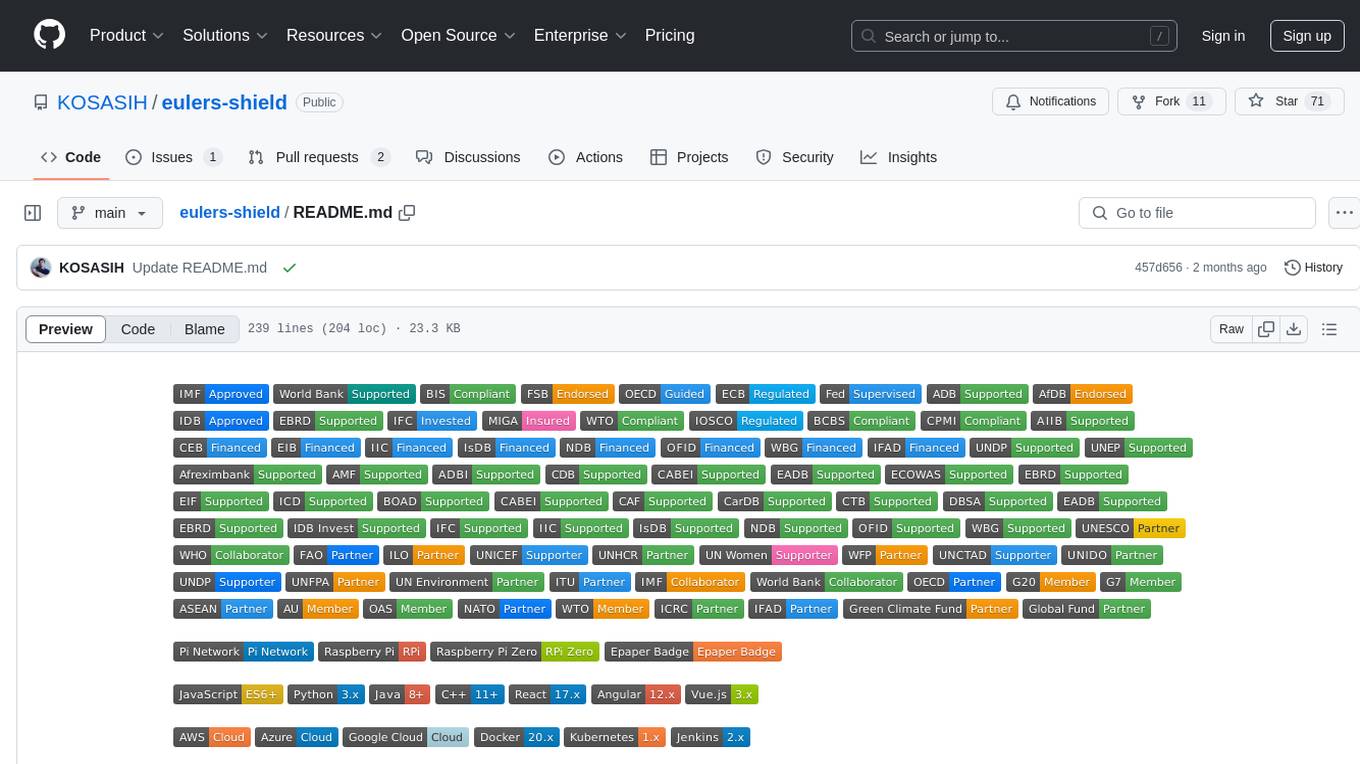

eulers-shield

A decentralized, AI-powered financial system for stabilizing the value of Pi Coin at $314,159. Combining blockchain, machine learning, and cybersecurity, Euler's Shield ensures the security, scalability, and decentralization of the Pi Coin ecosystem.

Stars: 71

README:

Euler Shield by KOSASIH is licensed under Creative Commons Attribution 4.0 International

A decentralized, AI-powered financial system for stabilizing the value of Pi Coin at $314,159 (three hundred fourteen thousand one hundred fifty-nine dollars). Combining blockchain, machine learning, and cybersecurity, Euler's Shield ensures the security, scalability, and decentralization of the Pi Coin ecosystem.

Euler's Shield is a decentralized financial system designed to stabilize the value of Pi Coin at $314,159. By combining blockchain, machine learning, and cybersecurity, Euler's Shield ensures the security, scalability, and decentralization of the Pi Coin ecosystem.

Using machine learning algorithms, Euler's Shield continuously monitors market fluctuations and adjusts to them, to keep the value of Pi Coin stable at $314,159 (three hundred fourteen thousand one hundred fifty-nine dollars).
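The README does not say which algorithms perform this monitoring. As a minimal sketch only, the snippet below uses a rolling z-score as a stand-in: it flags any price that lies far from the mean of the preceding window. The function names, window size, threshold, and sample prices are all hypothetical and are not taken from the project.

```javascript
// Hypothetical stand-in for the monitoring step: a rolling z-score check.
// This is NOT the project's model; names and defaults are assumptions.

function mean(xs) {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

function stdDev(xs) {
  const m = mean(xs);
  const variance = xs.reduce((sum, x) => sum + (x - m) ** 2, 0) / xs.length;
  return Math.sqrt(variance);
}

// Returns the indices whose price lies more than `threshold` standard
// deviations from the mean of the preceding `windowSize` prices.
function findAnomalies(prices, windowSize = 5, threshold = 3) {
  const anomalies = [];
  for (let i = windowSize; i < prices.length; i++) {
    const window = prices.slice(i - windowSize, i);
    const sd = stdDev(window);
    if (sd === 0) continue; // flat window: the z-score is undefined
    const z = (prices[i] - mean(window)) / sd;
    if (Math.abs(z) > threshold) anomalies.push(i);
  }
  return anomalies;
}

// Made-up sample data with one obvious spike at index 6.
const samplePrices = [100, 101, 99, 100, 102, 100, 140, 101];
console.log(findAnomalies(samplePrices)); // [ 6 ]
```

A real system would likely use a richer model and real market feeds. The point here is only the shape of the step: compare each new observation against recent history and flag outliers.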

Built on distributed ledger technology, Euler's Shield operates on a decentralized network, allowing for transparent, secure, and tamper-proof transactions.
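To illustrate why a ledger makes tampering detectable, here is a small sketch of a hash chain, the basic structure behind most distributed ledgers. It runs in a single process with no network or consensus, and it does not describe Euler's Shield's actual ledger. The helper names (`hashBlock`, `appendBlock`, `isChainValid`) and the sample transactions are hypothetical.

```javascript
const { createHash } = require('crypto');

const GENESIS_PREV_HASH = '0'.repeat(64);

// Hash a block's contents together with the previous block's hash, so that
// changing any earlier block invalidates every later one.
function hashBlock(index, prevHash, data) {
  return createHash('sha256')
    .update(JSON.stringify({ index, prevHash, data }))
    .digest('hex');
}

// Append a new block that links to the current tip of the chain.
function appendBlock(chain, data) {
  const index = chain.length;
  const prevHash = index === 0 ? GENESIS_PREV_HASH : chain[index - 1].hash;
  chain.push({ index, prevHash, data, hash: hashBlock(index, prevHash, data) });
  return chain;
}

// Recompute every hash and check every link.
function isChainValid(chain) {
  return chain.every((block, i) => {
    const expectedPrev = i === 0 ? GENESIS_PREV_HASH : chain[i - 1].hash;
    return (
      block.prevHash === expectedPrev &&
      block.hash === hashBlock(block.index, block.prevHash, block.data)
    );
  });
}

const ledger = [];
appendBlock(ledger, { from: 'alice', to: 'bob', amount: 5 });
appendBlock(ledger, { from: 'bob', to: 'carol', amount: 2 });
console.log(isChainValid(ledger)); // true

ledger[0].data.amount = 500; // tamper with a recorded transaction
console.log(isChainValid(ledger)); // false
```

Strictly speaking, this makes edits tamper-evident rather than impossible. In a decentralized network, honest nodes would additionally reject a chain that fails this kind of check.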

Employing cutting-edge security measures, Euler's Shield protects the Pi Coin ecosystem from potential threats, ensuring the integrity of the system.

Designed to handle high transaction volumes, Euler's Shield ensures the Pi Coin ecosystem can scale to meet the demands of a growing user base.

- Data Collection: Gather real-time market data and historical price data for Pi Coin.

- Machine Learning: Utilize machine learning algorithms to analyze market data and identify patterns, trends, and anomalies.

- Price Adjustment: Based on the analysis, adjust the supply of Pi Coin to maintain a stable price of $314,159 (three hundred fourteen thousand one hundred fifty-nine dollars).

- Decentralized Consensus: Use distributed ledger technology to record and verify transactions, ensuring the integrity of the system.

- Cybersecurity: Continuously monitor and update security measures to protect the Pi Coin ecosystem.
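The price adjustment step in the list above can be sketched as a simple feedback rule. The snippet assumes a proportional controller: when the market price is above the target, supply expands, and when it is below, supply contracts, with each step capped. The README does not specify the actual rule, so `gain`, `maxStep`, and both function names are hypothetical. The target constant uses the spelled-out figure from the README.

```javascript
// Target price in USD, taken from the README's spelled-out amount.
const TARGET_PRICE = 314159;

// Hypothetical proportional controller. Returns the fractional change to
// apply to the circulating supply: positive means mint, negative means burn.
// `gain` scales the response; `maxStep` caps the change per adjustment.
function supplyAdjustment(marketPrice, { gain = 0.5, maxStep = 0.05 } = {}) {
  const deviation = (marketPrice - TARGET_PRICE) / TARGET_PRICE;
  const raw = gain * deviation;
  return Math.max(-maxStep, Math.min(maxStep, raw));
}

// Apply one adjustment step to the current supply.
function nextSupply(currentSupply, marketPrice, options) {
  return currentSupply * (1 + supplyAdjustment(marketPrice, options));
}

// Price above target: the adjustment is positive (expand supply).
console.log(supplyAdjustment(TARGET_PRICE * 1.02) > 0);
// Price below target: the adjustment is negative (contract supply).
console.log(supplyAdjustment(TARGET_PRICE * 0.98) < 0);
// Price at target: no change.
console.log(supplyAdjustment(TARGET_PRICE) === 0);
```

Capping each step keeps a single noisy price reading from causing a large supply swing. A production system would need to combine this with the data collection and analysis steps listed above.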

- Clone the repository:

  `git clone https://github.com/KOSASIH/eulers-shield`

- Install the required dependencies:

  `npm install`

- Run the development server:

  `npm start`

- Want to contribute to the development of Euler's Shield? Check out our CONTRIBUTING.md guide for more information.

- Want to report a bug or suggest a feature? Open an issue on our issues page.

Euler's Shield is licensed under the Apache 2.0 License. See LICENSE for more information.

- Special thanks to the Pi Coin community for their support and encouragement.

- Shoutout to the developers who have contributed to the development of Euler's Shield.

Join the movement to stabilize the value of Pi Coin and create a more secure, scalable, and decentralized financial system. Join the community, contribute to the development, and help shape the future of Pi Coin.

Alternative AI tools for eulers-shield

Similar Open Source Tools

pi-nexus-autonomous-banking-network

A decentralized, AI-driven system accelerating the Open Mainnet Pi Network, connecting global banks for secure, efficient, and autonomous transactions. The Pi-Nexus Autonomous Banking Network is built using Raspberry Pi devices and allows for the creation of a decentralized, autonomous banking system.

awesome-saas

The Alchemyst Platform Cookbook is a comprehensive guide for developers and builders to bring their AI ideas to life. It provides cutting-edge AI tools and templates to empower users in creating innovative projects. The platform offers API documentation, quick start guides, official and community templates for various projects. Users can contribute to the platform by forking the repository, adding the topic 'alchemyst-awesome-saas', making their repository public, and submitting a pull request. Troubleshooting guidelines are provided for contributors. The platform is actively maintained by the Alchemyst AI Team.

Awesome-Efficient-Agents

This repository, Awesome Efficient Agents, is a curated collection of papers focusing on memory, tool learning, and planning in agentic systems. It provides a comprehensive survey of efficient agent design, emphasizing memory construction, tool learning, and planning strategies. The repository categorizes papers based on memory processes, tool selection, tool calling, tool-integrated reasoning, and planning efficiency. It aims to help readers quickly access representative work in the field of efficient agent design.

chatgpt-auto-continue

ChatGPT Auto-Continue is a userscript that automatically continues generating ChatGPT responses when chats cut off. It relies on the powerful chatgpt.js library and is easy to install and use. Simply install Tampermonkey and ChatGPT Auto-Continue, and visit chat.openai.com as normal. Multi-reply conversations will automatically continue generating when cut-off!

gitmesh

GitMesh is an AI-powered Git collaboration network designed to address contributor dropout in open source projects. It offers real-time branch-level insights, intelligent contributor-task matching, and automated workflows. The platform transforms complex codebases into clear contribution journeys, fostering engagement through gamified rewards and integration with open source support programs. GitMesh's mascot, Meshy/Mesh Wolf, symbolizes agility, resilience, and teamwork, reflecting the platform's ethos of efficiency and power through collaboration.

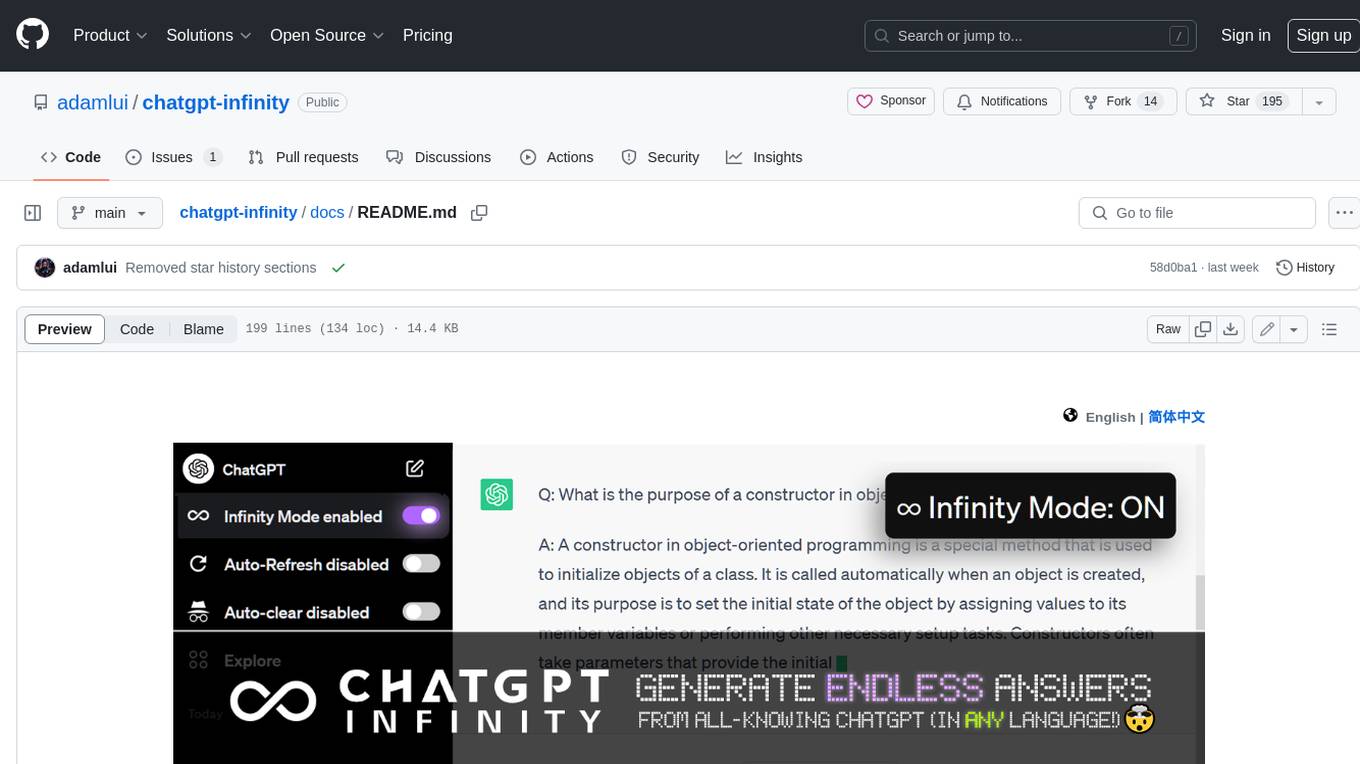

chatgpt-infinity

ChatGPT Infinity is a free and powerful add-on that makes ChatGPT generate infinite answers on any topic. It offers customizable topic selection, multilingual support, adjustable response interval, and auto-scroll feature for a seamless chat experience.

Jarvis

Jarvis is a powerful virtual AI assistant designed to simplify daily tasks through voice command integration. It features automation, device management, and personalized interactions, transforming technology engagement. Built using Python and AI models, it serves personal and administrative needs efficiently, making processes seamless and productive.

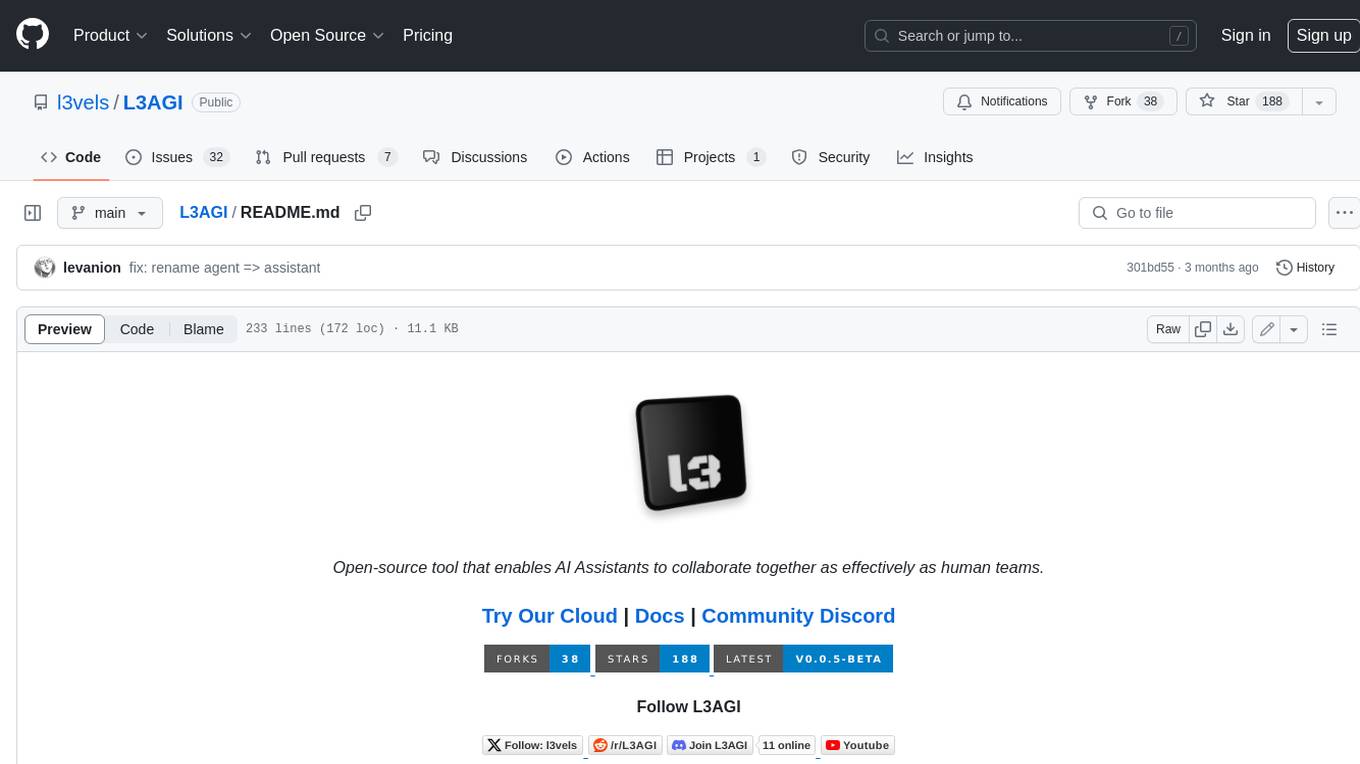

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

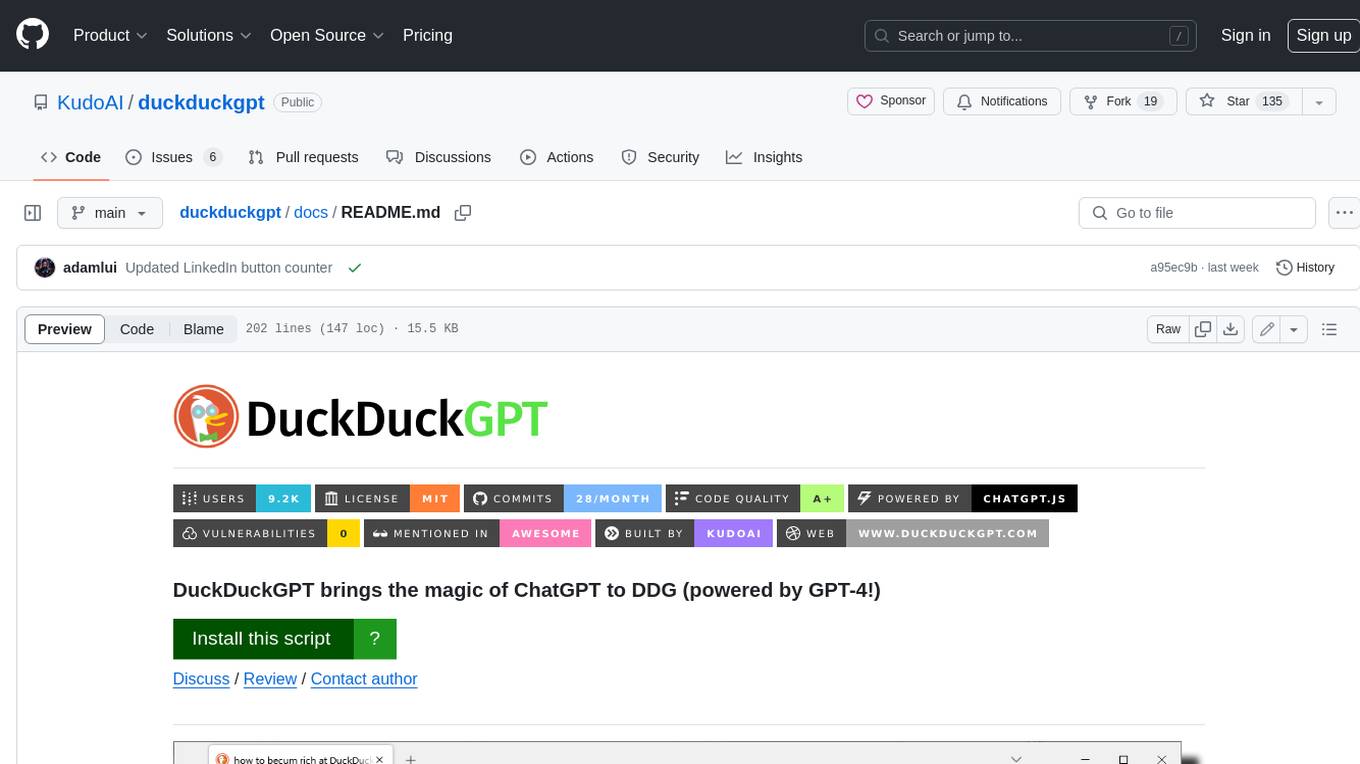

duckduckgpt

DuckDuckGPT brings the magic of ChatGPT to DDG (powered by GPT-4!). DuckDuckGPT is a browser extension that allows you to use ChatGPT within DuckDuckGo. This means you can ask ChatGPT questions, get help with tasks, and generate creative content, all without leaving DuckDuckGo. DuckDuckGPT is easy to use. Once you have installed the extension, simply type your question into the DuckDuckGo search bar and hit enter. ChatGPT will then generate a response that will appear below the search results. DuckDuckGPT is a powerful tool that can help you with a wide variety of tasks. Here are just a few examples of what you can use it for:

- Get help with research

- Write essays and other creative content

- Translate languages

- Get coding help

- Answer trivia questions

- And much more!

DuckDuckGPT is still in development, but it is already a very powerful tool. As GPT-4 continues to improve, DuckDuckGPT will only get better. So if you are looking for a way to make your DuckDuckGo searches more productive, be sure to give DuckDuckGPT a try.

openclaw

OpenClaw is a personal AI assistant that runs on your own devices, answering you on various channels like WhatsApp, Telegram, Slack, Discord, and more. It can speak and listen on different platforms and render a live Canvas you control. The Gateway serves as the control plane, while the assistant is the main product. It provides a local, fast, and always-on single-user assistant experience. The preferred setup involves running the onboarding wizard in your terminal to guide you through setting up the gateway, workspace, channels, and skills. The tool supports various models and authentication methods, with a focus on security and privacy.

bravegpt

BraveGPT is a userscript that brings the power of ChatGPT to Brave Search. It allows users to engage with a conversational AI assistant directly within their search results, providing instant and personalized responses to their queries. BraveGPT is powered by GPT-4, the latest and most advanced language model from OpenAI, ensuring accurate and comprehensive answers. With BraveGPT, users can ask questions, get summaries, generate creative content, and more, all without leaving the Brave Search interface. The tool is easy to install and use, making it accessible to users of all levels. BraveGPT is a valuable addition to the Brave Search experience, enhancing its capabilities and providing users with a more efficient and informative search experience.

llama.cpp

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud. It provides a Plain C/C++ implementation without any dependencies, optimized for Apple silicon via ARM NEON, Accelerate and Metal frameworks, and supports various architectures like AVX, AVX2, AVX512, and AMX. It offers integer quantization for faster inference, custom CUDA kernels for NVIDIA GPUs, Vulkan and SYCL backend support, and CPU+GPU hybrid inference. llama.cpp is the main playground for developing new features for the ggml library, supporting various models and providing tools and infrastructure for LLM deployment.

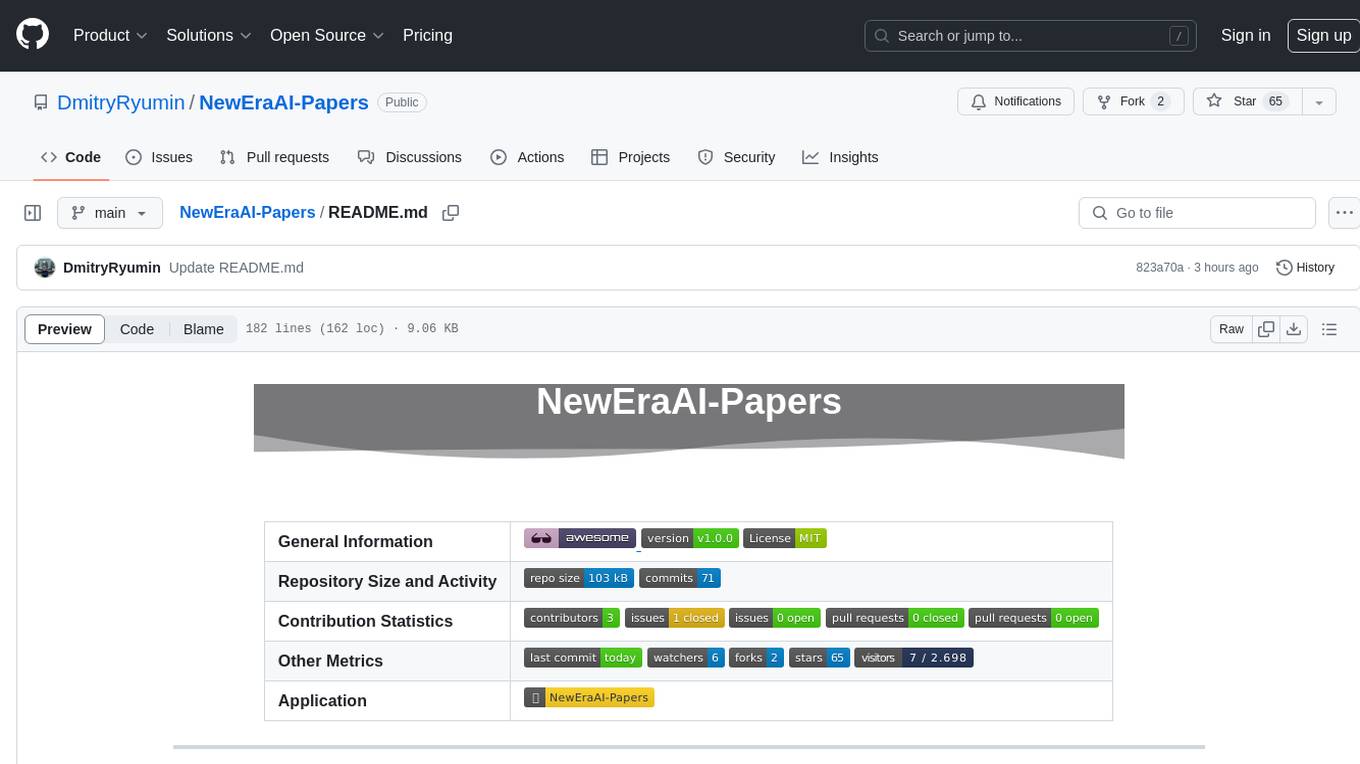

NewEraAI-Papers

The NewEraAI-Papers repository provides links to collections of influential and interesting research papers from top AI conferences, along with open-source code to promote reproducibility and provide detailed implementation insights beyond the scope of the article. Users can stay up to date with the latest advances in AI research by exploring this repository. Contributions to improve the completeness of the list are welcomed, and users can create pull requests, open issues, or contact the repository owner via email to enhance the repository further.

llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can be run on a variety of hardware, including CPUs and GPUs.

For similar tasks

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

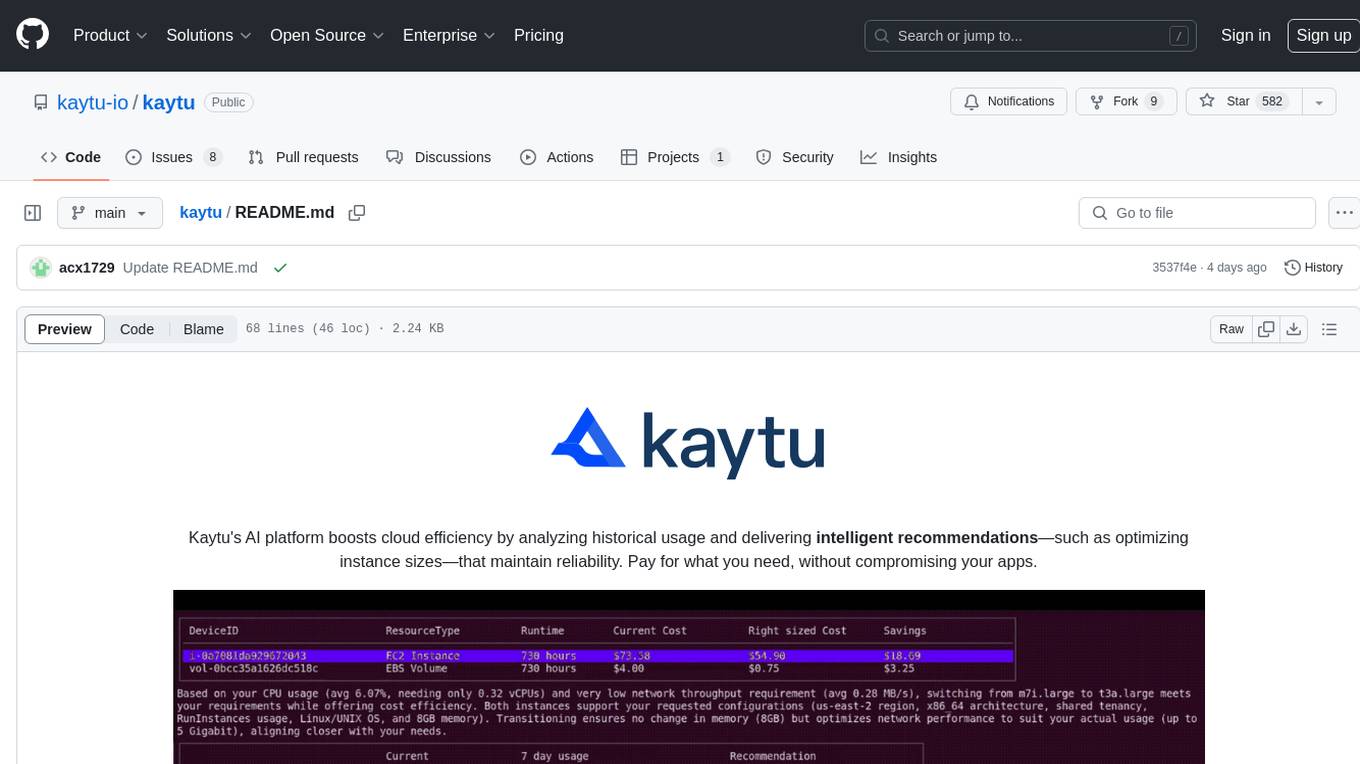

kaytu

Kaytu is an AI platform that enhances cloud efficiency by analyzing historical usage data and providing intelligent recommendations for optimizing instance sizes. Users can pay for only what they need without compromising the performance of their applications. The platform is easy to use with a one-line command, allows customization for specific requirements, and ensures security by extracting metrics from the client side. Kaytu is open-source and supports AWS services, with plans to expand to GCP, Azure, GPU optimization, and observability data from Prometheus in the future.

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

langkit

LangKit is an open-source text metrics toolkit for monitoring language models. It offers methods for extracting signals from input/output text, compatible with whylogs. Features include text quality, relevance, security, sentiment, toxicity analysis. Installation via PyPI. Modules contain UDFs for whylogs. Benchmarks show throughput on AWS instances. FAQs available.

nesa

Nesa is a tool that allows users to run on-prem AI for a fraction of the cost through a blind API. It provides blind privacy, zero latency on protected inference, wide model coverage, cost savings compared to cloud and on-prem AI, RAG support, and ChatGPT compatibility. Nesa achieves blind AI through Equivariant Encryption (EE), a new security technology that provides complete inference encryption with no additional latency. EE allows users to perform inference on neural networks without exposing the underlying data, preserving data privacy and security.

k8sgateway

K8sGateway is a feature-rich, fast, and flexible Kubernetes-native API gateway built on Envoy proxy and Kubernetes Gateway API. It excels in function-level routing, supports legacy apps, microservices, and serverless. It offers robust discovery capabilities, seamless integration with open-source projects, and supports hybrid applications with various technologies, architectures, protocols, and clouds.

For similar jobs

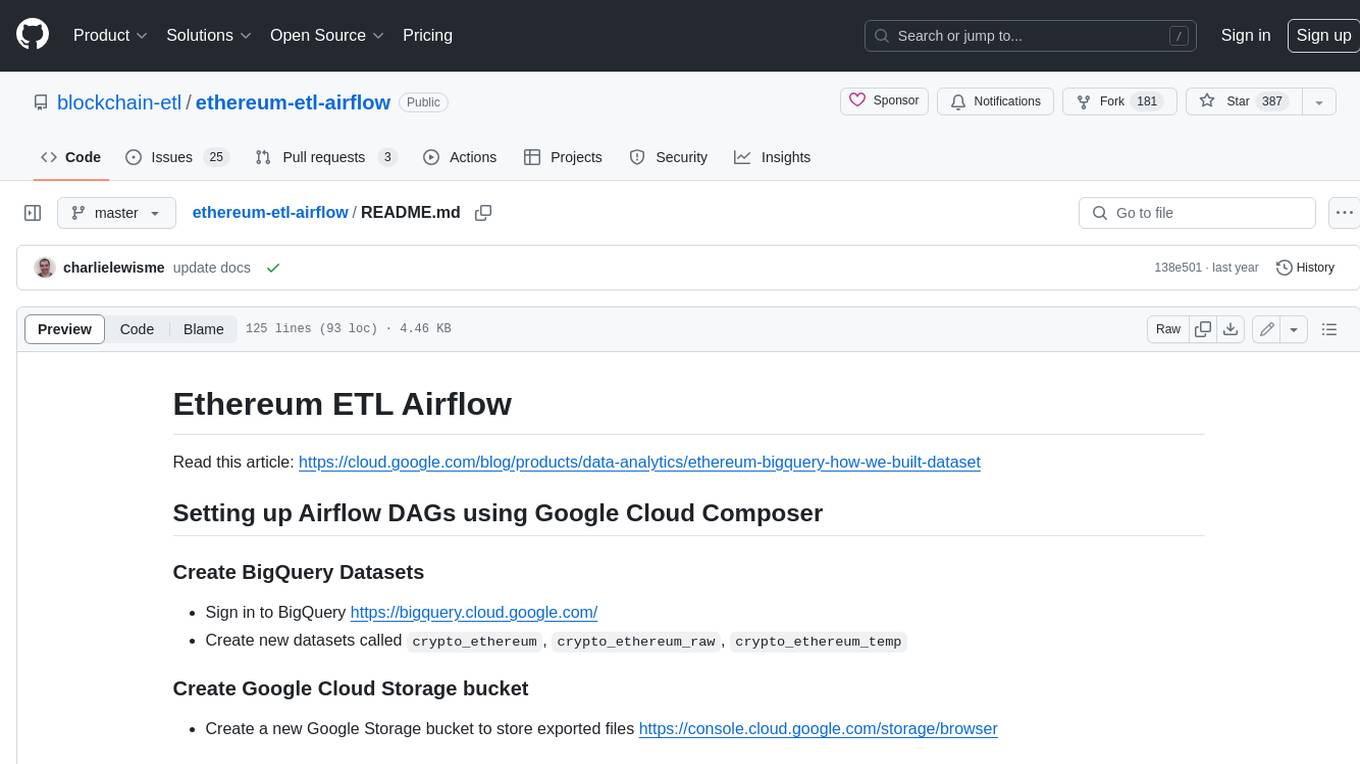

ethereum-etl-airflow

This repository contains Airflow DAGs for extracting, transforming, and loading (ETL) data from the Ethereum blockchain into BigQuery. The DAGs use the Google Cloud Platform (GCP) services, including BigQuery, Cloud Storage, and Cloud Composer, to automate the ETL process. The repository also includes scripts for setting up the GCP environment and running the DAGs locally.

airnode

Airnode is a fully-serverless oracle node that is designed specifically for API providers to operate their own oracles.

CHATPGT-MEV-BOT

The 𝓜𝓔𝓥-𝓑𝓞𝓣 is a revolutionary tool that empowers users to maximize their ETH earnings through advanced slippage techniques within the Ethereum ecosystem. Its user-centric design, optimized earning mechanism, and comprehensive security measures make it an indispensable tool for traders seeking to enhance their crypto trading strategies. With its current free access, there's no better time to explore the 𝓜𝓔𝓥-𝓑𝓞𝓣's capabilities and witness the transformative impact it can have on your crypto trading journey.

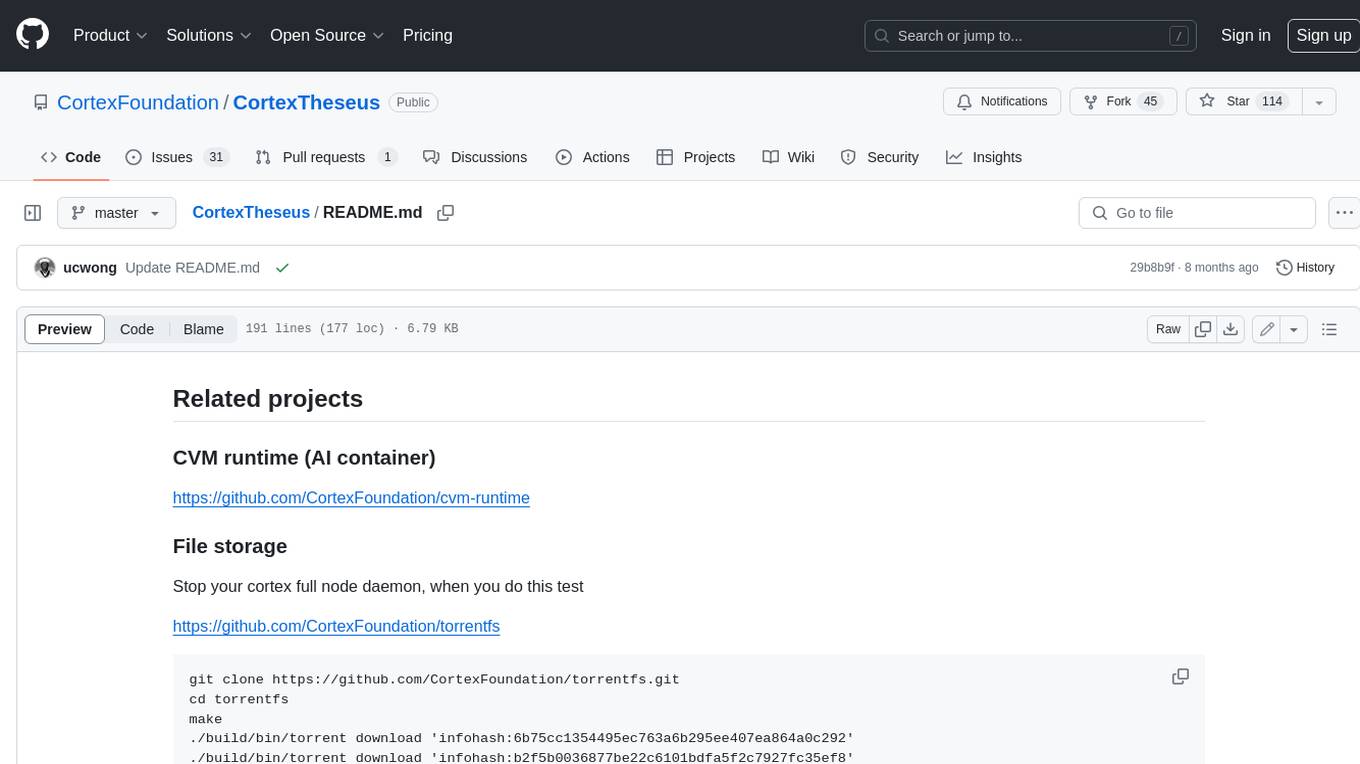

CortexTheseus

CortexTheseus is a full node implementation of the Cortex blockchain, written in C++. It provides a complete set of features for interacting with the Cortex network, including the ability to create and manage accounts, send and receive transactions, and participate in consensus. CortexTheseus is designed to be scalable, secure, and easy to use, making it an ideal choice for developers building applications on the Cortex blockchain.

CHATPGT-MEV-BOT-ETH

This tool is a bot that monitors the performance of MEV transactions on the Ethereum blockchain. It provides real-time data on MEV profitability, transaction volume, and network congestion. The bot can be used to identify profitable MEV opportunities and to track the performance of MEV strategies.

airdrop-checker

Airdrop-checker is a tool that helps you to check if you are eligible for any airdrops. It supports multiple airdrops, including Altlayer, Rabby points, Zetachain, Frame, Anoma, Dymension, and MEME. To use the tool, you need to install it using npm and then fill the addresses files in the addresses folder with your wallet addresses. Once you have done this, you can run the tool using npm start.

go-cyber

Cyber is a superintelligence protocol that aims to create a decentralized and censorship-resistant internet. It uses a novel consensus mechanism called CometBFT and a knowledge graph to store and process information. Cyber is designed to be scalable, secure, and efficient, and it has the potential to revolutionize the way we interact with the internet.

bittensor

Bittensor is an internet-scale neural network that incentivizes computers to provide access to machine learning models in a decentralized and censorship-resistant manner. It operates through a token-based mechanism where miners host, train, and procure machine learning systems to fulfill verification problems defined by validators. The network rewards miners and validators for their contributions, ensuring continuous improvement in knowledge output. Bittensor allows anyone to participate, extract value, and govern the network without centralized control. It supports tasks such as generating text, audio, images, and extracting numerical representations.
