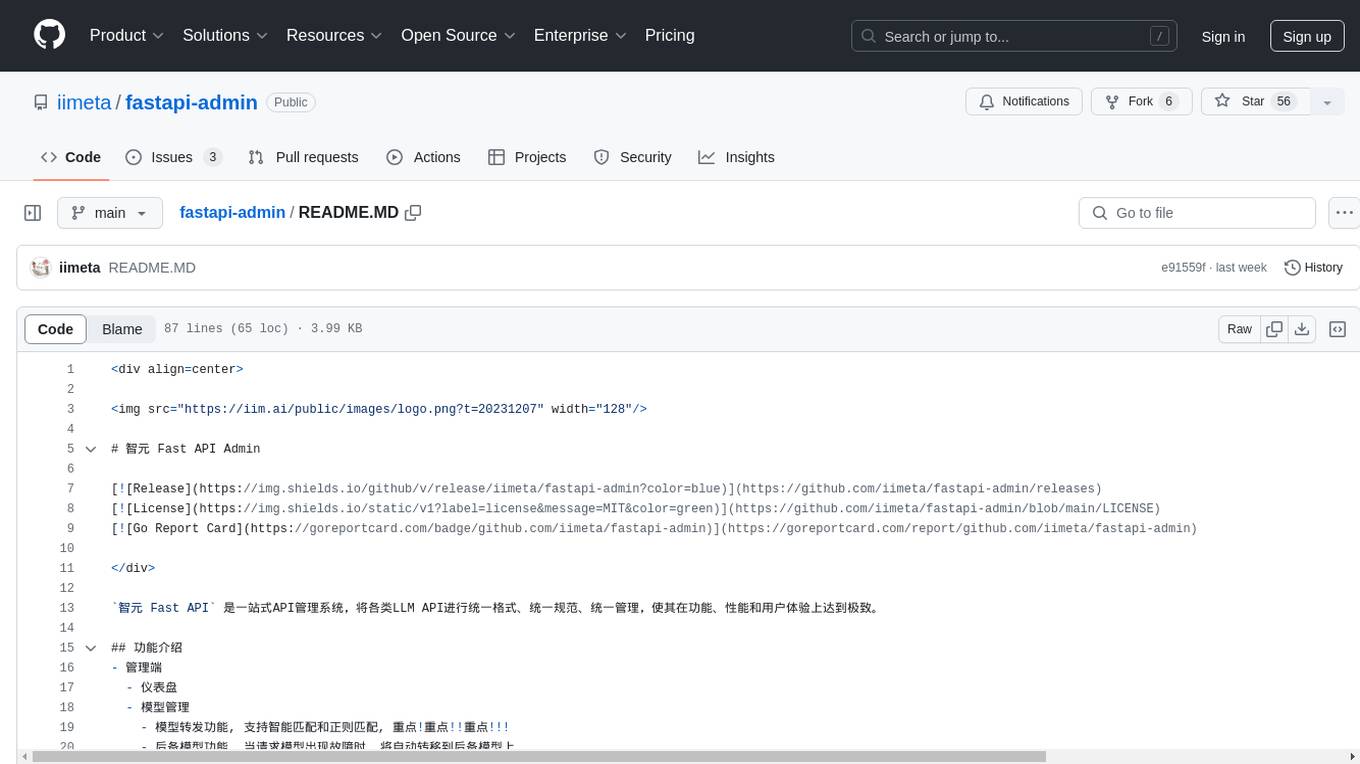

fastapi-admin

企业级 LLM API 快速集成系统,支持OpenAI、Azure、文心一言、讯飞星火、通义千问、智谱GLM、Gemini、DeepSeek、Anthropic Claude以及OpenAI格式的模型等,简洁的页面风格,轻量高效且稳定,支持Docker一键部署。

Stars: 114

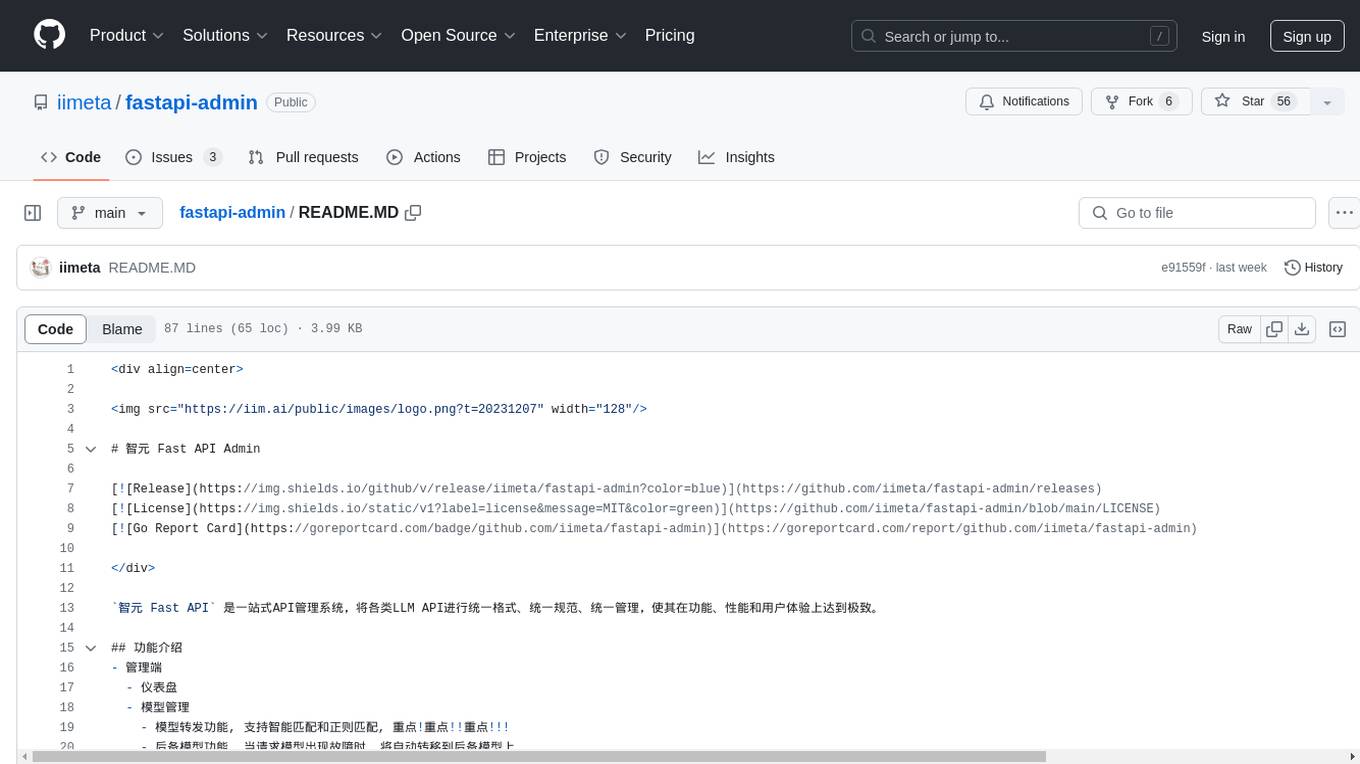

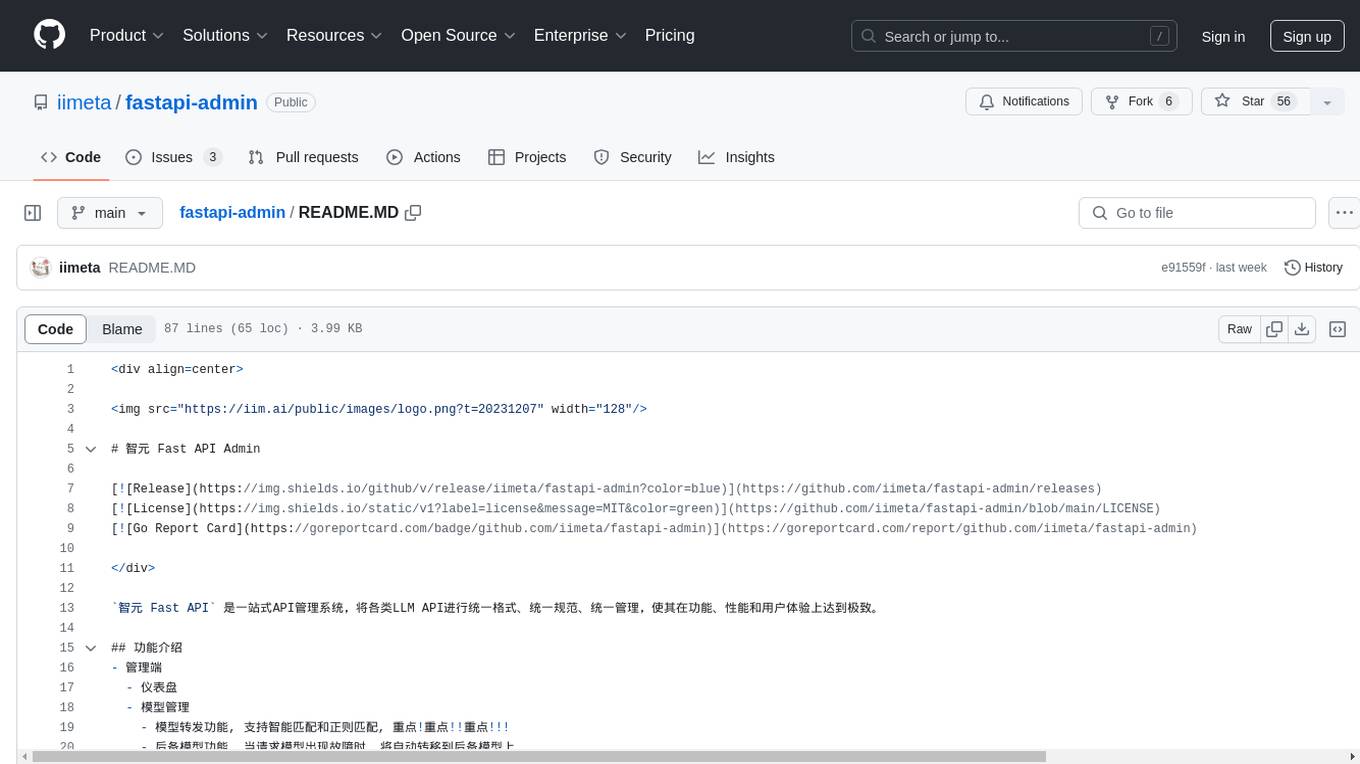

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management to achieve the ultimate in functionality, performance, and user experience. It includes features such as model management with intelligent and regex matching, backup model functionality, key management, proxy management, company management, user management, and chat management for both admin and user ends. The project supports cluster deployment, multi-site deployment, and cross-region deployment. It also provides a public API site for registration with a contact to the author for a 10 million quota. The tool offers a comprehensive dashboard, model management, application management, key management, and chat management functionalities for users.

README:

企业级 LLM API 快速集成系统,支持DeepSeek、OpenAI、Azure、文心一言、讯飞星火、通义千问、智谱GLM、豆包、Gemini、Anthropic Claude以及OpenAI格式的模型等,简洁的页面风格,轻量高效且稳定,支持Docker一键部署。业务系统只需要按照统一API标准,对接一次的开发工作量,即可无缝对接N个大模型,无需考虑N个大模型背后的各种复杂逻辑等等,可大大降低开发和维护成本...

- 管理端

- 仪表盘

- 模型管理

- 模型代理

- 密钥管理

- 分组管理

- 用户管理

- 应用管理

- 应用密钥

- 通知管理

- 消息通知

- 通知模板

- 财务中心

- 账单明细

- 交易记录

- 任务中心

- 视频任务

- 文件任务

- 批处理任务

- 日志管理

- 文本日志

- 绘图日志

- 音频日志

- 视频日志

- 文件日志

- 批处理日志

- 通用日志

- 系统管理

- 提供商

- 代理商

- 站点配置

- 配置管理

- 代理商端

- 仪表盘

- 我的模型

- 我的分组

- 用户管理

- 应用管理

- 应用密钥

- 财务中心

- 账单明细

- 交易记录

- 任务中心

- 视频任务

- 文件任务

- 批处理任务

- 日志管理

- 文本日志

- 绘图日志

- 音频日志

- 视频日志

- 文件日志

- 批处理日志

- 通用日志

- 系统管理

- 站点配置

- 用户端

- 仪表盘

- 我的模型

- 我的分组

- 应用管理

- 应用密钥

- 财务中心

- 账单明细

- 交易记录

- 任务中心

- 视频任务

- 文件任务

- 批处理任务

- 日志管理

- 文本日志

- 绘图日志

- 音频日志

- 视频日志

- 文件日志

- 批处理日志

- 通用日志

- 管理端: https://demo.fastapi.ai/admin

- 用户端: https://demo.fastapi.ai/login

- 代理商: https://demo.fastapi.ai/reseller

- 账号/密码均是: demo/123456

- 管理端: https://demo.fastapi.pro/admin

- 用户端: https://demo.fastapi.pro/login

- 代理商: https://demo.fastapi.pro/reseller

- 账号/密码均是: demo/123456

✔️ Docker部署

✔️ 集群部署

✔️ 多地部署

- 用户端: https://free.fastapi.pro/login

- 代理商: https://free.fastapi.pro/reseller

- API接口: https://api.free.fastapi.pro

- 注册就送 $1,000,000 额度, 支持注册代理商

| 仓库 | API | Web | Admin | SDK |

|---|---|---|---|---|

| 主库 | fastapi | fastapi-web | fastapi-admin | fastapi-sdk |

| 码云 | fastapi | fastapi-web | fastapi-admin | fastapi-sdk |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for fastapi-admin

Similar Open Source Tools

fastapi-admin

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management to achieve the ultimate in functionality, performance, and user experience. It includes features such as model management with intelligent and regex matching, backup model functionality, key management, proxy management, company management, user management, and chat management for both admin and user ends. The project supports cluster deployment, multi-site deployment, and cross-region deployment. It also provides a public API site for registration with a contact to the author for a 10 million quota. The tool offers a comprehensive dashboard, model management, application management, key management, and chat management functionalities for users.

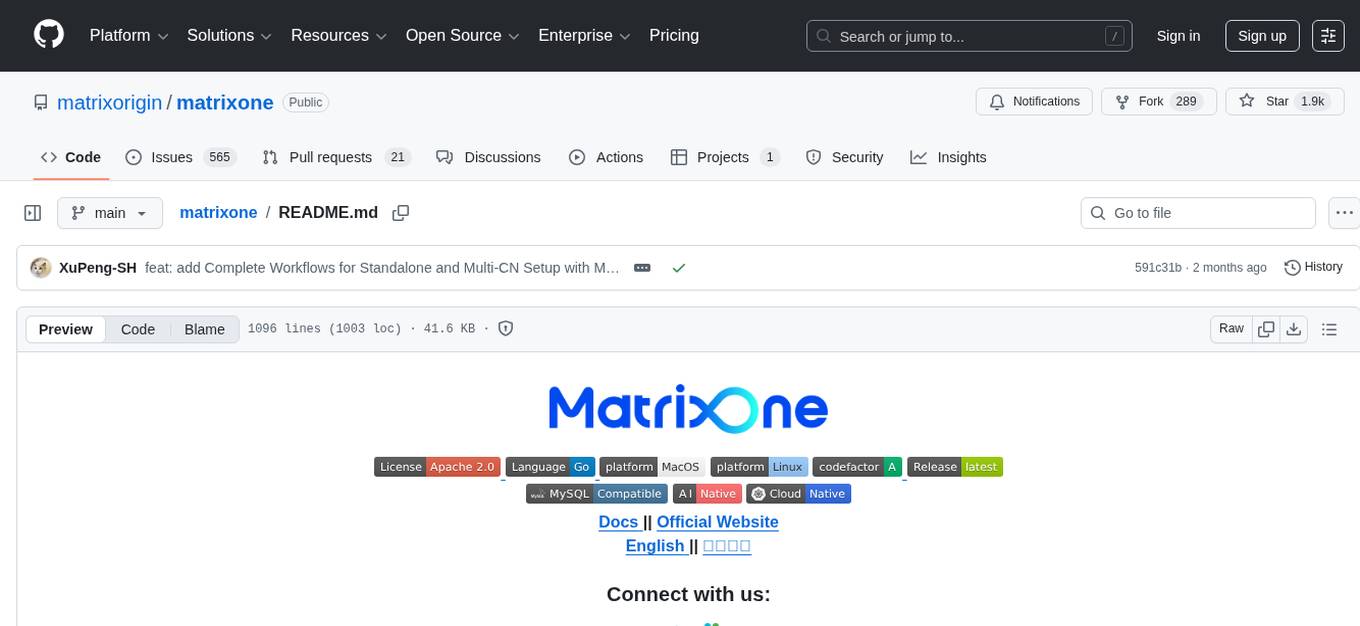

matrixone

MatrixOne is the industry's first database to bring Git-style version control to data, combined with MySQL compatibility, AI-native capabilities, and cloud-native architecture. It is a HTAP (Hybrid Transactional/Analytical Processing) database with a hyper-converged HSTAP engine that seamlessly handles transactional, analytical, full-text search, and vector search workloads in a single unified system—no data movement, no ETL, no compromises. Manage your database like code with features like instant snapshots, time travel, branch & merge, instant rollback, and complete audit trail. Built for the AI era, MatrixOne is MySQL-compatible, AI-native, and cloud-native, offering storage-compute separation, elastic scaling, and Kubernetes-native deployment. It serves as one database for everything, replacing multiple databases and ETL jobs with native OLTP, OLAP, full-text search, and vector search capabilities.

Wegent

Wegent is an open-source AI-native operating system designed to define, organize, and run intelligent agent teams. It offers various core features such as a chat agent with multi-model support, conversation history, group chat, attachment parsing, follow-up mode, error correction mode, long-term memory, sandbox execution, and extensions. Additionally, Wegent includes a code agent for cloud-based code execution, AI feed for task triggers, AI knowledge for document management, and AI device for running tasks locally. The platform is highly extensible, allowing for custom agents, agent creation wizard, organization management, collaboration modes, skill support, MCP tools, execution engines, YAML config, and an API for easy integration with other systems.

all-api-hub

All API Hub is an open-source browser extension that serves as a centralized management tool for third-party AI aggregation hubs and self-built New APIs. It automatically identifies accounts, checks balances, synchronizes models, manages keys, and supports cross-platform and cloud backups. The extension supports various aggregation hubs like one-api, new-api, Veloera, one-hub, done-hub, Neo-API, Super-API, RIX_API, and VoAPI. It offers features such as intelligent site recognition, multi-account overview panel, automatic check-ins, token and key management, model information and pricing display, model and interface validation, usage analysis and visualization, quick export integration, self-built New API and Veloera management tools, Cloudflare challenge assistant, data backup and synchronization, multi-platform support, and privacy-focused local storage.

timeline-studio

Timeline Studio is a next-generation professional video editor with AI integration that automates content creation for social media. It combines the power of desktop applications with the convenience of web interfaces. With 257 AI tools, GPU acceleration, plugin system, multi-language interface, and local processing, Timeline Studio offers complete video production automation. Users can create videos for various social media platforms like TikTok, YouTube, Vimeo, Telegram, and Instagram with optimized versions. The tool saves time, understands trends, provides professional quality, and allows for easy feature extension through plugins. Timeline Studio is open source, transparent, and offers significant time savings and quality improvements for video editing tasks.

ALwrity

ALwrity is a lightweight and user-friendly text analysis tool designed for developers and data scientists. It provides various functionalities for analyzing and processing text data, including sentiment analysis, keyword extraction, and text summarization. With ALwrity, users can easily gain insights from their text data and make informed decisions based on the analysis results. The tool is highly customizable and can be integrated into existing workflows seamlessly, making it a valuable asset for anyone working with text data in their projects.

bitcart

Bitcart is a platform designed for merchants, users, and developers, providing easy setup and usage. It includes various linked repositories for core daemons, admin panel, ready store, Docker packaging, Python library for coins connection, BitCCL scripting language, documentation, and official site. The platform aims to simplify the process for merchants and developers to interact and transact with cryptocurrencies, offering a comprehensive ecosystem for managing transactions and payments.

Proma

Proma is a next-generation integrated general Agent AI desktop application. It prioritizes local usage, supports multiple vendors, and is completely open source. Proma aims to continue implementing collaborative work between multiple Agents (personal and external), linking Agents with external entities, solidifying Tools and Skills, and utilizing user understanding and memory to actively provide software and suggestions. Proma is rapidly evolving with the help of VibeCoding tools and welcomes contributions from the community.

cia

CIA is a powerful open-source tool designed for data analysis and visualization. It provides a user-friendly interface for processing large datasets and generating insightful reports. With CIA, users can easily explore data, perform statistical analysis, and create interactive visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, CIA offers a comprehensive set of features to streamline your data analysis workflow and uncover valuable insights.

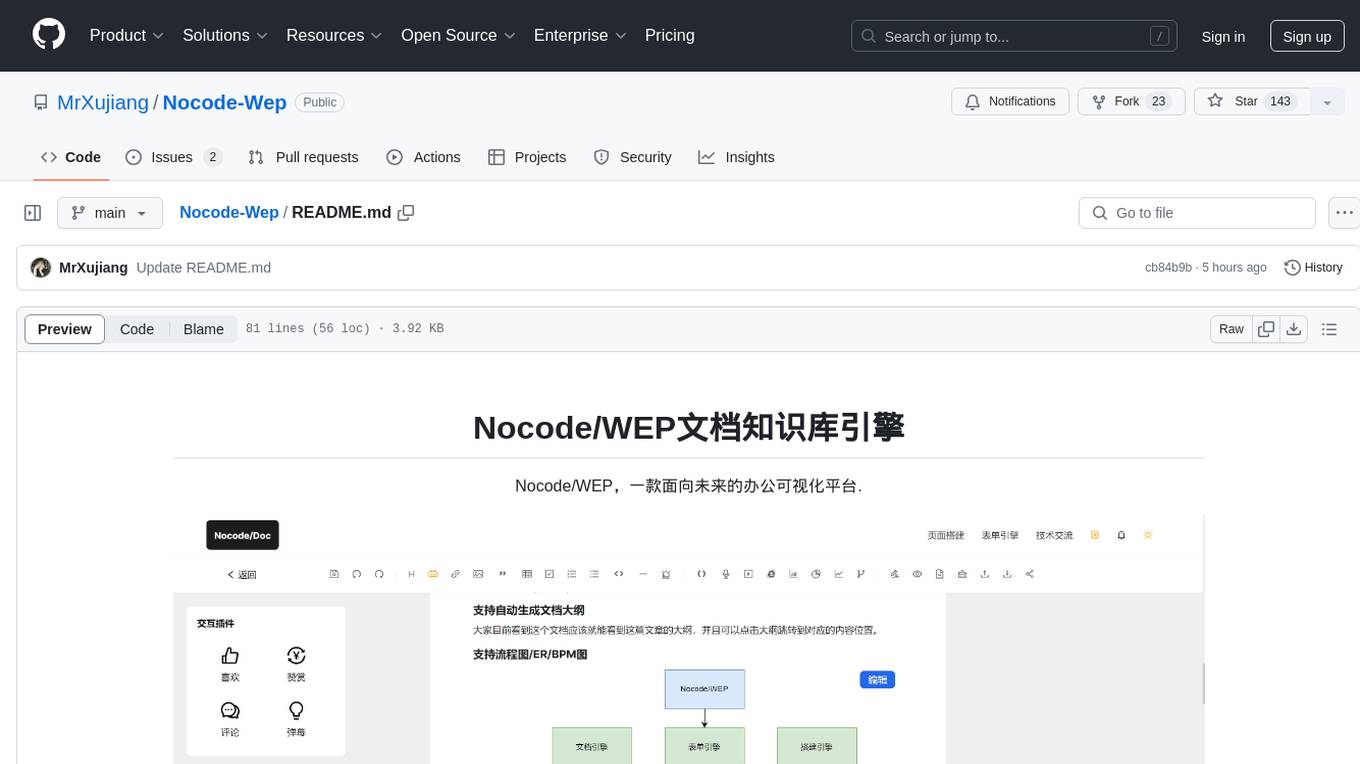

Nocode-Wep

Nocode/WEP is a forward-looking office visualization platform that includes modules for document building, web application creation, presentation design, and AI capabilities for office scenarios. It supports features such as configuring bullet comments, global article comments, multimedia content, custom drawing boards, flowchart editor, form designer, keyword annotations, article statistics, custom appreciation settings, JSON import/export, content block copying, and unlimited hierarchical directories. The platform is compatible with major browsers and aims to deliver content value, iterate products, share technology, and promote open-source collaboration.

Code-Review-GPT-Gitlab

A project that utilizes large models to help with Code Review on Gitlab, aimed at improving development efficiency. The project is customized for Gitlab and is developing a Multi-Agent plugin for collaborative review. It integrates various large models for code security issues and stays updated with the latest Code Review trends. The project architecture is designed to be powerful, flexible, and efficient, with easy integration of different models and high customization for developers.

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

poco-agent

Poco Agent is a cloud-based tool that provides a secure sandbox environment for running tasks without affecting the host machine. It offers a modern UI with mobile adaptability, easy configuration through Docker, and extensive capabilities with support for MCP protocol and custom skills. Users can run tasks asynchronously and schedule them, even when the web interface is closed. Additional features include a built-in browser for internet research and GitHub repository integration. Poco Agent aims to be a more secure, visually appealing, and user-friendly alternative to OpenClaw.

CTFCrackTools

CTFCrackTools X is the next generation of CTFCrackTools, featuring extreme performance and experience, extensible node-based architecture, and future-oriented technology stack. It offers a visual node-based workflow for encoding and decoding processes, with 43+ built-in algorithms covering common CTF needs like encoding, classical ciphers, modern encryption, hashing, and text processing. The tool is lightweight (< 15MB), high-performance, and cross-platform, supporting Windows, macOS, and Linux without the need for a runtime environment. It aims to provide a beginner-friendly tool for CTF enthusiasts to easily work on challenges and improve their skills.

codemod

Codemod platform is a tool that helps developers create, distribute, and run codemods in codebases of any size. The AI-powered, community-led codemods enable automation of framework upgrades, large refactoring, and boilerplate programming with speed and developer experience. It aims to make dream migrations a reality for developers by providing a platform for seamless codemod operations.

ClaraVerse

ClaraVerse is a privacy-first AI assistant and agent builder that allows users to chat with AI, create intelligent agents, and turn them into fully functional apps. It operates entirely on open-source models running on the user's device, ensuring data privacy and security. With features like AI assistant, image generation, intelligent agent builder, and image gallery, ClaraVerse offers a versatile platform for AI interaction and app development. Users can install ClaraVerse through Docker, native desktop apps, or the web version, with detailed instructions provided for each option. The tool is designed to empower users with control over their AI stack and leverage community-driven innovations for AI development.

For similar tasks

fastapi-admin

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management to achieve the ultimate in functionality, performance, and user experience. It includes features such as model management with intelligent and regex matching, backup model functionality, key management, proxy management, company management, user management, and chat management for both admin and user ends. The project supports cluster deployment, multi-site deployment, and cross-region deployment. It also provides a public API site for registration with a contact to the author for a 10 million quota. The tool offers a comprehensive dashboard, model management, application management, key management, and chat management functionalities for users.

omnia

Omnia is a deployment tool designed to turn servers with RPM-based Linux images into functioning Slurm/Kubernetes clusters. It provides an Ansible playbook-based deployment for Slurm and Kubernetes on servers running an RPM-based Linux OS. The tool simplifies the process of setting up and managing clusters, making it easier for users to deploy and maintain their infrastructure.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

CSGHub

CSGHub is an open source, trustworthy large model asset management platform that can assist users in governing the assets involved in the lifecycle of LLM and LLM applications (datasets, model files, codes, etc). With CSGHub, users can perform operations on LLM assets, including uploading, downloading, storing, verifying, and distributing, through Web interface, Git command line, or natural language Chatbot. Meanwhile, the platform provides microservice submodules and standardized OpenAPIs, which could be easily integrated with users' own systems. CSGHub is committed to bringing users an asset management platform that is natively designed for large models and can be deployed On-Premise for fully offline operation. CSGHub offers functionalities similar to a privatized Huggingface(on-premise Huggingface), managing LLM assets in a manner akin to how OpenStack Glance manages virtual machine images, Harbor manages container images, and Sonatype Nexus manages artifacts.

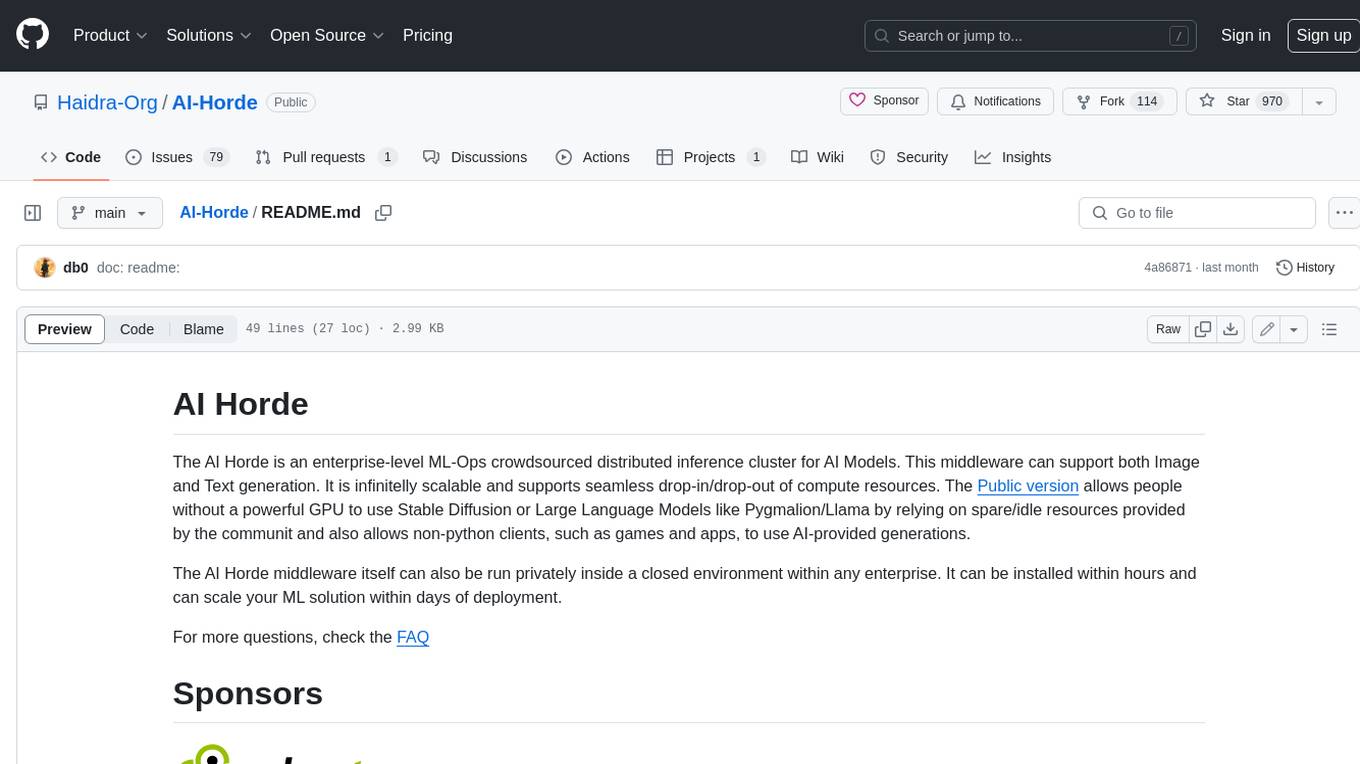

AI-Horde

The AI Horde is an enterprise-level ML-Ops crowdsourced distributed inference cluster for AI Models. This middleware can support both Image and Text generation. It is infinitely scalable and supports seamless drop-in/drop-out of compute resources. The Public version allows people without a powerful GPU to use Stable Diffusion or Large Language Models like Pygmalion/Llama by relying on spare/idle resources provided by the community and also allows non-python clients, such as games and apps, to use AI-provided generations.

caikit

Caikit is an AI toolkit that enables users to manage models through a set of developer friendly APIs. It provides a consistent format for creating and using AI models against a wide variety of data domains and tasks.

For similar jobs

google.aip.dev

API Improvement Proposals (AIPs) are design documents that provide high-level, concise documentation for API development at Google. The goal of AIPs is to serve as the source of truth for API-related documentation and to facilitate discussion and consensus among API teams. AIPs are similar to Python's enhancement proposals (PEPs) and are organized into different areas within Google to accommodate historical differences in customs, styles, and guidance.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

speakeasy

Speakeasy is a tool that helps developers create production-quality SDKs, Terraform providers, documentation, and more from OpenAPI specifications. It supports a wide range of languages, including Go, Python, TypeScript, Java, and C#, and provides features such as automatic maintenance, type safety, and fault tolerance. Speakeasy also integrates with popular package managers like npm, PyPI, Maven, and Terraform Registry for easy distribution.

apicat

ApiCat is an API documentation management tool that is fully compatible with the OpenAPI specification. With ApiCat, you can freely and efficiently manage your APIs. It integrates the capabilities of LLM, which not only helps you automatically generate API documentation and data models but also creates corresponding test cases based on the API content. Using ApiCat, you can quickly accomplish anything outside of coding, allowing you to focus your energy on the code itself.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

OllamaKit

OllamaKit is a Swift library designed to simplify interactions with the Ollama API. It handles network communication and data processing, offering an efficient interface for Swift applications to communicate with the Ollama API. The library is optimized for use within Ollamac, a macOS app for interacting with Ollama models.

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It facilitates communication with the Ollama server and provides models for deployment. The tool requires Java 11 or higher and can be installed locally or via Docker. Users can integrate Ollama4j into Maven projects by adding the specified dependency. The tool offers API specifications and supports various development tasks such as building, running unit tests, and integration tests. Releases are automated through GitHub Actions CI workflow. Areas of improvement include adhering to Java naming conventions, updating deprecated code, implementing logging, using lombok, and enhancing request body creation. Contributions to the project are encouraged, whether reporting bugs, suggesting enhancements, or contributing code.