AI-Horde

A crowdsourced distributed cluster for AI art and text generation

Stars: 1132

The AI Horde is an enterprise-level ML-Ops crowdsourced distributed inference cluster for AI models. This middleware supports both image and text generation. It is infinitely scalable and supports seamless drop-in/drop-out of compute resources. The public version lets people without a powerful GPU use Stable Diffusion or Large Language Models like Pygmalion/Llama by relying on spare/idle resources provided by the community. It also lets non-Python clients, such as games and apps, use AI-provided generations.

README:

The AI Horde is a free community service that lets anyone create AI-generated images and text. In the spirit of projects like Folding@home (sharing compute for medical research) or SETI@home (sharing compute for the search for alien signals), AI Horde lets volunteers share their computing power through workers to help others create AI art and writing.

When you make a request - like asking for "a painting of a sunset over mountains" - the AI Horde system finds available volunteer computers that can handle your job. It's similar to how ride-sharing apps connect passengers with nearby drivers, but instead of rides, you're getting AI-generated content.

The system uses "kudos" points to keep things fair. Workers earn kudos when their computers help process requests, which they can use to get priority for their own requests or leave unspent to help others. Importantly, kudos can never be bought or sold - this is strictly against the Terms of Service. If you would like to learn more about kudos, see our detailed explanation.

What makes AI Horde special is that it's completely free and community-run, with a strong commitment to staying that way. The kudos system is specifically designed to ensure that access to these resources remains equitable. While users with more kudos get faster service, anyone can use it for free, even anonymously, and kudos never expire.

The AI-Horde hopes to ensure that everyone gets a chance to use these exciting AI technologies, regardless of their financial means or technical resources. You can read more about why we do this in the motivations document.

- AI-Horde: Community-Powered AI Generation - Table of Contents

- Sponsors

- Technical Introduction

- Getting Started with AI Horde

- Contribute your GPU (Become a worker)

- Integrate with the AI-Horde

- Community

The AI Horde is an enterprise-level ML-Ops crowd-sourced distributed inference cluster for AI Models. This middleware supports both image and text generation, making it highly versatile. It is designed to be highly scalable, allowing for seamless drop-in/drop-out of compute resources.

- Scalability: The system can scale effortlessly to accommodate varying workloads, ensuring efficient use of available resources.

- Flexibility: Supports both image and text generation, catering to a wide range of AI applications.

- Community-Powered: Relies on spare/idle resources provided by the community, making advanced AI accessible to everyone.

The public version allows individuals without powerful GPUs to use advanced AI models like Stable Diffusion or Large Language Models (LLMs) such as Pygmalion/Llama. It does this by drawing on spare computing power that community members provide by running software we call 'workers'. Additionally, it lets clients, such as third-party websites, games, and apps, use AI-generated content through a REST API. You can see the public instance's performance and application statistics on our Grafana instance.
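As a rough illustration of what "through a REST API" can look like from a client, the sketch below builds (but does not send) an image generation request using only the Python standard library. The base URL, the `/generate/async` path, the `apikey` header name, and the `prompt` field are assumptions that this README does not state; check them against the API documentation of the instance you are targeting. `build_image_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumed values, not taken from this README: verify them against the
# API documentation of the AI Horde instance you want to use.
BASE_URL = "https://aihorde.net/api/v2"
ANONYMOUS_API_KEY = "0000000000"  # the anonymous key mentioned in this README


def build_image_request(prompt, api_key=ANONYMOUS_API_KEY, base_url=BASE_URL):
    """Build, without sending, an asynchronous image generation request."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/generate/async",  # assumed endpoint path
        data=body,
        headers={"Content-Type": "application/json", "apikey": api_key},
        method="POST",
    )


request = build_image_request("a painting of a sunset over mountains")
print(request.get_method(), request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would actually submit it. Because any language with an HTTP client can build the same request, this is also how non-Python clients such as games and apps can take part.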

The AI Horde middleware can also be deployed privately within any enterprise environment. It can be installed within hours and can scale your ML solution within days of deployment, providing a robust and flexible solution for enterprise-level AI needs.

- Python: 3.9 or later

- PostgreSQL

- Redis: For caching

- Docker support for full containerization
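Before a private deployment, the locally checkable items in the list above can be verified with a short preflight script. This is only a sketch: `check_prerequisites` is a hypothetical helper, not part of the AI Horde codebase. It does not test PostgreSQL or Redis, because that would need connection details this README does not give.

```python
import shutil
import sys

MIN_PYTHON = (3, 9)  # "Python: 3.9 or later" from the list above


def check_prerequisites(version_info=sys.version_info, which=shutil.which):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if tuple(version_info[:2]) < MIN_PYTHON:
        problems.append(
            f"Python {version_info[0]}.{version_info[1]} is older than 3.9"
        )
    # Docker is only needed for a containerized deployment.
    if which("docker") is None:
        problems.append("docker executable not found on PATH")
    return problems


for problem in check_prerequisites():
    print("warning:", problem)
```

The two parameters exist only so the check can be exercised without changing the real environment.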

For a high-level overview of how the AI Horde operates, including diagrams of the request/job lifecycle, see the request/job lifecycle explanation.

There are also individual readmes for each specific mode supported by the AI Horde.

For common questions, check the FAQ. If you'd like more details on the features of the horde or the reasoning behind it, see the documentation, which goes into greater depth.

You can use any service powered by the AI Horde either with a registered account or anonymously. You can find a partial list of services powered by the AI-Horde on our main website.

Note: The only information we store from your account is your unique ID. We do not use your ID for any other purpose. See our privacy policy for more details.

Registered with an OAuth2 login:

- Visit AI Horde Registration
- Log in using one of the supported OAuth2 services
- Choose your username and receive your API key
- Benefits:
  - Start with higher priority in generation queues
  - Can change username or reset API key if needed
  - Maintain and track your kudos balance
  - Can recover account access through OAuth2 service
  - Minimum kudos balance of 25

Registered without an OAuth2 login:

- Visit AI Horde Registration
- This method is the default if you do not log in with an OAuth2 service (Google, GitHub, Discord, etc.)
- Important: If you lose your API key, the account cannot be recovered
- Cannot change username or reset API key
- Still earns and maintains kudos
- Still better priority than anonymous users

Anonymous, without registering:

- Use API key '0000000000'
- Lowest priority in generation queues
- No kudos tracking
- No need to register
- Service may be restricted for anonymous users during high load
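Since an API key obtained without an OAuth2 login cannot be recovered or reset, a client may prefer to read the key from the environment rather than hard-code it, and fall back to the anonymous key when none is set. The sketch below shows one way to do that. The environment variable name `AI_HORDE_API_KEY` and both helper functions are hypothetical, chosen only for this example.

```python
import os

ANONYMOUS_API_KEY = "0000000000"  # anonymous key from the list above


def resolve_api_key(environ=os.environ, variable="AI_HORDE_API_KEY"):
    """Return the configured API key, or the anonymous key if none is set.

    The variable name is an assumption made for this sketch.
    """
    key = environ.get(variable, "").strip()
    return key or ANONYMOUS_API_KEY


def is_anonymous(api_key):
    """True when requests will run at the lowest, anonymous priority."""
    return api_key == ANONYMOUS_API_KEY


api_key = resolve_api_key()
if is_anonymous(api_key):
    print("No API key configured; using anonymous access.")
```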

The main benefit of registration is participating in the kudos system. Kudos determine your priority in the queue - the more kudos you have, the faster your requests are processed. You can earn kudos by:

- Running a worker to help process other users' requests

- Receiving kudos as a thank-you for donations (though kudos cannot be directly bought or sold)

- Being online and available as a worker

- Participating in the kudos economy on the official Discord by logging in with the bot. (Use the command `/login` in any channel, click the blue button, and enter your API key in the popup.)

Remember: AI Horde is committed to remaining free and accessible. While the kudos system provides priority benefits, it's designed to encourage community contribution rather than commercialization. All users, even anonymous ones, can access the service's core features. Read about this on the official developer's blog.
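The rule "more kudos, faster service" can be pictured as a priority queue. The toy model below is not the AI Horde's actual scheduling algorithm, which this README does not describe. It simply orders waiting requests by kudos balance, highest first, and breaks ties by arrival order. The class name, user names, and balances are invented for illustration.

```python
import heapq
import itertools


class KudosQueue:
    """Toy priority queue: highest kudos first, then first come, first served."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()

    def submit(self, user, kudos):
        # heapq is a min-heap, so negate kudos to pop the largest balance first.
        heapq.heappush(self._heap, (-kudos, next(self._arrival), user))

    def next_request(self):
        _, _, user = heapq.heappop(self._heap)
        return user


queue = KudosQueue()
queue.submit("anonymous", 0)
queue.submit("worker_owner", 5000)
queue.submit("new_account", 25)
order = [queue.next_request() for _ in range(3)]
print(order)  # ['worker_owner', 'new_account', 'anonymous']
```

Note that every request is eventually served in this model. Kudos change the order, not who is allowed to use the service.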

Be sure to register first.

The officially supported way to add your GPU for image generation is by running the worker software horde-worker-reGen.

There are multiple ways to contribute your GPU for text generation:

- AI-Horde-Worker can connect to inference backends and 'bridge' you to the AI-Horde

- KoboldAI

- KoboldCPP

- Aphrodite Engine

- ... and many others. Join the discussion in the #horde channel of the KoboldAI Discord Server to learn more.

If you want to build an integration with the AI Horde (bot, application, script, etc.), please consult our Integration Readme.

If you have any questions or feedback, we have a vibrant community on Discord.

Alternative AI tools for AI-Horde

Similar Open Source Tools

obsidian-Smart2Brain

Your Smart Second Brain is a free and open-source Obsidian plugin that serves as your personal assistant, powered by large language models like ChatGPT or Llama2. It can directly access and process your notes, eliminating the need for manual prompt editing, and it can operate completely offline, ensuring your data remains private and secure.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

rakis

Rakis is a decentralized verifiable AI network in the browser where nodes can accept AI inference requests, run local models, verify results, and arrive at consensus without servers. It is open-source, functional, multi-model, multi-chain, and browser-first, allowing anyone to participate in the network. The project implements an embedding-based consensus mechanism for verifiable inference. Users can run their own node on rakis.ai or use the compiled version hosted on Huggingface. The project is meant for educational purposes and is a work in progress.

recognize

Recognize is a smart media tagging tool for Nextcloud that automatically categorizes photos and music by recognizing faces, animals, landscapes, food, vehicles, buildings, landmarks, monuments, music genres, and human actions in videos. It uses pre-trained models for object detection, landmark recognition, face comparison, music genre classification, and video classification. The tool ensures privacy by processing images locally without sending data to cloud providers. However, it cannot process end-to-end encrypted files. Recognize is rated positively for ethical AI practices in terms of open-source software, freely available models, and training data transparency, except for music genre recognition due to limited access to training data.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

AppFlowy

AppFlowy.IO is an open-source alternative to Notion, providing users with control over their data and customizations. It aims to offer functionality, data security, and cross-platform native experience to individuals, as well as building blocks and collaboration infra services to enterprises and hackers. The tool is built with Flutter and Rust, supporting multiple platforms and emphasizing long-term maintainability. AppFlowy prioritizes data privacy, reliable native experience, and community-driven extensibility, aiming to democratize the creation of complex workplace management tools.

digma

Digma is a Continuous Feedback platform that provides code-level insights related to performance, errors, and usage during development. It empowers developers to own their code all the way to production, improving code quality and preventing critical issues. Digma integrates with OpenTelemetry traces and metrics to generate insights in the IDE, helping developers analyze code scalability, bottlenecks, errors, and usage patterns.

Aimmy

Aimmy is a universal AI-Based Aim Alignment Mechanism developed by BabyHamsta, MarsQQ & Taylor to make gaming more accessible for users who have difficulty aiming. It utilizes DirectML, ONNX, and YOLOV8 for player detection, offering high accuracy and fast performance. Aimmy features an easy-to-use UI, extensive customizability, and is free of ads and paywalls. It is designed for gamers facing challenges like physical or mental disabilities, poor hand-eye coordination, or aiming difficulties due to environmental factors. Aimmy provides various features like AI detection, customizability, anti-recoil system, mouse movement methods, hotswappability, and a model/configuration store with repository support.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

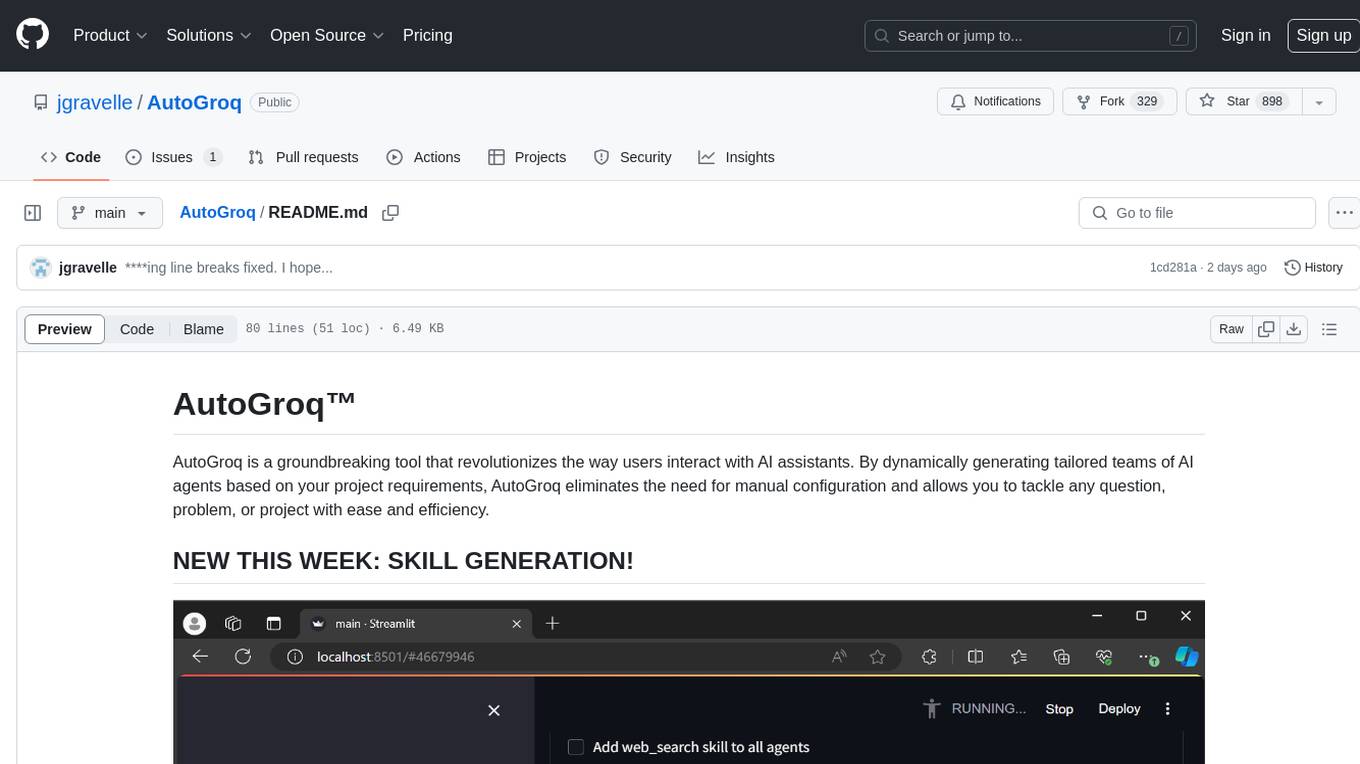

AutoGroq

AutoGroq is a revolutionary tool that dynamically generates tailored teams of AI agents based on project requirements, eliminating manual configuration. It enables users to effortlessly tackle questions, problems, and projects by creating expert agents, workflows, and skillsets with ease and efficiency. With features like natural conversation flow, code snippet extraction, and support for multiple language models, AutoGroq offers a seamless and intuitive AI assistant experience for developers and users.

Smart-Connections-Visualizer

The Smart Connections Visualizer Plugin is a tool designed to enhance note-taking and information visualization by creating dynamic force-directed graphs that represent connections between notes or excerpts. Users can customize visualization settings, preview notes, and interact with the graph to explore relationships and insights within their notes. The plugin aims to revolutionize communication with AI and improve decision-making processes by visualizing complex information in a more intuitive and context-driven manner.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

aide

Aide is an Open Source AI-native code editor that combines the powerful features of VS Code with advanced AI capabilities. It provides a combined chat + edit flow, proactive agents for fixing errors, inline editing widget, intelligent code completion, and AST navigation. Aide is designed to be an intelligent coding companion, helping users write better code faster while maintaining control over the development process.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale
- Flexible integration with distributed computing and data processing frameworks
- Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.