AutoGPT

AutoGPT is the vision of accessible AI for everyone, to use and to build on. Our mission is to provide the tools, so that you can focus on what matters.

Stars: 181747

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

README:

Deutsch | Español | français | 日本語 | 한국어 | Português | Русский | 中文

AutoGPT is a powerful platform that allows you to create, deploy, and manage continuous AI agents that automate complex workflows.

- Download to self-host (Free!)

- Join the Waitlist for the cloud-hosted beta (Closed Beta - Public release Coming Soon!)

[!NOTE] Setting up and hosting the AutoGPT Platform yourself is a technical process. If you'd rather something that just works, we recommend joining the waitlist for the cloud-hosted beta.

Before proceeding with the installation, ensure your system meets the following requirements:

- CPU: 4+ cores recommended

- RAM: Minimum 8GB, 16GB recommended

- Storage: At least 10GB of free space

- Operating Systems:

- Linux (Ubuntu 20.04 or newer recommended)

- macOS (10.15 or newer)

- Windows 10/11 with WSL2

- Required Software (with minimum versions):

- Docker Engine (20.10.0 or newer)

- Docker Compose (2.0.0 or newer)

- Git (2.30 or newer)

- Node.js (16.x or newer)

- npm (8.x or newer)

- VSCode (1.60 or newer) or any modern code editor

- Stable internet connection

- Access to required ports (will be configured in Docker)

- Ability to make outbound HTTPS connections

We've moved to a fully maintained and regularly updated documentation site.

👉 Follow the official self-hosting guide here

This tutorial assumes you have Docker, VSCode, git and npm installed.

Skip the manual steps and get started in minutes using our automatic setup script.

For macOS/Linux:

curl -fsSL https://setup.agpt.co/install.sh -o install.sh && bash install.sh

For Windows (PowerShell):

powershell -c "iwr https://setup.agpt.co/install.bat -o install.bat; ./install.bat"

This will install dependencies, configure Docker, and launch your local instance — all in one go.

The AutoGPT frontend is where users interact with our powerful AI automation platform. It offers multiple ways to engage with and leverage our AI agents. This is the interface where you'll bring your AI automation ideas to life:

Agent Builder: For those who want to customize, our intuitive, low-code interface allows you to design and configure your own AI agents.

Workflow Management: Build, modify, and optimize your automation workflows with ease. You build your agent by connecting blocks, where each block performs a single action.

Deployment Controls: Manage the lifecycle of your agents, from testing to production.

Ready-to-Use Agents: Don't want to build? Simply select from our library of pre-configured agents and put them to work immediately.

Agent Interaction: Whether you've built your own or are using pre-configured agents, easily run and interact with them through our user-friendly interface.

Monitoring and Analytics: Keep track of your agents' performance and gain insights to continually improve your automation processes.

Read this guide to learn how to build your own custom blocks.

The AutoGPT Server is the powerhouse of our platform This is where your agents run. Once deployed, agents can be triggered by external sources and can operate continuously. It contains all the essential components that make AutoGPT run smoothly.

Source Code: The core logic that drives our agents and automation processes.

Infrastructure: Robust systems that ensure reliable and scalable performance.

Marketplace: A comprehensive marketplace where you can find and deploy a wide range of pre-built agents.

Here are two examples of what you can do with AutoGPT:

-

Generate Viral Videos from Trending Topics

- This agent reads topics on Reddit.

- It identifies trending topics.

- It then automatically creates a short-form video based on the content.

-

Identify Top Quotes from Videos for Social Media

- This agent subscribes to your YouTube channel.

- When you post a new video, it transcribes it.

- It uses AI to identify the most impactful quotes to generate a summary.

- Then, it writes a post to automatically publish to your social media.

These examples show just a glimpse of what you can achieve with AutoGPT! You can create customized workflows to build agents for any use case.

🛡️ Polyform Shield License:

All code and content within the autogpt_platform folder is licensed under the Polyform Shield License. This new project is our in-developlemt platform for building, deploying and managing agents.Read more about this effort

🦉 MIT License:

All other portions of the AutoGPT repository (i.e., everything outside the autogpt_platform folder) are licensed under the MIT License. This includes the original stand-alone AutoGPT Agent, along with projects such as Forge, agbenchmark and the AutoGPT Classic GUI.We also publish additional work under the MIT Licence in other repositories, such as GravitasML which is developed for and used in the AutoGPT Platform. See also our MIT Licenced Code Ability project.

Our mission is to provide the tools, so that you can focus on what matters:

- 🏗️ Building - Lay the foundation for something amazing.

- 🧪 Testing - Fine-tune your agent to perfection.

- 🤝 Delegating - Let AI work for you, and have your ideas come to life.

Be part of the revolution! AutoGPT is here to stay, at the forefront of AI innovation.

📖 Documentation | 🚀 Contributing

Below is information about the classic version of AutoGPT.

🛠️ Build your own Agent - Quickstart

Forge your own agent! – Forge is a ready-to-go toolkit to build your own agent application. It handles most of the boilerplate code, letting you channel all your creativity into the things that set your agent apart. All tutorials are located here. Components from forge can also be used individually to speed up development and reduce boilerplate in your agent project.

🚀 Getting Started with Forge – This guide will walk you through the process of creating your own agent and using the benchmark and user interface.

📘 Learn More about Forge

Measure your agent's performance! The agbenchmark can be used with any agent that supports the agent protocol, and the integration with the project's CLI makes it even easier to use with AutoGPT and forge-based agents. The benchmark offers a stringent testing environment. Our framework allows for autonomous, objective performance evaluations, ensuring your agents are primed for real-world action.

📦 agbenchmark on Pypi

|

📘 Learn More about the Benchmark

Makes agents easy to use! The frontend gives you a user-friendly interface to control and monitor your agents. It connects to agents through the agent protocol, ensuring compatibility with many agents from both inside and outside of our ecosystem.

The frontend works out-of-the-box with all agents in the repo. Just use the CLI to run your agent of choice!

📘 Learn More about the Frontend

To make it as easy as possible to use all of the tools offered by the repository, a CLI is included at the root of the repo:

$ ./run

Usage: cli.py [OPTIONS] COMMAND [ARGS]...

Options:

--help Show this message and exit.

Commands:

agent Commands to create, start and stop agents

benchmark Commands to start the benchmark and list tests and categories

setup Installs dependencies needed for your system.Just clone the repo, install dependencies with ./run setup, and you should be good to go!

Get help - Discord 💬

To report a bug or request a feature, create a GitHub Issue. Please ensure someone else hasn't created an issue for the same topic.

To maintain a uniform standard and ensure seamless compatibility with many current and future applications, AutoGPT employs the agent protocol standard by the AI Engineer Foundation. This standardizes the communication pathways from your agent to the frontend and benchmark.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AutoGPT

Similar Open Source Tools

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

llmesh

LLM Agentic Tool Mesh is a platform by HPE Athonet that democratizes Generative Artificial Intelligence (Gen AI) by enabling users to create tools and web applications using Gen AI with Low or No Coding. The platform simplifies the integration process, focuses on key user needs, and abstracts complex libraries into easy-to-understand services. It empowers both technical and non-technical teams to develop tools related to their expertise and provides orchestration capabilities through an agentic Reasoning Engine based on Large Language Models (LLMs) to ensure seamless tool integration and enhance organizational functionality and efficiency.

langwatch

LangWatch is a monitoring and analytics platform designed to track, visualize, and analyze interactions with Large Language Models (LLMs). It offers real-time telemetry to optimize LLM cost and latency, a user-friendly interface for deep insights into LLM behavior, user analytics for engagement metrics, detailed debugging capabilities, and guardrails to monitor LLM outputs for issues like PII leaks and toxic language. The platform supports OpenAI and LangChain integrations, simplifying the process of tracing LLM calls and generating API keys for usage. LangWatch also provides documentation for easy integration and self-hosting options for interested users.

doc2plan

doc2plan is a browser-based application that helps users create personalized learning plans by extracting content from documents. It features a Creator for manual or AI-assisted plan construction and a Viewer for interactive plan navigation. Users can extract chapters, key topics, generate quizzes, and track progress. The application includes AI-driven content extraction, quiz generation, progress tracking, plan import/export, assistant management, customizable settings, viewer chat with text-to-speech and speech-to-text support, and integration with various Retrieval-Augmented Generation (RAG) models. It aims to simplify the creation of comprehensive learning modules tailored to individual needs.

BloxAI

Blox AI is a platform that allows users to effortlessly create flowcharts and diagrams, collaborate with teams, and receive explanations from the Google Gemini model. It offers rich text editing, versatile visualizations, secure workspaces, and limited files allotment. Users can install it as an app and use it for wireframes, mind maps, and algorithms. The platform is built using Next.Js, Typescript, ShadCN UI, TailwindCSS, Convex, Kinde, EditorJS, and Excalidraw.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

obsidian-pieces

Pieces for Developers is a closed-source Obsidian plugin designed to revolutionize coding workflows by incorporating key capabilities and favorite features directly into the Obsidian environment. The plugin, Pieces Copilot for Obsidian, enhances coding and problem-solving experiences by providing insights on code snippets, generating samples, and facilitating navigation through PRs. Users can capture, manage, share, and discover code snippets and developer materials with ease, bringing efficiency and organization to their coding experience.

ZetaForge

ZetaForge is an open-source AI platform designed for rapid development of advanced AI and AGI pipelines. It allows users to assemble reusable, customizable, and containerized Blocks into highly visual AI Pipelines, enabling rapid experimentation and collaboration. With ZetaForge, users can work with AI technologies in any programming language, easily modify and update AI pipelines, dive into the code whenever needed, utilize community-driven blocks and pipelines, and share their own creations. The platform aims to accelerate the development and deployment of advanced AI solutions through its user-friendly interface and community support.

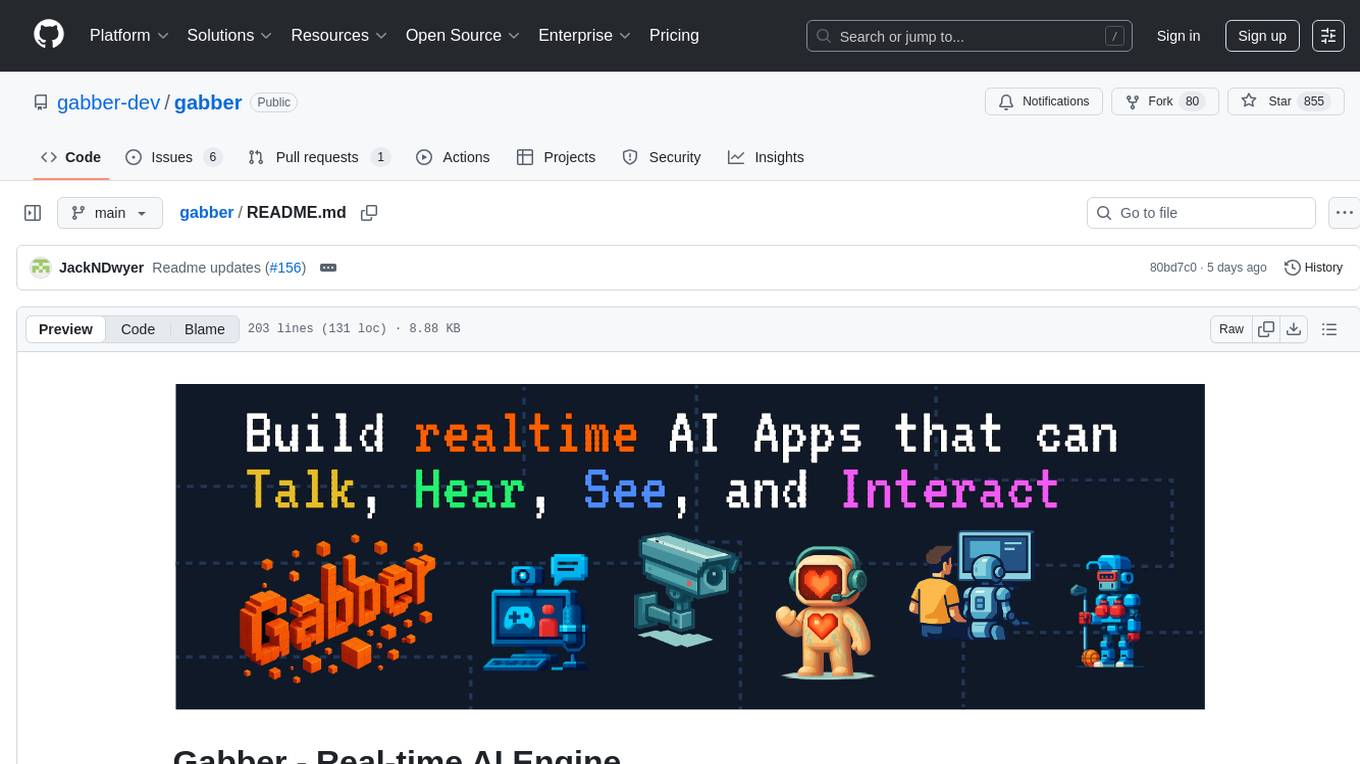

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

ai-workshop

The AI Workshop repository provides a comprehensive guide to utilizing OpenAI's APIs, including Chat Completion, Embedding, and Assistant APIs. It offers hands-on demonstrations and code examples to help users understand the capabilities of these APIs. The workshop covers topics such as creating interactive chatbots, performing semantic search using text embeddings, and building custom assistants with specific data and context. Users can enhance their understanding of AI applications in education, research, and other domains through practical examples and usage notes.

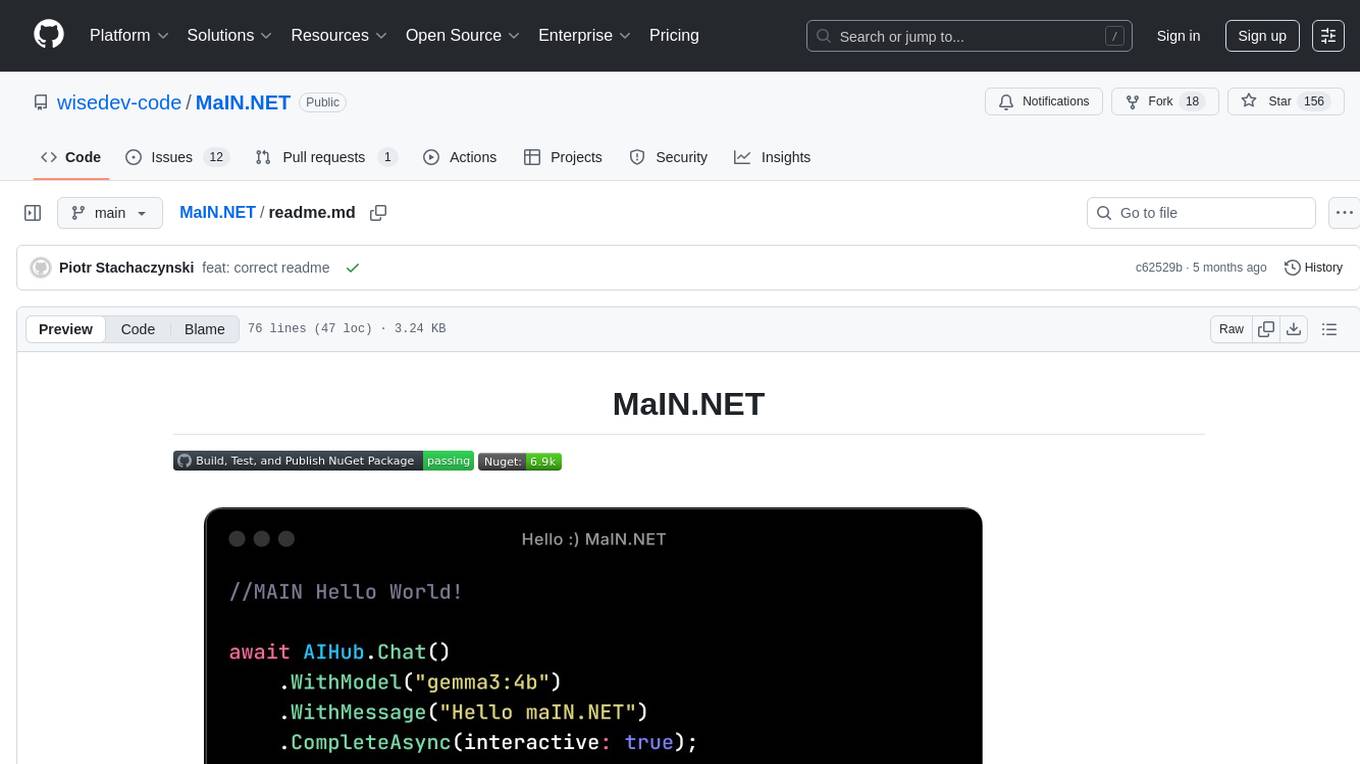

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

AgentPilot

Agent Pilot is an open source desktop app for creating, managing, and chatting with AI agents. It features multi-agent, branching chats with various providers through LiteLLM. Users can combine models from different providers, configure interactions, and run code using the built-in Open Interpreter. The tool allows users to create agents, manage chats, work with multi-agent workflows, branching workflows, context blocks, tools, and plugins. It also supports a code interpreter, scheduler, voice integration, and integration with various AI providers. Contributions to the project are welcome, and users can report known issues for improvement.

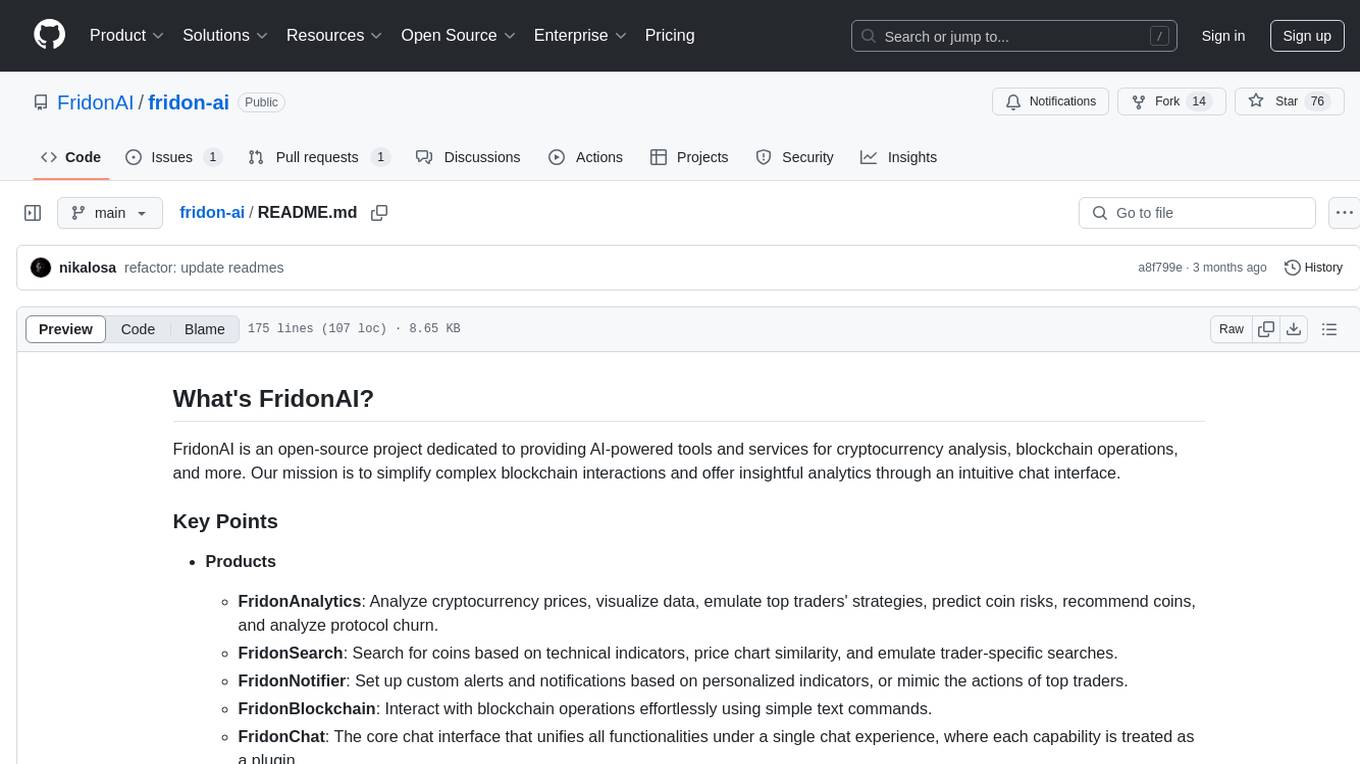

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

agent-lightning

Agent Lightning is a lightweight and efficient tool for automating repetitive tasks in the field of data analysis and machine learning. It provides a user-friendly interface to create and manage automated workflows, allowing users to easily schedule and execute data processing, model training, and evaluation tasks. With its intuitive design and powerful features, Agent Lightning streamlines the process of building and deploying machine learning models, making it ideal for data scientists, machine learning engineers, and AI enthusiasts looking to boost their productivity and efficiency in their projects.

For similar tasks

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

agent-os

The Agent OS is an experimental framework and runtime to build sophisticated, long running, and self-coding AI agents. We believe that the most important super-power of AI agents is to write and execute their own code to interact with the world. But for that to work, they need to run in a suitable environment—a place designed to be inhabited by agents. The Agent OS is designed from the ground up to function as a long-term computing substrate for these kinds of self-evolving agents.

chatdev

ChatDev IDE is a tool for building your AI agent, Whether it's NPCs in games or powerful agent tools, you can design what you want for this platform. It accelerates prompt engineering through **JavaScript Support** that allows implementing complex prompting techniques.

module-ballerinax-ai.agent

This library provides functionality required to build ReAct Agent using Large Language Models (LLMs).

npi

NPi is an open-source platform providing Tool-use APIs to empower AI agents with the ability to take action in the virtual world. It is currently under active development, and the APIs are subject to change in future releases. NPi offers a command line tool for installation and setup, along with a GitHub app for easy access to repositories. The platform also includes a Python SDK and examples like Calendar Negotiator and Twitter Crawler. Join the NPi community on Discord to contribute to the development and explore the roadmap for future enhancements.

ai-agents

The 'ai-agents' repository is a collection of books and resources focused on developing AI agents, including topics such as GPT models, building AI agents from scratch, machine learning theory and practice, and basic methods and tools for data analysis. The repository provides detailed explanations and guidance for individuals interested in learning about and working with AI agents.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

ai-app

The 'ai-app' repository is a comprehensive collection of tools and resources related to artificial intelligence, focusing on topics such as server environment setup, PyCharm and Anaconda installation, large model deployment and training, Transformer principles, RAG technology, vector databases, AI image, voice, and music generation, and AI Agent frameworks. It also includes practical guides and tutorials on implementing various AI applications. The repository serves as a valuable resource for individuals interested in exploring different aspects of AI technology.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.