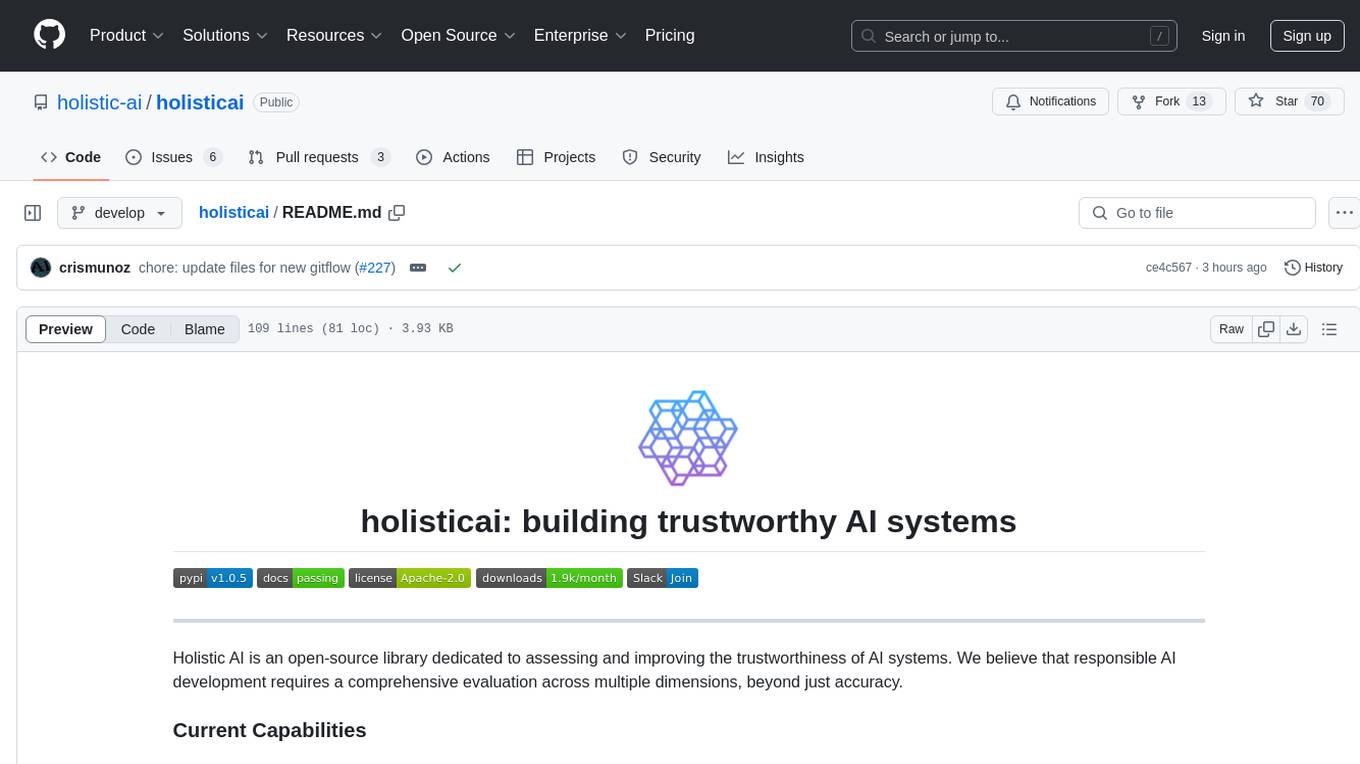

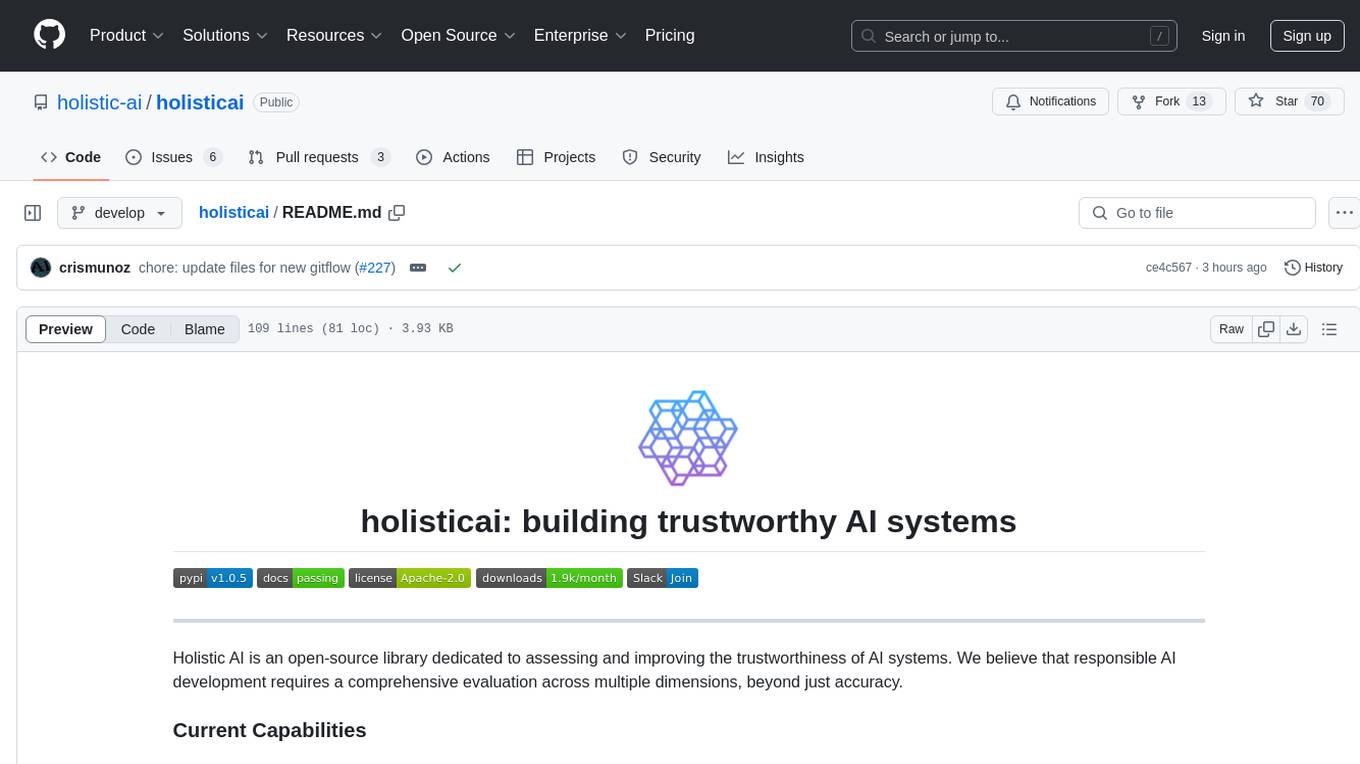

holisticai

This is an open-source tool to assess and improve the trustworthiness of AI systems.

Stars: 69

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

README:

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. We believe that responsible AI development requires a comprehensive evaluation across multiple dimensions, beyond just accuracy.

Holistic AI currently focuses on five verticals of AI trustworthiness:

- Bias: measure and mitigate bias in AI models.

- Explainability: measure into model behavior and decision-making.

- Robustness: measure model performance under various conditions.

- Security: measure the privacy risks associated with AI models.

- Efficacy: measure the effectiveness of AI models.

pip install holisticai # Basic installation

pip install holisticai[bias] # Bias mitigation support

pip install holisticai[explainability] # For explainability metrics and plots

pip install holisticai[all] # Install all packages for bias and explainability# imports

from holisticai.bias.metrics import classification_bias_metrics

from holisticai.datasets import load_dataset

from holisticai.bias.plots import bias_metrics_report

from sklearn.linear_model import LogisticRegression

from sklearn.preprocessing import StandardScaler

# load an example dataset and split

dataset = load_dataset('law_school', protected_attribute="race")

dataset_split = dataset.train_test_split(test_size=0.3)

# separate the data into train and test sets

train_data = dataset_split['train']

test_data = dataset_split['test']

# rescale the data

scaler = StandardScaler()

X_train_t = scaler.fit_transform(train_data['X'])

X_test_t = scaler.transform(test_data['X'])

# train a logistic regression model

model = LogisticRegression(random_state=42, max_iter=500)

model.fit(X_train_t, train_data['y'])

# make predictions

y_pred = model.predict(X_test_t)

# compute bias metrics

metrics = classification_bias_metrics(

group_a = test_data['group_a'],

group_b = test_data['group_b'],

y_true = test_data['y'],

y_pred = y_pred

)

# create a comprehensive report

bias_metrics_report(model_type='binary_classification', table_metrics=metrics)- Comprehensive Metrics: Measure various aspects of AI system trustworthiness, including bias, fairness, and explainability.

- Mitigation Techniques: Implement strategies to address identified issues and improve the fairness and robustness of AI models.

- User-Friendly Interface: Intuitive API for easy integration into existing workflows.

- Visualization Tools: Generate insightful visualizations for better understanding of model behavior and bias patterns.

Troubleshooting (macOS):

Before installing the library, you may need to install these packages:

brew install cbc pkg-config

python -m pip install cylp

brew install cmakeWe welcome contributions from the community To learn more about contributing to Holistic AI, please refer to our Contributing Guide.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for holisticai

Similar Open Source Tools

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

ai

Jetify's AI SDK for Go is a unified interface for interacting with multiple AI providers including OpenAI, Anthropic, and more. It addresses the challenges of fragmented ecosystems, vendor lock-in, poor Go developer experience, and complex multi-modal handling by providing a unified interface, Go-first design, production-ready features, multi-modal support, and extensible architecture. The SDK supports language models, embeddings, image generation, multi-provider support, multi-modal inputs, tool calling, and structured outputs.

narratrix

NarratrixAI is an AI-powered tabletop roleplaying platform that leverages AI to create dynamic, responsive, and immersive storytelling experiences. It allows users to create their own stories, use it as character chat, or as a full tabletop RPG experience. The platform features a powerful chat system, flexible AI integration, rich character management, powerful storytelling tools, and developer-friendly customization options. Narratrix supports various AI providers through a manifest system and is built with Tauri for native performance across Windows, macOS, and Linux platforms.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

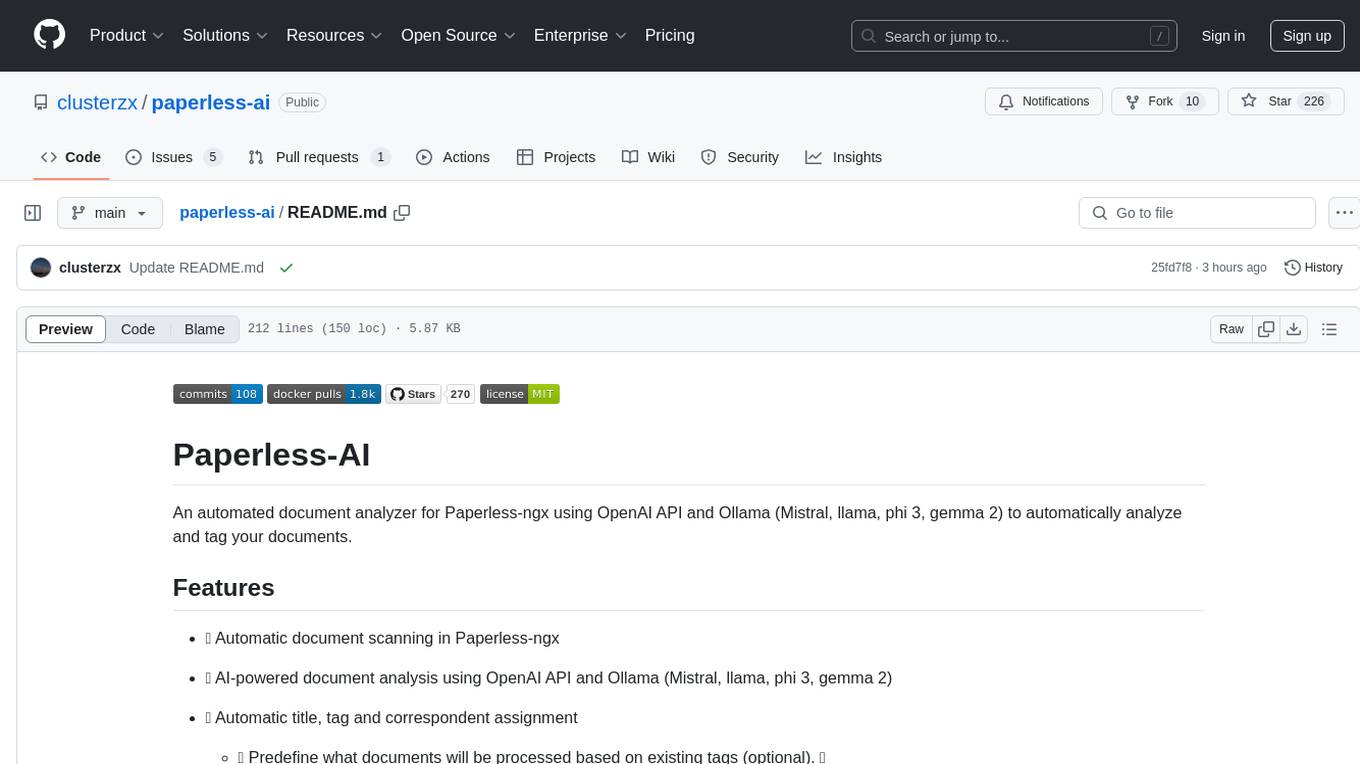

paperless-ai

Paperless-AI is an automated document analyzer tool designed for Paperless-ngx users. It utilizes the OpenAI API and Ollama (Mistral, llama, phi 3, gemma 2) to automatically scan, analyze, and tag documents. The tool offers features such as automatic document scanning, AI-powered document analysis, automatic title and tag assignment, manual mode for analyzing documents, easy setup through a web interface, document processing dashboard, error handling, and Docker support. Users can configure the tool through a web interface and access a debug interface for monitoring and troubleshooting. Paperless-AI aims to streamline document organization and analysis processes for users with access to Paperless-ngx and AI capabilities.

midscene

Midscene.js is an AI-powered automation SDK that allows users to control web pages, perform assertions, and extract data in JSON format using natural language. It offers features such as natural language interaction, understanding UI and providing responses in JSON, intuitive assertion based on AI understanding, compatibility with public multimodal LLMs like GPT-4o, visualization tool for easy debugging, and a brand new experience in automation development.

agentic-radar

The Agentic Radar is a security scanner designed to analyze and assess agentic systems for security and operational insights. It helps users understand how agentic systems function, identify potential vulnerabilities, and create security reports. The tool includes workflow visualization, tool identification, and vulnerability mapping, providing a comprehensive HTML report for easy reviewing and sharing. It simplifies the process of assessing complex workflows and multiple tools used in agentic systems, offering a structured view of potential risks and security frameworks.

memU

MemU is an open-source memory framework designed for AI companions, offering high accuracy, fast retrieval, and cost-effectiveness. It serves as an intelligent 'memory folder' that adapts to various AI companion scenarios. With MemU, users can create AI companions that remember them, learn their preferences, and evolve through interactions. The framework provides advanced retrieval strategies, 24/7 support, and is specialized for AI companions. MemU offers cloud, enterprise, and self-hosting options, with features like memory organization, interconnected knowledge graph, continuous self-improvement, and adaptive forgetting mechanism. It boasts high memory accuracy, fast retrieval, and low cost, making it suitable for building intelligent agents with persistent memory capabilities.

payload-ai

The Payload AI Plugin is an advanced extension that integrates modern AI capabilities into your Payload CMS, streamlining content creation and management. It offers features like text generation, voice and image generation, field-level prompt customization, prompt editor, document analyzer, fact checking, automated content workflows, internationalization support, editor AI suggestions, and AI chat support. Users can personalize and configure the plugin by setting environment variables. The plugin is actively developed and tested with Payload version v3.2.1, with regular updates expected.

MathVerse

MathVerse is an all-around visual math benchmark designed to evaluate the capabilities of Multi-modal Large Language Models (MLLMs) in visual math problem-solving. It collects high-quality math problems with diagrams to assess how well MLLMs can understand visual diagrams for mathematical reasoning. The benchmark includes 2,612 problems transformed into six versions each, contributing to 15K test samples. It also introduces a Chain-of-Thought (CoT) Evaluation strategy for fine-grained assessment of output answers.

aigne-framework

AIGNE Framework is a functional AI application development framework designed to simplify and accelerate the process of building modern applications. It combines functional programming features, powerful artificial intelligence capabilities, and modular design principles to help developers easily create scalable solutions. With key features like modular design, TypeScript support, multiple AI model support, flexible workflow patterns, MCP protocol integration, code execution capabilities, and Blocklet ecosystem integration, AIGNE Framework offers a comprehensive solution for developers. The framework provides various workflow patterns such as Workflow Router, Workflow Sequential, Workflow Concurrency, Workflow Handoff, Workflow Reflection, Workflow Orchestration, Workflow Code Execution, and Workflow Group Chat to address different application scenarios efficiently. It also includes built-in MCP support for running MCP servers and integrating with external MCP servers, along with packages for core functionality, agent library, CLI, and various models like OpenAI, Gemini, Claude, and Nova.

Mercury

Mercury is a code efficiency benchmark designed for code synthesis tasks. It includes 1,889 programming tasks of varying difficulty levels and provides test case generators for comprehensive evaluation. The benchmark aims to assess the efficiency of large language models in generating code solutions.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

For similar tasks

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

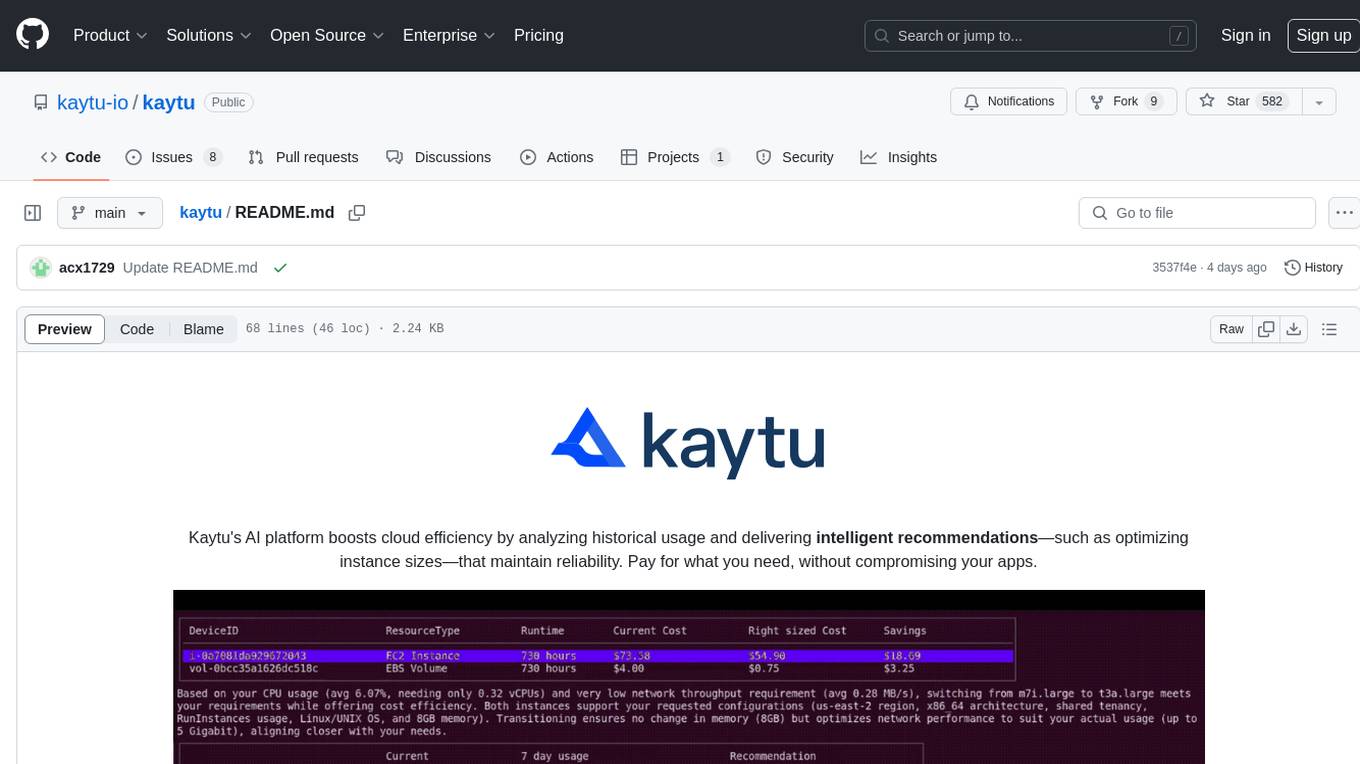

kaytu

Kaytu is an AI platform that enhances cloud efficiency by analyzing historical usage data and providing intelligent recommendations for optimizing instance sizes. Users can pay for only what they need without compromising the performance of their applications. The platform is easy to use with a one-line command, allows customization for specific requirements, and ensures security by extracting metrics from the client side. Kaytu is open-source and supports AWS services, with plans to expand to GCP, Azure, GPU optimization, and observability data from Prometheus in the future.

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

langkit

LangKit is an open-source text metrics toolkit for monitoring language models. It offers methods for extracting signals from input/output text, compatible with whylogs. Features include text quality, relevance, security, sentiment, toxicity analysis. Installation via PyPI. Modules contain UDFs for whylogs. Benchmarks show throughput on AWS instances. FAQs available.

nesa

Nesa is a tool that allows users to run on-prem AI for a fraction of the cost through a blind API. It provides blind privacy, zero latency on protected inference, wide model coverage, cost savings compared to cloud and on-prem AI, RAG support, and ChatGPT compatibility. Nesa achieves blind AI through Equivariant Encryption (EE), a new security technology that provides complete inference encryption with no additional latency. EE allows users to perform inference on neural networks without exposing the underlying data, preserving data privacy and security.

eulers-shield

Euler's Shield is a decentralized, AI-powered financial system designed to stabilize the value of Pi Coin at $314.159. It combines blockchain, machine learning, and cybersecurity to ensure the security, scalability, and decentralization of the Pi Coin ecosystem.

k8sgateway

K8sGateway is a feature-rich, fast, and flexible Kubernetes-native API gateway built on Envoy proxy and Kubernetes Gateway API. It excels in function-level routing, supports legacy apps, microservices, and serverless. It offers robust discovery capabilities, seamless integration with open-source projects, and supports hybrid applications with various technologies, architectures, protocols, and clouds.

For similar jobs

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

mslearn-ai-fundamentals

This repository contains materials for the Microsoft Learn AI Fundamentals module. It covers the basics of artificial intelligence, machine learning, and data science. The content includes hands-on labs, interactive learning modules, and assessments to help learners understand key concepts and techniques in AI. Whether you are new to AI or looking to expand your knowledge, this module provides a comprehensive introduction to the fundamentals of AI.

awesome-ai-tools

Awesome AI Tools is a curated list of popular tools and resources for artificial intelligence enthusiasts. It includes a wide range of tools such as machine learning libraries, deep learning frameworks, data visualization tools, and natural language processing resources. Whether you are a beginner or an experienced AI practitioner, this repository aims to provide you with a comprehensive collection of tools to enhance your AI projects and research. Explore the list to discover new tools, stay updated with the latest advancements in AI technology, and find the right resources to support your AI endeavors.

go2coding.github.io

The go2coding.github.io repository is a collection of resources for AI enthusiasts, providing information on AI products, open-source projects, AI learning websites, and AI learning frameworks. It aims to help users stay updated on industry trends, learn from community projects, access learning resources, and understand and choose AI frameworks. The repository also includes instructions for local and external deployment of the project as a static website, with details on domain registration, hosting services, uploading static web pages, configuring domain resolution, and a visual guide to the AI tool navigation website. Additionally, it offers a platform for AI knowledge exchange through a QQ group and promotes AI tools through a WeChat public account.

AI-Notes

AI-Notes is a repository dedicated to practical applications of artificial intelligence and deep learning. It covers concepts such as data mining, machine learning, natural language processing, and AI. The repository contains Jupyter Notebook examples for hands-on learning and experimentation. It explores the development stages of AI, from narrow artificial intelligence to general artificial intelligence and superintelligence. The content delves into machine learning algorithms, deep learning techniques, and the impact of AI on various industries like autonomous driving and healthcare. The repository aims to provide a comprehensive understanding of AI technologies and their real-world applications.

promptpanel

Prompt Panel is a tool designed to accelerate the adoption of AI agents by providing a platform where users can run large language models across any inference provider, create custom agent plugins, and use their own data safely. The tool allows users to break free from walled-gardens and have full control over their models, conversations, and logic. With Prompt Panel, users can pair their data with any language model, online or offline, and customize the system to meet their unique business needs without any restrictions.

ai-demos

The 'ai-demos' repository is a collection of example code from presentations focusing on building with AI and LLMs. It serves as a resource for developers looking to explore practical applications of artificial intelligence in their projects. The code snippets showcase various techniques and approaches to leverage AI technologies effectively. The repository aims to inspire and educate developers on integrating AI solutions into their applications.