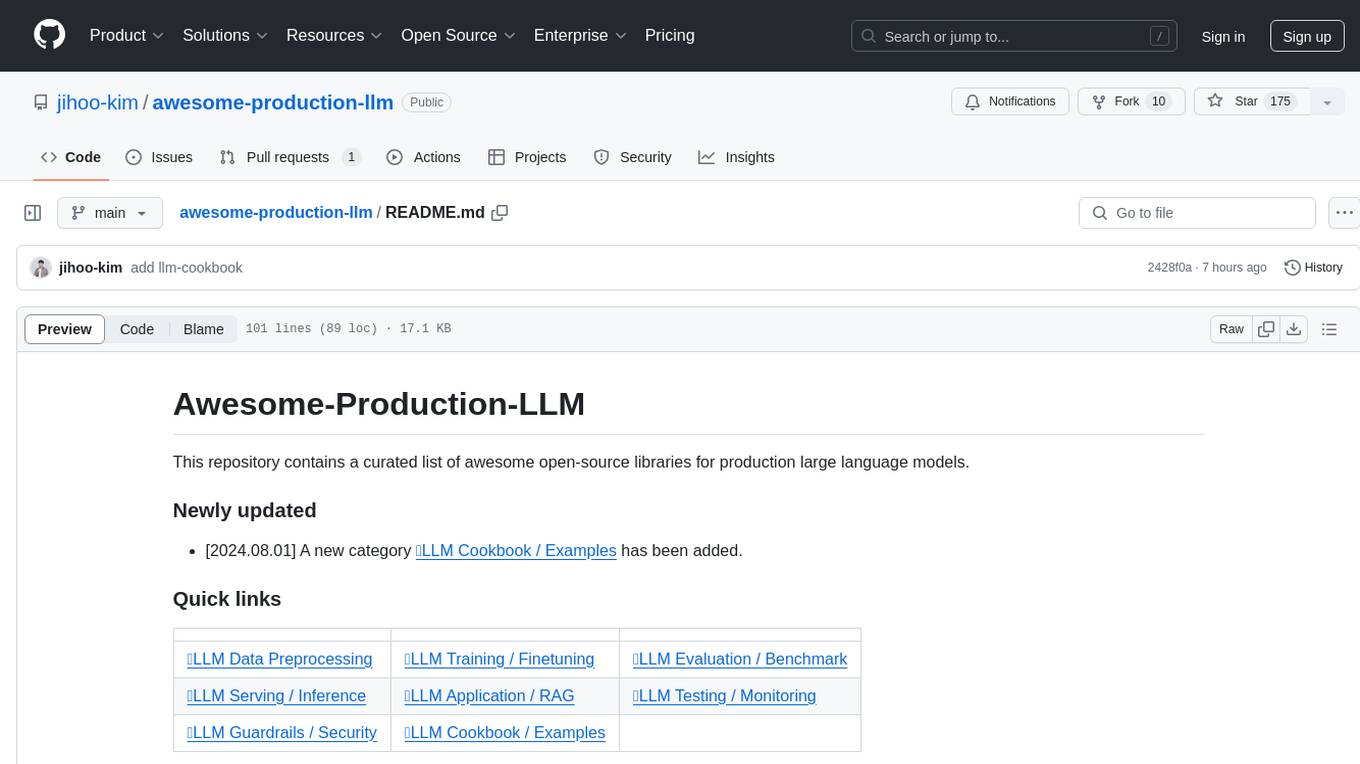

awesome-production-llm

A curated list of awesome open-source libraries for production LLM

Stars: 408

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

README:

This repository contains a curated list of awesome open-source projects for production large language models.

- [2024.12.27] 🔥A new category ⛏️LLM Extraction / Parsing has been added.

- [2024.10.26] A new category 🤖LLM Agent Benchmarks has been added.

- [2024.09.03] A new category 🎓LLM Courses / Education has been added.

- [2024.08.01] A new category 🍳LLM Cookbook / Examples has been added.

Newly added projects are marked with 📌.

- data-juicer (ModelScope): A one-stop data processing system to make data higher-quality, juicier, and more digestible for (multimodal) LLMs!

- datatrove (HuggingFace): Freeing data processing from scripting madness by providing a set of platform-agnostic customizable pipeline processing blocks.

- dolma (AllenAI): Data and tools for generating and inspecting OLMo pre-training data.

- NeMo-Curator (NVIDIA): Scalable toolkit for data curation

- dataverse (Upstage): The Universe of Data. All about data, data science, and data engineering

- EasyInstruct (ZJUNLP): An Easy-to-use Instruction Processing Framework for LLMs.

- data-prep-kit (IBM): Open source project for data preparation of LLM application builders

- dps (EleutherAI): Data processing system for polyglot

- nanoGPT (karpathy): The simplest, fastest repository for training/finetuning medium-sized GPTs.

- LLaMA-Factory: A WebUI for Efficient Fine-Tuning of 100+ LLMs (ACL 2024)

- unsloth (Unsloth AI): Finetune Llama 3.2, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory

- peft (HuggingFace): PEFT: State-of-the-art Parameter-Efficient Fine-Tuning.

- llama-recipes (Meta): Scripts for fine-tuning Meta Llama3 with composable FSDP & PEFT methods to cover single/multi-node GPUs.

- litgpt (LightningAI): 20+ high-performance LLMs with recipes to pretrain, finetune and deploy at scale.

- Megatron-LM (NVIDIA): Ongoing research training transformer models at scale

- trl (HuggingFace): Train transformer language models with reinforcement learning.

- LMFlow (OptimalScale): An Extensible Toolkit for Finetuning and Inference of Large Foundation Models. Large Models for All.

- gpt-neox (EleutherAI): An implementation of model parallel autoregressive transformers on GPUs, based on the Megatron and DeepSpeed libraries

- torchtune (PyTorch): A Native-PyTorch Library for LLM Fine-tuning

- xtuner (InternLM): An efficient, flexible and full-featured toolkit for fine-tuning LLM (InternLM2, Llama3, Phi3, Qwen, Mistral, ...)

- torchtitan (PyTorch): A native PyTorch Library for large model training

- nanotron (HuggingFace): Minimalistic large language model 3D-parallelism training

- evals (OpenAI): Evals is a framework for evaluating LLMs and LLM systems, and an open-source registry of benchmarks.

- ragas (Exploding Gradients): Supercharge Your LLM Application Evaluations

- lm-evaluation-harness (EleutherAI): A framework for few-shot evaluation of language models.

- opencompass (OpenCompass): OpenCompass is an LLM evaluation platform, supporting a wide range of models (Llama3, Mistral, InternLM2, GPT-4, LLaMa2, Qwen, GLM, Claude, etc) over 100+ datasets.

- deepeval (ConfidentAI): The LLM Evaluation Framework

- simple-evals (OpenAI): This repository contains a lightweight library for evaluating language models.

- lighteval (HuggingFace): LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron.

- evalverse (Upstage): The Universe of Evaluation. All about the evaluation for LLMs.

- ollama (Ollama): Get up and running with Llama 3.1, Mistral, Gemma 2, and other large language models.

- gpt4all (NomicAI): GPT4All: Chat with Local LLMs on Any Device

- llama.cpp: LLM inference in C/C++

- FastChat (LMSYS): An open platform for training, serving, and evaluating large language models. Release repo for Vicuna and Chatbot Arena.

- vllm: A high-throughput and memory-efficient inference and serving engine for LLMs

- guidance (guidance-ai): A guidance language for controlling large language models.

- LiteLLM (BerriAI): Call all LLM APIs using the OpenAI format. Use Bedrock, Azure, OpenAI, Cohere, Anthropic, Ollama, Sagemaker, HuggingFace, Replicate, Groq (100+ LLMs)

- BitNet (Microsoft): Official inference framework for 1-bit LLMs

- OpenLLM (BentoML): Run any open-source LLMs, such as Llama 3.1, Gemma, as OpenAI compatible API endpoint in the cloud.

- text-generation-inference (HuggingFace): Large Language Model Text Generation Inference

- TensorRT-LLM (NVIDIA): TensorRT-LLM provides users with an easy-to-use Python API to define Large Language Models (LLMs) and build TensorRT engines that contain state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs.

- SGLang (sgl-project): SGLang is a fast serving framework for large language models and vision language models.

- LMDeploy (InternLM): LMDeploy is a toolkit for compressing, deploying, and serving LLMs.

- torchchat (PyTorch): Run PyTorch LLMs locally on servers, desktop and mobile

- RouteLLM (LMSYS): A framework for serving and evaluating LLM routers - save LLM costs without compromising quality!

- LightLLM (ModelTC): LightLLM is a Python-based LLM (Large Language Model) inference and serving framework, notable for its lightweight design, easy scalability, and high-speed performance.

- AutoGPT: AutoGPT is the vision of accessible AI for everyone, to use and to build on. Our mission is to provide the tools, so that you can focus on what matters.

- langchain (LangChain): Build context-aware reasoning applications

- dify (LangGenius): Dify is an open-source LLM app development platform. Dify's intuitive interface combines AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production.

- MetaGPT: The Multi-Agent Framework: First AI Software Company, Towards Natural Language Programming

- llama_index (LlamaIndex): LlamaIndex is a data framework for your LLM applications

- 📌Quivr (Quivr): Opinionated RAG for integrating GenAI in your apps. Focus on your product rather than the RAG. Easy integration in existing products with customisation! Any LLM: GPT4, Groq, Llama. Any Vectorstore: PGVector, Faiss. Any Files. Any way you want.

- AutoGen (Microsoft): A programming framework for agentic AI

- Flowise (FlowiseAI): Drag & drop UI to build your customized LLM flow

- ⬆RAGFlow (InfiniFlow): RAGFlow is an open-source RAG (Retrieval-Augmented Generation) engine based on deep document understanding.

- mem0 (Mem0): The memory layer for Personalized AI

- crewAI (crewAI): Framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks.

- GraphRAG (Microsoft): A modular graph-based Retrieval-Augmented Generation (RAG) system

- haystack (Deepset): LLM orchestration framework to build customizable, production-ready LLM applications. Connect components (models, vector DBs, file converters) to pipelines or agents that can interact with your data.

- swarm (OpenAI): Educational framework exploring ergonomic, lightweight multi-agent orchestration. Managed by OpenAI Solution team.

- Letta (Letta): Letta (fka MemGPT) is a framework for creating stateful LLM services.

- 📌outlines (.TXT): Structured Text Generation (Make LLMs speak the language of every application.)

- ⬆pathway (Pathway): Python ETL framework for stream processing, real-time analytics, LLM pipelines, and RAG.

- llmware (LLMware.ai): Unified framework for building enterprise RAG pipelines with small, specialized models

- 📌browser-use (Browser Use): Make websites accessible for AI agents (Browser use is the easiest way to connect your AI agents with the browser.)

- TaskingAI (TaskingAI): The open source platform for AI-native application development.

- ⬆llama-stack (Meta): Model components of the Llama Stack APIs

- AgentScope (ModelScope): Start building LLM-empowered multi-agent applications in an easier way.

- ⬆Qwen-Agent (QwenLM): Agent framework and applications built upon Qwen>=2.0, featuring Function Calling, Code Interpreter, RAG, and Chrome extension.

- llama-stack-apps (Meta): Agentic components of the Llama Stack APIs

- ⬆AutoRAG (Markr Inc.): AutoML tool for RAG

- Langroid (Langroid): Harness LLMs with Multi-Agent Programming

- AgentOps (AgentOps-AI): Python SDK for AI agent monitoring, LLM cost tracking, benchmarking, and more. Integrates with most LLMs and agent frameworks like CrewAI, Langchain, and Autogen

- Lagent (InternLM): A lightweight framework for building LLM-based agents

- 📌Chonkie (Chonkie.ai): CHONK your texts with Chonkie - The no-nonsense RAG chunking library

- 📌MarkItDown (Microsoft): Python tool for converting files and office documents to Markdown.

- 📌MinerU (OpenDataLab): A high-quality tool for converting PDF to Markdown and JSON.

- 📌Firecrawl (Mendable AI): Turn entire websites into LLM-ready markdown or structured data. Scrape, crawl and extract with a single API.

- 📌Crawl4AI (UncleCode): Crawl4AI: Crawl Smarter, Faster, Freely. For AI (LLMs, AI agents, and data pipelines).

- 📌Docling (IBM): Get your documents ready for gen AI

- 📌Unstructured (Unstructured.io): Open source libraries and APIs to build custom preprocessing pipelines for labeling, training, or production machine learning pipelines.

- 📌Zerox (OmniAI): PDF to Markdown with vision models

- 📌PDF-Extract-Kit (OpenDataLab): A Comprehensive Toolkit for High-Quality PDF Content Extraction

- 📌MegaParse (Quivr): File Parser optimised for LLM Ingestion with no loss // Parse PDFs, Docx, PPTx in a format that is ideal for LLMs.

- 📌LlamaParse (LlamaIndex): Parse files for optimal RAG. LlamaParse is a GenAI-native document parser that can parse complex document data for any downstream LLM use case (RAG, agents).

- 📌GitIngest: Replace 'hub' with 'ingest' in any GitHub URL to get a prompt-friendly extract of a codebase

- 📌Open-Parse: Improved file parsing for LLMs

- 📌pdf-extract-api (Catch The Tornado): Document (PDF) extraction and parse API using state-of-the-art modern OCRs + Ollama supported models. Anonymize documents. Remove PII. Convert any document or picture to structured JSON or Markdown

- 📌nv-ingest (NVIDIA): NVIDIA Ingest is an early access set of microservices for parsing hundreds of thousands of complex, messy unstructured PDFs and other enterprise documents into metadata and text to embed into retrieval systems.

- promptflow (Microsoft): Build high-quality LLM apps - from prototyping, testing to production deployment and monitoring.

- langfuse (Langfuse): Open source LLM engineering platform: Observability, metrics, evals, prompt management, playground, datasets. Integrates with LlamaIndex, Langchain, OpenAI SDK, LiteLLM, and more.

- evidently (EvidentlyAI): Evidently is an open-source ML and LLM observability framework. Evaluate, test, and monitor any AI-powered system or data pipeline. From tabular data to Gen AI. 100+ metrics.

- promptfoo (promptfoo): Test your prompts, agents, and RAGs. Redteaming, pentesting, vulnerability scanning for LLMs. Improve your app's quality and catch problems. Compare performance of GPT, Claude, Gemini, Llama, and more. Simple declarative configs with command line and CI/CD integration.

- giskard (Giskard): Open-Source Evaluation & Testing for LLMs and ML models

- phoenix (ArizeAI): AI Observability & Evaluation

- Opik (Comet): Open-source end-to-end LLM Development Platform

- agenta (Agenta.ai): The all-in-one LLM developer platform: prompt management, evaluation, human feedback, and deployment all in one place.

- NeMo-Guardrails (NVIDIA): NeMo Guardrails is an open-source toolkit for easily adding programmable guardrails to LLM-based conversational systems.

- guardrails (GuardrailsAI): Adding guardrails to large language models.

- PurpleLlama (Meta): Set of tools to assess and improve LLM security.

- llm-guard (ProtectAI): The Security Toolkit for LLM Interactions

- openai-cookbook (OpenAI): Examples and guides for using the OpenAI API

- anthropic-cookbook (Anthropic): A collection of notebooks/recipes showcasing some fun and effective ways of using Claude.

- gemini-cookbook (Google): Examples and guides for using the Gemini API.

- Phi-3CookBook (Microsoft): This is a Phi-3 book for getting started with Phi-3. Phi-3, a family of open AI models developed by Microsoft.

- amazon-bedrock-workshop (AWS): This is a workshop designed for Amazon Bedrock a foundational model service.

- mistral-cookbook (Mistral): The Mistral Cookbook features examples contributed by Mistralers and our community, as well as our partners.

- gemma-cookbook (Google): A collection of guides and examples for the Gemma open models from Google.

- amazon-bedrock-samples (AWS): This repository contains examples for customers to get started using the Amazon Bedrock Service. This contains examples for all available foundational models

- cohere-notebooks (Cohere): Code examples and jupyter notebooks for the Cohere Platform

- upstage-cookbook (Upstage): Upstage api examples and guides

- generative-ai-for-beginners (Microsoft): 18 Lessons, Get Started Building with Generative AI

- llm-course: Course to get into Large Language Models (LLMs) with roadmaps and Colab notebooks.

- LLMs-from-scratch: Implementing a ChatGPT-like LLM in PyTorch from scratch, step by step

- hands-on-llms: Learn about LLMs, LLMOps, and vector DBs for free by designing, training, and deploying a real-time financial advisor LLM system ~ source code + video & reading materials

- llm-zoomcamp (DataTalksClub): LLM Zoomcamp - a free online course about building a Q&A system

- llm-twin-course (DecodingML): Learn for free how to build an end-to-end production-ready LLM & RAG system using LLMOps best practices: ~ source code + 12 hands-on lessons

- SWE-bench (Princeton-NLP): SWE-bench is a benchmark for evaluating large language models on real world software issues collected from GitHub.

- MMAU (axlearn) (Apple): The Massive Multitask Agent Understanding (MMAU) benchmark is designed to evaluate the performance of large language models (LLMs) as agents across a wide variety of tasks.

- mle-bench (OpenAI): MLE-bench is a benchmark for measuring how well AI agents perform at machine learning engineering

- WindowsAgentArena (Microsoft): Windows Agent Arena (WAA) is a scalable OS platform for testing and benchmarking of multi-modal AI agents.

- DevAI (agent-as-a-judge) (METAUTO.ai): DevAI, a benchmark consisting of 55 realistic AI development tasks with 365 hierarchical user requirements.

- 📌AIOpsLab (Microsoft): AIOpsLab is a holistic framework to enable the design, development, and evaluation of autonomous AIOps agents that, additionally, serves the purpose of building reproducible, standardized, interoperable and scalable benchmarks.

- natural-plan (Google DeepMind): Natural Plan is a realistic planning benchmark in natural language containing 3 key tasks: Trip Planning, Meeting Planning, and Calendar Scheduling.
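The GitIngest entry in the list describes a simple URL rewrite: replace 'hub' with 'ingest' in a GitHub URL. A minimal sketch of that rewrite is shown here. The helper name `to_gitingest_url` and the example repository path `owner/repo` are hypothetical, and the code only builds the URL string; whether the resulting page is served for a given repository depends on the GitIngest service itself.

```python
from urllib.parse import urlsplit, urlunsplit


def to_gitingest_url(github_url: str) -> str:
    """Rewrite a GitHub URL by replacing 'hub' with 'ingest' in the hostname.

    Hypothetical helper for illustration; it performs no network request.
    """
    parts = urlsplit(github_url)
    host = parts.netloc.lower()
    if host not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {github_url}")
    # Only the hostname changes; the owner/repo path, query, and fragment are kept.
    new_host = host.replace("github", "gitingest", 1)
    return urlunsplit((parts.scheme, new_host, parts.path, parts.query, parts.fragment))


# 'owner/repo' is a placeholder path, not a real project.
print(to_gitingest_url("https://github.com/owner/repo"))
```

Rewriting only the hostname, rather than doing a plain string replace on the whole URL, avoids touching a repository or branch name that happens to contain "hub".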

This project is inspired by Awesome Production Machine Learning.

Similar Open Source Tools

towhee

Towhee is a cutting-edge framework designed to streamline the processing of unstructured data through the use of Large Language Model (LLM) based pipeline orchestration. It can extract insights from diverse data types like text, images, audio, and video files using generative AI and deep learning models. Towhee offers rich operators, prebuilt ETL pipelines, and a high-performance backend for efficient data processing. With a Pythonic API, users can build custom data processing pipelines easily. Towhee is suitable for tasks like sentence embedding, image embedding, video deduplication, question answering with documents, and cross-modal retrieval based on CLIP.

EvalAI

EvalAI is an open-source platform for evaluating and comparing machine learning (ML) and artificial intelligence (AI) algorithms at scale. It provides a central leaderboard and submission interface, making it easier for researchers to reproduce results mentioned in papers and perform reliable & accurate quantitative analysis. EvalAI also offers features such as custom evaluation protocols and phases, remote evaluation, evaluation inside environments, CLI support, portability, and faster evaluation.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse mode tape-based Automatic Differentiation, and neural network model building and training features accelerated by a JIT Compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without overheads. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.

catalyst

Catalyst is a C# Natural Language Processing library designed for speed, inspired by spaCy's design. It provides pre-trained models, support for training word and document embeddings, and flexible entity recognition models. The library is fast, modern, and pure-C#, supporting .NET standard 2.0. It is cross-platform, running on Windows, Linux, macOS, and ARM. Catalyst offers non-destructive tokenization, named entity recognition, part-of-speech tagging, language detection, and efficient binary serialization. It includes pre-built models for language packages and lemmatization. Users can store and load models using streams. Getting started with Catalyst involves installing its NuGet Package and setting the storage to use the online repository. The library supports lazy loading of models from disk or online. Users can take advantage of C# lazy evaluation and native multi-threading support to process documents in parallel. Training a new FastText word2vec embedding model is straightforward, and Catalyst also provides algorithms for fast embedding search and dimensionality reduction.

superagentx

SuperAgentX is a lightweight open-source AI framework designed for multi-agent applications with Artificial General Intelligence (AGI) capabilities. It offers goal-oriented multi-agents with retry mechanisms, easy deployment through WebSocket, RESTful API, and IO console interfaces, streamlined architecture with no major dependencies, contextual memory using SQL + Vector databases, flexible LLM configuration supporting various Gen AI models, and extendable handlers for integration with diverse APIs and data sources. It aims to accelerate the development of AGI by providing a powerful platform for building autonomous AI agents capable of executing complex tasks with minimal human intervention.

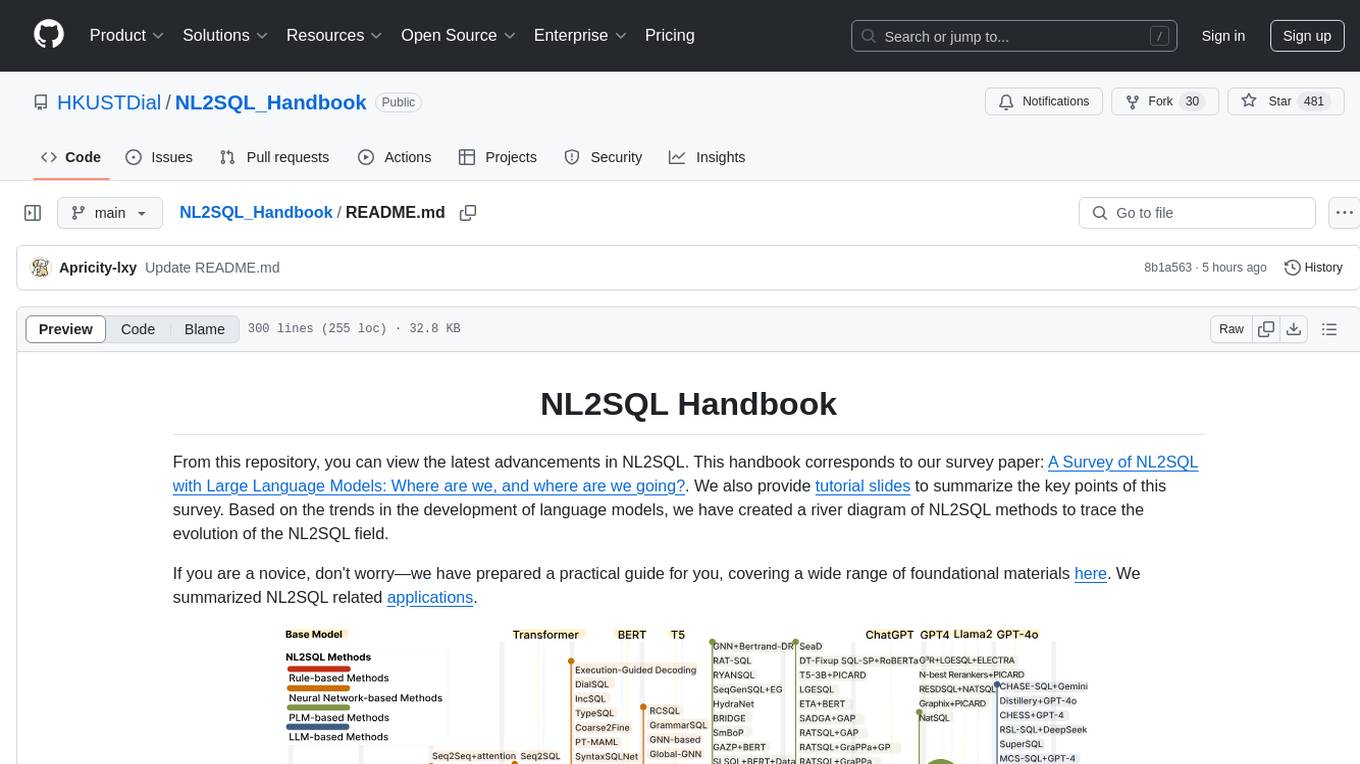

NL2SQL_Handbook

NL2SQL Handbook provides a comprehensive overview of Natural Language to SQL (NL2SQL) advancements, including survey papers, tutorial slides, and a river diagram of NL2SQL methods. It covers the evolution of NL2SQL solutions, module-based methods, benchmark development, and future directions. The repository also offers practical guides for beginners, access to high-performance language models, and evaluation metrics for NL2SQL models.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents that enables the creation of LLM-based agents from graphs and facilitates the customized and automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms to enhance agent performance and swarm efficiency. Users can quickly run predefined swarms or utilize tools like the file analyzer. GPTSwarm supports local LM inference via LM Studio, allowing users to run with a local LLM model. The framework has been accepted by ICML2024 and offers advanced features for experimentation and customization.

Awesome-LLM-Ensemble

Awesome-LLM-Ensemble is a collection of papers on LLM Ensemble, focusing on the comprehensive use of multiple large language models to benefit from their individual strengths. It provides a systematic review of recent developments in LLM Ensemble, including taxonomy, methods for ensemble before, during, and after inference, benchmarks, applications, and related surveys.

ExplainableAI.jl

ExplainableAI.jl is a Julia package that implements interpretability methods for black-box classifiers, focusing on local explanations and attribution maps in input space. The package requires models to be differentiable with Zygote.jl. It is similar to Captum and Zennit for PyTorch and iNNvestigate for Keras models. Users can analyze and visualize explanations for model predictions, with support for different XAI methods and customization. The package aims to provide transparency and insights into model decision-making processes, making it a valuable tool for understanding and validating machine learning models.

dom-to-semantic-markdown

DOM to Semantic Markdown is a tool that converts HTML DOM to Semantic Markdown for use in Large Language Models (LLMs). It maximizes semantic information, token efficiency, and preserves metadata to enhance LLMs' processing capabilities. The tool captures rich web content structure, including semantic tags, image metadata, table structures, and link destinations. It offers customizable conversion options and supports both browser and Node.js environments.

superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focusing on converting complex data into vector embeddings for RAG, Search, RecSys, and Analytics stack integration. It enables custom model performance in machine learning with pre-trained model convenience. The tool allows users to build multimodal vectors, define weights at query time, and avoid postprocessing & rerank requirements. Users can explore the computational model through simple scripts and python notebooks, with a future release planned for production usage with built-in data infra and vector database integrations.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

rllm

rLLM (relationLLM) is a PyTorch library for Relational Table Learning (RTL) with LLMs. It breaks down state-of-the-art GNNs, LLMs, and TNNs into standardized modules and facilitates novel model building in a 'combine, align, and co-train' way using these modules. The library is LLM-friendly, processes various graphs as multiple tables linked by foreign keys, introduces new relational table datasets, and is supported by students and teachers from Shanghai Jiao Tong University and Tsinghua University.

DriveLM

DriveLM is a multimodal AI model that enables autonomous driving by combining computer vision and natural language processing. It is designed to understand and respond to complex driving scenarios using visual and textual information. DriveLM can perform various tasks related to driving, such as object detection, lane keeping, and decision-making. It is trained on a massive dataset of images and text, which allows it to learn the relationships between visual cues and driving actions. DriveLM is a powerful tool that can help to improve the safety and efficiency of autonomous vehicles.

MarkLLM

MarkLLM is an open-source toolkit designed for watermarking technologies within large language models (LLMs). It simplifies access, understanding, and assessment of watermarking technologies, supporting various algorithms, visualization tools, and evaluation modules. The toolkit aids researchers and the community in ensuring the authenticity and origin of machine-generated text.

For similar tasks

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

cheat-sheet-pdf

The Cheat-Sheet Collection for DevOps, Engineers, IT professionals, and more is a curated list of cheat sheets for various tools and technologies commonly used in the software development and IT industry. It includes cheat sheets for Nginx, Docker, Ansible, Python, Go (Golang), Git, Regular Expressions (Regex), PowerShell, VIM, Jenkins, CI/CD, Kubernetes, Linux, Redis, Slack, Puppet, Google Cloud Developer, AI, Neural Networks, Machine Learning, Deep Learning & Data Science, PostgreSQL, Ajax, AWS, Infrastructure as Code (IaC), System Design, and Cyber Security.

generative-ai-on-aws

Generative AI on AWS by O'Reilly Media provides a comprehensive guide on leveraging generative AI models on the AWS platform. The book covers various topics such as generative AI use cases, prompt engineering, large-language models, fine-tuning techniques, optimization, deployment, and more. Authors Chris Fregly, Antje Barth, and Shelbee Eigenbrode offer insights into cutting-edge AI technologies and practical applications in the field. The book is a valuable resource for data scientists, AI enthusiasts, and professionals looking to explore generative AI capabilities on AWS.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

opencharacter

OpenCharacter is an open-source tool that allows users to create and run characters locally with local models or use the hosted version. The stack includes Next.js for frontend, TailwindCSS for styling, Drizzle ORM for database access, NextAuth for authentication, Cloudflare D1 for serverless databases, Cloudflare Pages for hosting, and ShadcnUI as the component library. Users can integrate OpenCharacter with OpenRouter by configuring the OpenRouter API key. The tool is fully scalable, composable, and cost-effective, with powerful tools like Wrangler for database management and migrations. No environment variables are needed, making it easy to use and deploy.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.