vectordb-recipes

High quality resources & applications for LLMs, multi-modal models and VectorDBs

Stars: 845

This repository contains examples, applications, starter code, & tutorials to help you kickstart your GenAI projects.

- These are built using LanceDB, a free, open-source, serverless vectorDB that **requires no setup**.

- It **integrates with the Python data ecosystem** so you can simply start using these in your existing data pipelines in pandas, arrow, pydantic etc.

- LanceDB has a **native TypeScript SDK** with which you can **run vector search** in serverless functions!

This repository is divided into 3 sections:

- Examples - Get right into the code with minimal introduction, aimed at getting you from an idea to PoC within minutes!

- Applications - Ready to use Python and web apps using applied LLMs, VectorDB and GenAI tools

- Tutorials - A curated list of tutorials, blogs, Colabs and courses to get you started with GenAI in greater depth.

README:

Dive into building GenAI applications! This repository contains examples, applications, starter code, & tutorials to help you kickstart your GenAI projects.

- These are built using LanceDB, a free, open-source, serverless vectorDB that requires no setup.

- It integrates with the Python data ecosystem so you can simply start using these in your existing data pipelines in pandas, arrow, pydantic etc.

- LanceDB has a native TypeScript SDK with which you can run vector search in serverless functions!

Join our community for support - Discord • Twitter

This repository is divided into 2 sections:

- Examples - Get right into the code with minimal introduction, aimed at getting you from an idea to PoC within minutes!

- Applications - Ready to use Python and web apps using applied LLMs, VectorDB and GenAI tools

The following examples are organized into different tables to make similar types of examples easily accessible.

- Build from Scratch - Step-by-step guides to create AI applications from scratch.

- Multimodal - Build apps that process and search across both text and images.

- RAG - Combine document retrieval with LLM-powered responses.

- Vector Search - Learn to efficiently find relevant documents using vector-based search.

- Chatbot - Create AI chatbots that fetch information and generate intelligent replies.

- Evaluation - Measure the quality and accuracy of AI-generated answers.

- AI Agents - Build LLM-driven applications where multiple agents collaborate and interact.

- Recommender Systems - Develop AI-powered recommendation systems for personalized suggestions.

- Concepts - Tutorials and explanations of key techniques used in AI applications.

Stay up to date with the latest projects, tools, and improvements added to the repository.

Start with the basics! These examples guide you through creating AI applications from the ground up using LanceDB for efficient document retrieval and search.

| Build from Scratch | Interactive Notebook & Scripts |
|---|---|
| Build RAG from Scratch | |
| Local RAG from Scratch with Llama3 | |
| Multi-Head RAG from Scratch | |
| Fintech AI Agent from Scratch | |

Search across different types of data (text, images, and more). Build powerful search applications that work with diverse inputs.

| Multimodal | Interactive Notebook & Scripts | Blog |
|---|---|---|
| V-JEPA Video Search | | |
| Multimodal CLIP: DiffusionDB | | |
| Multimodal CLIP: Youtube videos | | |
| Cambrian-1: Vision centric exploration of images | | |
| Multimodal Jina CLIP-V2 : Food Search | | |
| Multimodal vector search: Voyage AI X LanceDB | | |

Generate responses by retrieving relevant documents before answering. This section covers different approaches to implementing RAG in your projects.
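
The retrieve-then-answer flow can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the repository: word overlap stands in for embedding search, and `retrieve`, `build_prompt`, `answer`, and the `llm` callable are hypothetical names. In a real project the retrieval step would query a vector store such as LanceDB.

```python
def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return [word.strip(".,!?").lower() for word in text.split()]


def retrieve(question, documents, k=1):
    """Rank documents by shared words with the question (toy stand-in for embedding search)."""
    question_words = set(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & set(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, context_docs):
    """Put the retrieved documents in front of the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def answer(question, documents, llm):
    """Retrieve first, then pass the grounded prompt to any callable LLM."""
    return llm(build_prompt(question, retrieve(question, documents)))


# Made-up documents for illustration.
DOCS = [
    "LanceDB is a serverless vector database.",
    "Pandas is a library for tabular data.",
]
```

Passing `llm=lambda prompt: prompt` returns the assembled prompt itself, which is a convenient way to inspect what the model would see.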

Find relevant documents quickly! These projects show how to use vector-based search techniques to make AI-powered searches faster and smarter.
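
At its core, vector search ranks stored embeddings by their similarity to a query embedding. The sketch below is a brute-force version in plain Python, assuming cosine similarity and made-up 3-dimensional vectors; `cosine_similarity`, `top_k`, and `ROWS` are hypothetical names. A vector database typically replaces the linear scan with an index.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def top_k(query, rows, k=2):
    """Return the k rows whose "vector" is most similar to the query."""
    scored = [(cosine_similarity(query, row["vector"]), row) for row in rows]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [row for _, row in scored[:k]]


# Hypothetical embeddings, invented for illustration.
ROWS = [
    {"text": "how to bake bread", "vector": [0.9, 0.1, 0.0]},
    {"text": "sourdough starter tips", "vector": [0.8, 0.2, 0.1]},
    {"text": "tuning a guitar", "vector": [0.0, 0.1, 0.9]},
]

if __name__ == "__main__":
    for row in top_k([1.0, 0.0, 0.0], ROWS):
        print(row["text"])
```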

Create chatbots that understand user queries and fetch relevant responses using LanceDB’s vector search capabilities.

These projects provide tools to compare AI-generated responses against reference data and fine-tune accuracy.

| Evaluation | Interactive Notebook & Scripts | Blog |
|---|---|---|
| Monitoring and Tracing RAG using HoneyHive | | |
| Evaluating RAG with RAGAs | | |
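
Comparing a generated answer against a reference can start with something as simple as a token-overlap score. The sketch below is a generic token-level F1 in plain Python, not the metric used by RAGAs or HoneyHive; `token_f1` is a hypothetical helper name.

```python
from collections import Counter


def token_f1(prediction, reference):
    """Token-level F1 between a generated answer and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty answers match; one empty answer does not.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

A score like this ignores word order and meaning, which is one reason evaluation projects often add LLM-based or embedding-based metrics on top.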

Build applications where multiple AI agents interact to complete tasks efficiently. These projects show how agents can collaborate, exchange data, and automate workflows.

Personalized AI recommendations! These projects help you build recommendation engines that suggest content based on user preferences.

| Recommender Systems | Interactive Notebook & Scripts | Blog |
|---|---|---|
| Movie Recommender | | |
| Product Recommender | | |
| Arxiv paper recommender | | |
| Music Recommender | | |
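
One common way to turn user preferences into suggestions is to average the vectors of items a user liked and rank the remaining items by similarity to that profile. The sketch below assumes this approach with made-up 2-dimensional item vectors; it is not taken from the listed projects, and `recommend` and `CATALOG` are hypothetical names.

```python
import math


def cosine(a, b):
    """Cosine similarity, returning 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(liked_titles, catalog, k=2):
    """Suggest the k unseen items closest to the average of the liked items."""
    if not liked_titles:
        return []
    liked_vectors = [catalog[title] for title in liked_titles]
    dims = len(liked_vectors[0])
    # The user profile is the element-wise mean of the liked item vectors.
    profile = [sum(vec[d] for vec in liked_vectors) / len(liked_vectors) for d in range(dims)]
    candidates = [title for title in catalog if title not in liked_titles]
    candidates.sort(key=lambda title: cosine(profile, catalog[title]), reverse=True)
    return candidates[:k]


# Hypothetical item embeddings, invented for illustration.
CATALOG = {
    "space opera": [0.9, 0.1],
    "alien invasion": [0.8, 0.3],
    "romantic comedy": [0.1, 0.9],
    "wedding drama": [0.2, 0.8],
}
```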

Learn the core ideas behind AI applications—including text chunking, retrieval strategies, and optimization techniques—to improve your understanding of vector search and AI pipelines.
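
Text chunking, for example, can be sketched as a sliding window over words. This is a minimal illustration assuming fixed-size word windows with overlap; `chunk_text` and its parameters are hypothetical, and real pipelines often split on sentences or tokens instead.

```python
def chunk_text(text, chunk_size=5, overlap=2):
    """Split text into windows of chunk_size words that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

Overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks, at the cost of storing some words twice.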

Ready-to-use AI applications built with LanceDB! Use these projects as-is, customize them, or integrate them into your own applications.

| Project Name | Description | Screenshot |
|---|---|---|
| Writing assistant | Writing assistant app built with LangChain.js and LanceDB. It gives real-time, relevant suggestions and facts based on your written text to help you with your writing. | |
| Sentence Auto-Complete | Sentence auto-complete app built with LangChain.js and LanceDB. It gives real-time, relevant auto-complete suggestions and facts based on your written text to help you with your writing. You can also upload your data source as a PDF file, and you can switch between GPT models to get faster results. | |
| Article Recommendation | Article Recommender: explore a vast dataset of articles with instant, context-aware suggestions. Leveraging advanced NLP, vector search, and customizable datasets, the app delivers real-time, precise article recommendations. Perfect for research, content curation, and staying informed. Unlock smarter insights with state-of-the-art technology in content retrieval and discovery! | |
| AI Powered Job Search | Transform your job search experience with this AI-driven application. Powered by LangChain.js, LanceDB, and advanced semantic search, it provides real-time, highly accurate job listings tailored to your preferences. Featuring customizable datasets and advanced filtering options (e.g., skills, location, job type, and salary range), this app ensures you find the right opportunities quickly and effortlessly. Best suited for job seekers, recruiters, career platforms, and custom job boards. | |
| AI Powered Multimodal meme search | An advanced AI-powered meme search engine that allows users to find memes using both text and image queries. By leveraging LanceDB as a high-performance vector database and Roboflow's CLIP model for embedding generation, the platform delivers fast and accurate meme retrieval. | |
| AI Powered Feedback search and analysis | An AI-powered employee feedback analysis platform designed to collect, store, analyze, and retrieve insightful employee feedback. This system leverages LanceDB for high-speed vector-based semantic search, React.js for an interactive UI, Node.js for backend processing, and LangChain.js with an Ambient Agent for intelligent analysis and actionable insights. | |
| Hierarchical Multi Agent | The AI-Powered Law Assistant is a Hierarchical Multi-Agent System leveraging LangGraph, LangChain, and LanceDB for efficient legal query processing. It features a Supervisor Agent that delegates tasks to specialized agents for IPC and NDPS laws, each with sub-agents for case retrieval and legal summarization. Using LanceDB, it stores and retrieves vectorized legal documents, enabling fast, structured, and context-aware responses for legal professionals, researchers, and law students. | |

| Project Name | Description | Screenshot |
|---|---|---|
| YOLOExplorer | Iterate on your YOLO / CV datasets using SQL, vector semantic search, and more within seconds. | |
| Website Chatbot (Deployable Vercel Template) | Create a chatbot from the sitemap of any website/docs of your choice. Built using the vectorDB serverless native JavaScript package. | |
| Advanced Chatbot with Parler TTS | This chatbot app uses LanceDB hybrid search, FTS, and a reranker method with the Parler TTS library. | |
| Multi-Modal Search Engine | Create a multimodal search engine app that searches images using either images or text. | |
| Evaluate RAG | A working Streamlit RAG app designed to demonstrate end-to-end, production-grade evaluation using 50+ scores and metrics, which include guards, software metrics, traditional metrics, and LLM-as-judge metrics. It uses a mixture of specialised deep learning models and LLM-as-judge models to do the evaluations. | |
| Multi-Agent Collaboration Chatbot | Multi-agent collaboration chatbot using LangGraph for a share-market use case, built with LanceDB and tools such as Polygon and Tavily. | |
| Multimodal Myntra Fashion Search Engine | This app uses OpenAI's CLIP to make a search engine that can understand and deal with both written words and pictures. | |
| Multilingual-RAG | Multilingual RAG with Cohere embeddings and support for 100+ languages. | |
| Music Recommender | Music recommendation system using audio feature extraction and vector similarity search. By utilizing LanceDB, PANNs for audio tagging, and Librosa for audio feature extraction, the system finds and recommends tracks with similar audio characteristics based on a query song. | |
| NoOCR | End-to-end solution for complex PDFs, powered by ColPali and LanceDB. | |

🌟 New! 🌟 Applied GenAI and VectorDB course on Udacity. Learn about GenAI and vectorDBs using LanceDB in the recently launched Udacity course.

If you're working on some cool applications that you'd like to add to this repo, please open a PR!

Alternative AI tools for vectordb-recipes

Similar Open Source Tools

Genkit

Genkit is an open-source framework for building full-stack AI-powered applications, used in production by Google's Firebase. It provides SDKs for JavaScript/TypeScript (Stable), Go (Beta), and Python (Alpha) with a unified interface for integrating AI models from providers like Google, OpenAI, Anthropic, and Ollama. Rapidly build chatbots, automations, and recommendation systems using streamlined APIs for multimodal content, structured outputs, tool calling, and agentic workflows. Genkit simplifies AI integration with an open-source SDK and unified APIs, and offers text and image generation, structured data generation, tool calling, prompt templating, persisted chat interfaces, AI workflows, and AI-powered data retrieval (RAG).

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides a unified API for generation, context-aware AI features, evaluation of AI workflows, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

azure-openai-samples

This repository provides resources to understand and utilize GPT (Generative Pre-trained Transformer) by Azure OpenAI. It includes sample solutions, use cases, and quick start guides. Users can explore various applications of GPT, such as chatbots, customer service, and content generation. The repository also offers Langchain, Semantic Kernel, and Prompt Flow samples, along with Serverless SQL GPT for natural language processing in Azure Synapse Analytics. The samples are based on GPT 3.5, with plans to update for GPT-4. Users are encouraged to contribute to keep the repository updated with the latest technologies and solutions.

obsidian-textgenerator-plugin

Text Generator is an open-source AI Assistant Tool that leverages Generative Artificial Intelligence to enhance knowledge creation and organization in Obsidian. It allows users to generate ideas, titles, summaries, outlines, and paragraphs based on their knowledge database, offering endless possibilities. The plugin is free and open source, compatible with Obsidian for a powerful Personal Knowledge Management system. It provides flexible prompts, template engine for repetitive tasks, community templates for shared use cases, and highly flexible configuration with services like Google Generative AI, OpenAI, and HuggingFace.

baserow

Baserow is a secure, open-source platform that allows users to build databases, applications, automations, and AI agents without writing any code. With enterprise-grade security compliance and both cloud and self-hosted deployment options, Baserow empowers teams to structure data, automate processes, create internal tools, and build custom dashboards. It features a spreadsheet database hybrid, AI Assistant for natural language database creation, GDPR, HIPAA, and SOC 2 Type II compliance, and seamless integration with existing tools. Baserow is API-first, extensible, and uses frameworks like Django, Vue.js, and PostgreSQL.

llmariner

LLMariner is an extensible open source platform built on Kubernetes to simplify the management of generative AI workloads. It enables efficient handling of training and inference data within clusters, with OpenAI-compatible APIs for seamless integration with a wide range of AI-driven applications.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

enthusiast

Enthusiast is a production-ready agentic AI framework for E-commerce, offering tools like Retrieval-Augmented Generation (RAG), vector search, and a workflow orchestrator. It helps in building AI-powered tools with customized agents for tasks like smart information search, customer support, content generation, and knowledge base automation. Enthusiast provides validation and evaluation components to ensure responses are grounded in actual data, reducing time, cost, and complexity in AI development.

learn-modern-ai-python

This repository is part of the Certified Agentic & Robotic AI Engineer program, covering the first quarter of the course work. It focuses on Modern AI Python Programming, emphasizing static typing for robust and scalable AI development. The course includes modules on Python fundamentals, object-oriented programming, advanced Python concepts, AI-assisted Python programming, web application basics with Python, and the future of Python in AI. Upon completion, students will be able to write proficient Modern Python code, apply OOP principles, implement asynchronous programming, utilize AI-powered tools, develop basic web applications, and understand the future directions of Python in AI.

goose

Codename Goose is an open-source, extensible AI agent designed to provide functionalities beyond code suggestions. Users can install, execute, edit, and test with any LLM. The tool aims to enhance the coding experience by offering advanced features and capabilities. Stay updated for the upcoming 1.0 release scheduled by the end of January 2025. Explore the v0.X documentation available on the project's GitHub pages.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

embedJs

EmbedJs is a NodeJS framework that simplifies RAG application development by efficiently processing unstructured data. It segments data, creates relevant embeddings, and stores them in a vector database for quick retrieval.

nocobase

NocoBase is an extensible AI-powered no-code platform that offers total control, infinite extensibility, and AI collaboration. It enables teams to adapt quickly and reduce costs without the need for years of development or wasted resources. With NocoBase, users can deploy the platform in minutes and have complete control over their projects. The platform is data model-driven, allowing for unlimited possibilities by decoupling UI and data structure. It integrates AI capabilities seamlessly into business systems, enabling roles such as translator, analyst, researcher, or assistant. NocoBase provides a simple and intuitive user experience with a 'what you see is what you get' approach. It is designed for extension through its plugin-based architecture, allowing users to customize and extend functionalities easily.

ServerlessLLM

ServerlessLLM is a fast, affordable, and easy-to-use library designed for multi-LLM serving, optimized for environments with limited GPU resources. It supports loading various leading LLM inference libraries, achieving fast load times, and reducing model switching overhead. The library facilitates easy deployment via Ray Cluster and Kubernetes, integrates with the OpenAI Query API, and is actively maintained by contributors.

llmesh

LLM Agentic Tool Mesh is a platform by HPE Athonet that democratizes Generative Artificial Intelligence (Gen AI) by enabling users to create tools and web applications using Gen AI with Low or No Coding. The platform simplifies the integration process, focuses on key user needs, and abstracts complex libraries into easy-to-understand services. It empowers both technical and non-technical teams to develop tools related to their expertise and provides orchestration capabilities through an agentic Reasoning Engine based on Large Language Models (LLMs) to ensure seamless tool integration and enhance organizational functionality and efficiency.

For similar tasks

imodels

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. _For interpretability in NLP, check out our new package: imodelsX_

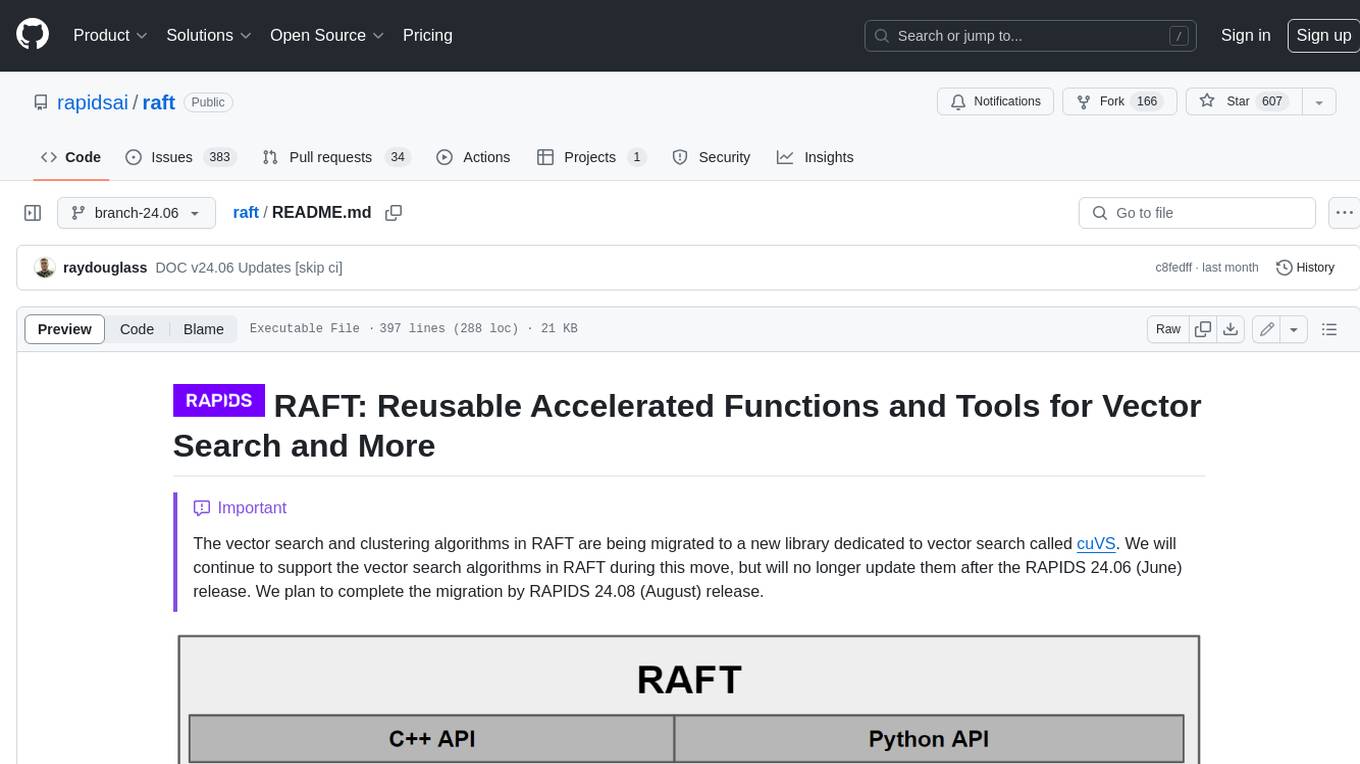

raft

RAFT (Reusable Accelerated Functions and Tools) is a C++ header-only template library with an optional shared library that contains fundamental widely-used algorithms and primitives for machine learning and information retrieval. The algorithms are CUDA-accelerated and form building blocks for more easily writing high performance applications.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference, and scalable model training/fine-tuning. Build, deploy, and manage any AI application without complex pipelines, extra infrastructure, or specialized vector databases (and without moving your data there) by integrating AI at your data's source:

- Generative AI, LLMs, RAG, vector search

- Standard machine learning use-cases (classification, segmentation, regression, forecasting, recommendation, etc.)

- Custom AI use-cases involving specialized models

- Even the most complex applications/workflows in which different models work together

SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (Vtubers), in its "N card" (NVIDIA GPU) version. It supports knowledge-base chat through fastgpt as part of a complete LLM stack ([fastgpt] + [one-api] + [Xinference]); replying to bilibili live-broadcast barrage (bullet comments) and greeting viewers who enter the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through Vtuber Studio; painting with stable-diffusion-webui, output to the OBS live broadcast room; NSFW detection for painted pictures with public-NSFW-y-distinguish; search and image search through duckduckgo (requires "magic Internet access", i.e. a proxy) and image search through Baidu (no proxy needed); an AI reply chat box and a playlist, each as an [html plug-in]; AI singing with Auto-Convert-Music; dancing, expression video playback, head-touching and gift-smashing actions; automatic dancing when singing starts and an automatic swing action that cycles during chat and singing; switching between multiple scenes and background music, with automatic day and night scene changes; and open-ended singing and painting, where the AI judges the content automatically.

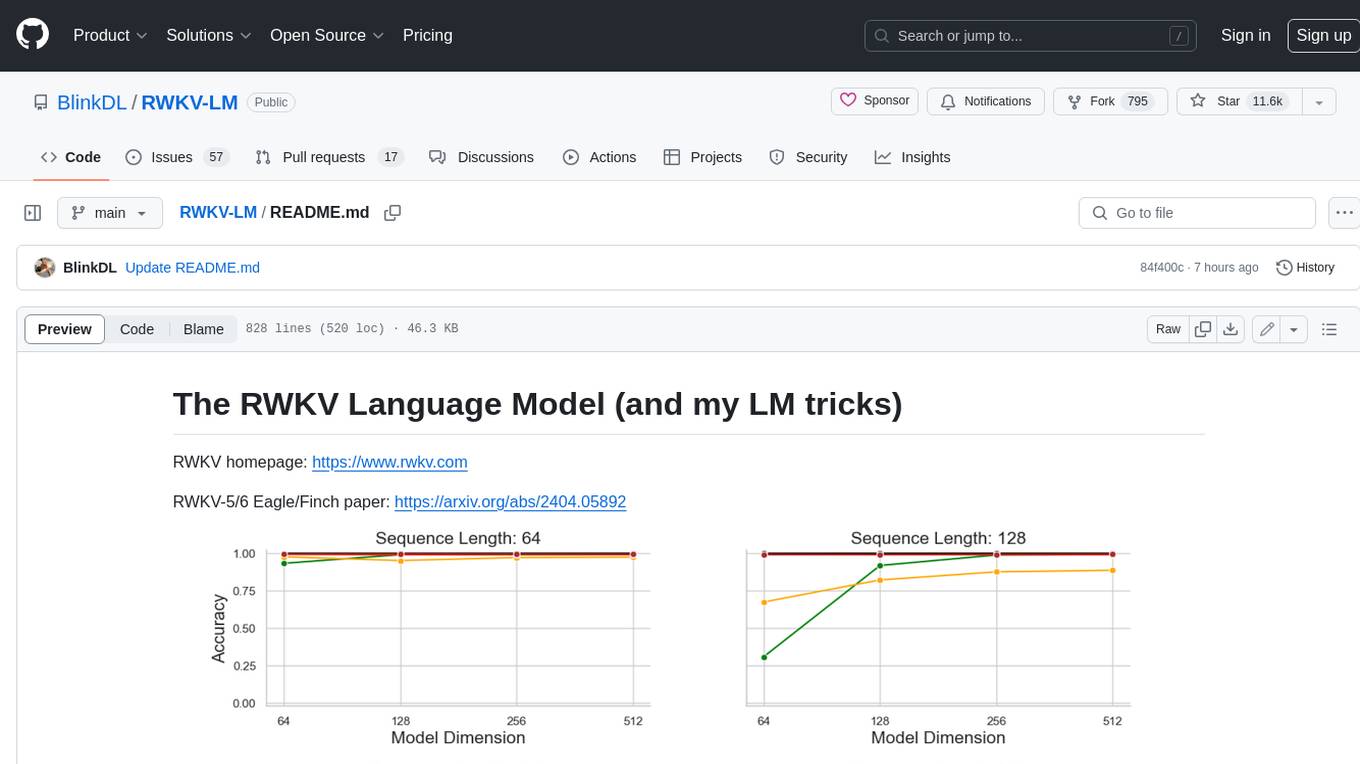

RWKV-LM

RWKV is an RNN with Transformer-level LLM performance, which can also be directly trained like a GPT transformer (parallelizable). And it's 100% attention-free. You only need the hidden state at position t to compute the state at position t+1. You can use the "GPT" mode to quickly compute the hidden state for the "RNN" mode. So it's combining the best of RNN and transformer - **great performance, fast inference, saves VRAM, fast training, "infinite" ctx_len, and free sentence embedding** (using the final hidden state).

LLMs-from-scratch

This repository contains the code for coding, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book Build a Large Language Model (From Scratch). In _Build a Large Language Model (From Scratch)_, you'll discover how LLMs work from the inside out. In this book, I'll guide you step by step through creating your own LLM, explaining each stage with clear text, diagrams, and examples. The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT.

Tutorial

The Bookworm·Puyu large model training camp aims to promote the implementation of large models in more industries and provide developers with a more efficient platform for learning the development and application of large models. Within two weeks, you will learn the entire process of fine-tuning, deploying, and evaluating large models.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.