ServerlessLLM

Serverless LLM Serving for Everyone.

Stars: 447

ServerlessLLM is a fast, affordable, and easy-to-use library designed for multi-LLM serving, optimized for environments with limited GPU resources. It supports loading various leading LLM inference libraries, achieving fast load times, and reducing model switching overhead. The library facilitates easy deployment via Ray Cluster and Kubernetes, integrates with the OpenAI Query API, and is actively maintained by contributors.

README:

| Documentation | Paper | Discord | WeChat |

ServerlessLLM (sllm, pronounced "slim") is an open-source serverless framework designed to make custom and elastic LLM deployment easy, fast, and affordable. As LLMs grow in size and complexity, deploying them on AI hardware has become increasingly costly and technically challenging, limiting custom LLM deployment to only a select few. ServerlessLLM solves these challenges with a full-stack, LLM-centric serverless system design, optimizing everything from checkpoint formats and inference runtimes to the storage layer and cluster scheduler.

Curious about how it works under the hood? Check out our System Walkthrough for a deep dive into the technical design—perfect if you're exploring your own research or building with ServerlessLLM.

- [03/25] We're excited to share that we'll be giving a ServerlessLLM tutorial at the SESAME workshop, co-located with ASPLOS/EuroSys 2025 in Rotterdam, Netherlands, on March 31. More info

- [11/24] We have added experimental support of fast checkpoint loading for AMD GPUs (ROCm) when using with vLLM, PyTorch and HuggingFace Accelerate. Please refer to the documentation for more details.

- [10/24] ServerlessLLM was invited to present at a global AI tech vision forum in Singapore.

- [10/24] We hosted the first ServerlessLLM developer meetup in Edinburgh, attracting over 50 attendees both offline and online. Together, we brainstormed many exciting new features to develop. If you have great ideas, we’d love for you to join us!

- [10/24] We made the first public release of ServerlessLLM. Check out the details of the release here.

- [09/24] ServerlessLLM now supports embedding-based RAG + LLM deployment. We’re preparing a blog and demo—stay tuned!

- [08/24] ServerlessLLM added support for vLLM.

- [07/24] We presented ServerlessLLM at Nvidia's headquarters.

- [06/24] ServerlessLLM officially went public.

ServerlessLLM is designed to support multiple LLMs in efficiently sharing limited AI hardware and dynamically switching between them on demand, which can increase hardware utilization and reduce the cost of LLM services. This multi-LLM scenario, commonly referred to as Serverless, is highly sought after by AI practitioners, as seen in solutions like Serverless Inference, Inference Endpoints, and Model Endpoints. However, these existing offerings often face performance overhead and scalability challenges, which ServerlessLLM effectively addresses through three key capabilities:

ServerlessLLM is Fast:

- Supports leading LLM inference libraries like vLLM and HuggingFace Transformers. Through vLLM, ServerlessLLM can support various types of AI hardware (summarized by vLLM at here)

- Achieves 5-10X faster loading speeds compared to Safetensors and the PyTorch Checkpoint Loader.

- Features an optimized model loading scheduler, offering 5-100X lower start-up latency than Ray Serve and KServe.

ServerlessLLM is Cost-Efficient:

- Allows multiple LLM models to share GPUs with minimal model switching overhead and supports seamless inference live migration.

- Maximizes the use of local storage on multi-GPU servers, reducing the need for expensive storage servers and excessive network bandwidth.

ServerlessLLM is Easy-to-Use:

- Simplifies deployment through Ray Cluster and Kubernetes via KubeRay.

- Supports seamless deployment of HuggingFace Transformers and custom LLM models.

- Supports NVIDIA and AMD GPUs

- Easily integrates with the OpenAI Query API.

- Install ServerlessLLM with pip or from source.

# On the head node

conda create -n sllm python=3.10 -y

conda activate sllm

pip install serverless-llm

# On a worker node

conda create -n sllm-worker python=3.10 -y

conda activate sllm-worker

pip install serverless-llm[worker]-

Start a local ServerlessLLM cluster using the Quick Start Guide.

-

Want to try fast checkpoint loading in your own code? Check out the ServerlessLLM Store Guide.

To install ServerlessLLM, please follow the steps outlined in our documentation. ServerlessLLM also offers Python APIs for loading and unloading checkpoints, as well as CLI tools to launch an LLM cluster. Both the CLI tools and APIs are demonstrated in the documentation.

Benchmark results for ServerlessLLM can be found here.

ServerlessLLM is maintained by a global team of over 10 developers, and this number is growing. If you're interested in learning more or getting involved, we invite you to join our community on Discord and WeChat. Share your ideas, ask questions, and contribute to the development of ServerlessLLM. For becoming a contributor, please refer to our Contributor Guide.

If you use ServerlessLLM for your research, please cite our paper:

@inproceedings{fu2024serverlessllm,

title={ServerlessLLM: Low-Latency Serverless Inference for Large Language Models},

author={Fu, Yao and Xue, Leyang and Huang, Yeqi and Brabete, Andrei-Octavian and Ustiugov, Dmitrii and Patel, Yuvraj and Mai, Luo},

booktitle={18th USENIX Symposium on Operating Systems Design and Implementation (OSDI 24)},

pages={135--153},

year={2024}

}For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ServerlessLLM

Similar Open Source Tools

ServerlessLLM

ServerlessLLM is a fast, affordable, and easy-to-use library designed for multi-LLM serving, optimized for environments with limited GPU resources. It supports loading various leading LLM inference libraries, achieving fast load times, and reducing model switching overhead. The library facilitates easy deployment via Ray Cluster and Kubernetes, integrates with the OpenAI Query API, and is actively maintained by contributors.

hopsworks

Hopsworks is a data platform for ML with a Python-centric Feature Store and MLOps capabilities. It provides collaboration for ML teams, offering a secure, governed platform for developing, managing, and sharing ML assets. Hopsworks supports project-based multi-tenancy, team collaboration, development tools for Data Science, and is available on any platform including managed cloud services and on-premise installations. The platform enables end-to-end responsibility from raw data to managed features and models, supports versioning, lineage, and provenance, and facilitates the complete MLOps life cycle.

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

langwatch

LangWatch is a monitoring and analytics platform designed to track, visualize, and analyze interactions with Large Language Models (LLMs). It offers real-time telemetry to optimize LLM cost and latency, a user-friendly interface for deep insights into LLM behavior, user analytics for engagement metrics, detailed debugging capabilities, and guardrails to monitor LLM outputs for issues like PII leaks and toxic language. The platform supports OpenAI and LangChain integrations, simplifying the process of tracing LLM calls and generating API keys for usage. LangWatch also provides documentation for easy integration and self-hosting options for interested users.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

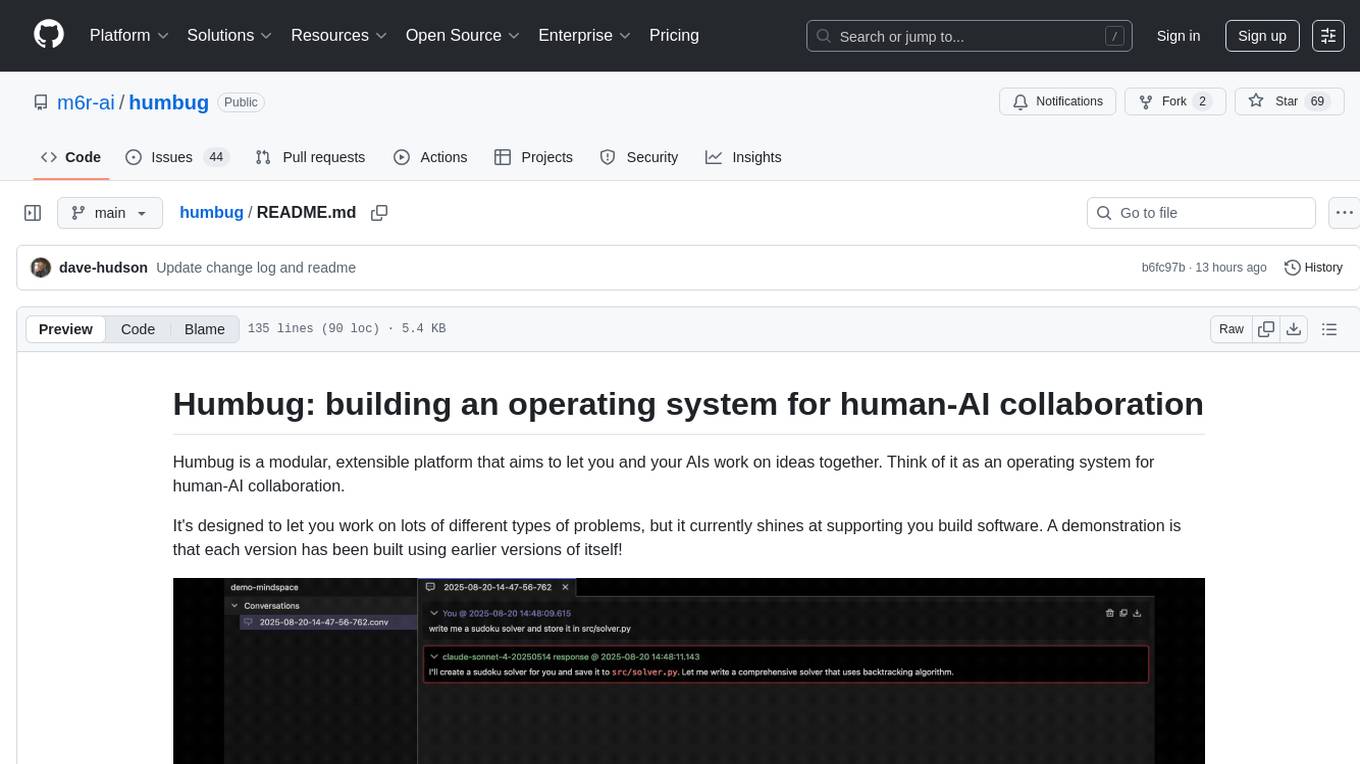

humbug

Humbug is a modular platform designed for human-AI collaboration, providing a project-centric workspace with multiple large language models, structured context engineering, and powerful, pluggable tools. It allows users to work on various problems, particularly in software development, with the flexibility to add new AI backends and tools. Humbug is open-source, OS-agnostic, and minimal in dependencies, offering a unified experience on Windows, macOS, and Linux.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

nextpy

Nextpy is a cutting-edge software development framework optimized for AI-based code generation. It provides guardrails for defining AI system boundaries, structured outputs for prompt engineering, a powerful prompt engine for efficient processing, better AI generations with precise output control, modularity for multiplatform and extensible usage, developer-first approach for transferable knowledge, and containerized & scalable deployment options. It offers 4-10x faster performance compared to Streamlit apps, with a focus on cooperation within the open-source community and integration of key components from various projects.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

AgentUp

AgentUp is an active development tool that provides a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, configuration-driven architecture, extensible ecosystem for customizations, agent-to-agent discovery, asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types like reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

AppFlowy

AppFlowy.IO is an open-source alternative to Notion, providing users with control over their data and customizations. It aims to offer functionality, data security, and cross-platform native experience to individuals, as well as building blocks and collaboration infra services to enterprises and hackers. The tool is built with Flutter and Rust, supporting multiple platforms and emphasizing long-term maintainability. AppFlowy prioritizes data privacy, reliable native experience, and community-driven extensibility, aiming to democratize the creation of complex workplace management tools.

miniLLMFlow

Mini LLM Flow is a 100-line minimalist LLM framework designed for agents, task decomposition, RAG, etc. It aims to be the framework used by LLMs, focusing on high-level programming paradigms while stripping away low-level implementation details. It serves as a learning resource and allows LLMs to design, build, and maintain projects themselves.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.