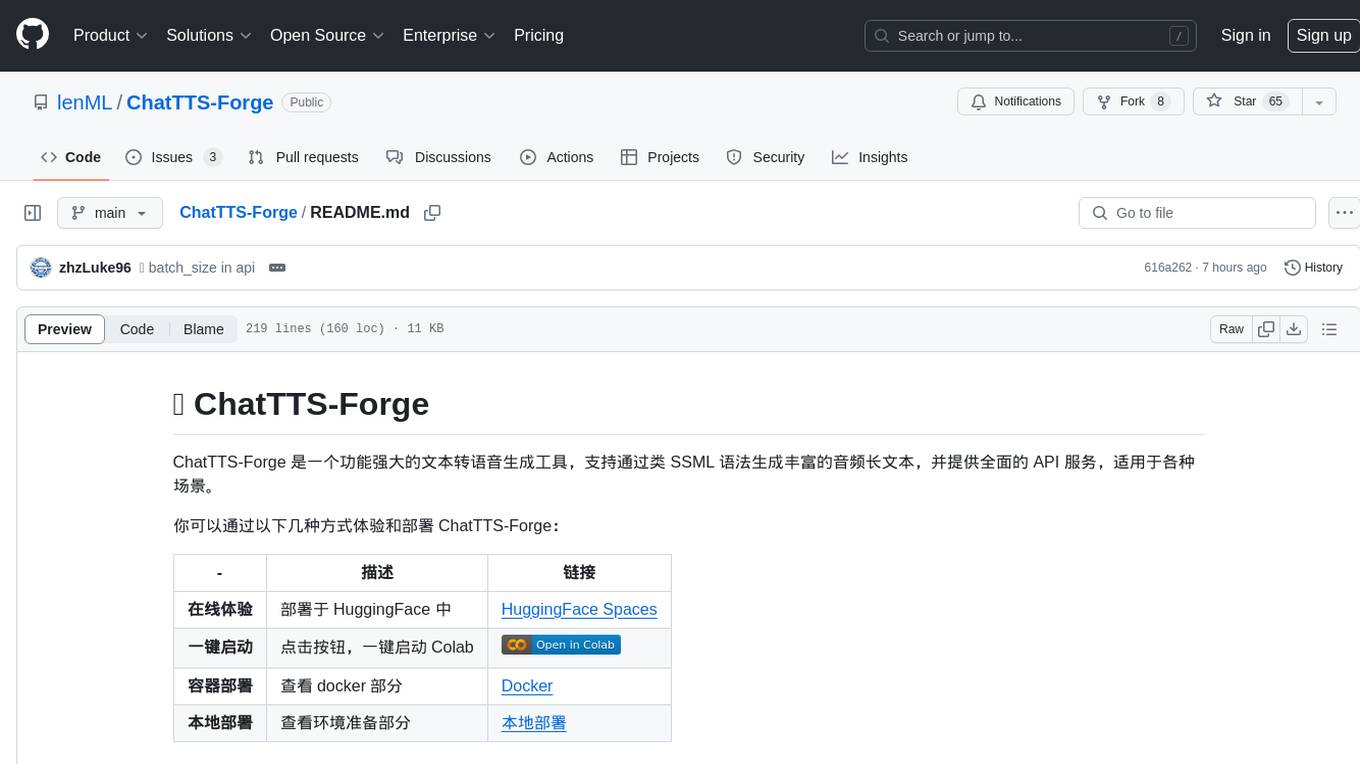

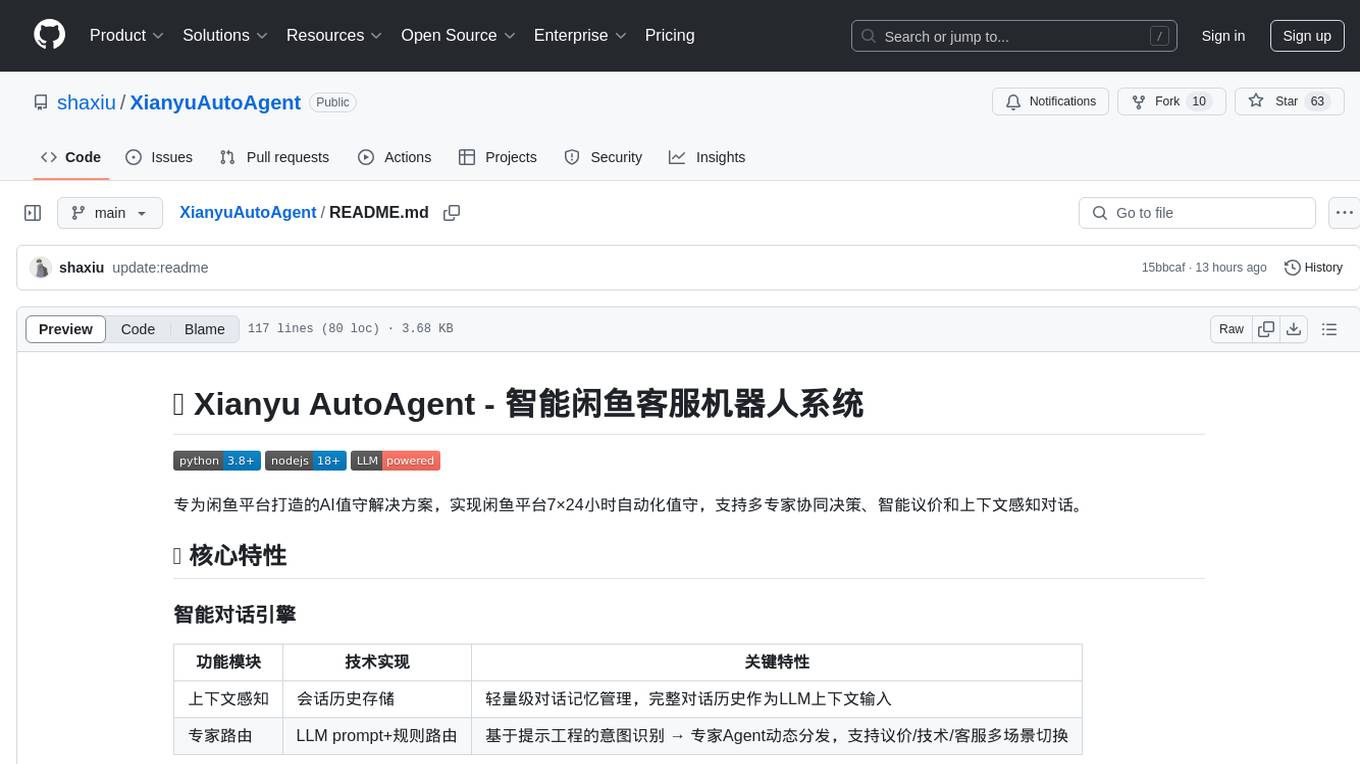

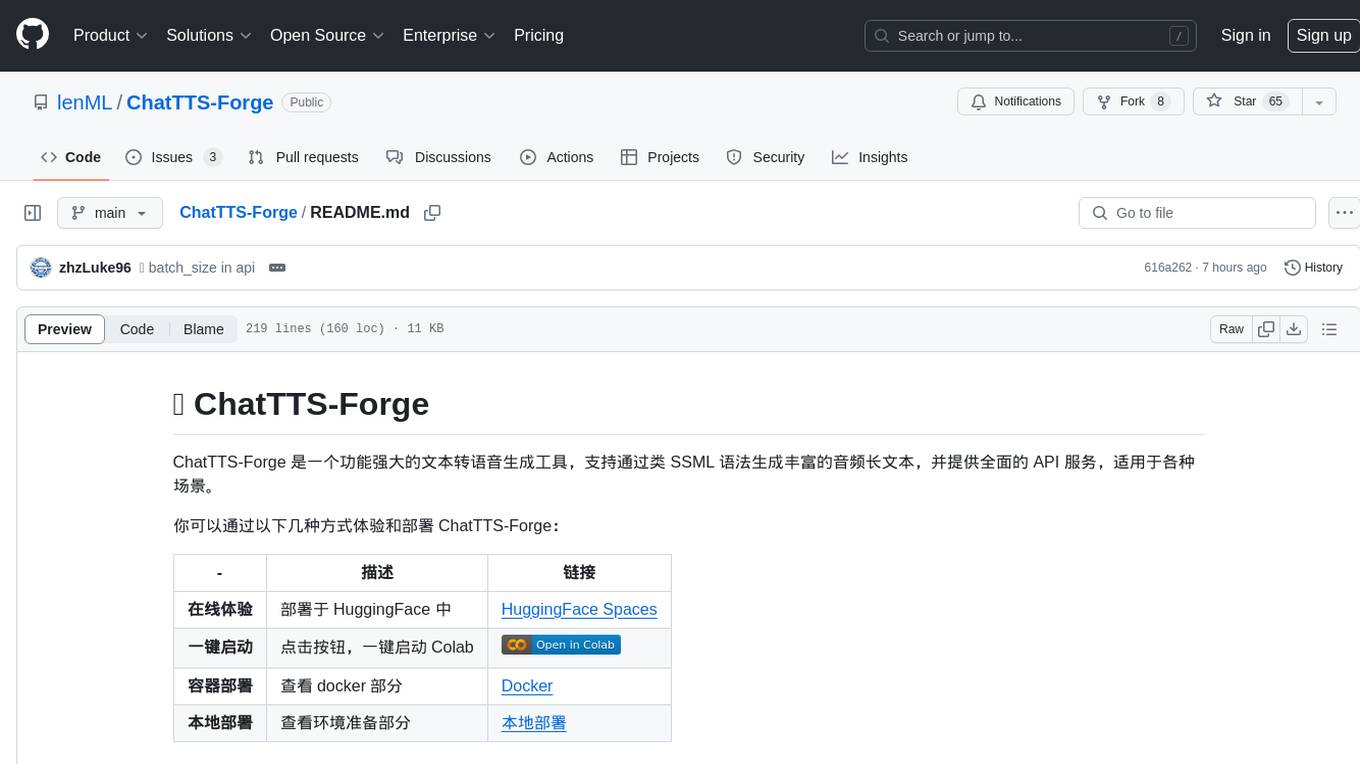

ChatTTS-Forge

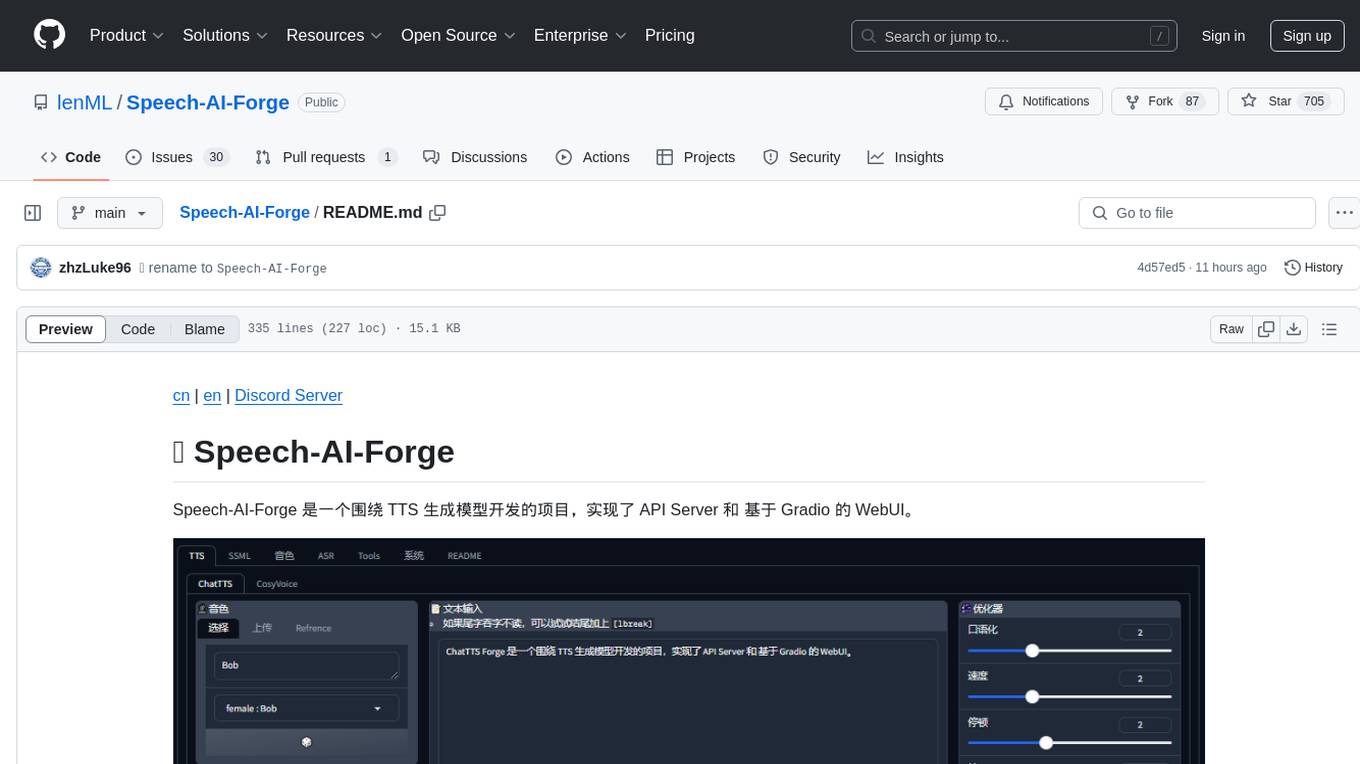

🍦 ChatTTS-Forge is a project built around TTS generation models that implements an API server and a Gradio-based WebUI.

Stars: 692

ChatTTS-Forge is a text-to-speech generation tool that can generate rich, long-form audio from text using an SSML-like syntax and provides comprehensive API services for a range of scenarios. Its features include batch generation, very long text support, style prompt injection, a full API service, a user-friendly debugging GUI, OpenAI-style and Google-style APIs, speaker management, style management, a standalone refine API, text normalization optimized for ChatTTS, and automatic detection and handling of markdown-formatted text. It can be tried online through HuggingFace Spaces, launched with one click on Colab, deployed with containers, or deployed locally after cloning the project, preparing the models, and installing the required dependencies.

README:

cn | en | Discord Server

ChatTTS-Forge is a project built around TTS generation models. It implements an API Server and a Gradio-based WebUI.

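You can try and deploy ChatTTS-Forge in the following ways:

| - | Description | Link |
|---|---|---|
| Online demo | Deployed on HuggingFace | HuggingFace Spaces |
| One-click launch | Click the button to launch in Colab | |
| Container deployment | See the Docker section | Docker |
| Local deployment | See the environment setup section | Local deployment |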

First, make sure the required dependencies are installed correctly.

Launch:

`python webui.py`

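- TTS: features of the TTS models
  - Speaker Switch: switch between voices
    - Built-in voices: several built-in voices are available (27 ChatTTS / 7 CosyVoice voices + 1 reference voice)
    - Voice upload: upload a custom voice file and run inference with it in real time
    - Reference voice: upload reference audio/text and run TTS inference directly from the reference audio
  - Style: several built-in style controls
  - Long Text: inference on very long text, with automatic text splitting
    - Batch Size: configurable batch size; long-text inference is faster for models that support batch inference
  - Refiner: supports the native ChatTTS text refiner, including on text of unlimited length
  - Splitter: adjust the splitter configuration to control the splitter eos and the split threshold
  - Adjuster: adjust speed/pitch/volume, plus a handy loudness equalization feature
  - Voice enhancement: enhance TTS output with the Enhancer model to further improve quality
  - Generation history: keeps the three most recent results for easy comparison
  - Multi-model: inference with multiple TTS models, including ChatTTS/CosyVoice/FishSpeech/GPT-SoVITS
- SSML: an advanced TTS synthesis control tool with an XML-like syntax
  - Splitter: finer control over how long text is split
  - PodCast: a podcast tool that helps you create long-form, multi-speaker audio from a podcast script
  - From subtitle: create an SSML script from a subtitle file
- Voices (speakers):
  - Builder: create voices; currently a voice can be created from a ChatTTS seed, or a reference voice can be created from Reference Audio
  - Test Voice: upload a voice file and run a quick test of the voice
  - ChatTTS: debugging tools for ChatTTS voices
    - Random draw: create random voices from random seeds
    - Blend: blend voices created from different seeds
- ASR:
  - Whisper: ASR with the whisper model
  - SenseVoice: WIP
- Tools: some useful tools
  - Post Process: a post-processing tool for trimming, adjusting, and enhancing audio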

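In some cases you do not need the webui, or you need higher API throughput. You can then use this script to start a standalone API service.

Launch:

`python launch.py`

After launch, open http://localhost:7870/docs to see which API endpoints are enabled.

More help:

- Run `python launch.py -h` to see the script arguments
- See the API documentation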
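The endpoint list shown at `/docs` can also be read programmatically. The sketch below assumes the server exposes its OpenAPI schema at `/openapi.json` next to `/docs`, which is common for this kind of docs page but is not stated here; the helper names are illustrative only.

```python
import json
from urllib.request import urlopen

BASE_URL = "http://localhost:7870"  # address used in the text above


def list_endpoints(schema: dict) -> list[str]:
    """Return 'METHOD /path' strings from an OpenAPI schema dict."""
    http_methods = {"get", "post", "put", "patch", "delete"}
    endpoints = []
    for path, operations in sorted(schema.get("paths", {}).items()):
        for method in sorted(operations):
            # Skip non-method keys such as "parameters".
            if method in http_methods:
                endpoints.append(f"{method.upper()} {path}")
    return endpoints


def fetch_schema(base_url: str = BASE_URL) -> dict:
    """Download the schema. Assumption: it is served at /openapi.json."""
    with urlopen(f"{base_url}/openapi.json", timeout=10) as resp:
        return json.load(resp)


# With the API server running:
#     for line in list_endpoints(fetch_schema()):
#         print(line)
```

Keeping the parsing separate from the download makes it possible to inspect a saved schema file without a running server.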

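Through the /v1/xtts_v2 family of APIs, you can easily connect ChatTTS-Forge to SillyTavern.

Here is a short configuration guide:

- Open the extensions panel
- Open the TTS plugin configuration section
- Switch TTS Provider to XTTSv2
- Check Enabled
- Select/configure Voice
- [Important] Set Provider Endpoint to http://localhost:7870/v1/xtts_v2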

input

<speak version="0.1">

<voice spk="Bob" seed="42" style="narration-relaxed">

下面是一个 ChatTTS 用于合成多角色多情感的有声书示例[lbreak]

</voice>

<voice spk="Bob" seed="42" style="narration-relaxed">

黛玉冷笑道:[lbreak]

</voice>

<voice spk="female2" seed="42" style="angry">

我说呢 [uv_break] ,亏了绊住,不然,早就飞起来了[lbreak]

</voice>

<voice spk="Bob" seed="42" style="narration-relaxed">

宝玉道:[lbreak]

</voice>

<voice spk="Alice" seed="42" style="unfriendly">

“只许和你玩 [uv_break] ,替你解闷。不过偶然到他那里,就说这些闲话。”[lbreak]

</voice>

<voice spk="female2" seed="42" style="angry">

“好没意思的话![uv_break] 去不去,关我什么事儿? 又没叫你替我解闷儿 [uv_break],还许你不理我呢” [lbreak]

</voice>

<voice spk="Bob" seed="42" style="narration-relaxed">

说着,便赌气回房去了 [lbreak]

</voice>

</speak>

output
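Scripts like the SSML input above are tedious to write by hand for long dialogues. The following is a minimal sketch that generates the same `<speak>`/`<voice>` structure with Python's standard library. The helper name `build_ssml` and its tuple format are illustrative, not part of ChatTTS-Forge; only the element and attribute names are taken from the example.

```python
import xml.etree.ElementTree as ET


def build_ssml(lines, seed=42, version="0.1"):
    """Build a <speak> document from (spk, style, text) tuples."""
    speak = ET.Element("speak", {"version": version})
    for spk, style, text in lines:
        voice = ET.SubElement(
            speak, "voice", {"spk": spk, "seed": str(seed), "style": style}
        )
        # ElementTree escapes &, < and > in the text when serializing.
        voice.text = text
    return ET.tostring(speak, encoding="unicode")


script = [
    ("Bob", "narration-relaxed", "黛玉冷笑道:[lbreak]"),
    ("female2", "angry", "我说呢 [uv_break] ,亏了绊住,不然,早就飞起来了[lbreak]"),
]
ssml = build_ssml(script)
```

Building the tree instead of concatenating strings keeps the output well-formed even when the spoken text contains characters that are special in XML.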

input

中华美食,作为世界饮食文化的瑰宝,以其丰富的种类、独特的风味和精湛的烹饪技艺而闻名于世。中国地大物博,各地区的饮食习惯和烹饪方法各具特色,形成了独树一帜的美食体系。从北方的京鲁菜、东北菜,到南方的粤菜、闽菜,无不展现出中华美食的多样性。

在中华美食的世界里,五味调和,色香味俱全。无论是辣味浓郁的川菜,还是清淡鲜美的淮扬菜,都能够满足不同人的口味需求。除了味道上的独特,中华美食还注重色彩的搭配和形态的美感,让每一道菜品不仅是味觉的享受,更是一场视觉的盛宴。

中华美食不仅仅是食物,更是一种文化的传承。每一道菜背后都有着深厚的历史背景和文化故事。比如,北京的烤鸭,代表着皇家气派;而西安的羊肉泡馍,则体现了浓郁的地方风情。中华美食的精髓在于它追求的“天人合一”,讲究食材的自然性和烹饪过程中的和谐。

总之,中华美食博大精深,其丰富的口感和多样的烹饪技艺,构成了一个充满魅力和无限可能的美食世界。无论你来自哪里,都会被这独特的美食文化所吸引和感动。

output
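Long inputs like the one above are split automatically before inference. The sketch below shows one simple way such splitting can work: cut at sentence-ending punctuation, then pack sentences greedily into chunks below a length threshold. It is an illustration only and is not the splitter ChatTTS-Forge ships; the function name, the punctuation set, and the default threshold are all assumptions.

```python
import re

# Split after Chinese or ASCII sentence-ending punctuation (assumed set).
SENTENCE_BOUNDARY = re.compile(r"(?<=[。!?!?])")


def split_text(text, threshold=100):
    """Pack whole sentences into chunks of at most `threshold` characters.

    A single sentence longer than the threshold is kept as its own chunk.
    """
    sentences = [s for s in SENTENCE_BOUNDARY.split(text) if s.strip()]
    chunks, current = [], ""
    for sentence in sentences:
        if current and len(current) + len(sentence) > threshold:
            chunks.append(current)
            current = ""
        current += sentence
    if current:
        chunks.append(current)
    return chunks
```

Chunks produced this way can then be sent to the model in batches, which is where the batch size setting mentioned for long text would apply.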

WIP (in development)

Download models: `python -m scripts.download_models --source modelscope`

This script downloads the chat-tts and enhancer models. To download other models, see the model download instructions further down.

- webui: `docker-compose -f ./docker-compose.webui.yml up -d`
- api: `docker-compose -f ./docker-compose.api.yml up -d`

Environment variable configuration:

- webui: .env.webui
- api: .env.api

| Model | Streaming level | Voice cloning | Training | Prompt | Status |
|---|---|---|---|---|---|
| ChatTTS | token-level | ✅ | ❓ | ❓ | ✅ |
| FishSpeech | sentence-level | ✅ | ❓ | ❓ | ✅ (SFT version in development 🚧) |
| CosyVoice | sentence-level | ✅ | ❓ | ✅ | ✅ |
| GPT-SoVITS | sentence-level | ✅ | ❓ | ❓ | 🚧 |

| Model | Streaming recognition | Training | Multilingual | Status |
|---|---|---|---|---|
| Whisper | ✅ | ❓ | ✅ | ✅ |
| SenseVoice | ✅ | ❓ | ✅ | 🚧 |

| Model | Status |
|---|---|
| OpenVoice | ✅ |
| RVC | 🚧 |

| Model | Status |
|---|---|
| ResembleEnhance | ✅ |

Because forge is developed mainly for API use, automatic model download is not implemented yet. Models must be downloaded by running the download scripts manually; the scripts are in the ./scripts directory.

Some example invocations:

- TTS
  - Download ChatTTS: `python -m scripts.dl_chattts --source huggingface`
  - Download FishSpeech: `python -m scripts.downloader.fish_speech_1_2sft --source huggingface`
  - Download CosyVoice: `python -m scripts.downloader.dl_cosyvoice_instruct --source huggingface`
- ASR
  - Download Whisper: `python -m scripts.downloader.faster_whisper --source huggingface`
- CV
  - OpenVoice: `python -m scripts.downloader.open_voice --source huggingface`
- Enhancer: `python -m scripts.dl_enhance --source huggingface`

To download from ModelScope instead, use `--source modelscope`. Note: some models cannot be downloaded from ModelScope because they are not hosted there.
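When several models are needed, the download commands can be driven from a small Python wrapper. This is a sketch under assumptions: the module paths and the `--source` values are copied from the examples above, while the dictionary keys and function names are made up for illustration. It must be run from the repository root so that `python -m scripts...` resolves.

```python
import subprocess
import sys

# Module paths as listed in the examples above; the keys are arbitrary labels.
DOWNLOAD_MODULES = {
    "chattts": "scripts.dl_chattts",
    "fish_speech": "scripts.downloader.fish_speech_1_2sft",
    "cosyvoice": "scripts.downloader.dl_cosyvoice_instruct",
    "whisper": "scripts.downloader.faster_whisper",
    "open_voice": "scripts.downloader.open_voice",
    "enhancer": "scripts.dl_enhance",
}


def download_command(model: str, source: str = "huggingface") -> list[str]:
    """Build the command line for one download script."""
    if source not in ("huggingface", "modelscope"):
        raise ValueError(f"unknown source: {source}")
    return [sys.executable, "-m", DOWNLOAD_MODULES[model], "--source", source]


def download_all(models, source: str = "huggingface") -> None:
    """Run the download scripts one after another, stopping on the first failure."""
    for model in models:
        subprocess.run(download_command(model, source), check=True)


# Example: download_all(["chattts", "enhancer"], source="modelscope")
```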

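About CosyVoice: honestly, it is not clear which model should be used. Overall, the instruct model appears to have the most features, but its quality may not be the best. To use a different model, run dl_cosyvoice_base.py, dl_cosyvoice_instruct.py, or the sft script yourself. The model to load is chosen by which folders exist, with load priority base > instruct > sft.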
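The CosyVoice load priority described above (base > instruct > sft, decided by which folders exist) can be sketched as follows. The folder names are hypothetical placeholders; check the directories that the download scripts actually create. The function is an illustration, not the project's loader.

```python
import os

# Priority order from the text above.
PRIORITY = ("base", "instruct", "sft")

# Hypothetical folder names, used only for this illustration.
FOLDERS = {
    "base": "CosyVoice_base",
    "instruct": "CosyVoice_instruct",
    "sft": "CosyVoice_sft",
}


def pick_cosyvoice_variant(models_dir, folders=FOLDERS, is_dir=os.path.isdir):
    """Return the highest-priority variant whose folder exists, or None."""
    for variant in PRIORITY:
        if is_dir(os.path.join(models_dir, folders[variant])):
            return variant
    return None
```

One consequence of this rule is that downloading the base model alongside the instruct model switches loading to base, so remove or rename the folder you do not want loaded.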

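Voice cloning is now supported for every model, and the spkv1 format has been adapted to carry reference audio. There are several ways to use voice cloning:

- In the webui: upload a reference voice in the voice selection panel. This is the simplest way to use voice cloning.
- Through the API: the API uses voice cloning through a voice (that is, a speaker). First create the speaker file you need (.spkv1.json), then pass the speaker's name as the spk parameter when calling the API.
- Voice Clone: voice cloning with a voice clone model is also supported; configure the corresponding reference when calling the API. (Only OpenVoice is currently supported for voice clone, so no model name needs to be specified.)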
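A call that selects a speaker by name might look like the sketch below. Only the `spk` parameter and the server address come from this document. The endpoint path `/v1/tts` and the `text` parameter are assumptions made for illustration, so confirm the real route and parameter names on the `/docs` page of your running server.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://localhost:7870"
TTS_PATH = "/v1/tts"  # assumption: verify the actual route in /docs


def tts_url(text, spk, base_url=BASE_URL, path=TTS_PATH):
    """Build a request URL; `spk` is the name stored in the speaker file."""
    return f"{base_url}{path}?{urlencode({'text': text, 'spk': spk})}"


def synthesize(text, spk, out_path):
    """Fetch the audio and save it. Requires a running API server."""
    with urlopen(tts_url(text, spk), timeout=120) as resp, open(out_path, "wb") as f:
        f.write(resp.read())


# Example, with the server running and a speaker named "my_voice" available:
#     synthesize("Hello", "my_voice", "hello.audio")
```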

Related discussion: #118

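This is most likely a problem with the uploaded audio, so try the following:

- Update: update the code and the dependency versions, most importantly gradio (the latest version is recommended unless something prevents it)
- Process the audio: edit the audio with ffmpeg or other software, convert it to mono, and upload it again; you can also try transcoding it to wav
- Check the text: check whether the reference text contains unsupported characters. It is also recommended to end the reference text with "。" (this is a quirk of the model 😂)
- Create it in Colab: consider creating the spk file in a Colab environment to minimize problems caused by the runtime environment
- TTS test: on the webui TTS page you can upload reference audio directly, so test the audio and text there first, adjust them, and then generate the spk file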
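The audio and text preparation steps above can be scripted. This is a minimal sketch that assumes `ffmpeg` is installed and on the PATH; `-ac 1` is ffmpeg's option for a single audio channel, and the `.wav` extension of the output file selects the format. The helper names are illustrative.

```python
import subprocess


def to_mono_wav_command(src, dst):
    """Build an ffmpeg command that downmixes `src` to mono and writes `dst`."""
    # -y overwrites an existing output file; -ac 1 sets one audio channel.
    return ["ffmpeg", "-y", "-i", src, "-ac", "1", dst]


def convert_to_mono_wav(src, dst):
    """Run the conversion; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(to_mono_wav_command(src, dst), check=True)


def normalize_reference_text(text):
    """Trim whitespace and make the text end with a single Chinese full stop."""
    return text.strip().rstrip("。.") + "。"


# Example: convert_to_mono_wav("reference.mp3", "reference.wav")
```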

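Not at the moment. This repository mainly provides an inference service framework. There are plans to add some training-related features, but they are not expected to be pushed forward very actively.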

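First, barring special circumstances, this repository only plans to integrate and develop engineering solutions; model inference optimization depends largely on upstream repositories or community implementations. If you have a good inference optimization, issues and PRs are welcome.

For now, the most practical optimization is to enable multiple workers: pass `--workers N` when starting launch.py to increase service throughput.

There are other, still incomplete speed-up options that you can explore if interested:

- compile: all models support compile acceleration, with a gain of roughly 30%, but compilation is slow
- flash_attn: flash attn acceleration is supported (the `--flash_attn` argument), but it is also incomplete
- vllm: not implemented, pending updates to the upstream repository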

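ChatTTS only

Prompt1 and Prompt2 are both system prompts; they differ in where they are inserted. Testing showed that the current model is very sensitive to the first [Stts] token, so two prompts are needed.

- Prompt1 is inserted before the first [Stts]
- Prompt2 is inserted after the first [Stts]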
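The two insertion points can be illustrated on a plain string. This is a simplified stand-in: the real model works on token sequences, and the function below is hypothetical and only shows where each prompt lands relative to the first [Stts].

```python
STTS = "[Stts]"


def inject_prompts(sequence, prompt1="", prompt2=""):
    """Place prompt1 before and prompt2 after the first [Stts] marker."""
    head, marker, tail = sequence.partition(STTS)
    if not marker:
        raise ValueError("sequence contains no [Stts] marker")
    return f"{head}{prompt1}{marker}{prompt2}{tail}"


example = inject_prompts("[Stts]hello[Stts]", prompt1="<p1>", prompt2="<p2>")
# example == "<p1>[Stts]<p2>hello[Stts]"
```

Only the first marker is affected; any later [Stts] in the sequence is left untouched.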

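ChatTTS only

Prefix is mainly used to control the model's generation behavior, similar to the refine prompt in the official examples. The prefix should contain only special non-lexical tokens such as [laugh_0], [oral_0], [speed_0], and [break_0].

Styles whose names contain _p use prompt + prefix; styles without _p use only the prefix.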
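A quick check for both rules might look like this. The token pattern is inferred from the four examples above (`[name_number]`) and may not cover every special token the model accepts. Treating `_p` as a name suffix is also an assumption, and both helpers are hypothetical.

```python
import re

# Assumed token shape, based on examples such as [laugh_0] and [break_0].
SPECIAL_TOKEN = re.compile(r"\[[A-Za-z]+_\d+\]")


def is_valid_prefix(prefix):
    """True when the prefix holds only special tokens and whitespace."""
    return SPECIAL_TOKEN.sub("", prefix).strip() == ""


def style_components(style_name):
    """Return which parts a style uses, assuming `_p` is a name suffix."""
    if style_name.endswith("_p"):
        return ("prompt", "prefix")
    return ("prefix",)
```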

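Because inference padding is not implemented yet, any change in input shape between inference calls may trigger torch to compile again.

Enabling it is not recommended for now.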
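To show why padding matters for compilation, the sketch below rounds every input length up to a small set of bucket sizes, so a compiled model sees only a few distinct shapes. This is a generic illustration of shape bucketing, not something ChatTTS-Forge implements. The names and the default sizes are assumptions, and real padding would also need a matching attention mask.

```python
def bucket_length(n, min_bucket=64):
    """Round n up to the next power-of-two multiple of min_bucket."""
    size = min_bucket
    while size < n:
        size *= 2
    return size


def pad_to_bucket(token_ids, pad_id=0, min_bucket=64):
    """Right-pad a list of token ids to its bucket length."""
    target = bucket_length(len(token_ids), min_bucket)
    return token_ids + [pad_id] * (target - len(token_ids))
```

With the defaults, inputs of 65 and 120 tokens both become length 128, so the second call can reuse the shape already compiled for the first.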

Make sure you are using a GPU rather than the CPU.

- Click [Edit] in the menu bar
- Click [Notebook settings]
- Select [Hardware accelerator] => T4 GPU

Thanks to @Phrixus2023 for providing the all-in-one package: https://pan.baidu.com/s/1Q1vQV5Gs0VhU5J76dZBK4Q?pwd=d7xu

Related discussion: https://github.com/lenML/ChatTTS-Forge/discussions/65

More documentation can be found here.

To contribute, clone the repository, make your changes, commit and push to your clone, and submit a pull request.

- ChatTTS: https://github.com/2noise/ChatTTS
- PaddleSpeech: https://github.com/PaddlePaddle/PaddleSpeech
- resemble-enhance: https://github.com/resemble-ai/resemble-enhance
- OpenVoice: https://github.com/myshell-ai/OpenVoice
- FishSpeech: https://github.com/fishaudio/fish-speech
- SenseVoice: https://github.com/FunAudioLLM/SenseVoice
- CosyVoice: https://github.com/FunAudioLLM/CosyVoice
- Whisper: https://github.com/openai/whisper
- ChatTTS default speakers: https://github.com/2noise/ChatTTS/issues/238

Alternative AI tools for ChatTTS-Forge

Similar Open Source Tools

AIStudioToAPI

AIStudioToAPI is a tool that encapsulates the Google AI Studio web interface to be compatible with OpenAI API, Gemini API, and Anthropic API. It acts as a proxy, converting API requests into interactions with the AI Studio web interface. The tool supports API compatibility with OpenAI, Gemini, and Anthropic, browser automation with the AI Studio web interface, secure authentication mechanism based on API keys, tool calls for OpenAI, Gemini, and Anthropic interfaces, access to various Gemini models including image models and TTS speech synthesis models through AI Studio, and provides a visual Web console for account management and VNC login operations.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

Langchain-Chatchat

LangChain-Chatchat is an open-source, offline-deployable retrieval-enhanced generation (RAG) large model knowledge base project based on large language models such as ChatGLM and application frameworks such as Langchain. It aims to establish a knowledge base Q&A solution that is friendly to Chinese scenarios, supports open-source models, and can run offline.

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

Muice-Chatbot

Muice-Chatbot is an AI chatbot designed to proactively engage in conversations with users. It is based on the ChatGLM2-6B and Qwen-7B models, with a training dataset of 1.8K+ dialogues. The chatbot has a speaking style similar to a 2D girl, being somewhat tsundere but willing to share daily life details and greet users differently every day. It provides various functionalities, including initiating chats and offering 5 available commands. The project supports model loading through different methods and provides onebot service support for QQ users. Users can interact with the chatbot by running the main.py file in the project directory.

pi-browser

Pi-Browser is a CLI tool for automating browsers based on multiple AI models. It supports various AI models like Google Gemini, OpenAI, Anthropic Claude, and Ollama. Users can control the browser using natural language commands and perform tasks such as web UI management, Telegram bot integration, Notion integration, extension mode for maintaining Chrome login status, parallel processing with multiple browsers, and offline execution with the local AI model Ollama.

xiaogpt

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

LangChain-SearXNG

LangChain-SearXNG is an open-source AI search engine built on LangChain and SearXNG. It supports faster and more accurate search and question-answering functionalities. Users can deploy SearXNG and set up Python environment to run LangChain-SearXNG. The tool integrates AI models like OpenAI and ZhipuAI for search queries. It offers two search modes: Searxng and ZhipuWebSearch, allowing users to control the search workflow based on input parameters. LangChain-SearXNG v2 version enhances response speed and content quality compared to the previous version, providing a detailed configuration guide and showcasing the effectiveness of different search modes through comparisons.

md

The WeChat Markdown editor automatically renders Markdown documents as WeChat articles, eliminating the need to worry about WeChat content layout! As long as you know basic Markdown syntax (now with AI, you don't even need to know Markdown), you can create a simple and elegant WeChat article. The editor supports all basic Markdown syntax, mathematical formulas, rendering of Mermaid charts, GFM warning blocks, PlantUML rendering support, ruby annotation extension support, rich code block highlighting themes, custom theme colors and CSS styles, multiple image upload functionality with customizable configuration of image hosting services, convenient file import/export functionality, built-in local content management with automatic draft saving, integration of mainstream AI models (such as DeepSeek, OpenAI, Tongyi Qianwen, Tencent Hanyuan, Volcano Ark, etc.) to assist content creation.

Awesome-ChatTTS

Awesome-ChatTTS is an official recommended guide for ChatTTS beginners, compiling common questions and related resources. It provides a comprehensive overview of the project, including official introduction, quick experience options, popular branches, parameter explanations, voice seed details, installation guides, FAQs, and error troubleshooting. The repository also includes video tutorials, discussion community links, and project trends analysis. Users can explore various branches for different functionalities and enhancements related to ChatTTS.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

WeClone

WeClone is a tool that fine-tunes large language models using WeChat chat records. It utilizes approximately 20,000 integrated and effective data points, resulting in somewhat satisfactory outcomes that are occasionally humorous. The tool's effectiveness largely depends on the quantity and quality of the chat data provided. It requires a minimum of 16GB of GPU memory for training using the default chatglm3-6b model with LoRA method. Users can also opt for other models and methods supported by LLAMA Factory, which consume less memory. The tool has specific hardware and software requirements, including Python, Torch, Transformers, Datasets, Accelerate, and other optional packages like CUDA and Deepspeed. The tool facilitates environment setup, data preparation, data preprocessing, model downloading, parameter configuration, model fine-tuning, and inference through a browser demo or API service. Additionally, it offers the ability to deploy a WeChat chatbot, although users should be cautious due to the risk of account suspension by WeChat.

XianyuAutoAgent

Xianyu AutoAgent is an AI customer service robot system specifically designed for the Xianyu platform, providing 24/7 automated customer service, supporting multi-expert collaborative decision-making, intelligent bargaining, and context-aware conversations. The system includes intelligent conversation engine with features like context awareness and expert routing, business function matrix with modules like core engine, bargaining system, technical support, and operation monitoring. It requires Python 3.8+ and NodeJS 18+ for installation and operation. Users can customize prompts for different experts and contribute to the project through issues or pull requests.

ai-daily-digest

AI Daily Digest is a tool that fetches the latest articles from the top 90 Hacker News technology blogs recommended by Andrej Karpathy. It uses AI multi-dimensional scoring to curate a structured daily digest. The tool supports Gemini by default and can automatically degrade to OpenAI compatible API. It offers a five-step processing pipeline including RSS fetching, time filtering, AI scoring and classification, AI summarization and translation, and trend summarization. The generated daily digest includes sections like today's highlights, must-read articles, data overview, and categorized article lists. The tool is designed to be dependency-free, bilingual, with structured summaries, visual statistics, intelligent categorization, trend insights, and persistent configuration memory.

huge-ai-search

Huge AI Search MCP Server integrates Google AI Mode search into clients like Cursor, Claude Code, and Codex, supporting continuous follow-up questions and source links. It allows AI clients to directly call 'huge-ai-search' for online searches, providing AI summary results and source links. The tool supports text and image searches, with the ability to ask follow-up questions in the same session. It requires Microsoft Edge for installation and supports various IDEs like VS Code. The tool can be used for tasks such as searching for specific information, asking detailed questions, and avoiding common pitfalls in development tasks.

For similar tasks

RAVE

RAVE is a variational autoencoder for fast and high-quality neural audio synthesis. It can be used to generate new audio samples from a given dataset, or to modify the style of existing audio samples. RAVE is easy to use and can be trained on a variety of audio datasets. It is also computationally efficient, making it suitable for real-time applications.

awesome-generative-ai

A curated list of Generative AI projects, tools, artworks, and models

WavCraft

WavCraft is an LLM-driven agent for audio content creation and editing. It applies LLM to connect various audio expert models and DSP function together. With WavCraft, users can edit the content of given audio clip(s) conditioned on text input, create an audio clip given text input, get more inspiration from WavCraft by prompting a script setting and let the model do the scriptwriting and create the sound, and check if your audio file is synthesized by WavCraft.

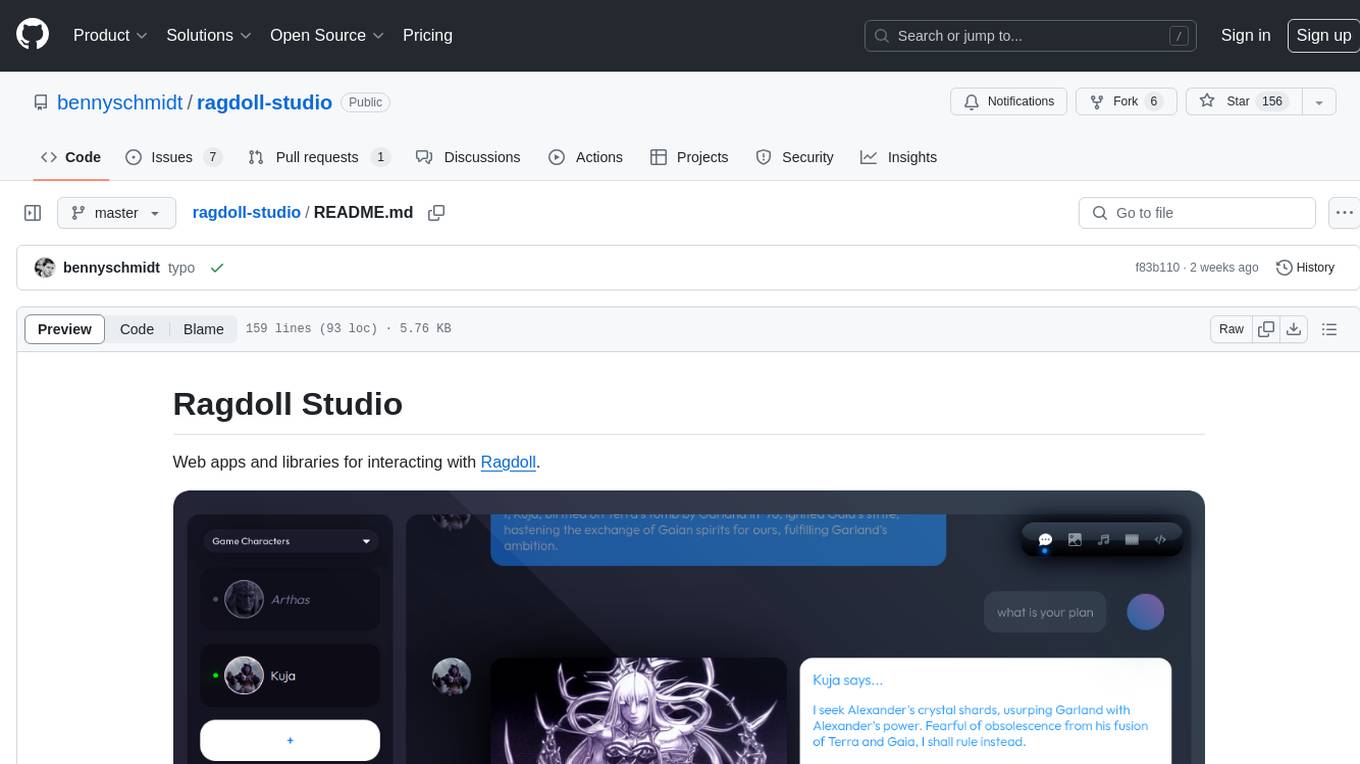

ragdoll-studio

Ragdoll Studio is a platform offering web apps and libraries for interacting with Ragdoll, enabling users to go beyond fine-tuning and create flawless creative deliverables, rich multimedia, and engaging experiences. It provides various modes such as Story Mode for creating and chatting with characters, Vector Mode for producing vector art, Raster Mode for producing raster art, Video Mode for producing videos, Audio Mode for producing audio, and 3D Mode for producing 3D objects. Users can export their content in various formats and share their creations on the community site. The platform consists of a Ragdoll API and a front-end React application for seamless usage.

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

AI

AI is an open-source Swift framework for interfacing with generative AI. It provides functionalities for text completions, image-to-text vision, function calling, DALLE-3 image generation, audio transcription and generation, and text embeddings. The framework supports multiple AI models from providers like OpenAI, Anthropic, Mistral, Groq, and ElevenLabs. Users can easily integrate AI capabilities into their Swift projects using AI framework.

RAG-Survey

This repository is dedicated to collecting and categorizing papers related to Retrieval-Augmented Generation (RAG) for AI-generated content. It serves as a survey repository based on the paper 'Retrieval-Augmented Generation for AI-Generated Content: A Survey'. The repository is continuously updated to keep up with the rapid growth in the field of RAG.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.