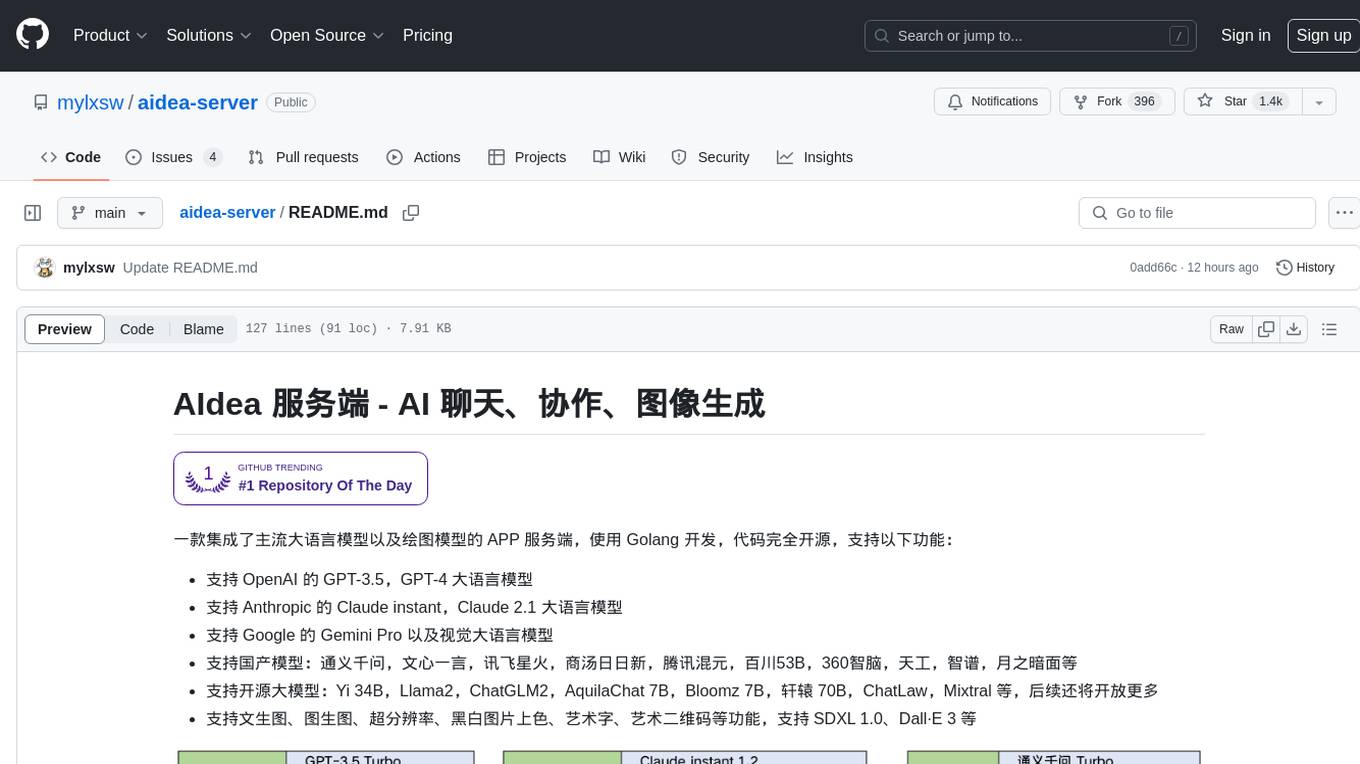

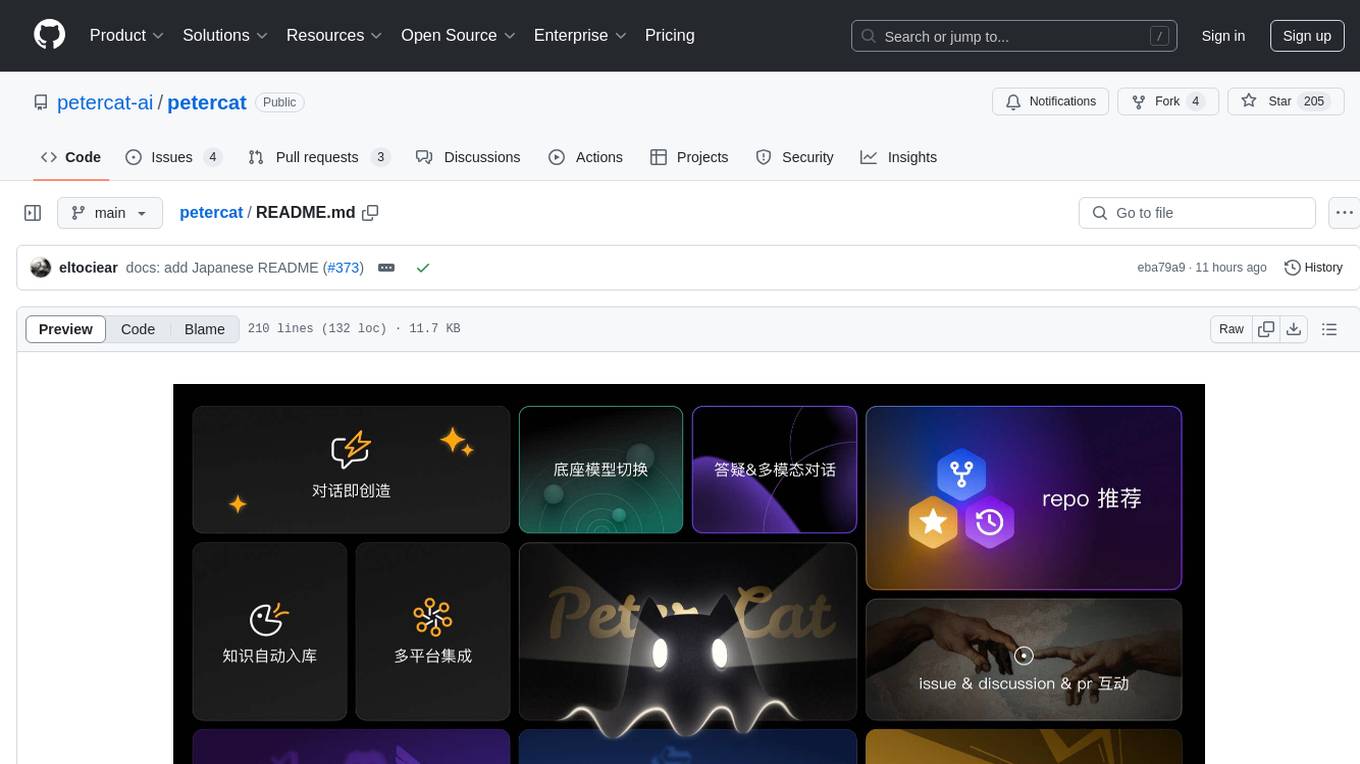

petercat

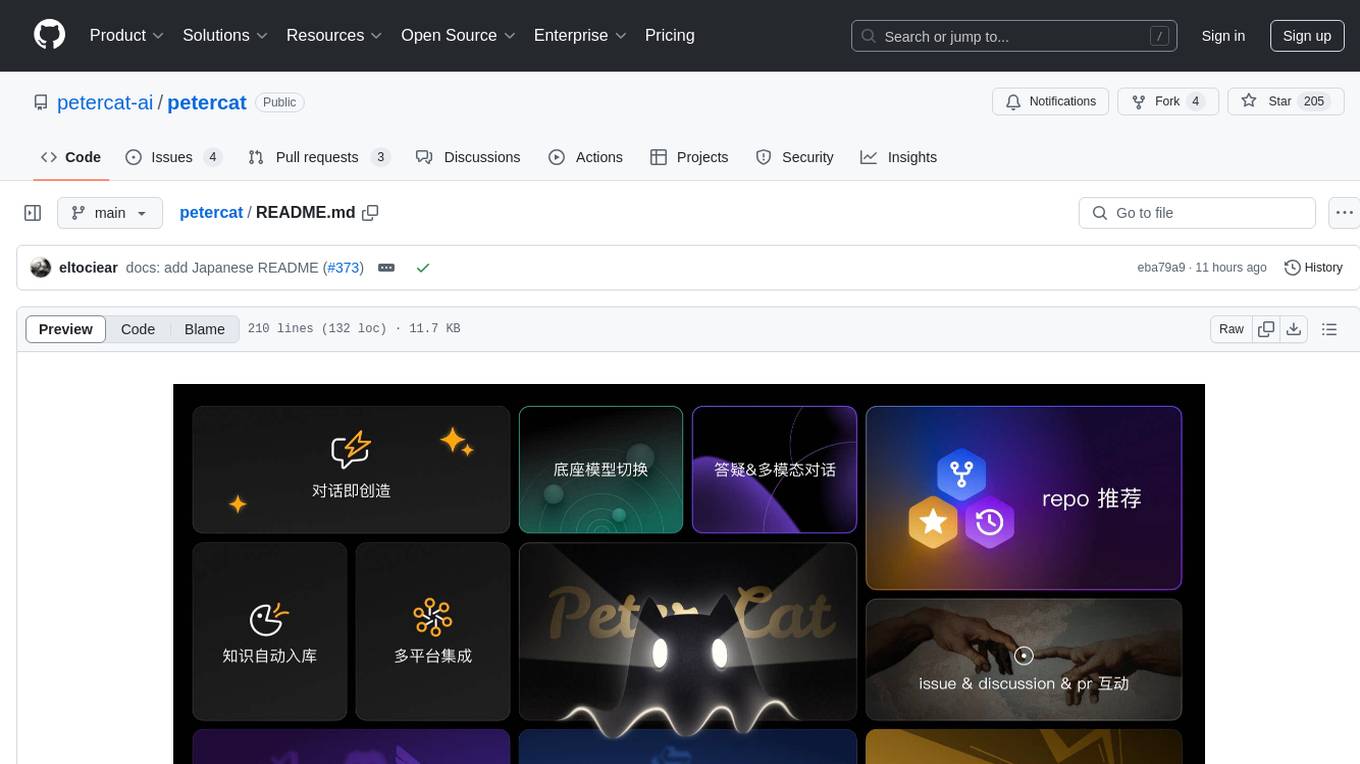

A conversational Q&A agent configuration system, self-hosted deployment solutions, and a convenient all-in-one application SDK, allowing you to create intelligent Q&A bots for your GitHub repositories

Stars: 1303

Peter Cat is an intelligent Q&A chatbot solution designed for community maintainers and developers. It provides a conversational Q&A agent configuration system, self-hosting deployment solutions, and a convenient integrated application SDK. Users can easily create intelligent Q&A chatbots for their GitHub repositories and quickly integrate them into various official websites or projects to provide more efficient technical support for the community.

README:

我们提供对话式答疑 Agent 配置系统、自托管部署方案和便捷的一体化应用 SDK,让您能够为自己的 GitHub 仓库一键创建智能答疑机器人,并快速集成到各类官网或项目中, 为社区提供更高效的技术支持生态。

仅需要告知你的仓库地址或名称,PeterCat 即可自动完成创建机器人的全部流程

机器人创建后,所有相关 Github 文档和 issue 将自动入库,作为机器人的知识依据

多种集成方式自由选择,如对话应用 SDK 集成至官网,Github APP 一键安装至 Github 仓库等

|

|

|---|

| 项目信息查询 | 回复 Discussion |

|---|---|

| PR Summary | Code Review |

|---|---|

|

|

| 查 Issue | 提 Issue | 回 Issue |

|---|---|---|

我们为猫猫预置了一个创建机器人的机器人,当得到用户 GitHub 仓库地址或名称时,它会使用创建工具,生成该仓库答疑机器人的各项配置(Prompt,、名字、 头像、开场白、引导语、工具集……),同时触发 Issue 和 Markdown 的入库任务。这些任务会拆分为多个子任务,将该仓库的所有已解决 issue 、高票回复以及所有 Markdown 文件内容经过 load -> split -> embed -> store 的加工过程进行知识库构建,作为机器人的回复知识依据。

你可以在这里看到完整方案:

本项目需要进行环境变量进行设置:

.env.local

| 环境变量 | 类型 | 描述 | 示例 |

|---|---|---|---|

NEXT_PUBLIC_API_DOMAIN |

必选 | 后端服务的 API 域名。 | https://api.petercat.ai |

.env

| 环境变量 | 类型 | 描述 | 示例 |

|---|---|---|---|

| 应用基础环境变量 | |||

API_URL |

必选 | 后端服务的 API 域名 | https://api.petercat.ai |

WEB_URL |

必选 | 前端 Web 服务的域名 | https://petercat.ai |

STATIC_URL |

必选 | 静态资源域名 | https://static.petercat.ai |

| AWS 相关环境变量 | |||

X_GITHUB_SECRET_NAME |

必选 | AWS 托管的 Github 私钥文件名 | prod/githubapp/petercat/pem |

STATIC_SECRET_NAME |

可选 | AWS 托管的 CloudFront 签名私钥名称。如果配置了该项,将使用 CloudFront 签名 URL 来保护你的资源。更多信息请参阅 AWS 文档。 | prod/petercat/static |

LLM_TOKEN_SECRET_NAME |

可选 | AWS 托管的 llm 签名私钥名称。如果配置了该项,petercat 将使用 RSA 算法托管用户的 LLM Token | prod/petercat/llm |

LLM_TOKEN_PUBLIC_NAME |

可选 | AWS 托管的 llm 签名公钥名称。如果配置了该项,petercat 将使用 RSA 算法托管用户的 LLM Token | prod/petercat/llm/pub |

STATIC_KEYPAIR_ID |

可选 | AWS CloudFront 的 Key Pair ID。如果配置了该项,将使用 CloudFront 签名 URL 来保护你的资源。更多信息请参阅 AWS 文档。 | APKxxxxxxxx |

S3_TEMP_BUCKET_NAME |

可选 | 用于托管 AWS 临时图片文件 S3 的 bucket | xxx-temp |

| SUPABASE 相关 env | |||

SUPABASE_URL |

必选 | supabase 服务的 URL,可以在这里找到 | https://***.supabase.co |

SUPABASE_SERVICE_KEY |

必选 | supabase 服务密钥,可以在这里找到 | {{SUPABASE_SERVICE_KEY}} |

| Auth0 相关 env | |||

AUTH0_DOMAIN |

必选 | auth0 服务域名,从 auth0 / Application / Basic Information 下获取 | petercat.us.auth0.com |

AUTH0_CLIENT_ID |

必选 | auth0 客户端 ID,从 auth0 / Application / Basic Information 下获取 | artfiUxxxx |

AUTH0_CLIENT_SECRET |

必选 | auth0 客户端密钥, 从 auth0 / Application / Basic Information 下获取 | xxxx-xxxx-xxx |

API_IDENTIFIER |

必选 | auth0 的 API Identifier | https://petercat.us.auth0.com/api/v2/ |

| LLM 相关的 env | |||

OPENAI_API_KEY |

必选 | OpenAI 的密钥 | sk-xxxx |

OPENAI_BASE_URL |

可选 | API 请求的基础 URL。仅在使用代理或服务模拟器时指定。 | https://api.openai.com/v1 |

GEMINI_API_KEY |

可选 | Gemini 的密钥 | xxxx |

TAVILY_API_KEY |

必选 | Tavily 的密钥 | tvly-xxxxx |

| 注册为 Github App 的 env | |||

X_GITHUB_APP_ID |

可选 | 注册为 Github App 时,APPID | 123456 |

X_GITHUB_APPS_CLIENT_ID |

可选 | 注册为 Github App 时,APP 的 Client ID | Iv1.xxxxxxx |

X_GITHUB_APPS_CLIENT_SECRET |

可选 | 注册为 Github App 时,APP 的 Client 密钥 | xxxxxxxx |

| 限流配置 | |||

RATE_LIMIT_ENABLED |

可选 | 限流配置是否开启 | True |

RATE_LIMIT_REQUESTS |

可选 | 限流的请求数量 | 100 |

RATE_LIMIT_DURATION |

可选 | 限流的统计时长,单位为分钟 | 1 |

| RAG 服务配置 | |||

WHISKER_API_URL |

必选 | WHISKER RAG 服务地址 | http://.... |

WHISKER_API_KEY |

必选 | WHISKER RAG 服务的 KEY | sk-xxxx |

PeterCat 使用 yarn 作为包管理器

git clone https://github.com/petercat-ai/petercat.git

# 安装依赖

yarn run bootstrap

# 调试 client

yarn run client

# 调试 assistant

yarn run assistant

# 调试 server

yarn run server

# 本地启动网站

yarn run client:server

# 本地启动 assistant 组件

yarn run assistant:server

# assistant 构建

cd assistant

yarn run build

npm publish

# docker 构建

yarn run build:docker

# pypi 构建

yarn run build:pypi

yarn run publish:pypi

请把您的项目地址,使用场景,使用频率等信息发送至 [email protected]

猫猫还在养成阶段,难免有些 “小脾气”,遇到问题请对它宽容一些,可以通过以下两种途径告知铲屎官:

MIT@PeterCat

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for petercat

Similar Open Source Tools

petercat

Peter Cat is an intelligent Q&A chatbot solution designed for community maintainers and developers. It provides a conversational Q&A agent configuration system, self-hosting deployment solutions, and a convenient integrated application SDK. Users can easily create intelligent Q&A chatbots for their GitHub repositories and quickly integrate them into various official websites or projects to provide more efficient technical support for the community.

daily_stock_analysis

The daily_stock_analysis repository is an intelligent stock analysis system based on AI large models for A-share/Hong Kong stock/US stock selection. It automatically analyzes and pushes a 'decision dashboard' to WeChat Work/Feishu/Telegram/email daily. The system features multi-dimensional analysis, global market support, market review, AI backtesting validation, multi-channel notifications, and scheduled execution using GitHub Actions. It utilizes AI models like Gemini, OpenAI, DeepSeek, and data sources like AkShare, Tushare, Pytdx, Baostock, YFinance for analysis. The system includes built-in trading disciplines like risk warning, trend trading, precise entry/exit points, and checklist marking for conditions.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

jiwu-mall-chat-tauri

Jiwu Chat Tauri APP is a desktop chat application based on Nuxt3 + Tauri + Element Plus framework. It provides a beautiful user interface with integrated chat and social functions. It also supports AI shopping chat and global dark mode. Users can engage in real-time chat, share updates, and interact with AI customer service through this application.

DeepAudit

DeepAudit is an AI audit team accessible to everyone, making vulnerability discovery within reach. It is a next-generation code security audit platform based on Multi-Agent collaborative architecture. It simulates the thinking mode of security experts, achieving deep code understanding, vulnerability discovery, and automated sandbox PoC verification through multiple intelligent agents (Orchestrator, Recon, Analysis, Verification). DeepAudit aims to address the three major pain points of traditional SAST tools: high false positive rate, blind spots in business logic, and lack of verification means. Users only need to import the project, and DeepAudit automatically starts working: identifying the technology stack, analyzing potential risks, generating scripts, sandbox verification, and generating reports, ultimately outputting a professional audit report. The core concept is to let AI attack like a hacker and defend like an expert.

jimeng-free-api-all

Jimeng AI Free API is a reverse-engineered API server that encapsulates Jimeng AI's image and video generation capabilities into OpenAI-compatible API interfaces. It supports the latest jimeng-5.0-preview, jimeng-4.6 text-to-image models, Seedance 2.0 multi-image intelligent video generation, zero-configuration deployment, and multi-token support. The API is fully compatible with OpenAI API format, seamlessly integrating with existing clients and supporting multiple session IDs for polling usage.

MedicalGPT

MedicalGPT is a training medical GPT model with ChatGPT training pipeline, implement of Pretraining, Supervised Finetuning, RLHF(Reward Modeling and Reinforcement Learning) and DPO(Direct Preference Optimization).

XiaoXinAir14IML_2019_hackintosh

XiaoXinAir14IML_2019_hackintosh is a repository dedicated to enabling macOS installation on Lenovo XiaoXin Air-14 IML 2019 laptops. The repository provides detailed information on the hardware specifications, supported systems, BIOS versions, related models, installation methods, updates, patches, and recommended settings. It also includes tools and guides for BIOS modifications, enabling high-resolution display settings, Bluetooth synchronization between macOS and Windows 10, voltage adjustments for efficiency, and experimental support for YogaSMC. The repository offers solutions for various issues like sleep support, sound card emulation, and battery information. It acknowledges the contributions of developers and tools like OpenCore, itlwm, VoodooI2C, and ALCPlugFix.

md

The WeChat Markdown editor automatically renders Markdown documents as WeChat articles, eliminating the need to worry about WeChat content layout! As long as you know basic Markdown syntax (now with AI, you don't even need to know Markdown), you can create a simple and elegant WeChat article. The editor supports all basic Markdown syntax, mathematical formulas, rendering of Mermaid charts, GFM warning blocks, PlantUML rendering support, ruby annotation extension support, rich code block highlighting themes, custom theme colors and CSS styles, multiple image upload functionality with customizable configuration of image hosting services, convenient file import/export functionality, built-in local content management with automatic draft saving, integration of mainstream AI models (such as DeepSeek, OpenAI, Tongyi Qianwen, Tencent Hanyuan, Volcano Ark, etc.) to assist content creation.

AstrBot

AstrBot is a powerful and versatile tool that leverages the capabilities of large language models (LLMs) like GPT-3, GPT-3.5, and GPT-4 to enhance communication and automate tasks. It seamlessly integrates with popular messaging platforms such as QQ, QQ Channel, and Telegram, enabling users to harness the power of AI within their daily conversations and workflows.

aidea-server

AIdea Server is an open-source Golang-based server that integrates mainstream large language models and drawing models. It supports various functionalities including OpenAI's GPT-3.5 and GPT-4, Anthropic's Claude instant and Claude 2.1, Google's Gemini Pro, as well as Chinese models like Tongyi Qianwen, Wenxin Yiyuan, and more. It also supports open-source large models like Yi 34B, Llama2, and AquilaChat 7B. Additionally, it provides features for text-to-image, super-resolution, coloring black and white images, generating art fonts and QR codes, among others.

search2ai

S2A allows your large model API to support networking, searching, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The large model will determine whether to connect to the network based on your input, and it will not connect to the network for searching every time. You don't need to install any plugins or replace keys. You can directly replace the custom address in your commonly used third-party client. You can also deploy it yourself, which will not affect other functions you use, such as drawing and voice.

xiaogpt

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

web-builder

Web Builder is a low-code front-end framework based on Material for Angular, offering a rich component library for excellent digital innovation experience. It allows rapid construction of modern responsive UI, multi-theme, multi-language web pages through drag-and-drop visual configuration. The framework includes a beautiful admin theme, complete front-end solutions, and AI integration in the Pro version for optimizing copy, creating components, and generating pages with a single sentence.

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project based on Mistral's Mixtral-8x7B model for incremental pre-training of Chinese vocabulary, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese, and the model is pre-trained incrementally on a large-scale open-source corpus, enabling it with powerful Chinese generation and comprehension capabilities. The project includes a large model with expanded Chinese vocabulary and incremental pre-training code.

For similar tasks

petercat

Peter Cat is an intelligent Q&A chatbot solution designed for community maintainers and developers. It provides a conversational Q&A agent configuration system, self-hosting deployment solutions, and a convenient integrated application SDK. Users can easily create intelligent Q&A chatbots for their GitHub repositories and quickly integrate them into various official websites or projects to provide more efficient technical support for the community.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

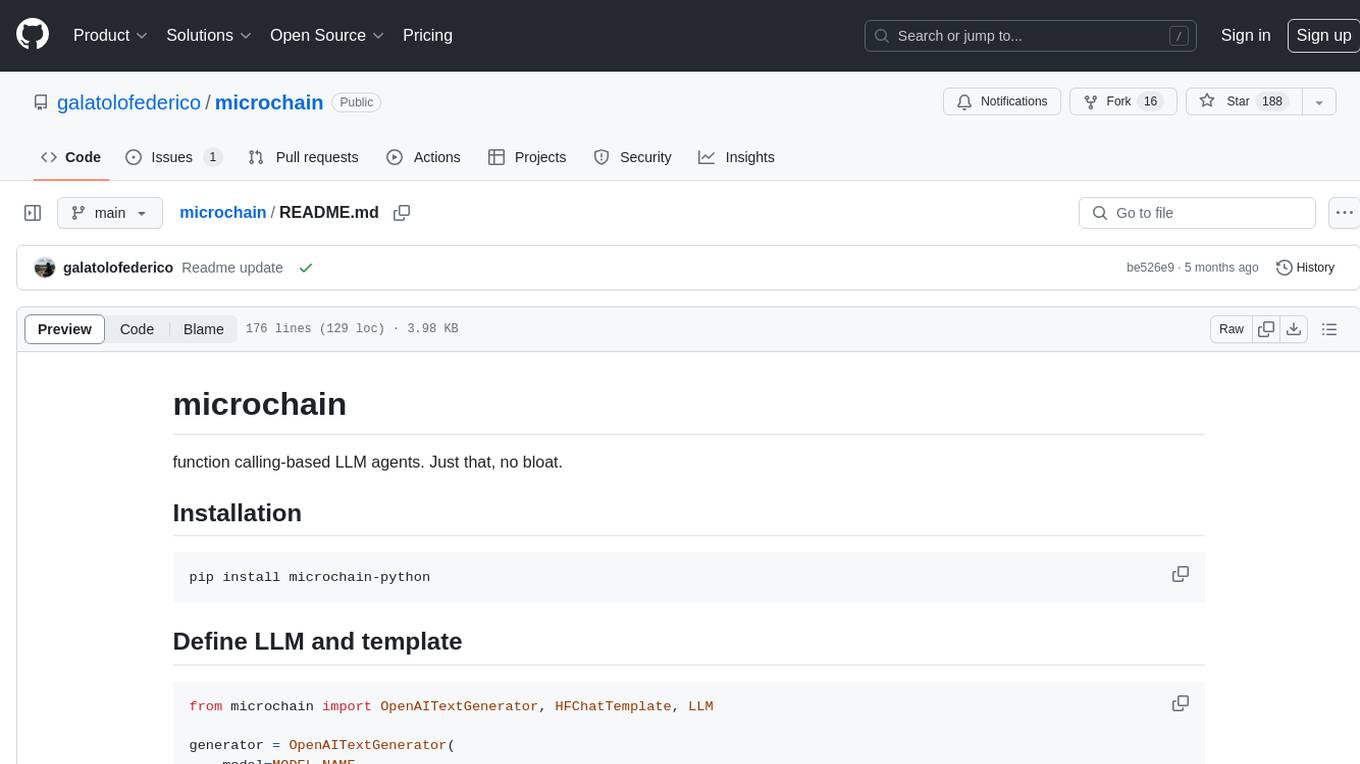

microchain

Microchain is a function calling-based LLM agents tool with no bloat. It allows users to define LLM and templates, use various functions like Sum and Product, and create LLM agents for specific tasks. The tool provides a simple and efficient way to interact with OpenAI models and create conversational agents for various applications.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.