kantv

A workbench for learning and practising AI tech in real scenarios on Android devices, powered by GGML (Georgi Gerganov Machine Learning), NCNN (Tencent NCNN), and FFmpeg

Stars: 75

KanTV is an open-source project that focuses on studying and practicing state-of-the-art AI technology in real applications and scenarios, such as online TV playback, transcription, translation, and video/audio recording. It is derived from the original ijkplayer project and includes many enhancements and new features, including:

- Watching online TV and local media using a customized FFmpeg 6.1.
- Recording online TV to automatically generate videos.
- Studying ASR (Automatic Speech Recognition) using whisper.cpp.
- Studying LLM (Large Language Model) using llama.cpp.
- Studying SD (Text to Image by Stable Diffusion) using stablediffusion.cpp.
- Generating real-time English subtitles for English online TV using whisper.cpp.
- Running/experiencing LLM on Xiaomi 14 using llama.cpp.
- Setting up a customized playlist and using the software to watch the content for R&D activity.
- Refactoring the UI to be closer to a real commercial Android application (currently only supports English).

Some goals of this project are:

- To provide a well-maintained "workbench" for ASR researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android).
- To provide a well-maintained "workbench" for LLM researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android).
- To create an Android "turn-key project" for AI experts/researchers (who may not be familiar with regular Android software development) to focus on device-side AI R&D activity, where part of the AI R&D activity (algorithm improvement, model training, model generation, algorithm validation, model validation, performance benchmark, etc.) can be done very easily using Android Studio IDE and a powerful Android phone.

README:

KanTV ("Kan" is the Chinese PinYin for the HanZi "看", meaning "watch/listen" in English) is an open source project focused on studying and practising state-of-the-art AI technology in real scenarios on Android phones/devices, such as online-TV playback, online-TV transcription (real-time subtitles), online-TV language translation, and online-TV video and audio recording, all working at the same time. It is derived from the original ijkplayer, with many enhancements and new features:

- Watch online TV and local media with my customized FFmpeg 6.1; the source code of my customized FFmpeg 6.1 can be found in external/ffmpeg, in accordance with FFmpeg's license

- Record online TV to automatically generate videos (useful for short-video creators to generate short video material, but please respect the IPR of the original content creator/provider); record online TV's video/audio content to gather video/audio data that might be required or useful for AI R&D activity

- AI subtitle (real-time English subtitles for English online TV, aka OTT TV, by the great & excellent & amazing whisper.cpp). Please note that a Xiaomi 14 or another powerful Android phone is HIGHLY recommended for the AI subtitle feature; otherwise unexpected behavior may occur

- 2D graphic performance

- Set up a customized playlist and then use this software to watch the content of the customized playlist for R&D activity

- UI refactor (closer to a real commercial Android application; only English is currently supported as the UI language)

- Well-maintained "workbench" for ASR (Automatic Speech Recognition) researchers/developers/programmers who are interested in practising state-of-the-art AI tech (such as whisper.cpp) in real scenarios on Android phones/devices (PoC: real-time AI subtitle for online TV, aka OTT TV, on Xiaomi 14, finished from 03/05/2024 to 03/16/2024)

- Well-maintained "workbench" for LLM (Large Language Model) researchers/developers who are interested in practising state-of-the-art AI tech (such as llama.cpp) in real scenarios on Android phones/devices, or in running/experiencing LLM models (such as llama-2-7b, baichuan2-7b, qwen1_5-1_8b, gemma-2b) on Android phones/devices using the magic llama.cpp

- Well-maintained "workbench" for GGML beginners to study the internal mechanism of the GGML inference framework on Android phones/devices (PoC: Qualcomm QNN backend for ggml, finished from 03/29/2024 to 04/26/2024)

- Well-maintained "workbench" for NCNN beginners to study and practise the NCNN inference framework on Android phones/devices

- Well-maintained turn-key / self-contained project for AI researchers (who might not be familiar with regular Android software development), developers, and beginners focused on edge/device-side AI learning and R&D activity; some AI R&D activities (AI algorithm validation, AI model validation, performance benchmarks in the ASR, LLM, TTS, NLP, CV, ... fields) can be done very easily with Android Studio IDE and a powerful Android phone

(depend on https://github.com/zhouwg/kantv/issues/121)

git clone https://github.com/zhouwg/kantv.git

cd kantv

git checkout master

cd kantv

- Build docker image

      docker build build -t kantv --build-arg USER_ID=$(id -u) --build-arg GROUP_ID=$(id -g) --build-arg USER_NAME=$(whoami)

- Run docker container

      # map source code directory into docker container
      docker run -it --name=kantv --volume=`pwd`:/home/`whoami`/kantv kantv

      # in docker container
      . build/envsetup.sh
      ./build/prebuild-download.sh
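The volume mapping in the `docker run` step mounts the current directory at `/home/<user>/kantv` inside the container. As a minimal sketch of how that command is assembled, the helper below only prints the command for a given source directory and user name so the mapping can be inspected before running it. The function name `kantv_docker_run_cmd` is hypothetical and not part of the project.

```shell
# Hypothetical helper (not part of KanTV): print the docker run command
# for a given source directory and user name, without executing it.
kantv_docker_run_cmd() {
    src_dir="$1"
    user_name="$2"
    printf 'docker run -it --name=kantv --volume=%s:/home/%s/kantv kantv\n' \
        "$src_dir" "$user_name"
}

# From the repository root: show the command that would be run.
kantv_docker_run_cmd "$(pwd)" "$(whoami)"
```

Using `$(pwd)` and `$(whoami)` here is equivalent to the backtick form used in the step itself.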

- Prerequisites

  - tools & utilities

    Host OS information:

        uname -a
        Linux 5.8.0-43-generic #49~20.04.1-Ubuntu SMP Fri Feb 5 09:57:56 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

        cat /etc/issue
        Ubuntu 20.04.2 LTS \n \l

    Install the required packages:

        sudo apt-get update
        sudo apt-get install build-essential -y
        sudo apt-get install cmake -y
        sudo apt-get install curl -y
        sudo apt-get install wget -y
        sudo apt-get install python -y
        sudo apt-get install tcl expect -y
        sudo apt-get install nginx -y
        sudo apt-get install git -y
        sudo apt-get install vim -y
        sudo apt-get install spawn-fcgi -y
        sudo apt-get install u-boot-tools -y
        sudo apt-get install ffmpeg -y
        sudo apt-get install openssh-client -y
        sudo apt-get install nasm -y
        sudo apt-get install yasm -y
        sudo apt-get install openjdk-17-jdk -y

        sudo dpkg --add-architecture i386
        sudo apt-get install lib32z1 -y

        sudo apt-get install -y android-tools-adb android-tools-fastboot autoconf \
                automake bc bison build-essential ccache cscope curl device-tree-compiler \
                expect flex ftp-upload gdisk acpica-tools libattr1-dev libcap-dev \
                libfdt-dev libftdi-dev libglib2.0-dev libhidapi-dev libncurses5-dev \
                libpixman-1-dev libssl-dev libtool make \
                mtools netcat python-crypto python3-crypto python-pyelftools \
                python3-pycryptodome python3-pyelftools python3-serial \
                rsync unzip uuid-dev xdg-utils xterm xz-utils zlib1g-dev

        sudo apt-get install python3-pip -y
        sudo apt-get install indent -y
        pip3 install meson ninja

        echo "export PATH=/home/`whoami`/.local/bin:\$PATH" >> ~/.bashrc

    or run the script below after fetching the project's source code:

        ./build/prebuild.sh

  - Android Studio

    download and install Android Studio manually

  - vim settings

    borrowed from http://ffmpeg.org/developer.html#Editor-configuration

        set ai
        set nu
        set expandtab
        set tabstop=4
        set shiftwidth=4
        set softtabstop=4
        set noundofile
        set nobackup
        set fileformat=unix
        set undodir=~/.undodir
        set cindent
        set cinoptions=(0
        " Allow tabs in Makefiles.
        autocmd FileType make,automake set noexpandtab shiftwidth=8 softtabstop=8
        " Trailing whitespace and tabs are forbidden, so highlight them.
        highlight ForbiddenWhitespace ctermbg=red guibg=red
        match ForbiddenWhitespace /\s\+$\|\t/
        " Do not highlight spaces at the end of line while typing on that line.
        autocmd InsertEnter * match ForbiddenWhitespace /\t\|\s\+\%#\@<!$/

- Download android-ndk-r26c to prebuilts/toolchain; skip this step if android-ndk-r26c already exists

      . build/envsetup.sh
      ./build/prebuild-download.sh

- Modify ggml/CMakeLists.txt and ncnn/CMakeLists.txt accordingly if the target Android device is a Xiaomi 14 or another Qualcomm Snapdragon 8 Gen 3 SoC based Android phone

- Modify ggml/CMakeLists.txt and ncnn/CMakeLists.txt accordingly if the target Android phone is Qualcomm SoC based and the QNN backend should be enabled for the inference framework on it

- Remove the hardcoded debug flag in Android NDK (android-ndk issue)

      # open $ANDROID_NDK/build/cmake/android.toolchain.cmake for ndk < r23
      # or $ANDROID_NDK/build/cmake/android-legacy.toolchain.cmake for ndk >= r23
      # delete "-g" line
      list(APPEND ANDROID_COMPILER_FLAGS
        -g
        -DANDROID
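The "delete `-g` line" step above can also be scripted instead of edited by hand. The following is a minimal sketch, assuming the flag sits on a line of its own (as in the excerpt above); the helper name `strip_debug_flag` is hypothetical, and the layout of the toolchain file may differ between NDK releases, so the result should be reviewed before replacing the original file.

```shell
# Hypothetical helper (not part of KanTV or the NDK): copy a toolchain file,
# dropping every line that consists only of "-g" plus optional whitespace.
strip_debug_flag() {
    in_file="$1"
    out_file="$2"
    sed '/^[[:space:]]*-g[[:space:]]*$/d' "$in_file" > "$out_file"
}

# Assumed usage for ndk >= r23 (path taken from the comment above):
#   f="$ANDROID_NDK/build/cmake/android-legacy.toolchain.cmake"
#   strip_debug_flag "$f" "$f.tmp" && mv "$f.tmp" "$f"
```

Writing to a separate output file keeps the original untouched until the change has been checked.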

. build/envsetup.sh

- Option 1: Build APK from source code with Android Studio IDE

- Option 2: Build APK from source code from the command line

      . build/envsetup.sh
      lunch 1
      ./build-all.sh android
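Before starting a command-line build, it can help to confirm that the prerequisite tools are actually on `PATH`. The sketch below is an assumption about a useful pre-flight check rather than something the project ships; `missing_tools` is a hypothetical name, and the tool list in the example is only a sample from the prerequisites.

```shell
# Hypothetical helper (not part of KanTV): print each named tool
# that cannot be found on PATH; print nothing if all are present.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Example: check a few of the tools installed in the prerequisites step.
missing_tools git cmake wget curl
```

An empty result means every listed tool was found; any name printed should be installed before running the build.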

This project is a learning and research project, so the Android APK will not collect or upload user data from the Android device. The Android APK should work well on any mainstream Android phone (issue reports from various Android phones are greatly welcomed), and the following four permissions are required:

- Access to storage is required to generate necessary temporary files

- Access to device information is required to obtain current phone network status information, distinguishing whether the current network is Wi-Fi or mobile when playing online TV

- Access to camera is needed for AI Agent

- Access to mic(audio recorder) is needed for AI Agent

Here is a short video to demonstrate AI subtitle by running the great & excellent & amazing whisper.cpp on a Xiaomi 14 device - fully offline, on-device.

https://github.com/zhouwg/kantv/assets/6889919/2fabcb24-c00b-4289-a06e-05b98ecd22b8

Here is a screenshot to demonstrate LLM inference by running the magic llama.cpp on a Xiaomi 14 device - fully offline, on-device.

Here is a screenshot to demonstrate ASR inference by running the excellent whisper.cpp on a Xiaomi 14 device - fully offline, on-device.

Here are some screenshots to demonstrate CV inference by running the excellent ncnn on a Xiaomi 14 device - fully offline, on-device.

- improve the quality of Qualcomm QNN backend for GGML

- improve the performance of edge-AI inference on Android phone

- bugfix in UI layer (Java)

- bugfix in native layer (C/C++)

Be sure to review the open issues before contributing to project KanTV. We use GitHub issues for tracking requests and bugs; please see how to submit an issue in this project.

Issue reports from various Android-based phones, or even PRs to this project, are greatly welcomed.

- How to verify Qualcomm QNN backend for GGML on Qualcomm mobile SoC based android device

- How to setup customized KanTV server in local dev env

- How to create customized playlist for kantv apk

- How to integrate proprietary/open source codes to project KanTV for personal/proprietary/commercial R&D activity

- How to use whisper.cpp and ffmpeg to add subtitle to video

- How to reduce the size of apk

- How to sign apk

- How to validate AI algorithm/model on Android using this project

- Why focus on ggml & ncnn edge-AI inference framework

- What is the most difficult problem for this project

- Acknowledgement

- ChangeLog

- F.A.Q

- AI inference framework

- GGML by Georgi Gerganov

- NCNN by Tencent

- AI application engine

- ASR engine whisper.cpp by Georgi Gerganov

- LLM engine llama.cpp by Georgi Gerganov

Copyright (c) 2021 - 2023 Project KanTV

Copyright (c) 2024 - Authors of Project KanTV

Licensed under the Apache License 2.0 or later

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for kantv

Similar Open Source Tools

kantv

KanTV is an open-source project that focuses on studying and practicing state-of-the-art AI technology in real applications and scenarios, such as online TV playback, transcription, translation, and video/audio recording. It is derived from the original ijkplayer project and includes many enhancements and new features, including: * Watching online TV and local media using a customized FFmpeg 6.1. * Recording online TV to automatically generate videos. * Studying ASR (Automatic Speech Recognition) using whisper.cpp. * Studying LLM (Large Language Model) using llama.cpp. * Studying SD (Text to Image by Stable Diffusion) using stablediffusion.cpp. * Generating real-time English subtitles for English online TV using whisper.cpp. * Running/experiencing LLM on Xiaomi 14 using llama.cpp. * Setting up a customized playlist and using the software to watch the content for R&D activity. * Refactoring the UI to be closer to a real commercial Android application (currently only supports English). Some goals of this project are: * To provide a well-maintained "workbench" for ASR researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android). * To provide a well-maintained "workbench" for LLM researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android). * To create an Android "turn-key project" for AI experts/researchers (who may not be familiar with regular Android software development) to focus on device-side AI R&D activity, where part of the AI R&D activity (algorithm improvement, model training, model generation, algorithm validation, model validation, performance benchmark, etc.) can be done very easily using Android Studio IDE and a powerful Android phone.

serverless-rag-demo

The serverless-rag-demo repository showcases a solution for building a Retrieval Augmented Generation (RAG) system using Amazon Opensearch Serverless Vector DB, Amazon Bedrock, Llama2 LLM, and Falcon LLM. The solution leverages generative AI powered by large language models to generate domain-specific text outputs by incorporating external data sources. Users can augment prompts with relevant context from documents within a knowledge library, enabling the creation of AI applications without managing vector database infrastructure. The repository provides detailed instructions on deploying the RAG-based solution, including prerequisites, architecture, and step-by-step deployment process using AWS Cloudshell.

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

Windows-Use

Windows-Use is a powerful automation agent that interacts directly with the Windows OS at the GUI layer. It bridges the gap between AI agents and Windows to perform tasks such as opening apps, clicking buttons, typing, executing shell commands, and capturing UI state without relying on traditional computer vision models. It enables any large language model (LLM) to perform computer automation instead of relying on specific models for it.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on enhancing the detection process for auto-suggestion, enabling users to create detection workflows without coding. The tool offers an intuitive drag-and-drop interface for workflow adjustments and updates. Quark Agent, the core component, generates Quark Script code based on natural language input and feedback. The project is committed to providing a user-friendly experience for designing detection workflows through textual and visual methods. Various features are still under development and will be rolled out gradually.

Sunshine-AIO

Sunshine-AIO is an all-in-one step-by-step guide to set up Sunshine with all necessary tools for Windows users. It provides a dedicated display for game streaming, virtual monitor switching, automatic resolution adjustment, resource-saving features, game launcher integration, and stream management. The project aims to evolve into an AIO tool as it progresses, welcoming contributions from users.

tock

Tock is an open conversational AI platform for building bots. It offers a natural language processing open source stack compatible with various tools, a user interface for building stories and analytics, a conversational DSL for different programming languages, built-in connectors for text/voice channels, toolkits for custom web/mobile integration, and the ability to deploy anywhere in the cloud or on-premise with Docker.

svelte-commerce

Svelte Commerce is an open-source frontend for eCommerce, utilizing a PWA and headless approach with a modern JS stack. It supports integration with various eCommerce backends like MedusaJS, Woocommerce, Bigcommerce, and Shopify. The API flexibility allows seamless connection with third-party tools such as payment gateways, POS systems, and AI services. Svelte Commerce offers essential eCommerce features, is both SSR and SPA, superfast, and free to download and modify. Users can easily deploy it on Netlify or Vercel with zero configuration. The tool provides features like headless commerce, authentication, cart & checkout, TailwindCSS styling, server-side rendering, proxy + API integration, animations, lazy loading, search functionality, faceted filters, and more.

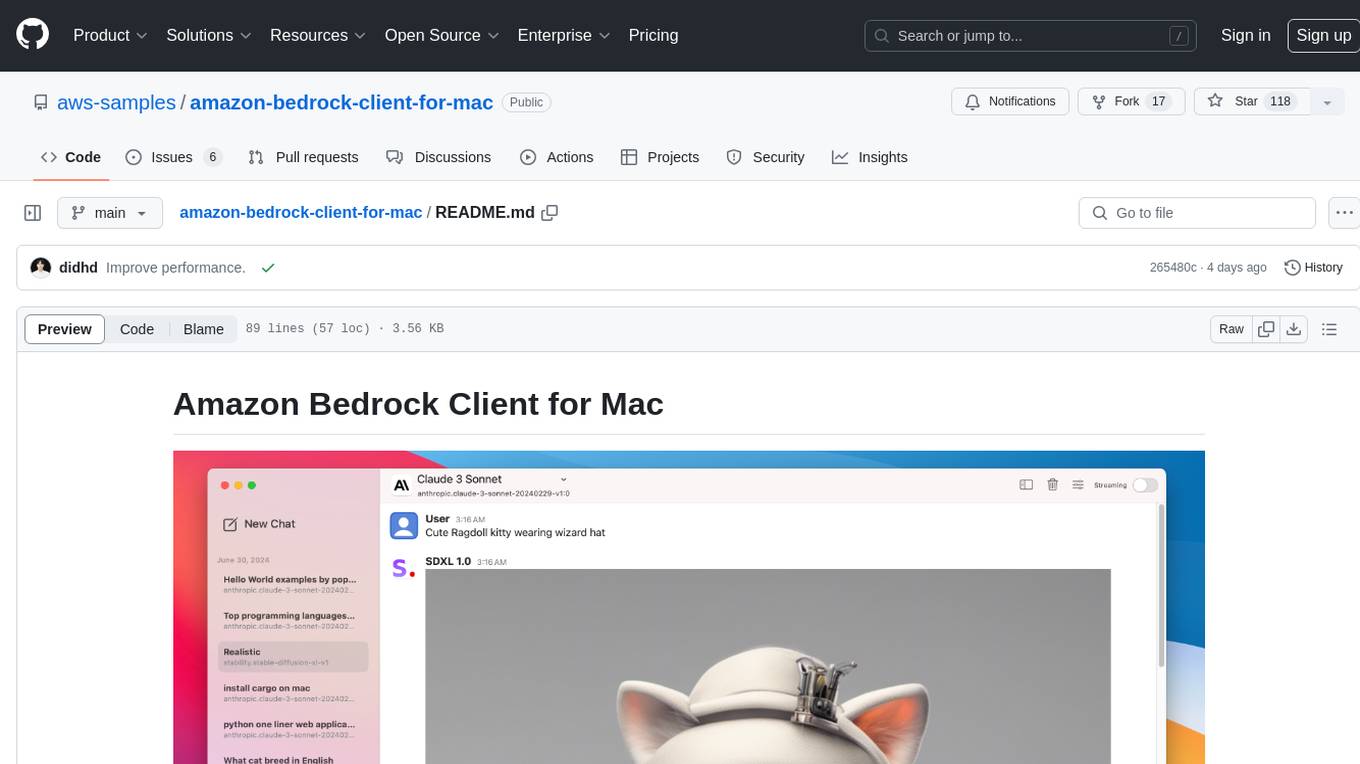

amazon-bedrock-client-for-mac

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop. It provides seamless interaction with multiple Amazon Bedrock models, real-time chat interface, easy model switching, support for various AI tasks, and native Dark Mode support. Built with SwiftUI for optimal performance and modern UI.

denser-retriever

Denser Retriever is an enterprise-grade AI retriever designed to streamline AI integration into applications, combining keyword-based searches, vector databases, and machine learning rerankers using xgboost. It provides state-of-the-art accuracy on MTEB Retrieval benchmarking and supports various heterogeneous retrievers for end-to-end applications like chatbots and semantic search.

open-autonomy

Open Autonomy is a framework for creating agent services that run as a multi-agent-system and offer enhanced functionalities on-chain. It enables executing complex operations like machine-learning algorithms in a decentralized, trust-minimized, transparent, and robust manner.

xpander.ai

xpander.ai is a Backend-as-a-Service for autonomous agents that abstracts the ops layer, allowing AI engineers to focus on behavior and outcomes. It provides managed agent hosting with version control and CI/CD, a fully managed PostgreSQL memory layer, and a library of 2,000+ functions. The platform features an AI native triggering system that processes inputs from various sources and delivers unified messages to agents. With support for any agent framework or SDK, including Agno and OpenAI, xpander.ai enables users to build intelligent, production-ready AI agents without dealing with infrastructure complexity.

modelscope-agent

ModelScope-Agent is a customizable and scalable Agent framework. A single agent has abilities such as role-playing, LLM calling, tool usage, planning, and memory. It mainly has the following characteristics: - **Simple Agent Implementation Process**: Simply specify the role instruction, LLM name, and tool name list to implement an Agent application. The framework automatically arranges workflows for tool usage, planning, and memory. - **Rich models and tools**: The framework is equipped with rich LLM interfaces, such as Dashscope and Modelscope model interfaces, OpenAI model interfaces, etc. Built in rich tools, such as **code interpreter**, **weather query**, **text to image**, **web browsing**, etc., make it easy to customize exclusive agents. - **Unified interface and high scalability**: The framework has clear tools and LLM registration mechanism, making it convenient for users to expand more diverse Agent applications. - **Low coupling**: Developers can easily use built-in tools, LLM, memory, and other components without the need to bind higher-level agents.

tidb.ai

TiDB.AI is a conversational search RAG (Retrieval-Augmented Generation) app based on TiDB Serverless Vector Storage. It provides an out-of-the-box and embeddable QA robot experience based on knowledge from official and documentation sites. The platform features a Perplexity-style Conversational Search page with an advanced built-in website crawler for comprehensive coverage. Users can integrate an embeddable JavaScript snippet into their website for instant responses to product-related queries. The tech stack includes Next.js, TypeScript, Tailwind CSS, shadcn/ui for design, TiDB for database storage, Kysely for SQL query building, NextAuth.js for authentication, Vercel for deployments, and LlamaIndex for the RAG framework. TiDB.AI is open-source under the Apache License, Version 2.0.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

xpert

Xpert is a powerful tool for data analysis and visualization. It provides a user-friendly interface to explore and manipulate datasets, perform statistical analysis, and create insightful visualizations. With Xpert, users can easily import data from various sources, clean and preprocess data, analyze trends and patterns, and generate interactive charts and graphs. Whether you are a data scientist, analyst, researcher, or student, Xpert simplifies the process of data analysis and visualization, making it accessible to users with varying levels of expertise.

For similar tasks

kantv

KanTV is an open-source project that focuses on studying and practicing state-of-the-art AI technology in real applications and scenarios, such as online TV playback, transcription, translation, and video/audio recording. It is derived from the original ijkplayer project and includes many enhancements and new features, including: * Watching online TV and local media using a customized FFmpeg 6.1. * Recording online TV to automatically generate videos. * Studying ASR (Automatic Speech Recognition) using whisper.cpp. * Studying LLM (Large Language Model) using llama.cpp. * Studying SD (Text to Image by Stable Diffusion) using stablediffusion.cpp. * Generating real-time English subtitles for English online TV using whisper.cpp. * Running/experiencing LLM on Xiaomi 14 using llama.cpp. * Setting up a customized playlist and using the software to watch the content for R&D activity. * Refactoring the UI to be closer to a real commercial Android application (currently only supports English). Some goals of this project are: * To provide a well-maintained "workbench" for ASR researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android). * To provide a well-maintained "workbench" for LLM researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android). * To create an Android "turn-key project" for AI experts/researchers (who may not be familiar with regular Android software development) to focus on device-side AI R&D activity, where part of the AI R&D activity (algorithm improvement, model training, model generation, algorithm validation, model validation, performance benchmark, etc.) can be done very easily using Android Studio IDE and a powerful Android phone.

ai-demos

The 'ai-demos' repository is a collection of example code from presentations focusing on building with AI and LLMs. It serves as a resource for developers looking to explore practical applications of artificial intelligence in their projects. The code snippets showcase various techniques and approaches to leverage AI technologies effectively. The repository aims to inspire and educate developers on integrating AI solutions into their applications.

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

AgentGPT

AgentGPT is a platform that allows users to configure and deploy autonomous AI agents. Users can name their own custom AI and set it on any goal. The AI will think of tasks, execute them, and learn from the results to reach the goal. The platform provides a demo experience, automatic setup CLI, and a tech stack including Next.js, FastAPI, Prisma, TailwindCSS, Zod, and more. AgentGPT is designed to help users easily create and deploy AI agents for various tasks.

openvino_build_deploy

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

sre

SmythOS is an operating system designed for building, deploying, and managing intelligent AI agents at scale. It provides a unified SDK and resource abstraction layer for various AI services, making it easy to scale and flexible. With an agent-first design, developer-friendly SDK, modular architecture, and enterprise security features, SmythOS offers a robust foundation for AI workloads. The system is built with a philosophy inspired by traditional operating system kernels, ensuring autonomy, control, and security for AI agents. SmythOS aims to make shipping production-ready AI agents accessible and open for everyone in the coming Internet of Agents era.

oreilly-ai-agents

This repository contains code for O'Reilly Live Online Training for AI Agents A-Z and Modern Automated AI Agents video series. It provides a guide to understanding, implementing, and managing AI agents, covering frameworks like CrewAI, LangChain, and AutoGen. Participants learn to build agents from scratch using prompt engineering techniques, deploy AI agents, evaluate performance, and make informed decisions in AI projects.

sagentic-af

Sagentic.ai Agent Framework is a tool for creating AI agents with hot reloading dev server. It allows users to spawn agents locally by calling specific endpoint. The framework comes with detailed documentation and supports contributions, issues, and feature requests. It is MIT licensed and maintained by Ahyve Inc.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.