Sunshine-AIO

An all-in-one step-by-step guide to set up Sunshine with additional tools.

Stars: 54

Sunshine-AIO is an all-in-one step-by-step guide to set up Sunshine with all necessary tools for Windows users. It provides a dedicated display for game streaming, virtual monitor switching, automatic resolution adjustment, resource-saving features, game launcher integration, and stream management. The project aims to evolve into an AIO tool as it progresses, welcoming contributions from users.

README:

An all-in-one step-by-step guide to set up Sunshine with all the tools it needs (Windows only at the moment).

(It's initially just a guide, but as it progresses, it will become more like an AIO tool.)

Contributions to this project are welcomed and highly appreciated.

There are several reasons to use this setup:

- A dedicated display for your game stream will be created by the Virtual Display Driver.

- Sunshine Virtual Monitor allows you to switch between your current desktop (or any number of displays you have) and the Virtual Display.

- It will also automatically adjust the resolution, quality, HDR option, and frame rate of the Virtual Display based on the client settings (Moonlight settings).

- To save resources for your gaming experience, it will deactivate your current displays and restore your original display setup once the stream is finished.

- Playnite lets you gather all your games from any platform (including games obtained elsewhere) in one launcher for your convenience.

- Playnite Watcher stops the stream when you close your game. (Sunshine does not support this natively.)

- It also lets you import all your games into Sunshine automatically with one click.

- Sunshine Installation

- Virtual Display Driver

- Sunshine Virtual Monitor

- Playnite Installation

- Playnite Watcher

- Enjoy

- Contributing

- License

- Acknowledgements

- Star History

Sunshine Installation

- Download Sunshine and install it on your computer. On Windows, download the file sunshine-windows-installer.exe.

- To stream remotely, make sure to open these ports in your router settings and forward them to your PC:

- TCP: 47984, 47989, 47990, 48010

- UDP: 47998, 47999, 48000
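As a rough way to double-check the forwarding, the TCP ports listed above can be probed from another machine with PowerShell's Test-NetConnection cmdlet. This is only a sketch: the function names Get-SunshinePort and Test-SunshineTcpPort and the host name my-pc.example are made up for illustration. The UDP ports cannot be checked this way because Test-NetConnection only tests TCP, and Sunshine has to be running for any port to answer.

```powershell
# Port list copied from the guide above; both function names are hypothetical.
function Get-SunshinePort {
    $tcp = 47984, 47989, 47990, 48010
    $udp = 47998, 47999, 48000
    foreach ($p in $tcp) { [pscustomobject]@{ Protocol = 'TCP'; Port = $p } }
    foreach ($p in $udp) { [pscustomobject]@{ Protocol = 'UDP'; Port = $p } }
}

# Probe only the TCP ports; Test-NetConnection has no UDP mode.
function Test-SunshineTcpPort {
    param([Parameter(Mandatory)][string]$ComputerName)
    Get-SunshinePort | Where-Object Protocol -eq 'TCP' | ForEach-Object {
        $ok = Test-NetConnection -ComputerName $ComputerName -Port $_.Port -InformationLevel Quiet
        [pscustomobject]@{ Port = $_.Port; Reachable = $ok }
    }
}

# Example (placeholder host name, run from outside your home network):
# Test-SunshineTcpPort -ComputerName 'my-pc.example'
```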

Virtual Display Driver

- Follow the Virtual Display Driver installation steps, then come back here when done.

- Disable the newly created display from Device Manager, or open an elevated terminal and run the command:

pnputil /disable-device /deviceid root\iddsampledriver

- If you plan to use Moonlight from a phone, make sure the resolutions of all your clients are listed in the C:\IddSampleDriver\option.txt file, and add any that are missing.
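Adding a missing resolution can be scripted along these lines. This is a sketch under a loud assumption: it treats option.txt as listing one display mode per line in the form "width, height, refresh", so check your own file before relying on it. The helper name Add-DisplayMode is hypothetical.

```powershell
# Hypothetical helper: returns the given lines, with a "width, height, refresh"
# entry appended when no equivalent entry exists yet.
# ASSUMPTION: option.txt lists one mode per line as "width, height, refresh".
function Add-DisplayMode {
    param(
        [string[]]$Lines = @(),
        [int]$Width,
        [int]$Height,
        [int]$Refresh
    )
    $wanted = "$Width,$Height,$Refresh"
    foreach ($line in $Lines) {
        # Compare with all whitespace removed so "1920, 1080, 60" also matches.
        if (($line -replace '\s', '') -eq $wanted) { return $Lines }
    }
    return @($Lines) + "$Width, $Height, $Refresh"
}

# Example (not run here; needs an elevated PowerShell to write to C:\):
# $path  = 'C:\IddSampleDriver\option.txt'
# $lines = Get-Content $path
# Add-DisplayMode -Lines $lines -Width 2400 -Height 1080 -Refresh 60 | Set-Content $path
```

The function works on an array of lines rather than on the file itself, so the result can be inspected before anything is written back.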

Sunshine Virtual Monitor

- Download Sunshine Virtual Monitor.

- Extract the sunshine-virtual-monitor-main.zip file to a safe location (if the folder is deleted, the tool will stop working) and open it.

In the next steps, you can either follow these quick steps or follow the original steps from sunshine-virtual-monitor.

- Download MultiMonitorTool for Windows 64-bit (recommended) or MultiMonitorTool for Windows 32-bit (older computers).

- Extract the multimonitortool*.zip file to a multimonitortool-x64 folder and copy this folder into the sunshine-virtual-monitor-main folder.

- Open an elevated PowerShell: press the Windows key, type powershell, and press Ctrl + Shift + Enter.

- Install the WindowsDisplayManager module by running the command:

Install-Module -Name WindowsDisplayManager

- To allow the scripts to run, change your execution policy from Default to RemoteSigned:

Set-ExecutionPolicy RemoteSigned

Source: PowerShell execution policies

- Download vsync-toggle and copy the file to the sunshine-virtual-monitor-main folder.

Follow the steps in Sunshine Setup.

(Tip) Copy and paste these commands into PowerShell to have the config.do_cmd and config.undo_cmd commands written for you:

$folderName = "sunshine-virtual-monitor-main"

$folderPath = Get-ChildItem -Path "C:\" -Directory -Filter $folderName -Recurse -ErrorAction SilentlyContinue | Select-Object -First 1

$setupPath = $folderPath.FullName + "\setup_sunvdm.ps1"

$teardownPath = $folderPath.FullName + "\teardown_sunvdm.ps1"

$sunvdmLogPath = $folderPath.FullName + "\sunvdm.log"

Write-Host "$(Clear-Host)config.do_cmd:`n`ncmd /C powershell.exe -File $setupPath %SUNSHINE_CLIENT_WIDTH% %SUNSHINE_CLIENT_HEIGHT% %SUNSHINE_CLIENT_FPS% %SUNSHINE_CLIENT_HDR% > $sunvdmLogPath 2>&1`n`n`n`nconfig.undo_cmd:`n`ncmd /C powershell.exe -File $teardownPath >> $sunvdmLogPath 2>&1`n`n`n`n"

If you moved the sunshine-virtual-monitor-main folder to a different drive, change the drive letter passed to -Path on the second line to match the new one, for example "D:\".
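If you already know where the folder lives, the recursive search of the whole drive can be skipped. The sketch below builds the same two command strings from a path you pass in. The function name Get-SunvdmCommand and the example path C:\Tools\sunshine-virtual-monitor-main are hypothetical, and like the tip above it does not quote paths, so a folder path containing spaces would need extra quoting.

```powershell
# Hypothetical helper: builds the config.do_cmd and config.undo_cmd strings
# from a known folder path, using plain string concatenation like the tip above.
function Get-SunvdmCommand {
    param([Parameter(Mandatory)][string]$FolderPath)
    $base     = $FolderPath.TrimEnd('\')
    $setup    = $base + '\setup_sunvdm.ps1'
    $teardown = $base + '\teardown_sunvdm.ps1'
    $log      = $base + '\sunvdm.log'
    [pscustomobject]@{
        DoCmd   = "cmd /C powershell.exe -File $setup %SUNSHINE_CLIENT_WIDTH% %SUNSHINE_CLIENT_HEIGHT% %SUNSHINE_CLIENT_FPS% %SUNSHINE_CLIENT_HDR% > $log 2>&1"
        UndoCmd = "cmd /C powershell.exe -File $teardown >> $log 2>&1"
    }
}

# Example with a placeholder path:
# Get-SunvdmCommand -FolderPath 'C:\Tools\sunshine-virtual-monitor-main' | Format-List
```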

Playnite Installation

Download Playnite, install it, and add all of your games.

Playnite Watcher

Download Playnite Watcher and extract it to a safe location.

Make sure to follow these steps: PlayNite Watcher Script Guide

Enjoy

Configure your Moonlight client to connect to Sunshine and enjoy optimized streaming :)

Contributing

Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch (git checkout -b feature/NewFeature)

- Commit your Changes (git commit -m 'Add some NewFeature')

- Push to the Branch (git push origin feature/NewFeature)

- Open a Pull Request

Thanks to all the contributors who have helped with this project.

License

Distributed under the MIT License. See LICENSE for more information.

Acknowledgements

Shoutout to LizardByte for the Sunshine repo: https://github.com/LizardByte/Sunshine

Shoutout to itsmikethetech for the Virtual Display Driver repo: https://github.com/itsmikethetech/Virtual-Display-Driver

Thanks to Cynary for the Sunshine Virtual Monitor scripts: https://github.com/Cynary/sunshine-virtual-monitor

Shoutout to JosefNemec for Playnite: https://github.com/JosefNemec/Playnite

Shoutout to Nonary for the PlayNiteWatcher script: https://github.com/Nonary/PlayNiteWatcher

Author/Maintainer: Garoh | Discord: garohrl

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Sunshine-AIO

Similar Open Source Tools

Sunshine-AIO

Sunshine-AIO is an all-in-one step-by-step guide to set up Sunshine with all necessary tools for Windows users. It provides a dedicated display for game streaming, virtual monitor switching, automatic resolution adjustment, resource-saving features, game launcher integration, and stream management. The project aims to evolve into an AIO tool as it progresses, welcoming contributions from users.

Windows-Use

Windows-Use is a powerful automation agent that interacts directly with the Windows OS at the GUI layer. It bridges the gap between AI agents and Windows to perform tasks such as opening apps, clicking buttons, typing, executing shell commands, and capturing UI state without relying on traditional computer vision models. It enables any large language model (LLM) to perform computer automation instead of relying on specific models for it.

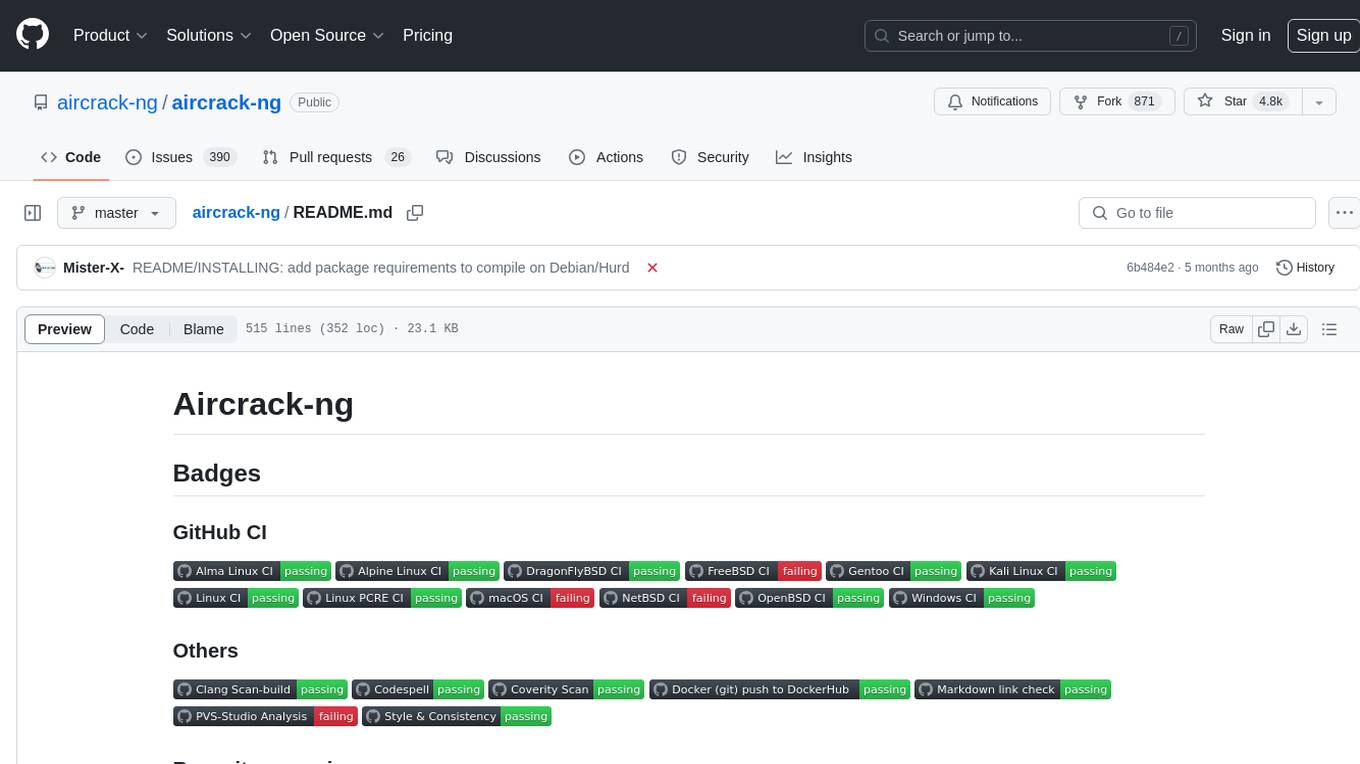

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

modelscope-agent

ModelScope-Agent is a customizable and scalable Agent framework. A single agent has abilities such as role-playing, LLM calling, tool usage, planning, and memory. It mainly has the following characteristics: - **Simple Agent Implementation Process**: Simply specify the role instruction, LLM name, and tool name list to implement an Agent application. The framework automatically arranges workflows for tool usage, planning, and memory. - **Rich models and tools**: The framework is equipped with rich LLM interfaces, such as Dashscope and Modelscope model interfaces, OpenAI model interfaces, etc. Built in rich tools, such as **code interpreter**, **weather query**, **text to image**, **web browsing**, etc., make it easy to customize exclusive agents. - **Unified interface and high scalability**: The framework has clear tools and LLM registration mechanism, making it convenient for users to expand more diverse Agent applications. - **Low coupling**: Developers can easily use built-in tools, LLM, memory, and other components without the need to bind higher-level agents.

llama-assistant

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

OutofFocus

Out of Focus v1.0 is a flexible tool in Gradio for image manipulation through prompt manipulation by reconstruction via diffusion inversion process. Users can modify images using this tool, which is the first version of the Image modification tool by Out of AI.

ai-toolkit

The AI Toolkit by Ostris is a collection of tools for machine learning, specifically designed for image generation, LoRA (latent representations of attributes) extraction and manipulation, and model training. It provides a user-friendly interface and extensive documentation to make it accessible to both developers and non-developers. The toolkit is actively under development, with new features and improvements being added regularly. Some of the key features of the AI Toolkit include: - Batch Image Generation: Allows users to generate a batch of images based on prompts or text files, using a configuration file to specify the desired settings. - LoRA (lierla), LoCON (LyCORIS) Extractor: Facilitates the extraction of LoRA and LoCON representations from pre-trained models, enabling users to modify and manipulate these representations for various purposes. - LoRA Rescale: Provides a tool to rescale LoRA weights, allowing users to adjust the influence of specific attributes in the generated images. - LoRA Slider Trainer: Enables the training of LoRA sliders, which can be used to control and adjust specific attributes in the generated images, offering a powerful tool for fine-tuning and customization. - Extensions: Supports the creation and sharing of custom extensions, allowing users to extend the functionality of the toolkit with their own tools and scripts. - VAE (Variational Auto Encoder) Trainer: Facilitates the training of VAEs for image generation, providing users with a tool to explore and improve the quality of generated images. The AI Toolkit is a valuable resource for anyone interested in exploring and utilizing machine learning for image generation and manipulation. Its user-friendly interface, extensive documentation, and active development make it an accessible and powerful tool for both beginners and experienced users.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

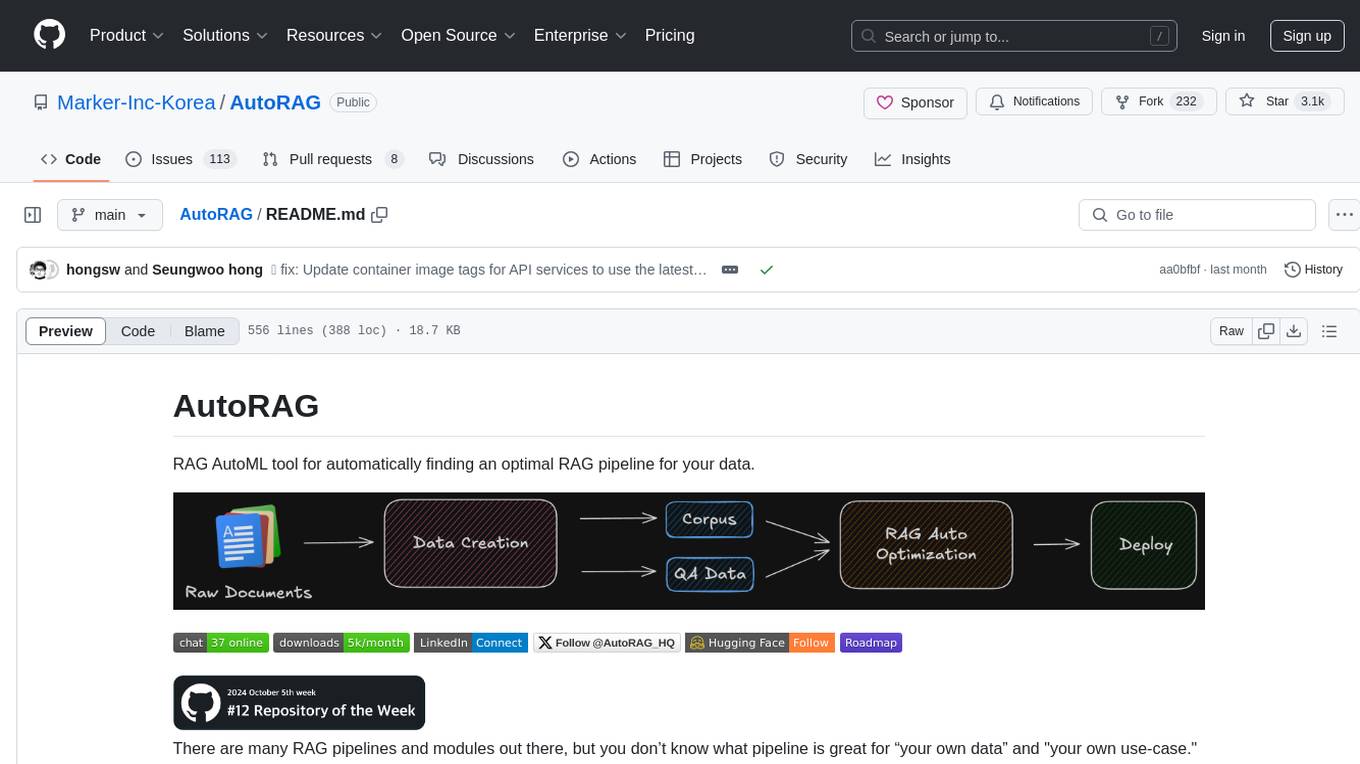

AutoRAG

AutoRAG is an AutoML tool designed to automatically find the optimal RAG pipeline for your data. It simplifies the process of evaluating various RAG modules to identify the best pipeline for your specific use-case. The tool supports easy evaluation of different module combinations, making it efficient to find the most suitable RAG pipeline for your needs. AutoRAG also offers a cloud beta version to assist users in running and optimizing the tool, along with building RAG evaluation datasets for a starting price of $9.99 per optimization.

open-autonomy

Open Autonomy is a framework for creating agent services that run as a multi-agent-system and offer enhanced functionalities on-chain. It enables executing complex operations like machine-learning algorithms in a decentralized, trust-minimized, transparent, and robust manner.

fiftyone

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

infinity

Infinity is a high-throughput, low-latency REST API for serving vector embeddings, supporting all sentence-transformer models and frameworks. It is developed under the MIT License and powers inference behind Gradient.ai. The API allows users to deploy models from SentenceTransformers, offers fast inference backends utilizing various accelerators, dynamic batching for efficient processing, correct and tested implementation, and easy-to-use API built on FastAPI with Swagger documentation. Users can embed text, rerank documents, and perform text classification tasks using the tool. Infinity supports various models from Huggingface and provides flexibility in deployment via CLI, Docker, Python API, and cloud services like dstack. The tool is suitable for tasks like embedding, reranking, and text classification.

claude-code.nvim

Claude Code Neovim Plugin is a seamless integration between Claude Code AI assistant and Neovim. It allows users to toggle Claude Code in a terminal window with a single key press, automatically detect and reload files modified by Claude Code, provide real-time buffer updates when files are changed externally, offer customizable window position and size, integrate with which-key, use git project root as working directory, maintain a modular code structure, provide type annotations with LuaCATS for better IDE support, offer configuration validation, and include a testing framework for reliability. The plugin creates a terminal buffer running the Claude Code CLI, sets up autocommands to detect file changes on disk, automatically reloads files modified by Claude Code, provides keymaps and commands for toggling the terminal, and detects git repositories to set the working directory to the git root.

openkf

OpenKF (Open Knowledge Flow) is an online intelligent customer service system. It is an open-source customer service system based on OpenIM, supporting LLM (Local Knowledgebase) customer service and multi-channel customer service. It is easy to integrate with third-party systems, deploy, and perform secondary development. The system provides features like login page, config page, dashboard page, platform page, and session page. Users can quickly get started with OpenKF by following the installation and run instructions. The architecture follows MVC design with a standardized directory structure. The community encourages involvement through community meetings, contributions, and development. OpenKF is licensed under the Apache 2.0 license.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

genius-ai

Genius is a modern Next.js 14 SaaS AI platform that provides a comprehensive folder structure for app development. It offers features like authentication, dashboard management, landing pages, API integration, and more. The platform is built using React JS, Next JS, TypeScript, Tailwind CSS, and integrates with services like Netlify, Prisma, MySQL, and Stripe. Genius enables users to create AI-powered applications with functionalities such as conversation generation, image processing, code generation, and more. It also includes features like Clerk authentication, OpenAI integration, Replicate API usage, Aiven database connectivity, and Stripe API/webhook setup. The platform is fully configurable and provides a seamless development experience for building AI-driven applications.

For similar tasks

Sunshine-AIO

Sunshine-AIO is an all-in-one step-by-step guide to set up Sunshine with all necessary tools for Windows users. It provides a dedicated display for game streaming, virtual monitor switching, automatic resolution adjustment, resource-saving features, game launcher integration, and stream management. The project aims to evolve into an AIO tool as it progresses, welcoming contributions from users.

For similar jobs

Sunshine-AIO

Sunshine-AIO is an all-in-one step-by-step guide to set up Sunshine with all necessary tools for Windows users. It provides a dedicated display for game streaming, virtual monitor switching, automatic resolution adjustment, resource-saving features, game launcher integration, and stream management. The project aims to evolve into an AIO tool as it progresses, welcoming contributions from users.

better-genshin-impact

BetterGI is a project based on computer vision technology, which aims to make Genshin Impact better. It can automatically pick up items, skip dialogues, automatically select options, automatically submit items, close pop-up pages, etc. When talking to Katherine, it can automatically receive the "Daily Commission" rewards and automatically re-dispatch. When the automatic plot function is turned on, this function will take effect, and the invitation options will be automatically selected. AI recognizes automatic casting, automatically reels in when the fish is hooked, and automatically completes the fishing progress. Help you easily complete the Seven Saint Summoning character invitation, weekly visitor challenge and other PVE content. Automatically use the "King Tree Blessing" with the `Z` key, and use the principle of refreshing wood by going online and offline to hang up a backpack full of wood. Write combat scripts to let the team fight automatically according to your strategy. Fully automatic secret realm hangs up to restore physical strength, automatically enters the secret realm to open the key, fight, walk to the ancient tree and receive rewards. Click the teleportation point on the map, or if there is a teleportation point in the list that appears after clicking, it will automatically click the teleportation point and teleport. Set a shortcut key, and long press to continuously rotate the perspective horizontally (of course you can also use it to rotate the grass god). Quickly switch between "Details" and "Enhance" pages to skip the display of holy relic enhancement results and quickly +20. You can quickly purchase items in the store in full quantity, which is suitable for quickly clearing event redemptions,塵歌壺 store redemptions, etc.

vector_companion

Vector Companion is an AI tool designed to act as a virtual companion on your computer. It consists of two personalities, Axiom and Axis, who can engage in conversations based on what is happening on the screen. The tool can transcribe audio output and user microphone input, take screenshots, and read text via OCR to create lifelike interactions. It requires specific prerequisites to run on Windows and uses VB Cable to capture audio. Users can interact with Axiom and Axis by running the main script after installation and configuration.