choco-builder

ChocoBuilder (Chocolate Factory) is a cutting-edge LLM toolkit designed to empower you in creating your very own AI assistant.Chocolate Factory 是一款开源的 LLM 应用开发框架,旨在帮助您轻松打造强大的软件开发 SDLC + LLM 生成助手。无论您是需要生成前端页面、后端 API、SQL 图表,还是测试用例数据,Chocolate Factory 都能满足您的需求。

Stars: 182

ChocoBuilder (aka Chocolate Factory) is an open-source LLM application development framework designed to help you easily create powerful software development SDLC + LLM generation assistants. It provides modules for integration into JVM projects, usage with RAGScript, and local deployment examples. ChocoBuilder follows a Domain Driven Problem-Solving design philosophy with key concepts like ProblemClarifier, ProblemAnalyzer, SolutionDesigner, SolutionReviewer, and SolutionExecutor. It offers use cases for desktop/IDE, server, and Android applications, with examples for frontend design, semantic code search, testcase generation, and code interpretation.

README:

ChocoBuilder (origin Chocolate Factory) 是一款开源的 LLM 应用开发框架,旨在帮助您轻松打造强大的软件开发 SDLC + LLM 生成助手。

For native (Android/iOS/Embedded device) SDK: see in https://github.com/unit-mesh/edge-infer

方式 1:集成到 JVM 项目中

模块列表:https://central.sonatype.com/namespace/cc.unitmesh

dependencies {

// 核心模块

implementation 'cc.unitmesh:cocoa-core:0.4.6'

// Pinecone

implementation 'cc.unitmesh:store-pinecone:0.4.6'

// ElasticSearch

implementation 'cc.unitmesh:store-elasticsearch:0.4.6'

//...其它模块

}更多示例见:examples/

方式 2:使用 RAGScript

@file:DependsOn("cc.unitmesh:rag-script:0.4.6")

import cc.unitmesh.rag.*

rag {

indexing {

val chunks = document("README.md").split()

store.indexing(chunks)

}

querying {

store.findRelevant("workflow dsl design ")

.lowInMiddle()

.also {

println(it)

}

}

}方式 3:本地部署示例

git clone https://github.com/unit-mesh/chocolate-factory

# modify OPENAI_API_KEY and OPENAI_HOST in docker-compose.yml

docker-compose upDesktop/IDE:

- https://github.com/unit-mesh/auto-dev - The AI-powered coding wizard with multilingual support 🌐, auto code generation 🏗️, and a helpful bug-slaying assistant

- https://github.com/phodal/shire - AI Coding Agent Language

Server:

Android:

See in https://framework.unitmesh.cc/ or see in documents

The key concepts of ChocoBuilder are:

(PS: Origin made by Michael Plöd at Aligning organization and architecture with strategic DDD)

A user's problem is processed by the following steps:

- Online Demo: https://framework.unitmesh.cc/ (TODO)

- Video Demo: https://www.bilibili.com/video/BV1T14y1C7p2

- Gif Demo:

- 步骤 1:ProblemClarifier:使用响应式布局,编写一个聊天页面

- 步骤 1.1:ProblemClarifier:左边是一个导航,中间是聊天区,聊天区的下方是一个输入按钮。

- 步骤 2:SolutionDesigner:请确认以下的设计是否符合您的要求。如果符合,请回复"YES",如果不符合,请提出你的要求。

- 步骤 3:SolutionExecutor:生成一个聊天页面

- 步骤 1:ProblemAnalyzer 分析用户的需求,转为成多个语义化的查询

- 中文、英文、HyDE 模式

- 步骤 2:SolutionExecutor:根据用户的需求,从数据库中检索出最相关的代码片段,由 ChatGPT 做总结

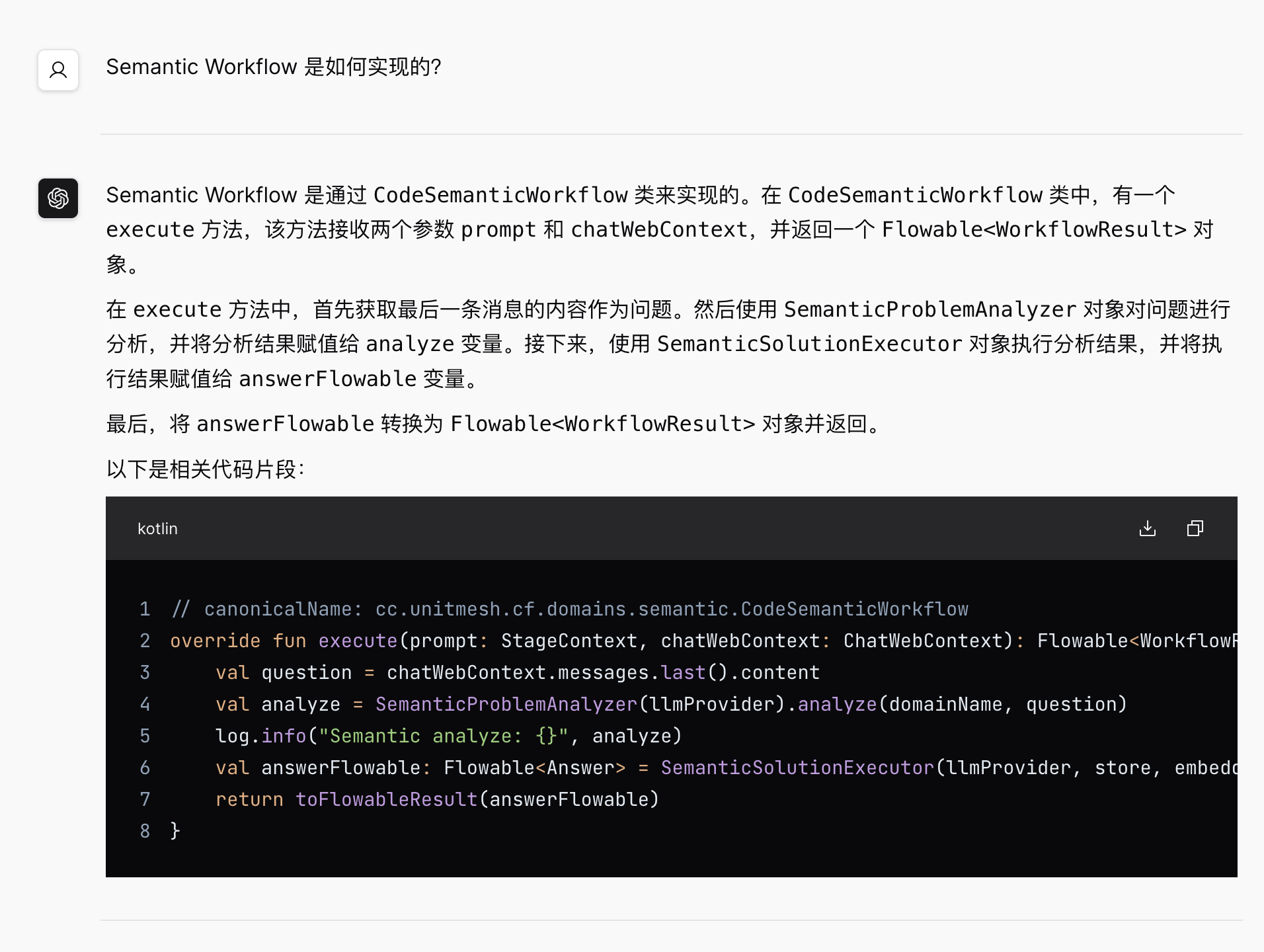

示例输入:Semantic Workflow 是如何实现的?

最终输出:

- 步骤 1:ProblemAnalyzer 分析用户的需求,确认是否是一个测试用例生成的需求

- [x] 多 Temperature 模式:TemperatureMode.Default, TemperatureMode.Creative

- 步骤 2:SolutionDesigner 设计测试用例生成的方案

- 步骤 3:SolutionReviewer 确认方案是否符合用户的需求

示例输入:用户发表文章

最终输出:

- 步骤 1:SolutionExecutor

输出示例:

1 2 3 4 5 6 7 8 9

2 4 6 8 10 12 14 16 18

3 6 9 12 15 18 21 24 27

4 8 12 16 20 24 28 32 36

5 10 15 20 25 30 35 40 45

6 12 18 24 30 36 42 48 54

7 14 21 28 35 42 49 56 63

8 16 24 32 40 48 56 64 72

9 18 27 36 45 54 63 72 81 生成一个 2023 年上半年电费图,信息如下:###1~6 月:201.2,222,234.3,120.2,90,90.4###

过程代码:

%use lets-plot

import kotlin.math.PI

import kotlin.random.Random

val incomeData = mapOf(

"x" to listOf("一月", "二月", "三月", "四月", "五月", "六月"),

"y" to listOf(201.2, 222, 234.3, 120.2, 90, 94.4)

)

letsPlot(incomeData) { x = "x"; y = "y" } +

geomBar(stat = Stat.identity) +

geomText(labelFormat = "\${.2f}") { label = "y"; } +

ggtitle("2023 年上半年电费")

最终输出:

RAG relevant modules were inspired by

Some RAG modules based on LangChain4j and Spring AI which is licensed under the Apache License 2.0.

This code is distributed under the MPL 2.0 license. See LICENSE in this directory.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for choco-builder

Similar Open Source Tools

choco-builder

ChocoBuilder (aka Chocolate Factory) is an open-source LLM application development framework designed to help you easily create powerful software development SDLC + LLM generation assistants. It provides modules for integration into JVM projects, usage with RAGScript, and local deployment examples. ChocoBuilder follows a Domain Driven Problem-Solving design philosophy with key concepts like ProblemClarifier, ProblemAnalyzer, SolutionDesigner, SolutionReviewer, and SolutionExecutor. It offers use cases for desktop/IDE, server, and Android applications, with examples for frontend design, semantic code search, testcase generation, and code interpretation.

chocolate-factory

Chocolate Factory is an open-source LLM application development framework designed to help you easily create powerful software development SDLC + LLM assistants. It provides a set of modules for integration into JVM projects and offers RAGScript for querying and local deployment examples. The tool follows a domain-driven problem-solving approach with key concepts like ProblemClarifier, ProblemAnalyzer, SolutionDesigner, SolutionReviewer, and SolutionExecutor. It supports use cases in desktop/IDE, server, and Android development, with a focus on AI-powered coding assistance and semantic search capabilities.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can be run on a variety of hardware, including CPUs, GPUs, and TPUs.

Verbiverse

Verbiverse is a tool that uses a large language model to assist in reading PDFs and watching videos, aimed at improving language proficiency. It provides a more convenient and efficient way to use large models through predefined prompts, designed for those looking to enhance their language skills. The tool analyzes unfamiliar words and sentences in foreign language PDFs or video subtitles, providing better contextual understanding compared to traditional dictionary translations or ambiguous meanings. It offers features such as automatic loading of subtitles, word analysis by clicking or double-clicking, and a word database for collecting words. Users can run the tool on Windows x86_64 or ubuntu_22.04 x86_64 platforms by downloading the precompiled packages or by cloning the source code and setting up a virtual environment with Python. It is recommended to use a local model or smaller PDF files for testing due to potential token consumption issues with large files.

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

qianfan-starter

WenXin-Starter is a spring-boot-starter for Baidu's 'WenXin Workshop' large model, facilitating quick integration of Baidu's AI capabilities. It provides complete integration with WenXin Workshop's official API documentation, supports WenShengTu, built-in conversation memory, and supports conversation streaming. It also supports QPS control for individual models and queuing mechanism, with upcoming plugin support.

auto-round

AutoRound is an advanced weight-only quantization algorithm for low-bits LLM inference. It competes impressively against recent methods without introducing any additional inference overhead. The method adopts sign gradient descent to fine-tune rounding values and minmax values of weights in just 200 steps, often significantly outperforming SignRound with the cost of more tuning time for quantization. AutoRound is tailored for a wide range of models and consistently delivers noticeable improvements.

feast

Feast is an open source feature store for machine learning, providing a fast path to manage infrastructure for productionizing analytic data. It allows ML platform teams to make features consistently available, avoid data leakage, and decouple ML from data infrastructure. Feast abstracts feature storage from retrieval, ensuring portability across different model training and serving scenarios.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

langchain-rust

LangChain Rust is a library for building applications with Large Language Models (LLMs) through composability. It provides a set of tools and components that can be used to create conversational agents, document loaders, and other applications that leverage LLMs. LangChain Rust supports a variety of LLMs, including OpenAI, Azure OpenAI, Ollama, and Anthropic Claude. It also supports a variety of embeddings, vector stores, and document loaders. LangChain Rust is designed to be easy to use and extensible, making it a great choice for developers who want to build applications with LLMs.

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

For similar tasks

choco-builder

ChocoBuilder (aka Chocolate Factory) is an open-source LLM application development framework designed to help you easily create powerful software development SDLC + LLM generation assistants. It provides modules for integration into JVM projects, usage with RAGScript, and local deployment examples. ChocoBuilder follows a Domain Driven Problem-Solving design philosophy with key concepts like ProblemClarifier, ProblemAnalyzer, SolutionDesigner, SolutionReviewer, and SolutionExecutor. It offers use cases for desktop/IDE, server, and Android applications, with examples for frontend design, semantic code search, testcase generation, and code interpretation.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

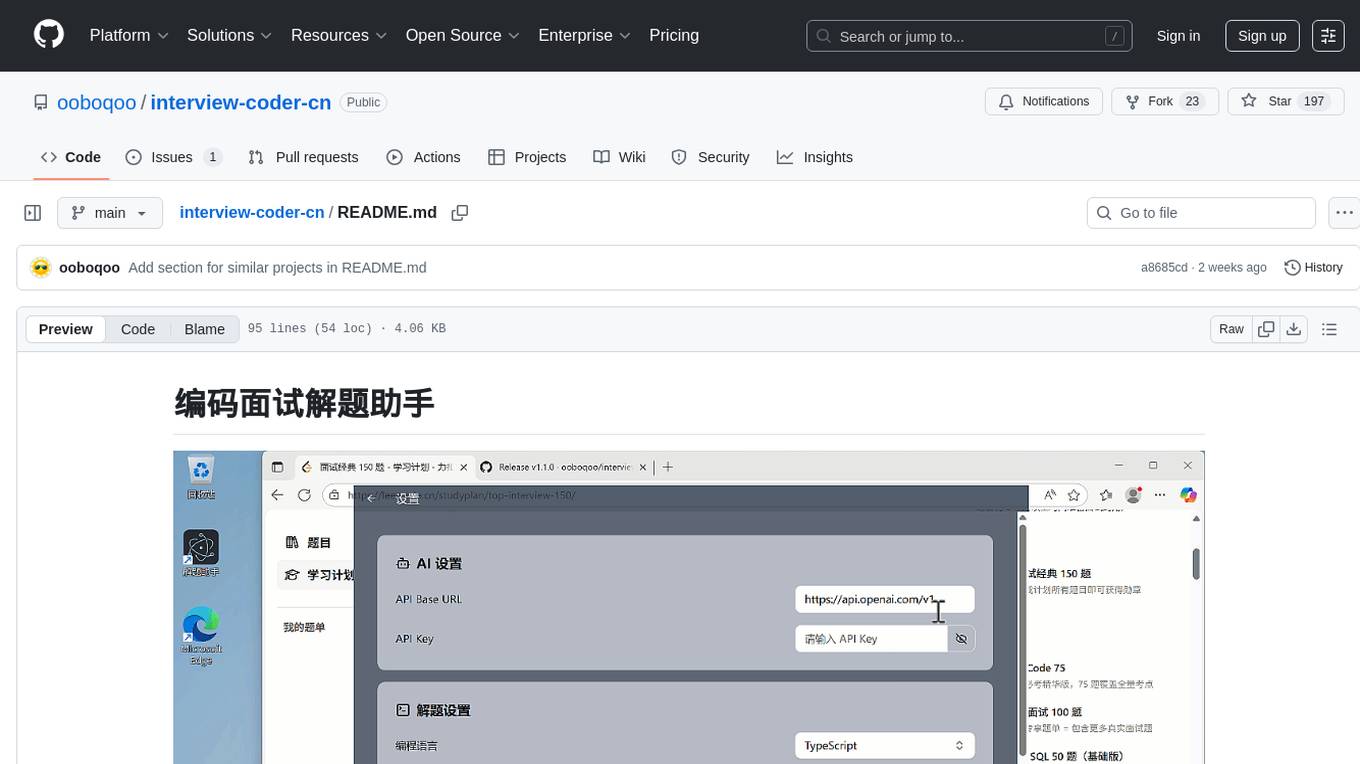

interview-coder-cn

This is a coding problem-solving assistant for Chinese users, tailored to the domestic AI ecosystem, simple and easy to use. It provides real-time problem-solving ideas and code analysis for coding interviews, avoiding detection during screen sharing. Users can also extend its functionality for other scenarios by customizing prompt words. The tool supports various programming languages and has stealth capabilities to hide its interface from interviewers even when screen sharing.

evolving-agents

A toolkit for agent autonomy, evolution, and governance enabling agents to learn from experience, collaborate, communicate, and build new tools within governance guardrails. It focuses on autonomous evolution, agent self-discovery, governance firmware, self-building systems, and agent-centric architecture. The toolkit leverages existing frameworks to enable agent autonomy and self-governance, moving towards truly autonomous AI systems.

pai-opencode

PAI-OpenCode is a complete port of Daniel Miessler's Personal AI Infrastructure (PAI) to OpenCode, an open-source, provider-agnostic AI coding assistant. It brings modular capabilities, dynamic multi-agent orchestration, session history, and lifecycle automation to personalize AI assistants for users. With support for 75+ AI providers, PAI-OpenCode offers dynamic per-task model routing, full PAI infrastructure, real-time session sharing, and multiple client options. The tool optimizes cost and quality with a 3-tier model strategy and a 3-tier research system, allowing users to switch presets for different routing strategies. PAI-OpenCode's architecture preserves PAI's design while adapting to OpenCode, documented through Architecture Decision Records (ADRs).

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.