VT.ai

VT.ai - Minimal multimodal AI chat app with dynamic conversation routing

Stars: 66

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

README:

Multimodal AI chat application with semantic-based conversation routing

Supported AI Model Providers:

- OpenAI (GPT-o1, GPT-o3, GPT-4.5, GPT-4o)

- Anthropic (Claude 3.5, Claude 3.7 Sonnet)

- Google (Gemini 1.5/2.0/2.5 Pro/Flash series)

- DeepSeek (DeepSeek R1 and V3 series)

- Meta (Llama 3 & 4, including Maverick & Scout)

- Cohere (Command, Command-R, Command-R-Plus)

- Local Models via Ollama (Llama3, Phi-3, Mistral, DeepSeek R1)

- Groq (Llama 3 70B, Mixtral 8x7B)

- OpenRouter (unified access to multiple providers)

Core Capabilities:

- Semantic-based routing using embedding-based classification

- Multi-modal interactions across text, image, and audio

- Vision analysis for images and URLs

- Image generation with DALL-E 3

- Text-to-Speech with multiple voice models

- Assistant framework with code interpreter

- Thinking mode with transparent reasoning steps

- Real-time streaming responses

- Provider-agnostic model switching

VT.ai implements a modular architecture centered on a semantic routing system that directs user queries to specialized handlers:

    ┌────────────────┐     ┌─────────────────┐     ┌────────────────────────┐
    │  User Request  │────▶│ Semantic Router │────▶│ Route-Specific Handler │
    └────────────────┘     └─────────────────┘     └────────────────────────┘
                                    │                           │
                                    ▼                           ▼
                             ┌──────────────┐           ┌──────────────────┐
                             │ Model Select │◀─────────▶│   Provider API   │
                             └──────────────┘           │ (OpenAI, Gemini, │
                                    │                   │ Anthropic, etc.) │
                                    ▼                   └──────────────────┘
                             ┌──────────────┐
                             │   Response   │
                             │  Processing  │
                             └──────────────┘
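The first two stages of this flow (classify the query, then hand it to a route-specific handler) can be sketched in a few lines. This is a minimal illustration, not VT.ai's implementation. The `Route` enum, the example utterances, the handlers, and the threshold are all hypothetical, and a toy bag-of-words vector stands in for the FastEmbed embeddings the project uses.

```python
import math
import re
from collections import Counter
from enum import Enum
from typing import Callable, Optional


class Route(Enum):
    # Hypothetical names for the five routing categories listed in this README.
    TEXT_PROCESSING = "text-processing"
    IMAGE_GENERATION = "image-generation"
    VISION_ANALYSIS = "vision-analysis"
    CASUAL_CONVERSATION = "casual-conversation"
    CURIOUS = "curious"


# Illustrative example utterances per route (assumed, not taken from the project).
EXAMPLES: dict[Route, list[str]] = {
    Route.TEXT_PROCESSING: ["summarize this article", "translate this text to french"],
    Route.IMAGE_GENERATION: ["generate an image of a cat", "draw a picture of a sunset"],
    Route.VISION_ANALYSIS: ["what is in this photo", "describe the uploaded image"],
    Route.CASUAL_CONVERSATION: ["hello how are you", "good morning"],
    Route.CURIOUS: ["why is the sky blue", "how do airplanes fly"],
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify(query: str, threshold: float = 0.3) -> Optional[Route]:
    """Return the route whose closest example clears the threshold, else None."""
    q = embed(query)
    best_route, best_score = None, 0.0
    for route, utterances in EXAMPLES.items():
        score = max(cosine(q, embed(u)) for u in utterances)
        if score > best_score:
            best_route, best_score = route, score
    return best_route if best_score >= threshold else None


# Hypothetical route-specific handlers; unmatched queries fall back to plain chat.
HANDLERS: dict[Route, Callable[[str], str]] = {
    Route.IMAGE_GENERATION: lambda q: f"image pipeline <- {q}",
    Route.VISION_ANALYSIS: lambda q: f"vision pipeline <- {q}",
}


def dispatch(query: str) -> str:
    handler = HANDLERS.get(classify(query), lambda q: f"chat model <- {q}")
    return handler(query)
```

The threshold is the main design lever: a query that resembles no example falls through to the default chat path rather than being forced into the nearest route.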

- Semantic Router (`/src/router/`)
  - Vector-based query classification system using FastEmbed embeddings
  - Routes user queries to specialized handlers using the `SemanticRouterType` enum
  - Supports five distinct routing categories:
    - Text processing (summaries, translations, analysis)
    - Image generation (DALL-E prompt crafting)
    - Vision analysis (image interpretation)
    - Casual conversation (social interactions)
    - Curious inquiries (informational requests)

- Model Management (`/src/utils/llm_settings_config.py`)
  - Unified API abstractions through LiteLLM
  - Provider-agnostic model switching with dynamic parameters
  - Centralized configuration for model settings and routing

- Conversation Handling (`/src/utils/conversation_handlers.py`)
  - Streaming response processing with backpressure handling
  - Multi-modal content parsing and rendering
  - Thinking mode implementation showing reasoning steps
  - Error handling with graceful fallbacks

- Assistant Framework (`/src/assistants/mino/`)
  - Tool use capabilities with function calling
  - Code interpreter integration for computation
  - File attachment processing with multiple formats
  - Assistant state management

- Media Processing (`/src/utils/media_processors.py`)
  - Image generation pipeline for DALL-E 3
  - Vision analysis with cross-provider model support
  - Audio transcription and Text-to-Speech integration
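To make the Model Management idea concrete, here is a small sketch of provider-agnostic switching: one alias table, one request builder, and the provider chosen only by the model string. The `ModelProfile` class, the aliases, and the default parameters are assumptions for illustration and are not taken from `llm_settings_config.py`. The model strings follow LiteLLM's `provider/model` naming style.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model settings kept in one central place."""
    model: str                # model string in LiteLLM's naming style
    temperature: float = 0.7
    stream: bool = True


# Illustrative aliases only; the real project may name and tune these differently.
PROFILES: dict[str, ModelProfile] = {
    "openai": ModelProfile("gpt-4o"),
    "gemini": ModelProfile("gemini/gemini-1.5-pro"),
    "local": ModelProfile("ollama/llama3", temperature=0.2),
}


def build_request(alias: str, prompt: str, **overrides) -> dict:
    """Build keyword arguments for a chat completion call.

    With LiteLLM installed, the result could be passed along as
    litellm.completion(**request); that call is left out so the
    sketch runs without network access or API keys.
    """
    profile = PROFILES[alias]
    request = {
        "model": profile.model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": profile.temperature,
        "stream": profile.stream,
    }
    request.update(overrides)  # per-call "dynamic parameters"
    return request
```

Switching providers then means changing only the alias, for example `build_request("local", "Hello")` versus `build_request("gemini", "Hello")`, while the calling code stays the same.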

Prerequisites:

- Python 3.11+ (specified in `.python-version`)

- uv for dependency management

- Ollama (optional, for local models)

# Clone repository

git clone https://github.com/vinhnx/VT.ai.git

cd VT.ai

# Setup environment using uv

uv venv

source .venv/bin/activate # Linux/Mac

.venv\Scripts\activate # Windows

# Install dependencies using uv

uv pip install -e . # Install main dependencies

uv pip install -e ".[dev]" # Optional: Install development dependencies

# Configure environment

cp .env.example .env

# Edit .env with your API keys

Edit the .env file with your API keys for the models you intend to use:

# Required for basic functionality (at least one of these)

OPENAI_API_KEY=your-openai-key

GEMINI_API_KEY=your-gemini-key

# Optional providers (enable as needed)

ANTHROPIC_API_KEY=your-anthropic-key

COHERE_API_KEY=your-cohere-key

GROQ_API_KEY=your-groq-key

OPENROUTER_API_KEY=your-openrouter-key

MISTRAL_API_KEY=your-mistral-key

HUGGINGFACE_API_KEY=your-huggingface-key

# For local models

OLLAMA_HOST=http://localhost:11434

# Activate the virtual environment (if not already active)

source .venv/bin/activate # Linux/Mac

.venv\Scripts\activate # Windows

# Launch the interface

chainlit run src/app.py -w

The -w flag enables auto-reloading during development.
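Before launching, it can be useful to confirm which provider keys from the .env step are actually visible to the process. The following is a hedged sketch, not part of VT.ai: the helper names are hypothetical, the variable names are the ones listed in the configuration step, and it assumes the variables have already been loaded into the environment.

```python
import os
from typing import Mapping, Optional

# Environment variable names as listed in the configuration step of this README.
PROVIDER_KEYS = {
    "OpenAI": "OPENAI_API_KEY",
    "Gemini": "GEMINI_API_KEY",
    "Anthropic": "ANTHROPIC_API_KEY",
    "Cohere": "COHERE_API_KEY",
    "Groq": "GROQ_API_KEY",
    "OpenRouter": "OPENROUTER_API_KEY",
    "Mistral": "MISTRAL_API_KEY",
    "HuggingFace": "HUGGINGFACE_API_KEY",
}

# The README asks for at least one of these for basic functionality.
REQUIRED_ANY = ("OPENAI_API_KEY", "GEMINI_API_KEY")


def configured_providers(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return provider names whose key is set to a non-blank value."""
    env = os.environ if env is None else env
    return [name for name, var in PROVIDER_KEYS.items() if env.get(var, "").strip()]


def has_required_key(env: Optional[Mapping[str, str]] = None) -> bool:
    """True if at least one of the keys needed for basic functionality is set."""
    env = os.environ if env is None else env
    return any(env.get(var, "").strip() for var in REQUIRED_ANY)


if __name__ == "__main__":
    print("Configured providers:", ", ".join(configured_providers()) or "none")
    if not has_required_key():
        print("Set OPENAI_API_KEY or GEMINI_API_KEY before launching.")
```

Passing a plain dictionary instead of relying on `os.environ` keeps the check easy to test in isolation.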

The project uses uv for faster and more reliable dependency management:

# Add a new dependency

uv pip install package-name

# Update a dependency

uv pip install --upgrade package-name

# Export dependencies to requirements.txt

uv pip freeze > requirements.txt

# Install from requirements.txt

uv pip install -r requirements.txt

To customize the semantic router for specific use cases:

python src/router/trainer.py

This utility updates the layers.json file with new routing rules and requires an OpenAI API key to generate embeddings.

| Shortcut | Action |
|---|---|
| Ctrl+/ | Switch model provider |
| Ctrl+, | Open settings panel |
| Ctrl+L | Clear conversation history |

- Standard Chat
  - Access to all configured LLM providers
  - Dynamic conversation routing based on query classification
  - Support for text, image, and audio inputs
  - Advanced thinking mode with reasoning trace (use "" tag)

- Assistant Mode (Beta)
  - Code interpreter for computations and data analysis
  - File attachment support (PDF/CSV/Images)
  - Persistent conversation threads
  - Function calling for external integrations

- Image Generation: Generate images through prompts ("Generate an image of...")

- Image Analysis: Upload or provide URL for image interpretation

- Text Processing: Request summaries, translations, or content transformation

- Voice Interaction: Use speech recognition for input and TTS for responses

- Thinking Mode: Access step-by-step reasoning from the models

| Category | Models |
|---|---|
| Chat | GPT-o1, GPT-o3 Mini, GPT-4o, Claude 3.5/3.7, Gemini 2.0/2.5 |
| Vision | GPT-4o, Gemini 1.5 Pro/Flash, Llama3.2 Vision |
| Image Gen | DALL-E 3 |
| TTS | GPT-4o mini TTS, TTS-1, TTS-1-HD |
| Local | Llama3, Mistral, DeepSeek R1 (1.5B to 70B) |

# Activate the virtual environment

source .venv/bin/activate # Linux/Mac

.venv\Scripts\activate # Windows

# Install development dependencies

uv pip install -e ".[dev]"

# Format code

black .

# Run linting

flake8 src/

isort src/

# Run tests

pytest

# Add a project dependency

uv pip install package-name

# Update pyproject.toml manually after confirming compatibility

# Add a development dependency

uv pip install package-name

# Update pyproject.toml's project.optional-dependencies.dev section

# Sync all project dependencies after pulling updates

uv pip install -e .

uv pip install -e ".[dev]"

- Fork the repository

- Create a feature branch (git checkout -b feature/new-capability)

- Add type hints for new functions

- Update documentation to reflect changes

- Submit a Pull Request with comprehensive description

MIT License - See LICENSE for details.

- Chainlit - Chat interface framework

- LiteLLM - Model abstraction layer

- SemanticRouter - Intent classification

- FastEmbed - Embedding models for routing

I'm @vinhnx on the internet.

Thank you, and have a great day!


Similar Open Source Tools


zcf

ZCF (Zero-Config Claude-Code Flow) is a tool that provides zero-configuration, one-click setup for Claude Code with bilingual support, intelligent agent system, and personalized AI assistant. It offers an interactive menu for easy operations and direct commands for quick execution. The tool supports bilingual operation with automatic language switching and customizable AI output styles. ZCF also includes features like BMad Workflow for enterprise-grade workflow system, Spec Workflow for structured feature development, CCR (Claude Code Router) support for proxy routing, and CCometixLine for real-time usage tracking. It provides smart installation, complete configuration management, and core features like professional agents, command system, and smart configuration. ZCF is cross-platform compatible, supports Windows and Termux environments, and includes security features like dangerous operation confirmation mechanism.

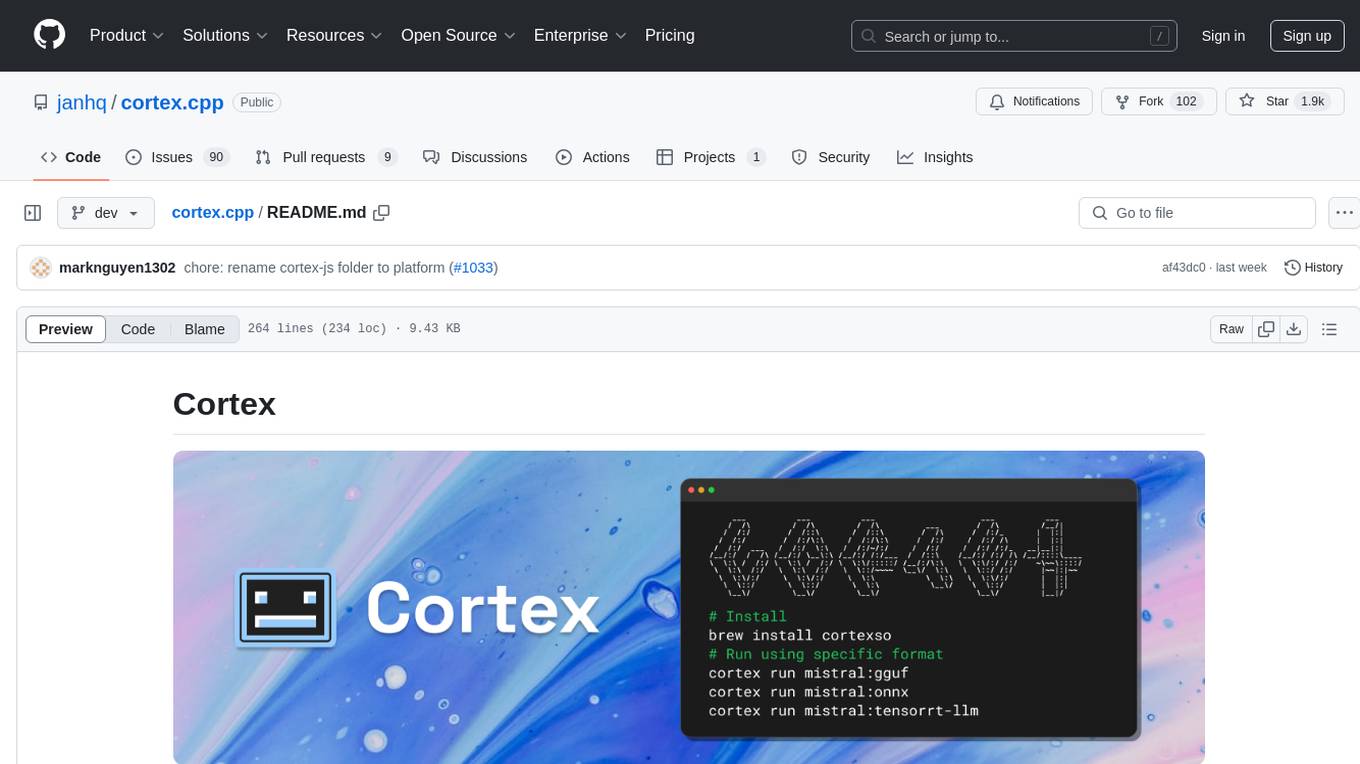

cortex.cpp

Cortex is a C++ AI engine with a Docker-like command-line interface and client libraries. It supports running AI models using ONNX, TensorRT-LLM, and llama.cpp engines. Cortex can function as a standalone server or be integrated as a library. The tool provides support for various engines and models, allowing users to easily deploy and interact with AI models. It offers a range of CLI commands for managing models, embeddings, and engines, as well as a REST API for interacting with models. Cortex is designed to simplify the deployment and usage of AI models in C++ applications.

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

BrowserAI

BrowserAI is a production-ready tool that allows users to run AI models directly in the browser, offering simplicity, speed, privacy, and open-source capabilities. It provides WebGPU acceleration for fast inference, zero server costs, offline capability, and developer-friendly features. Perfect for web developers, companies seeking privacy-conscious AI solutions, researchers experimenting with browser-based AI, and hobbyists exploring AI without infrastructure overhead. The tool supports various AI tasks like text generation, speech recognition, and text-to-speech, with pre-configured popular models ready to use. It offers a simple SDK with multiple engine support and seamless switching between MLC and Transformers engines.

nexa-sdk

Nexa SDK is a comprehensive toolkit supporting ONNX and GGML models for text generation, image generation, vision-language models (VLM), and text-to-speech (TTS) capabilities. It offers an OpenAI-compatible API server with JSON schema mode and streaming support, along with a user-friendly Streamlit UI. Users can run Nexa SDK on any device with a Python environment, and GPU acceleration is supported. The toolkit provides model support, a conversion engine, and an inference engine for various tasks, along with features that differentiate it from other tools.

auto-subs

Auto-subs is a tool designed to automatically transcribe editing timelines using OpenAI Whisper and Stable-TS for extreme accuracy. It generates subtitles in a custom style, is completely free, and runs locally within DaVinci Resolve. It works on Mac, Linux, and Windows, supporting both Free and Studio versions of Resolve. Users can jump to positions on the timeline using the Subtitle Navigator and translate from any language to English. The tool provides a user-friendly interface for creating and customizing subtitles for video content.

pipecat

Pipecat is an open-source framework designed for building generative AI voice bots and multimodal assistants. It provides code building blocks for interacting with AI services, creating low-latency data pipelines, and transporting audio, video, and events over the Internet. Pipecat supports various AI services like speech-to-text, text-to-speech, image generation, and vision models. Users can implement new services and contribute to the framework. Pipecat aims to simplify the development of applications like personal coaches, meeting assistants, customer support bots, and more by providing a complete framework for integrating AI services.

LongLLaVA

LongLLaVA is a tool for scaling multi-modal LLMs to 1000 images efficiently via hybrid architecture. It includes stages for single-image alignment, instruction-tuning, and multi-image instruction-tuning, with evaluation through a command line interface and model inference. The tool aims to achieve GPT-4V level capabilities and beyond, providing reproducibility of results and benchmarks for efficiency and performance.

daytona

Daytona is a secure and elastic infrastructure tool designed for running AI-generated code. It offers lightning-fast infrastructure with sub-90ms sandbox creation, separated and isolated runtime for executing AI code with zero risk, massive parallelization for concurrent AI workflows, programmatic control through various APIs, unlimited sandbox persistence, and OCI/Docker compatibility. Users can create sandboxes using Python or TypeScript SDKs, run code securely inside the sandbox, and clean up the sandbox after execution. Daytona is open source under the GNU Affero General Public License and welcomes contributions from developers.

SG-Nav

SG-Nav is an online 3D scene graph prompting tool designed for LLM-based zero-shot object navigation. It proposes a framework that constructs an online 3D scene graph to prompt LLMs, allowing direct application to various scenes and categories without the need for training.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

MAVIS

MAVIS (Math Visual Intelligent System) is an AI-driven application that allows users to analyze visual data such as images and generate interactive answers based on them. It can perform complex mathematical calculations, solve programming tasks, and create professional graphics. MAVIS supports Python for coding and frameworks like Matplotlib, Plotly, Seaborn, Altair, NumPy, Math, SymPy, and Pandas. It is designed to make projects more efficient and professional.

llm-context.py

LLM Context is a tool designed to assist developers in quickly injecting relevant content from code/text projects into Large Language Model chat interfaces. It leverages `.gitignore` patterns for smart file selection and offers a streamlined clipboard workflow using the command line. The tool also provides direct integration with Large Language Models through the Model Context Protocol (MCP). LLM Context is optimized for code repositories and collections of text/markdown/html documents, making it suitable for developers working on projects that fit within an LLM's context window. The tool is under active development and aims to enhance AI-assisted development workflows by harnessing the power of Large Language Models.

AiToEarn

AiToEarn is a one-click publishing tool for multiple self-media platforms such as Douyin, Xiaohongshu, Video Number, and Kuaishou. It allows users to publish videos with ease, observe popular content across the web, and view rankings of explosive articles on Xiaohongshu. The tool is also capable of providing daily and weekly rankings of popular content on Xiaohongshu, Douyin, Video Number, and Kuaishou. In progress features include expanding publishing parameters to support short video e-commerce, adding an AI tool ranking list, enabling AI automatic comments, and AI comment search.

For similar tasks

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

modelfusion

ModelFusion is an abstraction layer for integrating AI models into JavaScript and TypeScript applications, unifying the API for common operations such as text streaming, object generation, and tool usage. It provides features to support production environments, including observability hooks, logging, and automatic retries. You can use ModelFusion to build AI applications, chatbots, and agents. ModelFusion is a non-commercial open source project that is community-driven. You can use it with any supported provider. ModelFusion supports a wide range of models including text generation, image generation, vision, text-to-speech, speech-to-text, and embedding models. ModelFusion infers TypeScript types wherever possible and validates model responses. ModelFusion provides an observer framework and logging support. ModelFusion ensures seamless operation through automatic retries, throttling, and error handling mechanisms. ModelFusion is fully tree-shakeable, can be used in serverless environments, and only uses a minimal set of dependencies.

freeGPT

freeGPT provides free access to text and image generation models. It supports various models, including gpt3, gpt4, alpaca_7b, falcon_40b, prodia, and pollinations. The tool offers both asynchronous and non-asynchronous interfaces for text completion and image generation. It also features an interactive Discord bot that provides access to all the models in the repository. The tool is easy to use and can be integrated into various applications.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

ai-flow

AI Flow is an open-source, user-friendly UI application that lets you connect multiple AI models together, drawing on multiple AI APIs such as OpenAI, StabilityAI and Replicate. In a nutshell, AI Flow provides a visual platform for crafting and managing AI-driven workflows, thereby facilitating diverse and dynamic AI interactions.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker hub using Github Actions.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

OpenAI-DotNet

OpenAI-DotNet is a simple C# .NET client library for OpenAI to use through their RESTful API. It is independently developed and not an official library affiliated with OpenAI. Users need an OpenAI API account to utilize this library. The library targets .NET 6.0 and above, working across various platforms like console apps, winforms, wpf, asp.net, etc., and on Windows, Linux, and Mac. It provides functionalities for authentication, interacting with models, assistants, threads, chat, audio, images, files, fine-tuning, embeddings, and moderations.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.