Learn_Prompting

Prompt Engineering, Generative AI, and LLM Guide by Learn Prompting | Join our discord for the largest Prompt Engineering learning community

Stars: 4330

Learn Prompting is a platform offering free resources, courses, and webinars to master prompt engineering and generative AI. It provides a Prompt Engineering Guide, courses on Generative AI, workshops, and the HackAPrompt competition. The platform also offers AI Red Teaming and AI Safety courses, research reports on prompting techniques, and welcomes contributions in various forms such as content suggestions, translations, artwork, and typo fixes. Users can locally develop the website using Visual Studio Code, Git, and Node.js, and run it in development mode to preview changes.

README:

Learn prompt engineering and generative AI with our free resources, courses, and on-demand webinars.

Website • Discord • Twitter (X) • LinkedIn • Newsletter • Free ChatGPT Course • Free Prompt Engineering Guide • Course Catalog • Book a Demo • Contact us

The Learn Prompting team are creators of:

- The free Prompt Engineering Guide, cited by OpenAI and Google.

- 15 courses on Generative AI to help you develop cutting-edge AI skills.

- On-demand workshops and training for individuals and businesses.

- HackAPrompt, the largest AI red-teaming competition ever.

- 🏆 HackAPrompt 2.0 is here with $500,000 in prizes and 5 exciting tracks! Join the waitlist and learn more in this article.

- 🎓 We’ve launched a cohort-based AI Red Teaming and AI Safety course! Enroll here.

- 💼 Our team has hosted workshops at OpenAI, Microsoft, Deloitte, Dropbox, and more. Contact us for custom solutions.

- The Prompt Report: A Systematic Survey of Prompting Techniques (blog post): The most comprehensive study of prompting techniques to date.

- Ignore This Title and HackAPrompt: Exposing Systemic Vulnerabilities of LLMs: Insights from analyzing over 600K adversarial prompts across state-of-the-art LLMs.

We welcome contributions of all kinds! Here’s how you can help:

- Suggest new content ideas or improvements.

- Translate resources into other languages.

- Contribute artwork or additional resources.

- Help fix typos or improve clarity.

Every contribution is appreciated, no matter how big or small! ❤️

Before you start, ensure you have the following installed:

- Visual Studio Code

- Git

-

Node.js (version 18.0.0 or higher,

node -v)

If you're on macOS or Linux, you can use Homebrew, a package manager, to install the necessary tools.

To begin:

- Clone the repository from GitHub:

git clone https://github.com/trigaten/Learn_Prompting_nextjs.git

- Navigate to the project folder:

cd Learn_Prompting_nextjs

Once the setup is complete, you can run the website locally to preview your changes:

- Ensure you are using Node.js version 18.0.0 or higher:

node -v

- Install the required Node.js modules:

npm install

- Run the website in development mode:

npm run dev

This will start a local development server, and your changes will be reflected live in the browser.

We’re grateful for all the amazing contributions from our community! 🙌 Check out our contributors below:

Use the provided GitHub citation in this repository:

@software{Schulhoff_Learn_Prompting_2022,

author = {Schulhoff, Sander and Community Contributors},

month = dec,

title = {{Learn Prompting}},

url = {https://github.com/trigaten/Learn_Prompting},

year = {2022}

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Learn_Prompting

Similar Open Source Tools

Learn_Prompting

Learn Prompting is a platform offering free resources, courses, and webinars to master prompt engineering and generative AI. It provides a Prompt Engineering Guide, courses on Generative AI, workshops, and the HackAPrompt competition. The platform also offers AI Red Teaming and AI Safety courses, research reports on prompting techniques, and welcomes contributions in various forms such as content suggestions, translations, artwork, and typo fixes. Users can locally develop the website using Visual Studio Code, Git, and Node.js, and run it in development mode to preview changes.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

MarkFlowy

MarkFlowy is a lightweight and feature-rich Markdown editor with built-in AI capabilities. It supports one-click export of conversations, translation of articles, and obtaining article abstracts. Users can leverage large AI models like DeepSeek and Chatgpt as intelligent assistants. The editor provides high availability with multiple editing modes and custom themes. Available for Linux, macOS, and Windows, MarkFlowy aims to offer an efficient, beautiful, and data-safe Markdown editing experience for users.

OpenDevin

OpenDevin is an open-source project aiming to replicate Devin, an autonomous AI software engineer capable of executing complex engineering tasks and collaborating actively with users on software development projects. The project aspires to enhance and innovate upon Devin through the power of the open-source community. Users can contribute to the project by developing core functionalities, frontend interface, or sandboxing solutions, participating in research and evaluation of LLMs in software engineering, and providing feedback and testing on the OpenDevin toolset.

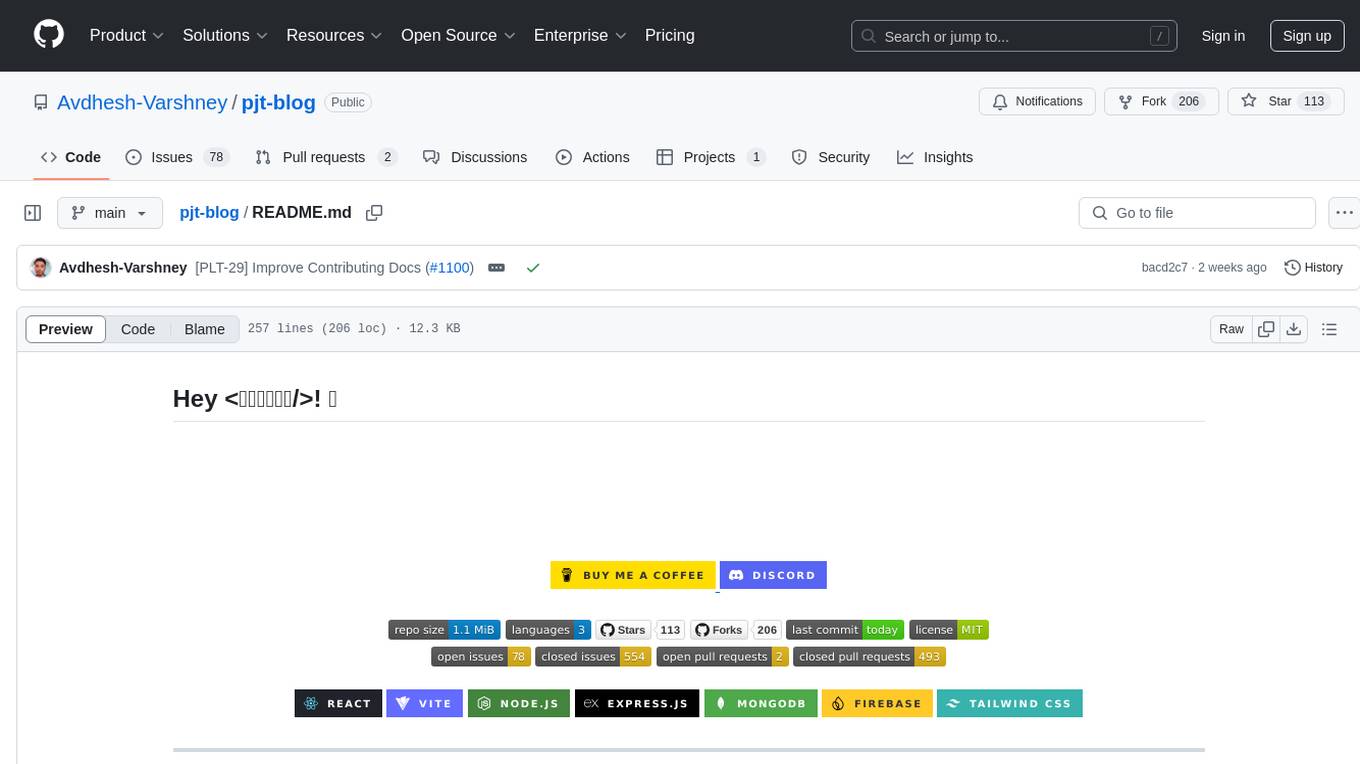

project-blog

Welcome to the Blog Script Project, a collaborative platform for developers and writers to create, manage, and share content. With features like Markdown support, submodule integration, customizable templates, project contribution workflow, global visibility, community discussions, SEO optimization, and role-based dashboard, Blog Script enhances collaboration and visibility for your work. You can contribute by adding new projects, improving existing projects, updating documentation, fixing bugs, optimizing, and ensuring code readability. Follow the contribution guidelines to star the repository, find tasks, fork the repository, make changes, add screenshots, submit a pull request, and contribute to the open-source community. Additionally, you can add your project as a submodule by following the provided guidelines. Join us, contribute, and grow together!

lerobot

LeRobot is a state-of-the-art AI library for real-world robotics in PyTorch. It aims to provide models, datasets, and tools to lower the barrier to entry to robotics, focusing on imitation learning and reinforcement learning. LeRobot offers pretrained models, datasets with human-collected demonstrations, and simulation environments. It plans to support real-world robotics on affordable and capable robots. The library hosts pretrained models and datasets on the Hugging Face community page.

ChatDev

ChatDev is a virtual software company powered by intelligent agents like CEO, CPO, CTO, programmer, reviewer, tester, and art designer. These agents collaborate to revolutionize the digital world through programming. The platform offers an easy-to-use, highly customizable, and extendable framework based on large language models, ideal for studying collective intelligence. ChatDev introduces innovative methods like Iterative Experience Refinement and Experiential Co-Learning to enhance software development efficiency. It supports features like incremental development, Docker integration, Git mode, and Human-Agent-Interaction mode. Users can customize ChatChain, Phase, and Role settings, and share their software creations easily. The project is open-source under the Apache 2.0 License and utilizes data licensed under CC BY-NC 4.0.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

biochatter

Generative AI models have shown tremendous usefulness in increasing accessibility and automation of a wide range of tasks. This repository contains the `biochatter` Python package, a generic backend library for the connection of biomedical applications to conversational AI. It aims to provide a common framework for deploying, testing, and evaluating diverse models and auxiliary technologies in the biomedical domain. BioChatter is part of the BioCypher ecosystem, connecting natively to BioCypher knowledge graphs.

liveblocks

Liveblocks provides customizable pre-built features for human and AI collaboration, used to make your product multiplayer, engaging, and AI-ready. All without derailing your roadmap. Liveblocks offers ready-to-use features like Comments, Text Editor, AI Copilots, Presence, and Notifications, along with the ability to host and scale local-first sync engines. It also provides packages and SDKs for various libraries and frameworks to add collaborative experiences to your product.

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

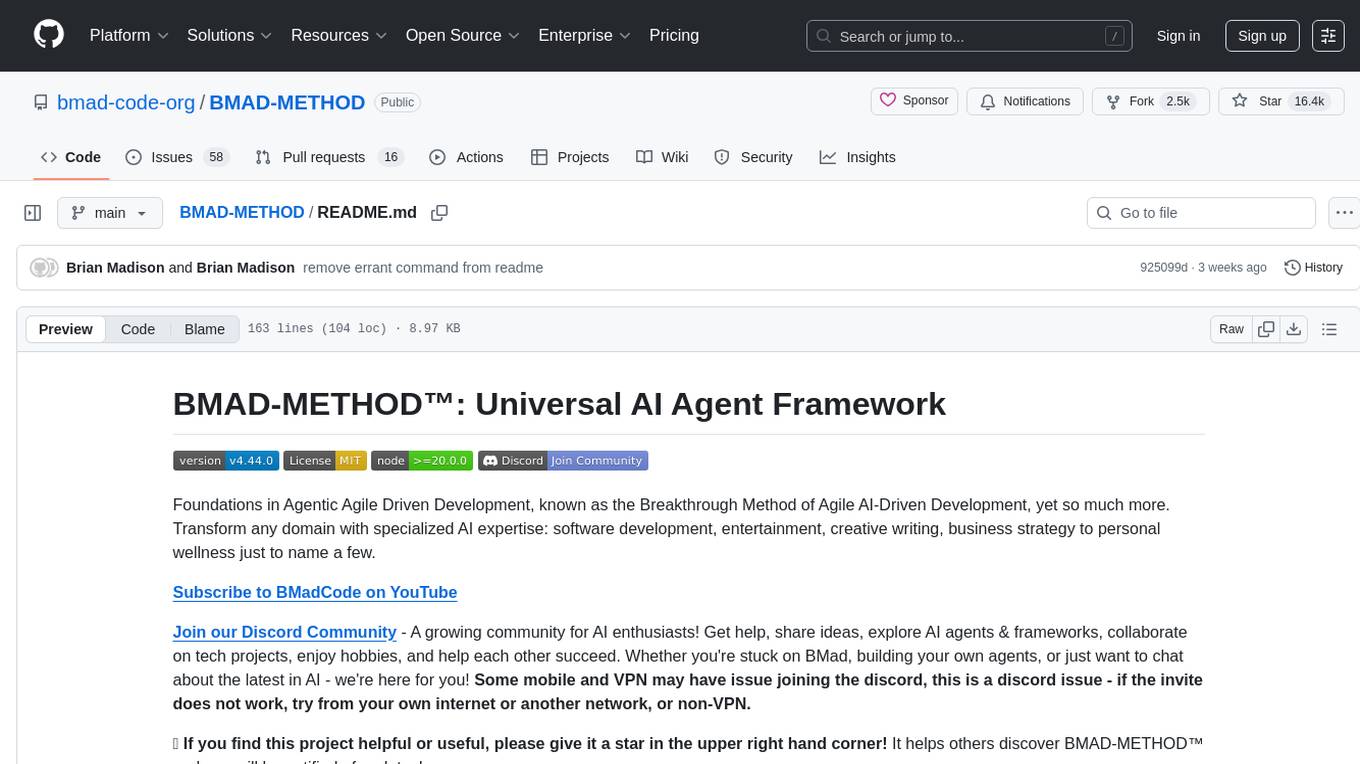

BMAD-METHOD

BMAD-METHOD™ is a universal AI agent framework that revolutionizes Agile AI-Driven Development. It offers specialized AI expertise across various domains, including software development, entertainment, creative writing, business strategy, and personal wellness. The framework introduces two key innovations: Agentic Planning, where dedicated agents collaborate to create detailed specifications, and Context-Engineered Development, which ensures complete understanding and guidance for developers. BMAD-METHOD™ simplifies the development process by eliminating planning inconsistency and context loss, providing a seamless workflow for creating AI agents and expanding functionality through expansion packs.

openrl

OpenRL is an open-source general reinforcement learning research framework that supports training for various tasks such as single-agent, multi-agent, offline RL, self-play, and natural language. Developed based on PyTorch, the goal of OpenRL is to provide a simple-to-use, flexible, efficient and sustainable platform for the reinforcement learning research community. It supports a universal interface for all tasks/environments, single-agent and multi-agent tasks, offline RL training with expert dataset, self-play training, reinforcement learning training for natural language tasks, DeepSpeed, Arena for evaluation, importing models and datasets from Hugging Face, user-defined environments, models, and datasets, gymnasium environments, callbacks, visualization tools, unit testing, and code coverage testing. It also supports various algorithms like PPO, DQN, SAC, and environments like Gymnasium, MuJoCo, Atari, and more.

AI-Engineering.academy

AI Engineering Academy aims to provide a structured learning path for individuals looking to learn Applied AI effectively. The platform offers multiple roadmaps covering topics like Retrieval Augmented Generation, Fine-tuning, and Deployment. Each roadmap equips learners with the knowledge and skills needed to excel in applied GenAI. Additionally, the platform will feature Hands-on End-to-End AI projects in the future.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

For similar tasks

Learn_Prompting

Learn Prompting is a platform offering free resources, courses, and webinars to master prompt engineering and generative AI. It provides a Prompt Engineering Guide, courses on Generative AI, workshops, and the HackAPrompt competition. The platform also offers AI Red Teaming and AI Safety courses, research reports on prompting techniques, and welcomes contributions in various forms such as content suggestions, translations, artwork, and typo fixes. Users can locally develop the website using Visual Studio Code, Git, and Node.js, and run it in development mode to preview changes.

ai-driven-dev-community

AI Driven Dev Community is a repository aimed at helping developers become more efficient by utilizing AI tools in their daily coding tasks. It provides a collection of tools, prompts, snippets, and agents for developers to integrate AI into their workflow. The repository is regularly updated with new resources and focuses on best practices for using AI in development work. Users can find tools like Espanso, ChatGPT, GitHub Copilot, and VSCode recommended for enhancing their coding experience. Additionally, the repository offers guidance on customizing AI for developers, installing AI toolbox for software engineers, and contributing to the community through easy steps.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

chatgpt-lite

ChatGPT Lite is a lightweight web interface developed using Next.js and the OpenAI Chat API. It allows users to deploy a custom ChatGPT interface supporting markdown, prompt storage, and multi-person chats. Users can create private web-based ChatGPT instances for friends without sharing API keys. The codebase is clear and expandable, making it an ideal starting point for AI projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.