awesome-deliberative-prompting

Awesome deliberative prompting: How to ask LLMs to produce reliable reasoning and make reason-responsive decisions.

Stars: 74

The 'awesome-deliberative-prompting' repository focuses on how to ask Large Language Models (LLMs) to produce reliable reasoning and make reason-responsive decisions through deliberative prompting. It includes success stories, prompting patterns and strategies, multi-agent deliberation, reflection and meta-cognition, text generation techniques, self-correction methods, reasoning analytics, limitations, failures, puzzles, datasets, tools, and other resources related to deliberative prompting. The repository provides a comprehensive overview of research, techniques, and tools for enhancing reasoning capabilities of LLMs.

README:

How to ask Large Language Models (LLMs) to produce reliable reasoning and make reason-responsive decisions.

deliberation, n.

The action of thinking carefully about something, esp. in order to reach a decision; careful consideration; an act or instance of this. (OED)

- Success Stories

- Prompting Patterns and Strategies

- Multi-Agent Deliberation

- Reflection and Meta-Cognition

- Text Generation Techniques

- Self-Correction

- Reasoning Analytics

- Limitations, Failures, Puzzles

- Datasets

- Tools and Frameworks

- Other Resources

Striking evidence for effectiveness of deliberative prompting.

- 🎓 The original "chain of thought" (CoT) paper, first to give clear evidence that deliberative prompting works. "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." 2022-01-28. [>paper]

- 🎓 Deliberative prompting improves ability of Google's LLMs to solve unseen difficult problems, and instruction-finetuned (Flan-) models are much better at it.

- 🎓 Deliberative prompting is highly effective for OpenAI's models (Text-Davinci-003, ChatGPT, GPT-4), increasing accuracy in many (yet not all) reasoning tasks in the AGIEval benchmark. "AGIEval: A Human-Centric Benchmark for Evaluating Foundation Models." 2023-04-13. [>paper]

- 🎓 Deliberative prompting unlocks latent cognitive skills and is more effective for bigger models. "Challenging BIG-Bench tasks and whether chain-of-thought can solve them." 2022-10-17. [>paper]

- 🎓 Experimentally introducing errors in CoT reasoning traces decreases decision accuracy, which provides indirect evidence for reason-responsiveness of LLMs. "Stress Testing Chain-of-Thought Prompting for Large Language Models." 2023-09-28. [>paper]

- 🎓 Reasoning (about retrieval candidates) improves RAG. "Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection." 2023-10-17. [>paper]

- 🎓 Deliberative reading notes improve RAG. "Chain-of-Note: Enhancing Robustness in Retrieval-Augmented Language Models." 2023-11-15. [>paper]

- 🎓 Good reasoning (CoT) causes good answers (i.e., LLMs are reason-responsive). "Causal Abstraction for Chain-of-Thought Reasoning in Arithmetic Word Problems." 2023-12-07. [>paper]

- 🎓 Logical interpretation of internal layer-wise processing of reasoning tasks yields further evidence for reason-responsiveness. "Towards a Mechanistic Interpretation of Multi-Step Reasoning Capabilities of Language Model." 2023-12-07. [>paper]

- 🎓 Reasoning about alternative drafts improves text generation. "Self-Evaluation Improves Selective Generation in Large Language Models." 2023-12-14. [>paper]

- 🎓 CoT with carefully retrieved, diverse reasoning demonstrations boosts multi-modal LLMs. "Retrieval-augmented Multi-modal Chain-of-Thoughts Reasoning for Large Language Models." 2023-12-04. [>paper]

- 🎓 Effective multi-hop CoT for visual question answering. "II-MMR: Identifying and Improving Multi-modal Multi-hop Reasoning in Visual Question Answering." 2024-02-16. [>paper]

- 🎓 👩💻 DPO on synthetic CoT traces increases reason-responsiveness of small LLMs. "Making Reasoning Matter: Measuring and Improving Faithfulness of Chain-of-Thought Reasoning" 2024-02-23. [>paper] [>code]
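
Several entries above rely on few-shot chain-of-thought prompts. As a minimal sketch of what such a prompt can look like: the function names `build_cot_prompt` and `extract_answer`, the `Q:`/`A:` layout, and the "The answer is" marker are assumptions made for illustration, not a format prescribed by any of the listed papers.

```python
import re


def build_cot_prompt(question, demonstrations):
    """Assemble a few-shot CoT prompt from (question, reasoning, answer) triples."""
    parts = []
    for demo_question, demo_reasoning, demo_answer in demonstrations:
        # Each demonstration spells out its reasoning before stating the answer.
        parts.append(
            f"Q: {demo_question}\nA: {demo_reasoning} The answer is {demo_answer}."
        )
    # The new question ends with an open "A:" so the model continues with reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def extract_answer(completion):
    """Return the text after the last 'The answer is' marker, or None if absent."""
    matches = re.findall(r"The answer is\s+(.+?)\.?\s*$", completion, flags=re.MULTILINE)
    return matches[-1] if matches else None
```

The completion returned by a model for this prompt can then be passed to `extract_answer`, which keeps the reasoning trace and the final decision separable for later analysis.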

Prompting strategies and patterns to make LLMs deliberate.

Instructing LLMs to reason (in a specific way).

- 🎓 Asking GPT-4 to provide a correct and a wrong answer boosts accuracy. "Large Language Models are Contrastive Reasoners." 2024-03-13. [>paper]

- 🔥🎓 Guided dynamic prompting increases GPT-4 CoT performance by up to 30 percentage points. "Structure Guided Prompt: Instructing Large Language Model in Multi-Step Reasoning by Exploring Graph Structure of the Text" 2024-02-20. [>paper]

- 🎓 Letting LLMs choose and combine reasoning strategies is cost-efficient and improves performance. "SELF-DISCOVER: Large Language Models Self-Compose Reasoning Structures." 2024-02-06. [>paper]

- 🎓 CoA: Produce an abstract reasoning trace first, and fill in the details (using tools) later. "Efficient Tool Use with Chain-of-Abstraction Reasoning." 2024-01-30. [>paper]

- 🎓 Reason over and over again until verification test is passed. "Plan, Verify and Switch: Integrated Reasoning with Diverse X-of-Thoughts." 2023-10-23. [>paper]

- 🎓 Generate multiple diverse deliberations, then synthesize those in a single reasoning path. "Ask One More Time: Self-Agreement Improves Reasoning of Language Models in (Almost) All Scenarios." 2023-11-14. [>paper]

- 🎓 Survey of CoT regarding task types, prompt designs, and reasoning quality metrics. "Towards Better Chain-of-Thought Prompting Strategies: A Survey." 2023-10-08. [>paper]

- 🎓 Asking an LLM about a problem's broader context leads to better answers. "Take a Step Back: Evoking Reasoning via Abstraction in Large Language Models." 2023-10-09. [>paper]

- Weighing Pros and Cons: This universal deliberation paradigm can be implemented with LLMs.

- 👩💻 A {{guidance}} program that does: 1. Identify Options → 2. Generate Pros and Cons → 3. Weigh Reasons → 4. Decide. [>code]

- 🎓 👩💻 Plan-and-Solve Prompting. "Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models." 2023-05-06. [>paper] [>code]

- 🎓 Note-Taking. "Learning to Reason and Memorize with Self-Notes." 2023-05-01. [>paper]

- 🎓 Deliberate-then-Generate improves text quality. "Deliberate then Generate: Enhanced Prompting Framework for Text Generation." 2023-05-31. [>paper]

- 🎓 Make LLM spontaneously interleave reasoning and Q/A. "ReAct: Synergizing Reasoning and Acting in Language Models." 2022-10-06. [>paper]

- 🎓 'Divide-and-Conquer' instructions substantially outperform standard CoT. "Least-to-Most Prompting Enables Complex Reasoning in Large Language Models" 2022-05-21. [>paper]
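
The four-step pros-and-cons pattern listed above (Identify Options → Generate Pros and Cons → Weigh Reasons → Decide) can be sketched in plain Python. This is not the linked {{guidance}} program: the `LLM` callable, the function name `weigh_pros_and_cons`, and all prompt wordings are hypothetical and only illustrate the control flow.

```python
from typing import Callable, Dict

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def weigh_pros_and_cons(question: str, llm: LLM) -> Dict[str, object]:
    """Run the four deliberation steps and return all intermediate results."""
    # 1. Identify options: expect one option per line in the reply.
    reply = llm(f"List the options for this decision, one per line.\nDecision: {question}")
    options = [line.strip("-• \t") for line in reply.splitlines() if line.strip("-• \t")]

    # 2. Generate pros and cons for each option separately.
    reasons = {}
    for option in options:
        reasons[option] = {
            "pros": llm(f"Decision: {question}\nOption: {option}\nList the pros of this option."),
            "cons": llm(f"Decision: {question}\nOption: {option}\nList the cons of this option."),
        }

    # 3. Weigh reasons: show the model everything collected so far.
    overview = "\n".join(
        f"{option}\n  Pros: {r['pros']}\n  Cons: {r['cons']}" for option, r in reasons.items()
    )
    weighing = llm(f"Decision: {question}\n{overview}\nCompare the strength of these reasons.")

    # 4. Decide: ask for the option name only, based on the weighing.
    decision = llm(
        f"Decision: {question}\nAssessment: {weighing}\nName the best option and nothing else."
    ).strip()
    return {"options": options, "reasons": reasons, "weighing": weighing, "decision": decision}
```

Returning the intermediate results rather than only the decision keeps the deliberation inspectable, which is the point of making the model reason explicitly.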

Let one (or many) LLMs simulate a free controversy.

- 🎓 👩💻 Carefully selected open LLMs that iteratively review and improve their answers outperform GPT-4o. "Mixture-of-Agents Enhances Large Language Model Capabilities." 2024-06-10. [>paper] [>code]

- 🎓 More elaborate and costly multi-agent-system designs are typically more effective, according to this review: "Are we going MAD? Benchmarking Multi-Agent Debate between Language Models for Medical Q&A." 2023-11-19. [>paper]

- 🎓 Systematic peer review is even better than multi-agent debate. "Towards Reasoning in Large Language Models via Multi-Agent Peer Review Collaboration." 2023-11-14. [>paper]

- 🎓 Collective critique and reflection reduce factual hallucinations and toxicity. "N-Critics: Self-Refinement of Large Language Models with Ensemble of Critics." 2023-10-28. [>paper]

- 🎓 👩💻 Delphi-process with diverse LLMs is veristically more valuable than simple debating. "ReConcile: Round-Table Conference Improves Reasoning via Consensus among Diverse LLMs." 2023-09-22. [>paper] [>code]

- 🎓 Multi-agent debate increases cognitive diversity, which in turn increases performance. "Encouraging Divergent Thinking in Large Language Models through Multi-Agent Debate." 2023-05-30. [>paper]

- 🎓 Leverage wisdom of the crowd effects through debate simulation. "Improving Factuality and Reasoning in Language Models through Multiagent Debate." 2023-05-23. [>paper]

- 🎓 👩💻 Emulate Socratic dialogue to collaboratively solve problems with multiple AI agents. "The Socratic Method for Self-Discovery in Large Language Models." 2023-05-05. [>blog] [>code]
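
The debate-style approaches above share a simple loop: each agent answers, reads the other agents' answers, and revises. The following is a rough sketch under stated assumptions, not the protocol of any specific paper listed here. The `Agent` callable, the prompt wording, and the final majority vote are all illustrative choices.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical interface: an agent maps a prompt to an answer string.
Agent = Callable[[str], str]


def debate(question: str, agents: List[Agent], rounds: int = 2) -> str:
    """Let agents revise their answers in light of each other's, then majority-vote."""
    # Opening round: every agent answers independently.
    answers = [agent(f"Question: {question}\nGive your answer.").strip() for agent in agents]

    for _ in range(rounds):
        revised = []
        for i, agent in enumerate(agents):
            # Each agent sees the current answers of all other agents.
            others = "\n".join(f"- {a}" for j, a in enumerate(answers) if j != i)
            prompt = (
                f"Question: {question}\n"
                f"Your previous answer: {answers[i]}\n"
                f"Other agents answered:\n{others}\n"
                "Reconsider and give your updated answer."
            )
            revised.append(agent(prompt).strip())
        answers = revised  # all agents update simultaneously

    # Aggregate by simple majority; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]
```

With `rounds=0` this reduces to plain majority voting over independent answers, which gives a convenient baseline for checking whether the debate rounds actually help.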

Higher-order reasoning strategies that may improve first-order deliberation.

- 🎓 👩💻 Keeping track of general insights gained from CoT problem solving improves future accuracy and efficiency. "Buffer of Thoughts: Thought-Augmented Reasoning with Large Language Models." 2024-06-06. [>paper] [>code]

- 🎓 👩💻 Processing tasks according to their self-assessed difficulty boosts CoT effectiveness. "Divide and Conquer for Large Language Models Reasoning." 2024-01-10. [>paper] [>code]

- 🎓 👩💻 Reflecting on the task allows an LLM to autogenerate more effective instructions, demonstrations, and reasoning traces. "Meta-CoT: Generalizable Chain-of-Thought Prompting in Mixed-task Scenarios with Large Language Models." 2023-10-11. [>paper] [>code]

- 🎓 👩💻 LLM-based AI Instructor devises effective first-order CoT-instructions (open source models improve by up to 20%). "Agent Instructs Large Language Models to be General Zero-Shot Reasoners." 2023-10-05. [>paper] [>code]

- 🎓 👩💻 Clarify→Judge→Evaluate→Confirm→Qualify Paradigm. "Metacognitive Prompting Improves Understanding in Large Language Models." 2023-08-10. [>paper] [>code]

- 🎓 👩💻 Find-then-simulate-an-expert-for-this-problem Strategy. "Prompt Programming for Large Language Models: Beyond the Few-Shot Paradigm." 2021-02-15. [>paper] [>lmql]

Text generation techniques, which can be combined with prompting patterns and strategies.

- 🔥🎓 Iterative revision of reasoning in light of previous CoT traces improves accuracy by 10-20%. "RAT: Retrieval Augmented Thoughts Elicit Context-Aware Reasoning in Long-Horizon Generation". 2024-03-08. [>paper]

- 🎓 Pipeline for self-generating & choosing effective CoT few-shot demonstrations. "Universal Self-adaptive Prompting". 2023-05-24. [>paper]

- 🎓 More reasoning (= longer reasoning traces) is better. "The Impact of Reasoning Step Length on Large Language Models". 2024-01-10. [>paper]

- 🎓 Having (accordingly labeled) correct and erroneous (few-shot) reasoning demonstrations improves CoT. "Contrastive Chain-of-Thought Prompting." 2023-11-17. [>paper]

- 🎓 Better problem-solving and deliberation through few-shot trial-and-error (in-context RL). "Reflexion: Language Agents with Verbal Reinforcement Learning." 2023-03-20. [>paper]

- 🎓 External guides that constrain generation of reasoning improve accuracy by up to 35% on selected tasks. "Certified Reasoning with Language Models." 2023-06-06. [>paper]

- 🎓 👩💻 Highly effective beam search for generating complex, multi-step reasoning episodes. "Tree of Thoughts: Deliberate Problem Solving with Large Language Models." 2023-05-17. [>paper] [>code]

- 🎓 👩💻 LLM auto-generates diverse reasoning demonstrations to be used in deliberative prompting. "Automatic Chain of Thought Prompting in Large Language Models." 2022-10-07. [>paper] [>code]
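
The idea of beam search over multi-step reasoning, mentioned in the Tree of Thoughts entry above, can be sketched generically. This is not the paper's implementation: `propose` and `score` are hypothetical callables that would be backed by LLM calls in practice (one suggesting next reasoning steps, one rating partial reasoning paths).

```python
from typing import Any, Callable, List, Tuple

State = Tuple[Any, ...]  # a partial reasoning path: the sequence of thoughts so far


def beam_search(
    propose: Callable[[State], List[Any]],
    score: Callable[[State], float],
    beam_width: int = 2,
    depth: int = 3,
) -> State:
    """Keep the `beam_width` best partial paths at each of `depth` steps."""
    beam: List[State] = [()]  # start from the empty reasoning path
    for _ in range(depth):
        # Expand every path in the beam by every proposed next thought.
        candidates = [state + (thought,) for state in beam for thought in propose(state)]
        if not candidates:
            break  # no path can be extended any further
        # Retain only the highest-scoring paths.
        beam = sorted(candidates, key=score, reverse=True)[:beam_width]
    return beam[0]
```

A toy usage, with numbers standing in for thoughts: `beam_search(lambda s: [1, 2, 3], lambda s: -abs(7 - sum(s)))` searches for three steps whose sum is as close to 7 as possible.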

Let LLMs self-correct their deliberation.

- 🎓 Consistency between multiple CoT-traces is an indicator of reasoning reliability, which can be exploited for self-check / aggregation. "Can We Verify Step by Step for Incorrect Answer Detection?" 2024-02-16. [>paper]

- 🎓 Turn LLMs into intrinsic self-checkers by appending self-correction steps to standard CoT traces for finetuning. "Small Language Model Can Self-correct." 2024-01-14. [>paper]

- 🎓 Reinforced Self-Training improves retrieval-augmented multi-hop Q/A. "ReST meets ReAct: Self-Improvement for Multi-Step Reasoning LLM Agent." 2023-12-15. [>paper]

- 🎓 Conditional self-correction depending on whether critical questions have been addressed in reasoning trace. "The ART of LLM Refinement: Ask, Refine, and Trust." 2023-11-14. [>paper]

- 🎓 Iteratively refining reasoning given diverse feedback increases accuracy by up to 10% (ChatGPT). "MAF: Multi-Aspect Feedback for Improving Reasoning in Large Language Models." 2023-10-19. [>paper]

- 🎓 Instructing a model just to "review" its answer and "find problems" doesn't lead to effective self-correction. "Large Language Models Cannot Self-Correct Reasoning Yet." 2023-09-25. [>paper]

- 🎓 LLMs can come up with, and address, critical questions to improve their drafts. "Chain-of-Verification Reduces Hallucination in Large Language Models." 2023-09-25. [>paper]

- 🎓 LogiCoT: Self-check and revision after each CoT step improves performance (for selected tasks and models). "Enhancing Zero-Shot Chain-of-Thought Reasoning in Large Language Models through Logic." 2023-09-23. [>paper]

- 🎓 Excellent review about self-correcting LLMs, with application to unfaithful reasoning. "Automatically Correcting Large Language Models: Surveying the landscape of diverse self-correction strategies." 2023-08-06. [>paper]
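
One entry above notes that consistency between multiple CoT traces indicates reasoning reliability and can be used for self-checking and aggregation. A minimal sketch of that idea follows, assuming the final answers have already been extracted from independently sampled traces. The function names and the 0.6 threshold are illustrative assumptions, not values taken from the listed papers.

```python
from collections import Counter
from typing import List, Optional, Tuple


def aggregate_answers(answers: List[str]) -> Tuple[Optional[str], float]:
    """Return the majority answer and the share of traces that agree with it."""
    # Normalise lightly so that "42" and " 42 " count as the same answer.
    counts = Counter(a.strip().lower() for a in answers if a and a.strip())
    if not counts:
        return None, 0.0
    answer, votes = counts.most_common(1)[0]
    return answer, votes / sum(counts.values())


def is_reliable(answers: List[str], threshold: float = 0.6) -> bool:
    """Self-check: treat the result as reliable only if agreement reaches the threshold."""
    _, agreement = aggregate_answers(answers)
    return agreement >= threshold
```

A low agreement score can serve as a trigger for a further self-correction round or for escalating the question to a stronger model.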

Methods for analysing LLM deliberation and assessing reasoning quality.

- 🎓👩💻 Comprehensive LLM-based reasoning analytics that breaks texts down into individual reasons. "DCR-Consistency: Divide-Conquer-Reasoning for Consistency Evaluation and Improvement of Large Language Models." 2024-01-04. [>paper] [>code]

- 🎓🤗 Highly performant, open LLM (T5-based) for inference verification. "Minds versus Machines: Rethinking Entailment Verification with Language Models." 2024-02-06. [>paper] [>model]

- 🎓👩💻 Test dataset for CoT evaluators. "A Chain-of-Thought Is as Strong as Its Weakest Link: A Benchmark for Verifiers of Reasoning Chains." 2023-11-23. [>paper] [>dataset]

- 🎓👩💻 Framework for evaluating reasoning chains by viewing them as informal proofs that derive the final answer. "ReCEval: Evaluating Reasoning Chains via Correctness and Informativeness." 2023-11-23. [>paper] [>code]

- 🎓 GPT-4 is 5x better at predicting whether math reasoning is correct than GPT-3.5. "Challenge LLMs to Reason About Reasoning: A Benchmark to Unveil Cognitive Depth in LLMs." 2023-12-28. [>paper]

- 🎓 Minimalistic GPT-4 prompts for assessing reasoning quality. "SocREval: Large Language Models with the Socratic Method for Reference-Free Reasoning Evaluation." 2023-09-29. [>paper] [>code]

- 🎓👩💻 Automatic, semantic-similarity based metrics for assessing CoT traces (redundancy, faithfulness, consistency, etc.). "ROSCOE: A Suite of Metrics for Scoring Step-by-Step Reasoning." 2023-09-12. [>paper]

Things that don't work, or are poorly understood.

- 🎓 Structured generation risks degrading reasoning quality and CoT effectiveness. "Let Me Speak Freely? A Study on the Impact of Format Restrictions on Performance of Large Language Models." 2024-08-05. [>paper]

- 🎓 Filler tokens can be as effective as sound reasoning traces for eliciting correct answers. "Let's Think Dot by Dot: Hidden Computation in Transformer Language Models." 2024-04-24. [>paper]

- 🔥🎓 Causal analysis shows that LLMs sometimes ignore CoT traces, but reason-responsiveness increases with model size and is shaped by fine-tuning. "LLMs with Chain-of-Thought Are Non-Causal Reasoners" 2024-02-25. [>paper]

- 🎓 Bad reasoning may lead to correct conclusions, hence better methods for CoT evaluation are needed. "SCORE: A framework for Self-Contradictory Reasoning Evaluation." 2023-11-16. [>paper]

- 🎓 LLMs may produce "encoded reasoning" that's unintelligible to humans, which may nullify any XAI gains from deliberative prompting. "Preventing Language Models From Hiding Their Reasoning." 2023-10-27. [>paper]

- 🎓 LLMs judge and decide as a function of the available arguments (reason-responsiveness), but are more strongly influenced by fallacious and deceptive reasons than by sound ones. "How susceptible are LLMs to Logical Fallacies?" 2023-08-18. [>paper]

- 🎓 Incorrect reasoning improves answer accuracy (nearly) as much as correct reasoning. "Invalid Logic, Equivalent Gains: The Bizarreness of Reasoning in Language Model Prompting." 2023-07-20. [>paper]

- 🎓 Zero-shot CoT reasoning in sensitive domains increases an LLM's likelihood of producing harmful or undesirable output. "On Second Thought, Let's Not Think Step by Step! Bias and Toxicity in Zero-Shot Reasoning." 2023-06-23. [>paper]

- 🎓 LLMs may systematically fabricate erroneous CoT rationales for wrong answers, NYU/Anthropic team finds. "Language Models Don't Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting." 2023-05-07. [>paper]

- 🎓 LLMs' practical deliberation is not robust, but easily led astray by re-wording scenarios. "Despite 'super-human' performance, current LLMs are unsuited for decisions about ethics and safety" 2022-12-13. [>paper]

Datasets containing examples of deliberative prompting, potentially useful for training models / assessing their deliberation skills.

- Instruction-following dataset augmented with "reasoning traces" generated by LLMs.

- 🎓 ORCA - Microsoft's original paper. "Orca: Progressive Learning from Complex Explanation Traces of GPT-4." 2023-06-05. [>paper]

- 👩💻 OpenOrca - Open source replication of ORCA datasets. [>dataset]

- 👩💻 Dolphin - Open source replication of ORCA datasets. [>dataset]

- 🎓 ORCA 2 - Improved Orca by Microsoft, e.g. with meta reasoning. "Orca 2: Teaching Small Language Models How to Reason." 2023-11-18. [>paper]

- 🎓👩💻 CoT Collection - 1.84 million reasoning traces for 1,060 tasks. "The CoT Collection: Improving Zero-shot and Few-shot Learning of Language Models via Chain-of-Thought Fine-Tuning." [>paper] [>code]

- 👩💻 OASST1 - contains more than 200 instructions to generate pros and cons (acc. to nomic.ai's map). [>dataset]

- 🎓 LegalBench - a benchmark for legal reasoning in LLMs [>paper]

- 🎓👩💻 ThoughtSource - an open resource for data and tools related to chain-of-thought reasoning in large language models. [>paper] [>code]

- 🎓👩💻 Review with lots of hints to CoT relevant datasets. "Datasets for Large Language Models: A Comprehensive Survey" [>paper] [>code]

- 👩💻 Maxime Labonne's LLM datasets list [github]

Tools and Frameworks to implement deliberative prompting.

- 👩💻 LMQL - a programming language for language model interaction. [>site]

- 👩💻 {{guidance}} - a language for controlling large language models. [>code]

- 👩💻 outlines - a language for guided text generation. [>code]

- 👩💻 DSPy - a programmatic interface to LLMs. [>code]

- 👩💻 llm-reasoners – A library for advanced large language model reasoning. [>code]

- 👩💻 ThinkGPT - framework and building blocks for chain-of-thought workflows. [>code]

- 👩💻 LangChain - a python library for building LLM chains and agents. [>code]

- 👩💻 PromptBench - a unified library for evaluating LLMs, inter alia effectiveness of CoT prompts. [>code]

- 👩💻 SymbolicAI - a library for compositional differentiable programming with LLMs. [>code]

More awesome and useful material.

- 📚 Survey of Autonomous LLM Agents (continuously updated). [>site]

- 👩💻 LLM Dashboard - explore task-specific reasoning performance of open LLMs [>app]

- 📚 Prompt Engineering Guide set up by DAIR. [>site]

- 📚 ATLAS - principles and benchmark for systematic prompting [>code]

- 📚 Deliberative Prompting Guide set up by Logikon. [>site]

- 📚 Arguing with Arguments – recent and wonderful piece by H. Siegel discussing what it actually means to evaluate an argument. [>paper]

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for awesome-deliberative-prompting

Similar Open Source Tools

awesome-deliberative-prompting

The 'awesome-deliberative-prompting' repository focuses on how to ask Large Language Models (LLMs) to produce reliable reasoning and make reason-responsive decisions through deliberative prompting. It includes success stories, prompting patterns and strategies, multi-agent deliberation, reflection and meta-cognition, text generation techniques, self-correction methods, reasoning analytics, limitations, failures, puzzles, datasets, tools, and other resources related to deliberative prompting. The repository provides a comprehensive overview of research, techniques, and tools for enhancing reasoning capabilities of LLMs.

Awesome-RL-based-LLM-Reasoning

This repository is dedicated to enhancing Language Model (LLM) reasoning with reinforcement learning (RL). It includes a collection of the latest papers, slides, and materials related to RL-based LLM reasoning, aiming to facilitate quick learning and understanding in this field. Starring this repository allows users to stay updated and engaged with the forefront of RL-based LLM reasoning.

lobehub

LobeHub is the ultimate space for work and life, where users can find, build, and collaborate with agent teammates that grow with them. It aims to create the world's largest human-agent co-evolving network. LobeHub treats agents as the unit of work, providing an infrastructure for humans and agents to co-evolve. Users can create personalized AI teams, collaborate with agent groups, and experience the co-evolution of humans and agents. The platform supports various features like model visual recognition, TTS & STT voice conversation, text to image generation, plugin system for function calling, and an agent market for GPTs. LobeHub also offers self-hosting options with Vercel, Alibaba Cloud, and Docker Image, along with an ecosystem of UI components, icons, TTS libraries, and lint configurations.

awesome-llm-security

Awesome LLM Security is a curated collection of tools, documents, and projects related to Large Language Model (LLM) security. It covers various aspects of LLM security including white-box, black-box, and backdoor attacks, defense mechanisms, platform security, and surveys. The repository provides resources for researchers and practitioners interested in understanding and safeguarding LLMs against adversarial attacks. It also includes a list of tools specifically designed for testing and enhancing LLM security.

read-frog

Read-frog is a powerful text analysis tool designed to help users extract valuable insights from text data. It offers a wide range of features including sentiment analysis, keyword extraction, entity recognition, and text summarization. With its user-friendly interface and robust algorithms, Read-frog is suitable for both beginners and advanced users looking to analyze text data for various purposes such as market research, social media monitoring, and content optimization. Whether you are a data scientist, marketer, researcher, or student, Read-frog can streamline your text analysis workflow and provide actionable insights to drive decision-making and enhance productivity.

AceCoder

AceCoder is a tool that introduces a fully automated pipeline for synthesizing large-scale reliable tests used for reward model training and reinforcement learning in the coding scenario. It curates datasets, trains reward models, and performs RL training to improve coding abilities of language models. The tool aims to unlock the potential of RL training for code generation models and push the boundaries of LLM's coding abilities.

transformers

Transformers is a state-of-the-art pretrained models library that acts as the model-definition framework for machine learning models in text, computer vision, audio, video, and multimodal tasks. It centralizes model definition for compatibility across various training frameworks, inference engines, and modeling libraries. The library simplifies the usage of new models by providing simple, customizable, and efficient model definitions. With over 1M+ Transformers model checkpoints available, users can easily find and utilize models for their tasks.

amazon-sagemaker-llm-fine-tuning-remote-decorator

This repository provides interactive fine-tuning of Foundation Models with Amazon SageMaker Training using the @remote decorator. It showcases the use of SageMaker AI capabilities for Small/Large Language Models fine-tuning by employing different distribution techniques like FSDP and DDP. Users can run the repository from Amazon SageMaker Studio or a local IDE. The notebooks cover various supervised and self-supervised fine-tuning scenarios for different models, along with instructions for updating configurations based on the AWS region and Python version compatibility.

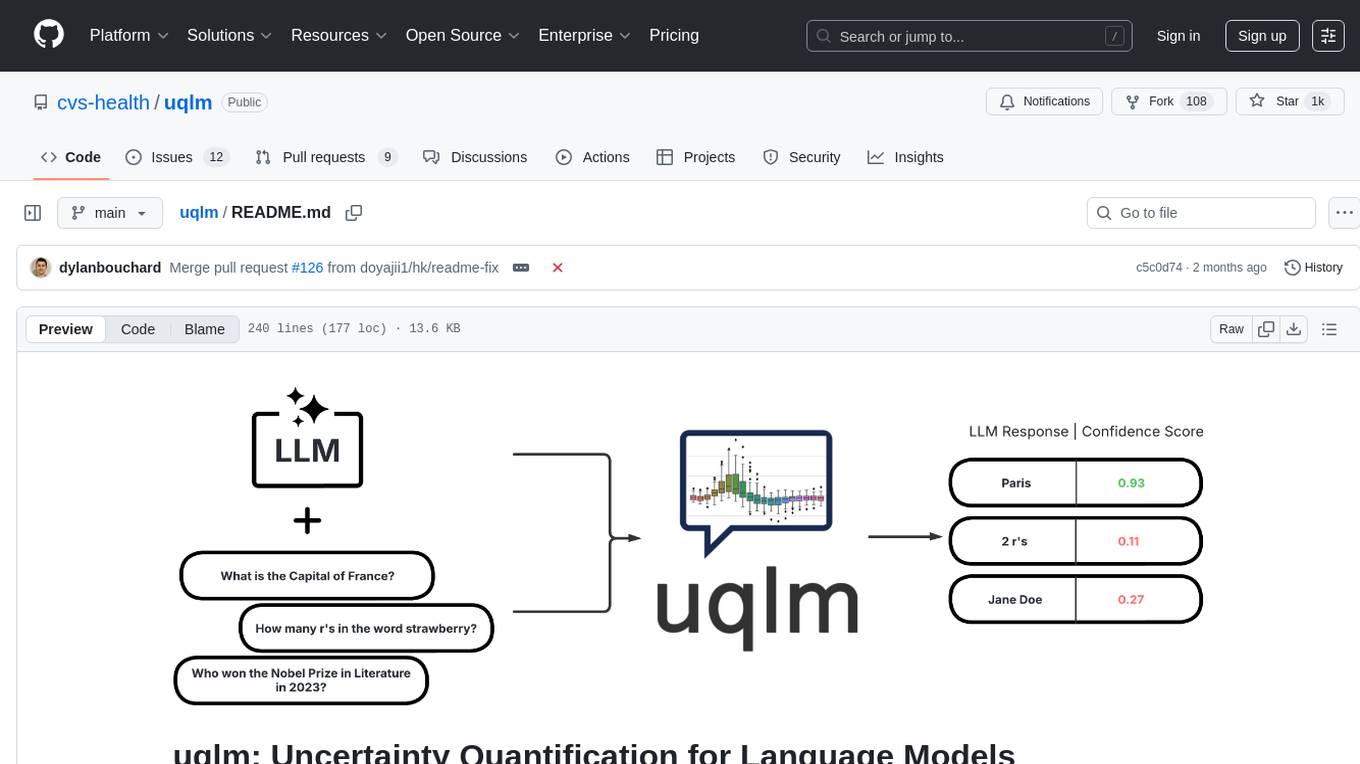

uqlm

UQLM is a Python library for Large Language Model (LLM) hallucination detection using state-of-the-art uncertainty quantification techniques. It provides response-level scorers for quantifying uncertainty of LLM outputs, categorized into four main types: Black-Box Scorers, White-Box Scorers, LLM-as-a-Judge Scorers, and Ensemble Scorers. Users can leverage different scorers to assess uncertainty in generated responses, with options for off-the-shelf usage or customization. The library offers illustrative code snippets and detailed information on available scorers for each type, along with example usage for conducting hallucination detection. Additionally, UQLM includes documentation, example notebooks, and associated research for further exploration and understanding.

Hands-On-Large-Language-Models

Hands-On Large Language Models is a repository containing code examples from the book 'The Illustrated LLM Book' by Jay Alammar and Maarten Grootendorst. The repository provides practical tools and concepts for using Large Language Models with over 250 custom-made figures. It covers topics such as language model introduction, tokens and embeddings, transformer LLMs, text classification, text clustering, prompt engineering, text generation techniques, semantic search, multimodal LLMs, text embedding models, fine-tuning representation models, and fine-tuning generation models. The examples are designed to be run on Google Colab with T4 GPU support, but can be adapted to other cloud platforms as well.

PURE

PURE (Process-sUpervised Reinforcement lEarning) is a framework that trains a Process Reward Model (PRM) on a dataset and fine-tunes a language model to achieve state-of-the-art mathematical reasoning capabilities. It uses a novel credit assignment method to calculate return and supports multiple reward types. The final model outperforms existing methods with minimal RL data or compute resources, achieving high accuracy on various benchmarks. The tool addresses reward hacking issues and aims to enhance long-range decision-making and reasoning tasks using large language models.

FlagEmbedding

FlagEmbedding focuses on retrieval-augmented LLMs, consisting of the following projects currently: * **Long-Context LLM** : Activation Beacon * **Fine-tuning of LM** : LM-Cocktail * **Embedding Model** : Visualized-BGE, BGE-M3, LLM Embedder, BGE Embedding * **Reranker Model** : llm rerankers, BGE Reranker * **Benchmark** : C-MTEB

CuMo

CuMo is a project focused on scaling multimodal Large Language Models (LLMs) with Co-Upcycled Mixture-of-Experts. It introduces CuMo, which incorporates Co-upcycled Top-K sparsely-gated Mixture-of-experts blocks into the vision encoder and the MLP connector, enhancing the capabilities of multimodal LLMs. The project adopts a three-stage training approach with auxiliary losses to stabilize the training process and maintain a balanced loading of experts. CuMo achieves comparable performance to other state-of-the-art multimodal LLMs on various Visual Question Answering (VQA) and visual-instruction-following benchmarks.

rageval

Rageval is an evaluation tool for Retrieval-augmented Generation (RAG) methods. It helps evaluate RAG systems by performing tasks such as query rewriting, document ranking, information compression, evidence verification, answer generation, and result validation. The tool provides metrics for answer correctness and answer groundedness, along with benchmark results for ASQA and ALCE datasets. Users can install and use Rageval to assess the performance of RAG models in question-answering tasks.

VideoTuna

VideoTuna is a codebase for text-to-video applications that integrates multiple AI video generation models for text-to-video, image-to-video, and text-to-image generation. It provides comprehensive pipelines in video generation, including pre-training, continuous training, post-training, and fine-tuning. The models in VideoTuna include U-Net and DiT architectures for visual generation tasks, with upcoming releases of a new 3D video VAE and a controllable facial video generation model.

EmbodiedScan

EmbodiedScan is a holistic multi-modal 3D perception suite designed for embodied AI. It introduces a multi-modal, ego-centric 3D perception dataset and benchmark for holistic 3D scene understanding. The dataset includes over 5k scans with 1M ego-centric RGB-D views, 1M language prompts, 160k 3D-oriented boxes spanning 760 categories, and dense semantic occupancy with 80 common categories. The suite includes a baseline framework named Embodied Perceptron, capable of processing multi-modal inputs for 3D perception tasks and language-grounded tasks.

For similar tasks

awesome-deliberative-prompting

The 'awesome-deliberative-prompting' repository focuses on how to ask Large Language Models (LLMs) to produce reliable reasoning and make reason-responsive decisions through deliberative prompting. It includes success stories, prompting patterns and strategies, multi-agent deliberation, reflection and meta-cognition, text generation techniques, self-correction methods, reasoning analytics, limitations, failures, puzzles, datasets, tools, and other resources related to deliberative prompting. The repository provides a comprehensive overview of research, techniques, and tools for enhancing reasoning capabilities of LLMs.

llm-verified-with-monte-carlo-tree-search

This prototype synthesizes verified code with an LLM using Monte Carlo Tree Search (MCTS). It explores the space of possible generation of a verified program and checks at every step that it's on the right track by calling the verifier. This prototype uses Dafny, Coq, Lean, Scala, or Rust. By using this technique, weaker models that might not even know the generated language all that well can compete with stronger models.

flashinfer

FlashInfer is a library for Language Languages Models that provides high-performance implementation of LLM GPU kernels such as FlashAttention, PageAttention and LoRA. FlashInfer focus on LLM serving and inference, and delivers state-the-art performance across diverse scenarios.

dolma

Dolma is a dataset and toolkit for curating large datasets for (pre)-training ML models. The dataset consists of 3 trillion tokens from a diverse mix of web content, academic publications, code, books, and encyclopedic materials. The toolkit provides high-performance, portable, and extensible tools for processing, tagging, and deduplicating documents. Key features of the toolkit include built-in taggers, fast deduplication, and cloud support.

web-llm

WebLLM is a modular and customizable javascript package that directly brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. We can bring a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction following. The model was trained on 8×A100 GPUs and generates responses in Korean. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious, as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.