evedel

Instructed LLM programmer/assistant for Emacs

Stars: 80

Evedel is an Emacs package designed to streamline the interaction with LLMs during programming. It aims to reduce manual code writing by creating detailed instruction annotations in the source files for LLM models. The tool leverages overlays to track instructions, categorize references with tags, and provide a seamless workflow for managing and processing directives. Evedel offers features like saving instruction overlays, complex query expressions for directives, and easy navigation through instruction overlays across all buffers. It is versatile and can be used in various types of buffers beyond just programming buffers.

README:

#+title: Evedel: Instructed LLM Programmer/Assistant

[[file:https://stable.melpa.org/packages/evedel-badge.svg]] [[file:https://melpa.org/packages/evedel-badge.svg]]

#+begin_quote
[!WARNING]
This is a fledgling project. Expect bugs, missing features, and API instability.
#+end_quote

Evedel is an Emacs package that adds a workflow for interacting with LLMs during programming. Its primary goal is to shift the workload from manually writing code to writing detailed, thoughtful instruction annotations in the source files. The model uses these annotations as indexed references that give it a contextual understanding of the working environment.

Evedel is versatile enough to be used in various types of buffers, not just programming buffers.

** Features

[[file:media/complex-labeling-example.png]]

- Uses [[https://github.com/karthink/gptel][gptel]] as a backend, so no extra setup is necessary if you already use it.

- Uses overlays for tracking instructions instead of raw text, decoupling instructions from your raw text. The overlays are mostly intuitive and can be customized, and try not to interfere with the regular Emacs workflow.

- Can save your instruction overlays so you won't have to restart the labeling process each session.

- Can categorize your references with tags, and use complex query expressions to determine what to send to the model in directives.

- Can easily cycle through instruction overlays across all buffers.

** Requirements
:PROPERTIES:
:CUSTOM_ID: requirements
:END:

- [[https://github.com/karthink/gptel][gptel]]

- Emacs version 29.1 or higher

** Usage

Evedel's function revolves around the creation and manipulation of references and directives within your Emacs buffers. Directives are prompts you send to the model, while references provide context to help complete these directives more accurately.

[[file:media/basic-demo.gif]]

*** Management

|------------------------------+-----------------------------------------------------------------------|
| Command                      | Command Description                                                   |
|------------------------------+-----------------------------------------------------------------------|
| =evedel-create-reference=    | Create or resize a reference instruction within a region.             |
| =evedel-create-directive=    | Create or resize a directive instruction at point or within a region. |
| =evedel-delete-instructions= | Remove instructions either at point or within the selected region.    |
| =evedel-delete-all=          | Delete all Evedel instructions across all buffers.                    |
|------------------------------+-----------------------------------------------------------------------|

- If the region mark started from outside the reference/directive overlay and a part of it is within the selected region, the instruction will be "shrunk" to fit the new region boundaries.

- If the region mark started from inside the reference/directive overlay, the instruction will grow to include the entire new selection.

Below is an example of scaling an existing instruction overlay (in this case, a reference) by invoking the =evedel-create-reference= command within a region that contains one:

[[file:media/scaling-demo.gif]]

*** Saving & Loading

|----------------------------+--------------------------------------------------------|
| Command                    | Command Description                                    |
|----------------------------+--------------------------------------------------------|
| =evedel-save-instructions= | Save current instruction overlays to a specified file. |
| =evedel-load-instructions= | Load instruction overlays from a specified file.       |
| =evedel-instruction-count= | Return the number of instructions currently loaded.    |
|----------------------------+--------------------------------------------------------|

|--------------------------------------+------------------------------------------------------------------|
| Custom Variable                      | Variable Description                                             |
|--------------------------------------+------------------------------------------------------------------|
| =evedel-patch-outdated-instructions= | Automatically patch instructions when the save file is outdated. |
|--------------------------------------+------------------------------------------------------------------|

The variable =evedel-patch-outdated-instructions= controls the automatic patching of instructions during loading when the save file is outdated. The process is not perfect (word-wise diff), so you should always try and maintain a separate instruction file per branch.
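One way to follow the one-file-per-branch advice is to derive the save file name from the current Git branch. The sketch below is not part of Evedel: the function names, the =.evedel-<branch>= naming scheme, and the use of Git are all assumptions made for illustration. It only computes a path, which you can then give to =evedel-save-instructions= or =evedel-load-instructions= when prompted for a file.

#+begin_src emacs-lisp
;; Hypothetical helpers, not provided by Evedel.

(defun my/evedel-save-file-for-branch (branch &optional directory)
  "Return an instruction save file path for BRANCH under DIRECTORY.
DIRECTORY defaults to `default-directory'.  The \".evedel-\" prefix is
an arbitrary choice for this example."
  ;; Branch names may contain slashes, so replace unsafe characters.
  (let ((safe (replace-regexp-in-string "[^[:alnum:]._-]" "_" branch)))
    (expand-file-name (concat ".evedel-" safe)
                      (file-name-as-directory
                       (or directory default-directory)))))

(defun my/evedel-current-branch-save-file ()
  "Return the save file path for the Git branch of `default-directory'."
  ;; `git rev-parse --abbrev-ref HEAD' prints the current branch name;
  ;; `process-lines' signals an error outside a Git repository.
  (my/evedel-save-file-for-branch
   (car (process-lines "git" "rev-parse" "--abbrev-ref" "HEAD"))))
#+end_src

For example, =(my/evedel-save-file-for-branch "feature/tags" "/tmp/proj")= yields a path ending in =.evedel-feature_tags= inside =/tmp/proj/=.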

*** Modification

|--------------------------------------+-------------------------------------------------------------------|
| Command                              | Command Description                                               |
|--------------------------------------+-------------------------------------------------------------------|
| =evedel-convert-instructions=        | Convert between reference and directive types at point.           |
| =evedel-modify-directive=            | Modify an existing directive instruction at point.                |
| =evedel-modify-reference-commentary= | Modify reference commentary at the current point.                 |
| =evedel-add-tags=                    | Add tags to the reference under the point.                        |
| =evedel-remove-tags=                 | Remove tags from the reference under the point.                   |
| =evedel-modify-directive-tag-query=  | Enter a tag search query for a directive under the current point. |
| =evedel-link-instructions=           | Link instructions by their ids.                                   |
| =evedel-unlink-instructions=         | Unlink instructions by their ids.                                 |
| =evedel-directive-undo=              | Undo the last change of the directive history at point.           |
|--------------------------------------+-------------------------------------------------------------------|

|-------------------------------------------+------------------------------------------------------|
| Custom Variable                           | Variable Description                                 |
|-------------------------------------------+------------------------------------------------------|
| =evedel-empty-tag-query-matches-all=      | Determines matching behavior of queryless directives |
| =evedel-always-match-untagged-references= | Determines matching behavior of untagged references  |
|-------------------------------------------+------------------------------------------------------|

**** Categorization

[[file:media/tag-query-demo.gif]]

The categorization system allows you to use tags to label and organize references. You can add tags to or remove tags from a reference using the commands =evedel-add-tags= and =evedel-remove-tags=. Each tag is a symbolic label that helps identify the nature or purpose of the reference.

You can also modify the tag query for a directive, which is a way to filter and search for references by tags. The tag query uses an infix notation system, allowing complex expressions with the operators =and=, =or=, and =not=. For example, the query =signature and function and doc= means the directive should match references tagged with =signature=, =function=, and =doc=. You may use parentheses in these expressions.
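To make the semantics of =and=, =or=, and =not= concrete, here is a minimal sketch of how a query could be checked against one reference's tags. It is an illustration only, not Evedel's implementation: the function name is hypothetical, and the query is assumed to be already parsed into a prefix list, so the infix query =signature and function and doc= is written here as =(and signature function doc)=.

#+begin_src emacs-lisp
;; Hypothetical illustration, not Evedel's own query evaluator.
(require 'seq)

(defun my/tag-query-match-p (query tags)
  "Return non-nil if the tag list TAGS satisfies QUERY.
QUERY is either a tag symbol or a list of the form (and Q...),
(or Q...) or (not Q), where each Q is itself a query."
  (pcase query
    ;; Every sub-query must match.
    (`(and . ,qs)
     (seq-every-p (lambda (q) (my/tag-query-match-p q tags)) qs))
    ;; At least one sub-query must match.
    (`(or . ,qs)
     (and (seq-some (lambda (q) (my/tag-query-match-p q tags)) qs) t))
    ;; The sub-query must not match.
    (`(not ,q)
     (not (my/tag-query-match-p q tags)))
    ;; A bare tag matches when the reference carries it.
    ((pred symbolp)
     (and (memq query tags) t))
    (_ (error "Malformed tag query: %S" query))))
#+end_src

With this sketch, =(my/tag-query-match-p '(and signature function doc) '(signature function doc))= is non-nil, while =(my/tag-query-match-p '(and signature (not doc)) '(signature doc))= is =nil=.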

Additionally, there are special meta tag symbols that have exclusive meanings:

- =is:bufferlevel=: Returns only references that contain the entire buffer.

- =is:tagless=: Returns references with no tags whatsoever.

- =is:directly-tagless=: Returns references which may have inherited tags, but no tags of their own.

- =is:subreference=: Returns references which have another reference as their parent.

- =is:with-commentary=: Returns references that directly contain commentary text.

- =id:<positive-integer>=: Returns the reference whose id matches =positive-integer=.

=evedel-empty-tag-query-matches-all= determines the behavior of directives without a tag search query. If set to =t=, directives lacking a specific tag search query will use all available references. Alternatively, if set to =nil=, such directives will not use any references, leading to potentially narrower results.

=evedel-always-match-untagged-references= controls the inclusion of untagged references in directive prompts. When set to =t=, untagged references are always incorporated into directive references, ensuring comprehensive coverage. Conversely, when set to =nil=, untagged references are ignored unless =evedel-empty-tag-query-matches-all= is set to =t=.
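As a configuration sketch, both variables can be set from your init file. The values below are only one possible choice, and =customize-set-variable= is used because the author's own configuration in the Setup section sets the first variable this way.

#+begin_src emacs-lisp
;; Example values only; pick whichever behavior suits your workflow.

;; nil: directives without a tag query do not use any references.
(customize-set-variable 'evedel-empty-tag-query-matches-all nil)

;; t: untagged references are always included in directive prompts.
(customize-set-variable 'evedel-always-match-untagged-references t)
#+end_src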

**** Commentary

You can add commentaries to references with the =evedel-modify-reference-commentary= command. Commentaries can add extra context and information to a reference. Example:

[[file:media/commentary-example.png]]

**** Linking

References can be linked to one another, which sets up a dependency or automatic-inclusion relationship between the two. This means that when the first reference is used, it automatically brings in the reference it is linked to as well. This chaining of references is recursive: if a linked reference is itself linked to another, and so forth, all these links will be followed automatically. This continues until either there are no more links to follow or a cycle is detected in the linkage graph.
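The recursive following of links with cycle detection can be sketched as a plain graph traversal. This is an illustration under stated assumptions, not Evedel's code: the function name is hypothetical, and the links are assumed to be given as an alist that maps a reference id to the list of ids it links to.

#+begin_src emacs-lisp
;; Hypothetical illustration, not Evedel's own link resolution.
(defun my/collect-linked-ids (start links)
  "Return START followed by every id reachable from it through LINKS.
LINKS is an alist whose entries have the form (ID LINKED-ID...).
Each id is visited at most once, so cycles terminate."
  (let ((visited '())
        (pending (list start)))
    (while pending
      (let ((id (pop pending)))
        ;; Skip ids we have already seen; this is the cycle check.
        (unless (memql id visited)
          (push id visited)
          ;; Queue the ids this one links to (nil if it has no links).
          (setq pending (append (alist-get id links) pending)))))
    (nreverse visited)))
#+end_src

For the cyclic graph ='((1 2) (2 3) (3 1))=, calling =(my/collect-linked-ids 1 '((1 2) (2 3) (3 1)))= returns =(1 2 3)=: the link from 3 back to 1 is noticed and not followed again.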

Linked references are also included when a directive is executed from within a reference which links to another, in a similar fashion to commentaries.

Currently, linking is only relevant for references.

*** Processing

|-----------------------------------+------------------------------------------------|
| Command                           | Command Description                            |
|-----------------------------------+------------------------------------------------|
| =evedel-process-directives=       | Process directives by sending them to gptel.   |
| =evedel-preview-directive-prompt= | Preview directive prompt at the current point. |
|-----------------------------------+------------------------------------------------|

|----------------------------------------+------------------------------------------------------------|
| Custom Variable                        | Variable Description                                       |
|----------------------------------------+------------------------------------------------------------|
| =evedel-descriptive-mode-roles=        | Alist mapping major modes to model roles                   |
|----------------------------------------+------------------------------------------------------------|

You can use the =evedel-preview-directive-prompt= command to do a dry run and see what the prompt will look like. Here's an example of previewing a directive prompt:

[[file:media/preview-directive-demo.gif]]

The =evedel-process-directives= command processes the directives.

- If at point: sends the directive under the point.

- If a region is selected: sends all directives within the selected region.

- Otherwise, processes all directives in the current buffer.

*** Navigation

|--------------------------------------+-------------------------------------------------------------|
| Command                              | Command Description                                         |
|--------------------------------------+-------------------------------------------------------------|
| =evedel-next-instruction=            | Cycle through instructions in the forward direction.        |
| =evedel-previous-instruction=        | Cycle through instructions in the backward direction.       |
| =evedel-next-reference=              | Cycle through references in the forward direction.          |
| =evedel-previous-reference=          | Cycle through references in the backward direction.         |
| =evedel-next-directive=              | Cycle through directives in the forward direction.          |
| =evedel-previous-directive=          | Cycle through directives in the backward direction.         |
| =evedel-cycle-instructions-at-point= | Cycle through instructions at the point, highlighting them. |
|--------------------------------------+-------------------------------------------------------------|

*** Customization

|-------------------------------------------+--------------------------------------------------|
| Custom Variable                           | Variable Description                             |
|-------------------------------------------+--------------------------------------------------|
| =evedel-reference-color=                  | Tint color for reference overlays                |
| =evedel-directive-color=                  | Tint color for directive overlays                |
| =evedel-directive-processing-color=       | Tint color for directives being processed        |
| =evedel-directive-success-color=          | Tint color for successfully processed directives |
| =evedel-directive-fail-color=             | Tint color for failed directives                 |
| =evedel-instruction-bg-tint-intensity=    | Intensity for instruction background tint        |
| =evedel-instruction-label-tint-intensity= | Intensity for instruction label tint             |
| =evedel-subinstruction-tint-intensity=    | Coefficient for adjusting subinstruction tints   |
|-------------------------------------------+--------------------------------------------------|

** Installation

Evedel can be installed in Emacs out of the box with =M-x package-install RET evedel=. This installs the latest commit.

If you want the stable version instead, add MELPA-stable to your list of package sources:

#+begin_src emacs-lisp
(add-to-list 'package-archives
             '("melpa-stable" . "https://stable.melpa.org/packages/") t)
#+end_src

Then, install Evedel with =M-x package-install RET evedel= from these sources.

You can also optionally install =markdown-mode= to have its formatting in certain cases.

*** Manual

Download or clone the repository with

#+begin_src sh
git clone https://github.com/daedsidog/evedel.git
#+end_src

then run =M-x package-install-file RET= on the repository directory.

** Setup

Evedel doesn't require any additional setup and can be used straight after installation. Still, it is advised you customize keymaps for its commands. As a reference, I listed below my personal Evedel configuration:

#+begin_src emacs-lisp
(use-package evedel
  :defer t
  :config
  ;; Directives without a tag query will not use any references.
  (customize-set-variable 'evedel-empty-tag-query-matches-all nil)
  :bind (("C-c e r" . evedel-create-reference)
         ("C-c e d" . evedel-create-directive)
         ("C-c e s" . evedel-save-instructions)
         ("C-c e l" . evedel-load-instructions)
         ("C-c e p" . evedel-process-directives)
         ("C-c e m" . evedel-modify-directive)
         ("C-c e C" . evedel-modify-reference-commentary)
         ("C-c e k" . evedel-delete-instructions)
         ("C-c e c" . evedel-convert-instructions)
         ("C->"     . evedel-next-instruction)
         ("C-<"     . evedel-previous-instruction)
         ("C-."     . evedel-cycle-instructions-at-point)
         ("C-c e t" . evedel-add-tags)
         ("C-c e T" . evedel-remove-tags)
         ("C-c e D" . evedel-modify-directive-tag-query)
         ("C-c e P" . evedel-preview-directive-prompt)
         ("C-c e /" . evedel-directive-undo)
         ("C-c e ?" . (lambda ()
                        (interactive)
                        (evedel-directive-undo t)))))
#+end_src

** Planned Features

A checked box (=[X]=) marks a previously planned feature that has been implemented.

*** Instruction Navigation

- [X] Basic cyclic navigation between instructions across buffers

- [ ] Reference navigation based on a tag query

*** Reference Management

- [X] Reference categorization via tags

- [X] Filter references via tag query when sending directives

- [ ] Tag autocompletion when writing directive tag query

- [ ] Window references: describe to the model the contents of a particular Emacs window.

- [ ] Whole-Emacs references: describe to the model the entire status of the Emacs session.

- [X] Reference commentary

*** Directive Management

- [ ] Sequential execution of dependent directives

- [ ] Interactive directive result diff & extra procedures

- [ ] Automatic RAG

*** Interface

- [ ] Auto-saving/loading

- [X] Persistence with version control, e.g. switching branches should not mess up the instructions [1].

- [ ] Preservation of sub-instructions returned as part of a successful directive

- [X] Instruction undoing/redoing history

- [X] Better/more precise instruction selection resolution for tightly nested instructions

[1] While the current patching is able to fix outdated instructions pretty accurately, it is still a better idea to maintain a separate save file for each branch. This feature solves the problem where even the most minor change in the file completely broke the overlay structure.

*** Documentation

- [X] Ability to preview directive to be sent

- [ ] Instruction help tooltips

** Acknowledgments

- Special thanks to [[https://github.com/karthink][Karthik Chikmagalur]] for the excellent [[https://github.com/karthink/gptel][gptel]] package

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for evedel

Similar Open Source Tools

evedel

Evedel is an Emacs package designed to streamline the interaction with LLMs during programming. It aims to reduce manual code writing by creating detailed instruction annotations in the source files for LLM models. The tool leverages overlays to track instructions, categorize references with tags, and provide a seamless workflow for managing and processing directives. Evedel offers features like saving instruction overlays, complex query expressions for directives, and easy navigation through instruction overlays across all buffers. It is versatile and can be used in various types of buffers beyond just programming buffers.

CuMo

CuMo is a project focused on scaling multimodal Large Language Models (LLMs) with Co-Upcycled Mixture-of-Experts. It introduces CuMo, which incorporates Co-upcycled Top-K sparsely-gated Mixture-of-experts blocks into the vision encoder and the MLP connector, enhancing the capabilities of multimodal LLMs. The project adopts a three-stage training approach with auxiliary losses to stabilize the training process and maintain a balanced loading of experts. CuMo achieves comparable performance to other state-of-the-art multimodal LLMs on various Visual Question Answering (VQA) and visual-instruction-following benchmarks.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package designed for state-of-the-art timeseries forecasting using deep learning architectures. It offers a high-level API and leverages PyTorch Lightning for efficient training on GPU or CPU with automatic logging. The package aims to simplify timeseries forecasting tasks by providing a flexible API for professionals and user-friendly defaults for beginners. It includes features such as a timeseries dataset class for handling data transformations, missing values, and subsampling, various neural network architectures optimized for real-world deployment, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. Built on pytorch-lightning, it supports training on CPUs, single GPUs, and multiple GPUs out-of-the-box.

ludwig

Ludwig is a declarative deep learning framework designed for scale and efficiency. It is a low-code framework that allows users to build custom AI models like LLMs and other deep neural networks with ease. Ludwig offers features such as optimized scale and efficiency, expert level control, modularity, and extensibility. It is engineered for production with prebuilt Docker containers, support for running with Ray on Kubernetes, and the ability to export models to Torchscript and Triton. Ludwig is hosted by the Linux Foundation AI & Data.

lobe-chat

Lobe Chat is an open-source, modern-design ChatGPT/LLMs UI/Framework. Supports speech-synthesis, multi-modal, and extensible ([function call][docs-functionc-call]) plugin system. One-click **FREE** deployment of your private OpenAI ChatGPT/Claude/Gemini/Groq/Ollama chat application.

PURE

PURE (Process-sUpervised Reinforcement lEarning) is a framework that trains a Process Reward Model (PRM) on a dataset and fine-tunes a language model to achieve state-of-the-art mathematical reasoning capabilities. It uses a novel credit assignment method to calculate return and supports multiple reward types. The final model outperforms existing methods with minimal RL data or compute resources, achieving high accuracy on various benchmarks. The tool addresses reward hacking issues and aims to enhance long-range decision-making and reasoning tasks using large language models.

PowerInfer

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.

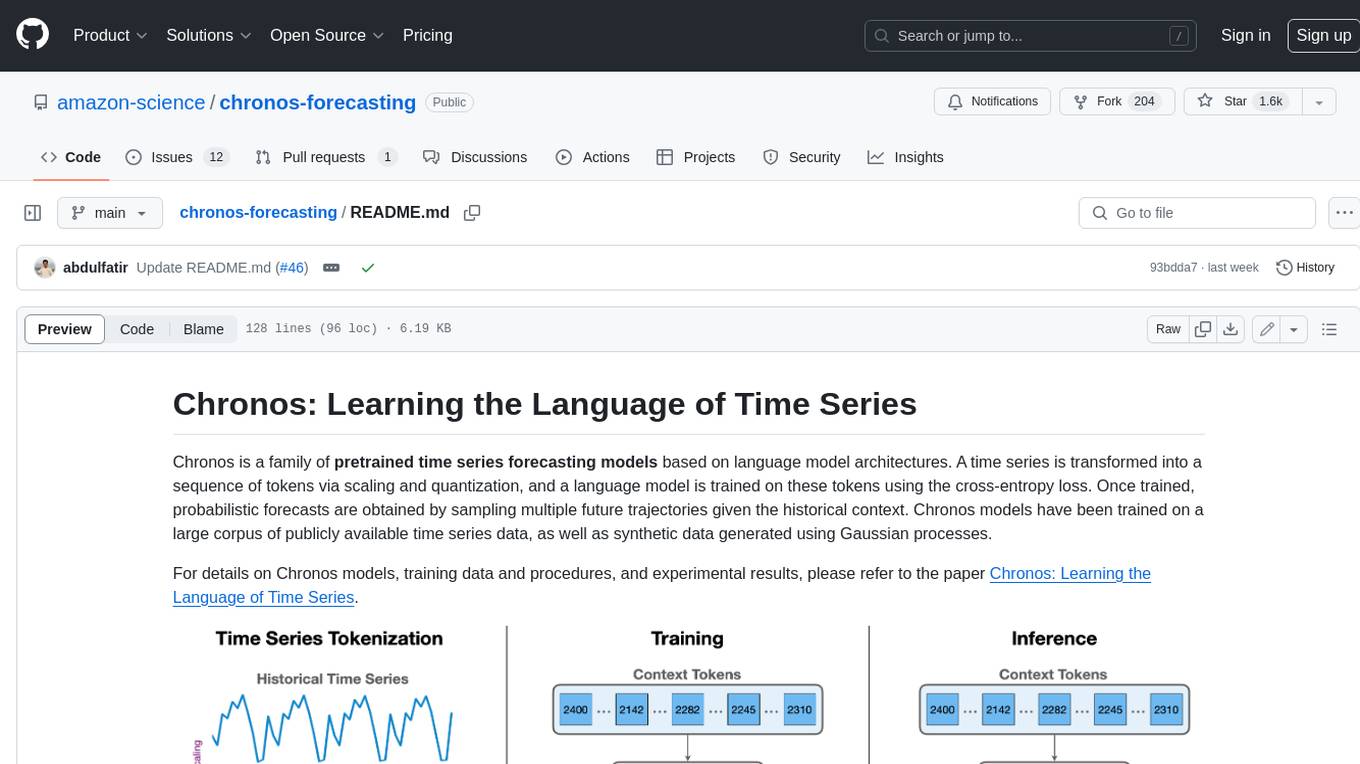

chronos-forecasting

Chronos is a family of pretrained time series forecasting models based on language model architectures. A time series is transformed into a sequence of tokens via scaling and quantization, and a language model is trained on these tokens using the cross-entropy loss. Once trained, probabilistic forecasts are obtained by sampling multiple future trajectories given the historical context. Chronos models have been trained on a large corpus of publicly available time series data, as well as synthetic data generated using Gaussian processes.

twelvet

Twelvet is a permission management system based on Spring Cloud Alibaba that serves as a framework for rapid development. It is a scaffolding framework based on microservices architecture, aiming to reduce duplication of business code and provide a common core business code for both microservices and monoliths. It is designed for learning microservices concepts and development, suitable for website management, CMS, CRM, OA, and other system development. The system is intended to quickly meet business needs, improve user experience, and save time by incubating practical functional points in lightweight, highly portable functional plugins.

LLMs-from-scratch

This repository contains the code for coding, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book Build a Large Language Model (From Scratch). In _Build a Large Language Model (From Scratch)_, you'll discover how LLMs work from the inside out. In this book, I'll guide you step by step through creating your own LLM, explaining each stage with clear text, diagrams, and examples. The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT.

EmbodiedScan

EmbodiedScan is a holistic multi-modal 3D perception suite designed for embodied AI. It introduces a multi-modal, ego-centric 3D perception dataset and benchmark for holistic 3D scene understanding. The dataset includes over 5k scans with 1M ego-centric RGB-D views, 1M language prompts, 160k 3D-oriented boxes spanning 760 categories, and dense semantic occupancy with 80 common categories. The suite includes a baseline framework named Embodied Perceptron, capable of processing multi-modal inputs for 3D perception tasks and language-grounded tasks.

kubesphere

KubeSphere is a distributed operating system for cloud-native application management, using Kubernetes as its kernel. It provides a plug-and-play architecture, allowing third-party applications to be seamlessly integrated into its ecosystem. KubeSphere is also a multi-tenant container platform with full-stack automated IT operation and streamlined DevOps workflows. It provides developer-friendly wizard web UI, helping enterprises to build out a more robust and feature-rich platform, which includes most common functionalities needed for enterprise Kubernetes strategy.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models, ensuring peak performance in various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMS for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.

SeerAttention

SeerAttention is a novel trainable sparse attention mechanism that learns intrinsic sparsity patterns directly from LLMs through self-distillation at post-training time. It achieves faster inference while maintaining accuracy for long-context prefilling. The tool offers features such as trainable sparse attention, block-level sparsity, self-distillation, efficient kernel, and easy integration with existing transformer architectures. Users can quickly start using SeerAttention for inference with AttnGate Adapter and training attention gates with self-distillation. The tool provides efficient evaluation methods and encourages contributions from the community.

eslint-config-airbnb-extended

A powerful ESLint configuration extending the popular Airbnb style guide, with added support for TypeScript. It provides a one-to-one replacement for old Airbnb ESLint configs, TypeScript support, customizable settings, pre-configured rules, and a CLI utility for quick setup. The package 'eslint-config-airbnb-extended' fully supports TypeScript to enforce consistent coding standards across JavaScript and TypeScript files. The 'create-airbnb-x-config' tool automates the setup of the ESLint configuration package and ensures correct ESLint rules application across JavaScript and TypeScript code.

semantic-router

The Semantic Router is an intelligent routing tool that utilizes a Mixture-of-Models (MoM) approach to direct OpenAI API requests to the most suitable models based on semantic understanding. It enhances inference accuracy by selecting models tailored to different types of tasks. The tool also automatically selects relevant tools based on the prompt to improve tool selection accuracy. Additionally, it includes features for enterprise security such as PII detection and prompt guard to protect user privacy and prevent misbehavior. The tool implements similarity caching to reduce latency. The comprehensive documentation covers setup instructions, architecture guides, and API references.

For similar tasks

evedel

Evedel is an Emacs package designed to streamline the interaction with LLMs during programming. It aims to reduce manual code writing by creating detailed instruction annotations in the source files for LLM models. The tool leverages overlays to track instructions, categorize references with tags, and provide a seamless workflow for managing and processing directives. Evedel offers features like saving instruction overlays, complex query expressions for directives, and easy navigation through instruction overlays across all buffers. It is versatile and can be used in various types of buffers beyond just programming buffers.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.