Awesome-local-LLM

A curated list of awesome platforms, tools, practices and resources that help run LLMs locally

Stars: 285

Awesome-local-LLM is a curated list of platforms, tools, practices, and resources that help run Large Language Models (LLMs) locally. It includes sections on inference platforms, engines, user interfaces, specific models for general purpose, coding, vision, audio, and miscellaneous tasks. The repository also covers tools for coding agents, agent frameworks, retrieval-augmented generation, computer use, browser automation, memory management, testing, evaluation, research, training, and fine-tuning. Additionally, there are tutorials on models, prompt engineering, context engineering, inference, agents, retrieval-augmented generation, and miscellaneous topics, along with a section on communities for LLM enthusiasts.

README:


- Inference platforms

- Inference engines

- User Interfaces

- Large Language Models

- Tools

- Hardware

- Tutorials

- Communities

- LM Studio - discover, download and run local LLMs

- jan - an open source alternative to ChatGPT that runs 100% offline on your computer

- ChatBox - user-friendly desktop client app for AI models/LLMs

- LocalAI - the free, open-source alternative to OpenAI, Claude and others

- lemonade - a local LLM server with GPU and NPU Acceleration

- ollama - get up and running with LLMs

- llama.cpp - LLM inference in C/C++

- vllm - a high-throughput and memory-efficient inference and serving engine for LLMs

- exo - run your own AI cluster at home with everyday devices

- sglang - a fast serving framework for large language models and vision language models

- koboldcpp - run GGUF models easily with a KoboldAI UI

- Nano-vLLM - a lightweight vLLM implementation built from scratch

- gpustack - simple, scalable AI model deployment on GPU clusters

- distributed-llama - connect home devices into a powerful cluster to accelerate LLM inference

- mlx-lm - generate text and fine-tune large language models on Apple silicon with MLX

- ik_llama.cpp - llama.cpp fork with additional SOTA quants and improved performance

- vllm-gfx906 - vLLM for AMD gfx906 GPUs, e.g. Radeon VII / MI50 / MI60

- FastFlowLM - run LLMs on AMD Ryzen™ AI NPUs

- llm-scaler - run LLMs on Intel Arc™ Pro B60 GPUs

-

Open WebUI - User-friendly AI Interface (Supports Ollama, OpenAI API, ...)

-

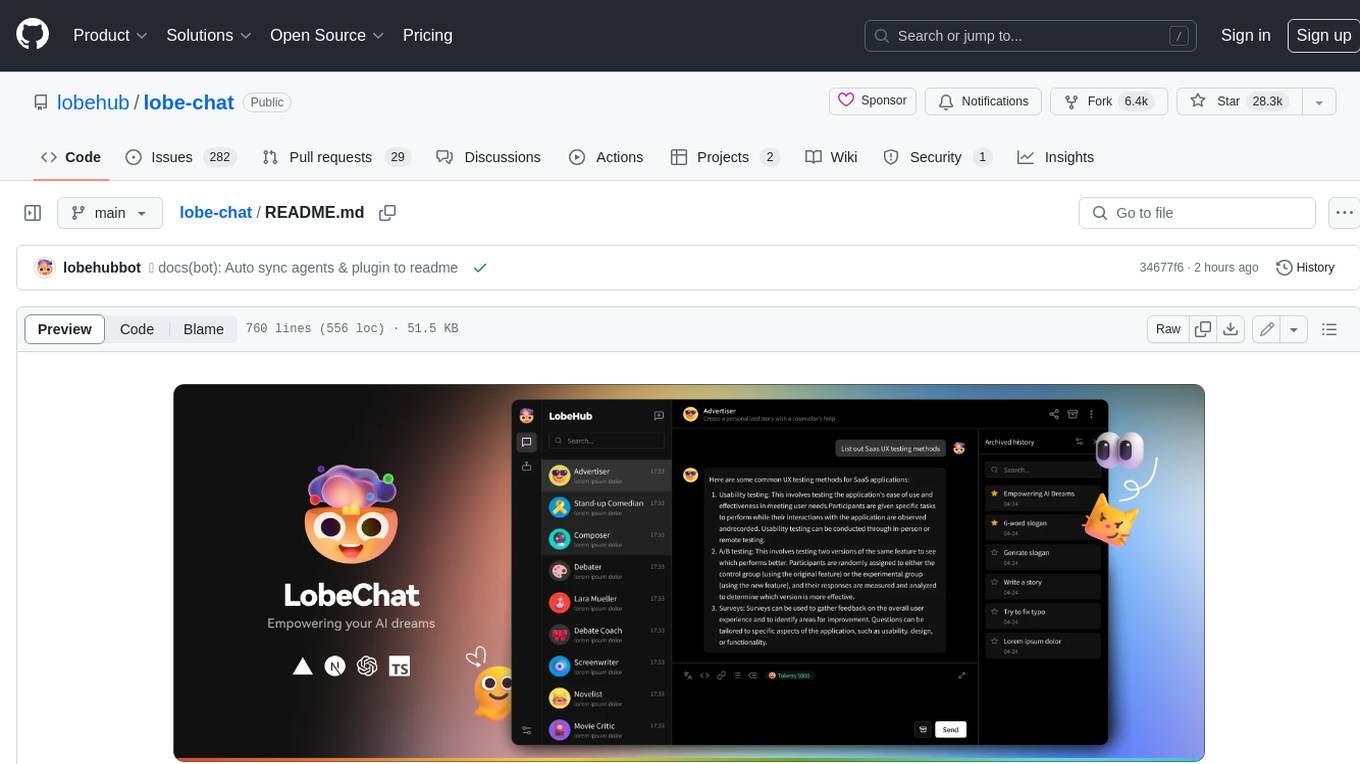

Lobe Chat - an open-source, modern design AI chat framework

-

Text generation web UI - LLM UI with advanced features, easy setup, and multiple backend support

-

SillyTavern - LLM Frontend for Power Users

-

Page Assist - Use your locally running AI models to assist you in your web browsing

- AI Models & API Providers Analysis - understand the AI landscape to choose the best model and provider for your use case

- LLM Explorer - explore a list of open-source LLMs

- Dubesor LLM Benchmark table - small-scale manual performance comparison benchmark

- oobabooga benchmark - a list sorted by size (on disk) for each score

- Qwen - powered by Alibaba Cloud

- Mistral AI - a pioneering French artificial intelligence startup

- Tencent - a Chinese multinational technology conglomerate and holding company

- Unsloth AI - focusing on making AI more accessible to everyone (GGUFs etc.)

- bartowski - providing GGUF versions of popular LLMs

- Beijing Academy of Artificial Intelligence - a private non-profit organization engaged in AI research and development

- Open Thoughts - a team of researchers and engineers curating the best open reasoning datasets

- Qwen3-Next - a collection of the latest generation Qwen LLMs

- Gemma 3 - a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models

- gpt-oss - a collection of open-weight models from OpenAI, designed for powerful reasoning, agentic tasks, and versatile developer use cases

- Mistral-Small-3.2-24B-Instruct-2506 - a versatile model designed to handle a wide range of generative AI tasks, including instruction following, conversational assistance, image understanding, and function calling

- Magistral-Small-2509 - a version of Mistral Small 3.2 (2506) with added reasoning capabilities

- GLM-4.5 - a collection of hybrid reasoning models designed for intelligent agents

- Hunyuan - a collection of Tencent's open-source efficient LLMs designed for versatile deployment across diverse computational environments

- Phi-4-mini-instruct - a lightweight open model built upon synthetic data and filtered publicly available websites

- NVIDIA Nemotron - a collection of open, production-ready enterprise models trained from scratch by NVIDIA

- Llama Nemotron - a collection of open, production-ready enterprise models from NVIDIA

- OpenReasoning-Nemotron - a collection of models from NVIDIA, trained on 5M reasoning traces for math, code and science

- Granite 3.3 - a collection of LLMs from IBM, fine-tuned for improved reasoning and instruction-following capabilities

- EXAONE-4.0 - a collection of LLMs from LG AI Research, integrating non-reasoning and reasoning modes

- ERNIE 4.5 - a collection of large-scale multimodal models from Baidu

- Seed-OSS - a collection of LLMs developed by ByteDance's Seed Team, designed for powerful long-context, reasoning, agent and general capabilities, and versatile developer-friendly features

- Qwen3-Coder - a collection of Qwen's most agentic code models to date

- Devstral-Small-2507 - an agentic LLM for software engineering tasks fine-tuned from Mistral-Small-3.1

- Mellum-4b-base - an LLM from JetBrains, optimized for code-related tasks

- OlympicCoder-32B - a code model that achieves very strong performance on competitive coding benchmarks such as LiveCodeBench and the 2024 International Olympiad in Informatics

- NextCoder - a family of code-editing LLMs developed using the Qwen2.5-Coder Instruct variants as base

- Qwen3-Omni - a collection of the natively end-to-end multilingual omni-modal foundation models from Qwen

- Qwen-Image - an image generation foundation model in the Qwen series that achieves significant advances in complex text rendering and precise image editing

- Qwen-Image-Edit-2509 - the image editing version of Qwen-Image extending the base model's unique text rendering capabilities to image editing tasks, enabling precise text editing

- GLM-4.5V - a vision-language model (VLM) based on ZhipuAI’s next-generation flagship text foundation model GLM-4.5-Air

- HunyuanImage-2.1 - an efficient diffusion model for high-resolution (2K) text-to-image generation

- FastVLM - a collection of VLMs with efficient vision encoding from Apple

- MiniCPM-V-4_5 - a GPT-4o Level MLLM for single image, multi image and high-FPS video understanding on your phone

- LFM2-VL - a collection of vision-language models designed for on-device deployment

- ClipTagger-12b - a vision-language model (VLM) designed for video understanding at massive scale

- Voxtral-Small-24B-2507 - an enhancement of Mistral Small 3, incorporating state-of-the-art audio input capabilities while retaining best-in-class text performance

- chatterbox - first production-grade open-source TTS model

- VibeVoice - a collection of frontier text-to-speech models from Microsoft

- canary-1b-v2 - a multitask speech transcription and translation model from NVIDIA

- parakeet-tdt-0.6b-v3 - a multilingual speech-to-text model from NVIDIA

- Kitten TTS - a collection of open-source realistic text-to-speech models designed for lightweight deployment and high-quality voice synthesis

- Jan-v1-4B - the first release in the Jan Family, designed for agentic reasoning and problem-solving within the Jan App

- Jan-nano - a compact 4-billion parameter language model specifically designed and trained for deep research tasks

- Jan-nano-128k - an enhanced version of Jan-nano with a native 128k context window, enabling deeper, more comprehensive research without the performance degradation typically associated with context extension methods

- Arch-Router-1.5B - the fastest LLM router model that aligns to subjective usage preferences

- HunyuanWorld-1 - an open-source 3D world generation model

- Hunyuan-GameCraft-1.0 - a novel framework for high-dynamic interactive video generation in game environments

- unsloth - fine-tuning & reinforcement learning for LLMs

- AutoGPT - a powerful platform that allows you to create, deploy, and manage continuous AI agents that automate complex workflows

- langchain - build context-aware reasoning applications

- langflow - a powerful tool for building and deploying AI-powered agents and workflows

- autogen - a programming framework for agentic AI

- anything-llm - the all-in-one Desktop & Docker AI application with built-in RAG, AI agents, No-code agent builder, MCP compatibility, and more

- llama_index - the leading framework for building LLM-powered agents over your data

- Flowise - build AI agents, visually

- crewAI - a framework for orchestrating role-playing, autonomous AI agents

- agno - a full-stack framework for building Multi-Agent Systems with memory, knowledge and reasoning

- SuperAGI - an open-source framework to build, manage and run useful Autonomous AI Agents

- camel - the first and the best multi-agent framework

- openai-agents-python - a lightweight, powerful framework for multi-agent workflows

- pydantic-ai - a Python agent framework designed to help you quickly, confidently, and painlessly build production grade applications and workflows with Generative AI

- txtai - all-in-one open-source AI framework for semantic search, LLM orchestration and language model workflows

- archgw - a high-performance proxy server that handles the low-level work of building agents, such as applying guardrails, routing prompts to the right agent, and unifying access to LLMs

- ClaraVerse - privacy-first, fully local AI workspace with Ollama LLM chat, tool calling, agent builder, Stable Diffusion, and embedded n8n-style automation

- ragbits - building blocks for rapid development of GenAI applications

- graphrag - a modular graph-based RAG system

- haystack - AI orchestration framework to build customizable, production-ready LLM applications, best suited for building RAG, question answering, semantic search or conversational agent chatbots

- LightRAG - simple and fast RAG

- vanna - an open-source Python RAG framework for SQL generation and related functionality

- graphiti - build real-time knowledge graphs for AI Agents

- onyx - the AI platform connected to your company's docs, apps, and people

- zed - a next-generation code editor designed for high-performance collaboration with humans and AI

- OpenHands - a platform for software development agents powered by AI

- cline - autonomous coding agent right in your IDE, capable of creating/editing files, executing commands, using the browser, and more with your permission every step of the way

- aider - AI pair programming in your terminal

- tabby - an open-source GitHub Copilot alternative, set up your own LLM-powered code completion server

- continue - create, share, and use custom AI code assistants with our open-source IDE extensions and hub of models, rules, prompts, docs, and other building blocks

- void - an open-source Cursor alternative, use AI agents on your codebase, checkpoint and visualize changes, and bring any model or host locally

- Roo-Code - a whole dev team of AI agents in your code editor

- goose - an open-source, extensible AI agent that goes beyond code suggestions

- opencode - an AI coding agent built for the terminal

- crush - the glamourous AI coding agent for your favourite terminal

- kilocode - open source AI coding assistant for planning, building, and fixing code

- ProxyAI - the leading open-source AI copilot for JetBrains

- open-interpreter - a natural language interface for computers

- OmniParser - a simple screen parsing tool for pure vision-based GUI agents

- self-operating-computer - a framework to enable multimodal models to operate a computer

- cua - the Docker Container for Computer-Use AI Agents

- Agent-S - an open agentic framework that uses computers like a human

- puppeteer - a JavaScript API for Chrome and Firefox

- playwright - a framework for Web Testing and Automation

- Playwright MCP server - an MCP server that provides browser automation capabilities using Playwright

- browser-use - make websites accessible for AI agents

- firecrawl - turn entire websites into LLM-ready markdown or structured data

- stagehand - the AI Browser Automation Framework

- mem0 - universal memory layer for AI Agents

- letta - the stateful agents framework with memory, reasoning, and context management

- cognee - memory for AI Agents in 5 lines of code

- LMCache - supercharge your LLM with the fastest KV Cache Layer

- memU - an open-source memory framework for AI companions

- langfuse - an open-source LLM engineering platform: LLM Observability, metrics, evals, prompt management, playground, datasets. Integrates with OpenTelemetry, Langchain, OpenAI SDK, LiteLLM, and more

- opik - debug, evaluate, and monitor your LLM applications, RAG systems, and agentic workflows with comprehensive tracing, automated evaluations, and production-ready dashboards

- openllmetry - open-source observability for your LLM application, based on OpenTelemetry

- giskard - open-source evaluation & testing for AI & LLM systems

- agenta - an open-source LLMOps platform: prompt playground, prompt management, LLM evaluation, and LLM observability all in one place

- Perplexica - an open-source alternative to Perplexity AI, the AI-powered search engine

- gpt-researcher - an LLM-based autonomous agent that conducts deep local and web research on any topic and generates a long report with citations

- local-deep-researcher - fully local web research and report writing assistant

- SurfSense - an open-source alternative to NotebookLM / Perplexity / Glean

- local-deep-research - an AI-powered research assistant for deep, iterative research

- maestro - an AI-powered research application designed to streamline complex research tasks

- open-notebook - an open-source implementation of NotebookLM with more flexibility and features

- OpenRLHF - an easy-to-use, high-performance open-source RLHF framework built on Ray, vLLM, ZeRO-3 and HuggingFace Transformers, designed to make RLHF training simple and accessible

- Kiln - the easiest tool for fine-tuning LLM models, synthetic data generation, and collaborating on datasets

- augmentoolkit - train an open-source LLM on new facts

- context7 - up-to-date code documentation for LLMs and AI code editors

- cai - Cybersecurity AI (CAI), the framework for AI Security

- speakr - a personal, self-hosted web application designed for transcribing audio recordings

- presenton - an open-source AI presentation generator and API

- OmniGen2 - exploration to advanced multimodal generation

- 4o-ghibli-at-home - a powerful, self-hosted AI photo stylizer built for performance and privacy

- Observer - local open-source micro-agents that observe, log and react, all while keeping your data private and secure

- mobile-use - a powerful, open-source AI agent that controls your Android or iOS device using natural language

- gabber - build AI applications that can see, hear, and speak using your screens, microphones, and cameras as inputs

- promptcat - a zero-dependency prompt manager/catalog/library in a single HTML file

- Alex Ziskind - tests of PCs, laptops, GPUs, etc. capable of running LLMs

- Digital Spaceport - reviews of various builds designed for LLM inference

- JetsonHacks - information about developing on NVIDIA Jetson Development Kits

- Miyconst - tests of various types of hardware capable of running LLMs

- LLM Inference VRAM & GPU Requirement Calculator - calculate how many GPUs you need to deploy LLMs

- ZLUDA - CUDA on non-NVIDIA GPUs

- Prompt Engineering by NirDiamant - a comprehensive collection of tutorials and implementations for Prompt Engineering techniques, ranging from fundamental concepts to advanced strategies

- Prompting guide 101 - a quick-start handbook for effective prompts by Google

- Prompt Engineering by Google - prompt engineering by Google

- Prompt Engineering by Anthropic - prompt engineering by Anthropic

- Prompt Engineering Interactive Tutorial - Prompt Engineering Interactive Tutorial by Anthropic

- Real world prompting - real world prompting tutorial by Anthropic

- Prompt evaluations - prompt evaluations course by Anthropic

- system-prompts-and-models-of-ai-tools - a collection of system prompts extracted from AI tools

- system_prompts_leaks - a collection of extracted System Prompts from popular chatbots like ChatGPT, Claude & Gemini

- Prompt from Codex - Prompt used to steer behavior of OpenAI's Codex

- Context-Engineering - a frontier, first-principles handbook inspired by Karpathy and 3Blue1Brown for moving beyond prompt engineering to the wider discipline of context design, orchestration, and optimization

- Awesome-Context-Engineering - a comprehensive survey on Context Engineering: from prompt engineering to production-grade AI systems

- vLLM Production Stack - vLLM’s reference system for K8S-native cluster-wide deployment with community-driven performance optimization

- GenAI Agents - tutorials and implementations for various Generative AI Agent techniques

- 12-Factor Agents - principles for building reliable LLM applications

- Agents towards production - end-to-end, code-first tutorials covering every layer of production-grade GenAI agents, guiding you from spark to scale with proven patterns and reusable blueprints for real-world launches

- LLM Agents & Ecosystem Handbook - one-stop handbook for building, deploying, and understanding LLM agents with 60+ skeletons, tutorials, ecosystem guides, and evaluation tools

- 601 real-world gen AI use cases - 601 real-world gen AI use cases from the world's leading organizations by Google

- A practical guide to building agents - a practical guide to building agents by OpenAI

- RAG Techniques - various advanced techniques for Retrieval-Augmented Generation (RAG) systems

- Controllable RAG Agent - an advanced Retrieval-Augmented Generation (RAG) solution for complex question answering that uses a sophisticated graph-based algorithm to handle the tasks

- LangChain RAG Cookbook - a collection of modular RAG techniques, implemented in LangChain + Python
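Many of the inference platforms and engines listed above can serve models over an OpenAI-compatible HTTP API; ollama, llama.cpp's `llama-server`, vLLM and LM Studio all offer a `/v1/chat/completions` endpoint. The minimal sketch below builds and sends such a request using only the Python standard library. The base URLs assume each server's usual default port (11434 for ollama, 8080 for `llama-server`, 8000 for vLLM, 1234 for LM Studio) and may differ in your setup; `LOCAL_SERVERS`, `build_chat_request` and `chat` are illustrative names, not part of any listed project.

```python
import json
import urllib.request

# Assumed default base URLs; change host/port to match your own setup.
LOCAL_SERVERS = {
    "ollama": "http://localhost:11434/v1",
    "llama-server": "http://localhost:8080/v1",
    "vllm": "http://localhost:8000/v1",
    "lmstudio": "http://localhost:1234/v1",
}


def build_chat_request(base_url: str, model: str, prompt: str,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "stream": False,  # ask for one JSON response instead of streamed chunks
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request to a running local server and return the reply text."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-compatible servers put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With ollama running and a model pulled, a call such as `chat(LOCAL_SERVERS["ollama"], "llama3.2", "Say hello in one sentence.")` would return the reply text; `llama3.2` is only a placeholder model name. Because the request shape is shared, switching backends is mostly a matter of changing the base URL and model name.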

We welcome contributions! Please see CONTRIBUTING.md for guidelines on how to get started.


Alternative AI tools for Awesome-local-LLM

Similar Open Source Tools


lobe-chat

Lobe Chat is an open-source, modern-design ChatGPT/LLMs UI/framework. It supports speech synthesis, multimodal input, and an extensible (function call) plugin system, and offers one-click free deployment of your private OpenAI ChatGPT/Claude/Gemini/Groq/Ollama chat application.

SLAM-LLM

SLAM-LLM is a deep learning toolkit designed for researchers and developers to train custom multimodal large language models (MLLM) focusing on speech, language, audio, and music processing. It provides detailed recipes for training and high-performance checkpoints for inference. The toolkit supports tasks such as automatic speech recognition (ASR), text-to-speech (TTS), visual speech recognition (VSR), automated audio captioning (AAC), spatial audio understanding, and music caption (MC). SLAM-LLM features easy extension to new models and tasks, mixed precision training for faster training with less GPU memory, multi-GPU training with data and model parallelism, and flexible configuration based on Hydra and dataclass.

LLMGA

LLMGA (Multimodal Large Language Model-based Generation Assistant) is a tool that leverages Large Language Models (LLMs) to assist users in image generation and editing. It provides detailed language generation prompts for precise control over Stable Diffusion (SD), resulting in more intricate and precise content in generated images. The tool curates a dataset for prompt refinement, similar image generation, inpainting & outpainting, and visual question answering. It offers a two-stage training scheme to optimize SD alignment and a reference-based restoration network to alleviate texture, brightness, and contrast disparities in image editing. LLMGA shows promising generative capabilities and enables wider applications in an interactive manner.

Crypto-Nft-Airdrop-Tool

Crypto-Nft-Airdrop-Tool is a Python tool designed for conducting airdrops of NFTs in the crypto space. It provides functionality for distributing NFTs to a specified audience efficiently. The tool is compatible with Windows platform and requires Python 3. Users can easily manage and execute airdrop campaigns using this tool, enhancing their engagement with the NFT community. The tool simplifies the process of distributing NFTs and ensures a seamless experience for both creators and recipients.

DocsGPT

DocsGPT is an open-source documentation assistant powered by GPT models. It simplifies the process of searching for information in project documentation by allowing developers to ask questions and receive accurate answers. With DocsGPT, users can say goodbye to manual searches and quickly find the information they need. The tool aims to revolutionize project documentation experiences and offers features like live previews, Discord community, guides, and contribution opportunities. It consists of a Flask app, Chrome extension, similarity search index creation script, and a frontend built with Vite and React. Users can quickly get started with DocsGPT by following the provided setup instructions and can contribute to its development by following the guidelines in the CONTRIBUTING.md file. The project follows a Code of Conduct to ensure a harassment-free community environment for all participants. DocsGPT is licensed under MIT and is built with LangChain.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

FuseAI

FuseAI is a repository that focuses on knowledge fusion of large language models. It includes FuseChat, a state-of-the-art 7B LLM on MT-Bench, and FuseLLM, which surpasses Llama-2-7B by fusing three open-source foundation LLMs. The repository provides tech reports, releases, and datasets for FuseChat and FuseLLM, showcasing their performance and advancements in the field of chat models and large language models.

AutoPatent

AutoPatent is a multi-agent framework designed for automatic patent generation. It challenges large language models to generate full-length patents based on initial drafts. The framework leverages planner, writer, and examiner agents along with PGTree and RRAG to craft lengthy, intricate, and high-quality patent documents. It introduces a new metric, IRR (Inverse Repetition Rate), to measure sentence repetition within patents. The tool aims to streamline the patent generation process by automating the creation of detailed and specialized patent documents.

FinRobot

FinRobot is an open-source AI agent platform designed for financial applications using large language models. It transcends the scope of FinGPT, offering a comprehensive solution that integrates a diverse array of AI technologies. The platform's versatility and adaptability cater to the multifaceted needs of the financial industry. FinRobot's ecosystem is organized into four layers, including Financial AI Agents Layer, Financial LLMs Algorithms Layer, LLMOps and DataOps Layers, and Multi-source LLM Foundation Models Layer. The platform's agent workflow involves Perception, Brain, and Action modules to capture, process, and execute financial data and insights. The Smart Scheduler optimizes model diversity and selection for tasks, managed by components like Director Agent, Agent Registration, Agent Adaptor, and Task Manager. The tool provides a structured file organization with subfolders for agents, data sources, and functional modules, along with installation instructions and hands-on tutorials.

semantic-router

The Semantic Router is an intelligent routing tool that utilizes a Mixture-of-Models (MoM) approach to direct OpenAI API requests to the most suitable models based on semantic understanding. It enhances inference accuracy by selecting models tailored to different types of tasks. The tool also automatically selects relevant tools based on the prompt to improve tool selection accuracy. Additionally, it includes features for enterprise security such as PII detection and prompt guard to protect user privacy and prevent misbehavior. The tool implements similarity caching to reduce latency. The comprehensive documentation covers setup instructions, architecture guides, and API references.
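The routing and similarity-caching ideas described above can be illustrated with a toy sketch: each candidate model (route) is described by a few example prompts, an incoming prompt goes to the route whose best-matching example has the highest cosine similarity, and a small cache reuses an earlier decision when a new prompt is nearly identical to one already routed. This is not semantic-router's implementation or API; `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the class name, route names and 0.95 threshold are assumptions for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for an embedding model: L2-normalised word counts."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit-length, so the dot product equals the cosine.
    return sum(v * b.get(k, 0.0) for k, v in a.items())


class SemanticRouter:
    def __init__(self, routes: dict[str, list[str]], cache_threshold: float = 0.95):
        # Pre-compute one embedding per example prompt for every route.
        self.routes = {name: [embed(u) for u in examples]
                       for name, examples in routes.items()}
        self.cache: list[tuple[dict[str, float], str]] = []
        self.cache_threshold = cache_threshold

    def route(self, prompt: str) -> str:
        query = embed(prompt)
        # Similarity cache: reuse the decision made for a near-duplicate prompt.
        for cached_vec, cached_route in self.cache:
            if cosine(query, cached_vec) >= self.cache_threshold:
                return cached_route
        # Otherwise choose the route whose closest example is most similar.
        best = max(self.routes,
                   key=lambda name: max(cosine(query, e) for e in self.routes[name]))
        self.cache.append((query, best))
        return best
```

For instance, with a `"code-model"` route built from prompts like "write a python function" and a `"chat-model"` route built from "tell me a joke", a prompt asking to write a Python function is routed to `"code-model"`. A production router would swap `embed` for a real embedding model, but the selection and caching logic keeps the same shape.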

CHATPGT-MEV-BOT-ETH

This tool is a bot that monitors the performance of MEV transactions on the Ethereum blockchain. It provides real-time data on MEV profitability, transaction volume, and network congestion. The bot can be used to identify profitable MEV opportunities and to track the performance of MEV strategies.

agentgateway

Agentgateway is an open source data plane optimized for agentic AI connectivity within or across any agent framework or environment. It provides drop-in security, observability, and governance for agent-to-agent and agent-to-tool communication, supporting leading interoperable protocols like Agent2Agent (A2A) and Model Context Protocol (MCP). Highly performant, security-first, multi-tenant, dynamic, and supporting legacy API transformation, agentgateway is designed to handle any scale and run anywhere with any agent framework.

IvyGPT

IvyGPT is a medical large language model that aims to generate the most realistic doctor consultation effects. It has been fine-tuned on high-quality medical Q&A data and trained using reinforcement learning from human feedback (RLHF). The project features full-process training on medical Q&A LLM, multiple fine-tuning methods support, efficient dataset creation tools, and a dataset of over 300,000 high-quality doctor-patient dialogues for training.

dash-infer

DashInfer is a C++ runtime tool designed to deliver production-level implementations highly optimized for various hardware architectures, including x86 and ARMv9. It supports Continuous Batching and NUMA-Aware capabilities for CPU, and can fully utilize modern server-grade CPUs to host large language models (LLMs) up to 14B in size. With lightweight architecture, high precision, support for mainstream open-source LLMs, post-training quantization, optimized computation kernels, NUMA-aware design, and multi-language API interfaces, DashInfer provides a versatile solution for efficient inference tasks. It supports x86 CPUs with AVX2 instruction set and ARMv9 CPUs with SVE instruction set, along with various data types like FP32, BF16, and InstantQuant. DashInfer also offers single-NUMA and multi-NUMA architectures for model inference, with detailed performance tests and inference accuracy evaluations available. The tool is supported on mainstream Linux server operating systems and provides documentation and examples for easy integration and usage.
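Continuous batching, mentioned above, means the scheduler admits waiting requests into the running batch as soon as earlier ones finish, instead of waiting for the whole batch to drain. The toy simulation below contrasts it with static batching by counting decode steps. It is a sketch under simplifying assumptions (each request is reduced to a number of tokens to generate, every active request produces exactly one token per step, prefill is ignored) and does not reflect DashInfer's actual scheduler; the function names are illustrative.

```python
from collections import deque


def static_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Decode steps when each batch must fully finish before the next starts."""
    steps = 0
    for i in range(0, len(lengths), max_batch):
        # A static batch occupies the GPU for as long as its longest request.
        steps += max(lengths[i:i + max_batch])
    return steps


def continuous_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Decode steps when finished requests are immediately replaced by waiting ones."""
    waiting = deque(lengths)
    running: list[int] = []  # remaining tokens for each active request
    steps = 0
    while waiting or running:
        # Fill any free batch slots before the next decode step.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step emits one token per active request; drop finished ones.
        steps += 1
        running = [r - 1 for r in running if r > 1]
    return steps
```

With one long request and several short ones, for example `lengths = [8, 1, 1, 1, 1, 1, 1, 1]` and `max_batch = 2`, static batching needs 11 steps because the short requests wait behind the long one, while continuous batching finishes in 8 by cycling the short requests through the free slot.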

For similar tasks

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

agent-os

The Agent OS is an experimental framework and runtime to build sophisticated, long running, and self-coding AI agents. We believe that the most important super-power of AI agents is to write and execute their own code to interact with the world. But for that to work, they need to run in a suitable environment—a place designed to be inhabited by agents. The Agent OS is designed from the ground up to function as a long-term computing substrate for these kinds of self-evolving agents.

chatdev

ChatDev IDE is a tool for building your AI agent. Whether it's NPCs in games or powerful agent tools, you can design what you want on this platform. It accelerates prompt engineering through JavaScript support that allows implementing complex prompting techniques.

module-ballerinax-ai.agent

This library provides the functionality required to build ReAct agents using Large Language Models (LLMs).
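ReAct interleaves model reasoning with tool use: the model emits a thought and an action, the runtime executes the named tool and appends the observation to the transcript, and the loop repeats until the model produces a final answer. The minimal Python sketch below shows that loop. It is unrelated to the Ballerina library's API; the `Action: tool[input]` / `Final Answer:` text format is an assumption, and `scripted_llm` is a hypothetical stand-in for a real model call.

```python
import math
import re
from typing import Callable

# Assumed action syntax, e.g. "Action: multiply[6*7]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def react_loop(llm: Callable[[str], str], tools: dict[str, Callable[[str], str]],
               question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model returns Thought + Action, or a Final Answer
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: no valid action found\n"
            continue
        name, arg = match.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        # Feed the tool result back so the next model call can use it.
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


def multiply(expr: str) -> str:
    """Toy tool: multiplies integers written as 'a*b*c'."""
    return str(math.prod(int(x) for x in expr.split("*")))


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for an LLM, for demonstration only."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply the numbers.\nAction: multiply[6*7]"
    return "Thought: The tool returned the product.\nFinal Answer: 42"


if __name__ == "__main__":
    print(react_loop(scripted_llm, {"multiply": multiply}, "What is 6*7?"))  # prints 42
```

In a real agent, `scripted_llm` would be replaced by a call to a local model whose prompt describes the available tools and the expected action format.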

npi

NPi is an open-source platform providing Tool-use APIs to empower AI agents with the ability to take action in the virtual world. It is currently under active development, and the APIs are subject to change in future releases. NPi offers a command line tool for installation and setup, along with a GitHub app for easy access to repositories. The platform also includes a Python SDK and examples like Calendar Negotiator and Twitter Crawler. Join the NPi community on Discord to contribute to the development and explore the roadmap for future enhancements.

ai-agents

The 'ai-agents' repository is a collection of books and resources focused on developing AI agents, including topics such as GPT models, building AI agents from scratch, machine learning theory and practice, and basic methods and tools for data analysis. The repository provides detailed explanations and guidance for individuals interested in learning about and working with AI agents.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

ai-app

The 'ai-app' repository is a comprehensive collection of tools and resources related to artificial intelligence, focusing on topics such as server environment setup, PyCharm and Anaconda installation, large model deployment and training, Transformer principles, RAG technology, vector databases, AI image, voice, and music generation, and AI Agent frameworks. It also includes practical guides and tutorials on implementing various AI applications. The repository serves as a valuable resource for individuals interested in exploring different aspects of AI technology.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.
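Choosing presets or provisioning GPU nodes "based on model requirements" ultimately comes down to estimating how much GPU memory a model needs. A common back-of-the-envelope estimate adds the weight memory (parameters × bytes per parameter) to the KV cache (2 × layers × KV heads × head dimension × context length × batch size × bytes per element). The sketch below applies it; the flat 20% overhead, the FP16 KV cache default and the example model shape are assumptions for illustration, not Kaito's presets, and `gpus_needed` idealizes memory as splitting evenly across GPUs.

```python
import math


def estimate_vram_gb(params_billion: float, bytes_per_param: float,
                     n_layers: int, n_kv_heads: int, head_dim: int,
                     context_len: int, batch_size: int = 1,
                     kv_bytes: float = 2.0, overhead: float = 0.20) -> float:
    """Rough VRAM estimate in GB (1 GB = 1e9 bytes) for serving one model."""
    weights = params_billion * 1e9 * bytes_per_param
    # K and V tensors for every layer, KV head, position and sequence in the batch.
    kv_cache = 2 * n_layers * n_kv_heads * head_dim * context_len * batch_size * kv_bytes
    # Assumed flat overhead for activations, runtime buffers, fragmentation, etc.
    return (weights + kv_cache) * (1 + overhead) / 1e9


def gpus_needed(vram_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of identical GPUs whose combined memory covers the estimate."""
    return math.ceil(vram_gb / gpu_memory_gb)
```

For a hypothetical 8B-parameter model with 32 layers, 8 KV heads and head dimension 128, served in FP16 (2 bytes per parameter) with an 8192-token context, the estimate is (16 GB + about 1.07 GB) × 1.2 ≈ 20.5 GB: one 24 GB GPU, or two 16 GB GPUs. A 4-bit quantization (0.5 bytes per parameter) drops it to about 6.1 GB.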

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.