Awesome_Mamba

Computation-Efficient Era: A Comprehensive Survey of State Space Models in Medical Image Analysis

Stars: 125

Awesome Mamba is a curated collection of research papers and articles on the Mamba architecture, a deep learning framework built on selective state space models and known for processing long sequences efficiently. The repository groups the papers by domain: visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data.

README:

Awesome Mamba

🔥🔥 This is a collection of awesome articles about Mamba models (with a particular emphasis on Medical Image Analysis) 🔥🔥

- Our survey paper on arXiv: Computation-Efficient Era: A Comprehensive Survey of State Space Models in Medical Image Analysis ❤️

@misc{heidari2024computationefficient,
  title={Computation-Efficient Era: A Comprehensive Survey of State Space Models in Medical Image Analysis},
  author={Moein Heidari and Sina Ghorbani Kolahi and Sanaz Karimijafarbigloo and Bobby Azad and Afshin Bozorgpour and Soheila Hatami and Reza Azad and Ali Diba and Ulas Bagci and Dorit Merhof and Ilker Hacihaliloglu},
  year={2024},
  eprint={2406.03430},
  archivePrefix={arXiv},
  primaryClass={eess.IV}
}

- 😎 First release: June 05, 2024

- Survey Papers

- Architecture Redesign

- Remote Sensing

- Speech Processing

- Video Processing

- Activity Recognition

- Image Enhancement

- Image & Video Generation

- Medical Imaging

- Image Segmentation

- Reinforcement Learning

- Natural Language Processing

- 3D Recognition

- Multi-Modal Understanding

- Time Series

- GNN

- Point Cloud

- Tabular Data

- From Generalization Analysis to Optimization Designs for State Space Models

- MambaOut: Do We Really Need Mamba for Vision? [Github]

- State Space Model for New-Generation Network Alternative to Transformers: A Survey [Github]

- A Survey on Visual Mamba

- Mamba-360: Survey of State Space Models as Transformer Alternative for Long Sequence Modelling: Methods, Applications, and Challenges [Github]

- Vision Mamba: A Comprehensive Survey and Taxonomy [Github]

- A Survey on Vision Mamba: Models, Applications and Challenges [Github]

- HiPPO: Recurrent Memory with Optimal Polynomial Projections [Github]

- S4: Efficiently Modeling Long Sequences with Structured State Spaces [Github]

- H3: Hungry Hungry Hippos: Toward Language Modeling with State Space Models [Github]

- LOCOST: State-Space Models for Long Document Abstractive Summarization [Github]

- Theoretical Foundations of Deep Selective State-Space Models

- S4++: Elevating Long Sequence Modeling with State Memory Reply

- Hieros: Hierarchical Imagination on Structured State Space Sequence World Models [Github]

- State Space Models as Foundation Models: A Control Theoretic Overview

- Selective Structured State-Spaces for Long-Form Video Understanding

- Retentive Network: A Successor to Transformer for Large Language Models [Github]

- Convolutional State Space Models for Long-Range Spatiotemporal Modeling [Github]

- Laughing Hyena Distillery: Extracting Compact Recurrences From Convolutions [Github]

- Resurrecting Recurrent Neural Networks for Long Sequences

- Hyena Hierarchy: Towards Larger Convolutional Language Models [Github]

- Mamba: Linear-time sequence modeling with selective state spaces [Github]

- Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality [Github]

- Locating and Editing Factual Associations in Mamba [Github]

- MambaMixer: Efficient Selective State Space Models with Dual Token and Channel Selection [Github]

- Jamba: A Hybrid Transformer-Mamba Language Model

- Mamba-ND: Selective State Space Modeling for Multi-Dimensional Data [Github]

- Incorporating Exponential Smoothing into MLP: A Simple but Effective Sequence Model [Github]

- PlainMamba: Improving Non-Hierarchical Mamba in Visual Recognition [Github]

- Understanding Robustness of Visual State Space Models for Image Classification

- Efficientvmamba: Atrous selective scan for light weight visual mamba [Github]

- Localmamba: Visual state space model with windowed selective scan [Github]

- Mamba4Rec: Towards Efficient Sequential Recommendation with Selective State Space Models [Github]

- The hidden attention of mamba models [Github]

- Learning method for S4 with Diagonal State Space Layers using Balanced Truncation

- BlackMamba: Mixture of Experts for State-Space Models [Github]

- MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts [Github]

- Scalable Diffusion Models with State Space Backbone [Github]

- ZigMa: Zigzag Mamba Diffusion Model [Github]

- Spectral State Space Models

- Mamba-unet: Unet-like pure visual mamba for medical image segmentation [Github]

- Mambabyte: Token-free selective state space model

- Vmamba: Visual state space model [Github]

- Vision mamba: Efficient visual representation learning with bidirectional state space model [Github]

- ChangeMamba: Remote Sensing Change Detection with Spatio-Temporal State Space Model [Github]

- RS-Mamba for Large Remote Sensing Image Dense Prediction [Github]

- RS3Mamba: Visual State Space Model for Remote Sensing Images Semantic Segmentation [Github]

- HSIMamba: Hyperspectral Imaging Efficient Feature Learning with Bidirectional State Space for Classification [Github]

- Rsmamba: Remote sensing image classification with state space model [Github]

- Samba: Semantic Segmentation of Remotely Sensed Images with State Space Model [Github]

- SPMamba: State-space model is all you need in speech separation [Github]

- Dual-path Mamba: Short and Long-term Bidirectional Selective Structured State Space Models for Speech Separation [Github]

- SpikeMba: Multi-Modal Spiking Saliency Mamba for Temporal Video Grounding

- Video mamba suite: State space model as a versatile alternative for video understanding [Github]

- SSM Meets Video Diffusion Models: Efficient Video Generation with Structured State Spaces [Github]

- Videomamba: State space model for efficient video understanding [Github]

- HARMamba: Efficient Wearable Sensor Human Activity Recognition Based on Bidirectional Selective SSM

- VMRNN: Integrating Vision Mamba and LSTM for Efficient and Accurate Spatiotemporal Forecasting [Github]

- Aggregating Local and Global Features via Selective State Spaces Model for Efficient Image Deblurring

- Serpent: Scalable and Efficient Image Restoration via Multi-scale Structured State Space Models

- VmambaIR: Visual State Space Model for Image Restoration [Github]

- Activating Wider Areas in Image Super-Resolution [Github]

- MambaIR: A Simple Baseline for Image Restoration with State-Space Model [Github]

- Pan-Mamba: Effective pan-sharpening with State Space Model [Github]

- U-shaped Vision Mamba for Single Image Dehazing [Github]

- Vim4Path: Self-Supervised Vision Mamba for Histopathology Images [Github]

- VMambaMorph: a Visual Mamba-based Framework with Cross-Scan Module for Deformable 3D Image Registration [Github]

- UltraLight VM-UNet: Parallel Vision Mamba Significantly Reduces Parameters for Skin Lesion Segmentation [Github]

- Rotate to Scan: UNet-like Mamba with Triplet SSM Module for Medical Image Segmentation

- Integrating Mamba Sequence Model and Hierarchical Upsampling Network for Accurate Semantic Segmentation of Multiple Sclerosis Legion

- CMViM: Contrastive Masked Vim Autoencoder for 3D Multi-modal Representation Learning for AD classification

- H-vmunet: High-order vision mamba unet for medical image segmentation [Github]

- ProMamba: Prompt-Mamba for polyp segmentation

- Vm-unet-v2 rethinking vision mamba unet for medical image segmentation [Github]

- MD-Dose: A Diffusion Model based on the Mamba for Radiotherapy Dose Prediction [Github]

- Large Window-based Mamba UNet for Medical Image Segmentation: Beyond Convolution and Self-attention [Github]

- MambaMIL: Enhancing Long Sequence Modeling with Sequence Reordering in Computational Pathology [Github]

- Clinicalmamba: A generative clinical language model on longitudinal clinical notes [Github]

- Lightm-unet: Mamba assists in lightweight unet for medical image segmentation [Github]

- MedMamba: Vision Mamba for Medical Image Classification [Github]

- Weak-Mamba-UNet: Visual Mamba Makes CNN and ViT Work Better for Scribble-based Medical Image Segmentation [Github]

- P-Mamba: Marrying Perona Malik Diffusion with Mamba for Efficient Pediatric Echocardiographic Left Ventricular Segmentation

- Semi-Mamba-UNet: Pixel-Level Contrastive Cross-Supervised Visual Mamba-based UNet for Semi-Supervised Medical Image Segmentation [Github]

- FD-Vision Mamba for Endoscopic Exposure Correction [Github]

- Swin-umamba: Mamba-based unet with imagenet-based pretraining [Github]

- Vm-unet: Vision mamba unet for medical image segmentation [Github]

- Vivim: a video vision mamba for medical video object segmentation [Github]

- Segmamba: Long-range sequential modeling mamba for 3d medical image segmentation [Github]

- T-Mamba: Frequency-Enhanced Gated Long-Range Dependency for Tooth 3D CBCT Segmentation [Github]

- U-mamba: Enhancing long-range dependency for biomedical image segmentation [Github]

- MambaMorph: a Mamba-based Backbone with Contrastive Feature Learning for Deformable MR-CT Registration [Github]

- nnMamba: 3D Biomedical Image Segmentation, Classification and Landmark Detection with State Space Model [Github]

- MambaMIR: An Arbitrary-Masked Mamba for Joint Medical Image Reconstruction and Uncertainty Estimation [Github]

- ViM-UNet: Vision Mamba for Biomedical Segmentation [Github]

- VM-DDPM: Vision Mamba Diffusion for Medical Image Synthesis

- HC-Mamba: Vision MAMBA with Hybrid Convolutional Techniques for Medical Image Segmentation

- I2I-Mamba: Multi-modal Medical Image Synthesis via Selective State Space Modeling [Github]

- Decision Mamba: Reinforcement Learning via Sequence Modeling with Selective State Spaces [Github]

- MAMBA: an Effective World Model Approach for Meta-Reinforcement Learning [Github]

- RankMamba: Benchmarking Mamba's Document Ranking Performance in the Era of Transformers [Github]

- Densemamba: State space models with dense hidden connection for efficient large language models [Github]

- Is Mamba Capable of In-Context Learning?

- Gamba: Marry Gaussian Splatting with Mamba for single view 3D reconstruction

- Motion mamba: Efficient and long sequence motion generation with hierarchical and bidirectional selective ssm [Github]

- Meteor: Mamba-based Traversal of Rationale for Large Language and Vision Models [Github]

- ReMamber: Referring Image Segmentation with Mamba Twister

- VL-Mamba: Exploring State Space Models for Multimodal Learning

- Cobra: Extending Mamba to Multi-Modal Large Language Model for Efficient Inference [Github]

- SurvMamba: State Space Model with Multi-grained Multi-modal Interaction for Survival Prediction

- MambaDFuse: A Mamba-based Dual-phase Model for Multi-modality Image Fusion

- APRICOT-Mamba: Acuity Prediction in Intensive Care Unit (ICU): Development and Validation of a Stability, Transitions, and Life-Sustaining Therapies Prediction Model

- SiMBA: Simplified Mamba-Based Architecture for Vision and Multivariate Time series [Github]

- Is Mamba Effective for Time Series Forecasting? [Github]

- TimeMachine: A Time Series is Worth 4 Mambas for Long-term Forecasting [Github]

- MambaStock: Selective state space model for stock prediction [Github]

- Bi-Mamba4TS: Bidirectional Mamba for Time Series Forecasting

- STG-Mamba: Spatial-Temporal Graph Learning via Selective State Space Model

- Graph Mamba: Towards Learning on Graphs with State Space Models [Github]

- Graph-Mamba: Towards long-range graph sequence modeling with selective state spaces [Github]

- Recurrent Distance Filtering for Graph Representation Learning [Github]

- Modeling multivariate biosignals with graph neural networks and structured state space models [Github]

- Point mamba: A novel point cloud backbone based on state space model with octree-based ordering strategy [Github]

- Point Cloud Mamba: Point Cloud Learning via State Space Model [Github]

- PointMamba: A Simple State Space Model for Point Cloud Analysis [Github]

- 3DMambaIPF: A State Space Model for Iterative Point Cloud Filtering via Differentiable Rendering

- 3DMambaComplete: Exploring Structured State Space Model for Point Cloud Completion

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome_Mamba

Similar Open Source Tools

Awesome_Mamba

Awesome Mamba is a curated collection of groundbreaking research papers and articles on Mamba Architecture, a pioneering framework in deep learning known for its selective state spaces and efficiency in processing complex data structures. The repository offers a comprehensive exploration of Mamba architecture through categorized research papers covering various domains like visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data handling.

LLMSys-PaperList

This repository provides a comprehensive list of academic papers, articles, tutorials, slides, and projects related to Large Language Model (LLM) systems. It covers various aspects of LLM research, including pre-training, serving, system efficiency optimization, multi-model systems, image generation systems, LLM applications in systems, ML systems, survey papers, LLM benchmarks and leaderboards, and other relevant resources. The repository is regularly updated to include the latest developments in this rapidly evolving field, making it a valuable resource for researchers, practitioners, and anyone interested in staying abreast of the advancements in LLM technology.

DecryptPrompt

This repository does not provide a tool, but rather a collection of resources and strategies for academics in the field of artificial intelligence who are feeling depressed or overwhelmed by the rapid advancements in the field. The resources include articles, blog posts, and other materials that offer advice on how to cope with the challenges of working in a fast-paced and competitive environment.

cosmos-predict1

Cosmos-Predict1 is a specialized branch of Cosmos World Foundation Models (WFMs) focused on future state prediction, offering diffusion-based and autoregressive-based world foundation models for Text2World and Video2World generation. It includes image and video tokenizers for efficient tokenization, along with post-training and pre-training scripts for Physical AI builders. The tool provides various models for different tasks such as text to visual world generation, video-based future visual world generation, and tokenization of images and videos.

rlhf_thinking_model

This repository is a collection of research notes and resources focusing on training large language models (LLMs) and Reinforcement Learning from Human Feedback (RLHF). It includes methodologies, techniques, and state-of-the-art approaches for optimizing preferences and model alignment in LLM training. The purpose is to serve as a reference for researchers and engineers interested in reinforcement learning, large language models, model alignment, and alternative RL-based methods.

awesome-AIOps

awesome-AIOps is a curated list of academic researches and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

Awesome-LLM-Survey

This repository, Awesome-LLM-Survey, serves as a comprehensive collection of surveys related to Large Language Models (LLM). It covers various aspects of LLM, including instruction tuning, human alignment, LLM agents, hallucination, multi-modal capabilities, and more. Researchers are encouraged to contribute by updating information on their papers to benefit the LLM survey community.

ai_all_resources

This repository is a compilation of excellent ML and DL tutorials created by various individuals and organizations. It covers a wide range of topics, including machine learning fundamentals, deep learning, computer vision, natural language processing, reinforcement learning, and more. The resources are organized into categories, making it easy to find the information you need. Whether you're a beginner or an experienced practitioner, you're sure to find something valuable in this repository.

long-llms-learning

A repository sharing the panorama of the methodology literature on Transformer architecture upgrades in Large Language Models for handling extensive context windows, with real-time updating the newest published works. It includes a survey on advancing Transformer architecture in long-context large language models, flash-ReRoPE implementation, latest news on data engineering, lightning attention, Kimi AI assistant, chatglm-6b-128k, gpt-4-turbo-preview, benchmarks like InfiniteBench and LongBench, long-LLMs-evals for evaluating methods for enhancing long-context capabilities, and LLMs-learning for learning technologies and applicated tasks about Large Language Models.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

interpret

InterpretML is an open-source package that incorporates state-of-the-art machine learning interpretability techniques under one roof. With this package, you can train interpretable glassbox models and explain blackbox systems. InterpretML helps you understand your model's global behavior, or understand the reasons behind individual predictions. Interpretability is essential for: - Model debugging - Why did my model make this mistake? - Feature Engineering - How can I improve my model? - Detecting fairness issues - Does my model discriminate? - Human-AI cooperation - How can I understand and trust the model's decisions? - Regulatory compliance - Does my model satisfy legal requirements? - High-risk applications - Healthcare, finance, judicial, ...

dash-infer

DashInfer is a C++ runtime tool designed to deliver production-level implementations highly optimized for various hardware architectures, including x86 and ARMv9. It supports Continuous Batching and NUMA-Aware capabilities for CPU, and can fully utilize modern server-grade CPUs to host large language models (LLMs) up to 14B in size. With lightweight architecture, high precision, support for mainstream open-source LLMs, post-training quantization, optimized computation kernels, NUMA-aware design, and multi-language API interfaces, DashInfer provides a versatile solution for efficient inference tasks. It supports x86 CPUs with AVX2 instruction set and ARMv9 CPUs with SVE instruction set, along with various data types like FP32, BF16, and InstantQuant. DashInfer also offers single-NUMA and multi-NUMA architectures for model inference, with detailed performance tests and inference accuracy evaluations available. The tool is supported on mainstream Linux server operating systems and provides documentation and examples for easy integration and usage.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

LLMs4TS

LLMs4TS is a repository focused on the application of cutting-edge AI technologies for time-series analysis. It covers advanced topics such as self-supervised learning, Graph Neural Networks for Time Series, Large Language Models for Time Series, Diffusion models, Mixture-of-Experts architectures, and Mamba models. The resources in this repository span various domains like healthcare, finance, and traffic, offering tutorials, courses, and workshops from prestigious conferences. Whether you're a professional, data scientist, or researcher, the tools and techniques in this repository can enhance your time-series data analysis capabilities.

slime

Slime is an LLM post-training framework for RL scaling that provides high-performance training and flexible data generation capabilities. It connects Megatron with SGLang for efficient training and enables custom data generation workflows through server-based engines. The framework includes modules for training, rollout, and data buffer management, offering a comprehensive solution for RL scaling.

For similar tasks

Awesome_Mamba

Awesome Mamba is a curated collection of groundbreaking research papers and articles on Mamba Architecture, a pioneering framework in deep learning known for its selective state spaces and efficiency in processing complex data structures. The repository offers a comprehensive exploration of Mamba architecture through categorized research papers covering various domains like visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data handling.

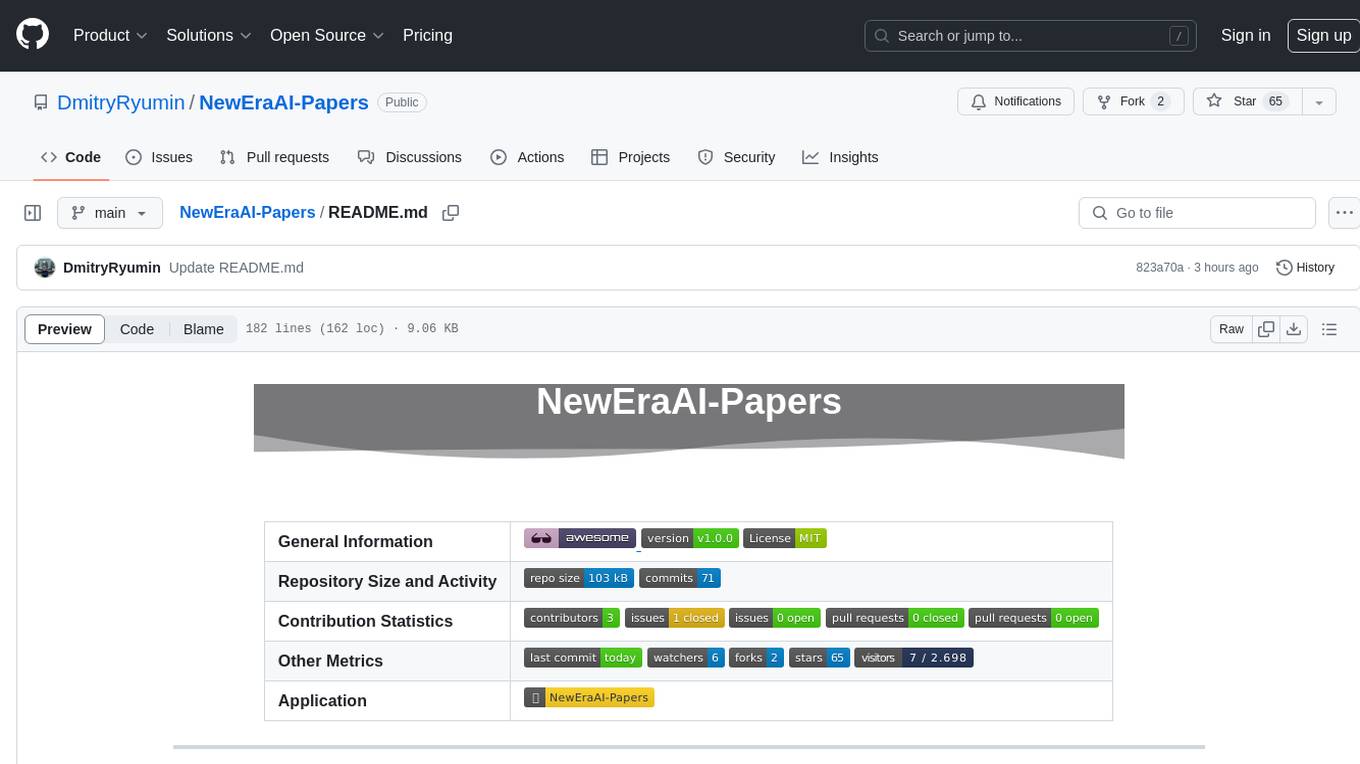

NewEraAI-Papers

The NewEraAI-Papers repository provides links to collections of influential and interesting research papers from top AI conferences, along with open-source code to promote reproducibility and provide detailed implementation insights beyond the scope of the article. Users can stay up to date with the latest advances in AI research by exploring this repository. Contributions to improve the completeness of the list are welcomed, and users can create pull requests, open issues, or contact the repository owner via email to enhance the repository further.

OnAIR

The On-board Artificial Intelligence Research (OnAIR) Platform is a framework that enables AI algorithms written in Python to interact with NASA's cFS. It is intended to explore research concepts in autonomous operations in a simulated environment. The platform provides tools for generating environments, handling telemetry data through Redis, running unit tests, and contributing to the repository. Users can set up a conda environment, configure telemetry and Redis examples, run simulations, and conduct unit tests to ensure the functionality of their AI algorithms. The platform also includes guidelines for licensing, copyright, and contributions to the repository.

model-catalog

model-catalog is a repository containing standardized JSON descriptors for Large Language Model (LLM) model files. Each model is described in a JSON file with details about the model, authors, additional resources, available model files, and providers. The format captures factors like model size, architecture, file format, and quantization format. A Github action merges individual JSON files from the `models/` directory into a `catalog.json` file, which is validated using a JSON schema. Contributors can help by adding new model JSON files following the contribution process.

22AIE111-Object-Oriented-Programming-in-Java-S2-2025

The 'Object Oriented Programming in Java' repository provides notes and code examples organized into units to help users understand and practice Java concepts step-by-step. It includes theoretical notes, practical Java examples, setup files for Visual Studio Code and IntelliJ IDEA, instructions on setting up Java, running Java programs from the command line, and loading projects in VS Code or IntelliJ IDEA. Users can contribute by opening issues or submitting pull requests. The repository is intended for educational purposes, allowing forking and modification for personal study or classroom use.

support-genAI-book

This repository serves as a support page for the book 'Deciphering Generative AI from Original Papers' released by Gijutsu-Hyohron Co., Ltd. It includes answers to exercises and errata from the book. The exercises are provided in chapter-specific .md files in the 'exercises' directory. Please note that there may be some rendering issues with GitHub's math rendering, and the answers are just examples for reference. Contributions to this repository can be made by following the guidelines in CONTRIBUTING.md.

Devon

Devon is an open-source pair programmer tool designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, plugin system for tool builders, self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

For similar jobs

Awesome_Mamba

Awesome Mamba is a curated collection of groundbreaking research papers and articles on Mamba Architecture, a pioneering framework in deep learning known for its selective state spaces and efficiency in processing complex data structures. The repository offers a comprehensive exploration of Mamba architecture through categorized research papers covering various domains like visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data handling.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

awesome-deeplogic

Awesome deep logic is a curated list of papers and resources focusing on integrating symbolic logic into deep neural networks. It includes surveys, tutorials, and research papers that explore the intersection of logic and deep learning. The repository aims to provide valuable insights and knowledge on how logic can be used to enhance reasoning, knowledge regularization, weak supervision, and explainability in neural networks.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

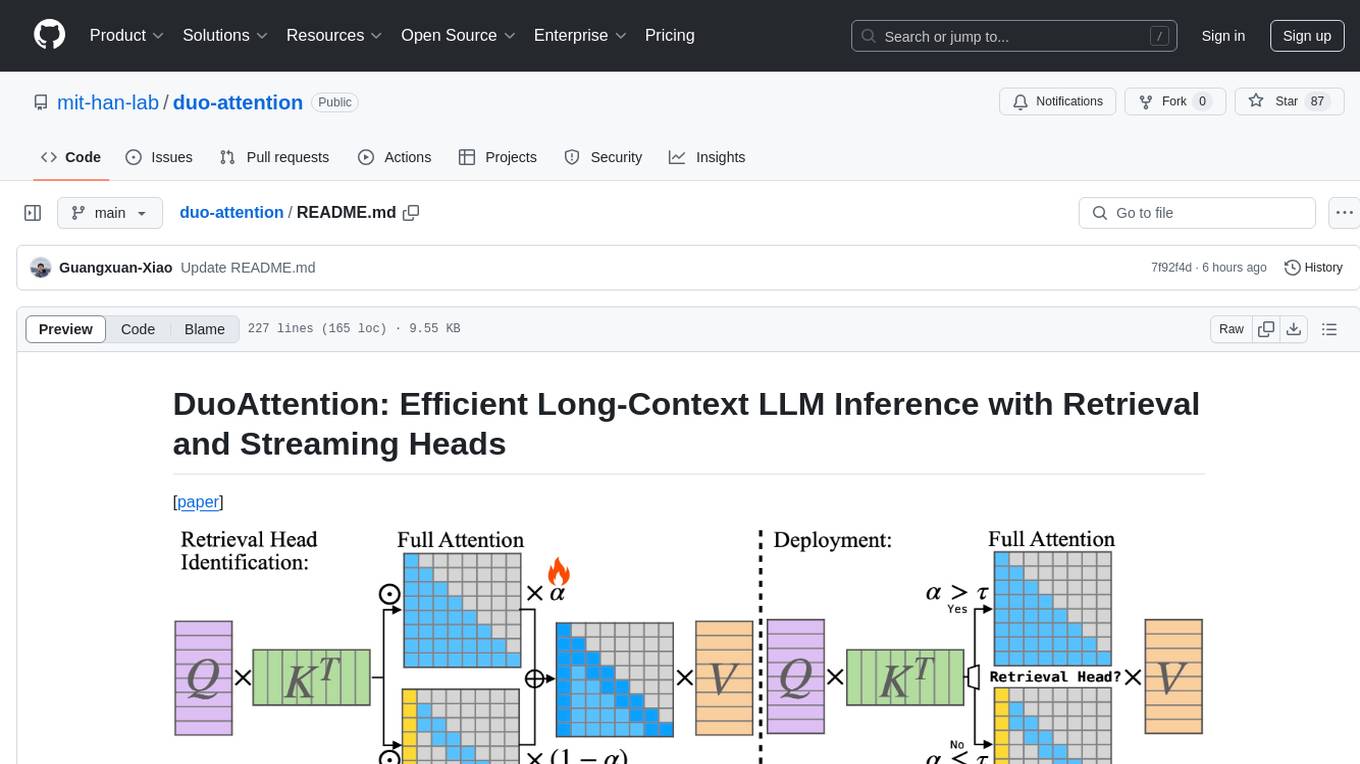

duo-attention

DuoAttention is a framework designed to optimize long-context large language models (LLMs) by reducing memory and latency during inference without compromising their long-context abilities. It introduces a concept of Retrieval Heads and Streaming Heads to efficiently manage attention across tokens. By applying a full Key and Value (KV) cache to retrieval heads and a lightweight, constant-length KV cache to streaming heads, DuoAttention achieves significant reductions in memory usage and decoding time for LLMs. The framework uses an optimization-based algorithm with synthetic data to accurately identify retrieval heads, enabling efficient inference with minimal accuracy loss compared to full attention. DuoAttention also supports quantization techniques for further memory optimization, allowing for decoding of up to 3.3 million tokens on a single GPU.

llm_note

LLM notes repository contains detailed analysis on transformer models, language model compression, inference and deployment, high-performance computing, and system optimization methods. It includes discussions on various algorithms, frameworks, and performance analysis related to large language models and high-performance computing. The repository serves as a comprehensive resource for understanding and optimizing language models and computing systems.

Awesome-Resource-Efficient-LLM-Papers

A curated list of high-quality papers on resource-efficient Large Language Models (LLMs) with a focus on various aspects such as architecture design, pre-training, fine-tuning, inference, system design, and evaluation metrics. The repository covers topics like efficient transformer architectures, non-transformer architectures, memory efficiency, data efficiency, model compression, dynamic acceleration, deployment optimization, support infrastructure, and other related systems. It also provides detailed information on computation metrics, memory metrics, energy metrics, financial cost metrics, network communication metrics, and other metrics relevant to resource-efficient LLMs. The repository includes benchmarks for evaluating the efficiency of NLP models and references for further reading.