OnAIR

The On-board Artificial Intelligence Research (OnAIR) Platform is a framework that enables AI algorithms written in Python to interact with NASA's cFS. It is intended to explore research concepts in autonomous operations in a simulated environment.

Stars: 66

The platform provides tools for generating environments, handling telemetry data through Redis, running unit tests, and contributing to the repository. Users can set up a conda environment, configure telemetry and Redis examples, run simulations, and conduct unit tests to ensure the functionality of their AI algorithms. The platform also includes guidelines for licensing, copyright, and contributions to the repository.

README:

Create a conda environment with the necessary packages

conda env create -f environment.yml

Using the redis_adapter.py as the DataSource, telemetry can be received through multiple Redis channels and inserted into the full data frame.

The redis_example.ini uses a very basic setup:

- meta : redis_example_CONFIG.json

- parser : onair/data_handling/redis_adapter.py

- plugins : one of each type, all of them 'generic' plugins that do nothing

The telemetry file defines the subscribed channels, data frame and subsystems.

- subscriptions : defines channel names where telemetry will be received

- order : designates where each 'channel.telemetry_item' is placed in the full data frame (the data header)

- subsystems : data for each specific telemetry item (descriptions only for redis example)

The Redis adapter expects any published telemetry on a channel to include:

- time

- every telemetry_item as described under "order" as 'channel.telemetry_item'

All messages sent must be in JSON format (key to value). When a message is not valid JSON, the adapter prints a warning along with what was received, then discards the message. Keys should match the required telemetry_item names, with the addition of "time". Values should be floats.
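The message rules above can be illustrated with a short Python sketch. This is not the adapter's actual code: the function name parse_message and the item names "x" and "y" are hypothetical, and rejecting messages with missing keys is an assumption, since the text only states what happens to non-JSON messages.

```python
import json

# Hypothetical item names for one channel; the real names come from the
# "order" entries in the telemetry file.
EXPECTED_ITEMS = ["x", "y"]


def parse_message(raw, expected_items=EXPECTED_ITEMS):
    """Return a dict of floats for a well-formed message, otherwise None."""
    try:
        message = json.loads(raw)
    except (TypeError, ValueError):
        # Mirrors the documented behavior: warn, show what arrived, discard.
        print(f"Warning: discarding non-JSON message: {raw!r}")
        return None
    if not isinstance(message, dict):
        print(f"Warning: discarding message that is not key to value: {raw!r}")
        return None
    required = ["time", *expected_items]
    missing = [key for key in required if key not in message]
    if missing:
        # Assumption: this sketch rejects incomplete messages.
        print(f"Warning: discarding message missing keys {missing}: {raw!r}")
        return None
    try:
        return {key: float(message[key]) for key in required}
    except (TypeError, ValueError):
        print(f"Warning: discarding message with non-numeric values: {raw!r}")
        return None


print(parse_message(json.dumps({"time": 0, "x": 0.1, "y": 0.2})))
print(parse_message("not json"))
```

A well-formed message comes back as a dictionary of floats keyed by "time" and the item names; anything else produces a warning and None.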

If one is not already running, start a Redis server on 'localhost', port 6379 (the typical defaults):

redis-server

Start up OnAIR with the redis_example.ini file:

python driver.py onair/config/redis_example.ini

You should see:

Redis Adapter ignoring file

---- Redis adapter connecting to server...

---- ... connected!

---- Subscribing to channel: state_0

---- Subscribing to channel: state_1

---- Subscribing to channel: state_2

---- Redis adapter: channel 'state_0' received message type: subscribe.

---- Redis adapter: channel 'state_1' received message type: subscribe.

---- Redis adapter: channel 'state_2' received message type: subscribe.

***************************************************

************ SIMULATION STARTED ************

***************************************************

In another process run the experimental publisher:

python redis-experiment-publisher.py

This sends telemetry every 2 seconds to one channel chosen at random until all 3 channels have received data, then repeats for a total of 9 times (all of which can be changed in the file). Its output should be similar to this:

Published data to state_0, [0, 0.1, 0.2]

Published data to state_1, [1, 1.1, 1.2]

Published data to state_2, [2, 2.1, 2.2]

Completed 1 loops

Published data to state_2, [3, 3.1, 3.2]

Published data to state_1, [4, 4.1, 4.2]
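For readers who want to write their own publisher, the schedule described above might be sketched as follows. This is not the contents of redis-experiment-publisher.py. It assumes each channel is published exactly once per loop in random order, that values follow the [n, n + 0.1, n + 0.2] pattern of the sample output, and that the message keys are "time", "x" and "y" (hypothetical names). The publish step assumes the third-party redis-py package is installed.

```python
import json
import random

CHANNELS = ["state_0", "state_1", "state_2"]  # channel names from the output above


def telemetry_schedule(loops=9, channels=CHANNELS, rng=random):
    """Yield (channel, values) pairs; every loop visits each channel once, in random order."""
    count = 0
    for _ in range(loops):
        for channel in rng.sample(channels, len(channels)):
            # Values mirror the sample output: [n, n + 0.1, n + 0.2]
            yield channel, [count, count + 0.1, count + 0.2]
            count += 1


def publish_all(delay_seconds=2.0):
    """Publish the schedule to a local Redis server (needs redis-py and a running server)."""
    import time

    import redis  # third-party package, assumed installed

    client = redis.Redis(host="localhost", port=6379)
    for channel, (t, x, y) in telemetry_schedule():
        # The key names "x" and "y" are hypothetical.
        client.publish(channel, json.dumps({"time": t, "x": x, "y": y}))
        print(f"Published data to {channel}, {[t, x, y]}")
        time.sleep(delay_seconds)
```

Calling publish_all() with a Redis server running would send one message every 2 seconds; telemetry_schedule() can be inspected on its own without Redis.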

OnAIR should then begin receiving data, similar to this:

--------------------- STEP 1 ---------------------

CURRENT DATA: [0, 0.1, 0.2, '-', '-', '-', '-']

INTERPRETED SYSTEM STATUS: ---

--------------------- STEP 2 ---------------------

CURRENT DATA: [1, 0.1, 0.2, 1.1, 1.2, '-', '-']

INTERPRETED SYSTEM STATUS: ---

--------------------- STEP 3 ---------------------

CURRENT DATA: [2, 0.1, 0.2, 1.1, 1.2, 2.1, 2.2]

INTERPRETED SYSTEM STATUS: ---

--------------------- STEP 4 ---------------------

CURRENT DATA: [3, 0.1, 0.2, 1.1, 1.2, 3.1, 3.2]

INTERPRETED SYSTEM STATUS: ---

--------------------- STEP 5 ---------------------

CURRENT DATA: [4, 0.1, 0.2, 4.1, 4.2, 3.1, 3.2]

INTERPRETED SYSTEM STATUS: ---
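The steps above show how each incoming message updates the time and its own channel's slots while values from other channels persist. A minimal Python sketch of that merge, assuming a header order of time followed by two items per channel (the item names "x" and "y" and the function name update_frame are hypothetical, not taken from the adapter):

```python
# Header order assumed from the sample output: time, then two items per channel.
ORDER = [
    "time",
    "state_0.x", "state_0.y",
    "state_1.x", "state_1.y",
    "state_2.x", "state_2.y",
]


def update_frame(frame, channel, message, order=ORDER):
    """Return a copy of frame with this channel's message merged in."""
    updated = list(frame)
    for index, name in enumerate(order):
        if name == "time":
            # Every message overwrites the shared time slot.
            updated[index] = message["time"]
        elif name.startswith(channel + "."):
            # Only this channel's slots change; the rest keep their last value.
            item = name.split(".", 1)[1]
            updated[index] = message[item]
    return updated


frame = ["-"] * len(ORDER)  # '-' marks items that have not been received yet
received = [
    ("state_0", {"time": 0, "x": 0.1, "y": 0.2}),
    ("state_1", {"time": 1, "x": 1.1, "y": 1.2}),
    ("state_2", {"time": 2, "x": 2.1, "y": 2.2}),
    ("state_2", {"time": 3, "x": 3.1, "y": 3.2}),
    ("state_1", {"time": 4, "x": 4.1, "y": 4.2}),
]
frames = []
for channel, message in received:
    frame = update_frame(frame, channel, message)
    frames.append(frame)
    print("CURRENT DATA:", frame)
```

Fed the five messages from the publisher output, the sketch prints the same five CURRENT DATA lines as the steps above.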

Instructions on how to run unit tests for OnAIR

Required Python libraries: pytest, pytest-mock, coverage

Optional Python libraries: pytest-randomly

From the parent directory of your local repository:

python driver.py -t

Options that may be added to the driver.py test run. Use these at your own discretion.

--conftest-seed=### - set the random values seed for this run

--randomly-seed=### - set the random order seed for this run

--verbose or -v - set verbosity level, also -vv, -vvv, etc.

-k KEYWORD - only run tests that match the KEYWORD (see pytest --help)

NOTE: Running tests will output results using provided seeds, but each seed is random when not set directly. Example start of test output:

Using --conftest-seed=1691289424

===== test session starts =======

platform linux -- Python 3.11.2, pytest-7.2.0, pluggy-1.3.0

Using --randomly-seed=1956010105

Copy and paste previously output seeds (or type them out) as the arguments to repeat results.

For the equivalent of the driver.py run:

python -m coverage run --branch --source=onair,plugins -m pytest ./test/

python -m - invokes the python runtime on the library following the -m

coverage run - runs coverage data collection during testing, wrapping itself on the test runner used

--branch - includes code branching information in the coverage report

--source=onair,plugins - tells coverage where the code under test exists for reporting line hits

-m pytest - tells coverage what test runner (framework) to wrap

./test - run all tests found in this directory and subdirectories

Options that may be added to the command line test run. Use these at your own discretion.

--disable-warnings - removes the warning reports, but displays the count (e.g., 124 passed, 1 warning in 0.65s)

-p no:randomly - ONLY required to stop random order testing IFF pytest-randomly installed

--conftest-seed=### - set the random values seed for this run

--randomly-seed=### - set the random order seed for this run

--verbose or -v - set verbosity level, also -vv, -vvv, etc.

-k KEYWORD - only run tests that match the KEYWORD (see pytest --help)

NOTE: see note about seeds in driver.py section above

NOTE: you may or may not need the python -m to run coverage report or html

coverage report - prints basic results in terminal

or

coverage html - creates htmlcov/index.html, automatic when using driver.py for testing

then

<browser_here> htmlcov/index.html - browsable coverage (e.g., firefox htmlcov/index.html)

OnAIR can be set up to subscribe to and receive messages from cFS. For more information, see doc/cfs-onair-guide.md

Please refer to NOSA GSC-19165-1 OnAIR.pdf and COPYRIGHT.

Please open an issue if you find any problems. We are a small team, but will try to respond in a timely fashion.

If you would like to contribute to the repository, GREAT! First you will need to complete the Individual Contributor License Agreement (pdf). Then, email it to [email protected] with [email protected] CCed. Please include your github username in the email.

Next, please create an issue for the fix or feature and note that you intend to work on it. Fork the repository and create a branch with a name that starts with the issue number. Once done, submit your pull request and we'll take a look. You may want to make draft pull requests to solicit feedback on larger changes.

Similar Open Source Tools

probsem

ProbSem is a repository that provides a framework to leverage large language models (LLMs) for assigning context-conditional probability distributions over queried strings. It supports OpenAI engines and HuggingFace CausalLM models, and is flexible for research applications in linguistics, cognitive science, program synthesis, and NLP. Users can define prompts, contexts, and queries to derive probability distributions over possible completions, enabling tasks like cloze completion, multiple-choice QA, semantic parsing, and code completion. The repository offers CLI and API interfaces for evaluation, with options to customize models, normalize scores, and adjust temperature for probability distributions.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

storm

STORM is a LLM system that writes Wikipedia-like articles from scratch based on Internet search. While the system cannot produce publication-ready articles that often require a significant number of edits, experienced Wikipedia editors have found it helpful in their pre-writing stage. **Try out our [live research preview](https://storm.genie.stanford.edu/) to see how STORM can help your knowledge exploration journey and please provide feedback to help us improve the system 🙏!**

Bard-API

The Bard API is a Python package that returns responses from Google Bard through the value of a cookie. It is an unofficial API that operates through reverse-engineering, utilizing cookie values to interact with Google Bard for users struggling with frequent authentication problems or unable to authenticate via Google Authentication. The Bard API is not a free service, but rather a tool provided to assist developers with testing certain functionalities due to the delayed development and release of Google Bard's API. It has been designed with a lightweight structure that can easily adapt to the emergence of an official API. Therefore, using it for any other purposes is strongly discouraged. If you have access to a reliable official PaLM-2 API or Google Generative AI API, replace the provided response with the corresponding official code. Check out https://github.com/dsdanielpark/Bard-API/issues/262.

CJA_Comprehensive_Jailbreak_Assessment

This public repository contains the paper 'Comprehensive Assessment of Jailbreak Attacks Against LLMs'. It provides a labeling method to label results using Python and offers the opportunity to submit evaluation results to the leaderboard. Full codes will be released after the paper is accepted.

llm-random

This repository contains code for research conducted by the LLM-Random research group at IDEAS NCBR in Warsaw, Poland. The group focuses on developing and using this repository to conduct research. For more information about the group and its research, refer to their blog, llm-random.github.io.

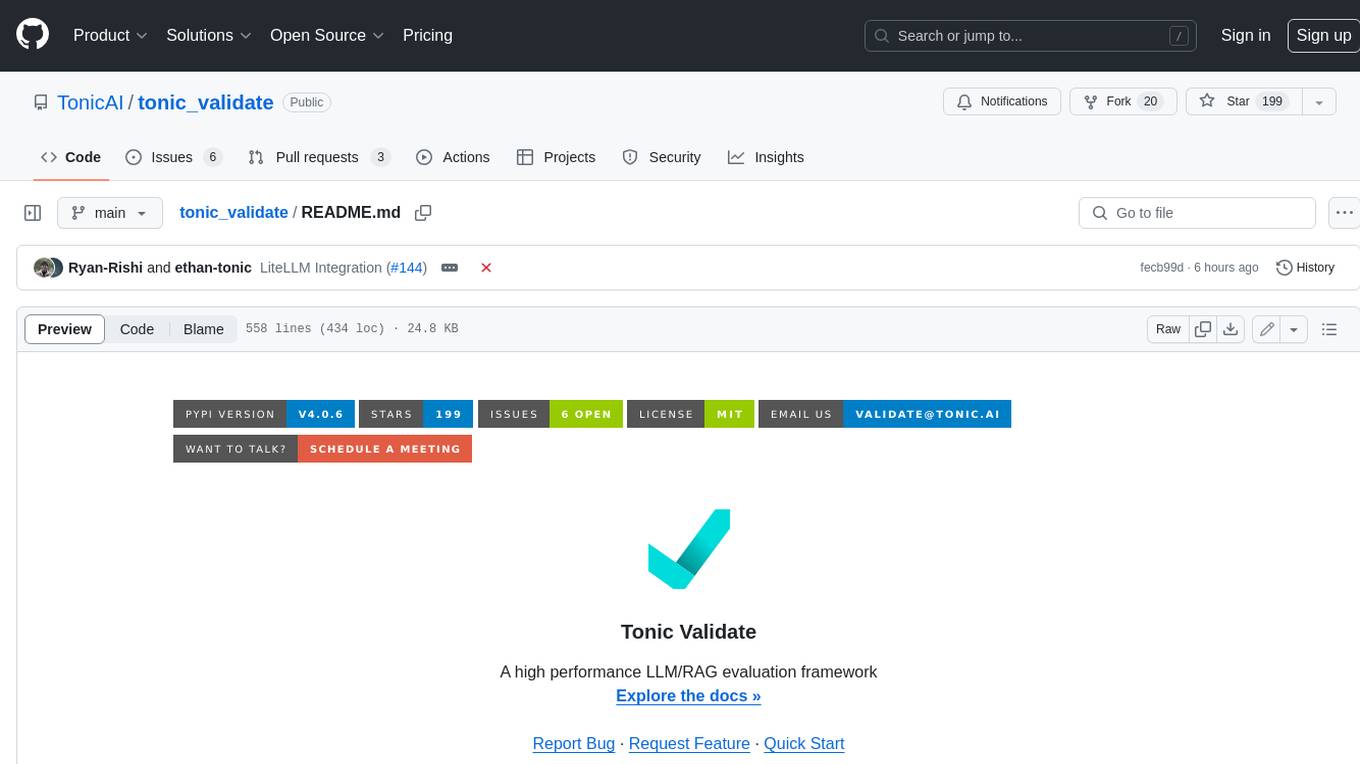

tonic_validate

Tonic Validate is a framework for the evaluation of LLM outputs, such as Retrieval Augmented Generation (RAG) pipelines. Validate makes it easy to evaluate, track, and monitor your LLM and RAG applications. Validate allows you to evaluate your LLM outputs through the use of our provided metrics which measure everything from answer correctness to LLM hallucination. Additionally, Validate has an optional UI to visualize your evaluation results for easy tracking and monitoring.

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

mentals-ai

Mentals AI is a tool designed for creating and operating agents that feature loops, memory, and various tools, all through straightforward markdown syntax. This tool enables you to concentrate solely on the agent’s logic, eliminating the necessity to compose underlying code in Python or any other language. It redefines the foundational frameworks for future AI applications by allowing the creation of agents with recursive decision-making processes, integration of reasoning frameworks, and control flow expressed in natural language. Key concepts include instructions with prompts and references, working memory for context, short-term memory for storing intermediate results, and control flow from strings to algorithms. The tool provides a set of native tools for message output, user input, file handling, Python interpreter, Bash commands, and short-term memory. The roadmap includes features like a web UI, vector database tools, agent's experience, and tools for image generation and browsing. The idea behind Mentals AI originated from studies on psychoanalysis executive functions and aims to integrate 'System 1' (cognitive executor) with 'System 2' (central executive) to create more sophisticated agents.

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

llm-memorization

The 'llm-memorization' project is a tool designed to index, archive, and search conversations with a local LLM using a SQLite database enriched with automatically extracted keywords. It aims to provide personalized context at the start of a conversation by adding memory information to the initial prompt. The tool automates queries from local LLM conversational management libraries, offers a hybrid search function, enhances prompts based on posed questions, and provides an all-in-one graphical user interface for data visualization. It supports both French and English conversations and prompts for bilingual use.

KernelBench

KernelBench is a benchmark tool designed to evaluate Large Language Models' (LLMs) ability to generate GPU kernels. It focuses on transpiling operators from PyTorch to CUDA kernels at different levels of granularity. The tool categorizes problems into four levels, ranging from single-kernel operators to full model architectures, and assesses solutions based on compilation, correctness, and speed. The repository provides a structured directory layout, setup instructions, usage examples for running single or multiple problems, and upcoming roadmap features like additional GPU platform support and integration with other frameworks.

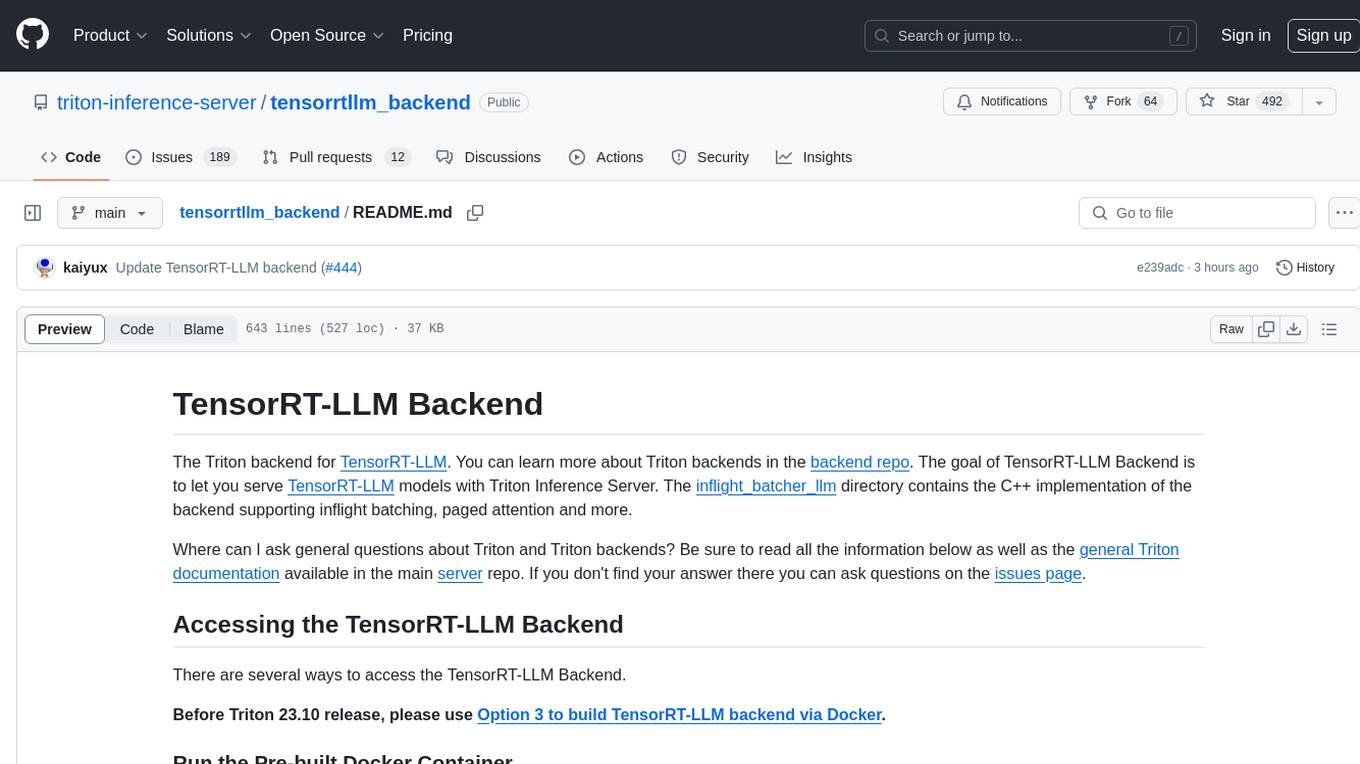

tensorrtllm_backend

The TensorRT-LLM Backend is a Triton backend designed to serve TensorRT-LLM models with Triton Inference Server. It supports features like inflight batching, paged attention, and more. Users can access the backend through pre-built Docker containers or build it using scripts provided in the repository. The backend can be used to create models for tasks like tokenizing, inferencing, de-tokenizing, ensemble modeling, and more. Users can interact with the backend using provided client scripts and query the server for metrics related to request handling, memory usage, KV cache blocks, and more. Testing for the backend can be done following the instructions in the 'ci/README.md' file.

For similar tasks

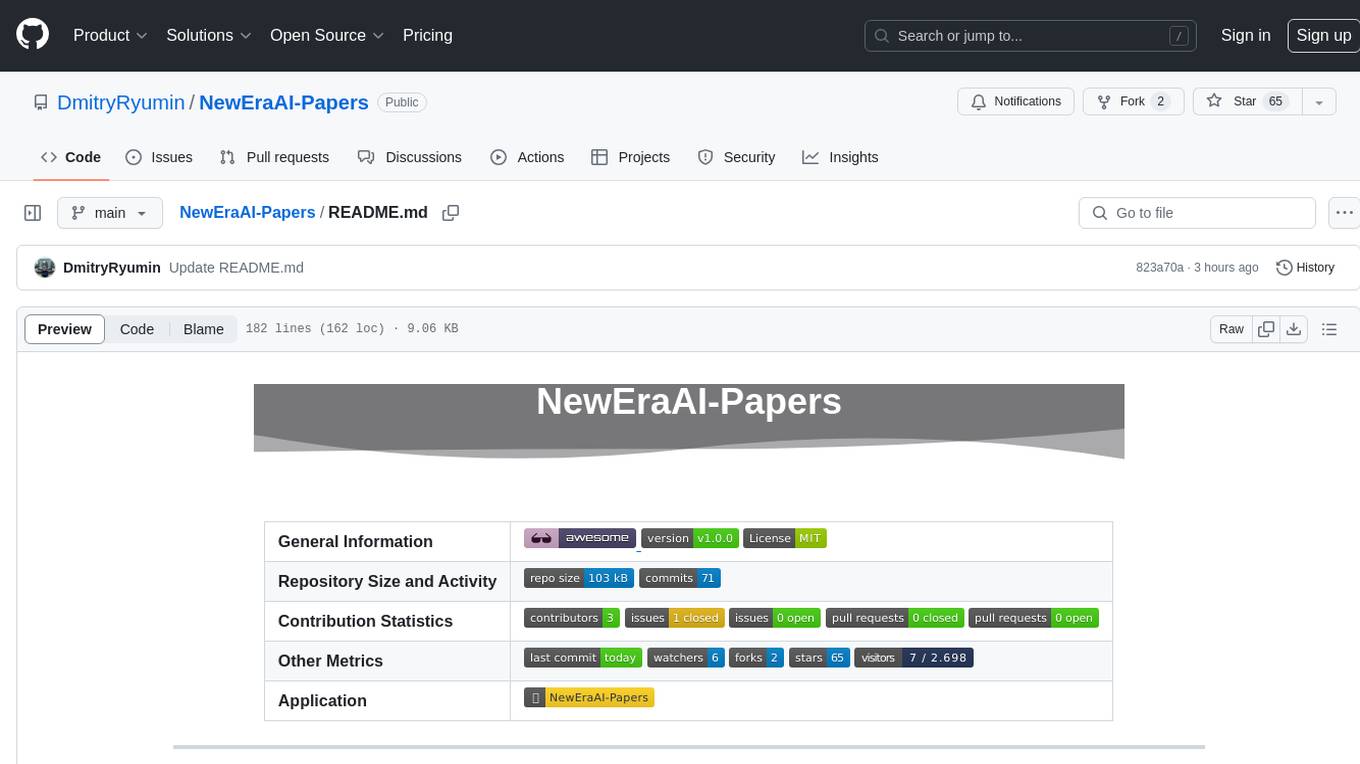

NewEraAI-Papers

The NewEraAI-Papers repository provides links to collections of influential and interesting research papers from top AI conferences, along with open-source code to promote reproducibility and provide detailed implementation insights beyond the scope of the article. Users can stay up to date with the latest advances in AI research by exploring this repository. Contributions to improve the completeness of the list are welcomed, and users can create pull requests, open issues, or contact the repository owner via email to enhance the repository further.

Awesome_Mamba

Awesome Mamba is a curated collection of groundbreaking research papers and articles on Mamba Architecture, a pioneering framework in deep learning known for its selective state spaces and efficiency in processing complex data structures. The repository offers a comprehensive exploration of Mamba architecture through categorized research papers covering various domains like visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data handling.

model-catalog

model-catalog is a repository containing standardized JSON descriptors for Large Language Model (LLM) model files. Each model is described in a JSON file with details about the model, authors, additional resources, available model files, and providers. The format captures factors like model size, architecture, file format, and quantization format. A Github action merges individual JSON files from the `models/` directory into a `catalog.json` file, which is validated using a JSON schema. Contributors can help by adding new model JSON files following the contribution process.

22AIE111-Object-Oriented-Programming-in-Java-S2-2025

The 'Object Oriented Programming in Java' repository provides notes and code examples organized into units to help users understand and practice Java concepts step-by-step. It includes theoretical notes, practical Java examples, setup files for Visual Studio Code and IntelliJ IDEA, instructions on setting up Java, running Java programs from the command line, and loading projects in VS Code or IntelliJ IDEA. Users can contribute by opening issues or submitting pull requests. The repository is intended for educational purposes, allowing forking and modification for personal study or classroom use.

support-genAI-book

This repository serves as a support page for the book 'Deciphering Generative AI from Original Papers' released by Gijutsu-Hyohron Co., Ltd. It includes answers to exercises and errata from the book. The exercises are provided in chapter-specific .md files in the 'exercises' directory. Please note that there may be some rendering issues with GitHub's math rendering, and the answers are just examples for reference. Contributions to this repository can be made by following the guidelines in CONTRIBUTING.md.

Paper-Replications

Paper-Replications is a repository dedicated to replicating classic and state-of-the-art AI/ML papers and architectures from scratch using PyTorch. It serves as a valuable resource for researchers and enthusiasts looking to understand and implement cutting-edge algorithms in the field of artificial intelligence and machine learning.

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.