Best AI tools for Model Compression

20 - AI tool Sites

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

Doclingo

Doclingo is an AI-powered document translation tool that supports translating documents in various formats such as PDF, Word, Excel, PowerPoint, SRT subtitles, ePub ebooks, AR&ZIP packages, and more. It utilizes large language models to provide accurate and professional translations, preserving the original layout of the documents. Users can enjoy a limited-time free trial upon registration, with the option to subscribe for more features. Doclingo aims to offer high-quality translation services through continuous algorithm improvements.

Vidux AI

Vidux AI is a professional AI video generation and processing platform that offers cutting-edge tools to transform, enhance, and create videos using advanced deep learning models. With features like AI video generation, image to video conversion, video compression, upscaling, and enhancement, Vidux AI provides a one-stop solution for all video creation needs. The platform caters to both individual creators and businesses, offering a wide range of tools and features to streamline the video production process.

Compassionate AI

Compassionate AI is a cutting-edge AI-powered platform that empowers individuals and organizations to create and deploy AI solutions that are ethical, responsible, and aligned with human values. With Compassionate AI, users can access a comprehensive suite of tools and resources to design, develop, and implement AI systems that prioritize fairness, transparency, and accountability.

Enhans AI Model Generator

Enhans AI Model Generator is an advanced AI tool designed to help users generate AI models efficiently. It utilizes cutting-edge algorithms and machine learning techniques to streamline the model creation process. With Enhans AI Model Generator, users can easily input their data, select the desired parameters, and obtain a customized AI model tailored to their specific needs. The tool is user-friendly and does not require extensive programming knowledge, making it accessible to a wide range of users, from beginners to experts in the field of AI.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

Role Model AI

Role Model AI is a revolutionary multi-dimensional assistant that combines practicality and innovation. It offers four dynamic interfaces for seamless interaction: phone calls for on-the-go assistance, an interactive agent dashboard for detailed task management, lifelike 3D avatars for immersive communication, and an engaging Fortnite world integration for a gaming-inspired experience. Role Model AI adapts to your lifestyle, blending seamlessly into your personal and professional worlds, providing unparalleled convenience and a unique, versatile solution for managing tasks and interactions.

Flux LoRA Model Library

Flux LoRA Model Library is an AI tool that provides a platform for finding and using Flux LoRA models suitable for various projects. Users can browse a catalog of popular Flux LoRA models and learn about FLUX models and LoRA (Low-Rank Adaptation) technology. The platform offers resources for fine-tuning models and ensuring responsible use of generated images.
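For readers new to LoRA, the idea behind low-rank adaptation can be sketched in a few lines. The example below is a minimal illustration in plain Python, not code from the Flux LoRA Model Library: the matrix sizes, the `alpha` scaling value, and the helper names `matmul` and `lora_effective_weight` are all assumptions chosen for readability. It shows a frozen weight matrix `W` combined with a small trainable update `B @ A`.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) without modifying W."""
    scale = alpha / rank
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Illustrative sizes only; real models use far larger matrices.
d_out, d_in, rank, alpha = 8, 8, 2, 4.0
rng = random.Random(0)

w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]     # frozen base weight
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]   # trainable, r x d_in
b = [[0.0] * rank for _ in range(d_out)]                               # trainable, starts at zero

full_params = d_out * d_in            # parameters touched by full fine-tuning
lora_params = rank * (d_in + d_out)   # parameters trained by the adapter

print(f"full fine-tuning: {full_params} params, LoRA adapter: {lora_params} params")
```

Because `B` starts at zero, the adapted weight initially equals the base weight, and only the two small matrices need to be stored and shared. That is why a library of adapters can stay compact relative to the base model.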

OpenAI Strawberry Model

OpenAI Strawberry Model is a cutting-edge AI initiative that represents a significant leap in AI capabilities, focusing on enhancing reasoning, problem-solving, and complex task execution. It aims to improve AI's ability to handle mathematical problems, programming tasks, and deep research, including long-term planning and action. The project showcases advancements in AI safety and aims to reduce errors in AI responses by generating high-quality synthetic data for training future models. Strawberry is designed to achieve human-like reasoning and is expected to play a crucial role in the development of OpenAI's next major model, codenamed 'Orion.'

Ray3 AI Video Model

Ray3 is a cutting-edge AI video model developed by Luma, designed to revolutionize HDR video creation for storytellers worldwide. With its native 16-bit HDR output, intelligent scene reasoning, and precise keyframe control, Ray3 empowers creators to generate professional-quality 4K HDR videos with unparalleled creative freedom. It goes beyond simple text-to-video generation, offering a complete creative partnership for modern storytellers, enabling them to bring their cinematic visions to life effortlessly.

HUAWEI Cloud Pangu Drug Molecule Model

HUAWEI Cloud Pangu is an AI tool designed for accelerating drug discovery by optimizing drug molecules. It offers features such as Molecule Search, Molecule Optimizer, and Pocket Molecule Design. Users can submit molecules for optimization and view historical optimization results. The tool is based on the MindSpore framework and has been visited over 300,000 times since August 23, 2021.

LiteLLM

LiteLLM is a platform that simplifies model access, spend tracking, and fallbacks across 100+ LLMs. It provides a gateway to manage model access and offers features like logging, budget tracking, pass-through endpoints, and self-serve key management. LiteLLM is open-source and compatible with the OpenAI format, allowing users to access various LLMs seamlessly.
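The fallback behaviour mentioned above can be pictured as trying models in order until one answers. The sketch below is a generic illustration in plain Python and does not use LiteLLM's own API: `complete_with_fallbacks`, `AllModelsFailed`, and the two stand-in model functions are hypothetical names introduced only for this example.

```python
from typing import Callable, List, Tuple


class AllModelsFailed(Exception):
    """Raised when every model in the fallback chain fails."""


def complete_with_fallbacks(
    prompt: str, models: List[Tuple[str, Callable[[str], str]]]
) -> Tuple[str, str]:
    """Try each (name, call) pair in order and return the first success."""
    errors = []
    for name, call in models:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real gateway would filter retryable errors
            errors.append(f"{name}: {exc}")
    raise AllModelsFailed("; ".join(errors))


# Hypothetical stand-ins for real provider clients.
def flaky_model(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


def backup_model(prompt: str) -> str:
    return f"echo: {prompt}"


used, reply = complete_with_fallbacks(
    "hello", [("primary", flaky_model), ("backup", backup_model)]
)
print(used, reply)
```

Keeping every provider behind the same call signature is what makes this loop possible, which is one practical reason a gateway standardises on a single request format.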

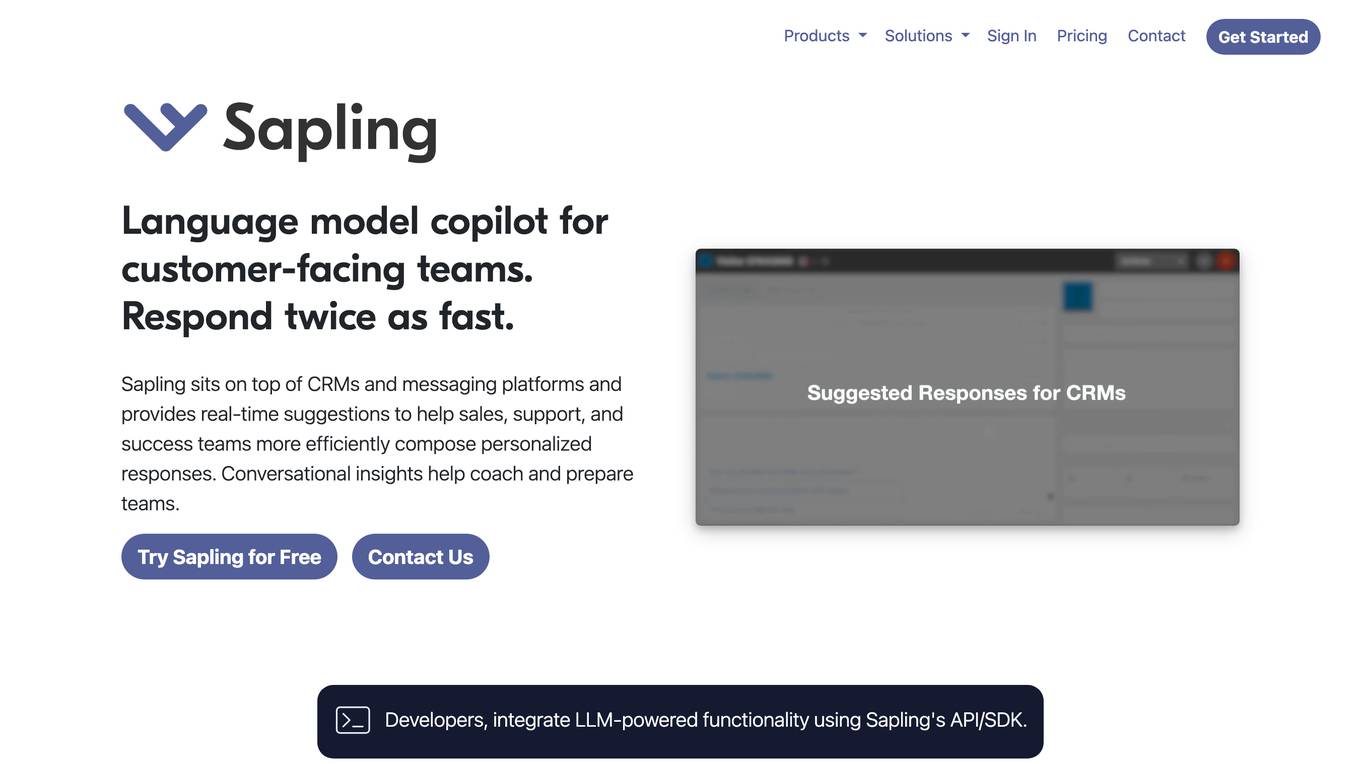

Sapling

Sapling is a language model copilot and API for businesses. It provides real-time suggestions to help sales, support, and success teams compose personalized responses more efficiently. Sapling also offers a variety of features to help businesses improve their customer service, including:

* Autocomplete Everywhere: Provides deep learning-powered autocomplete suggestions across all messaging platforms, allowing agents to compose replies more quickly.
* Sapling Suggest: Retrieves relevant responses from a team response bank and allows agents to respond more quickly to customer inquiries by simply clicking on suggested responses in real time.
* Snippet macros: Allow for quick insertion of common responses.
* Grammar and language quality improvements: Sapling catches 60% more language quality issues than other spelling and grammar checkers using a machine learning system trained on millions of English sentences.
* Enterprise teams can define custom settings for compliance and content governance.
* Distribute knowledge: Ensure team knowledge is shared in a snippet library accessible on all your web applications.
* Perform blazing fast search on your knowledge library for compliance, upselling, training, and onboarding.

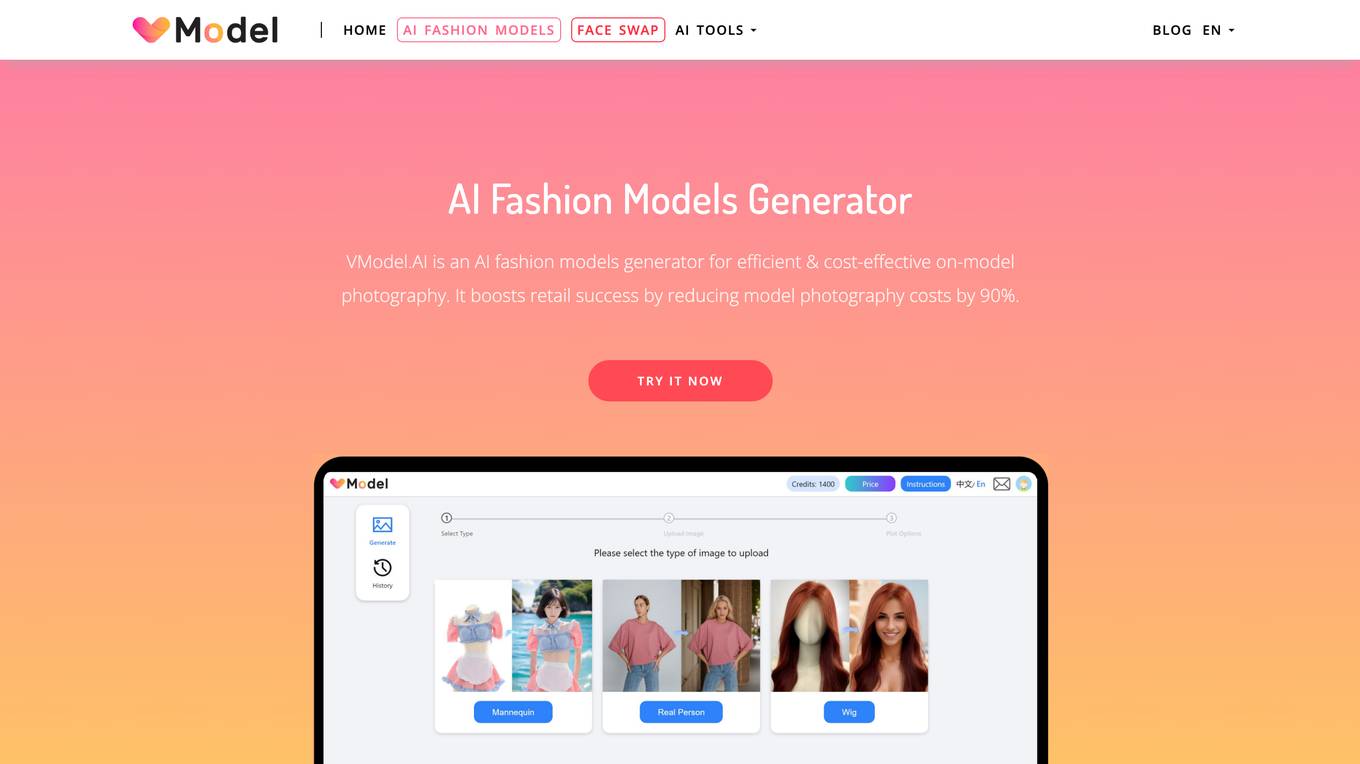

VModel.AI

VModel.AI is an AI fashion model generator that revolutionizes on-model photography for fashion retailers. It utilizes artificial intelligence to create high-quality on-model photography without the need for elaborate photoshoots, reducing model photography costs by 90%. The tool helps diversify stores, improve e-commerce engagement, reduce returns, promote diversity and inclusion in fashion, and enhance product offerings.

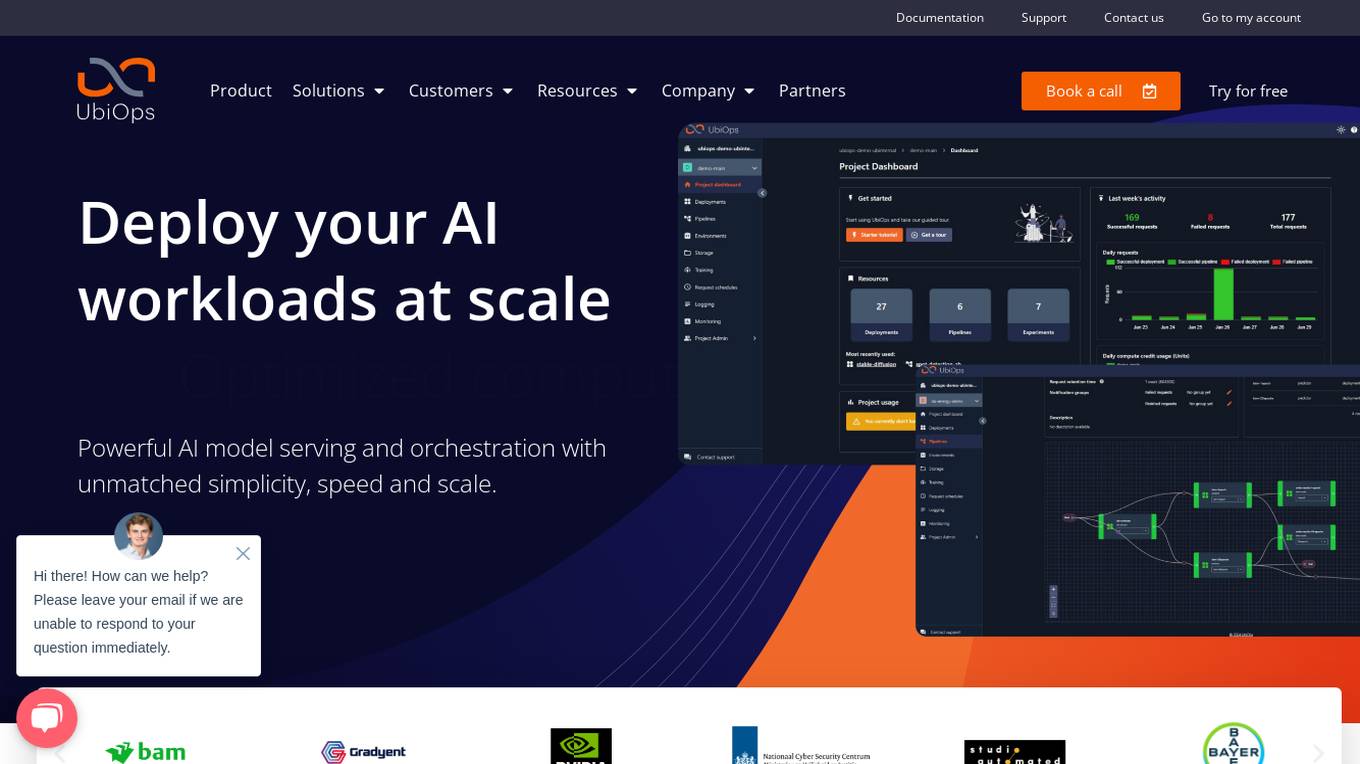

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI & ML workloads as reliable and secure microservices. It offers powerful AI model serving and orchestration with unmatched simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a unique workflow management system.

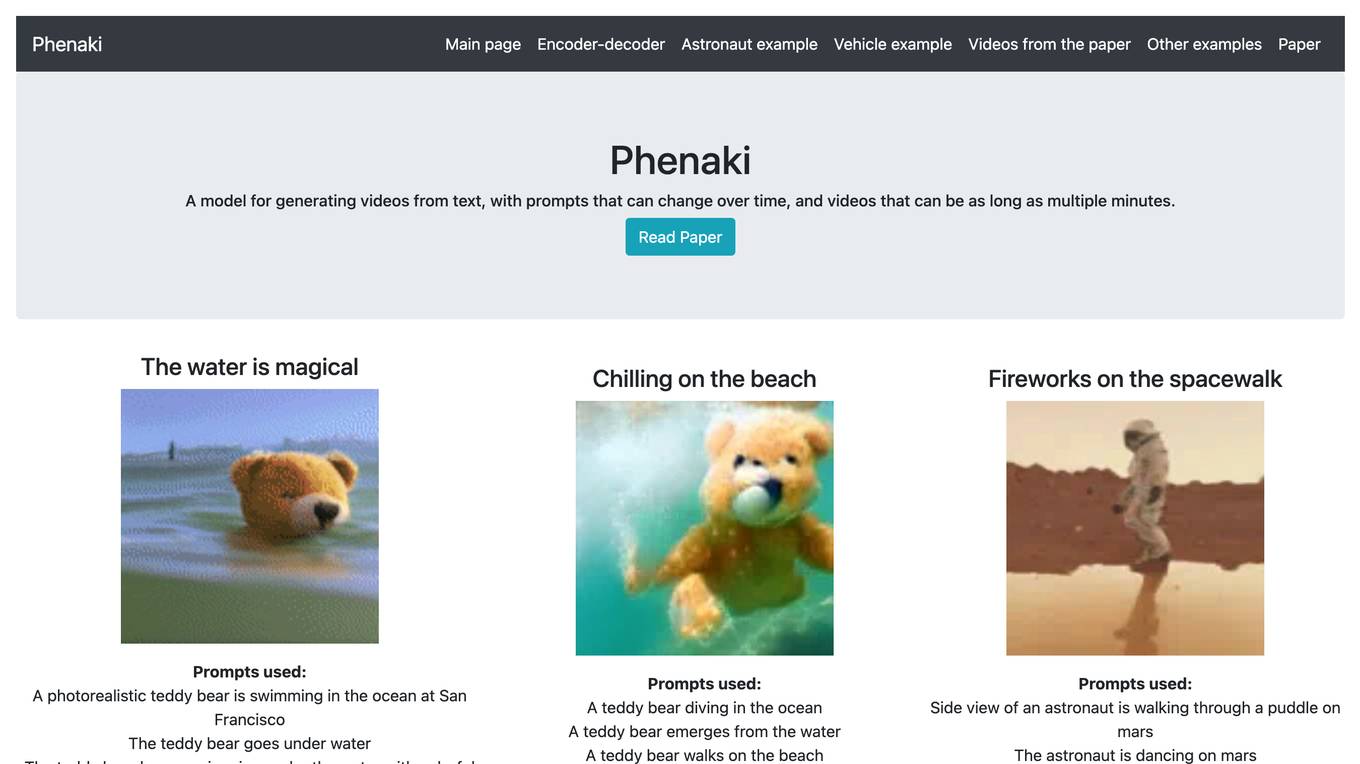

Phenaki

Phenaki is a model capable of generating realistic videos from a sequence of textual prompts. Generating videos from text is particularly challenging because of the computational cost, the limited quantity of high-quality text-video data, and the variable length of videos. To address these issues, Phenaki introduces a new causal model for learning video representation, which compresses the video to a small representation of discrete tokens. This tokenizer uses causal attention in time, which allows it to work with variable-length videos. To generate video tokens from text, Phenaki uses a bidirectional masked transformer conditioned on pre-computed text tokens. The generated video tokens are subsequently de-tokenized to create the actual video. To address data issues, Phenaki demonstrates how joint training on a large corpus of image-text pairs as well as a smaller number of video-text examples can result in generalization beyond what is available in the video datasets. Compared to previous video generation methods, Phenaki can generate arbitrarily long videos conditioned on a sequence of prompts (i.e., time-variable text or a story) in an open domain. According to its authors, this is the first paper to study generating videos from time-variable prompts. In addition, the proposed video encoder-decoder outperforms all per-frame baselines currently used in the literature in terms of spatio-temporal quality and the number of tokens per video.
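To see why causal attention in time suits variable-length videos, it helps to look at the attention mask itself. The sketch below is a simplified illustration in plain Python, not Phenaki's implementation: it works at the level of whole frames and the function name `causal_time_mask` is an assumption made for this example.

```python
def causal_time_mask(num_frames):
    """mask[t][s] is True when frame t may attend to frame s (s <= t)."""
    return [[s <= t for s in range(num_frames)] for t in range(num_frames)]


short = causal_time_mask(3)
long = causal_time_mask(5)

# The mask for a short video is the top-left block of the mask for a longer
# one, so earlier frames are encoded the same way regardless of video length.
top_left = [row[:3] for row in long[:3]]
print(short == top_left)

for row in short:
    print("".join("x" if allowed else "." for allowed in row))
```

Since no frame ever looks at a later frame, appending frames does not change how the earlier ones are attended to, which matches the variable-length property described above.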

Artiko.ai

Artiko.ai is a multi-model AI chat platform that integrates advanced AI models such as ChatGPT, Claude 3, Gemini 1.5, and Mistral AI. It offers a convenient and cost-effective solution for work, business, or study by providing a single chat interface to harness the power of multi-model AI. Users can save time and money while achieving better results through features like text rewriting, data conversation, AI assistants, website chatbot, PDF and document chat, translation, brainstorming, and integration with various tools like WooCommerce, Amazon, Salesforce, and more.

LLMWise

LLMWise is a multi-model LLM API tool that allows users to compare, blend, and route AI models simultaneously. It offers 5 modes - Chat, Compare, Blend, Judge, and Failover - to help users make informed decisions based on model outputs. With 31+ available models, LLMWise provides a user-friendly platform for orchestrating AI models, ensuring reliability, and optimizing costs. The tool is designed to streamline the integration of various AI models through one API call, offering features like real-time responses, per-model metrics, and failover routing.

Claude

Claude is a family of large language models developed by Anthropic. Claude is capable of generating human-like text, translating languages, answering questions, and writing different kinds of creative content.

SuperAnnotate

SuperAnnotate is an AI data platform that simplifies and accelerates model-building by unifying the AI pipeline. It enables users to create, curate, and evaluate datasets efficiently, leading to the development of better models faster. The platform offers features like connecting any data source, building customizable UIs, creating high-quality datasets, evaluating models, and deploying models seamlessly. SuperAnnotate ensures global security and privacy measures for data protection.

2 - Open Source Tools

hqq

HQQ is a fast and accurate model quantizer that skips the need for calibration data. It's super simple to implement (just a few lines of code for the optimizer). It can crunch through quantizing the Llama2-70B model in only 4 minutes! 🚀
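To make "quantization without calibration data" concrete, the sketch below shows the simplest data-free baseline: asymmetric round-to-nearest quantization of one weight group to 4 bits. This is not HQQ's algorithm, which refines the quantization parameters with its own optimizer; the function names, the example weights, and the group size are assumptions chosen for illustration.

```python
def quantize_group(weights, bits=4):
    """Asymmetric round-to-nearest quantization of one group of floats."""
    levels = (1 << bits) - 1
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 for a constant group
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize_group(codes, scale, zero):
    """Map integer codes back to approximate float weights."""
    return [c * scale + zero for c in codes]


# Made-up weights standing in for one group of a layer's weight matrix.
weights = [-0.82, -0.10, 0.03, 0.27, 0.55, 0.91, -0.44, 0.12]

codes, scale, zero = quantize_group(weights, bits=4)
restored = dequantize_group(codes, scale, zero)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("max reconstruction error:", max_error)
```

Only the weights themselves are needed to compute `scale` and `zero`, so no sample inputs are involved. The reconstruction error of this baseline is bounded by half a quantization step, and calibration-free methods aim to do better than this starting point.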

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric**, **data-centric**, and **framework-centric** perspectives, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

20 - OpenAI GPTs

Gentle Companion

A compassionate companion, tailoring discussions to the user's age and origin.

Seabiscuit Business Model Master

Discover A More Robust Business: Craft tailored value proposition statements, develop a comprehensive business model canvas, conduct detailed PESTLE analysis, and gain strategic insights on enhancing business model elements like scalability, cost structure, and market competition strategies. (v1.18)

Create A Business Model Canvas For Your Business

Let's get started by telling me about your business: What do you offer? Who do you serve? Need help with prompt engineering? Reach out on LinkedIn: StephenHnilica

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

BITE Model Analyzer by Dr. Steven Hassan

Discover if your group, relationship or organization uses specific methods to recruit and maintain control over people

EIA model

Generates environmental impact assessment (EIA) templates based on specific global locations and parameters.

Business Model Canvas Wizard

Help with building the Business Model Canvas for your initiative.

Business Model Advisor

Business model expert that creates detailed reports based on business ideas.

AI Model NFT Marketplace - Joy Marketplace

Expert on AI Model NFT Marketplace, offering insights on blockchain tech and NFTs.