ERNIE

The official repository for ERNIE 4.5 and ERNIEKit – its industrial-grade development toolkit based on PaddlePaddle.


ERNIE 4.5 is a family of large-scale multimodal models with 10 distinct variants, including Mixture-of-Experts (MoE) models with 47B and 3B active parameters. The models feature a novel heterogeneous modality structure that shares parameters across modalities while also keeping dedicated parameters for each individual modality. Trained efficiently with the PaddlePaddle deep learning framework, ERNIE 4.5 models achieve state-of-the-art performance across text and multimodal benchmarks, enhancing multimodal understanding without compromising performance on text-related tasks. The open-source development toolkits for ERNIE 4.5 offer industrial-grade capabilities, resource-efficient training and inference workflows, and multi-hardware compatibility.

README:

We introduce ERNIE 4.5, a new family of large-scale multimodal models comprising 10 distinct variants. The model family consists of Mixture-of-Experts (MoE) models with 47B and 3B active parameters, with the largest model having 424B total parameters, as well as a 0.3B dense model. For the MoE architecture, we propose a novel heterogeneous modality structure, which supports parameter sharing across modalities while also allowing dedicated parameters for each individual modality. This MoE architecture enhances multimodal understanding while preserving, and even improving, performance on text-related tasks. All of our models are trained with optimal efficiency using the PaddlePaddle deep learning framework, which also enables high-performance inference and streamlined deployment. We achieve 47% Model FLOPs Utilization (MFU) when pre-training our largest ERNIE 4.5 language model. Experimental results show that our models achieve state-of-the-art performance across multiple text and multimodal benchmarks, especially in instruction following, world knowledge memorization, visual understanding, and multimodal reasoning. All models are publicly accessible under Apache 2.0 to support future research and development in the field. Additionally, we open source the development toolkits for ERNIE 4.5, featuring industrial-grade capabilities, resource-efficient training and inference workflows, and multi-hardware compatibility.
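For readers unfamiliar with the metric, MFU is commonly defined as the ratio of the FLOPs a training run actually sustains to the theoretical peak of the hardware. The symbols below are illustrative and are not taken from the ERNIE 4.5 report; the $6\,N_{\text{active}}$ term is the usual rough estimate of training FLOPs per token and ignores attention cost:

$$
\mathrm{MFU} = \frac{T \cdot F_{\text{token}}}{G \cdot P_{\text{peak}}}, \qquad F_{\text{token}} \approx 6\,N_{\text{active}}
$$

Here $T$ is the measured throughput in tokens per second, $G$ is the number of accelerators, $P_{\text{peak}}$ is the peak FLOPs per second of one accelerator, and $N_{\text{active}}$ is the number of parameters active per token. Under this definition, the reported 47% means the run sustained a little under half of the hardware's theoretical peak.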

ERNIE 4.5

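| Model Category | Model | Input Modality | Output Modality | Context Window |
|---|---|---|---|---|
| Large Language Models (LLMs) | ERNIE-4.5-300B-A47B-Base | Text | Text | 128K |
| Large Language Models (LLMs) | ERNIE-4.5-300B-A47B | Text | Text | 128K |
| Large Language Models (LLMs) | ERNIE-4.5-21B-A3B-Base | Text | Text | 128K |
| Large Language Models (LLMs) | ERNIE-4.5-21B-A3B | Text | Text | 128K |
| Vision-Language Models (VLMs) | ERNIE-4.5-VL-424B-A47B-Base | Text/Image/Video | Text | 128K |
| Vision-Language Models (VLMs) | ERNIE-4.5-VL-424B-A47B | Text/Image/Video | Text | 128K |
| Vision-Language Models (VLMs) | ERNIE-4.5-VL-28B-A3B-Base | Text/Image/Video | Text | 128K |
| Vision-Language Models (VLMs) | ERNIE-4.5-VL-28B-A3B | Text/Image/Video | Text | 128K |
| Dense Models | ERNIE-4.5-0.3B-Base | Text | Text | 128K |
| Dense Models | ERNIE-4.5-0.3B | Text | Text | 128K |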

Note: All models (including pre-trained weights and inference code) have been released on 🤗 Hugging Face and AI Studio. Check our blog for more details.

Our model family is characterized by three key innovations:

- Multimodal Heterogeneous MoE Pre-Training: Our models are jointly trained on both textual and visual modalities to better capture the nuances of multimodal information and improve performance on tasks involving text understanding and generation, image understanding, and cross-modal reasoning. To achieve this without one modality hindering the learning of another, we designed a heterogeneous MoE structure, incorporated modality-isolated routing, and employed router orthogonal loss and multimodal token-balanced loss. These architectural choices ensure that both modalities are effectively represented, allowing for mutual reinforcement during training.

- Scaling-Efficient Infrastructure: We propose a novel heterogeneous hybrid parallelism and hierarchical load balancing strategy for efficient training of ERNIE 4.5 models. By using intra-node expert parallelism, memory-efficient pipeline scheduling, FP8 mixed-precision training, and fine-grained recomputation methods, we achieve high pre-training throughput. For inference, we propose a multi-expert parallel collaboration method and a convolutional code quantization algorithm to achieve 4-bit/2-bit lossless quantization. Furthermore, we introduce prefill-decode (PD) disaggregation with dynamic role switching, which improves resource utilization and inference performance for ERNIE 4.5 MoE models. Built on PaddlePaddle, ERNIE 4.5 delivers high-performance inference across a wide range of hardware platforms.

- Modality-Specific Post-Training: To meet the diverse requirements of real-world applications, we fine-tuned variants of the pre-trained model for specific modalities. Our LLMs are optimized for general-purpose language understanding and generation. The VLMs focus on vision-language understanding and support both thinking and non-thinking modes. Each model employed a combination of Supervised Fine-Tuning (SFT), Direct Preference Optimization (DPO), or a modified reinforcement learning method named Unified Preference Optimization (UPO) for post-training.
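To make the idea of modality-isolated routing more concrete, here is a minimal sketch in plain Python. It assumes that each token is routed only among the experts dedicated to its own modality and that a shared expert processes every token. The expert indices, group sizes, `top_k` value, and the `route_token` helper are all hypothetical; this is not the ERNIE 4.5 implementation, and the auxiliary losses mentioned above are omitted.

```python
from typing import Dict, List, Sequence

# Hypothetical expert layout: indices are illustrative only and do not
# reflect the real ERNIE 4.5 configuration.
EXPERT_GROUPS: Dict[str, List[int]] = {
    "text": [0, 1, 2, 3],
    "vision": [4, 5, 6, 7],
}
SHARED_EXPERTS: List[int] = [8]  # assumed to be active for every token


def route_token(scores: Sequence[float], modality: str, top_k: int = 2) -> List[int]:
    """Pick the top-k experts for one token, restricted to its modality group."""
    candidates = EXPERT_GROUPS[modality]
    # Rank only the experts that belong to this token's modality, so a high
    # router score for an expert of the other modality can never win.
    ranked = sorted(candidates, key=lambda idx: scores[idx], reverse=True)
    return ranked[:top_k] + SHARED_EXPERTS


# One router score per expert (made-up numbers).
scores = [0.1, 0.9, 0.3, 0.2, 0.8, 0.95, 0.05, 0.4, 0.0]
print(route_token(scores, "text"))    # [1, 2, 8]
print(route_token(scores, "vision"))  # [5, 4, 8]
```

Note that expert 5 has the highest score overall, yet the text token never reaches it: isolating the candidate set per modality is what keeps one modality from crowding out the other, while the shared expert provides the cross-modal parameter sharing.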

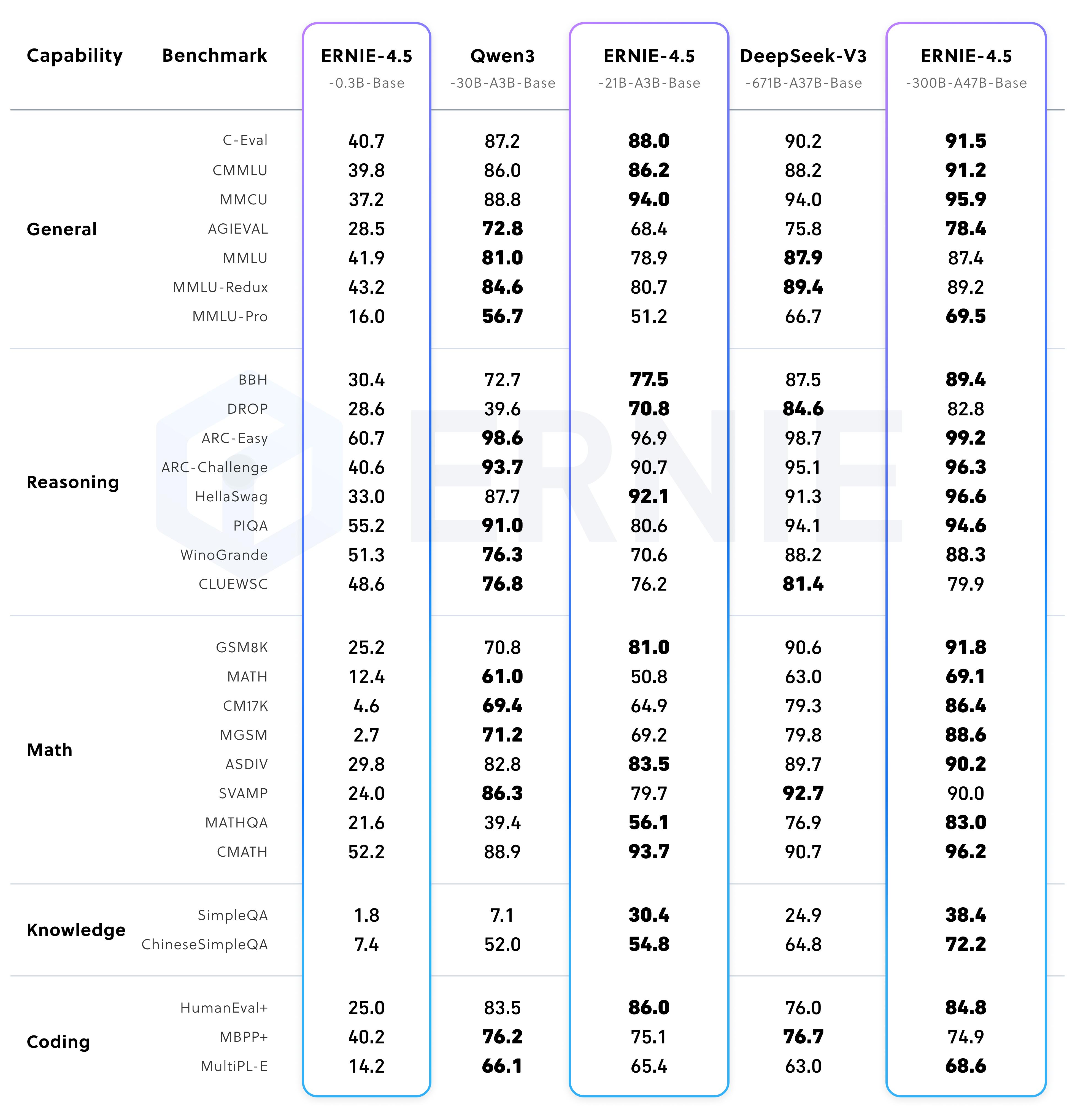

ERNIE-4.5-300B-A47B-Base surpasses DeepSeek-V3-671B-A37B-Base on 22 out of 28 benchmarks, demonstrating leading performance across all major capability categories. This underscores the substantial improvements in generalization, reasoning, and knowledge-intensive tasks brought about by scaling up the ERNIE-4.5-Base model relative to other state-of-the-art large models. With a total parameter size of 21B (approximately 70% that of Qwen3-30B), ERNIE-4.5-21B-A3B-Base outperforms Qwen3-30B-A3B-Base on several math and reasoning benchmarks, including BBH and CMATH. ERNIE-4.5-21B-A3B-Base remains highly competitive given its significantly smaller model size, demonstrating notable parameter efficiency and favorable performance trade-offs.

ERNIE-4.5-300B-A47B, the post-trained model, demonstrates significant strengths in instruction following and knowledge tasks, as evidenced by its state-of-the-art scores on benchmarks such as IFEval, Multi-IF, SimpleQA, and ChineseSimpleQA. The lightweight model ERNIE-4.5-21B-A3B achieves competitive performance compared to Qwen3-30B-A3B, despite having approximately 30% fewer total parameters.

In the non-thinking mode, ERNIE-4.5-VL exhibits outstanding proficiency in visual perception, document and chart understanding, and visual knowledge, performing strongly across a range of established benchmarks. In the thinking mode, ERNIE-4.5-VL not only demonstrates enhanced reasoning abilities compared to the non-thinking mode, but also retains its strong perception capabilities. ERNIE-4.5-VL-424B-A47B delivers consistently strong results across the full multimodal evaluation suite. Its thinking mode provides a distinct advantage on reasoning-centric tasks, narrowing the gap to, or even surpassing, OpenAI-o1 on challenging benchmarks such as MathVista, MMMU, and VisualPuzzle, while maintaining competitive performance on perception-focused datasets like CV-Bench and RealWorldQA. The lightweight vision-language model ERNIE-4.5-VL-28B-A3B achieves competitive or even superior performance compared to Qwen2.5-VL-7B and Qwen2.5-VL-32B across most benchmarks, despite using significantly fewer activated parameters. Notably, our lightweight model also supports both thinking and non-thinking modes, offering functionality consistent with ERNIE-4.5-VL-424B-A47B.

ERNIE 4.5 models are trained and deployed for inference using the PaddlePaddle framework. The full workflow of training, compression, and inference for ERNIE 4.5 is supported through the ERNIEKit and FastDeploy toolkits. The table below details the feature matrix of the ERNIE 4.5 model family for training and inference.

| Model | Training | Inference |
|---|---|---|
| ERNIE-4.5-300B-A47B-Base | SFT/SFT-LoRA/DPO/DPO-LoRA | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-300B-A47B | SFT/SFT-LoRA/DPO/DPO-LoRA/QAT | BF16 / W4A16C16 / W8A16C16 / W4A8C8 / FP8 / 2Bits |
| ERNIE-4.5-21B-A3B-Base | SFT/SFT-LoRA/DPO/DPO-LoRA | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-21B-A3B | SFT/SFT-LoRA/DPO/DPO-LoRA | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-VL-424B-A47B-Base | Coming Soon | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-VL-424B-A47B | Coming Soon | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-VL-28B-A3B-Base | Coming Soon | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-VL-28B-A3B | Coming Soon | BF16 / W4A16C16 / W8A16C16 / FP8 |
| ERNIE-4.5-0.3B-Base | SFT/SFT-LoRA/DPO/DPO-LoRA | BF16 / W8A16C16 / FP8 |
| ERNIE-4.5-0.3B | SFT/SFT-LoRA/DPO/DPO-LoRA | BF16 / W8A16C16 / FP8 |

Note: For the different ERNIE 4.5 models, we provide diverse quantization schemes using the notation WxAxCx, where W indicates weight precision, A indicates activation precision, C indicates KV cache precision, and x is the numerical precision in bits.
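As a small illustration of the WxAxCx notation, the sketch below parses a scheme name into its three bit widths and estimates the raw storage needed for the weights alone. The helper names `parse_scheme` and `weight_bytes` are hypothetical and are not part of ERNIEKit or FastDeploy; the estimate ignores quantization scales and any layers kept at higher precision.

```python
import re
from typing import Dict

# Matches names such as "W4A16C16"; labels like "BF16", "FP8", or "2Bits"
# do not follow the WxAxCx pattern and are rejected.
_SCHEME = re.compile(r"^W(\d+)A(\d+)C(\d+)$")


def parse_scheme(name: str) -> Dict[str, int]:
    """Split a WxAxCx scheme name into weight, activation, and KV cache bits."""
    match = _SCHEME.match(name)
    if match is None:
        raise ValueError(f"not a WxAxCx scheme: {name!r}")
    weight, activation, kv_cache = (int(group) for group in match.groups())
    return {
        "weight_bits": weight,
        "activation_bits": activation,
        "kv_cache_bits": kv_cache,
    }


def weight_bytes(total_params: float, weight_bits: int) -> float:
    """Rough weight-only storage: parameters times bits per weight, in bytes."""
    return total_params * weight_bits / 8


scheme = parse_scheme("W4A16C16")
print(scheme)  # {'weight_bits': 4, 'activation_bits': 16, 'kv_cache_bits': 16}

# Weight-only estimate for a 300B-parameter model at 4-bit weights.
print(weight_bytes(300e9, scheme["weight_bits"]) / 1e9, "GB")  # 150.0 GB
```

By the same arithmetic, 16-bit weights for the same parameter count would need about 600 GB, which is why the lower-bit schemes matter for fitting the largest models onto fewer devices.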

ERNIEKit is an industrial-grade training and compression development toolkit for ERNIE models based on PaddlePaddle, offering full-cycle development support for the ERNIE 4.5 model family. Key capabilities include:

- High-performance pre-training implementation

- Full-parameter supervised fine-tuning (SFT)

- Direct Preference Optimization (DPO)

- Parameter-efficient fine-tuning and alignment (SFT-LoRA/DPO-LoRA)

- Quantization-Aware Training (QAT)

- Post-Training Quantization (PTQ) [WIP]

Minimum hardware requirements for training each model are documented here.

After installing ERNIEKit, you can start training ERNIE 4.5 models with the following commands:

```bash
# Download the model from Hugging Face
huggingface-cli download baidu/ERNIE-4.5-0.3B-Paddle --local-dir baidu/ERNIE-4.5-0.3B-Paddle

# SFT with an 8K sequence length
erniekit train examples/configs/ERNIE-4.5-0.3B/sft/run_sft_8k.yaml
```

For detailed guides on installation, CLI usage, WebUI, multi-node training, and advanced features, please refer to the ERNIEKit Training Document.

For detailed guides on high-performance pre-training, please refer to the Pre-Training Document.

ERNIEKit WebUI demo:

https://github.com/user-attachments/assets/6d44cb92-0826-42df-aa80-7656445e0f73

FastDeploy: High-Performance Inference and Deployment Toolkit for LLMs and VLMs Based on PaddlePaddle

FastDeploy is an inference and deployment toolkit for large language models and vision-language models, built on PaddlePaddle. It delivers production-ready, easy-to-use multi-hardware deployment solutions with multi-level load-balanced PD disaggregation, comprehensive quantization format support, an OpenAI-compatible API server, and vLLM compatibility.

For installation, please refer to FastDeploy.

```python
from fastdeploy import LLM, SamplingParams

prompt = "Write me a poem about large language model."
sampling_params = SamplingParams(temperature=0.8, top_p=0.95)
llm = LLM(model="baidu/ERNIE-4.5-0.3B-Paddle", max_model_len=32768)
outputs = llm.generate(prompt, sampling_params)
```

```bash
python -m fastdeploy.entrypoints.openai.api_server \
    --model "baidu/ERNIE-4.5-0.3B-Paddle" \
    --max-model-len 32768 \
    --port 9904
```

For more inference and deployment guides, please refer to FastDeploy.
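Once the API server from the command above is running, it can be queried like any OpenAI-style endpoint. The following is a minimal sketch using only the Python standard library. It assumes the server is reachable at `localhost:9904` and serves the conventional `/v1/chat/completions` route with the usual response shape; the helper names `build_chat_request` and `send` are hypothetical, so check the FastDeploy documentation for the exact routes and fields.

```python
import json
import urllib.request

# Assumption: the server listens on localhost:9904 and exposes the
# conventional OpenAI-style chat completions route.
BASE_URL = "http://localhost:9904/v1"


def build_chat_request(
    prompt: str,
    model: str = "baidu/ERNIE-4.5-0.3B-Paddle",
    temperature: float = 0.8,
    top_p: float = 0.95,
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single chat completion."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumes the standard OpenAI response layout.
    return body["choices"][0]["message"]["content"]
```

With the server up, `print(send(build_chat_request("Write me a poem about large language model.")))` should print the generated text. Keeping request construction separate from sending makes the payload easy to inspect without a running server.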

Discover best-practice guides showcasing ERNIE’s capabilities across multiple domains:

| Cookbook | Description | Gradio Demo |
|---|---|---|
| Conversation | Building conversational applications. | conversation_demo.py |
| Simple ERNIE Bot | Creating a lightweight web-based ERNIE Bot. | simple_ernie_bot_demo.py |
| Web-Search-Enhanced Conversation | Building conversational apps with integrated web search. | web_search_demo.py |
| Knowledge Retrieval-based Q&A | Building intelligent Q&A systems with private knowledge bases. | knowledge_retrieval_demo.py |
| Advanced Search | Building article-generation applications using deep information extraction. | advanced_search_demo.py |
| SFT tutorial | Optimizing task performance through supervised fine-tuning with ERNIEKit. | - |
| DPO tutorial | Aligning models with human preferences using ERNIEKit. | - |
| Text Recognition | A comprehensive guide to developing text recognition for non-Chinese and non-English languages using ERNIE and PaddleOCR. | - |
| Document Translation | Document translation practice based on ERNIE and PaddleOCR. | - |
| Key Information Extraction | Key information extraction in contract scenarios based on ERNIE and PaddleOCR. | - |

| PaddlePaddle WeChat official account | Join the tech discussion group |

|---|---|

The ERNIE 4.5 models are provided under the Apache License 2.0. This license permits commercial use, subject to its terms and conditions.

If you find ERNIE 4.5 useful or wish to use it in your projects, please kindly cite our technical report:

```bibtex
@misc{ernie2025technicalreport,
      title={ERNIE 4.5 Technical Report},
      author={Baidu-ERNIE-Team},
      year={2025},
      eprint={},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={}
}
```

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ERNIE

Similar Open Source Tools

ERNIE

ERNIE 4.5 is a family of large-scale multimodal models with 10 distinct variants, including Mixture-of-Experts (MoE) models with 47B and 3B active parameters. The models feature a novel heterogeneous modality structure supporting parameter sharing across modalities while allowing dedicated parameters for each individual modality. Trained with optimal efficiency using PaddlePaddle deep learning framework, ERNIE 4.5 models achieve state-of-the-art performance across text and multimodal benchmarks, enhancing multimodal understanding without compromising performance on text-related tasks. The open-source development toolkits for ERNIE 4.5 offer industrial-grade capabilities, resource-efficient training and inference workflows, and multi-hardware compatibility.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

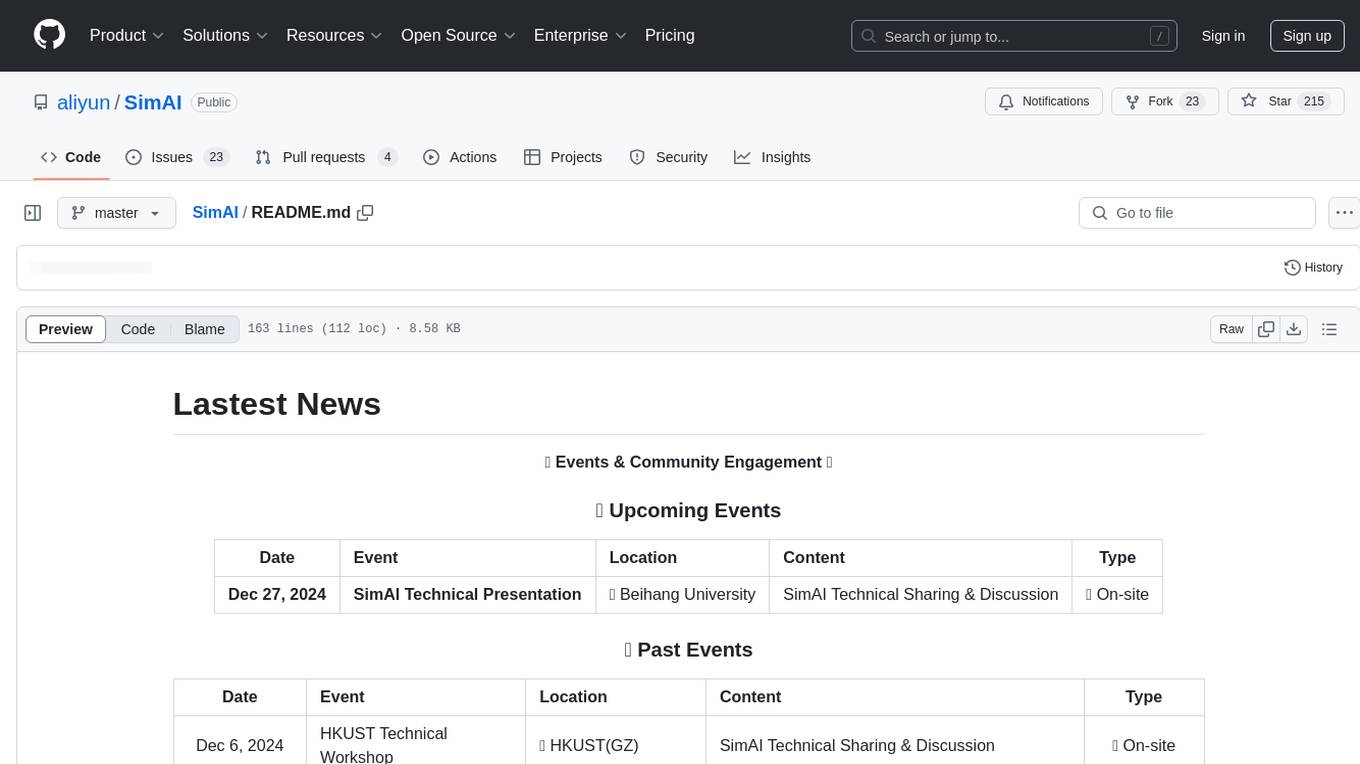

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

AceCoder

AceCoder is a tool that introduces a fully automated pipeline for synthesizing large-scale reliable tests used for reward model training and reinforcement learning in the coding scenario. It curates datasets, trains reward models, and performs RL training to improve coding abilities of language models. The tool aims to unlock the potential of RL training for code generation models and push the boundaries of LLM's coding abilities.

FlagEmbedding

FlagEmbedding focuses on retrieval-augmented LLMs, consisting of the following projects currently: * **Long-Context LLM** : Activation Beacon * **Fine-tuning of LM** : LM-Cocktail * **Embedding Model** : Visualized-BGE, BGE-M3, LLM Embedder, BGE Embedding * **Reranker Model** : llm rerankers, BGE Reranker * **Benchmark** : C-MTEB

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

Awesome-Attention-Heads

Awesome-Attention-Heads is a platform providing the latest research on Attention Heads, focusing on enhancing understanding of Transformer structure for model interpretability. It explores attention mechanisms for behavior, inference, and analysis, alongside feed-forward networks for knowledge storage. The repository aims to support researchers studying LLM interpretability and hallucination by offering cutting-edge information on Attention Head Mining.

FuseAI

FuseAI is a repository that focuses on knowledge fusion of large language models. It includes FuseChat, a state-of-the-art 7B LLM on MT-Bench, and FuseLLM, which surpasses Llama-2-7B by fusing three open-source foundation LLMs. The repository provides tech reports, releases, and datasets for FuseChat and FuseLLM, showcasing their performance and advancements in the field of chat models and large language models.

txtai

Txtai is an all-in-one embeddings database for semantic search, LLM orchestration, and language model workflows. It combines vector indexes, graph networks, and relational databases to enable vector search with SQL, topic modeling, retrieval augmented generation, and more. Txtai can stand alone or serve as a knowledge source for large language models (LLMs). Key features include vector search with SQL, object storage, topic modeling, graph analysis, multimodal indexing, embedding creation for various data types, pipelines powered by language models, workflows to connect pipelines, and support for Python, JavaScript, Java, Rust, and Go. Txtai is open-source under the Apache 2.0 license.

rai

RAI is a framework designed to bring general multi-agent system capabilities to robots, enhancing human interactivity, flexibility in problem-solving, and out-of-the-box AI features. It supports multi-modalities, incorporates an advanced database for agent memory, provides ROS 2-oriented tooling, and offers a comprehensive task/mission orchestrator. The framework includes features such as voice interaction, customizable robot identity, camera sensor access, reasoning through ROS logs, and integration with LangChain for AI tools. RAI aims to support various AI vendors, improve human-robot interaction, provide an SDK for developers, and offer a user interface for configuration.

MNN

MNN is a highly efficient and lightweight deep learning framework that supports inference and training of deep learning models. It has industry-leading performance for on-device inference and training. MNN has been integrated into various Alibaba Inc. apps and is used in scenarios like live broadcast, short video capture, search recommendation, and product searching by image. It is also utilized on embedded devices such as IoT. MNN-LLM and MNN-Diffusion are specific runtime solutions developed based on the MNN engine for deploying language models and diffusion models locally on different platforms. The framework is optimized for devices, supports various neural networks, and offers high performance with optimized assembly code and GPU support. MNN is versatile, easy to use, and supports hybrid computing on multiple devices.

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

PURE

PURE (Process-sUpervised Reinforcement lEarning) is a framework that trains a Process Reward Model (PRM) on a dataset and fine-tunes a language model to achieve state-of-the-art mathematical reasoning capabilities. It uses a novel credit assignment method to calculate return and supports multiple reward types. The final model outperforms existing methods with minimal RL data or compute resources, achieving high accuracy on various benchmarks. The tool addresses reward hacking issues and aims to enhance long-range decision-making and reasoning tasks using large language models.

beeai-framework

BeeAI Framework is a versatile tool for building production-ready multi-agent systems. It offers flexibility in orchestrating agents, seamless integration with various models and tools, and production-grade controls for scaling. The framework supports Python and TypeScript libraries, enabling users to implement simple to complex multi-agent patterns, connect with AI services, and optimize token usage and resource management.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.