arena-hard-auto

Arena-Hard-Auto: An automatic LLM benchmark.

Stars: 394

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. Among popular open-ended LLM benchmarks, it has the highest correlation with Chatbot Arena and the highest separability. Users can run Arena-Hard-Auto to estimate how their models might perform on Chatbot Arena.

README:

🚨 New feature (08/31): Style Control is now added to Arena Hard Auto! Check this section to start using style control!

Arena-Hard-Auto-v0.1 (See Paper) is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. We prompt GPT-4-Turbo as a judge to compare the models' responses against a baseline model (default: GPT-4-0314). Notably, Arena-Hard-Auto has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks (See Paper). If you are curious to see how well your model might perform on Chatbot Arena, we recommend trying Arena-Hard-Auto.

Although both Arena-Hard-Auto and Chatbot Arena Category Hard (See Blog) employ a similar pipeline to select hard prompts, Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. Check out the BenchBuilder folder for code and resources on how we curate Arena-Hard-Auto.

claude-3-5-sonnet-20240620 | score: 79.3 | 95% CI: (-2.1, 2.0) | average #tokens: 567

gpt-4o-2024-05-13 | score: 79.2 | 95% CI: (-1.9, 1.7) | average #tokens: 696

gpt-4-0125-preview | score: 78.0 | 95% CI: (-2.1, 2.4) | average #tokens: 619

gpt-4o-2024-08-06 | score: 77.9 | 95% CI: (-2.0, 2.1) | average #tokens: 594

athene-70b | score: 77.6 | 95% CI: (-2.7, 2.2) | average #tokens: 684

gpt-4o-mini | score: 74.9 | 95% CI: (-2.5, 1.9) | average #tokens: 668

gemini-1.5-pro-api-preview | score: 72.0 | 95% CI: (-2.1, 2.5) | average #tokens: 676

mistral-large-2407 | score: 70.4 | 95% CI: (-1.6, 2.1) | average #tokens: 623

llama-3.1-405b-instruct | score: 69.3 | 95% CI: (-2.4, 2.2) | average #tokens: 658

glm-4-0520 | score: 63.8 | 95% CI: (-2.9, 2.8) | average #tokens: 636

yi-large | score: 63.7 | 95% CI: (-2.6, 2.4) | average #tokens: 626

deepseek-coder-v2 | score: 62.3 | 95% CI: (-2.1, 1.8) | average #tokens: 578

claude-3-opus-20240229 | score: 60.4 | 95% CI: (-2.5, 2.5) | average #tokens: 541

gemma-2-27b-it | score: 57.5 | 95% CI: (-2.1, 2.4) | average #tokens: 577

llama-3.1-70b-instruct | score: 55.7 | 95% CI: (-2.9, 2.7) | average #tokens: 628

glm-4-0116 | score: 55.7 | 95% CI: (-2.4, 2.3) | average #tokens: 622

glm-4-air | score: 50.9 | 95% CI: (-2.9, 2.7) | average #tokens: 619

gpt-4-0314 | score: 50.0 | 95% CI: (0.0, 0.0) | average #tokens: 423

gemini-1.5-flash-api-preview | score: 49.6 | 95% CI: (-2.2, 2.8) | average #tokens: 642

qwen2-72b-instruct | score: 46.9 | 95% CI: (-2.5, 2.7) | average #tokens: 515

claude-3-sonnet-20240229 | score: 46.8 | 95% CI: (-2.3, 2.7) | average #tokens: 552

llama-3-70b-instruct | score: 46.6 | 95% CI: (-2.3, 2.6) | average #tokens: 591

claude-3-haiku-20240307 | score: 41.5 | 95% CI: (-2.5, 2.5) | average #tokens: 505

gpt-4-0613 | score: 37.9 | 95% CI: (-2.8, 2.4) | average #tokens: 354

mistral-large-2402 | score: 37.7 | 95% CI: (-2.1, 2.6) | average #tokens: 400

mixtral-8x22b-instruct-v0.1 | score: 36.4 | 95% CI: (-2.4, 2.6) | average #tokens: 430

Qwen1.5-72B-Chat | score: 36.1 | 95% CI: (-2.0, 2.7) | average #tokens: 474

phi-3-medium-4k-instruct | score: 33.4 | 95% CI: (-2.6, 2.1) | average #tokens: 517

command-r-plus | score: 33.1 | 95% CI: (-2.8, 2.4) | average #tokens: 541

mistral-medium | score: 31.9 | 95% CI: (-1.9, 2.2) | average #tokens: 485

internlm2.5-20b-chat | score: 31.2 | 95% CI: (-2.4, 2.8) | average #tokens: 576

phi-3-small-8k-instruct | score: 29.8 | 95% CI: (-1.8, 1.9) | average #tokens: 568

mistral-next | score: 27.4 | 95% CI: (-2.4, 2.4) | average #tokens: 297

gpt-3.5-turbo-0613 | score: 24.8 | 95% CI: (-1.9, 2.3) | average #tokens: 401

dbrx-instruct-preview | score: 24.6 | 95% CI: (-2.0, 2.6) | average #tokens: 415

internlm2-20b-chat | score: 24.4 | 95% CI: (-2.0, 2.2) | average #tokens: 667

claude-2.0 | score: 24.0 | 95% CI: (-1.8, 1.8) | average #tokens: 295

Mixtral-8x7B-Instruct-v0.1 | score: 23.4 | 95% CI: (-2.0, 1.9) | average #tokens: 457

gpt-3.5-turbo-0125 | score: 23.3 | 95% CI: (-2.2, 1.9) | average #tokens: 329

Yi-34B-Chat | score: 23.1 | 95% CI: (-1.6, 1.8) | average #tokens: 611

Starling-LM-7B-beta | score: 23.0 | 95% CI: (-1.8, 1.8) | average #tokens: 530

claude-2.1 | score: 22.8 | 95% CI: (-2.3, 1.8) | average #tokens: 290

llama-3.1-8b-instruct | score: 21.3 | 95% CI: (-1.9, 2.2) | average #tokens: 861

Snorkel-Mistral-PairRM-DPO | score: 20.7 | 95% CI: (-1.8, 2.2) | average #tokens: 564

llama-3-8b-instruct | score: 20.6 | 95% CI: (-2.0, 1.9) | average #tokens: 585

gpt-3.5-turbo-1106 | score: 18.9 | 95% CI: (-1.8, 1.6) | average #tokens: 285

gpt-3.5-turbo-0301 | score: 18.1 | 95% CI: (-1.9, 2.1) | average #tokens: 334

gemini-1.0-pro | score: 17.8 | 95% CI: (-1.2, 2.2) | average #tokens: 322

snowflake-arctic-instruct | score: 17.6 | 95% CI: (-1.8, 1.5) | average #tokens: 365

command-r | score: 17.0 | 95% CI: (-1.7, 1.8) | average #tokens: 432

phi-3-mini-128k-instruct | score: 15.4 | 95% CI: (-1.4, 1.4) | average #tokens: 609

tulu-2-dpo-70b | score: 15.0 | 95% CI: (-1.6, 1.3) | average #tokens: 550

Starling-LM-7B-alpha | score: 12.8 | 95% CI: (-1.6, 1.4) | average #tokens: 483

mistral-7b-instruct | score: 12.6 | 95% CI: (-1.7, 1.4) | average #tokens: 541

gemma-1.1-7b-it | score: 12.1 | 95% CI: (-1.3, 1.3) | average #tokens: 341

Llama-2-70b-chat-hf | score: 11.6 | 95% CI: (-1.5, 1.2) | average #tokens: 595

vicuna-33b-v1.3 | score: 8.6 | 95% CI: (-1.1, 1.1) | average #tokens: 451

gemma-7b-it | score: 7.5 | 95% CI: (-1.2, 1.3) | average #tokens: 378

Llama-2-7b-chat-hf | score: 4.6 | 95% CI: (-0.8, 0.8) | average #tokens: 561

gemma-1.1-2b-it | score: 3.4 | 95% CI: (-0.6, 0.8) | average #tokens: 316

gemma-2b-it | score: 3.0 | 95% CI: (-0.6, 0.6) | average #tokens: 369

git clone https://github.com/lm-sys/arena-hard.git

cd arena-hard

pip install -r requirements.txt

pip install -r requirements-optional.txt # Optional dependencies (e.g., anthropic sdk)

We have pre-generated answers and judgments for many popular models. You can browse them with an online demo or download them (with git-lfs installed) by running:

> git clone https://huggingface.co/spaces/lmsys/arena-hard-browser

// copy answers/judgments to the data directory

> cp -r arena-hard-browser/data .

Then run


> python show_result.py

gpt-4-0125-preview | score: 78.0 | 95% CI: (-1.8, 2.2) | average #tokens: 619

claude-3-opus-20240229 | score: 60.4 | 95% CI: (-2.6, 2.1) | average #tokens: 541

gpt-4-0314 | score: 50.0 | 95% CI: (0.0, 0.0) | average #tokens: 423

claude-3-sonnet-20240229 | score: 46.8 | 95% CI: (-2.7, 2.3) | average #tokens: 552

claude-3-haiku-20240307 | score: 41.5 | 95% CI: (-2.4, 2.5) | average #tokens: 505

gpt-4-0613 | score: 37.9 | 95% CI: (-2.1, 2.2) | average #tokens: 354

mistral-large-2402 | score: 37.7 | 95% CI: (-2.9, 2.8) | average #tokens: 400

Qwen1.5-72B-Chat | score: 36.1 | 95% CI: (-2.1, 2.4) | average #tokens: 474

command-r-plus | score: 33.1 | 95% CI: (-2.0, 1.9) | average #tokens: 541

Running show_result.py will save generated battles into data/arena_hard_battles.jsonl and bootstrapping statistics into data/bootstrapping_results.jsonl. If you don't want to regenerate battles or bootstrapping statistics, pass the --load-battles or --load-bootstrap argument, respectively.
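For intuition about the score and 95% CI columns, here is a minimal sketch of a percentile bootstrap over battle outcomes. It is a simplification and not the repository's implementation: the outcome encoding (1.0 for a win over the baseline, 0.5 for a tie, 0.0 for a loss), the function names `win_rate` and `bootstrap_ci`, the number of resampling rounds, and the sample data are all assumptions made for illustration.

```python
import random


def win_rate(outcomes):
    """Mean outcome as a percentage (1.0 = win, 0.5 = tie, 0.0 = loss)."""
    return 100.0 * sum(outcomes) / len(outcomes)


def bootstrap_ci(outcomes, rounds=1000, alpha=0.05, seed=0):
    """Percentile bootstrap: resample battles with replacement, re-score,
    and report the interval as offsets from the point estimate."""
    rng = random.Random(seed)
    point = win_rate(outcomes)
    samples = sorted(
        win_rate(rng.choices(outcomes, k=len(outcomes))) for _ in range(rounds)
    )
    lower = samples[int((alpha / 2) * rounds)]
    upper = samples[int((1 - alpha / 2) * rounds) - 1]
    return point, lower - point, upper - point


# Hypothetical outcomes for one model against the baseline.
battles = [1.0] * 300 + [0.5] * 50 + [0.0] * 150
score, lo, hi = bootstrap_ci(battles)
print(f"score: {score:.1f} | 95% CI: ({lo:.1f}, {hi:.1f})")
```

Reporting the interval as offsets from the point estimate mirrors the way the leaderboard rows are printed. The baseline compared against itself always scores 50.0 with a (0.0, 0.0) interval.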

Fill in your API endpoint in config/api_config.yaml. Any OpenAI-compatible API server is supported. You can set parallel to the number of concurrent API requests (default: 1).

# example
gpt-3.5-turbo-0125:
    model_name: gpt-3.5-turbo-0125
    endpoints: null
    api_type: openai
    parallel: 8

[YOUR-MODEL-NAME]:
    model_name: [YOUR-MODEL-NAME]
    endpoints:
        - api_base: [YOUR-ENDPOINT-URL]
          api_key: [YOUR-API-KEY]
    api_type: openai
    parallel: 8

You may use an inference engine such as the latest version of TGI, vLLM, or SGLang to host your model with an OpenAI-compatible API server.
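To picture what the parallel setting in the config means, the sketch below maps it onto a thread pool that caps the number of in-flight requests. This is an assumption about how such a setting could be honored, not a description of the repository's code. `fake_api_call` and `generate_all` are hypothetical names, and the stub returns a canned string instead of contacting an endpoint.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_api_call(prompt):
    """Stand-in for a real request to an OpenAI-compatible endpoint."""
    return f"answer to: {prompt}"


def generate_all(prompts, parallel=1):
    """Run up to `parallel` requests at once; results keep the input order."""
    with ThreadPoolExecutor(max_workers=parallel) as pool:
        return list(pool.map(fake_api_call, prompts))


answers = generate_all(["q1", "q2", "q3"], parallel=8)
```

A higher value shortens wall-clock time only as long as the endpoint's rate limits allow that many simultaneous requests.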

TGI Quick start

hf_pat=

model=

volume=/path/to/cache

port=1996

huggingface-cli download $model

sudo docker run --gpus 8 -e HUGGING_FACE_HUB_TOKEN=$hf_pat --shm-size 2000g -p $port:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:2.0.4 --model-id $model --max-input-length 8192 --max-batch-total-tokens 8193 --max-batch-prefill-tokens 8193 --max-total-tokens 8193
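Once a server like the one above is running, a quick sanity check is to send it a single chat request. The sketch below only builds the request with the Python standard library. It assumes the server exposes the usual OpenAI-style `/v1/chat/completions` route. `build_chat_request`, the `my-model` name, and the placeholder API key are hypothetical. The port comes from the quick start above and the sampling values from the answer-generation config.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key="-",
                       temperature=0.0, max_tokens=4096):
    """Build (but do not send) a chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Port 1996 matches the quick start above; the model name is a placeholder.
request = build_chat_request("http://localhost:1996/v1", "my-model", "Hello!")
# To actually send it once the server is up:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

If this request succeeds, the same base URL can be used as api_base in config/api_config.yaml.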

In config/gen_answer_config.yaml, add your model name in model_list.

bench_name: arena-hard-v0.1

temperature: 0.0

max_tokens: 4096

num_choices: 1

model_list:

- [YOUR-MODEL-NAME]

Run the command to generate answers:

python gen_answer.py

Caching is implemented: the code skips generating an answer when an answer/judgment for the same prompt already exists.
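The caching behavior described above can be pictured as a filter over the question list. The following is a minimal sketch, not the repository's code: it assumes answers are stored one JSON object per line, and the `question_id` field name and both helper functions are hypothetical.

```python
import json


def answered_ids(jsonl_text):
    """Collect question ids that already have an answer record."""
    return {
        json.loads(line)["question_id"]
        for line in jsonl_text.splitlines()
        if line.strip()
    }


def pending_questions(questions, jsonl_text):
    """Keep only the questions that still need an answer."""
    done = answered_ids(jsonl_text)
    return [q for q in questions if q["question_id"] not in done]


# Hypothetical data: two questions, one of which is already answered.
questions = [{"question_id": "a1"}, {"question_id": "b2"}]
existing = '{"question_id": "a1", "answer": "..."}\n'
todo = pending_questions(questions, existing)
```

Because already-answered prompts are filtered out up front, an interrupted run can be restarted without paying for the same completions twice.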

In config/judge_config.yaml, add your model name in model_list.

...

# Add your model below for evaluation

model_list:

- gpt-3.5-turbo-0125

- [YOUR-MODEL-NAME]

Run the command to generate judgments:

python gen_judgment.py

Judgment caching is also implemented. It skips judgments that have already been generated or that lack one of the model answers.
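The two skip conditions for judgments can be sketched as follows. This is an illustration under assumed data structures, not the repository's implementation: `pending_judgments`, the `answers` mapping, and the `judged` set are hypothetical, and `my-model` is a placeholder name.

```python
def pending_judgments(question_ids, answers, judged, model, baseline):
    """Return question ids that still need a judgment for `model`.

    answers: dict mapping model name -> set of answered question ids
    judged:  set of (model, question_id) pairs that already have a judgment
    """
    todo = []
    for qid in question_ids:
        if (model, qid) in judged:
            continue  # judgment already exists
        if qid not in answers.get(model, set()):
            continue  # candidate answer is missing
        if qid not in answers.get(baseline, set()):
            continue  # baseline answer is missing
        todo.append(qid)
    return todo


answers = {"my-model": {"q1", "q2"}, "gpt-4-0314": {"q1", "q2", "q3"}}
judged = {("my-model", "q1")}
todo = pending_judgments(["q1", "q2", "q3"], answers, judged,
                         model="my-model", baseline="gpt-4-0314")
```

Here q1 is skipped because it is already judged and q3 because the candidate model has no answer for it, so only q2 remains.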

Output model win rates. Optionally, use --full-stats for detailed results. To save a CSV file of the model rankings, use --output.

> python show_result.py

You can review individual judgment results using our UI code.

> python qa_browser.py --share

Following the newly introduced Style Control on Chatbot Arena, we release Style Control on Arena Hard Auto! We employ the same Style Control methods as proposed in the blogpost. Please refer to the blogpost for methodology and technical background.

Before applying style control, make sure your model answers have the proper style attributes generated. Either pull the latest data from the Hugging Face repo, or run the following script.

To add style attributes to your model answers, use add_markdown_info.py. The following command takes model answers from --dir, appends style attributes (token length, number of headers, etc.), and saves the new answers in --output-dir.

> python add_markdown_info.py --dir data/arena-hard-v0.1/model_answer --output-dir data/arena-hard-v0.1/model_answer

To control for style (token length and markdown elements), use --style-control when running show_result.py.

> python show_result.py --style-control

To control for length and markdown separately, use --length-control-only and --markdown-control-only.
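As a rough picture of the style attributes mentioned above (token length, number of headers, etc.), the sketch below counts a few markdown elements in an answer. It is not what add_markdown_info.py does internally: the attribute names, the regular expressions, and the use of whitespace-separated words in place of a real tokenizer are all assumptions.

```python
import re


def style_attributes(answer):
    """Count simple style features in a markdown answer."""
    lines = answer.splitlines()
    return {
        # Whitespace-separated words stand in for real tokenizer counts.
        "token_len": len(answer.split()),
        "headers": sum(bool(re.match(r"#{1,6}\s", ln)) for ln in lines),
        "list_items": sum(
            bool(re.match(r"\s*([-*+]|\d+\.)\s", ln)) for ln in lines
        ),
        "bold": len(re.findall(r"\*\*[^*\n]+\*\*", answer)),
    }


sample = "# Title\n\nSome **bold** text.\n\n- first\n- second\n"
attrs = style_attributes(sample)
```

Features like these are the kind of covariates that style control adjusts for, so that a model is not rewarded merely for longer or more heavily formatted answers.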

Coming soon...

The code in this repository is mostly developed for or derived from the papers below. Please cite them if you find the repository helpful.

@misc{li2024crowdsourceddatahighqualitybenchmarks,

title={From Crowdsourced Data to High-Quality Benchmarks: Arena-Hard and BenchBuilder Pipeline},

author={Tianle Li and Wei-Lin Chiang and Evan Frick and Lisa Dunlap and Tianhao Wu and Banghua Zhu and Joseph E. Gonzalez and Ion Stoica},

year={2024},

eprint={2406.11939},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2406.11939},

}

@misc{chiang2024chatbot,

title={Chatbot Arena: An Open Platform for Evaluating LLMs by Human Preference},

author={Wei-Lin Chiang and Lianmin Zheng and Ying Sheng and Anastasios Nikolas Angelopoulos and Tianle Li and Dacheng Li and Hao Zhang and Banghua Zhu and Michael Jordan and Joseph E. Gonzalez and Ion Stoica},

year={2024},

eprint={2403.04132},

archivePrefix={arXiv},

primaryClass={cs.AI}

}

@misc{arenahard2024,

title = {From Live Data to High-Quality Benchmarks: The Arena-Hard Pipeline},

url = {https://lmsys.org/blog/2024-04-19-arena-hard/},

author = {Tianle Li*, Wei-Lin Chiang*, Evan Frick, Lisa Dunlap, Banghua Zhu, Joseph E. Gonzalez, Ion Stoica},

month = {April},

year = {2024}

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for arena-hard-auto

Similar Open Source Tools

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

FlipAttack

FlipAttack is a jailbreak attack tool designed to exploit black-box Language Model Models (LLMs) by manipulating text inputs. It leverages insights into LLMs' autoregressive nature to construct noise on the left side of the input text, deceiving the model and enabling harmful behaviors. The tool offers four flipping modes to guide LLMs in denoising and executing malicious prompts effectively. FlipAttack is characterized by its universality, stealthiness, and simplicity, allowing users to compromise black-box LLMs with just one query. Experimental results demonstrate its high success rates against various LLMs, including GPT-4o and guardrail models.

llm4s

llm4s is an experimental Scala 3 bindings tool for llama.cpp using Slinc. It provides version compatibility with Scala 3.3.0 and JDK 17, 19 for llama.cpp. Users can utilize llm4s to work with llama.cpp shared library and model, enabling completion and embeddings functionalities in Scala.

InternVL

InternVL scales up the ViT to _**6B parameters**_ and aligns it with LLM. It is a vision-language foundation model that can perform various tasks, including: **Visual Perception** - Linear-Probe Image Classification - Semantic Segmentation - Zero-Shot Image Classification - Multilingual Zero-Shot Image Classification - Zero-Shot Video Classification **Cross-Modal Retrieval** - English Zero-Shot Image-Text Retrieval - Chinese Zero-Shot Image-Text Retrieval - Multilingual Zero-Shot Image-Text Retrieval on XTD **Multimodal Dialogue** - Zero-Shot Image Captioning - Multimodal Benchmarks with Frozen LLM - Multimodal Benchmarks with Trainable LLM - Tiny LVLM InternVL has been shown to achieve state-of-the-art results on a variety of benchmarks. For example, on the MMMU image classification benchmark, InternVL achieves a top-1 accuracy of 51.6%, which is higher than GPT-4V and Gemini Pro. On the DocVQA question answering benchmark, InternVL achieves a score of 82.2%, which is also higher than GPT-4V and Gemini Pro. InternVL is open-sourced and available on Hugging Face. It can be used for a variety of applications, including image classification, object detection, semantic segmentation, image captioning, and question answering.

solon

Solon is a Java enterprise application development framework that is restrained, efficient, and open. It offers better cost performance for computing resources with 700% higher concurrency and 50% memory savings. It enables faster development productivity with less code and easy startup, 10 times faster than traditional methods. Solon provides a better production and deployment experience by packing applications 90% smaller. It supports a greater range of compatibility with non-Java-EE architecture and compatibility with Java 8 to Java 24, including GraalVM native image support. Solon is built from scratch with flexible interface specifications and an open ecosystem.

tiny-llm-zh

Tiny LLM zh is a project aimed at building a small-parameter Chinese language large model for quick entry into learning large model-related knowledge. The project implements a two-stage training process for large models and subsequent human alignment, including tokenization, pre-training, instruction fine-tuning, human alignment, evaluation, and deployment. It is deployed on ModeScope Tiny LLM website and features open access to all data and code, including pre-training data and tokenizer. The project trains a tokenizer using 10GB of Chinese encyclopedia text to build a Tiny LLM vocabulary. It supports training with Transformers deepspeed, multiple machine and card support, and Zero optimization techniques. The project has three main branches: llama2_torch, main tiny_llm, and tiny_llm_moe, each with specific modifications and features.

rag-web-ui

RAG Web UI is an intelligent dialogue system based on RAG (Retrieval-Augmented Generation) technology. It helps enterprises and individuals build intelligent Q&A systems based on their own knowledge bases. By combining document retrieval and large language models, it delivers accurate and reliable knowledge-based question-answering services. The system is designed with features like intelligent document management, advanced dialogue engine, and a robust architecture. It supports multiple document formats, async document processing, multi-turn contextual dialogue, and reference citations in conversations. The architecture includes a backend stack with Python FastAPI, MySQL + ChromaDB, MinIO, Langchain, JWT + OAuth2 for authentication, and a frontend stack with Next.js, TypeScript, Tailwind CSS, Shadcn/UI, and Vercel AI SDK for AI integration. Performance optimization includes incremental document processing, streaming responses, vector database performance tuning, and distributed task processing. The project is licensed under the Apache-2.0 License and is intended for learning and sharing RAG knowledge only, not for commercial purposes.

Wechat-AI-Assistant

Wechat AI Assistant is a project that enables multi-modal interaction with ChatGPT AI assistant within WeChat. It allows users to engage in conversations, role-playing, respond to voice messages, analyze images and videos, summarize articles and web links, and search the internet. The project utilizes the WeChatFerry library to control the Windows PC desktop WeChat client and leverages the OpenAI Assistant API for intelligent multi-modal message processing. Users can interact with ChatGPT AI in WeChat through text or voice, access various tools like bing_search, browse_link, image_to_text, text_to_image, text_to_speech, video_analysis, and more. The AI autonomously determines which code interpreter and external tools to use to complete tasks. Future developments include file uploads for AI to reference content, integration with other APIs, and login support for enterprise WeChat and WeChat official accounts.

flute

FLUTE (Flexible Lookup Table Engine for LUT-quantized LLMs) is a tool designed for uniform quantization and lookup table quantization of weights in lower-precision intervals. It offers flexibility in mapping intervals to arbitrary values through a lookup table. FLUTE supports various quantization formats such as int4, int3, int2, fp4, fp3, fp2, nf4, nf3, nf2, and even custom tables. The tool also introduces new quantization algorithms like Learned Normal Float (NFL) for improved performance and calibration data learning. FLUTE provides benchmarks, model zoo, and integration with frameworks like vLLM and HuggingFace for easy deployment and usage.

llm4regression

This project explores the capability of Large Language Models (LLMs) to perform regression tasks using in-context examples. It compares the performance of LLMs like GPT-4 and Claude 3 Opus with traditional supervised methods such as Linear Regression and Gradient Boosting. The project provides preprints and results demonstrating the strong performance of LLMs in regression tasks. It includes datasets, models used, and experiments on adaptation and contamination. The code and data for the experiments are available for interaction and analysis.

hcaptcha-challenger

hCaptcha Challenger is a tool designed to gracefully face hCaptcha challenges using a multimodal large language model. It does not rely on Tampermonkey scripts or third-party anti-captcha services, instead implementing interfaces for 'AI vs AI' scenarios. The tool supports various challenge types such as image labeling, drag and drop, and advanced tasks like self-supervised challenges and Agentic Workflow. Users can access documentation in multiple languages and leverage resources for tasks like model training, dataset annotation, and model upgrading. The tool aims to enhance user experience in handling hCaptcha challenges with innovative AI capabilities.

awesome-mobile-llm

Awesome Mobile LLMs is a curated list of Large Language Models (LLMs) and related studies focused on mobile and embedded hardware. The repository includes information on various LLM models, deployment frameworks, benchmarking efforts, applications, multimodal LLMs, surveys on efficient LLMs, training LLMs on device, mobile-related use-cases, industry announcements, and related repositories. It aims to be a valuable resource for researchers, engineers, and practitioners interested in mobile LLMs.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project based on Mistral's Mixtral-8x7B model for incremental pre-training of Chinese vocabulary, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese, and the model is pre-trained incrementally on a large-scale open-source corpus, enabling it with powerful Chinese generation and comprehension capabilities. The project includes a large model with expanded Chinese vocabulary and incremental pre-training code.

awesome-llm

Awesome LLM is a curated list of resources related to Large Language Models (LLMs), including models, projects, datasets, benchmarks, materials, papers, posts, GitHub repositories, HuggingFace repositories, and reading materials. It provides detailed information on various LLMs, their parameter sizes, announcement dates, and contributors. The repository covers a wide range of LLM-related topics and serves as a valuable resource for researchers, developers, and enthusiasts interested in the field of natural language processing and artificial intelligence.

rulm

This repository contains language models for the Russian language, as well as their implementation and comparison. The models are trained on a dataset of ChatGPT-generated instructions and chats in Russian. They can be used for a variety of tasks, including question answering, text generation, and translation.

For similar tasks

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

ai-hub

AI Hub Project aims to continuously test and evaluate mainstream large language models, while accumulating and managing various effective model invocation prompts. It has integrated all mainstream large language models in China, including OpenAI GPT-4 Turbo, Baidu ERNIE-Bot-4, Tencent ChatPro, MiniMax abab5.5-chat, and more. The project plans to continuously track, integrate, and evaluate new models. Users can access the models through REST services or Java code integration. The project also provides a testing suite for translation, coding, and benchmark testing.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence parallel approach that combines the strengths of DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile and high-performance solution for long context LLM model training and inference. It addresses the limitations of both methods by offering no limitation on the number of heads, compatibility with advanced parallel strategies, and enhanced performance benchmarks. The tool is verified in Megatron-LM and offers best practices for 4D parallelism, making it suitable for various attention mechanisms and parallel computing advancements.

marlin

Marlin is a highly optimized FP16xINT4 matmul kernel designed for large language model (LLM) inference, offering close to ideal speedups up to batchsizes of 16-32 tokens. It is suitable for larger-scale serving, speculative decoding, and advanced multi-inference schemes like CoT-Majority. Marlin achieves optimal performance by utilizing various techniques and optimizations to fully leverage GPU resources, ensuring efficient computation and memory management.

MMC

This repository, MMC, focuses on advancing multimodal chart understanding through large-scale instruction tuning. It introduces a dataset supporting various tasks and chart types, a benchmark for evaluating reasoning capabilities over charts, and an assistant achieving state-of-the-art performance on chart QA benchmarks. The repository provides data for chart-text alignment, benchmarking, and instruction tuning, along with existing datasets used in experiments. Additionally, it offers a Gradio demo for the MMCA model.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

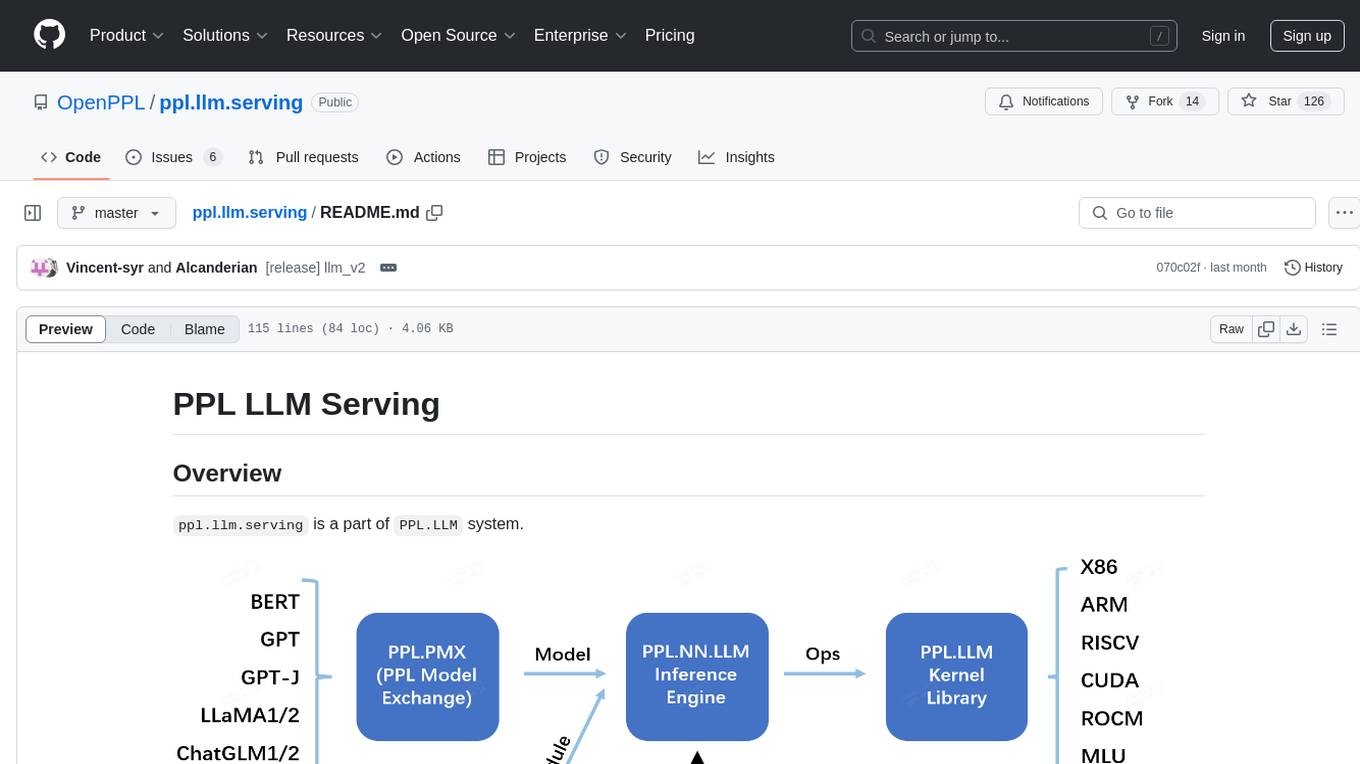

ppl.llm.serving

ppl.llm.serving is a serving component for Large Language Models (LLMs) within the PPL.LLM system. It provides a server based on gRPC and supports inference for LLaMA. The repository includes instructions for prerequisites, quick start guide, model exporting, server setup, client usage, benchmarking, and offline inference. Users can refer to the LLaMA Guide for more details on using this serving component.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.