GraphGen

GraphGen: Enhancing Supervised Fine-Tuning for LLMs with Knowledge-Driven Synthetic Data Generation

Stars: 898

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

README:

GraphGen: Enhancing Supervised Fine-Tuning for LLMs with Knowledge-Driven Synthetic Data Generation


GraphGen is a framework for synthetic data generation guided by knowledge graphs. Please check the paper and best practice.

Below are post-training results for a model in which over 50% of the SFT data comes from GraphGen and our data-cleaning pipeline.

| Domain | Dataset | Ours | Qwen2.5-7B-Instruct (baseline) |
|---|---|---|---|
| Plant | SeedBench | 65.9 | 51.5 |
| Common | CMMLU | 73.6 | 75.8 |
| Knowledge | GPQA-Diamond | 40.0 | 33.3 |
| Math | AIME24 | 20.6 | 16.7 |
| | AIME25 | 22.7 | 7.2 |

GraphGen begins by constructing a fine-grained knowledge graph from the source text, then identifies knowledge gaps in LLMs using the expected calibration error metric, prioritizing the generation of QA pairs that target high-value, long-tail knowledge. Furthermore, GraphGen incorporates multi-hop neighborhood sampling to capture complex relational information and employs style-controlled generation to diversify the resulting QA data.
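As a rough illustration of two of the ideas in this paragraph, the sketch below computes a standard binned expected calibration error and collects a k-hop neighborhood with breadth-first search. It is not GraphGen's implementation: the function names, the ten-bin default, and the adjacency-dict graph representation are assumptions made for this example.

```python
from collections import deque


def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: sum over bins of (bin share) * |accuracy - mean confidence|."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    total = len(confidences)
    if total == 0:
        return 0.0
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 falls into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(accuracy - mean_conf)
    return ece


def k_hop_neighborhood(adjacency, start, k):
    """Return every node within k edges of start (start included), via BFS."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == k:
            continue  # do not expand beyond k hops
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return seen
```

A high ECE on questions about a given entity or relation suggests the model's confidence there is poorly matched to its accuracy, which is the kind of signal that could be used to prioritize that region of the graph; the k-hop set is one simple way to gather related facts for a multi-hop question.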

After data generation, you can use LLaMA-Factory and xtuner to finetune your LLMs.
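Before fine-tuning, generated QA pairs usually need to be reshaped into the format the training framework expects. The sketch below converts QA records into Alpaca-style records (`instruction`, `input`, `output`), a layout that LLaMA-Factory can load. The input field names `question` and `answer` are assumptions for illustration; inspect the actual generated files before relying on them.

```python
import json


def to_alpaca(qa_records, question_key="question", answer_key="answer"):
    """Convert QA dicts into Alpaca-style records, skipping incomplete entries."""
    converted = []
    for record in qa_records:
        question = str(record.get(question_key) or "").strip()
        answer = str(record.get(answer_key) or "").strip()
        if not question or not answer:
            continue  # drop records that lack a question or an answer
        converted.append({"instruction": question, "input": "", "output": answer})
    return converted


def write_json(records, path):
    """Write records as a UTF-8 JSON array, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(records, handle, ensure_ascii=False, indent=2)


# Hypothetical sample records; the second one is incomplete and gets dropped.
sample = [
    {"question": "What does GraphGen build from the source text?",
     "answer": "A knowledge graph."},
    {"question": "", "answer": "orphan answer"},
]
alpaca = to_alpaca(sample)
```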

- 2026.02.04: We support HuggingFace Datasets as input data source for data generation now.

- 2026.01.15: LLM benchmark synthesis now supports single/multiple-choice, fill-in-the-blank, and true-or-false questions, which is ideal for education 🌟🌟

- 2025.12.26: Added knowledge graph evaluation metrics covering accuracy (entity/relation), consistency (conflict detection), and structural robustness (noise, connectivity, degree distribution).

History

- 2025.12.16: Added rocksdb for key-value storage backend and kuzudb for graph database backend support.

- 2025.12.16: Added vllm for local inference backend support.

- 2025.12.16: Refactored the data generation pipeline using ray to improve the efficiency of distributed execution and resource management.

- 2025.12.1: Added search support for NCBI and RNAcentral databases, enabling extraction of DNA and RNA data from these bioinformatics databases.

- 2025.10.30: We support several new LLM clients and inference backends including Ollama_client, http_client, HuggingFace Transformers and SGLang.

- 2025.10.23: We support VQA (Visual Question Answering) data generation now. Run script: `bash scripts/generate/generate_vqa.sh`.

- 2025.10.21: We support PDF as input format for data generation now via MinerU.

- 2025.09.29: We auto-update gradio demo on Hugging Face and ModelScope.

- 2025.08.14: We have added support for community detection in knowledge graphs using the Leiden algorithm, enabling the synthesis of Chain-of-Thought (CoT) data.

- 2025.07.31: We have added Google, Bing, Wikipedia, and UniProt as search back-ends.

- 2025.04.21: We have released the initial version of GraphGen.

We support various LLM inference servers, API servers, inference clients, input file formats, data modalities, output data formats, and output data types. Users can configure these flexibly to suit their synthetic data needs.

| Inference Server | Api Server | Inference Client | Data Source | Data Modal | Data Type |
|---|---|---|---|---|---|
| | | HTTP | Files (CSV, JSON, PDF, TXT, etc.), Databases, Search Engines, Knowledge Graphs | TEXT, IMAGE | Aggregated, Atomic, CoT, Multi-hop, VQA |

Try the GraphGen demo on Hugging Face or ModelScope.

For any questions, please check the FAQ, open a new issue, or join our WeChat group and ask.

- Install uv

      # If you run into network issues, you can install uv with pipx or pip instead; see the uv docs for details
      curl -LsSf https://astral.sh/uv/install.sh | sh

- Clone the repository

      git clone --depth=1 https://github.com/open-sciencelab/GraphGen
      cd GraphGen

- Create a new uv environment

      uv venv --python 3.10

- Configure the dependencies

      uv pip install -r requirements.txt

`python -m webui.app`

- Install GraphGen

      uv pip install graphg

- Run in CLI

      SYNTHESIZER_MODEL=your_synthesizer_model_name \
      SYNTHESIZER_BASE_URL=your_base_url_for_synthesizer_model \
      SYNTHESIZER_API_KEY=your_api_key_for_synthesizer_model \
      TRAINEE_MODEL=your_trainee_model_name \
      TRAINEE_BASE_URL=your_base_url_for_trainee_model \
      TRAINEE_API_KEY=your_api_key_for_trainee_model \
      graphg --output_dir cache
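If you prefer to launch the CLI from a Python script, the same call can be sketched with `subprocess`. The helper names below (`build_env`, `build_command`, `run_graphgen`) are hypothetical; only the `graphg --output_dir` command and the six variable names come from the CLI example above.

```python
import os
import subprocess

REQUIRED_VARS = (
    "SYNTHESIZER_MODEL", "SYNTHESIZER_BASE_URL", "SYNTHESIZER_API_KEY",
    "TRAINEE_MODEL", "TRAINEE_BASE_URL", "TRAINEE_API_KEY",
)


def build_env(settings, base_env=None):
    """Merge the six variables into a copy of the environment, failing on gaps."""
    missing = [name for name in REQUIRED_VARS if not settings.get(name)]
    if missing:
        raise ValueError(f"missing settings: {', '.join(missing)}")
    env = dict(os.environ if base_env is None else base_env)
    env.update({name: settings[name] for name in REQUIRED_VARS})
    return env


def build_command(output_dir="cache"):
    """Argument list for the CLI call shown in the README."""
    return ["graphg", "--output_dir", output_dir]


def run_graphgen(settings, output_dir="cache"):
    """Run the CLI; raises CalledProcessError on a non-zero exit code."""
    return subprocess.run(build_command(output_dir), env=build_env(settings), check=True)
```

Passing the variables through `env=` keeps API keys out of the command line and out of the parent process environment.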

- Configure the environment

  - Create an `.env` file in the root directory

        cp .env.example .env

  - Set the following environment variables:

        # Tokenizer
        TOKENIZER_MODEL=

        # LLM
        # Support different backends: http_api, openai_api, ollama_api, ollama, huggingface, tgi, sglang, tensorrt
        # Synthesizer is the model used to construct KG and generate data
        # Trainee is the model used to train with the generated data

        # http_api / openai_api
        SYNTHESIZER_BACKEND=openai_api
        SYNTHESIZER_MODEL=gpt-4o-mini
        SYNTHESIZER_BASE_URL=
        SYNTHESIZER_API_KEY=
        TRAINEE_BACKEND=openai_api
        TRAINEE_MODEL=gpt-4o-mini
        TRAINEE_BASE_URL=
        TRAINEE_API_KEY=

        # azure_openai_api
        # SYNTHESIZER_BACKEND=azure_openai_api
        # The following is the same as your "Deployment name" in Azure
        # SYNTHESIZER_MODEL=<your-deployment-name>
        # SYNTHESIZER_BASE_URL=https://<your-resource-name>.openai.azure.com/openai/deployments/<your-deployment-name>/chat/completions
        # SYNTHESIZER_API_KEY=
        # SYNTHESIZER_API_VERSION=<api-version>
        #
        # ollama_api
        # SYNTHESIZER_BACKEND=ollama_api
        # SYNTHESIZER_MODEL=gemma3
        # SYNTHESIZER_BASE_URL=http://localhost:11434
        #
        # Note: TRAINEE with ollama_api backend is not supported yet as ollama_api does not support logprobs.
        #
        # huggingface
        # SYNTHESIZER_BACKEND=huggingface
        # SYNTHESIZER_MODEL=Qwen/Qwen2.5-0.5B-Instruct
        #
        # TRAINEE_BACKEND=huggingface
        # TRAINEE_MODEL=Qwen/Qwen2.5-0.5B-Instruct
        #
        # sglang
        # SYNTHESIZER_BACKEND=sglang
        # SYNTHESIZER_MODEL=Qwen/Qwen2.5-0.5B-Instruct
        # SYNTHESIZER_TP_SIZE=1
        # SYNTHESIZER_NUM_GPUS=1
        # TRAINEE_BACKEND=sglang
        # TRAINEE_MODEL=Qwen/Qwen2.5-0.5B-Instruct
        # SYNTHESIZER_TP_SIZE=1
        # SYNTHESIZER_NUM_GPUS=1
        #
        # vllm
        # SYNTHESIZER_BACKEND=vllm
        # SYNTHESIZER_MODEL=Qwen/Qwen2.5-0.5B-Instruct
        # SYNTHESIZER_NUM_GPUS=1
        # TRAINEE_BACKEND=vllm
        # TRAINEE_MODEL=Qwen/Qwen2.5-0.5B-Instruct
        # TRAINEE_NUM_GPUS=1
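A quick sanity check of the `.env` contents before a long run can save time. The sketch below parses `KEY=VALUE` lines and flags two kinds of problems: a missing backend or model for either role, and the unsupported combination noted in the comments above (`ollama_api` as the trainee backend). The function names and the choice of which keys to treat as required are assumptions of this example, not rules enforced by GraphGen.

```python
def parse_env(text):
    """Parse KEY=VALUE lines, ignoring blank lines and '#' comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_backends(values):
    """Return a list of human-readable problems found in the parsed settings."""
    problems = []
    for role in ("SYNTHESIZER", "TRAINEE"):
        if not values.get(f"{role}_BACKEND"):
            problems.append(f"{role}_BACKEND is not set")
        if not values.get(f"{role}_MODEL"):
            problems.append(f"{role}_MODEL is not set")
    # The README notes that ollama_api cannot serve as the trainee (no logprobs).
    if values.get("TRAINEE_BACKEND") == "ollama_api":
        problems.append("TRAINEE_BACKEND=ollama_api is not supported")
    return problems


# Hypothetical .env contents used only to exercise the checks.
sample_env = """
# LLM
SYNTHESIZER_BACKEND=ollama_api
SYNTHESIZER_MODEL=gemma3
TRAINEE_BACKEND=ollama_api
TRAINEE_MODEL=gemma3
"""
problems = check_backends(parse_env(sample_env))
```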

- (Optional) Customize generation parameters in `config.yaml`.

  Edit the corresponding YAML file, e.g.:

      # examples/generate/generate_aggregated_qa/aggregated_config.yaml
      global_params:
        working_dir: cache
        graph_backend: kuzu # graph database backend, support: kuzu, networkx
        kv_backend: rocksdb # key-value store backend, support: rocksdb, json_kv

      nodes:
        - id: read_files # id is unique in the pipeline, and can be referenced by other steps
          op_name: read
          type: source
          dependencies: []
          params:
            input_path:
              - examples/input_examples/jsonl_demo.jsonl # input file path, support json, jsonl, txt, pdf. See examples/input_examples for examples

      # additional settings...
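Because each node lists the ids it depends on, the pipeline forms a dependency graph. The sketch below shows one way such a graph could be ordered for execution, using the standard library's `graphlib`. It is an illustration of the idea, not GraphGen's scheduler; apart from `read_files`, the node ids are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Only read_files appears in the README excerpt; the other ids are hypothetical.
nodes = [
    {"id": "generate", "dependencies": ["build_kg"]},
    {"id": "read_files", "dependencies": []},
    {"id": "build_kg", "dependencies": ["read_files"]},
]


def execution_order(node_list):
    """Order node ids so that every node comes after its dependencies."""
    known = {node["id"] for node in node_list}
    graph = {}
    for node in node_list:
        for dep in node["dependencies"]:
            if dep not in known:
                raise ValueError(f"{node['id']} depends on unknown node {dep}")
        graph[node["id"]] = set(node["dependencies"])
    # static_order raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


order = execution_order(nodes)
```

Validating ids up front turns a typo in `dependencies` into an immediate error instead of a node that silently never runs.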

- Generate data

  Pick the desired format and run the matching script:

  | Format | Script to run | Notes |
  |---|---|---|
  | `cot` | `bash examples/generate/generate_cot_qa/generate_cot.sh` | Chain-of-Thought Q&A pairs |
  | `atomic` | `bash examples/generate/generate_atomic_qa/generate_atomic.sh` | Atomic Q&A pairs covering basic knowledge |
  | `aggregated` | `bash examples/generate/generate_aggregated_qa/generate_aggregated.sh` | Aggregated Q&A pairs incorporating complex, integrated knowledge |
  | `multi-hop` | `bash examples/generate/generate_multi_hop_qa/generate_multi_hop.sh` | Multi-hop reasoning Q&A pairs |
  | `vqa` | `bash examples/generate/generate_vqa/generate_vqa.sh` | Visual Question Answering pairs combining visual and textual understanding |
  | `multi_choice` | `bash examples/generate/generate_multi_choice_qa/generate_multi_choice.sh` | Multiple-choice question-answer pairs |
  | `multi_answer` | `bash examples/generate/generate_multi_answer_qa/generate_multi_answer.sh` | Multiple-answer question-answer pairs |
  | `fill_in_blank` | `bash examples/generate/generate_fill_in_blank_qa/generate_fill_in_blank.sh` | Fill-in-the-blank question-answer pairs |
  | `true_false` | `bash examples/generate/generate_true_false_qa/generate_true_false.sh` | True-or-false question-answer pairs |
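When several formats need to be generated in one go, the table can be mirrored as a lookup in a small driver script. This is a convenience sketch only: the script paths are copied from the table, while `command_for` and `generate` are hypothetical helpers, and `generate` assumes it is run from the repository root.

```python
import subprocess

# Format name -> script path, as listed in the table above.
SCRIPTS = {
    "cot": "examples/generate/generate_cot_qa/generate_cot.sh",
    "atomic": "examples/generate/generate_atomic_qa/generate_atomic.sh",
    "aggregated": "examples/generate/generate_aggregated_qa/generate_aggregated.sh",
    "multi-hop": "examples/generate/generate_multi_hop_qa/generate_multi_hop.sh",
    "vqa": "examples/generate/generate_vqa/generate_vqa.sh",
    "multi_choice": "examples/generate/generate_multi_choice_qa/generate_multi_choice.sh",
    "multi_answer": "examples/generate/generate_multi_answer_qa/generate_multi_answer.sh",
    "fill_in_blank": "examples/generate/generate_fill_in_blank_qa/generate_fill_in_blank.sh",
    "true_false": "examples/generate/generate_true_false_qa/generate_true_false.sh",
}


def command_for(fmt):
    """Return the argument list that runs the script for the given format."""
    if fmt not in SCRIPTS:
        raise ValueError(f"unknown format {fmt!r}; choose from {sorted(SCRIPTS)}")
    return ["bash", SCRIPTS[fmt]]


def generate(formats):
    """Run each requested script in turn, stopping at the first failure."""
    for fmt in formats:
        subprocess.run(command_for(fmt), check=True)
```

For example, `generate(["atomic", "cot"])` would run the atomic script and then the Chain-of-Thought script.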

- Get the generated data

      ls cache/output

- Build the Docker image

      docker build -t graphgen .

- Run the Docker container

      docker run -p 7860:7860 graphgen

See analysis by deepwiki for a technical overview of the GraphGen system, its architecture, and core functionalities.

- SiliconFlow: Abundant LLM API, some models are free

- LightRAG: Simple and efficient graph retrieval solution

- ROGRAG: A robustly optimized GraphRAG framework

- DB-GPT: An AI native data app development framework

If you find this repository useful, please consider citing our work:

@misc{chen2025graphgenenhancingsupervisedfinetuning,

title={GraphGen: Enhancing Supervised Fine-Tuning for LLMs with Knowledge-Driven Synthetic Data Generation},

author={Zihong Chen and Wanli Jiang and Jinzhe Li and Zhonghang Yuan and Huanjun Kong and Wanli Ouyang and Nanqing Dong},

year={2025},

eprint={2505.20416},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2505.20416},

}

This project is licensed under the Apache License 2.0.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for GraphGen

Similar Open Source Tools

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

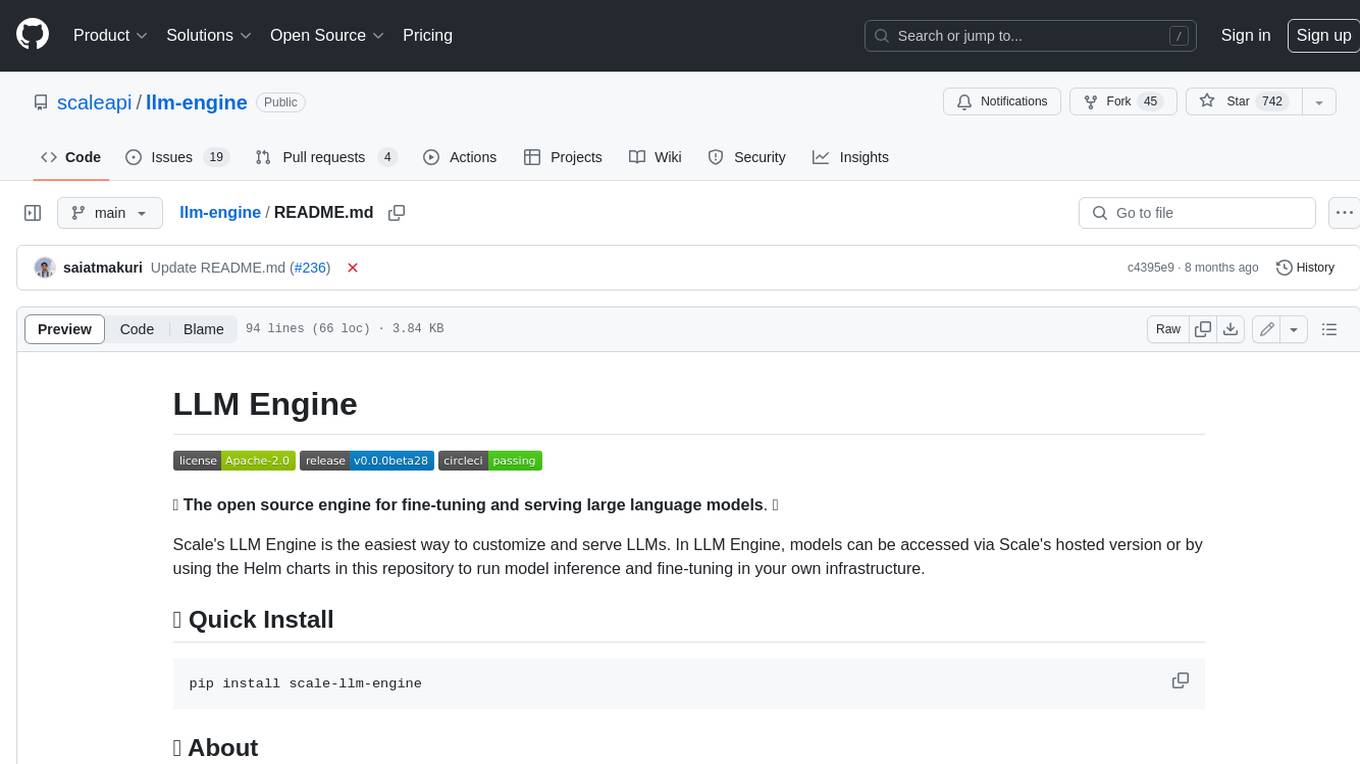

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

pytorch-lightning

PyTorch Lightning is a framework for training and deploying AI models. It provides a high-level API that abstracts away the low-level details of PyTorch, making it easier to write and maintain complex models. Lightning also includes a number of features that make it easy to train and deploy models on multiple GPUs or TPUs, and to track and visualize training progress. PyTorch Lightning is used by a wide range of organizations, including Google, Facebook, and Microsoft. It is also used by researchers at top universities around the world. Here are some of the benefits of using PyTorch Lightning: * **Increased productivity:** Lightning's high-level API makes it easy to write and maintain complex models. This can save you time and effort, and allow you to focus on the research or business problem you're trying to solve. * **Improved performance:** Lightning's optimized training loops and data loading pipelines can help you train models faster and with better performance. * **Easier deployment:** Lightning makes it easy to deploy models to a variety of platforms, including the cloud, on-premises servers, and mobile devices. * **Better reproducibility:** Lightning's logging and visualization tools make it easy to track and reproduce training results.

ASTRA.ai

Astra.ai is a multimodal agent powered by TEN, showcasing its capabilities in speech, vision, and reasoning through RAG from local documentation. It provides a platform for developing AI agents with features like RTC transportation, extension store, workflow builder, and local deployment. Users can build and test agents locally using Docker and Node.js, with prerequisites including Agora App ID, Azure's speech-to-text and text-to-speech API keys, and OpenAI API key. The platform offers advanced customization options through config files and API keys setup, enabling users to create and deploy their AI agents for various tasks.

gitmesh

GitMesh is an AI-powered Git collaboration network designed to address contributor dropout in open source projects. It offers real-time branch-level insights, intelligent contributor-task matching, and automated workflows. The platform transforms complex codebases into clear contribution journeys, fostering engagement through gamified rewards and integration with open source support programs. GitMesh's mascot, Meshy/Mesh Wolf, symbolizes agility, resilience, and teamwork, reflecting the platform's ethos of efficiency and power through collaboration.

TempCompass

TempCompass is a benchmark designed to evaluate the temporal perception ability of Video LLMs. It encompasses a diverse set of temporal aspects and task formats to comprehensively assess the capability of Video LLMs in understanding videos. The benchmark includes conflicting videos to prevent models from relying on single-frame bias and language priors. Users can clone the repository, install required packages, prepare data, run inference using examples like Video-LLaVA and Gemini, and evaluate the performance of their models across different tasks such as Multi-Choice QA, Yes/No QA, Caption Matching, and Caption Generation.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

readme-ai

README-AI is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. It streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software. It offers flexible README generation, supports multiple large language models (LLMs), provides customizable output options, works with various programming languages and project types, and includes an offline mode for generating boilerplate README files without external API calls.

retinify

Retinify is an advanced AI-powered stereo vision library designed for robotics, enabling real-time, high-precision 3D perception by leveraging GPU and NPU acceleration. It is open source under Apache-2.0 license, offers high precision 3D mapping and object recognition, runs computations on GPU for fast performance, accepts stereo images from any rectified camera setup, is cost-efficient using minimal hardware, and has minimal dependencies on CUDA Toolkit, cuDNN, and TensorRT. The tool provides a pipeline for stereo matching and supports various image data types independently of OpenCV.

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

Edit-Banana

Edit Banana is a universal content re-editor that allows users to transform fixed content into fully manipulatable assets. Powered by SAM 3 and multimodal large models, it enables high-fidelity reconstruction while preserving original diagram details and logical relationships. The platform offers advanced segmentation, fixed multi-round VLM scanning, high-quality OCR, user system with credits, multi-user concurrency, and a web interface. Users can upload images or PDFs to get editable DrawIO (XML) or PPTX files in seconds. The project structure includes components for segmentation, text extraction, frontend, models, and scripts, with detailed installation and setup instructions provided. The tool is open-source under the Apache License 2.0, allowing commercial use and secondary development.

monoscope

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

intlayer

Intlayer is an open-source, flexible i18n toolkit with AI-powered translation and CMS capabilities. It is a modern i18n solution for web and mobile apps, framework-agnostic, and includes features like per-locale content files, TypeScript autocompletion, tree-shakable dictionaries, and CI/CD integration. With Intlayer, internationalization becomes faster, cleaner, and smarter, offering benefits such as cross-framework support, JavaScript-powered content management, simplified setup, enhanced routing, AI-powered translation, and more.

local-deep-research

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

For similar tasks

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

litgpt

LitGPT is a command-line tool designed to easily finetune, pretrain, evaluate, and deploy 20+ LLMs **on your own data**. It features highly-optimized training recipes for the world's most powerful open-source large-language-models (LLMs).

llm.c

LLM training in simple, pure C/CUDA. There is no need for 245MB of PyTorch or 107MB of cPython. For example, training GPT-2 (CPU, fp32) is ~1,000 lines of clean code in a single file. It compiles and runs instantly, and exactly matches the PyTorch reference implementation. I chose GPT-2 as the first working example because it is the grand-daddy of LLMs, the first time the modern stack was put together.

torchtune

Torchtune is a PyTorch-native library for easily authoring, fine-tuning, and experimenting with LLMs. It provides native-PyTorch implementations of popular LLMs using composable and modular building blocks, easy-to-use and hackable training recipes for popular fine-tuning techniques, YAML configs for easily configuring training, evaluation, quantization, or inference recipes, and built-in support for many popular dataset formats and prompt templates to help you quickly get started with training.

llm-engine

Scale's LLM Engine is an open-source Python library, CLI, and Helm chart that provides everything you need to serve and fine-tune foundation models, whether you use Scale's hosted infrastructure or do it in your own cloud infrastructure using Kubernetes.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

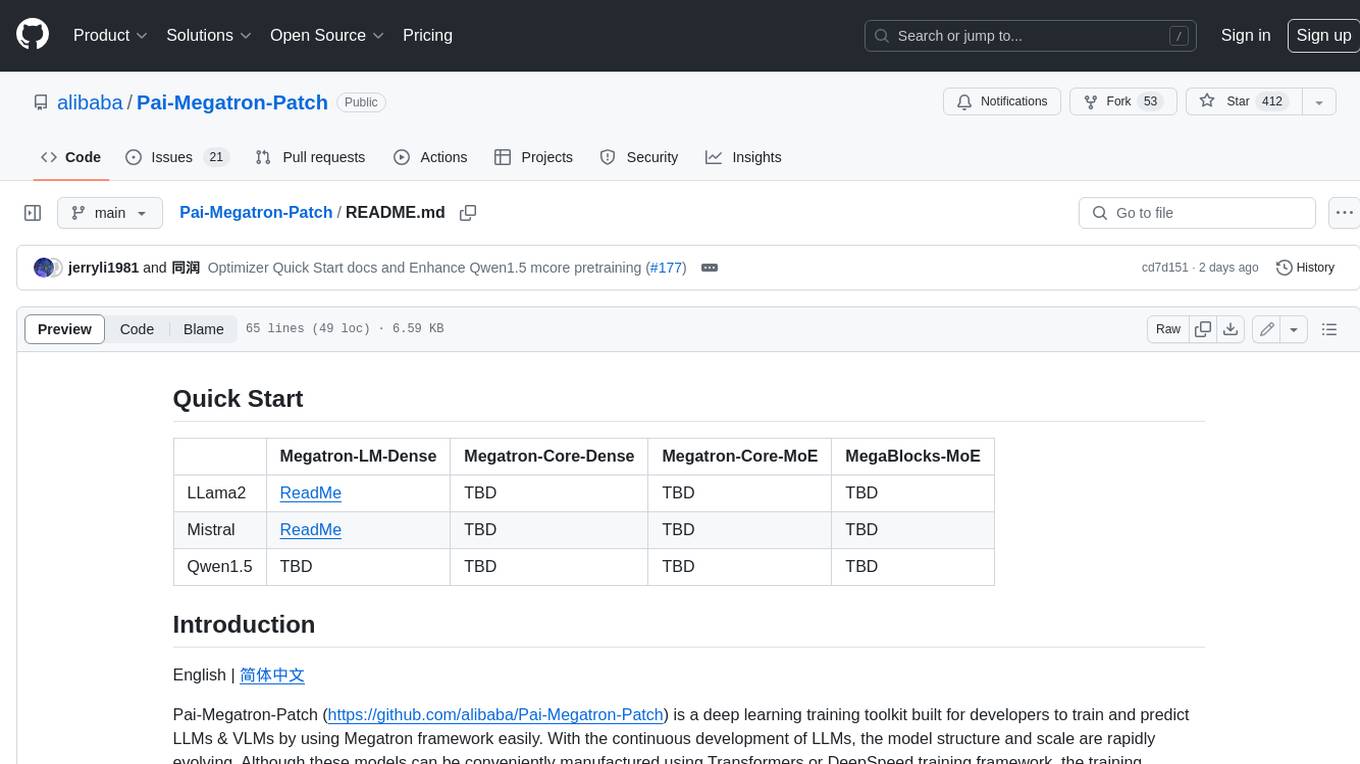

Pai-Megatron-Patch

Pai-Megatron-Patch is a deep learning training toolkit built for developers to train and predict LLMs & VLMs by using Megatron framework easily. With the continuous development of LLMs, the model structure and scale are rapidly evolving. Although these models can be conveniently manufactured using Transformers or DeepSpeed training framework, the training efficiency is comparably low. This phenomenon becomes even severer when the model scale exceeds 10 billion. The primary objective of Pai-Megatron-Patch is to effectively utilize the computational power of GPUs for LLM. This tool allows convenient training of commonly used LLM with all the accelerating techniques provided by Megatron-LM.

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.